Beruflich Dokumente

Kultur Dokumente

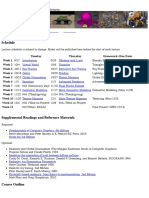

Computerarchitecture Abhishekmail 130520052349 Phpapp02

Uploaded by arjoghosh

COMPUTER ARCHITECTURE

MICROPROCESSOR

IT IS ONE OF THE GREATEST

ACHIEVEMENTS OF THE 20TH CENTURY.

IT USHERED IN THE ERA OF WIDESPREAD

COMPUTERIZATION.

EARLY ARCHITECTURE

VON NEUMANN ARCHITECTURE, 1940s

PROGRAM IS STORED IN MEMORY.

SEQUENTIAL OPERATION

ONE INST IS RETRIEVED AT A TIME, DECODED

AND EXECUTED

LIMITED SPEED

Conventional 32 bit

Microprocessors

Higher data throughput with 32 bit wide data bus

Larger direct addressing range

Higher clock frequencies and operating speeds as a result

of improvements in semiconductor technology

Higher processing speeds because larger registers require

fewer calls to memory and reg-to-reg transfers are 5 times

faster than reg-to-memory transfers

Conventional 32 bit

Microprocessors

More insts and addressing modes to improve software

efficiency

More registers to support High-level languages

More extensive memory management & coprocessor

capabilities

Cache memories and inst pipelines to increase processing

speed and reduce peak bus loads

Conventional 32 bit

Microprocessors

To construct a complete general purpose 32 bit

microprocessor, five basic functions are necessary:

ALU

MMU

FPU

INTERRUPT CONTROLLER

TIMING CONTROL

Conventional Architecture

KNOWN AS VON NEUMANN ARCHITECTURE

ITS MAIN FEATURES ARE:

A SINGLE COMPUTING ELEMENT INCORPORATING A

PROCESSOR, COMM. PATH AND MEMORY

A LINEAR ORGANIZATION OF FIXED SIZE MEMORY

CELLS

A LOW-LEVEL MACHINE LANGUAGE WITH INSTS

PERFORMING SIMPLE OPERATIONS ON ELEMENTARY

OPERANDS

SEQUENTIAL, CENTRALIZED CONTROL OF

COMPUTATION

Conventional Architecture

Single processor configuration:

[FIGURE: BLOCK DIAGRAM WITH PROCESSOR, MEMORY AND INPUT-OUTPUT]

Conventional Architecture

Multiple processor configuration with a global bus:

[FIGURE: BLOCK DIAGRAM WITH SYSTEM INPUT-OUTPUT, GLOBAL MEMORY, AND PROCESSORS WITH LOCAL MEM & I/O]

Conventional Architecture

THE EARLY COMP ARCHITECTURES WERE

DESIGNED FOR SCIENTIFIC AND COMMERCIAL

CALCULATIONS AND WERE DEVELOPED TO

INCREASE THE SPEED OF EXECUTION OF SIMPLE

INSTRUCTIONS.

TO MAKE THE COMPUTERS PERFORM MORE

COMPLEX PROCESSES, MUCH MORE COMPLEX

SOFTWARE WAS REQUIRED.

Conventional Architecture

ADVANCEMENT OF TECHNOLOGY ENHANCED

THE SPEED OF EXECUTION OF PROCESSORS BUT

A SINGLE COMM PATH HAD TO BE USED TO

TRANSFER INSTS AND DATA BETWEEN THE

PROCESSOR AND THE MEMORY.

MEMORY SIZE INCREASED. THE RESULT WAS

THE DATA TRANSFER RATE ON THE MEMORY

INTERFACE ACTED AS A SEVERE CONSTRAINT

ON THE PROCESSING SPEED.

Conventional Architecture

AS HIGHER SPEED MEMORY BECAME

AVAILABLE, THE DELAYS INTRODUCED BY THE

CAPACITANCE AND THE TRANSMISSION LINE

DELAYS ON THE MEMORY BUS AND THE

PROPAGATION DELAYS IN THE BUFFER AND

ADDRESS DECODING CIRCUITRY BECAME MORE

SIGNIFICANT AND PLACED AN UPPER LIMIT ON

THE PROCESSING SPEED.

Conventional Architecture

THE USE OF MULTIPROCESSOR BUSES WITH THE

NEED FOR ARBITRATION BETWEEN COMPUTERS

REQUESTING CONTROL OF THE BUS REDUCED

THE PROBLEM BUT INTRODUCED SEVERAL WAIT

CYCLES WHILE THE DATA OR INST WERE

FETCHED FROM MEMORY.

ONE METHOD OF INCREASING PROCESSING

SPEED AND DATA THROUGHPUT ON THE

MEMORY BUS WAS TO INCREASE THE NUMBER

OF PARALLEL BITS TRANSFERRED ON THE BUS.

Conventional Architecture

THE GLOBAL BUS AND THE GLOBAL MEMORY

CAN ONLY SERVE ONE PROCESSOR AT A TIME.

AS MORE PROCESSORS ARE ADDED TO

INCREASE THE PROCESSING SPEED, THE GLOBAL

BUS BOTTLENECK BECAME WORSE.

IF THE PROCESSING CONSISTS OF SEVERAL

INDEPENDENT TASKS, EACH PROC WILL

COMPETE FOR GLOBAL MEMORY ACCESS AND

GLOBAL BUS TRANSFER TIME.

Conventional Architecture

TYPICALLY, ONLY 3 OR 4 TIMES THE SPEED OF A SINGLE

PROCESSOR CAN BE ACHIEVED IN MULTIPROCESSOR

SYSTEMS WITH GLOBAL MEMORY AND A GLOBAL BUS.

TO REDUCE THE EFFECT OF GLOBAL MEMORY BUS AS A

BOTTLENECK, (1) THE LENGTH OF THE PIPE WAS

INCREASED SO THAT THE INST COULD BE BROKEN

DOWN INTO BASIC ELEMENTS TO BE MANIPULATED

SIMULTANEOUSLY AND (2) CACHE MEM WAS

INTRODUCED SO THAT INSTS AND/OR DATA COULD BE

PREFETCHED FROM GLOBAL MEMORY AND STORED IN

HIGH SPEED LOCAL MEMORY.

PIPELINING

THE PROC. SEPARATES EACH INST INTO ITS BASIC

OPERATIONS AND USES DEDICATED EXECUTION UNITS

FOR EACH TYPE OF OPERATION.

THE MOST BASIC FORM OF PIPELINING IS TO PREFETCH

THE NEXT INSTRUCTION WHILE SIMULTANEOUSLY

EXECUTING THE PREVIOUS INSTRUCTION.

THIS MAKES USE OF THE BUS TIME WHICH WOULD

OTHERWISE BE WASTED AND REDUCES INSTRUCTION

EXECUTION TIME.

PIPELINING

TO SHOW THE USE OF A PIPELINE, CONSIDER THE

MULTIPLICATION OF 2 DECIMAL NOS: 3.8 X 10^2 AND 9.6 X 10^3.

THE PROC. PERFORMS 3 OPERATIONS:

A: MULTIPLIES THE MANTISSA

B: ADDS THE EXPONENTS

C: NORMALISES THE RESULT TO PLACE THE DECIMAL

POINT IN THE CORRECT POSITION.

IF 3 EXECUTION UNITS PERFORMED THESE

OPERATIONS WITHOUT PIPELINING, UNITS A & B WOULD SIT IDLE WHILE C

WAS BEING PERFORMED.

IF A PIPELINE WERE IMPLEMENTED, THE NEXT

NUMBER COULD BE PROCESSED IN EXECUTION UNITS A

AND B WHILE C WAS BEING PERFORMED.

PIPELINING

TO GET A ROUGH INDICATION OF PERFORMANCE

INCREASE THROUGH PIPELINE, THE STAGE EXECUTION

INTERVAL MAY BE TAKEN TO BE THE EXECUTION TIME

OF THE SLOWEST PIPELINE STAGE.

THE PERFORMANCE INCREASE FROM PIPELINING IS

ROUGHLY EQUAL TO THE SUM OF THE AVERAGE

EXECUTION TIMES FOR ALL STAGES OF THE PIPELINE,

DIVIDED BY THE AVERAGE VALUE OF THE EXECUTION

TIME OF THE SLOWEST PIPELINE STAGE FOR THE INST

MIX CONSIDERED.
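
The rough estimate above can be sketched in a few lines of Python. The function name and the stage times are assumptions chosen for illustration (they are not from the slides); the formula is the one just stated: the sum of the stage times divided by the time of the slowest stage.

```python
def pipeline_speedup(stage_times_ns):
    """Rough pipeline speedup: sum of stage times / slowest stage time."""
    if not stage_times_ns:
        raise ValueError("need at least one pipeline stage")
    return sum(stage_times_ns) / max(stage_times_ns)


# Hypothetical average stage times (ns) for a three-stage pipeline,
# e.g. multiply mantissas, add exponents, normalise.
stage_times = [40.0, 20.0, 20.0]
print(pipeline_speedup(stage_times))  # 80 / 40 = 2.0
```

With perfectly balanced stages the estimate equals the number of stages; one slow stage pulls it down, which is why the slowest stage sets the stage execution interval.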

PIPELINING

NON-SEQUENTIAL INSTS CAUSE THE

INSTRUCTIONS BEHIND IN THE PIPELINE TO BE

EMPTIED AND FILLING TO BE RESTARTED.

NON-SEQUENTIAL INSTS. TYPICALLY COMPRISE

15 TO 30% OF INSTRUCTIONS AND THEY REDUCE

PIPELINE PERFORMANCE BY A GREATER

PERCENTAGE THAN THEIR PROBABILITY OF

OCCURRENCE.
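
One simple way to see why the loss exceeds the probability of occurrence is the model below. It is only a sketch under stated assumptions that are not in the slides: a pipeline with equal stages, one instruction completed per cycle when full, and every non-sequential instruction costing a full refill of `stages - 1` extra cycles.

```python
def effective_speedup(stages, branch_fraction):
    """Speedup over an unpipelined machine when every non-sequential
    instruction flushes the pipe (assumed penalty: stages - 1 cycles)."""
    cycles_per_inst = 1.0 + branch_fraction * (stages - 1)
    return stages / cycles_per_inst


def performance_loss(stages, branch_fraction):
    """Fraction of the ideal speedup lost to pipeline flushes."""
    return 1.0 - effective_speedup(stages, branch_fraction) / stages


# Hypothetical 5-stage pipeline with 20% non-sequential instructions.
print(round(performance_loss(5, 0.20), 3))  # 0.444, i.e. far more than 20%
```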

CACHE MEMORY

VON-NEUMANN SYSTEM PERFORMANCE IS

CONSIDERABLY AFFECTED BY MEMORY ACCESS TIME

AND MEMORY BW (MAXIMUM MEMORY TRANSFER

RATE).

THESE LIMITATIONS ARE ESPECIALLY TIGHT FOR 32 BIT

PROCESSORS WITH HIGH CLOCK SPEEDS.

WHILE STATIC RAMS WITH 25 ns ACCESS TIMES ARE

CAPABLE OF KEEPING PACE WITH PROC SPEED, THEY

MUST BE LOCATED ON THE SAME BOARD TO MINIMISE

DELAYS, THUS LIMITING THE AMOUNT OF HIGH SPEED

MEMORY AVAILABLE.

CACHE MEMORY

DRAM HAS A GREATER CAPACITY PER CHIP AND

A LOWER COST, BUT EVEN THE FASTEST DRAM

CANNOT KEEP PACE WITH THE PROCESSOR,

PARTICULARLY WHEN IT IS LOCATED ON A

SEPARATE BOARD ATTACHED TO A MEMORY

BUS.

WHEN A PROC REQUIRES INST/DATA FROM/TO

MEMORY, IT ENTERS A WAIT STATE UNTIL IT IS

AVAILABLE. THIS REDUCES PROCESSOR

PERFORMANCE.

CACHE MEMORY

CACHE ACTS AS A FAST LOCAL STORAGE

BUFFER BETWEEN THE PROC AND THE MAIN

MEMORY.

OFF-CHIP BUT ON-BOARD CACHE MAY REQUIRE

SEVERAL MEMORY CYCLES WHEREAS ON-CHIP

CACHE MAY ONLY REQUIRE ONE MEMORY

CYCLE, BUT ON-BOARD CACHE CAN PREVENT

THE EXCESSIVE NO. OF WAIT STATES IMPOSED

BY MEMORY ON THE SYSTEM BUS AND IT

REDUCES THE SYSTEM BUS LOAD.

CACHE MEMORY

THE COST OF IMPLEMENTING AN ON-BOARD

CACHE IS MUCH LOWER THAN THE COST OF

FASTER SYSTEM MEMORY REQUIRED TO

ACHIEVE THE SAME MEMORY PERFORMANCE.

CACHE PERFORMANCE DEPENDS ON ACCESS

TIME AND HIT RATIO, WHICH IS DEPENDENT ON

THE SIZE OF THE CACHE AND THE NO. OF BYTES

BROUGHT INTO CACHE ON ANY FETCH FROM

THE MAIN MEMORY (THE LINE SIZE).

CACHE MEMORY

INCREASING THE LINE SIZE INCREASES THE

CHANCE THAT THERE WILL BE A CACHE HIT ON

THE NEXT MEMORY REFERENCE.

IF A 4K BYTE CACHE WITH A 4 BYTE LINE SIZE

HAS A HIT RATIO OF 80%, DOUBLING THE LINE

SIZE MIGHT INCREASE THE HIT RATIO TO 85%

BUT DOUBLING THE LINE SIZE AGAIN MIGHT

ONLY INCREASE THE HIT RATIO TO 87%.

CACHE MEMORY

OVERALL MEMORY PERFORMANCE IS A

FUNCTION OF CACHE ACCESS TIME, CACHE HIT

RATIO AND MAIN MEMORY ACCESS TIME FOR

CACHE MISSES.

A SYSTEM WITH 80% CACHE HIT RATIO AND

120ns CACHE ACCESS TIME ACCESSES MAIN

MEMORY 20% OF THE TIME WITH AN ACCESS

TIME OF 600 ns. THE AV ACCESS TIME IN ns WILL

BE (0.8 x 120) + [0.2 x (600 + 120)] = 240.
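
The same arithmetic can be written as a small Python function. The function and parameter names are assumptions for illustration; the model is the one used in the slide, where a miss pays the cache access time plus the main memory access time.

```python
def average_access_time_ns(hit_ratio, cache_ns, main_ns):
    """Average access time when a miss costs cache time plus main memory time."""
    miss_ratio = 1.0 - hit_ratio
    return hit_ratio * cache_ns + miss_ratio * (main_ns + cache_ns)


# Figures from the slide: 80% hit ratio, 120 ns cache, 600 ns main memory.
print(round(average_access_time_ns(0.8, 120, 600)))  # 240
```

Raising the hit ratio to 0.9 with the same timings gives (0.9 x 120) + (0.1 x 720) = 180 ns, which shows how strongly the hit ratio drives the average.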

CACHE DESIGN

PROCESSORS WITH DEMAND PAGED VIRTUAL

MEMORY SYSTEMS REQUIRE AN ASSOCIATIVE

CACHE.

VIRTUAL MEM SYSTEMS ORGANIZE ADDRESSES

BY THE START ADDRESSES FOR EACH PAGE AND

AN OFFSET WHICH LOCATES THE DATA WITHIN

THE PAGE.

AN ASSOCIATIVE CACHE ASSOCIATES THE

OFFSET WITH THE PAGE ADDRESS TO FIND THE

DATA NEEDED.

CACHE DESIGN

WHEN ACCESSED, THE CACHE CHECKS TO SEE IF IT

CONTAINS THE PAGE ADDRESS (OR TAG FIELD); IF SO, IT

ADDS THE OFFSET AND, IF A CACHE HIT IS DETECTED,

THE DATA IS FETCHED IMMEDIATELY FROM THE

CACHE.

PROBLEMS CAN OCCUR IN A SINGLE SET-ASSOCIATIVE

CACHE IF WORDS WITHIN DIFFERENT PAGES HAVE THE

SAME OFFSET.

TO MINIMISE THIS PROBLEM A 2-WAY SET-ASSOCIATIVE

CACHE IS USED. THIS IS ABLE TO ASSOCIATE MORE

THAN ONE SET OF TAGS AT A TIME ALLOWING THE

CACHE TO STORE THE SAME OFFSET FROM TWO

DIFFERENT PAGES.
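
The conflict described above can be reproduced with a toy cache model. This is a minimal sketch, not a description of any real cache: the class name, the 1024-set size, the least-recently-used replacement policy and the address trace are all assumptions. The index plays the role of the offset and the tag plays the role of the page address.

```python
from collections import OrderedDict


class SetAssociativeCache:
    """Toy cache: `ways` lines per set, least-recently-used replacement."""

    def __init__(self, num_sets, ways):
        self.num_sets = num_sets
        self.ways = ways
        self.sets = [OrderedDict() for _ in range(num_sets)]

    def access(self, line_address):
        """Return True on a hit, False on a miss (the line is then loaded)."""
        index = line_address % self.num_sets   # offset within the page
        tag = line_address // self.num_sets    # page address (tag field)
        lines = self.sets[index]
        if tag in lines:
            lines.move_to_end(tag)             # mark as most recently used
            return True
        if len(lines) >= self.ways:
            lines.popitem(last=False)          # evict least recently used
        lines[tag] = None
        return False


def count_hits(cache, addresses):
    return sum(cache.access(a) for a in addresses)


# Two words with the same offset (5) in two different pages, used alternately.
trace = [5, 1024 + 5] * 4
print(count_hits(SetAssociativeCache(1024, 1), trace))  # 0: they evict each other
print(count_hits(SetAssociativeCache(1024, 2), trace))  # 6: both lines stay resident
```

Note that the two-way cache in this sketch also has twice the capacity; the point is only that a second way lets the same offset from two pages coexist.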

CACHE DESIGN

A FULLY ASSOCIATIVE CACHE ALLOWS ANY

NUMBER OF PAGES TO USE THE CACHE

SIMULTANEOUSLY.

A CACHE REQUIRES A REPLACEMENT

ALGORITHM TO FIND REPLACEMENT CACHE

LINES WHEN A MISS OCCURS.

PROCESSORS THAT DO NOT USE DEMAND PAGED

VIRTUAL MEMORY, CAN EMPLOY A DIRECT

MAPPED CACHE WHICH CORRESPONDS EXACTLY

TO THE PAGE SIZE AND ALLOWS DATA FROM

ONLY ONE PAGE TO BE STORED AT A TIME.

MEMORY ARCHITECTURES

32 BIT PROCESSORS HAVE INTRODUCED 3 NEW

CONCEPTS IN THE WAY THE MEMORY IS

INTERFACED:

1. LOCAL MEMORY BUS EXTENSIONS

2. MEMORY INTERLEAVING

3. VIRTUAL MEMORY MANAGEMENT

LOCAL MEM BUS EXTENSIONS

IT PERMITS LARGER LOCAL MEMORIES TO BE

CONNECTED WITHOUT THE DELAYS CAUSED BY BUS

REQUESTS AND BUS ARBITRATION FOUND ON

MULTIPROCESSOR BUSES.

IT HAS BEEN PROVIDED TO INCREASE THE SIZE OF THE

LOCAL MEMORY ABOVE THAT WHICH CAN BE

ACCOMMODATED ON THE PROCESSOR BOARD.

BY OVERLAPPING THE LOCAL MEM BUS AND THE

SYSTEM BUS CYCLES IT IS POSSIBLE TO ACHIEVE

HIGHER MEM ACCESS RATES FROM PROCESSORS WITH

PIPELINES WHICH PERMIT THE ADDRESS OF THE NEXT

MEMORY REFERENCE TO BE GENERATED WHILE THE

PREVIOUS DATA WORD IS BEING FETCHED.

MEMORY INTERLEAVING

PIPELINED PROCESSORS WITH THE ABILITY TO

GENERATE THE ADDRESS OF THE NEXT MEMORY

REFERENCE WHILE FETCHING THE PREVIOUS

DATA WORD WOULD BE SLOWED DOWN IF THE

MEMORY WERE UNABLE TO BEGIN THE NEXT

MEMORY ACCESS UNTIL THE PREVIOUS MEM

CYCLE HAD BEEN COMPLETED.

THE SOLUTION IS TO USE TWO-WAY MEMORY

INTERLEAVING. IT USES 2 MEM BOARDS- 1 FOR

ODD ADDRESSES AND 1 FOR EVEN ADDRESSES.

MEMORY INTERLEAVING

ONE BOARD CAN BEGIN THE NEXT MEM CYCLE

WHILE THE OTHER BOARD COMPLETES THE

PREVIOUS CYCLE.

THE SPEED ADV IS GREATEST WHEN MULTIPLE

SEQUENTIAL MEM ACCESSES ARE REQUIRED

FOR BURST I/O TRANSFERS BY DMA.

DMA DEFINES A BLOCK TRANSFER IN TERMS OF

A STARTING ADDRESS AND A WORD COUNT FOR

SEQUENTIAL MEM ACCESSES.

MEMORY INTERLEAVING

TWO WAY INTERLEAVING MAY NOT PREVENT

MEM WAIT STATES FOR SOME FAST SIGNAL

PROCESSING APPLICATIONS AND SYSTEMS HAVE

BEEN DESIGNED WITH 4 OR MORE WAY

INTERLEAVING IN WHICH THE MEM BOARDS ARE

ASSIGNED CONSECUTIVE ADDRESSES BY A

MEMORY CONTROLLER.
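
The address-to-board mapping behind interleaving can be sketched as follows. The function names and the example addresses are assumptions for illustration; the mapping (consecutive addresses go to consecutive boards) is the one described above. Two back-to-back accesses to the same board are counted as a conflict, since the second would have to wait for the first memory cycle to finish.

```python
def bank_of(address, num_banks):
    """Board (bank) holding a word address: consecutive addresses rotate."""
    return address % num_banks


def dma_block(start_address, word_count):
    """A DMA block transfer: a starting address and a word count."""
    return list(range(start_address, start_address + word_count))


def count_bank_conflicts(addresses, num_banks):
    """Count consecutive accesses that fall on the same bank."""
    banks = [bank_of(a, num_banks) for a in addresses]
    return sum(1 for prev, cur in zip(banks, banks[1:]) if prev == cur)


# Sequential burst: odd and even boards alternate, so no access waits.
print(count_bank_conflicts(dma_block(100, 8), 2))   # 0
# Stride-2 accesses all land on the even board with two-way interleaving...
print(count_bank_conflicts([0, 2, 4, 6], 2))        # 3
# ...but spread over two boards with four-way interleaving.
print(count_bank_conflicts([0, 2, 4, 6], 4))        # 0
```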

Conventional Architecture

EVEN WITH THESE ENHANCEMENTS, THE SEQUENTIAL

VON NEUMANN ARCHITECTURE REACHED THE LIMITS

IN PROCESSING SPEED BECAUSE THE SEQUENTIAL

FETCHING OF INSTS AND DATA THROUGH A COMMON

MEMORY INTERFACE FORMED THE BOTTLENECK.

THUS, PARALLEL PROC ARCHITECTURES CAME INTO

BEING WHICH PERMIT LARGE NUMBER OF COMPUTING

ELEMENTS TO BE PROGRAMMED TO WORK TOGETHER

SIMULTANEOUSLY. THE USEFULNESS OF PARALLEL

PROCESSOR DEPENDS UPON THE AVAILABILITY OF

SUITABLE PARALLEL ALGORITHMS.

HOW TO INCREASE THE SYSTEM SPEED?

1. USING FASTER COMPONENTS. THESE COST MORE AND

DISSIPATE CONSIDERABLE HEAT.

THE RATE OF GROWTH OF SPEED USING

BETTER TECHNOLOGY IS VERY SLOW. E.G., IN THE

80S THE BASIC CLOCK RATE WAS 50 MHz AND

TODAY IT IS AROUND 2 GHz, A 40-FOLD INCREASE. DURING THIS

PERIOD THE SPEED OF COMPUTERS IN SOLVING

INTENSIVE PROBLEMS HAS GONE UP BY A

FACTOR OF 100,000. MOST OF THE GAIN IS DUE TO

IMPROVED ARCHITECTURE.

HOW TO INCREASE THE SYSTEM SPEED?

2. ARCHITECTURAL METHODS:

A. USE PARALLELISM IN SINGLE PROCESSOR

[ OVERLAPPING EXECUTION OF NO OF INSTS

(PIPELINING)]

B. OVERLAPPING OPERATION OF DIFFERENT

UNITS

C. INCREASE SPEED OF ALU BY EXPLOITING

DATA/TEMPORAL PARALLELISM

D. USING NO OF INTERCONNECTED PROCESSORS

TO WORK TOGETHER

PARALLEL COMPUTERS

THE IDEA EMERGED AT CALTECH (CIT) IN 1981

A GROUP HEADED BY CHARLES SEITZ AND

GEOFFREY FOX BUILT A PARALLEL COMPUTER

IN 1982

16 NOS 8085 WERE CONNECTED IN A HYPERCUBE

CONFIGURATION

ADV WAS LOW COST PER MEGAFLOP

PARALLEL COMPUTERS

BESIDES HIGHER SPEED, OTHER FEATURES OF

PARALLEL COMPUTERS ARE:

BETTER SOLUTION QUALITY: WHEN ARITHMETIC

OPS ARE DISTRIBUTED, EACH PE DOES SMALLER

NO OF OPS, THUS ROUNDING ERRORS ARE

REDUCED

BETTER ALGORITHMS

BETTER AND FASTER STORAGE

GREATER RELIABILITY

CLASSIFICATION OF COMPUTER

ARCHITECTURE

FLYNN'S TAXONOMY: IT IS BASED

UPON HOW THE COMPUTER

RELATES ITS INSTRUCTIONS TO THE

DATA BEING PROCESSED

SISD

SIMD

MISD

MIMD

FLYNN'S TAXONOMY

SISD: CONVENTIONAL VON-NEUMANN SYSTEM.

[FIGURE: ONE CONTROL UNIT SENDS A SINGLE INST STREAM TO ONE PROCESSOR, WHICH HANDLES A SINGLE DATA STREAM]

FLYNN'S TAXONOMY

SIMD: IT HAS A SINGLE STREAM OF VECTOR

INSTS THAT INITIATE MANY OPERATIONS. EACH

ELEMENT OF A VECTOR IS REGARDED AS A

MEMBER OF A SEPARATE DATA STREAM GIVING

MULTIPLE DATA STREAMS.

[FIGURE: ONE CONTROL UNIT BROADCASTS A SINGLE INST STREAM TO THREE PROCESSORS, EACH HANDLING ITS OWN DATA STREAM (1, 2, 3); LABELLED SYNCHRONOUS MULTIPROCESSOR]

FLYNN'S TAXONOMY

MISD: GENERALLY CONSIDERED IMPRACTICAL FOR GENERAL PURPOSE MACHINES.

[FIGURE: CU 1, CU 2, CU 3 ISSUE INST STREAMS 1, 2, 3 TO PU 1, PU 2, PU 3, WHICH SHARE ONE DATA STREAM (DS)]

FLYNN'S TAXONOMY

MIMD: MULTIPROCESSOR CONFIGURATION AND

ARRAY OF PROCESSORS.

[FIGURE: CU 1, CU 2, CU 3, EACH WITH ITS OWN INSTRUCTION STREAM (IS 1 TO IS 3) AND DATA STREAM (DS 1 TO DS 3)]

FLYNN'S TAXONOMY

MIMD COMPUTERS CONSIST OF INDEPENDENT

COMPUTERS, EACH WITH ITS OWN MEMORY,

CAPABLE OF PERFORMING SEVERAL

OPERATIONS SIMULTANEOUSLY.

MIMD COMPS MAY CONSIST OF A NUMBER OF

SLAVE PROCESSORS WHICH MAY BE

INDIVIDUALLY CONNECTED TO MULTI-ACCESS

GLOBAL MEMORY BY A SWITCHING MATRIX

UNDER THE CONTROL OF A MASTER PROCESSOR.

FLYNN'S TAXONOMY

THIS CLASSIFICATION IS TOO BROAD.

IT PUTS EVERYTHING EXCEPT

MULTIPROCESSORS IN ONE CLASS.

IT DOES NOT REFLECT THE CONCURRENCY

AVAILABLE THROUGH THE PIPELINE

PROCESSING AND THUS PUTS VECTOR

COMPUTERS IN SISD CLASS.

SHORE'S CLASSIFICATION

SHORE CLASSIFIED THE COMPUTERS ON THE BASIS

OF ORGANIZATION OF THE CONSTITUENT ELEMENTS

OF THE COMPUTER.

SIX DIFFERENT KINDS OF MACHINES WERE

RECOGNIZED:

1. CONVENTIONAL VON NEUMANN ARCHITECTURE

WITH 1 CU, 1 PU, IM (INSTRUCTION MEMORY) AND DM (DATA MEMORY). A SINGLE DM READ

PRODUCES ALL BITS FOR PROCESSING BY PU. THE PU

MAY CONTAIN MULTIPLE FUNCTIONAL UNITS WHICH

MAY OR MAY NOT BE PIPELINED. SO, IT INCLUDES

BOTH THE SCALAR COMPS (IBM 360/91, CDC 7600) AND

PIPELINED VECTOR COMPUTERS (CRAY 1, CYBER 205).

SHORE'S CLASSIFICATION

MACHINE 1:

[FIGURE: IM FEEDS CU, WHICH CONTROLS A HORIZONTAL PU OPERATING ON A WORD SLICE OF DM]

NOTE THAT THE PROCESSING IS CHARACTERISED AS

HORIZONTAL (NO OF BITS IN PARALLEL AS A WORD).

SHORE'S CLASSIFICATION

MACHINE 2: SAME AS MACHINE 1 EXCEPT THAT

DM FETCHES A BIT SLICE FROM ALL THE WORDS

IN THE MEMORY AND PU IS ORGANIZED TO

PERFORM THE OPERATIONS IN A BIT SERIAL

MANNER ON ALL THE WORDS.

IF THE MEMORY IS REGARDED AS A 2D ARRAY

OF BITS WITH ONE WORD STORED PER ROW,

THEN THE MACHINE 2 READS VERTICAL SLICE

OF BITS AND PROCESSES THE SAME, WHEREAS

THE MACHINE 1 READS AND PROCESSES

HORIZONTAL SLICE OF BITS. EX. MPP, ICL DAP
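
The difference between the two kinds of read can be shown on a tiny bit array. The memory contents and function names below are made up for illustration; the layout (one word per row) is the one described above.

```python
# Memory as a 2D array of bits, one word stored per row (hypothetical contents).
memory = [
    [1, 0, 1, 1],   # word 0
    [0, 1, 1, 0],   # word 1
    [1, 1, 0, 0],   # word 2
]


def word_slice(mem, row):
    """Machine 1 style read: all the bits of one word (a horizontal slice)."""
    return list(mem[row])


def bit_slice(mem, column):
    """Machine 2 style read: one bit from every word (a vertical slice)."""
    return [word[column] for word in mem]


print(word_slice(memory, 1))  # [0, 1, 1, 0]
print(bit_slice(memory, 0))   # [1, 0, 1]
```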

SHORE'S CLASSIFICATION

MACHINE 2:

[FIGURE: IM FEEDS CU, WHICH CONTROLS A VERTICAL PU OPERATING ON A BIT SLICE OF DM]

SHORE'S CLASSIFICATION

MACHINE 3: COMBINATION OF 1 AND 2.

IT COULD BE CHARACTERISED HAVING A

MEMORY AS AN ARRAY OF BITS WITH BOTH

HORIZONTAL AND VERTICAL READING AND

PROCESSING POSSIBLE.

SO, IT WILL HAVE BOTH VERTICAL AND

HORIZONTAL PROCESSING UNITS.

EXAMPLE IS OMEN-60 (1973)

SHORE'S CLASSIFICATION

MACHINE 3:

[FIGURE: IM FEEDS CU, WHICH CONTROLS BOTH A VERTICAL PU AND A HORIZONTAL PU, BOTH ATTACHED TO DM]

SHORE'S CLASSIFICATION

MACHINE 4: IT IS OBTAINED BY REPLICATING THE PU

AND DM OF MACHINE 1.

AN ENSEMBLE OF PU AND DM IS CALLED PROCESSING

ELEMENT (PE).

THE INSTS ARE ISSUED TO THE PEs BY A SINGLE CU. PEs

COMMUNICATE ONLY THROUGH CU.

ABSENCE OF COMM BETWEEN PEs LIMITS ITS

APPLICABILITY

EX: PEPE(1976)

SHORE'S CLASSIFICATION

MACHINE 4:

[FIGURE: IM FEEDS CU, WHICH CONTROLS THREE PUs, EACH WITH ITS OWN DM]

SHORE'S CLASSIFICATION

MACHINE 5: SIMILAR TO MACHINE 4 WITH THE

ADDITION OF COMMUNICATION BETWEEN

PEs. EXAMPLE: ILLIAC IV

[FIGURE: IM FEEDS CU, WHICH CONTROLS THREE PUs, EACH WITH ITS OWN DM]

SHORE'S CLASSIFICATION

MACHINE 6:

MACHINES 1 TO 5 MAINTAIN SEPARATION

BETWEEN DM AND PU WITH SOME DATA BUS OR

CONNECTION UNIT PROVIDING THE

COMMUNICATION BETWEEN THEM.

MACHINE 6 INCLUDES THE LOGIC IN MEMORY

ITSELF AND IS CALLED ASSOCIATIVE

PROCESSOR.

MACHINES BASED ON SUCH ARCHITECTURES

SPAN A RANGE FROM SIMPLE ASSOCIATIVE

MEMORIES TO COMPLEX ASSOCIATIVE PROCS.

SHORE'S CLASSIFICATION

MACHINE 6:

[FIGURE: IM FEEDS CU, WHICH CONTROLS A COMBINED PU + DM]

FENG'S CLASSIFICATION

FENG PROPOSED A SCHEME ON THE BASIS OF

DEGREE OF PARALLELISM TO CLASSIFY

COMPUTER ARCHITECTURE.

MAXIMUM NO OF BITS THAT CAN BE PROCESSED

EVERY UNIT OF TIME BY THE SYSTEM IS CALLED

MAXIMUM DEGREE OF PARALLELISM

FENG'S CLASSIFICATION

BASED ON FENG'S SCHEME, WE HAVE

SEQUENTIAL AND PARALLEL OPERATIONS AT

BIT AND WORD LEVELS TO PRODUCE THE

FOLLOWING CLASSIFICATION:

WSBS (WORD SERIAL, BIT SERIAL): NO CONCEIVABLE IMPLEMENTATION

WPBS (WORD PARALLEL, BIT SERIAL): STARAN

WSBP (WORD SERIAL, BIT PARALLEL): CONVENTIONAL COMPUTERS

WPBP (WORD PARALLEL, BIT PARALLEL): ILLIAC IV

o THE MAX DEGREE OF PARALLELISM IS GIVEN BY

THE PRODUCT OF THE NO OF BITS IN THE WORD

AND NO OF WORDS PROCESSED IN PARALLEL
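
The product rule and the four classes can be expressed directly in Python. The function names and the example machine sizes are assumptions for illustration, not figures for any real machine.

```python
def max_degree_of_parallelism(word_length_bits, words_in_parallel):
    """Maximum number of bits processed per unit of time."""
    return word_length_bits * words_in_parallel


def feng_class(word_length_bits, words_in_parallel):
    """Return WSBS, WPBS, WSBP or WPBP for the given machine shape."""
    word_part = "WP" if words_in_parallel > 1 else "WS"
    bit_part = "BP" if word_length_bits > 1 else "BS"
    return word_part + bit_part


# Hypothetical machines: (word length in bits, words processed in parallel).
print(feng_class(32, 1), max_degree_of_parallelism(32, 1))     # WSBP 32
print(feng_class(1, 256), max_degree_of_parallelism(1, 256))   # WPBS 256
print(feng_class(32, 16), max_degree_of_parallelism(32, 16))   # WPBP 512
```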

HANDLER'S CLASSIFICATION

FENG'S SCHEME, WHILE INDICATING THE

DEGREE OF PARALLELISM DOES NOT ACCOUNT

FOR THE CONCURRENCY HANDLED BY THE

PIPELINED DESIGNS.

HANDLER'S SCHEME ALLOWS THE PIPELINING

TO BE SPECIFIED.

IT ALLOWS THE IDENTIFICATION OF

PARALLELISM AND DEGREE OF PIPELINING

BUILT IN THE HARDWARE STRUCTURE

HANDLER'S CLASSIFICATION

HANDLER DEFINED SOME OF THE TERMS AS:

PCU: PROCESSOR CONTROL UNIT

ALU: ARITHMETIC LOGIC UNIT

BLC: BIT LEVEL CIRCUIT

PE: PROCESSING ELEMENT

A COMPUTING SYSTEM C CAN THEN BE CHARACTERISED

BY A TRIPLE AS T(C) = (K x K', D x D', W x W')

WHERE K = NO OF PCUs, K' = NO OF PROCESSORS THAT

ARE PIPELINED, D = NO OF ALUs, D' = NO OF PIPELINED

ALUs, W = WORD LENGTH OF ALU OR PE AND W' = NO OF

PIPELINE STAGES IN ALU OR PE.

COMPUTER PROGRAM ORGANIZATION

BROADLY, THEY MAY BE CLASSIFIED AS:

CONTROL FLOW PROGRAM

ORGANIZATION

DATAFLOW PROGRAM ORGANIZATION

REDUCTION PROGRAM ORGANIZATION

COMPUTER PROGRAM ORGANIZATION

CONTROL FLOW COMPUTERS USE EXPLICIT FLOWS OF CONTROL INFO TO

CAUSE THE EXECUTION OF INSTS.

DATAFLOW COMPS USE THE AVAILABILITY OF

OPERANDS TO TRIGGER THE EXECUTION OF

OPERATIONS.

REDUCTION COMPUTERS USE THE NEED FOR A

RESULT TO TRIGGER THE OPERATION WHICH

WILL GENERATE THE REQUIRED RESULT.
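
Demand-driven (reduction style) triggering can be imitated in Python with deferred computations. This is only a sketch of the idea on a conventional machine, not a reduction computer; the helper `make_thunk` and all the names in it are assumptions for illustration. An operation runs only when something asks for its result, and an operation nobody needs never runs.

```python
evaluation_log = []


def make_thunk(name, compute):
    """Wrap a computation so it runs only when its result is demanded."""
    cache = {}

    def force():
        if "value" not in cache:
            evaluation_log.append(name)   # record that demand reached this node
            cache["value"] = compute()
        return cache["value"]

    return force


c = make_thunk("c", lambda: 7)
d = make_thunk("d", lambda: 3)
unused = make_thunk("unused", lambda: 99)   # never demanded, so never evaluated
a = make_thunk("a", lambda: 5 + c() - d())

result = a()                 # the need for `a` triggers `c` and `d`
print(result)                # 9
print(evaluation_log)        # ['a', 'c', 'd']
```

In a data-driven organization the order is reversed: `c` and `d` would fire as soon as their operands existed, whether or not `a` was ever needed.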

COMPUTER PROGRAM ORGANIZATION

THE THREE BASIC FORMS OF COMP PROGRAM

ORGANIZATION MAY BE DESCRIBED IN TERMS

OF THEIR DATA MECHANISM (WHICH DEFINES

THE WAY A PARTICULAR ARGUMENT IS USED BY

A NUMBER OF INSTRUCTIONS) AND THE

CONTROL MECHANISM (WHICH DEFINES HOW

ONE INST CAUSES THE EXECUTION OF ONE OR

MORE OTHER INSTS AND THE RESULTING

CONTROL PATTERN).

COMPUTER PROGRAM ORGANIZATION

CONTROL FLOW PROCESSORS HAVE A BY

REFERENCE DATA MECHANISM (WHICH USES

REFERENCES EMBEDDED IN THE INSTS BEING

EXECUTED TO ACCESS THE CONTENTS OF THE

SHARED MEMORY) AND TYPICALLY A

SEQUENTIAL CONTROL MECHANISM ( WHICH

PASSES A SINGLE THREAD OF CONTROL FROM

INSTRUCTION TO INSTRUCTION).

COMPUTER PROGRAM ORGANIZATION

DATAFLOW COMPUTERS HAVE A BY VALUE

DATA MECHANISM (WHICH GENERATES AN

ARGUMENT AT RUN-TIME WHICH IS REPLICATED

AND GIVEN TO EACH ACCESSING INSTRUCTION

FOR STORAGE AS A VALUE) AND A PARALLEL

CONTROL MECHANISM.

BOTH MECHANISMS ARE SUPPORTED BY DATA

TOKENS WHICH CONVEY DATA FROM PRODUCER

TO CONSUMER INSTRUCTIONS AND CONTRIBUTE

TO THE ACTIVATION OF CONSUMER INSTS.

COMPUTER PROGRAM ORGANIZATION

TWO BASIC TYPES OF REDUCTION PROGRAM

ORGANIZATIONS HAVE BEEN DEVELOPED:

A. STRING REDUCTION WHICH HAS A BY VALUE

DATA MECHANISM AND HAS ADVANTAGES

WHEN MANIPULATING SIMPLE EXPRESSIONS.

B. GRAPH REDUCTION WHICH HAS A BY

REFERENCE DATA MECHANISM AND HAS

ADVANTAGES WHEN LARGER STRUCTURES

ARE INVOLVED.

COMPUTER PROGRAM ORGANIZATION

CONTROL-FLOW AND DATA-FLOW PROGRAMS

ARE BUILT FROM FIXED SIZE PRIMITIVE INSTS

WITH HIGHER LEVEL PROGRAMS CONSTRUCTED

FROM SEQUENCES OF THESE PRIMITIVE

INSTRUCTIONS AND CONTROL OPERATIONS.

REDUCTION PROGRAMS ARE BUILT FROM HIGH

LEVEL PROGRAM STRUCTURES WITHOUT THE

NEED FOR CONTROL OPERATORS.

COMPUTER PROGRAM ORGANIZATION

THE RELATIONSHIP OF THE DATA AND CONTROL

MECHANISMS TO THE BASIC COMPUTER

PROGRAM ORGANIZATIONS CAN BE SHOWN AS

UNDER:

CONTROL MECHANISM | DATA MECHANISM: BY VALUE | DATA MECHANISM: BY REFERENCE

SEQUENTIAL | - | VON-NEUMANN CONTROL FLOW

PARALLEL | DATA FLOW | PARALLEL CONTROL FLOW

RECURSIVE | STRING REDUCTION | GRAPH REDUCTION

MACHINE ORGANIZATION

MACHINE ORGANIZATION CAN BE CLASSIFIED

AS FOLLOWS:

CENTRALIZED: CONSISTING OF A SINGLE

PROCESSOR, COMM PATH AND MEMORY. A

SINGLE ACTIVE INST PASSES EXECUTION TO A

SPECIFIC SUCCESSOR INSTRUCTION.

o TRADITIONAL VON-NEUMANN PROCESSORS

HAVE CENTRALIZED MACHINE ORGANIZATION

AND A CONTROL FLOW PROGRAM

ORGANIZATION.

MACHINE ORGANIZATION

PACKET COMMUNICATION: USING A CIRCULAR

INST EXECUTION PIPELINE IN WHICH

PROCESSORS, COMMUNICATIONS AND

MEMORIES ARE LINKED BY POOLS OF WORK.

o NEC 7281 HAS A PACKET COMMUNICATION

MACHINE ORGANIZATION AND DATAFLOW

PROGRAM ORGANIZATION.

MACHINE ORGANIZATION

EXPRESSION MANIPULATION WHICH USES IDENTICAL

RESOURCES IN A REGULAR STRUCTURE, EACH

RESOURCE CONTAINING A PROCESSOR,

COMMUNICATION AND MEMORY. THE PROGRAM

CONSISTS OF ONE LARGE STRUCTURE, PARTS OF

WHICH ARE ACTIVE WHILE OTHER PARTS ARE

TEMPORARILY SUSPENDED.

AN EXPRESSION MANIPULATION MACHINE MAY BE

CONSTRUCTED FROM A REGULAR STRUCTURE OF T414

TRANSPUTERS, EACH CONTAINING A VON-NEUMANN

PROCESSOR, MEMORY AND COMMUNICATION LINKS.

MULTIPROCESSING SYSTEMS

IT MAKES USE OF SEVERAL PROCESSORS, EACH

OBEYING ITS OWN INSTS, USUALLY

COMMUNICATING VIA A COMMON MEMORY.

ONE WAY OF CLASSIFYING THESE SYSTEMS IS

BY THEIR DEGREE OF COUPLING.

TIGHTLY COUPLED SYSTEMS HAVE PROCESSORS

INTERCONNECTED BY A MULTIPROCESSOR

SYSTEM BUS WHICH BECOMES A PERFORMANCE

BOTTLENECK.

MULTIPROCESSING SYSTEMS

INTERCONNECTION BY A SHARED MEMORY IS LESS

TIGHTLY COUPLED AND A MULTIPORT MEMORY MAY

BE USED TO REDUCE THE BUS BOTTLENECK.

THE USE OF SEVERAL AUTONOMOUS SYSTEMS, EACH

WITH ITS OWN OS, IN A CLUSTER IS MORE LOOSELY

COUPLED.

THE USE OF NETWORK TO INTERCONNECT SYSTEMS,

USING COMM SOFTWARE, IS THE MOST LOOSELY

COUPLED ALTERNATIVE.

MULTIPROCESSING SYSTEMS

DEGREE OF COUPLING:

[FIGURE: TWO SYSTEMS LINKED AT FOUR LEVELS: NETWORK SW VIA A NETWORK LINK, OS VIA A CLUSTER LINK, SYSTEM MEMORY VIA A SYSTEM BUS, AND CPU VIA A MULTIPROCESSOR BUS]

MULTIPROCESSING SYSTEMS

MULTIPROCESSORS MAY ALSO BE CLASSIFIED

AS AUTOCRATIC OR EGALITARIAN.

AUTOCRATIC CONTROL IS SHOWN WHERE A

MASTER-SLAVE RELATIONSHIP EXISTS BETWEEN

THE PROCESSORS.

EGALITARIAN CONTROL GIVES ALL PROCESSORS

EQUAL CONTROL OF SHARED BUS ACCESS.

MULTIPROCESSING SYSTEMS

MULTIPROCESSING SYSTEMS WITH SEPARATE

PROCESSORS AND MEMORIES MAY BE

CLASSIFIED AS DANCE HALL CONFIGURATIONS

IN WHICH THE PROCESSORS ARE LINED UP ON

ONE SIDE WITH THE MEMORIES FACING THEM.

CROSS CONNECTIONS ARE MADE BY A

SWITCHING NETWORK.

MULTIPROCESSING SYSTEMS

DANCE HALL CONFIGURATION:

[FIGURE: CPU 1 TO CPU 4 ON ONE SIDE AND MEM 1 TO MEM 4 ON THE OTHER, JOINED BY A SWITCHING NETWORK]

MULTIPROCESSING SYSTEMS

ANOTHER CONFIGURATION IS THE BOUDOIR CONFIG, IN WHICH EACH

PROCESSOR IS CLOSELY COUPLED WITH ITS OWN MEMORY AND A

NETWORK OF SWITCHES IS USED TO LINK THE PROCESSOR-MEMORY

PAIRS.

[FIGURE: FOUR PROCESSOR-MEMORY PAIRS (CPU 1/MEM 1 TO CPU 4/MEM 4) LINKED BY A SWITCHING NETWORK]

MULTIPROCESSING SYSTEMS

ANOTHER TERM, WHICH IS USED TO DESCRIBE A

FORM OF PARALLEL COMPUTING IS

CONCURRENCY.

IT DENOTES INDEPENDENT, ASYNCHRONOUS

OPERATION OF A COLLECTION OF PARALLEL

COMPUTING DEVICES RATHER THAN THE

SYNCHRONOUS OPERATION OF DEVICES IN A

MULTIPROCESSOR SYSTEM.

SYSTOLIC ARRAYS

IT MAY BE TERMED AS MISD SYSTEM.

IT IS A REGULAR ARRAY OF PROCESSING ELEMENTS,

EACH COMMUNICATING WITH ITS NEAREST

NEIGHBOURS AND OPERATING SYNCHRONOUSLY

UNDER THE CONTROL OF A COMMON CLOCK WITH A

RATE LIMITED BY THE SLOWEST PROCESSOR IN THE

ARRAY.

THE TERM SYSTOLIC IS DERIVED FROM THE RHYTHMIC

CONTRACTION OF THE HEART, ANALOGOUS TO THE

RHYTHMIC PUMPING OF DATA THROUGH AN ARRAY OF

PROCESSING ELEMENTS.

WAVEFRONT ARRAY

IT IS A REGULAR ARRAY OF PROCESSING ELEMENTS,

EACH COMMUNICATING WITH ITS NEAREST

NEIGHBOURS BUT OPERATING WITH NO GLOBAL

CLOCK.

IT EXHIBITS CONCURRENCY AND IS DATA DRIVEN.

THE OPERATION OF EACH PROCESSOR IS CONTROLLED

LOCALLY AND IS ACTIVATED BY THE ARRIVAL OF DATA

AFTER ITS PREVIOUS OUTPUT HAS BEEN DELIVERED TO

THE APPROPRIATE NEIGHBOURING PROCESSOR.

WAVEFRONT ARRAY

PROCESSING WAVEFRONTS DEVELOP ACROSS

THE ARRAY AS PROCESSORS PASS ON THE

OUTPUT DATA TO THEIR NEIGHBOUR. HENCE

THE NAME.

GRANULARITY OF PARALLELISM

PARALLEL PROCESSING EMPHASIZES THE USE OF

SEVERAL PROCESSING ELEMENTS WITH THE

MAIN OBJECTIVE OF GAINING SPEED IN

CARRYING OUT A TIME CONSUMING COMPUTING

JOB

A MULTI-TASKING OS EXECUTES JOBS

CONCURRENTLY BUT THE OBJECTIVE IS TO

EFFECT THE CONTINUED PROGRESS OF ALL THE

TASKS BY SHARING THE RESOURCES IN AN

ORDERLY MANNER.

GRANULARITY OF PARALLELISM

THE PARALLEL PROCESSING EMPHASIZES THE

EXPLOITATION OF CONCURRENCY AVAILABLE

IN A PROBLEM FOR CARRYING OUT THE

COMPUTATION BY EMPLOYING MORE THAN ONE

PROCESSOR TO ACHIEVE BETTER SPEED AND/OR

THROUGHPUT.

THE CONCURRENCY IN THE COMPUTING

PROCESS COULD BE LOOKED UPON FOR

PARALLEL PROCESSING AT VARIOUS LEVELS

(GRANULARITY OF PARALLELISM) IN THE

SYSTEM.

GRANULARITY OF PARALLELISM

THE FOLLOWING GRANULARITIES OF PARALLELISM

MAY BE IDENTIFIED IN ANY EXISTING SYSTEM:

o PROGRAM LEVEL PARALLELISM

o PROCESS OR TASK LEVEL PARALLELISM

o PARALLELISM AT THE LEVEL OF GROUP OF

STATEMENTS

o STATEMENT LEVEL PARALLELISM

o PARALLELISM WITHIN A STATEMENT

o INSTRUCTION LEVEL PARALLELISM

o PARALLELISM WITHIN AN INSTRUCTION

o LOGIC AND CIRCUIT LEVEL PARALLELISM

GRANULARITY OF PARALLELISM

THE GRANULARITIES ARE LISTED IN THE

INCREASING DEGREE OF FINENESS.

GRANULARITIES AT LEVELS 1,2 AND 3 CAN BE

EASILY IMPLEMENTED ON A CONVENTIONAL

MULTIPROCESSOR SYSTEM.

MOST MULTI-TASKING OS ALLOW CREATION

AND SCHEDULING OF PROCESSES ON THE

AVAILABLE RESOURCES.

GRANULARITY OF PARALLELISM

SINCE A PROCESS REPRESENTS A SIZABLE CODE IN

TERMS OF EXECUTION TIME, THE OVERHEADS IN

EXPLOITING THE PARALLELISM AT THESE

GRANULARITIES ARE NOT EXCESSIVE.

IF THE SAME PRINCIPLE IS APPLIED TO THE NEXT FEW

LEVELS, INCREASED SCHEDULING OVERHEADS MAY

NOT WARRANT PARALLEL EXECUTION

IT IS SO BECAUSE THE UNIT OF WORK OF A MULTI-

PROCESSOR IS CURRENTLY MODELLED AT THE LEVEL

OF A PROCESS OR TASK AND IS REASONABLY

SUPPORTED ON THE CURRENT ARCHITECTURES.

GRANULARITY OF PARALLELISM

THE LAST THREE LEVELS ARE BEST HANDLED BY

HARDWARE. SEVERAL MACHINES HAVE BEEN BUILT TO

PROVIDE THE FINE GRAIN PARALLELISM IN VARYING

DEGREES.

A MACHINE HAVING INST LEVEL PARALLELISM

EXECUTES SEVERAL INSTS SIMULTANEOUSLY.

EXAMPLES ARE PIPELINE INST PROCESSORS,

SYNCHRONOUS ARRAY PROCESSORS, ETC.

CIRCUIT LEVEL PARALLELISM EXISTS IN MOST

MACHINES IN THE FORM OF PROCESSING MULTIPLE

BITS/BYTES SIMULTANEOUSLY.

PARALLEL ARCHITECTURES

THERE ARE NUMEROUS ARCHITECTURES THAT HAVE

BEEN USED IN THE DESIGN OF HIGH SPEED COMPUTERS.

THEY FALL BASICALLY INTO 2 CLASSES:

GENERAL PURPOSE &

SPECIAL PURPOSE

o GENERAL PURPOSE ARCHITECTURES ARE DESIGNED TO

PROVIDE THE RATED SPEEDS AND OTHER COMPUTING

REQUIREMENTS FOR A VARIETY OF PROBLEMS WITH

THE SAME PERFORMANCE.

PARALLEL ARCHITECTURES

THE IMPORTANT ARCHITECTURAL IDEAS BEING

USED IN DESIGNING GEN PURPOSE HIGH SPEED

COMPUTERS ARE:

PIPELINED ARCHITECTURES

ASYNCHRONOUS MULTI-PROCESSORS

DATA-FLOW COMPUTERS

PARALLEL ARCHITECTURES

THE SPECIAL PURPOSE MACHINES HAVE TO EXCEL AT

WHAT THEY HAVE BEEN DESIGNED FOR. THEY MAY OR MAY

NOT DO SO FOR OTHER APPLICATIONS. SOME OF THE

IMPORTANT ARCHITECTURAL IDEAS FOR DEDICATED

COMPUTERS ARE:

SYNCHRONOUS MULTI-PROCESSORS(ARRAY

PROCESSOR)

SYSTOLIC ARRAYS

NEURAL NETWORKS

ARRAY PROCESSORS

IT CONSISTS OF SEVERAL PE, ALL OF WHICH EXECUTE

THE SAME INST ON DIFFERENT DATA.

THE INSTS ARE FETCHED AND BROADCAST TO ALL THE

PE BY A COMMON CU.

THE PE EXECUTE INSTS ON DATA RESIDING IN THEIR

OWN MEMORY.

THE PE ARE LINKED VIA AN INTERCONNECTION

NETWORK TO CARRY OUT DATA COMMUNICATION

BETWEEN THEM.
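
The broadcast model can be sketched in Python as below. This is a sequential stand-in for hardware that works in lockstep, and the class and function names are assumptions for illustration. One instruction is issued by the control unit and every PE applies it to the data held in its own memory.

```python
class ProcessingElement:
    """A PE holding one data item in its own local memory."""

    def __init__(self, value):
        self.memory = value

    def execute(self, instruction):
        self.memory = instruction(self.memory)


def broadcast(pes, instruction):
    """Control unit: send the same instruction to every PE.

    The loop runs the PEs one after another; real array hardware
    would execute them simultaneously.
    """
    for pe in pes:
        pe.execute(instruction)


pes = [ProcessingElement(v) for v in [1, 2, 3, 4]]
broadcast(pes, lambda x: x * 10)        # same instruction, different data
print([pe.memory for pe in pes])        # [10, 20, 30, 40]
```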

ARRAY PROCESSORS

THERE ARE SEVERAL WAYS OF CONNECTING PE

THESE MACHINES REQUIRE SPECIAL

PROGRAMMING EFFORTS TO ACHIEVE THE

SPEED ADVANTAGE

THE COMPUTATIONS ARE CARRIED OUT

SYNCHRONOUSLY BY THE HW AND THEREFORE

SYNC IS NOT AN EXPLICIT PROBLEM

ARRAY PROCESSORS

USING AN INTERCONNECTION NETWORK:

[FIGURE: CU AND SCALAR PROCESSOR DRIVING PE1, PE2, PE3, PE4 ... PEn, WHICH ARE LINKED BY AN INTERCONNECTION NETWORK]

ARRAY PROCESSORS

USING AN ALIGNMENT NETWORK:

[FIGURE: CONTROL UNIT AND SCALAR PROCESSOR DRIVING PE0, PE1, PE2 ... PEn, WHICH REACH MEM0, MEM1, MEM2 ... MEMK THROUGH AN ALIGNMENT NETWORK]

CONVENTIONAL MULTI-PROCESSORS

ASYNCHRONOUS MULTIPROCESSORS,

BASED ON MULTIPLE CPUs AND MEM BANKS

CONNECTED THROUGH EITHER A BUS OR A

CONNECTION NETWORK, ARE A COMMONLY USED

MEANS OF PROVIDING INCREASED

THROUGHPUT AND/OR IMPROVED RESPONSE TIME IN A

GENERAL PURPOSE COMPUTING ENVIRONMENT.

CONVENTIONAL MULTI-PROCESSORS

IN SUCH SYSTEMS, EACH CPU OPERATES

INDEPENDENTLY ON THE QUANTUM OF WORK GIVEN

TO IT

IT HAS BEEN HIGHLY SUCCESSFUL IN PROVIDING

INCREASED THROUGHPUT AND/OR IMPROVED RESPONSE TIME IN

TIME SHARED SYSTEMS.

EFFECTIVE REDUCTION OF THE EXECUTION TIME OF A

GIVEN JOB REQUIRES THE JOB TO BE BROKEN INTO

SUB-JOBS THAT ARE TO BE HANDLED SEPARATELY BY

THE AVAILABLE PHYSICAL PROCESSORS.

CONVENTIONAL MULTI-PROCESSORS

IT WORKS WELL FOR TASKS RUNNING MORE OR

LESS INDEPENDENTLY ie., FOR TASKS HAVING

LOW COMMUNICATION AND SYNCHRONIZATION

REQUIREMENTS.

COMM AND SYNC ARE IMPLEMENTED EITHER

THROUGH THE SHARED MEMORY OR BY

MESSAGE SYSTEM OR THROUGH THE HYBRID

APPROACH.

CONVENTIONAL MULTI-PROCESSORS

SHARED MEMORY ARCHITECTURE:

[FIGURE: COMMON BUS ARCHITECTURE, WITH SEVERAL CPUs SHARING ONE MEMORY]

[FIGURE: SWITCH BASED MULTIPROCESSOR, WITH SEVERAL CPUs CONNECTED TO MEM0, MEM1 ... MEMn THROUGH A PROCESSOR MEMORY SWITCH]

CONVENTIONAL MULTI-PROCESSORS

MESSAGE BASED ARCHITECTURE:

[FIGURE: PE 1, PE 2 ... PE n JOINED BY A CONNECTION NETWORK]

CONVENTIONAL MULTI-PROCESSORS

HYBRID ARCHITECTURE:

[FIGURE: PE 1, PE 2 ... PE n AND MEM 1, MEM 2 ... MEM k JOINED BY A CONNECTION NETWORK]

CONVENTIONAL MULTI-PROCESSORS

ON A SINGLE BUS SYSTEM, THERE IS A LIMIT ON THE

NUMBER OF PROCESSORS THAT CAN BE OPERATED IN

PARALLEL.

IT IS USUALLY OF THE ORDER OF 10.

COMM NETWORK HAS THE ADVANTAGE THAT THE NO

OF PROCESSORS CAN GROW WITHOUT LIMIT, BUT THE

CONNECTION AND COMM COST MAY DOMINATE AND

THUS SATURATE THE PERFORMANCE GAIN.

FOR THIS REASON, A HYBRID APPROACH MAY BE

FOLLOWED

MANY SYSTEMS USE A COMMON BUS ARCH FOR

GLOBAL MEM, DISK AND I/O WHILE THE PROC MEM

TRAFFIC IS HANDLED BY SEPARATE BUS.

DATA FLOW COMPUTERS

A NEW FINE GRAIN PARALLEL PROCESSING

APPROACH BASED ON DATAFLOW COMPUTING

MODEL WAS SUGGESTED BY JACK DENNIS

IN 1975.

HERE, A NO OF DATA FLOW OPERATORS, EACH

CAPABLE OF DOING AN OPERATION ARE

EMPLOYED.

A PROGRAM FOR SUCH A MACHINE IS A

CONNECTION GRAPH OF THE OPERATORS.

DATA FLOW COMPUTERS

THE OPERATORS FORM THE NODES OF THE

GRAPH WHILE THE ARCS REPRESENT THE DATA

MOVEMENT BETWEEN NODES.

AN ARC IS LABELED WITH A TOKEN TO INDICATE

THAT IT CONTAINS THE DATA.

A TOKEN IS GENERATED ON THE OUTPUT OF A

NODE WHEN IT COMPUTES THE FUNCTION

BASED ON THE DATA ON ITS INPUT ARCS.

DATA FLOW COMPUTERS

THIS IS KNOWN AS FIRING OF THE NODE.

A NODE CAN FIRE ONLY WHEN ALL OF ITS INPUT

ARCS HAVE TOKENS AND THERE IS NO TOKEN

ON THE OUTPUT ARC.

WHEN A NODE FIRES, IT REMOVES THE INPUT

TOKENS TO SHOW THAT THE DATA HAS BEEN

CONSUMED.

USUALLY, COMPUTATION STARTS WITH

ARRIVAL OF DATA ON THE INPUT NODES OF THE

GRAPH.

DATA FLOW COMPUTERS

DATA FLOW GRAPH FOR THE COMPUTATION:

A = 5 + C - D

[FIGURE: A + NODE TAKES 5 AND C AS INPUTS; A - NODE TAKES THE SUM AND D AS INPUTS AND PRODUCES A.]

COMPUTATION PROGRESSES AS PER DATA AVAILABILITY
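The firing rule can be tried out on this small graph. The sketch below is only an illustration, assuming the expression reads A = 5 + C - D (as the + and - nodes suggest); the arc names (`five`, `sum`, ...) and the function `run_dataflow` are hypothetical. A node fires only when all its input arcs hold tokens and its output arc is empty, and firing consumes the input tokens.

```python
import operator

def run_dataflow(c, d):
    """Evaluate A = 5 + C - D by repeatedly firing any enabled node."""
    # Arcs: a value means "token present", None means "no token".
    arcs = {"five": 5, "c": c, "d": d, "sum": None, "a": None}
    # Nodes are listed in "wrong" order on purpose: data availability,
    # not listing order, decides when each node fires.
    nodes = {
        "sub": (operator.sub, ("sum", "d"), "a"),
        "add": (operator.add, ("five", "c"), "sum"),
    }
    fired = []
    progress = True
    while progress:
        progress = False
        for name, (fn, inputs, output) in nodes.items():
            ready = all(arcs[i] is not None for i in inputs)
            if ready and arcs[output] is None:
                result = fn(*(arcs[i] for i in inputs))
                for i in inputs:
                    arcs[i] = None       # consume the input tokens
                arcs[output] = result    # place a token on the output arc
                fired.append(name)
                progress = True
    return arcs["a"], fired

value, order = run_dataflow(c=3, d=2)
print(value, order)
```

Although the subtraction node is listed first, it cannot fire until the addition node has produced its token, so the firing order follows the data.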

DATA FLOW COMPUTERS

MANY CONVENTIONAL MACHINES EMPLOYING

MULTIPLE FUNCTIONAL UNITS EMPLOY THE DATA

FLOW MODEL FOR SCHEDULING THE FUNCTIONAL

UNITS.

EXAMPLE EXPERIMENTAL MACHINES ARE

MANCHESTER MACHINE (1984) AND MIT MACHINE.

THE DATA FLOW COMPUTERS PROVIDE FINE

GRANULARITY OF PARALLEL PROCESSING, SINCE THE

DATA FLOW OPERATORS ARE TYPICALLY

ELEMENTARY ARITHMETIC AND LOGIC OPERATORS.

DATA FLOW COMPUTERS

IT MAY PROVIDE AN EFFECTIVE SOLUTION FOR USING

VERY LARGE NUMBER OF COMPUTING ELEMENTS IN

PARALLEL.

WITH ITS ASYNCHRONOUS DATA DRIVEN CONTROL, IT

HAS A PROMISE FOR EXPLOITATION OF THE

PARALLELISM AVAILABLE BOTH IN THE PROBLEM AND

THE MACHINE.

CURRENT IMPLEMENTATIONS ARE NO BETTER THAN

CONVENTIONAL PIPELINED MACHINES EMPLOYING

MULTIPLE FUNCTIONAL UNITS.

SYSTOLIC ARCHITECTURES

THE ADVENT OF VLSI HAS MADE IT POSSIBLE TO

DEVELOP SPECIAL ARCHITECTURES SUITABLE

FOR DIRECT IMPLEMENTATION IN VLSI.

SYSTOLIC ARCHITECTURES ARE BASICALLY

PIPELINES OPERATING IN ONE OR MORE

DIMENSIONS.

THE NAME SYSTOLIC HAS BEEN DERIVED FROM

THE ANALOGY OF THE OPERATION OF BLOOD

CIRCULATION SYSTEM THROUGH THE HEART.

SYSTOLIC ARCHITECTURES

CONVENTIONAL ARCHITECTURES OPERATE ON

THE DATA USING LOAD AND STORE OPERATIONS

FROM THE MEMORY.

PROCESSING USUALLY INVOLVES SEVERAL

OPERATIONS.

EACH OPERATION ACCESSES THE MEMORY FOR

DATA, PROCESSES IT AND THEN STORES THE

RESULT. THIS REQUIRES A NO OF MEM

REFERENCES.

SYSTOLIC ARCHITECTURES

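[FIGURE: CONVENTIONAL PROCESSING. EACH OF THE OPERATIONS F1, F2, ... Fn ACCESSES THE MEMORY SEPARATELY.]

[FIGURE: SYSTOLIC PROCESSING. DATA LEAVES THE MEMORY ONCE, FLOWS THROUGH F1, F2, ... Fn AND THEN RETURNS TO THE MEMORY.]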

SYSTOLIC ARCHITECTURES

IN SYSTOLIC PROCESSING, DATA TO BE PROCESSED

FLOWS THROUGH VARIOUS OPERATION STAGES AND

THEN FINALLY IT IS PUT IN THE MEMORY.

SUCH AN ARCHITECTURE CAN PROVIDE VERY HIGH

COMPUTING THROUGHPUT DUE TO REGULAR

DATAFLOW AND PIPELINE OPERATION.

IT MAY BE USEFUL IN DESIGNING SPECIAL PROCESSORS

FOR GRAPHIC, SIGNAL & IMAGE PROCESSING.
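The difference in memory traffic between the two styles can be sketched with a simple count. This is a toy model, not a description of any real machine: it assumes one load and one store per operation in the conventional case, and the functions `conventional` and `systolic` and the three example stages are hypothetical.

```python
def conventional(data, stages):
    """Each operation loads its operand from memory and stores its result back."""
    memory_refs = 0
    for fn in stages:
        memory_refs += 1      # load operand
        data = fn(data)
        memory_refs += 1      # store result
    return data, memory_refs

def systolic(data, stages):
    """Data is loaded once, flows through every stage, and is stored once."""
    memory_refs = 1           # single load at the input
    for fn in stages:
        data = fn(data)       # handed from stage to stage, no memory access
    memory_refs += 1          # single store at the output
    return data, memory_refs

stages = [lambda x: x + 1, lambda x: x * 2, lambda x: x - 3]
print(conventional(5, stages))
print(systolic(5, stages))
```

Both versions compute the same result; under these assumptions only the number of memory references differs (2 per stage versus 2 in total).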

PERFORMANCE OF

PARALLEL COMPUTERS

AN IMPORTANT MEASURE OF PARALLEL

ARCHITECTURE IS SPEEDUP.

LET n = NO. OF PROCESSORS; Ts = SINGLE PROC.

EXEC TIME; Tn = n PROC. EXEC. TIME,

THEN

SPEEDUP S = Ts/Tn

AMDAHL'S LAW

1967

BASED ON A VERY SIMPLE OBSERVATION.

A PROGRAM REQUIRING TOTAL TIME T FOR

SEQUENTIAL EXECUTION SHALL HAVE SOME

PART WHICH IS INHERENTLY SEQUENTIAL.

IN TERMS OF TOTAL TIME TAKEN TO SOLVE THE

PROBLEM, THIS FRACTION OF COMPUTING TIME

IS AN IMPORTANT PARAMETER.

AMDAHL'S LAW

LET f = SEQ. FRACTION FOR A GIVEN PROGRAM.

AMDAHL'S LAW STATES THAT THE SPEEDUP OF A PARALLEL COMPUTER IS LIMITED BY

S <= 1/[f + (1 - f)/n]

SO, IT SAYS THAT WHILE DESIGNING A PARALLEL COMP, IT IS BETTER TO CONNECT A SMALL NO OF EXTREMELY POWERFUL PROCS THAN A LARGE NO OF INEXPENSIVE PROCS.
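A quick numerical look at the bound shows why adding processors stops paying off. The sketch below evaluates S <= 1/[f + (1 - f)/n]; the function name `amdahl_speedup` and the value f = 0.1 are illustrative assumptions.

```python
def amdahl_speedup(f, n):
    """Upper bound on speedup for sequential fraction f on n processors."""
    if not 0.0 <= f <= 1.0 or n < 1:
        raise ValueError("need 0 <= f <= 1 and n >= 1")
    return 1.0 / (f + (1.0 - f) / n)

# With f = 0.1 the bound approaches 1/f = 10 however large n becomes.
for n in (1, 10, 100, 1000):
    print(n, round(amdahl_speedup(0.1, n), 2))
```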

AMDAHL'S LAW

CONSIDER TWO PARALLEL COMPS. Me AND Mi.

Me IS BUILT USING POWERFUL PROCS. CAPABLE

OF EXECUTING AT A SPEED OF M MEGAFLOPS.

THE COMP Mi IS BUILT USING CHEAP PROCS.

AND EACH PROC. OF Mi EXECUTES r.M

MEGAFLOPS, WHERE 0 < r < 1

IF THE MACHINE Mi ATTEMPTS A COMPUTATION WHOSE INHERENTLY SEQ. FRACTION f > r, THEN Mi WILL EXECUTE THE COMPUTATION MORE SLOWLY THAN A SINGLE PROC. OF Me.

AMDAHL'S LAW

PROOF:

LET W = TOTAL WORK; M = SPEED OF A PE OF Me (IN Mflops);

r.M = SPEED OF A PE OF Mi; f.W = SEQ WORK OF JOB;

T(Me) = TIME TAKEN BY Me FOR THE WORK W,

T(Mi) = TIME TAKEN BY Mi FOR THE WORK W, THEN

TIME TAKEN BY ANY COMP =

T = AMOUNT OF WORK/SPEED

AMDAHL'S LAW

T(Mi) = TIME FOR SEQ PART + TIME FOR PARALLEL PART

= ((f.W)/(r.M)) + [((1-f).W/n)/(r.M)] = (W/M).(f/r) IF n IS INFINITELY LARGE.

T(Me) = (W/M) [ASSUMING ONLY 1 PE]

SO IF f > r, THEN T(Mi) > T(Me)

AMDAHL'S LAW

THE THEOREM IMPLIES THAT A SEQ COMPONENT FRACTION ACCEPTABLE FOR THE MACHINE Me MAY NOT BE ACCEPTABLE FOR THE MACHINE Mi.

IT IS NOT GOOD TO HAVE LARGE PROCESSING

POWER THAT GOES TO WASTE. PROCS MUST

MAINTAIN SOME LEVEL OF EFFICIENCY.
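The comparison between a machine built from powerful processors (speed M) and one built from cheap processors (speed r.M) can be checked numerically. The sketch below uses T = work/speed for the sequential and parallel parts; all numbers (W, M, r, f and the processor count) are hypothetical and chosen so that f > r.

```python
def exec_time(work, speed, f, n):
    """Time = sequential part + parallel part spread over n processors."""
    return (f * work) / speed + ((1.0 - f) * work / n) / speed

W, M = 1000.0, 100.0   # hypothetical total work and speed of a powerful processor
r, f = 0.1, 0.2        # cheap processors run at r.M; sequential fraction f > r

t_powerful = exec_time(W, M, f, n=1)         # one powerful processor: W/M
t_cheap = exec_time(W, r * M, f, n=10**6)    # very many cheap ones: about (W/M).(f/r)

print(t_powerful, t_cheap)
```

With f > r, even a million cheap processors take about twice as long here as a single powerful one, because the sequential part alone costs (W/M).(f/r).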

AMDAHL'S LAW

RELATION BETWEEN EFFICIENCY e AND SEQ FRACTION f:

S <= 1/[f + (1 - f)/n]

EFFICIENCY e = S/n

SO, e <= 1/[f.n + 1 - f]

IT SAYS THAT FOR CONSTANT EFFICIENCY, THE

FRACTION OF SEQ COMP OF AN ALGO MUST BE

INVERSELY PROPORTIONAL TO THE NO OF

PROCESSORS.

THE IDEA OF USING LARGE NO OF PROCS MAY THUS BE

GOOD FOR ONLY THOSE APPLICATIONS FOR WHICH IT

IS KNOWN THAT THE ALGOS HAVE A VERY SMALL SEQ

FRACTION f.
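The inverse relation between f and n can be made concrete by solving the efficiency bound e = 1/[f.n + 1 - f] for f, which gives f = (1/e - 1)/(n - 1). The sketch below is an illustration only; the function names and the target efficiency of 0.5 are assumptions.

```python
def efficiency_bound(f, n):
    """Upper bound on efficiency e = S/n for sequential fraction f."""
    return 1.0 / (f * n + 1.0 - f)

def max_seq_fraction(e, n):
    """Largest sequential fraction that still allows efficiency e on n > 1 processors."""
    return (1.0 / e - 1.0) / (n - 1)

# To hold e = 0.5, f must shrink roughly as 1/n.
for n in (11, 101, 1001):
    f = max_seq_fraction(0.5, n)
    print(n, f, efficiency_bound(f, n))
```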

MINSKY'S CONJECTURE

1970

FOR A PARALLEL COMPUTER WITH n PROCS, THE SPEEDUP S SHALL BE PROPORTIONAL TO log2(n).

MINSKY'S CONJECTURE WAS VERY DISCOURAGING FOR THE PROPONENTS OF LARGE SCALE PARALLEL ARCHITECTURES.

FLYNN & HENNESSY (1980) THEN SHOWED THAT THE SPEEDUP OF AN n PROCESSOR PARALLEL SYSTEM IS LIMITED BY S <= [n/(log2(n))]
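The two estimates diverge quickly as n grows, which a short table makes visible. The sketch below assumes a proportionality constant of 1 for the logarithmic conjecture; the function names are hypothetical.

```python
import math

def minsky_speedup(n):
    """Speedup under the log2(n) conjecture, with the constant taken as 1."""
    return math.log2(n)

def flynn_hennessy_bound(n):
    """Upper bound n / log2(n) on speedup for n > 1 processors."""
    return n / math.log2(n)

for n in (4, 16, 64, 1024):
    print(n, minsky_speedup(n), round(flynn_hennessy_bound(n), 2))
```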

PARALLEL ALGORITHMS

IMP MEASURE OF THE PERFORMANCE OF ANY

ALGO IS ITS TIME AND SPACE COMPLEXITY.

THEY ARE SPECIFIED AS SOME FUNCTION OF THE

PROBLEM SIZE.

THEY OFTEN DEPEND UPON THE DATA

STRUCTURE USED.

SO, ANOTHER IMP MEASURE IS THE

PREPROCESSING TIME COMPLEXITY TO

GENERATE THE DESIRED DATA STRUCTURE.

PARALLEL ALGORITHMS

PARALLEL ALGOS ARE THE ALGOS TO BE RUN

ON PARALLEL MACHINE.

SO, COMPLEXITY OF COMM AMONGST

PROCESSORS ALSO BECOMES AN IMPORTANT

MEASURE.

SO, AN ALGO MAY FARE BADLY ON ONE

MACHINE AND MUCH BETTER ON THE OTHER.

PARALLEL ALGORITHMS

DUE TO THIS REASON, MAPPING OF THE ALGO

ON THE ARCHITECTURE IS AN IMP ACTIVITY IN

THE STUDY OF PARALLEL ALGOS.

SPEEDUP AND EFFICIENCY ARE ALSO IMP

PERFORMANCE MEASURES FOR A PARALLEL

ALGO WHEN MAPPED ON TO A GIVEN

ARCHITECTURE.

PARALLEL ALGORITHMS

A PARALLEL ALGO FOR A GIVEN PROBLEM

MAY BE DEVELOPED USING ONE OR MORE OF

THE FOLLOWING:

1. DETECT AND EXPLOIT THE INHERENT

PARALLELISM AVAILABLE IN THE EXISTING

SEQUENTIAL ALGORITHM

2. INDEPENDENTLY INVENT A NEW PARALLEL

ALGORITHM

3. ADAPT AN EXISTING PARALLEL ALGO THAT

SOLVES A SIMILAR PROBLEM.

DISTRIBUTED PROCESSING

PARALLEL PROCESSING DIFFERS FROM DISTRIBUTED

PROCESSING IN THAT (1) ITS PROCESSORS ARE

CLOSELY COUPLED AND (2)

COMMUNICATION FAILURES MATTER A LOT.

PROBLEMS MAY ARISE IN DISTRIBUTED PROCESSING

BECAUSE OF (1) TIME UNCERTAINTY DUE TO DIFFERING

TIME IN LOCAL CLOCKS, (2) INCOMPLETE INFO ABOUT

OTHER NODES IN THE SYSTEM, (3) DUPLICATE INFO

WHICH MAY NOT BE ALWAYS CONSISTENT.

PIPELINING PROCESSING

A PIPELINE CAN WORK WELL WHEN:

1. THE TIME TAKEN BY EACH STAGE IS NEARLY

THE SAME.

2. IT IS FED A STEADY STREAM OF JOBS,

OTHERWISE UTILIZATION WILL BE POOR.

3. IT HONOURS THE PRECEDENCE CONSTRAINTS

OF SUB-STEPS OF JOBS.

THE MOST IMP PROPERTY OF A PIPELINE IS THAT IT

ALLOWS PARALLEL EXECUTION OF JOBS

WHICH HAVE NO PARALLELISM WITHIN THE

INDIVIDUAL JOBS THEMSELVES.

PIPELINING PROCESSING

IN FACT, A JOB WHICH CAN BE BROKEN INTO A NO OF

SEQUENTIAL STEPS IS THE BASIS OF PIPELINE

PROCESSING.

THIS IS DONE BY INTRODUCING TEMPORAL

PARALLELISM WHICH MEANS EXECUTING DIFFERENT

STEPS OF DIFFERENT JOBS INSIDE THE PIPELINE.

THE PERFORMANCE IN TERMS OF THROUGHPUT IS

GUARANTEED IF THERE ARE ENOUGH JOBS TO BE

STREAMED THROUGH THE PIPELINE, ALTHOUGH AN

INDIVIDUAL JOB FINISHES WITH A DELAY EQUALLING

THE TOTAL DELAY OF ALL THE STAGES.
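Temporal parallelism is easiest to see in a space-time diagram. The sketch below assumes an ideal pipeline with equal stage times and no stalls, so job j occupies stage s at clock tick j + s; the function `space_time` and the sizes m = 4, n = 3 are illustrative.

```python
def space_time(m, n):
    """Return one row per stage showing which job occupies it at each clock tick."""
    total_ticks = m + n - 1          # time for m jobs to drain through n stages
    table = []
    for stage in range(n):
        row = []
        for tick in range(total_ticks):
            job = tick - stage       # job index in this stage at this tick
            row.append(str(job + 1) if 0 <= job < m else ".")
        table.append(row)
    return table

for row in space_time(m=4, n=3):
    print(" ".join(row))
```

Each column is one clock tick: once the pipe is full, every stage works on a different job, while any single job still needs n ticks from entry to exit.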

PIPELINING PROCESSING

THE FOURTH IMP THING IS THAT THE STAGES IN

THE PIPELINE ARE SPECIALIZED TO DO

PARTICULAR SUBFUNCTIONS, UNLIKE IN

CONVENTIONAL PARALLEL PROCESSORS WHERE

EQUIPMENT IS REPLICATED.

IT AMOUNTS TO SAYING THAT DUE TO

SPECIALIZATION, THE STAGE PROC COULD BE

DESIGNED WITH BETTER COST AND SPEED,

OPTIMISED FOR THE SPECIALISED FUNCTION OF

THE STAGE

PERFORMANCE MEASURES

OF PIPELINE

EFFICIENCY, SPEEDUP AND THROUGHPUT

EFFICIENCY: LET n BE THE LENGTH OF PIPE AND

m BE THE NO OF TASKS RUN ON THE PIPE, THEN

EFFICIENCY e CAN BE DEFINED AS

e = [(m.n)/((m+n-1).(n))]

WHEN n>>m, e TENDS TO m/n (A SMALL FRACTION)

WHEN n<<m, e TENDS TO 1

WHEN n = m, e IS APPROX 0.5 (m,n > 4)

PERFORMANCE MEASURES

OF PIPELINE

SPEEDUP = S = [((n.ts).m)/((m+n-1).ts)]

= [(m.n)/(n+m-1)]

WHEN n>>m, S=m (NO. OF TASKS RUN)

WHEN n<<m, S=n (NO OF STAGES)

WHEN n = m, S=n/2 (m,n > 4)

PERFORMANCE MEASURES

OF PIPELINE

THROUGHPUT = Th = [m/((n+m-1).ts)] = e/ts WHERE ts IS

TIME THAT ELAPSES AT 1 STAGE.

WHEN n>>m, Th = m/(n.ts)

WHEN n<<m, Th = 1/ts

WHEN n = m, Th = 1/(2.ts) (n,m > 4)

SO, SPEEDUP IS A FUNCTION OF n AND ts. FOR A GIVEN

TECHNOLOGY ts IS FIXED, SO AS LONG AS ONE IS FREE

TO CHOOSE n, THERE IS NO LIMIT ON THE SPEEDUP

OBTAINABLE FROM A PIPELINED MECHANISM.
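The three measures can be evaluated together to check the limiting cases. This is a sketch of the formulas for e, S and Th as given for an n-stage pipe running m tasks with stage time ts; the function name and the sample values are assumptions.

```python
def pipeline_measures(n, m, ts):
    """Return (efficiency, speedup, throughput) for n stages, m tasks, stage time ts."""
    ticks = m + n - 1                        # clock periods needed for m tasks
    efficiency = (m * n) / (ticks * n)
    speedup = (n * ts * m) / (ticks * ts)    # non-pipelined time / pipelined time
    throughput = m / (ticks * ts)
    return efficiency, speedup, throughput

# n << m: efficiency near 1, speedup near n, throughput near 1/ts.
print(pipeline_measures(n=5, m=1000, ts=2.0))
# n = m: efficiency about 0.5, speedup about n/2.
print(pipeline_measures(n=8, m=8, ts=2.0))
```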

OPTIMAL PIPE SEGMENTATION

INTO HOW MANY SUBFUNCTIONS SHOULD A

FUNCTION BE DIVIDED?

LET n = NO OF STAGES, T= TIME FOR NON-

PIPELINED IMPLEMENTATION, D = LATCH DELAY

AND c = COST OF EACH STAGE

STAGE COMPUTE TIME = T/n (SINCE T IS DIVIDED

EQUALLY FOR n STAGES)

PIPELINE COST= c.n + k WHERE k IS A CONSTANT

REFLECTING SOME COST OVERHEAD.

OPTIMAL PIPE SEGMENTATION

SPEED (TIME PER OUTPUT) = (T/n + D)

ONE OF THE IMPORTANT PERFORMANCE

MEASURES IS THE PRODUCT OF SPEED AND COST

DENOTED BY p.

p = [(T/n) + D].[c.n + k] = T.c + D.c.n + (k.T)/n + k.D

TO OBTAIN A VALUE OF n WHICH GIVES BEST

PERFORMANCE, WE DIFFERENTIATE p w r t n AND

EQUATE IT TO ZERO

dp/dn = D.c - (k.T)/n^2 = 0

n = SQRT [(k.T)/(D.c)]
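The closed-form optimum can be cross-checked against a brute-force search over integer stage counts. The sketch below uses p = (T/n + D).(c.n + k); the numeric values of T, D, c and k are hypothetical and chosen only to give a round answer.

```python
import math

def cost_time_product(n, T, D, c, k):
    """p = (time per output) * (pipeline cost) for n stages."""
    return (T / n + D) * (c * n + k)

def optimal_stages(T, D, c, k):
    """Stage count that zeroes dp/dn: n = sqrt(k.T / (D.c))."""
    return math.sqrt((k * T) / (D * c))

T, D, c, k = 100.0, 1.0, 2.0, 8.0            # hypothetical values
n_opt = optimal_stages(T, D, c, k)
best_integer_n = min(range(1, 201),
                     key=lambda n: cost_time_product(n, T, D, c, k))
print(n_opt, best_integer_n)
```

Since the second derivative 2.k.T/n^3 is positive for n > 0, the stationary point is a minimum of p, and the search agrees with it.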

PIPELINE CONTROL

IN A NON-PIPELINED SYSTEM, ONE INST IS FULLY EXECUTED

BEFORE THE NEXT ONE STARTS, THUS MATCHING THE ORDER

OF EXECUTION.

IN A PIPELINED SYSTEM, INST EXECUTION IS OVERLAPPED. SO,

IT CAN CAUSE PROBLEMS IF NOT CONSIDERED PROPERLY IN

THE DESIGN OF CONTROL.

DEPENDENCIES BETWEEN THE OVERLAPPED INSTS CAUSE HAZARDS.

CONTROL STRUCTURE PLAYS AN IMP ROLE IN THE

OPERATIONAL EFFICIENCY AND THROUGHPUT OF THE

MACHINE.

PIPELINE CONTROL

THERE ARE 2 TYPES OF CONTROL STRUCTURES

IMPLEMENTED ON COMMERCIAL SYSTEMS.

THE FIRST ONE IS CHARACTERISED BY A STREAMLINE FLOW

OF THE INSTS IN THE PIPE.

IN THIS, INSTS FOLLOW ONE AFTER ANOTHER SUCH THAT

THE COMPLETION ORDERING IS THE SAME AS THE ORDER OF

INITIATION.

THE SYSTEM IS CONCEIVED AS A SEQUENCE OF FUNCTIONAL

MODULES THROUGH WHICH THE INSTS FLOW ONE AFTER

ANOTHER WITH AN INTERLOCK BETWEEN THE ADJACENT

STAGES TO ALLOW THE TRANSFER OF DATA FROM ONE

STAGE TO ANOTHER.

PIPELINE CONTROL

THE INTERLOCK IS NECESSARY BECAUSE THE PIPE IS

ASYNCHRONOUS DUE TO VARIATIONS IN THE SPEEDS

OF DIFFERENT STAGES.

IN THESE SYSTEMS, THE BOTTLENECKS APPEAR

DYNAMICALLY AT ANY STAGE AND THE INPUT TO IT IS

HALTED TEMPORARILY.

THE SECOND TYPE OF CONTROL IS MORE FLEXIBLE,

POWERFUL BUT EXPENSIVE.

PIPELINE CONTROL

IN SUCH SYSTEMS, WHEN A STAGE HAS TO SUSPEND THE

FLOW OF A PARTICULAR INSTRUCTION, IT ALLOWS OTHER

INSTS TO PASS THROUGH THE STAGE RESULTING IN AN OUT-

OF-TURN EXECUTION OF THE INSTS.

THE CONTROL MECHANISM IS DESIGNED SUCH THAT EVEN

THOUGH THE INSTS ARE EXECUTED OUT-OF-TURN, THE

BEHAVIOUR OF THE PROGRAM IS SAME AS IF THEY WERE

EXECUTED IN THE ORIGINAL SEQUENCE.

SUCH CONTROL IS DESIRABLE IN A SYSTEM HAVING

MULTIPLE ARITHMETIC PIPELINES OPERATING IN PARALLEL.

PIPELINE HAZARDS

THE HARDWARE TECHNIQUE THAT DETECTS AND

RESOLVES HAZARDS IS CALLED INTERLOCK.

A HAZARD OCCURS WHENEVER AN OBJECT WITHIN

THE SYSTEM (REG, FLAG, MEM LOCATION) IS ACCESSED

OR MODIFIED BY 2 SEPARATE INSTS THAT ARE CLOSE

ENOUGH IN THE PROGRAM SUCH THAT THEY MAY BE

ACTIVE SIMULTANEOUSLY IN THE PIPELINE.

HAZARDS ARE OF 3 KINDS: RAW, WAR AND WAW

PIPELINE HAZARDS

ASSUME THAT AN INST j LOGICALLY FOLLOWS AN INST i.

RAW HAZARD: IT OCCURS BETWEEN 2 INSTS WHEN INST j

ATTEMPTS TO READ SOME OBJECT THAT IS BEING MODIFIED

BY INST i.

WAR HAZARD: IT OCCURS BETWEEN 2 INSTS WHEN THE INST j

ATTEMPTS TO WRITE ONTO SOME OBJECT THAT IS BEING

READ BY THE INST i.

WAW HAZARD: IT OCCURS WHEN THE INST j ATTEMPTS TO

WRITE ONTO SOME OBJECT THAT IS ALSO REQUIRED TO BE

MODIFIED BY THE INST i.

PIPELINE HAZARDS

THE DOMAIN (READ SET) OF AN INST k, DENOTED BY D_k, IS THE SET OF ALL OBJECTS WHOSE CONTENTS ARE ACCESSED BY THE INST k.

THE RANGE (WRITE SET) OF AN INST k, DENOTED BY R_k, IS THE SET OF ALL OBJECTS UPDATED BY THE INST k.

A HAZARD BETWEEN 2 INSTS i AND j (WHERE j FOLLOWS i) OCCURS WHENEVER ANY OF THE FOLLOWING HOLDS:

R_i * D_j <> { } (RAW)

D_i * R_j <> { } (WAR)

R_i * R_j <> { } (WAW),

WHERE * IS THE INTERSECTION OPERATION AND { } IS THE EMPTY SET.
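The three set conditions translate directly into set intersections. The sketch below is a minimal illustration using Python sets for the domain (read set) and range (write set) of two insts; the function name `hazards` and the register names in the example are hypothetical.

```python
def hazards(domain_i, range_i, domain_j, range_j):
    """Classify hazards between inst i and a later inst j from their read/write sets."""
    found = []
    if range_i & domain_j:       # j reads what i writes
        found.append("RAW")
    if domain_i & range_j:       # j writes what i reads
        found.append("WAR")
    if range_i & range_j:        # both write the same object
        found.append("WAW")
    return found

# Hypothetical insts:  i: R1 = R2 + R3    j: R2 = R1 * R4
print(hazards(domain_i={"R2", "R3"}, range_i={"R1"},
              domain_j={"R1", "R4"}, range_j={"R2"}))
```

An empty list means the two insts touch disjoint objects and can be active in the pipeline together without a hazard.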

HAZARD DETECTION & REMOVAL

TECHNIQUES USED FOR HAZARD DETECTION CAN BE

CLASSIFIED INTO 2 CLASSES:

1. CENTRALIZE ALL THE HAZARD DETECTION IN ONE STAGE (USUALLY IU) AND COMPARE THE DOMAIN AND RANGE SETS WITH THOSE OF ALL THE INSTS INSIDE THE PIPELINE.

2. ALLOW THE INSTS TO TRAVEL THROUGH THE PIPELINE UNTIL THE OBJECT EITHER FROM THE DOMAIN OR RANGE IS REQUIRED BY THE INST. AT THIS POINT, A CHECK IS MADE FOR A POTENTIAL HAZARD WITH ANY OTHER INST INSIDE THE PIPELINE.

HAZARD DETECTION & REMOVAL

FIRST APPROACH IS SIMPLE BUT SUSPENDS THE

INST FLOW IN THE IU ITSELF, IF THE INST

FETCHED IS IN HAZARD WITH THOSE INSIDE THE

PIPELINE.

THE SECOND APPROACH IS MORE FLEXIBLE BUT

THE HARDWARE REQUIRED GROWS AS A

SQUARE OF THE NO OF STAGES.

HAZARD DETECTION & REMOVAL

THERE ARE 2 APPROACHES FOR HAZARD REMOVAL:

1. SUSPEND THE PIPELINE INITIATION AT THE POINT OF HAZARD. THUS, IF AN INST j DISCOVERS THAT THERE IS A HAZARD WITH THE PREVIOUSLY INITIATED INST i, THEN ALL THE INSTS j+1, j+2, ... ARE STOPPED IN THEIR TRACKS TILL THE INST i HAS PASSED THE POINT OF HAZARD.

2. SUSPEND j BUT ALLOW THE INSTS j+1, j+2, ... TO FLOW.

HAZARD DETECTION & REMOVAL

THE FIRST APPROACH IS SIMPLE BUT PENALIZES ALL THE

INSTS FOLLOWING j.

SECOND APPROACH IS EXPENSIVE.

IF THE PIPELINE STAGES HAVE ADDITIONAL BUFFERS BESIDES

A STAGING LATCH, THEN IT IS POSSIBLE TO SUSPEND AN INST

BECAUSE OF HAZARD.

AT EACH POINT IN THE PIPELINE, WHERE DATA IS TO BE

ACCESSED AS AN INPUT TO SOME STAGE AND THERE IS A RAW

HAZARD, ONE CAN LOAD ONE OF THE STAGING LATCHES NOT

WITH THE DATA BUT WITH THE ID OF THE STAGE THAT WILL PRODUCE

IT.

HAZARD DETECTION & REMOVAL

THE WAITING INST THEN IS FROZEN AT THIS STAGE UNTIL

THE DATA IS AVAILABLE.

SINCE THE STAGE HAS MULTIPLE STAGING LATCHES IT CAN

ALLOW OTHER INSTS TO PASS THROUGH IT WHILE THE RAW

DEPENDENT ONE IS FROZEN.

ONE CAN INCLUDE LOGIC IN THE STAGE TO FORWARD THE

DATA WHICH WAS IN RAW HAZARD TO THE WAITING STAGE.

THIS FORM OF CONTROL ALLOWS HAZARD RESOLUTION

WITH THE MINIMUM PENALTY TO OTHER INSTS.

HAZARD DETECTION & REMOVAL

THIS TECHNIQUE IS KNOWN BY THE NAME INTERNAL

FORWARDING SINCE THE STAGES ARE DESIGNED TO

CARRY OUT AUTOMATIC ROUTING OF THE DATA TO

THE REQUIRED PLACE USING IDENTIFICATION CODES

(IDs).

IN FACT, MANY OF THE DATA DEPENDENT

COMPUTATIONS ARE CHAINED BY MEANS OF ID TAGS

SO THAT UNNECESSARY ROUTING IS ALSO AVOIDED.
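The idea of loading a latch with a producer's ID instead of data can be sketched in a few lines. This is a toy software model, not a description of any particular machine: the class `Latch`, the function `forward` and the tag `"ADD1"` are hypothetical names introduced for illustration.

```python
class Latch:
    """Staging latch holding either a value or the ID tag of the stage that will produce it."""
    def __init__(self, value=None, tag=None):
        self.value = value
        self.tag = tag

    def ready(self):
        return self.tag is None

def forward(latches, producer_id, result):
    """Broadcast a result: every latch waiting on producer_id captures it."""
    for latch in latches:
        if latch.tag == producer_id:
            latch.value = result
            latch.tag = None

# Inst j needs two operands; one is in RAW hazard with the stage tagged "ADD1".
operands = [Latch(value=7), Latch(tag="ADD1")]
frozen = not all(l.ready() for l in operands)   # j is frozen while a tag is pending
forward(operands, "ADD1", 35)                   # ADD1 finishes and forwards its result
released = all(l.ready() for l in operands)     # j can now proceed
total = sum(l.value for l in operands)
print(frozen, released, total)
```

The waiting inst never re-reads the register or memory location; the result is routed straight to the latch that holds the matching ID.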

MULTIPROCESSOR SYSTEMS

IT IS A COMPUTER SYSTEM COMPRISING TWO OR MORE

PROCESSORS.

AN INTERCONNECTION NETWORK LINKS THESE

PROCESSORS.

THE MAIN OBJECTIVE IS TO ENHANCE THE PERFORMANCE

BY MEANS OF PARALLEL PROCESSING.

IT FALLS UNDER THE MIMD ARCHITECTURE.

BESIDES HIGH PERFORMANCE, IT PROVIDES THE

FOLLOWING BENEFITS: FAULT TOLERANCE & GRACEFUL

DEGRADATION; SCALABILITY & MODULAR GROWTH

CLASSIFICATION OF MULTI-PROCESSORS

MULTI-PROCESSOR ARCHITECTURE:

TIGHTLY COUPLED: UMA, NUMA

LOOSELY COUPLED: NORMA (NO REMOTE MEMORY ACCESS), I.E. DISTRIBUTED MEM MULTI-PROCESSOR SYSTEM

IN A TIGHTLY COUPLED MULTI-PROCESSOR, MULTIPLE PROCS SHARE INFO VIA COMMON MEM. HENCE, IT IS ALSO KNOWN AS A SHARED MEM MULTI-PROCESSOR SYSTEM. BESIDES GLOBAL MEM, EACH PROC CAN ALSO HAVE LOCAL MEM DEDICATED TO IT.

SYMMETRIC

MULTIPROCESSOR

IN UMA SYSTEM, THE ACCESS TIME FOR MEM IS EQUAL

FOR ALL THE PROCESSORS.

AN SMP SYSTEM IS A UMA SYSTEM WITH IDENTICAL

PROCESSORS, EQUALLY CAPABLE IN PERFORMING

SIMILAR FUNCTIONS IN AN IDENTICAL MANNER.

ALL THE PROCS. HAVE EQUAL ACCESS TIME FOR THE MEM

AND I/O RESOURCES.

FOR THE OS, ALL PROCS. ARE SIMILAR AND ANY PROC.

CAN EXECUTE IT.

THE TERMS UMA AND SMP ARE USED INTERCHANGEABLY.
