Metastable States in Asynchronous Digital Systems: Avoidable or Unavoidable?


Reinhard Männer, Physics Institute, University of Heidelberg, Heidelberg, Germany

Abstract

The synchronization of asynchronous signals can lead to metastable behavior and malfunction of digital circuits. It is believed, but not proved, that metastability cannot be avoided in principle. Confusion exists about its practical importance. This paper shows that metastable behavior can be avoided in principle, but not in practice, by using quantum synchronizers, and that conventional synchronizers unavoidably show metastable behavior in principle, but not in practice, if properly designed.

Index terms: metastability, synchronizer, arbiter, asynchronous inputs, quantum mechanics, macroscopic quantum effects, SQUID, macroscopic quantum tunneling

A previous version of this paper has been published in Microelectron. Reliab. 28, 2 (1988) 295-307; republished by permission of Pergamon Journals Ltd.

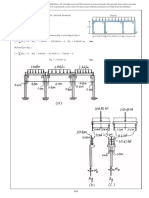

1. The problem

Digital systems can be designed to operate synchronously or asynchronously. In totally synchronous systems, the state of all signals is important only at discrete moments in time, which are defined by a central clock (fig. 1). Between clock edges, signal transitions of any kind may happen. The only requirement is that all signals are stable at the sampling time (in a practical system, stable means, e.g., that all setup times of the electronic devices are met). The state space of a synchronous system is therefore given by all combinations of the individual signal states and is finite. Totally synchronous devices are therefore called state machines. They make transitions only between a limited number of well-defined states. Because of this deterministic behavior, most digital circuits are designed in this way, e.g., the main part of all microprocessors.

Fig. 1: Synchronous sampling of signals (central clock signal versus time t, with the sample times marked)

In totally asynchronous systems, a signal transition immediately triggers other state transitions. If it is guaranteed that only one signal at a time changes its state (the so-called fundamental requirement for asynchronous circuits), the system behaves predictably. "At a time" here means within the resolution time of the electronic devices used. If more than one signal changes its state during this resolution time, the system behavior is undefined. In complex asynchronous systems it is very difficult to fulfill the fundamental requirement. To do so, one would have to take into account all instants in time at which transitions of input signals are allowed (an infinite number, because time is continuous), all variations in the timing behavior of the electronic components used (transistors, capacitors, resistors etc.), and all signal delays during transmission along cables, buses, on PC boards, and within integrated circuits. Complex asynchronous digital circuits are therefore avoided wherever possible.

Unfortunately, it is not always possible to operate totally synchronously. The typical example is a synchronous system which has to respond to external events, e.g., a computer to an interrupt. Because the external event is generally generated independently of, and therefore asynchronously to, the computer's system clock, it has to be synchronized with that clock for proper operation. This is usually done with a D-flip-flop whose data input is connected to the asynchronous event and whose clock input is driven by the system clock (fig. 2).

Fig. 2: D-type flip-flop (asynchronous input at D, system clock at the clock input, synchronous output at Q)

From the point of view of digital electronics, a D-flip-flop is a bistable device with only two possible output states (high or low), which can be switched at discrete instants in time, the clock sample times, according to the input state. A more detailed description takes into account the analog features that make up this pseudo-digital behavior. Basically, the flip-flop consists of two coupled amplifiers with positive feedback (fig. 3), whose output function has two minima but is continuous (fig. 4). These minima are the two stable states of the device, corresponding to the digital high and low output levels. The D-flip-flop can be switched between the two states by disturbing the electric balance sufficiently. If, however, the D-flip-flop is triggered exactly when its input state changes (critical triggering), it may enter a metastable state in which its output signal is neither high nor low.

Fig. 3: Basic analog model of a flip-flop (inputs a and b, outputs 1 and 2)

Fig. 4: Output states of the flip-flop (potential energy versus output voltage, with the low, metastable and high states marked)

Because this situation is very improbable, it is usually neglected. To consider its consequences, two cases can be distinguished: the output is used as an input to a single gate, or the output is split and fed to more than one gate input simultaneously.

In the first case (fig. 5), the signal may arbitrarily be interpreted as high or low by the following gate. This does not lead to a malfunction of the system. The asynchronous signal is either recognized immediately or ignored until the next clock cycle, when critical triggering conditions no longer exist. It is also possible that the undefined state is passed on to further circuits. Even then, no further problems arise as long as the signal is fed into only a single successor. Because of small differences in the electrical characteristics, one of the following gates will interpret the undefined state as high or low and thereby decide whether the asynchronous signal is accepted immediately or after a delay. In such a situation, it would not have been necessary to use a synchronizer at all.

However, there is a chance that small electrical disturbances, like noise, cause the decision-making gate to switch between its output states at an arbitrary time (fig. 6). This would generate a new asynchronous signal within the system, which may or may not cause problems according to the discussion given here. Even more serious is the fact that the width of the generated signal also depends on noise. The circuit may therefore generate asynchronous, arbitrarily short signals, i.e. glitches, which not only can cause malfunctions but are also very hard to detect during debugging.

Fig. 5: Synchronizer output fed into a single gate (the stable or metastable Q output is combined with other signals)

Fig. 6: Generation of new asynchronous signals and glitches (a metastable Q output plus noise yields an asynchronous output and/or glitches)

If the undefined output signal of the synchronizer is fed to more than one gate simultaneously (fig. 7), some of them may interpret it as high, some as low, and still others may forward it as undefined. In this way, the digital system enters states not foreseen during design, which may result in all kinds of errors. A typical example is a bus arbiter used to synchronize asynchronous bus requests. If one gate at the output of the synchronizing flip-flop is used to forward the bus grant signal and another gate, also driven by this output, signals bus mastership, a situation may arise in which the arbiter both forwards the grant and signals bus mastership. In this case, two masters may use the bus at the same time.

Fig. 7: Synchronizer output fed into multiple gates (the gate outputs may be stable and inverse to each other, stable but at the same level, or metastable)

It should be noted that there is no simple way out of the problem. One wrong solution would be to allow the synchronizing flip-flop to enter a metastable state occasionally and to try to fix the situation with a second flip-flop. This cannot work in principle, because the output of the first synchronizer stage might set up critical triggering conditions for the second one. Another wrong solution is a synchronizer consisting of a flip-flop and an analog comparator, which checks the output of the flip-flop for correct digital levels (fig. 8). The comparator output could be used to disable succeeding circuits as long as the synchronizer is in a metastable state, but it is a new asynchronous signal which would again have to be synchronized.

Fig. 8: Wrong solution (a comparator checks the Q output against the minimum high and maximum low levels and signals "synch output valid" alongside the synchronous output)

If, however, the requirements on the synchronizer are lowered, it is very easy to avoid malfunctions of the circuits even in the presence of metastable states of flip-flops. Recently, Chapiro [1] proposed using a metastability detector as shown in figure 8 to stop the system clock as long as the output of the synchronizer is undefined (fig. 9). An undefined output is therefore never sampled and cannot cause the problems described above. Here, the asynchronous input signal is synchronized to a non-synchronous clock. This, however, may cause new problems. If the synchronizer is in a metastable state for a very long time, the system clock is stopped for equally long. This is usually neither desirable nor possible, e.g., with commercial microprocessors.

Fig. 9: Avoiding errors by a non-synchronous clock (central clock, asynchronous input, synchronous output and "output invalid" signal versus time t)

A simpler solution, which stops only the synchronizer and not the whole system for an arbitrary time, is shown in figure 10. There, a defined output level is assigned to the metastable state, which can last arbitrarily long, by shifting the upper margin of the logic low to a higher voltage. An undefined output level, which could cause malfunctions in succeeding circuits, is therefore created only during an actual transition to or from the logic high state, i.e. for a limited time. By technological means, this time can be kept shorter than a cycle of the system clock.

Fig. 10: Reassignment of logic levels (potential energy versus output voltage, with the low, metastable and high states marked)

Practical systems are not allowed to stop for an arbitrary time, nor can asynchronous inputs be ignored for arbitrarily long. Therefore, the problem remains of synchronizing asynchronous inputs to a synchronous clock within a few clock cycles, i.e. within a limited time. Previous approaches to this problem are discussed below. This is followed by a proposal for a quantum mechanical synchronizer, which solves the basic problem but, unfortunately, introduces new ones.

2. Previous work

The existence of metastable states in synchronizers has been known for a long time. However, as recently as thirty years ago, attempts were still made to design synchronizing elements without metastable behavior. A short time later, Yoeli and Rinon already suspected that these states are unavoidable in principle and suggested designing systems that explicitly take care of undefined states using ternary logic [2]. This approach, however, proved not to be feasible for practical system design. Other authors accepted the metastable behavior of the synchronizing element and only tried to avoid its consequences. Friedman suggested normalizing the undefined logic output signal of the synchronizer in amplitude or width to get back valid digital signals [3]. As recently as ten years ago, many papers were published on the problem of synchronizing asynchronous inputs [3,4,5] or of arbitrating between asynchronous signals [6,7]. Four years ago, Barros and Johnson proved that all previously proposed synchronizers, i.e. D-flip-flops, latches, inertial delays etc., show metastable behavior [9] and are equivalent in this respect. Since then it has been generally believed that metastable states are unavoidable [10]. This is, however, still not proven.

Meanwhile, many authors have reported metastable behavior in practical circuits. Chaney and Rosenberger showed that a synchronizer which enters a metastable state will leave it with a certain probability. The probability of still being metastable decreases roughly exponentially with time [11]. From such measurements, error rates have been computed under the assumption of typical decision times of 20 to 40 ns (for TTL logic). With conventional devices, the mean time between failures (MTBF) is in the range of some seconds to $10^{32}$ s [12]. Kleeman and Cantoni [13] generalized the theoretical analysis and showed that a large number of input functions can lead to metastable states, provided that, in their terminology, the set of input functions is connected. This is equivalent to the condition a) that the amplitude of the asynchronous signal can have any value between the two digital levels and/or b) that the time between assertion of the asynchronous signal and the next clock edge can be arbitrary.

If a synchronizer enters a metastable state, it will switch back to a stable, defined one within a certain time. The output signal of a synchronizer must therefore be used only after a delay. This delay has to be long enough that the probability of the synchronizer still being in the metastable state is negligible. This is not always the case in high-speed systems, where long decision times degrade the system performance. Errors in high-performance systems have been reported [14]. It is, however, possible to design a system to be fault tolerant with respect to synchronizer errors. One example is the multiprocessor system Heidelberg Polyp [15], where arbitration errors in a multiple-bus interconnection network can be detected and corrected by retries [16].
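The roughly exponential decay of the metastability probability can be illustrated with a small numerical sketch. It assumes a pure exponential law P(t) = exp(-t/tau); the function names and the time constant `TAU_NS` are hypothetical and chosen only for illustration, not taken from the measurements cited above.

```python
import math

def p_still_metastable(t_ns: float, tau_ns: float) -> float:
    """Probability of still being metastable t_ns after critical triggering,
    assuming a pure exponential decay with time constant tau_ns."""
    return math.exp(-t_ns / tau_ns)

def required_delay_ns(p_target: float, tau_ns: float) -> float:
    """Delay after which the residual probability has dropped to p_target
    under the same exponential assumption."""
    return tau_ns * math.log(1.0 / p_target)

TAU_NS = 1.5  # hypothetical time constant, not a value from the paper

for delay in (20.0, 40.0):
    print(f"{delay:4.0f} ns -> P = {p_still_metastable(delay, TAU_NS):.2e}")
print(f"delay for P = 1e-12: {required_delay_ns(1e-12, TAU_NS):.1f} ns")
```

Under this assumption, every additional time constant of waiting reduces the residual probability by a factor of e, which is why a modest extra delay can make synchronizer errors negligible.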

3. Principal unavoidability

Metastability is a very general phenomenon. It is found in most cases where a system has more than one stable state. Examples of such systems are a D-flip-flop (two stable states) and a ball on uneven ground (number of stable states = number of hollows). It is assumed here that the state of the system can be described by a set of continuous (real) variables. In the case of the flip-flop, this is a single variable, the voltage of the Q output. In the case of the ball, these are the x- and y-coordinates, which correspond to a height z given by the shape of the ground.

If a system is set up by choosing random state variables, it usually will not remain in the selected state. In most cases, the feedback circuit of the flip-flop increases or decreases the output voltage as a function of the current state. The ball is accelerated towards a hollow by a force corresponding to the gradient of the gravitational potential. Such states are therefore called unstable. If, in contrast, the system is set up in a state where, e.g., all feedback or gravitational forces are zero, it stays there. However, a small disturbance might move it into a neighboring state. If the system then tends to move back to the original state, this state is called stable. If it tends to move away from the original state, it will eventually reach another, stable state. But this process may require a long time if the original disturbance was small. So such a state may, in spite of its instability, appear to be stable and is therefore called metastable.

Devices like synchronizers, arbiters etc. are used to take decisions. A synchronizer has to tell whether or not a signal was asserted at a certain instant in time. An arbiter has to decide which one of at least two requests should be granted. Such devices therefore must have a number of stable states corresponding to the number of possible decisions. The state space of such systems, which is defined as all possible combinations of the state variables, can therefore be divided into at least two regions. If the system is within one of these regions, its current state is attracted towards a stable state belonging to this region. Between attracting regions, there are "neutral" regions, which have no influence on the state of the system (fig. 11). These regions correspond to metastable states. In the simplest case, the neutral regions are only the infinitely "thin" boundaries between attracting regions. In any case, two attracting regions must have some kind of boundary between them for topological reasons.
In this sense, all systems having at least two stable states must also have at least one metastable state.

Fig. 11: Two-dimensional attracting and neutral regions on a billiard table

If the system is in a metastable state, it is not attracted to any of the stable states and therefore can no longer be used to select one of them. However, even a synchronizer having metastable states can be a useful device, because it is possible in principle to avoid entering metastable regions. For that, one has to ensure that state transitions are made only from one attracting region to another and not to the boundary between them or to any neutral region. In the case of the flip-flop, enough charge has to be fed into the circuit to activate the feedback mechanism in the opposite direction. Such requirements are restrictions on the values allowed for the input function. Unfortunately, such restrictions are not possible in most cases. One example is again the synchronizer. Because the signals to be synchronized are, by definition, not correlated with the synchronizer's clock, they may be asserted at any time t. And because, again by definition, a synchronizer should make a transition between $t_n$ and $t_{n+1}$ for input signals asserted before $t_n$ and should ignore input signals asserted later, there must be a critical timing between input signal and clock which brings the system into a metastable state. Under these circumstances, all systems with more than one stable state will unavoidably show metastable behavior.
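The argument of this section can be made concrete with a toy model. The sketch below assumes a one-dimensional bistable system dx/dt = x - x^3, with stable states at x = +1 and x = -1 and the boundary between the attracting regions at x = 0. The model, the function name `settling_time` and all parameter values are illustrative assumptions, not a description of a real flip-flop.

```python
def settling_time(x0: float, dt: float = 1e-3, threshold: float = 0.9,
                  t_max: float = 100.0) -> float:
    """Integrate dx/dt = x - x**3 with explicit Euler steps and return the
    time at which |x| first reaches threshold (inf if it never does
    within t_max)."""
    x, t = x0, 0.0
    while t < t_max:
        if abs(x) >= threshold:
            return t
        x += dt * (x - x**3)  # feedback pushes x away from the boundary x = 0
        t += dt
    return float("inf")

# The closer the initial state lies to the boundary, the longer the decision takes.
for x0 in (1e-2, 1e-4, 1e-8, 0.0):
    print(f"x0 = {x0:g}: settles after t = {settling_time(x0):.2f}")
```

In this model the settling time grows only logarithmically as the initial offset shrinks, but it has no upper bound: a start exactly on the boundary never settles, which is the toy-model counterpart of critical triggering.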

4. Principal avoidability

The basic presupposition for the occurrence of metastable behavior in multistable devices is the existence of continuous functions for input and output signals. Continuity is necessary for the output signal, because noncontinuity implies the existence of at least two discrete states to which digital states could be assigned. Continuity is necessary for the input signals, because noncontinuity could be used to design the device in a way that avoids critical triggering conditions. If both conditions are fulfilled, all devices will show metastable behavior. Because synchronizers, arbiters etc. are devices that map a continuous input function onto a discrete set of output states, the continuity of the input function cannot be avoided. Otherwise, these devices would not be necessary. The only way to avoid metastable behavior is therefore to look for devices with some kind of inherent noncontinuity, i.e. with discrete internal states. This is possible by exploiting quantum effects, which are discrete by their very nature.


Consider a very simple quantum system, a single electron. It has a spin of $\hbar/2$, which can to some degree be envisioned as an angular momentum. This spin is accompanied by a magnetic moment. According to Heisenberg's uncertainty principle, the spin vector cannot be measured completely, but only its projection onto an arbitrary spatial axis. This can be done (as in the Stern-Gerlach experiment [17]) by letting the electron pass through an inhomogeneous magnetic field. Such a field acts on the electron's magnetic moment and creates a force on the electron, where the direction of the force depends on the orientation of the electron's spin with respect to the measuring axis. After passing through the apparatus, the electron can be detected, e.g., on a photographic plate, in only two different positions, corresponding to the two possible spin orientations. Quantum mechanics now predicts, and this has been confirmed to a very high degree, that no other outcome of the measurement is possible. Even if the electron's spin was not aligned with the measuring axis prior to the measurement, the measurement process itself forces the spin of the electron to point either up or down [18]. The two spin orientations can therefore be interpreted as a high or low state in a digital circuit. The important point here is that after the measurement process, whatever time it takes, the system will be in one of two discrete states with certainty [19]. If the measurement is therefore carried out within one clock cycle of a synchronous digital system, the result of the measurement is guaranteed to be unique and stable regardless of the situation the electron was in prior to measurement. Without influencing the electron in any way, a measurement of its spin component in the direction of the magnetic field would only give an arbitrary decision. This would eliminate errors due to metastability, but would not allow signals to be synchronized.
To use this device as a synchronizer, there must be a way to influence the outcome of the measurement. This can be done, e.g., by placing the electron in a homogeneous magnetic field prior to the measurement. In this way, a torque is created that rotates the spin vector around the axis of the magnetic field with a characteristic frequency. The up or down state of the electron's spin can now be influenced by applying a second, much weaker magnetic field whose direction rotates with the same frequency in a plane perpendicular to the first one [20]. In doing so, a classical magnetic moment would be turned continuously and deterministically. By repeatedly applying the second field for an appropriate time (corresponding to a 180° pulse), the direction of the magnetic moment could be switched back and forth as required. For a quantum mechanical system such as the electron, only the average values of the spin component behave in this way. For a single measurement, only the probability of obtaining an up or down result is changed. But this uncertainty does not impair the correct operation of the device. A synchronizer is not required to take the "right" decision all the time; after all, there is no "right" decision at the instant when the asynchronous signal changes its logic state. It is, however, important to ensure that the system takes some decision within the basic clock cycle of the synchronous circuit. By applying a 180° pulse each time the asynchronous signal changes its logic state, the probability of measuring the desired outcome is switched. This means that eliminating metastable states of the synchronizer is paid for by possibly delaying the required output. This degrades the system performance, but avoids fatal logic errors. In this sense, metastable states are in principle avoidable by exploiting quantum effects.
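The behavior described in this section can be sketched numerically. The sketch relies on the standard spin-1/2 result that a spin tilted by an angle theta against the measuring axis is found "up" with probability cos^2(theta/2). The function names and the use of a seeded pseudo-random generator to stand in for the measurement are illustrative assumptions.

```python
import math
import random

def p_up(theta: float) -> float:
    """Probability of measuring 'up' for a spin-1/2 whose direction makes
    the angle theta (radians) with the measuring axis."""
    return math.cos(theta / 2.0) ** 2

def apply_pulse(theta: float, pulse_angle: float = math.pi) -> float:
    """Rotate the spin direction by pulse_angle (a 180 degree pulse by default)."""
    return theta + pulse_angle

def measure(theta: float, rng: random.Random) -> str:
    """A single measurement: the outcome is always one of two discrete values."""
    return "up" if rng.random() < p_up(theta) else "down"

rng = random.Random(0)  # seeded only to make the sketch reproducible
theta = 0.3             # hypothetical initial tilt, in radians
print("P(up) before pulse:", round(p_up(theta), 4))
print("P(up) after pulse: ", round(p_up(apply_pulse(theta)), 4))
print("outcomes seen:", sorted({measure(theta, rng) for _ in range(1000)}))
```

The pulse exchanges the two probabilities, while every single measurement still yields one of exactly two results, however the spin was oriented beforehand.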

5. Practical unavoidability

Unfortunately, practical restrictions make it infeasible to exploit the ideal features of a quantum synchronizer completely. The problem is that the quantum mechanical measuring process consists of transforming a quantum mechanical quantity (in the example given above, the electron's spin with values of $\pm\hbar/2$) into a macroscopic quantity, e.g., a digital high or low state. In the Stern-Gerlach experiment, the spin is coupled to a continuous spatial variable, the separation of the electron's position after measurement. But this position depends not only on the electron's spin and the gradient of the inhomogeneous magnetic field, but also on the initial conditions of the electron before measurement, like position and linear momentum. Neither value can be known exactly, partly for quantum mechanical reasons (uncertainty principle) and partly for classical ones (the values are given by a probability distribution). This uncertainty is projected onto the final state and smooths out the discrete set of output states.

Additionally, it is infeasible to use a simple quantum system like a single electron as a decision-making system under practical circumstances. Instead, a macroscopic system has to be used, i.e. a system consisting of a large number of particles. If the individual particles have a discrete set of states, the system as a whole also has discrete states. However, because each particle is in each one of its states only with a certain probability < 1, the system as a whole can be in all possible combinations of these states with different probabilities. If, e.g., the number of particles is assumed to be $10^3$ (roughly the number of electrons representing one bit in a dynamic memory chip) and the number of output states of each particle to be 2, the system as a whole has ca. $2^{1000} \approx 10^{300}$ output states. In the Stern-Gerlach experiment, each one of the two possible states of the electron was smoothed spatially according to the distribution of initial values. In the macroscopic system consisting of $10^3$ particles, each one of the $10^{300}$ states is smoothed. In practice, a quasi-continuous output function exists, which again gives rise to metastable behavior.

This problem could be solved by using a synchronizer that depends on macroscopic quantum effects, e.g., a system which has, for quantum mechanical reasons, a discrete set of macroscopic states. One would hope that a measurement process forces such a system to take one of these states within the measuring time, as is the case with a single electron. Such a system is, e.g., a superconducting quantum interference device (SQUID [21]). A SQUID is a superconducting loop with at least one very narrow notch, a "weak link". A current in the loop creates a magnetic flux through the loop. This flux is quantized, i.e. it can take only integer multiples of the flux quantum $\Phi_0$. The weak link is made so narrow that the critical current density, the value where superconductivity ceases, corresponds roughly to one flux quantum. If the current in the loop is increased slowly, the SQUID switches from flux state $n\Phi_0$ to $(n+1)\Phi_0$ as soon as the critical current density $I_{up}(n \to n+1)$ is reached (fig. 12). Decreasing the current again switches the SQUID back, but at the lower current $I_{down}(n+1 \to n)$, giving rise to bistable behavior. In the following discussion, n is set to zero and only the first bistable region is considered. The transition between the two stable states follows a classical equation of motion [23]. According to this equation, the transition time depends on the normal resistance and the intrinsic capacitance of the weak link. Both values can be adjusted by choosing appropriate technological parameters, so that the transition is completed within one cycle of the system's clock. Metastability problems are thereby avoided, because quantum mechanics ensures the existence of two macroscopically discrete states (after each measurement), and because classical physics governs the transition between them and ensures definite transition times.

Fig. 12: Bistable behavior of SQUIDs (magnetic flux in units of $\Phi_0$ versus loop current, with $I_{down}$ and $I_{up}$ marked)

However, quantum mechanics predicts another effect that prevents the use of a SQUID as a synchronizer. The continuous input variable of the SQUID is the loop current, which is used to switch it between its output states. Below $I_{down}$ and above $I_{up}$, the flux state is uniquely defined. However, the SQUID has to pass through the region between $I_{down}$ and $I_{up}$ during the switching process. There, it can switch to both flux states. A classical system would take one of them and keep it. A quantum mechanical system like the SQUID, however, oscillates, in a sense, between both states. This effect is well known from other quantum mechanical systems with certain symmetry features, e.g., an NH$_3$ molecule. There, the nitrogen atom can equally well be above or below the plane spanned by the three hydrogen atoms. If the system is not disturbed, e.g., by measurements, the probability of being in one or the other state is given by $P = \cos^2(\omega t)$, with some system-dependent constant $\omega$. This is an example of quantum mechanical tunneling.

In analogy to this, a SQUID shows macroscopic tunnel effects. Each time a measurement of the magnetic flux is made, the system is forced to take on one of the two flux states. If the next measurement is made after a time t that is very short compared to $\omega^{-1}$, then $P \approx (1 - \omega^2 t^2/2)^2 \approx 1 - (\omega t)^2$, i.e. the probability that the SQUID switches to the other state is also low [24]. One should note that a strictly continuous measuring process is not possible, because a measurement is always carried out by an interaction of discrete entities, e.g., electrons, photons or other particles. Therefore, even with a quasi-continuous measuring process, a finite probability remains that the system switches its state between two such interactions. This can happen at any time, generating a new asynchronous signal at the output of such a synchronizer. The situation is therefore comparable to the one shown previously in fig. 6, where a gate produced asynchronous signals and glitches from the noisy output of a metastable flip-flop. So the SQUID again can lead to metastable behavior in succeeding circuits if it is triggered in the critical region between $I_{down}$ and $I_{up}$.

The question remains whether there is a mechanism to stabilize the SQUID in one of its states, i.e. to prevent macroscopic quantum tunneling. If so, no asynchronous signals would be generated and the SQUID would operate as an ideal synchronizer. Unfortunately, this is not completely clear today. However, Caldeira and Leggett [25] investigated a system very similar to the SQUID, a superconducting Josephson contact of very small size (capacitance about $10^{-13}$ F) at very low temperature (about $10^{-2}$ K) [26]. If nearly isolated from external circuits, this system has many identical stable minima, just as the SQUID has two. If the contact is undamped, the system can be prepared in any of these minima by choosing an appropriate external current across the contact. An undisturbed contact again executes coherent oscillations through all the equivalent minima. If, however, the contact is damped, e.g., by a bypass resistor, these oscillations decay with an exponentially decreasing amplitude. Above a critical damping, the system eventually stays stably in one of the minima. Increasing the damping instantly above this critical value, e.g., by closing a switch, can therefore be regarded as a quantum mechanical measuring process. In this description, the reduction of the system's state to a single specific value is caused by a dissipative coupling of the measured object to the environment. This is a statistical process and is therefore not guaranteed to be finished in a definite time. Rather, only a probability can be given that a stable state has been reached after the damping is switched on, and this probability approaches certainty exponentially in time. This can be compared directly to the probability of finding a conventional synchronizer in a stable state after critical triggering.
So, prevention of macroscopic quantum tunneling seems to be possible, but not with certainty within a limited time.
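The tunneling probability quoted earlier in this section, P = cos^2(ωt) with P ≈ 1 - (ωt)^2 for short times, can be explored with a short sketch. It assumes that each measurement resets the system to a definite flux state and that successive intervals are independent; these assumptions, the symbol ω as written here, and all numerical values are illustrative and not taken from the cited work.

```python
import math

def p_stay(omega: float, dt: float) -> float:
    """Probability of finding the system in the same state a time dt after
    the previous measurement, for P = cos^2(omega * dt)."""
    return math.cos(omega * dt) ** 2

def p_any_switch(omega: float, dt: float, total_time: float) -> float:
    """Probability of at least one switch during total_time when a
    measurement is made every dt (intervals treated as independent)."""
    n = round(total_time / dt)
    return 1.0 - p_stay(omega, dt) ** n

OMEGA = 1.0  # hypothetical tunneling constant, arbitrary units
for dt in (1e-1, 1e-2, 1e-3):
    print(f"dt = {dt:g}: per-interval switch = {1.0 - p_stay(OMEGA, dt):.3e}, "
          f"over T = 1: {p_any_switch(OMEGA, dt, 1.0):.3e}")
```

In this sketch, measuring more often lowers the switching probability, but it stays above zero for any finite measurement interval, which matches the observation that a finite probability of switching between two interactions always remains.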

6. Practical avoidability

In spite of its unavoidability in principle and in practice, metastability in digital circuits is not a practical problem if the circuits are properly designed. For real systems, it is unimportant whether a certain error occurs never or with a negligible probability. A reasonable requirement is that the error rate is comparable to the rate of other errors in the system. The system is then said to be balanced in this respect. This is usually the most economic solution.


The simplest way to keep the probability of errors due to metastable states of synchronizers low is to provide the synchronizer with enough decision time, i.e. to use its output only after a delay which is long compared to the time constant of the decision making process. This time constant depends on the technology used for the synchronizer circuit. According to Chaney [12], typical S-TTL D-flip-flops have a probability of only 10-12 to 10-18 to be still in the metastable state 40 ns after critical triggering. This corresponds roughly to two minimal clock cycles. In other words, if the output of a D-flip-flop is not used in the next clock cycle but one later, the mean time for finding its output at an undefined logic level is in the order of years. The additional wait cycle is short compared to the time required, e.g., for entering an interrupt routine. A delayed synchronization of interrupts is therefore uncritical. Degradation of the system throughput by synchronizer wait cycles can only be expected, where operations are performed, which take a comparable short time. This is, e.g., the case with a device arbitrating between single bus cycles. Several cases have been reported, where not enough decision time was given to the synchronizer and system errors showed up [14]. If a synchronizer is in a metastable state, it will leave it within a certain delay time with a very high probability. During this time, the output voltage increases or decreases exponentially towards the high or low state due to the internal feedback mechanism. In the first part of this switching process, the output is in the undefined voltage region. Here, statistical fluctuations may cause very fast switching processes of following logical devices. To avoid unpredictable behavior of the circuit due to this effect, the output of the synchronizer is usually clocked once more by a D-flip-flop (fig. 13). The second flip-flop is clocked after the decision delay of the first one. 
Note that even in this setup the output of the second flip-flop can, in principle, also show metastable behavior. There is a very small probability that the first flip-flop is triggered critically, enters a metastable state, and leaves it at exactly the moment at which the second flip-flop is also triggered critically. However, this probability can be kept extremely low. If two S-TTL D-flip-flops are used with a clocking delay of 40 ns between them, it is $10^{-12}$ to $10^{-18}$ times the probability of setting up critical triggering conditions in the second stage, i.e. essentially zero. Black and Pri-Tal [27] give several hints for practical system designs that take metastability into account.
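As a rough numerical illustration of this product of probabilities, the sketch below multiplies the residual probability of the first stage by the probability that the second stage is then triggered critically. The value used for the latter is a hypothetical placeholder; the text gives no figure for it.

```python
def two_stage_metastability_probability(p_first_unresolved: float,
                                        p_second_critical: float) -> float:
    """Probability that the second flip-flop also becomes metastable,
    given a critical triggering of the first one.

    Assumes the two contributions can be treated as independent factors,
    as in the product described in the text.
    """
    for p in (p_first_unresolved, p_second_critical):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must lie in [0, 1]")
    return p_first_unresolved * p_second_critical


if __name__ == "__main__":
    p_second_critical = 1e-3  # hypothetical value, not taken from the text
    for p_first in (1e-12, 1e-18):  # range quoted for a 40 ns clocking delay
        p_both = two_stage_metastability_probability(p_first, p_second_critical)
        print(f"first stage {p_first:.0e} -> both stages {p_both:.0e}")
```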

Fig. 13: Two-stage synchronizer (signals shown: asynchronous signal, system clock, delay, synchronous output)

7. Conclusions

This paper showed that metastability is a general phenomenon that arises wherever a continuous set of states has to be mapped onto a discrete one. Examples were given of how metastable synchronizers cause errors in electronic circuits. It was argued that the metastability problem cannot be solved in principle with classical devices, but that it can be solved with quantum synchronizers, i.e. devices with a discrete set of states as given by quantum mechanics. However, other quantum effects, such as macroscopic quantum tunneling, were shown to prevent a practical application of quantum synchronizers. Finally, it was shown that metastable states, in spite of being unavoidable in principle, can be taken into account explicitly in the design of a system. The system has to be slowed down until the mean time between faults caused by metastable synchronizers is comparable to that caused by other error sources. Additionally, circuits using the output of synchronizers can be designed to be fault tolerant. Such techniques can eliminate the need for synchronizer wait cycles altogether.
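The balancing rule stated in the conclusions can be turned into a small calculation. The sketch below assumes a single-exponential resolution model, p(t) = exp(-t/tau), and solves for the decision time at which the mean time between metastability faults reaches a target value. The time constant, the rate of critical triggerings, the target, and the clock period are all hypothetical inputs, not values from the paper.

```python
import math


def required_decision_time(tau_s: float, critical_rate_hz: float,
                           target_mtbf_s: float) -> float:
    """Smallest wait t with exp(t / tau) / critical_rate >= target_mtbf."""
    if tau_s <= 0 or critical_rate_hz <= 0 or target_mtbf_s <= 0:
        raise ValueError("all arguments must be positive")
    return max(0.0, tau_s * math.log(critical_rate_hz * target_mtbf_s))


def wait_cycles(decision_time_s: float, clock_period_s: float) -> int:
    """Whole clock cycles needed to cover the decision time."""
    return math.ceil(decision_time_s / clock_period_s)


if __name__ == "__main__":
    tau_s = 1.5e-9                          # hypothetical time constant
    critical_rate_hz = 1e4                  # hypothetical critical triggerings per second
    target_mtbf_s = 10 * 365.25 * 24 * 3600  # hypothetical target: ten years
    clock_period_s = 20e-9                  # hypothetical clock period
    t = required_decision_time(tau_s, critical_rate_hz, target_mtbf_s)
    print(f"decision time: {t * 1e9:.1f} ns, "
          f"wait cycles: {wait_cycles(t, clock_period_s)}")
```

Because the required decision time grows only logarithmically with the target mean time between faults, a modest additional delay buys many orders of magnitude in reliability under this model.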

Acknowledgments

I would like to thank Heinz-Dieter Zeh, University of Heidelberg, Germany, and Ronit Tsach, Hebrew University of Jerusalem, Israel, for many fruitful discussions on physics and philosophy.

References

[1] Chapiro D.M.: Reliable High-Speed Arbitration and Synchronization; IEEE Trans. Comp. C-36, 10 (1987) 1251 - 1255
[2] Yoeli M., Rinon S.: Application of Ternary Algebra to the Study of Static Hazards; J. ACM 11 (1964) 84 - 97
[3] Friedman A.D.: Feedback in Asynchronous Sequential Circuits; IEEE Trans. Electr. Comp. EC-15 (1966) 740 - 749
[4] Chaney T.J., Molnar C.E.: Anomalous Behavior of Synchronizer and Arbiter Circuits; IEEE Trans. Comp. C-22, 4 (1973) 421 - 422
[5] Couranz G.R., Wann D.F.: Theoretical and Experimental Behavior of Synchronizers Operating in the Metastable Region; IEEE Trans. Comp. C-24, 6 (1975) 604 - 616
[6] Wormald E.G.: A Note on Synchronizer or Interlock Maloperation; IEEE Trans. Comp. C-26 (1977) 317 - 318
[7] Plummer W.W.: Asynchronous Arbiters; IEEE Trans. Comp. C-21, 1 (1972) 37 - 42
[8] Pearce R.C., Field J.A., Little W.D.: Asynchronous Arbiter Module; IEEE Trans. Comp. C-24, 9 (1975) 931 - 932
[9] Barros J.C., Johnson B.W.: Equivalence of the Arbiter, the Synchronizer, the Latch, and the Inertial Delay; IEEE Trans. Comp. C-32, 7 (1983) 603 - 614
[10] Mead C., Conway L.: Introduction to VLSI Systems; Add.-Wesley, Reading, MA (1980) 236 - 242
[11] Chaney T.J., Rosenberger F.K.: Characterization and Scaling of MOS Flip-Flop Performance in Synchronizer Applications; Proc. Conf. VLSI Arch., Design, Fabr., Caltech (1979) 357 - 374
[12] Chaney T.J.: Measured Flip-Flop Responses to Marginal Triggering; IEEE Trans. Comp. C-32, 12 (1983) 1207 - 1209
[13] Kleeman L., Cantoni A.: On the Unavoidability of Metastable Behavior in Digital Systems; IEEE Trans. Comp. C-36, 1 (1987) 109 - 112
[14] Marrin K.: Metastability haunts VMEbus and MultibusII system designers; Comp. Design 24, 9 (1985) 29 - 32
[15] Männer R., Shoemaker R.L., Bartels P.H.: The Heidelberg Polyp System; IEEE Micro 7, 1 (1987) 5 - 13
[16] Männer R., Deluigi B., Saaler W., Sauer T., v. Walter P.: The POLYBUS - A Flexible and Fault-Tolerant Multiprocessor Interconnection; Interf. in Comp. 2, 1 (1984) 45 - 68
[17] Stern O., Gerlach W.: Der experimentelle Nachweis der Richtungsquantelung im Magnetfeld; Z. Physik 9 (1922) 349 - 352
[18] v. Neumann J.: Mathematical Foundations of Quantum Mechanics; Princeton Univ. Press, Princeton (1955)
[19] Peierls R.: Observations in Quantum Mechanics and the "Collapse of the Wave Function"; in: Lahti P., Mittelstaedt P., Eds.: Proc. Symp. on the Foundations of Mod. Phys.; Joensuu, Finland (1985) 187 - 196
[20] Shankar R.: Principles of Quantum Mechanics; Plenum Press, New York (1980) pp. 396
[21] Zimmerman J.E., Thiene P., Harding J.T.: Design and Operation of Stable rf-Biased Superconducting Point-Contact Quantum Devices, and a Note on the Properties of Perfect Clean Metal Contacts; J. Appl. Phys. 41, 4 (1970) 1572 - 1580
[22] Clarke J.: Principles and Applications of SQUIDs; Proc. IEEE 77, 8 (1989) 1208 - 1223
[23] De Bruyn Ouboter R.: Metastability in a SQUID and Macroscopic Quantum Tunneling; Proc. Int'l Symp. Foundations of Quantum Mechanics, Tokyo (1983) 83 - 93
[24] Simonius M.: Spontaneous Symmetry Breaking and Blocking of Metastable States; Phys. Rev. Lett. 40, 15 (1978) 980 - 983
[25] Caldeira A.O., Leggett A.J.: Quantum Tunneling in a Dissipative System; Ann. Phys. 149 (1983) 374 - 456
[26] Guinea F., Schön G.: Coherent Charge Oscillations in Tunnel Junctions; Europhys. Lett. 1, 11 (1986) 585 - 593
[27] Black J., Pri-Tal S.: Keine Angst vor Metastabilitäten; VMEbus 1, 1 (1987) 10 - 16; also in: VMEbus Systems, spring 1986