Building An Analytical Platform

Uploaded by David Walker

Business intelligence requirements are changing and business users are moving more and more from historical reporting into predictive analytics in an attempt to get both a better and deeper understanding of their data. Traditionally, building an analytical platform has required an expensive infrastructure and a considerable amount of time for setup and deployment. Here we look at a quick and simple alternative.

Copyright: © Attribution Non-Commercial (BY-NC)

SAP White Paper: Building an Analytical Platform

I was recently asked to build an analytical platform for a project. But what is an analytical platform? The client, a retailer, described it as a database where it could store data and a front end where it could do statistical work. This work would range from simple means and standard deviations through to more complex predictive analytics that could be used, for example, to analyze the past performance of a customer to assess the likelihood that the customer will exhibit a future behavior. Or it might involve using models to classify customers into groups and ultimately to bring the two processes together into an area known as decision models. The customer had also come up with an innovative way to resource the statistical skills needed. It had offered work placements to master's degree students studying statistics at the local university and arranged for them to work with the customer insight team to describe and develop the advanced models. All the customer needed was a platform to work with.

From a systems architecture and development perspective, we could describe the requirements in three relatively simple statements:

1. Build a database with a very simple data model that could be easily loaded, that was capable of supporting high-performance queries, and that did not consume a massive amount of disk space. It would also ideally be capable of being placed in the cloud.

2. Create a Web-based interface that would allow users to securely log on, to write statistical programs that could use the database as a source of data, and to output reports and graphics as well as to populate other tables (for example, target lists) as a result of statistical models.

3. Provide a way to automate the running of the statistical data models, once developed, so that they can be run without engaging the statistical development resources.

Of course, time was of the essence and costs had to be as low as possible, but we've come to expect that with every project!

Step 1: The database

Our chosen solution for the database was an SAP Sybase IQ database, a technology our client was already familiar with. SAP Sybase IQ is a column-store database. This means that instead of storing all the data in its rows, as many other databases do, the data is organized on disk by column. For example, if a column in a row-based database contains a field for country, it will have the text of each country (for example, "United Kingdom") stored many times. In a column-store database the text is stored only once and given a unique ID. This is repeated for each column, and therefore a row of data consists of a list of IDs linked to the data held for each column.

This approach is very efficient for reporting and analytical databases. Replacing text strings with an identifier means that significantly less space is used. In our example, "United Kingdom" would occupy 14 bytes, while the ID might occupy only 1 byte, reducing the storage for that one value in that one column by a ratio of 14:1, and this compression effect is repeated for all the data. Furthermore, because there is less data on the disk, the time taken to read the data from disk and to process it for queries is significantly reduced, which massively speeds up the queries too. Finally, each column is already indexed, which again helps the overall query speed.
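The storage arithmetic above can be illustrated with a toy dictionary encoder. This is only a sketch of the general idea, not a description of how SAP Sybase IQ lays out data internally; the function names, the sample column, and the fixed 1-byte ID width are assumptions made for the illustration.

```python
def dictionary_encode(values):
    """Encode a column as (dictionary, ids): each distinct value is stored once."""
    dictionary = []  # distinct values, in first-seen order
    index = {}       # value -> integer ID
    ids = []         # one ID per row
    for value in values:
        if value not in index:
            index[value] = len(dictionary)
            dictionary.append(value)
        ids.append(index[value])
    return dictionary, ids


def storage_bytes(values, id_width=1):
    """Rough byte counts for the raw column versus the encoded column.

    id_width=1 is an assumption; it only works for up to 256 distinct values.
    """
    dictionary, ids = dictionary_encode(values)
    raw = sum(len(v.encode("utf-8")) for v in values)
    encoded = sum(len(v.encode("utf-8")) for v in dictionary) + id_width * len(ids)
    return raw, encoded


# Hypothetical sample column: 1,000 UK rows and 500 French rows.
country = ["United Kingdom"] * 1000 + ["France"] * 500
dictionary, ids = dictionary_encode(country)
raw, encoded = storage_bytes(country)
print(dictionary)    # ['United Kingdom', 'France']
print(raw, encoded)  # 17000 1520
```

For this sample the whole column shrinks by roughly 11:1. The per-value saving for "United Kingdom" is the 14:1 quoted in the text, and the overall ratio depends on how long and how repetitive the values are.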

An incidental but equally useful consequence of using a column-store database such as SAP Sybase IQ is that there is no advantage in creating a star schema as a data model. Instead, holding all the data in one large wide table is at least as efficient. This is because storing each column with a key means that the underlying storage of data is already, in effect, a star schema. Creating a star schema in a column-store database rather than a large single table would mean incurring unnecessary additional join and processing overhead.
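To see why a wide table stored by column already behaves like a star schema, consider the toy model below. Each column gets its own lookup of distinct values (playing the role of a dimension table), and each row becomes a list of IDs (playing the role of the fact table). The table contents and function names are hypothetical, and this is a conceptual sketch rather than the actual SAP Sybase IQ storage format.

```python
# Hypothetical wide table: one row per sale, no separate dimension tables.
rows = [
    {"country": "United Kingdom", "channel": "Store", "amount": 25},
    {"country": "United Kingdom", "channel": "Web", "amount": 40},
    {"country": "France", "channel": "Web", "amount": 15},
]


def to_column_store(rows):
    """Split a wide table into per-column dictionaries plus per-row ID lists."""
    store = {}
    for column in rows[0]:
        dictionary, ids, index = [], [], {}
        for row in rows:
            value = row[column]
            if value not in index:
                index[value] = len(dictionary)
                dictionary.append(value)
            ids.append(index[value])
        store[column] = {"dictionary": dictionary, "ids": ids}
    return store


def read_row(store, n):
    """Rebuild row n by looking up each column's ID in that column's dictionary."""
    return {col: data["dictionary"][data["ids"][n]] for col, data in store.items()}


store = to_column_store(rows)
print(store["country"])    # {'dictionary': ['United Kingdom', 'France'], 'ids': [0, 0, 1]}
print(read_row(store, 2))  # {'country': 'France', 'channel': 'Web', 'amount': 15}
```

Because the lookup structure comes for free with the storage layout, modeling explicit dimension tables on top of it would only add joins.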

As a result of choosing SAP Sybase IQ's column-store database, we are able to have a data model that consists of a number of simple single-table data sets (one table for each different type of data to be analyzed) that is quick to load and to query.

It should be noted that this type of database solution is less efficient for online transaction processing (OLTP) applications because of the cost of doing small inserts and updates. However, this is not relevant for this particular use case.

The solution can be deployed only on a Linux platform. We use Linux for three reasons. First, RStudio Server Edition is not yet available for Microsoft Windows. Second, precompiled packages for all elements of the solution on Linux reduce the install effort. And third, hosted Linux environments are normally cheaper than Windows environments due to the cost of the operating system license. We chose CentOS because it is a Red Hat derivative that is free.

One additional advantage of this solution for some organizations is the ability to deploy it in the cloud. Since the solution requires files to be remotely delivered, and since all querying is done via a Web interface, it is possible to use any colocation or cloud-based hosting provider. Colocation or cloud deployment offers a low startup cost, reduced systems management overhead, and connectivity for both data delivery and data access. The system requires SSH access for management; FTP, SFTP, or SCP for file delivery; and the RStudio Web service port open. The RStudio server uses the server login accounts for security but can also be tied to existing LDAP infrastructure.

Step 2: Statistical tools and Web interface

There are a number of statistical tools on the market. Most are very expensive, prohibitively so in this case, and the associated skills are hard to come by and expensive. However, since 1993 an open-source programming language called R (www.r-project.org) for statistical computing and graphics has been under development. It is now widely used among statisticians for developing statistical software and data analysis, is used by many universities, and is predicted to become the most widely used statistical package by 2015. The R project provides a command line and graphical interface as well as a large open-source library of useful routines (http://cran.r-project.org), and it is available as packaged software for most platforms, including Linux.

In addition, a second open-source project called RStudio (http://rstudio.org) provides a single integrated development environment for R and can be deployed on a local machine or as a Web-based service using the server's security model. In this case, we implemented the server edition in order to make the entire environment Web based.

So in two simple steps (download and install R, followed by download and install RStudio) we can install a full Web-based statistical environment. Note that some configuring and prerequisite packages may be required depending on your environment, but these are well documented on the source Web sites and in general download automatically if you are using a tool such as yum.

The next step was to get access to the data held in our SAP Sybase IQ server. This also proved to be very straightforward. There is an SAP Sybase white paper (www.sybase.com/files/Thankyou_Pages/R_WP_V?.5final_l.pdf) that describes the process, which can be simply stated as:

1. Install the R JDBC package

2. Set up a JDBC connection

3. Establish your connection

4. Query the table

We now have an R object that contains data sourced from SAP Sybase IQ that we can work with. And what is amazing is that it took me less than half a day to build the platform from scratch.

At this point the data has to be loaded and the statisticians can get to work. Obviously this is more time consuming than the build, and over the days and weeks the analysts created their models and produced the results.

For this exercise we used our in-house extract, transform, and load (ETL) tool to create a repeatable data extraction and load process, but it would have been possible to use any of a wide range of tools that are available for this process.

Step 3: Automatically running the statistical models

Eventually a number of models for analyzing the data had been created and we were ready to move into a production environment. We automated the load of the data into the agreed single-table structure and also wanted to run the data models automatically.

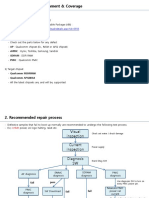

Figure: Analytical Platform Server running CentOS and hosting the ETL engine, SAP Sybase IQ, R, and RStudio Server Edition. Files are delivered via (S)FTP/SCP, read by the ETL engine, and written to the database; R reads from and writes to SAP Sybase IQ over an R/JDBC connection; any network-connected computer with a browser can access RStudio Server Edition. (© 2012 Data Management & Warehousing)

SAP Sybase IQ has the functionality to create user-defined functions (UDFs). These UDFs, written in C++, talk to a process known as Rserve, which in turn executes the R program and returns the results to SAP Sybase IQ. This allows R functions to be embedded directly into SAP Sybase IQ SQL commands. While setting this up requires a little more programming experience, it does mean that all processing can be done within SAP Sybase IQ.

Alternatively, it is possible to run R from the command line and call a program that in turn uses the RJDBC connection to read data from and write data to the database.

Having a choice of methods is very helpful as it means that it can be integrated with the ETL environment in the most appropriate way. If the ETL tool requires SQL only, then the user-defined function (UDF) route is the most attractive. However, if the ETL tool supports host callouts (as ours does), then running R programs from a command line callout is quicker than developing the UDF.
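A command line callout of this kind can be sketched as a small wrapper that an ETL job might invoke after the load completes. The wrapper below is an illustration only: the script name `score_customers.R`, the date argument, and the function names are hypothetical, and the paper does not describe how its in-house ETL tool actually performs the callout. It relies on `Rscript`, the non-interactive front end that ships with R, and its `--vanilla` option.

```python
import shutil
import subprocess


def build_r_command(script_path, *args):
    """Build the argument list for running an R script non-interactively."""
    # --vanilla tells R not to save or restore a workspace or read profile files.
    return ["Rscript", "--vanilla", script_path, *[str(a) for a in args]]


def run_model(script_path, *args):
    """Run the R script and return its exit code, or None if Rscript is not installed."""
    if shutil.which("Rscript") is None:
        return None
    completed = subprocess.run(build_r_command(script_path, *args), check=False)
    return completed.returncode


# Hypothetical model script and run date, shown as the command an ETL callout would issue.
command = build_r_command("score_customers.R", "2012-08-01")
print(" ".join(command))  # Rscript --vanilla score_customers.R 2012-08-01
```

The R script itself would open the RJDBC connection, read its input table, and write its results back, so the ETL tool only needs to check the exit code returned by `run_model`.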

Conclusions

Business intelligence requirements are changing and business users are moving more and more from historical reporting into predictive analytics in an attempt to get both a better and deeper understanding of their data.

Traditionally, building an analytical platform has required an expensive infrastructure and a considerable amount of time for setup and deployment.

By combining the high performance and low footprint of SAP Sybase IQ with the open-source R and RStudio statistical packages, it is possible to quickly deploy an analytical platform in the cloud for which there are readily available skills.

This infrastructure can be used both for rapid prototyping of analytical models and for running completed models on new data sets to deliver greater insight into the data.

About the Author

David Walker has been involved with business intelligence and data warehousing for over ?0 years, first as a user, then with a software house, and finally with a hardware vendor before setting up his own consultancy firm, Data Management & Warehousing (http://datamgmt.com), in 1995.

David and his team have worked around the world on projects designed to deliver the maximum benefit to the business by converting data into information and by finding innovative ways to help businesses exploit that information.

David's project work has given him experience in a wide variety of industries including telecommunications, finance, retail, manufacturing, transportation, and public sector as well as a broad and deep knowledge of business intelligence and data warehousing technologies.

www.sap.com/contactsap

12/08 2012 SAP AG. All rights reserved.

