Artificial Intelligence in Engineering 13 (1999) 107–117

Training Elman and Jordan networks for system identification using genetic algorithms

D.T. Pham*, D. Karaboga

Intelligent Systems Research Laboratory, University of Wales Cardiff, Cardiff CF2 3TE, UK

Received 6 September 1996; received in revised form 5 August 1998; accepted 20 August 1998

Abstract

Two of the well-known recurrent neural networks are the Elman network and the Jordan network. Recently, modifications have been made to these networks to facilitate their applications in dynamic systems identification. Both the original and the modified networks have trainable feedforward connections. However, in order that they can be trained essentially as feedforward networks by means of the simple backpropagation algorithm, their feedback connections have to be kept constant. For the training to converge, it is important to select correct values for the feedback connections, but finding these values manually can be a lengthy trial-and-error process. This paper describes the use of genetic algorithms (GAs) to train the Elman and Jordan networks for dynamic systems identification. The GA is an efficient, guided, random search procedure which can simultaneously obtain the optimal weights of both the feedforward and feedback connections. © 1999 Elsevier Science Ltd. All rights reserved.

Keywords: Genetic Algorithms; Recurrent Neural Networks; Elman Networks; Jordan Networks; System Identification

* Corresponding author; e-mail: phamdt@cardiff.ac.uk. 0954-1810/99/$ - see front matter. PII: S0954-1810(98)00013-2

1. Introduction

From a structural point of view, there are two main types of neural networks: feedforward and recurrent neural networks. The interconnections between the neurons in a feedforward neural network (FNN) are such that the information can flow in only one direction: from input neurons to output neurons. In a recurrent neural network (RNN), two types of connections, the feedforward and feedback connections, allow information to propagate in two directions, from input neurons to output neurons and vice versa.

FNNs have been successfully applied to the identification of dynamic systems [1,2]. However, they generally require a large number of input neurons and thus necessitate a long computation time as well as having a high probability of being affected by external noise. Also, it is difficult to train an FNN to act as an independent system simulator [1]. RNNs have attracted the attention of researchers in the field of dynamic system identification since they do not suffer from the above problems [3–5]. Two simple types of RNNs are the Elman net [6] and the Jordan net [7]. Modified versions of these RNNs have been developed and their performance in system identification has been tested against the original nets by the authors' group [5,8]. In that work, the training of the RNNs was implemented using the simple backpropagation algorithm [9]. To apply this algorithm, the RNNs were regarded as FNNs, with only the feedforward connections having to be trained. The feedback connections all had constant pre-defined weights, the values of which were fixed experimentally by the user.

In this paper, the use of genetic algorithms (GAs) to obtain the values of the weights of both the feedforward and feedback connections will be investigated. GAs are directed random search algorithms which are particularly effective for the optimisation of non-linear and multimodal functions [10]. One of the features of a GA, which distinguishes it from conventional optimisation methods such as hill-climbing, is its much greater ability to escape local minima.

The paper presents the results obtained in evaluating the performance of the original Elman and Jordan nets and the modified nets when trained by a GA for dynamic system identification. Section 2 reviews the structures of these nets. Section 3 describes the GA adopted in this study and explains how the GA was used to train the given nets to identify a dynamic system. Section 4 gives the results obtained for three different Single-Input Single-Output (SISO) systems.


Fig. 1. Structure of the original Elman net.

2. Elman net and Jordan net

Fig. 1 depicts the original Elman net, which is a neural network with three layers of neurons. The first layer consists of two different groups of neurons. These are the group of external input neurons and the group of internal input neurons, also called context units. The inputs to the context units are the outputs of the hidden neurons forming the second or hidden layer. The outputs of the context units and the external input neurons are fed to the hidden neurons. Context units are also known as memory units as they store the previous output of the hidden neurons.

Although, theoretically, an Elman net with all feedback connections from the hidden layer to the context layer set to 1 can represent an arbitrary nth-order system, where n is the number of context units, it cannot be trained to do so using the standard backpropagation (BP) algorithm [5]. By introducing self-feedback connections to the context units of the original Elman net and thereby increasing its dynamic memory capacity, it is possible to apply the standard BP algorithm to teach the net that task [5]. The modified Elman net is shown in Fig. 2. The values of the self-connection weights are fixed between 0 and 1 before starting training.

The idea of introducing self-feedback connections for the context units was borrowed from the Jordan net shown in Fig. 3. This neural network also has three layers, with the main feedback connections taken from the output layer to the context layer. It has been shown theoretically [8] that the original Jordan net is not capable of representing arbitrary dynamic systems. However, by adding the feedback connections from the hidden layer to the context layer, similarly to the case of the Elman net, a modified Jordan net (see Fig. 4) is obtained that can be trained using the standard BP algorithm to model different dynamic systems [8]. As with the modified Elman net, the values of the feedback connection weights have to be fixed by the user if the standard BP algorithm is employed.

Fig. 2. Structure of the modified Elman net.

Fig. 3. Structure of the original Jordan net.
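As an illustrative sketch only (not the authors' implementation), one time step of an Elman-type net as described above might be written as follows. The class name `ElmanNet`, the parameter name `alpha` for the self-feedback weight of the context units, and the weight layout are assumptions made for this example. With `alpha = 0` the context units simply store the previous hidden outputs, as in the original Elman net; a value between 0 and 1 corresponds to the modified net.

```python
import math


class ElmanNet:
    """Minimal single-input, single-output Elman-type net (illustrative only)."""

    def __init__(self, w_in, w_ctx, w_out, alpha=0.0, activation=math.tanh):
        self.w_in = w_in        # input -> hidden weights, one per hidden neuron
        self.w_ctx = w_ctx      # context -> hidden weights, one row per hidden neuron
        self.w_out = w_out      # hidden -> output weights, one per hidden neuron
        self.alpha = alpha      # self-feedback weight of the context units (assumed name)
        self.activation = activation
        self.hidden = [0.0] * len(w_in)    # previous hidden outputs
        self.context = [0.0] * len(w_in)   # context (memory) units

    def step(self, u):
        # Context units: self-feedback plus a copy of the previous hidden outputs
        # (hidden -> context feedback weight taken as 1).
        self.context = [self.alpha * c + h
                        for c, h in zip(self.context, self.hidden)]
        # Hidden neurons receive the external input and the context units.
        self.hidden = [
            self.activation(
                self.w_in[i] * u
                + sum(w * c for w, c in zip(self.w_ctx[i], self.context)))
            for i in range(len(self.w_in))
        ]
        # Linear output neuron.
        return sum(w * h for w, h in zip(self.w_out, self.hidden))
```

Calling `step` once per sample of the input sequence yields the model output sequence. Passing `activation=lambda x: x` gives the all-linear variant; the default `math.tanh` gives non-linear hidden neurons.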

Fig. 4. Structure of the modified Jordan net.

3. Genetic algorithms

Genetic algorithms have been used in the area of neural networks for three main tasks: training the weights of connections, designing the structure of a network and finding an optimal learning rule [11]. The first and second tasks have been studied by several researchers with promising results. However, most of the studies to date have been on feedforward neural networks rather than recurrent networks [12,13].

In this work, the GA is used to train the weights of recurrent neural networks, assuming that the structure of these networks has been decided. That is, the number of layers, the type and number of neurons in each layer, the pattern of connections, the permissible ranges of trainable connection weights, and the values of constant connection weights, if any, are all known. Here, the GA defined by Grefenstette [14] is employed. This algorithm is summarised in Fig. 5. The initial set of solutions (initial population) is produced by a random number generator, which is the first component of the GA. The solutions in the population, representing possible RNNs, are evaluated and improved by applying operators called genetic operators. As seen in the flowchart, the genetic operators used here are selection, crossover and mutation [15]. (A description of these operators is given in the Appendix for convenient reference.)

Each solution in the initial and subsequent populations is a string comprising n elements, where n is the number of trainable connections (see Fig. 6). Each element is a 16-bit binary string holding the value of a trainable connection weight. It was found that sixteen bits gave adequate resolution for both the feedforward connections (which can have positive or negative weights) and the feedback connections (which have weight values in the range 0–1). A much shorter string could cause the search to fail because of inadequate resolution and a longer string could delay the convergence process unacceptably. Note that from the point of view of the GA, all connection weights are handled in the same way. Training the feedback connections is carried out identically to training the feedforward connections, unlike the case of the commonly used backpropagation (BP) training algorithm.

The use of a GA to train an RNN to model a dynamic plant is illustrated in Fig. 7. A sequence of input signals $u(k)$, $k = 0, 1, \ldots$, is fed to both the plant and the RNN (represented by a solution string taken from the current population). The output signals $y_p(k+1)$ for the plant and $y_m(k+1)$ for the RNN are compared and the differences $e(k+1) = y_p(k+1) - y_m(k+1)$ are computed. The sum of all $e(k+1)$ for the whole sequence is used as a measure of the goodness or fitness of the particular RNN under consideration. These computations are carried out for all the networks of the current population. After the aforementioned genetic operators are applied, based on the fitness values obtained, a new population is created and the above procedure repeated. The new population normally will have a greater average fitness than preceding populations and eventually an RNN will emerge having connection weights adjusted such that it correctly models the input-output behaviour of the given plant.

The choice of the training input sequence is important to successful training. Sinusoidal and random input sequences are usually employed [8]. In the current investigation, a random sequence of 200 input signals was adopted. This number of input signals was found to be a good compromise between a lower number, which might be insufficient to represent the input-output behaviour of a plant, and a larger number, which lengthens the training process.

Fig. 5. Flowchart of the genetic algorithm used.

4. Simulation results

Simulations were conducted to study the ability of the four types of RNNs described in Section 2 to be trained by a GA to model three different dynamic plants. The first plant was a third-order linear plant. The second and third plants were non-linear plants. A sampling period of 0.01 s was assumed in all cases.

4.1. Plant 1

This plant had the following discrete input-output equation:

$$y(k+1) = 2.627771\,y(k) - 2.333261\,y(k-1) + 0.697676\,y(k-2) + 0.017203\,u(k) - 0.030862\,u(k-1) + 0.014086\,u(k-2)$$
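As a rough sketch, the Plant 1 difference equation and the error measure described in Section 3 could be simulated as below. The function names `plant1` and `total_error`, the zero initial conditions, the fixed random seed, and the use of absolute differences in the error sum are assumptions made for this example rather than details taken from the paper.

```python
import random


def plant1(u):
    """Simulate Plant 1 for an input sequence u, starting from rest (assumed)."""
    y = [0.0]                                   # y(0); y(k+1) is appended at step k
    for k in range(len(u)):
        y_k1 = y[k - 1] if k >= 1 else 0.0      # y(k-1)
        y_k2 = y[k - 2] if k >= 2 else 0.0      # y(k-2)
        u_k1 = u[k - 1] if k >= 1 else 0.0      # u(k-1)
        u_k2 = u[k - 2] if k >= 2 else 0.0      # u(k-2)
        y.append(2.627771 * y[k] - 2.333261 * y_k1 + 0.697676 * y_k2
                 + 0.017203 * u[k] - 0.030862 * u_k1 + 0.014086 * u_k2)
    return y[1:]                                # y(1) ... y(N)


def total_error(y_plant, y_model):
    """Sum of absolute output differences over the sequence (lower is better)."""
    return sum(abs(yp - ym) for yp, ym in zip(y_plant, y_model))


# Random training input: 200 samples between -1.0 and 1.0.
rng = random.Random(0)                          # seed chosen only for repeatability
u = [rng.uniform(-1.0, 1.0) for _ in range(200)]
y_p = plant1(u)
```

A candidate network would be driven by the same sequence `u`, and `total_error(y_p, y_m)` computed on its outputs `y_m`; a GA that maximises fitness would need to convert this error into a fitness value, for example by negating or inverting it.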

The modelling was carried out using both the modified Elman and Jordan nets with all linear neurons. The training input signal, $u(k)$, $k = 0, 1, \ldots, 199$, was random and varied between -1.0 and 1.0. First, results were obtained by assuming that only the feedforward connections were trainable. The responses from the plant and the modified Elman and Jordan networks are presented in Figs. 8(a) and (b). The networks were subsequently trained with all connections modifiable. The responses produced are shown in Figs. 9(a) and (b).

Fig. 6. Representation of the trainable weights of an RNN in string form.

Fig. 7. Scheme for training a recurrent neural network to identify a plant.

4.2. Plant 2

This was a non-linear system with the following discrete-time equation:

$$y(k+1) = \frac{y(k)}{1.5 + y^2(k)} - 0.3\,y(k) + 0.5\,u(k)$$

The original Elman network and the modified Elman and Jordan networks with non-linear neurons in the hidden layer and linear neurons in the remaining layers were employed. The hyperbolic tangent function was adopted as the activation function of the non-linear neurons. The neural networks were trained using the same sequence of random input signals as mentioned previously. The responses obtained using the networks, taking only the feedforward connections as variable, are presented in Figs. 10(a), (b) and (c), respectively. The results produced when all the connections were trainable are given in Figs. 11(a), (b) and (c).

4.3. Plant 3

This was a non-linear system with the following discrete-time equation:

$$y(k+1) = \frac{1.752821\,y(k) - 0.818731\,y(k-1) + 0.011698\,u(k) + 0.010942\,u(k-1)}{1 + y^2(k-1)}$$

The original Elman network with non-linear neurons in the hidden layer and linear neurons in the remaining layers, as in the case of Plant 2, was employed. The hyperbolic tangent function was adopted as the activation function of the non-linear neurons. The response obtained using the network, taking only the feedforward connections as variable, is presented in Fig. 12. The result produced when all the connections were trainable is given in Fig. 13. It can be seen that a very accurate model of the plant could be obtained with this Elman network and therefore the more complex modified Elman and Jordan networks were not tried.

The Mean-Square-Error (MSE) values computed for networks with constant feedback connection weights and all-variable connection weights are presented in Tables 1 and 2 respectively. In all cases, when only the feedforward connection weights were modifiable, the GA was run for 10000 generations and, when all the connection weights could be trained, the algorithm was implemented for 3000 generations. The other GA control parameters [14] were maintained in all simulations at the following values:

Population size: 50
Crossover rate: 0.85
Mutation rate: 0.01
Generation gap: 0.90

The above control parameters are within ranges found by Grefenstette [14] to be suitable for a variety of optimisation problems. No other parameter values were tried in this work.

Table 1. MSE values for nets with constant feedback connection weights

Plant   Original Elman   Modified Elman   Modified Jordan
1       -                0.0001325        0.0002487
2       0.0018662        0.0012549        0.0006153
3       0.0002261        -                -

Table 2. MSE values for nets with all variable connection weights

Plant   Original Elman   Modified Elman   Modified Jordan
1       -                0.0000548        0.0000492
2       0.0005874        0.0004936        0.0003812
3       0.0000193        -                -
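For readers who wish to reproduce the kind of figures reported in Tables 1 and 2, the two non-linear plants of Sections 4.2 and 4.3 and a mean-square-error measure might be sketched as follows. The function names, the zero initial conditions, and the averaging over the whole sequence are assumptions of this example, not details confirmed by the paper.

```python
def plant2(u):
    """Plant 2: y(k+1) = y(k) / (1.5 + y(k)^2) - 0.3 y(k) + 0.5 u(k)."""
    y, out = 0.0, []                      # zero initial state (assumed)
    for u_k in u:
        y = y / (1.5 + y * y) - 0.3 * y + 0.5 * u_k
        out.append(y)
    return out


def plant3(u):
    """Plant 3: second-order non-linear plant of Section 4.3."""
    y_k, y_k1, u_k1, out = 0.0, 0.0, 0.0, []   # y(k), y(k-1), u(k-1)
    for u_k in u:
        y_next = ((1.752821 * y_k - 0.818731 * y_k1
                   + 0.011698 * u_k + 0.010942 * u_k1)
                  / (1.0 + y_k1 * y_k1))
        y_k1, y_k, u_k1 = y_k, y_next, u_k     # shift the delayed values
        out.append(y_next)
    return out


def mse(y_plant, y_model):
    """Mean of the squared differences between plant and model outputs."""
    return sum((yp - ym) ** 2 for yp, ym in zip(y_plant, y_model)) / len(y_plant)
```

Each function returns the sequence y(1), ..., y(N) for an input sequence u(0), ..., u(N-1), so the result can be compared sample by sample with a model driven by the same input.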


Fig. 8. (a) Responses of the third-order plant (Plant 1) and the modified Elman net with constant feedback connection weights. (b) Responses of the third-order plant (Plant 1) and the modified Jordan net with constant feedback connection weights.

Fig. 9. (a) Responses of the third-order plant (Plant 1) and the modified Elman net with all variable connection weights. (b) Responses of the third-order plant (Plant 1) and the modified Jordan net with all variable connection weights.

5. Discussion

The experiments were also conducted for both first and second-order linear plants, although the results are not presented here. The first-order plant was easily identified using the original Elman net structure. However, as expected from the theoretical analysis given in [8], the original Jordan net was unable to model the plant and produced poor results. Both the original and modified Elman nets could identify the second-order plant successfully. Note that an original Elman net with an identical structure to that adopted for the original Elman net employed in this work and trained using the standard backpropagation algorithm had failed to identify the same plant [5].

The third-order plant could not be identified using the original Elman net but was successfully modelled by both the modified Elman and Jordan nets. This further confirms the advantages of the modifications [5,8].

With Jordan nets, training was more difficult than with Elman nets. For all net structures, the training was significantly faster when all connection weights were modifiable than when only the feedforward connection weights could be changed. This was probably because in the former case the GA had more freedom to evolve good solutions. Thus, by using a GA, not only was it possible and simple to train the feedback connection weights, but the training time required was lower than when training the feedforward connection weights alone.

A drawback of the GA compared to the BP algorithm is that the GA is inherently slower, as it has to operate on the weights of a population of neural networks whereas the BP algorithm only deals with the weights of one network.


Fig. 10. (a) Responses of the first non-linear plant (Plant 2) and the original Elman net with constant feedback connection weights. (b) Responses of the first non-linear plant (Plant 2) and the modified Elman net with constant feedback connection weights. (c) Responses of the first non-linear plant (Plant 2) and the modified Jordan net with constant feedback connection weights.

Fig. 11. (a) Responses of the first non-linear plant (Plant 2) and the original Elman net with all variable connection weights. (b) Responses of the first non-linear plant (Plant 2) and the modified Elman net with all variable connection weights. (c) Responses of the first non-linear plant (Plant 2) and the modified Jordan net with all variable connection weights.

6. Conclusion

This paper has investigated the use of the GA to train recurrent neural networks for the identification of dynamic systems. The neural networks employed were the Elman net, the Jordan net and their modified versions. Identification results were obtained for linear and non-linear systems. The main conclusion is that the GA was successful in training all but the original Jordan net, at the expense of computation time. The fact that the GA could not train the original Jordan net has confirmed the finding of a previous investigation [8]. Confirmation has also been made of the superiority of the modified Elman net compared to the original version.

Acknowledgements

The authors would like to thank the Higher Education Funding Council for Wales, TUBITAK (The Scientific and Technical Research Council of Turkey) and The Royal Society for their support of the work described in this paper.

Fig. 12. Responses of the second non-linear plant (Plant 3) and the original Elman net with constant feedback connection weights.

Fig. 13. Responses of the second non-linear plant (Plant 3) and the original Elman net with all variable connection weights.

Appendix A. Genetic operators

A.1. Selection

Various selection operators exist for deciding which strings are chosen for reproduction. The seeded selection operator simulates the biased roulette wheel technique to give strings with higher fitness a greater chance of reproducing. With the random selection operator, a number of strings are randomly picked to pass from one generation to the next. The percentage picked is controlled by a parameter known as the generation gap. The elite selection operator ensures that the fittest string in a generation passes unchanged to the next generation.
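A minimal sketch of the biased roulette wheel and elite selection ideas described above is given below. It assumes non-negative fitness values where larger means better; the function names are hypothetical, and the generation gap mechanism is deliberately left out because its exact operation is not detailed here.

```python
import random


def roulette_select(population, fitnesses, rng):
    """Pick one string with probability proportional to its fitness."""
    total = sum(fitnesses)
    if total <= 0.0:
        return rng.choice(population)       # no usable bias: pick uniformly
    pick = rng.uniform(0.0, total)
    running = 0.0
    for individual, fit in zip(population, fitnesses):
        running += fit
        if pick <= running:
            return individual
    return population[-1]                   # guard against round-off


def select_parents(population, fitnesses, rng):
    """Keep the fittest string unchanged and fill the rest by roulette wheel."""
    size = len(population)
    best = max(range(size), key=lambda i: fitnesses[i])
    parents = [population[best]]            # elite selection
    while len(parents) < size:
        parents.append(roulette_select(population, fitnesses, rng))
    return parents
```

For example, `select_parents(pop, fits, random.Random(0))` returns a list of the same length as `pop` whose first entry is the fittest string.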


References

[1] Pham DT, Liu X. Neural networks for identification, prediction and control. London: Springer-Verlag, 1995.
[2] Yun L, Häußler A. Artificial evolution of neural networks and its application to feedback control. Artificial Intelligence in Engineering 1996;10(2):143–152.
[3] Pham DT, Liu X. Training of Elman networks and dynamic system modelling. Int. Journal of Systems Science 1996;27(2):221–226.
[4] Ku C-C, Lee KY. Diagonal recurrent neural networks for dynamic systems control. IEEE Trans. on Neural Networks 1995;6(1):144–156.
[5] Pham DT, Liu X. Dynamic system identification using partially recurrent neural networks. Journal of Systems Engineering 1992;2(2):90–97.
[6] Elman JL. Finding structure in time. Cognitive Science 1990;14:179–211.
[7] Jordan MI. Attractor dynamics and parallelism in a connectionist sequential machine. Proc. Eighth Annual Conf. of the Cognitive Science Society, Amherst, MA, 1986, pp. 531–546.
[8] Pham DT, Oh SJ. A recurrent backpropagation neural network for dynamic system identification. Journal of Systems Engineering 1992;2(4):213–223.
[9] Rumelhart DE, McClelland JL. Parallel Distributed Processing: Explorations in the Micro-Structure of Cognition, vol. 1. Cambridge, MA: MIT Press, 1986.
[10] Davis L. Handbook of Genetic Algorithms. New York, NY: Van Nostrand Reinhold, 1991.
[11] Chalmers DJ. The evolution of learning: an experiment in genetic connectionism. In: Touretzky DS, Elman JL, Hinton GE, editors. Proc. 1990 Connectionist Models Summer School. San Mateo, CA: Morgan Kaufmann, 1990, pp. 81–90.
[12] Whitley D, Hanson T. Optimising neural networks using faster, more accurate genetic search. In: Schaffer JD, editor. Proc. Third Int. Conf. on Genetic Algorithms and Their Applications. San Mateo, CA: Morgan Kaufmann, 1989, pp. 370–374.
[13] Harp SA, Samad T. Genetic synthesis of neural network architecture. In: Davis L, editor. Handbook of Genetic Algorithms. New York, NY: Van Nostrand Reinhold, 1991, pp. 203–221.
[14] Grefenstette JJ. Optimization of control parameters for genetic algorithms. IEEE Trans. on Systems Man and Cybernetics 1986;SMC-16(1):122–128.
[15] Pham DT, Karaboga D. Optimum design of fuzzy logic controllers using genetic algorithms. Journal of Systems Engineering 1991;1(2):114–118.

Fig. A1. A simple crossover operation.

Fig. A2. Mutation operation.

A.2. Crossover

This operator is used to create two new strings (children) from two existing strings (parents) picked from the current population by the selection operator according to a preset rate (the crossover rate). The crossover operator used in this work was the single-point operator. This operator cuts the selected parent strings at a randomly chosen point and then swaps their tails, which are the parts after the cutting point, to produce the children strings (see Fig. A1). The aim of the crossover operation is to combine parts of good strings to generate better strings. Crossover is generally most useful at the beginning of a search.

A.3. Mutation

The mutation operator randomly reverses the bit values of strings in the population according to a specified rate (the mutation rate) to produce new strings (see Fig. A2). The operator forces the algorithm to search new areas. Eventually, it helps the GA avoid premature convergence and find the global optimal solution.
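The two operators above, together with the 16-bit weight encoding mentioned in Section 3, could be sketched on strings of '0' and '1' characters as follows. The function names, the linear mapping from a 16-bit integer to a weight range `[lo, hi]`, and the per-bit application of the mutation rate are assumptions of this example; the paper does not spell out these details.

```python
import random

BITS = 16  # bits per trainable connection weight


def decode(chromosome, lo, hi):
    """Map each 16-bit slice of a bit string linearly onto [lo, hi] (assumed mapping)."""
    weights = []
    for start in range(0, len(chromosome), BITS):
        value = int(chromosome[start:start + BITS], 2)
        weights.append(lo + (hi - lo) * value / (2 ** BITS - 1))
    return weights


def single_point_crossover(parent_a, parent_b, rate, rng):
    """With probability `rate`, cut both parents at one point and swap their tails."""
    if rng.random() >= rate or len(parent_a) < 2:
        return parent_a, parent_b
    cut = rng.randint(1, len(parent_a) - 1)
    return parent_a[:cut] + parent_b[cut:], parent_b[:cut] + parent_a[cut:]


def mutate(chromosome, rate, rng):
    """Flip each bit independently with probability `rate`."""
    return "".join(
        ("1" if bit == "0" else "0") if rng.random() < rate else bit
        for bit in chromosome)
```

With the control parameters quoted in Section 4, these would be called with `rate=0.85` for crossover and `rate=0.01` for mutation. Feedback weights could be decoded with `lo=0.0, hi=1.0`, while the range for feedforward weights is a design choice not fixed by this sketch.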

Das könnte Ihnen auch gefallen

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5784)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (399)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (890)

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (587)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (265)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (344)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (72)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2219)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (119)

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

- Optimize Assignment Problem SolutionDokument16 SeitenOptimize Assignment Problem SolutionLudwig KelwulanNoch keine Bewertungen

- NCERT Solutions For CBSE Class 8 Maths Chapter 9 Algebraic Expressions and IdentitiesDokument20 SeitenNCERT Solutions For CBSE Class 8 Maths Chapter 9 Algebraic Expressions and IdentitiesAkash Kr DasNoch keine Bewertungen

- ID: Dd4ab4c4: A. B. C. DDokument30 SeitenID: Dd4ab4c4: A. B. C. DKaushal Kumar PatelNoch keine Bewertungen

- Numerical Methods Lesson Plan for Solving EquationsDokument6 SeitenNumerical Methods Lesson Plan for Solving EquationsANANDNoch keine Bewertungen

- MATH 4073 Numerical Analysis in Test Notes (Cheat Cheat Sheet) v4.0Dokument5 SeitenMATH 4073 Numerical Analysis in Test Notes (Cheat Cheat Sheet) v4.0jfishryanNoch keine Bewertungen

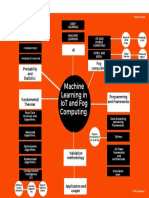

- Machine Learning in IoTDokument1 SeiteMachine Learning in IoTmkiadi2002Noch keine Bewertungen

- Artificial Intelligence - Model Question PaperDokument2 SeitenArtificial Intelligence - Model Question PaperDemon RiderNoch keine Bewertungen

- Module 3: Numerical Solutions to CE Problems via Bracketing MethodsDokument12 SeitenModule 3: Numerical Solutions to CE Problems via Bracketing MethodsGio MagayonNoch keine Bewertungen

- Calculus and Its Applications 11th Edition by Bittinger Ellenbogen Surgent ISBN Test BankDokument215 SeitenCalculus and Its Applications 11th Edition by Bittinger Ellenbogen Surgent ISBN Test Bankneil100% (19)

- AI GOAL STACK AND HIERARCHICAL PLANNINGDokument10 SeitenAI GOAL STACK AND HIERARCHICAL PLANNING321126510L03 kurmapu dharaneeswar100% (4)

- Lecture 1Dokument36 SeitenLecture 1nourhandardeer3Noch keine Bewertungen

- Computer Lab 3Dokument3 SeitenComputer Lab 3nathanNoch keine Bewertungen

- SchoolDokument153 SeitenSchoolLeôncioNoch keine Bewertungen

- How To Find Time Complexity of An Algorithm - Stack OverflowDokument15 SeitenHow To Find Time Complexity of An Algorithm - Stack OverflowlaureNoch keine Bewertungen

- DWDM PPTDokument35 SeitenDWDM PPTRakesh KumarNoch keine Bewertungen

- 4.1 Data Driven ModellingDokument4 Seiten4.1 Data Driven ModellingTharsiga ThevakaranNoch keine Bewertungen

- EASY-FIT: A Software System For Data Fitting in Dynamic SystemsDokument35 SeitenEASY-FIT: A Software System For Data Fitting in Dynamic SystemssansansansaniaNoch keine Bewertungen

- (2020) Gaussian Error Linear Units (Gelus)Dokument9 Seiten(2020) Gaussian Error Linear Units (Gelus)Mhd rdbNoch keine Bewertungen

- Job Shop Scheduling Using Ant Colony OptimizationDokument2 SeitenJob Shop Scheduling Using Ant Colony OptimizationarchtfNoch keine Bewertungen

- G8-W1 (Inc)Dokument24 SeitenG8-W1 (Inc)Marvelous VillafaniaNoch keine Bewertungen

- Numerical Methods in Civil Engg-Janusz ORKISZ PDFDokument154 SeitenNumerical Methods in Civil Engg-Janusz ORKISZ PDFRajesh CivilNoch keine Bewertungen

- CL244 Introduction to Numerical Analysis SourcesDokument38 SeitenCL244 Introduction to Numerical Analysis SourcesAnuj BhawsarNoch keine Bewertungen

- Comparison of Gauss Jacobi Method and Gauss Seidel Method Using ScilabDokument3 SeitenComparison of Gauss Jacobi Method and Gauss Seidel Method Using ScilabEditor IJTSRDNoch keine Bewertungen

- Boltzmann MachinesDokument7 SeitenBoltzmann Machinessharathyh kumarNoch keine Bewertungen

- Gujarat Technological University: InstructionsDokument11 SeitenGujarat Technological University: InstructionsdarshakvthakkarNoch keine Bewertungen

- Fourier Series Homework Solutions: MATH 1220 Spring 2008Dokument5 SeitenFourier Series Homework Solutions: MATH 1220 Spring 2008Alaa SaadNoch keine Bewertungen

- HH SuiteDokument5 SeitenHH Suiteemma698Noch keine Bewertungen

- University Updates: Text BooksDokument2 SeitenUniversity Updates: Text BooksmadhavNoch keine Bewertungen

- Bisection Method: 1 The Intermediate Value TheoremDokument3 SeitenBisection Method: 1 The Intermediate Value TheoremEgidius PutrandoNoch keine Bewertungen

- Gauss QuadratureDokument9 SeitenGauss QuadratureAnkur ChauhanNoch keine Bewertungen