Another Lecture Notes

Uploaded by Leilani Manalaysay


LECTURE NOTES ON PHS 473: COMPUTATIONAL PHYSICS

BY

DR V.C. OZEBO

CONTENT

Use of numerical methods in Physics

Various methods of numerical differentiation and integration

Numerical solution of some differential equations of interest in Physics

Statistical analysis of data

CHAPTER ONE

ERRORS

An error may be defined as the difference between an exact value and a computed value.

Suppose the exact value of the solution of a computational problem is 1.023; when a computer or calculator is used, the solution is obtained as 1.022823. Hence, the error in this calculation is $1.023 - 1.022823 = 0.000177$.

Types of Error

Round-off error:- This is the error due to rounding off a quantity because of the limited number of digits available.

Truncation error:- Truncation means cutting off the remaining digits, i.e. no rounding off. For instance, 1.8123459 may be truncated to 1.812345 because of a preset allowable number of digits.

Absolute error:- The absolute value of an error is called the absolute error; that is,

$$\text{Absolute error} = |\text{error}|$$

Relative error:- Relative error is the ratio of the absolute error to the absolute value of the exact value; that is,

$$\text{Relative error} = \frac{\text{Absolute error}}{|\text{exact value}|}$$

Percentage error:- This is equal to the relative error × 100.

Inherent error:- In a numerical calculation, we may make some basic mathematical assumptions to simplify a problem. Because of these assumptions, some errors are present at the very beginning of the process. This error is known as inherent error.

Accumulated error:- Consider the following procedure:

$$Y_{i+1} = 100\,Y_i \qquad (i = 0, 1, 2, \ldots)$$

Therefore,

$$Y_1 = 100\,Y_0, \quad Y_2 = 100\,Y_1, \quad Y_3 = 100\,Y_2, \quad \text{etc.}$$

Let the exact value of $Y_0$ be 9.98. Suppose we start with $Y_0 = 10$. Here, there is an inherent error of 0.02. Therefore,

$$Y_1 = 100\,Y_0 = 100 \times 10 = 1000$$

$$Y_2 = 100\,Y_1 = 100 \times 1000 = 100{,}000$$

$$Y_3 = 100\,Y_2 = 100 \times 100{,}000 = 10{,}000{,}000$$

The table below shows the exact and computed values.

| Variable | Exact value | Computed value | Error |
|---|---|---|---|
| $Y_0$ | 9.98 | 10 | 0.02 |
| $Y_1$ | 998 | 1000 | 2 |
| $Y_2$ | 99,800 | 100,000 | 200 |
| $Y_3$ | 9,980,000 | 10,000,000 | 20,000 |

Notice how the error accumulates: a small error of 0.02 in $Y_0$ leads to an error of 20,000 in $Y_3$. So, in a sequence of computations, an error in one value may affect the computation of the next value, and the error accumulates. This is called accumulated error.

Relative Accumulated Error

This is the ratio of the accumulated error to the exact value at that iteration. For the above example, the relative accumulated error is shown below.

| Variable | Exact value | Computed value | Accumulated error | Relative accumulated error |
|---|---|---|---|---|
| $Y_0$ | 9.98 | 10 | 0.02 | 0.02/9.98 = 0.002004 |
| $Y_1$ | 998 | 1000 | 2 | 2/998 = 0.002004 |
| $Y_2$ | 99,800 | 100,000 | 200 | 200/99,800 = 0.002004 |
| $Y_3$ | 9,980,000 | 10,000,000 | 20,000 | 20,000/9,980,000 = 0.002004 |

Notice that the relative accumulated error is the same for all the values.
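The arithmetic in the two tables can be reproduced with the short Python sketch below. It is illustrative only: the function name `accumulated_error_table` and its parameters (`factor`, `steps`) are assumptions, not part of the notes. It iterates $Y_{i+1} = 100\,Y_i$ from both the exact start (9.98) and the rounded start (10) and reports the accumulated and relative accumulated errors.

```python
def accumulated_error_table(exact_y0, computed_y0, factor=100.0, steps=3):
    """Iterate Y_{i+1} = factor * Y_i from an exact and a perturbed start value.

    Returns a list of tuples (i, exact, computed, error, relative error).
    """
    rows = []
    exact, computed = exact_y0, computed_y0
    for i in range(steps + 1):
        error = computed - exact             # accumulated error at step i
        rows.append((i, exact, computed, error, error / exact))
        exact *= factor                      # advance both sequences
        computed *= factor
    return rows


for i, exact, computed, err, rel in accumulated_error_table(9.98, 10.0):
    print(f"Y{i}: exact={exact:g} computed={computed:g} "
          f"error={err:.4g} relative={rel:.6f}")
```

Because both sequences are multiplied by the same factor at every step, the absolute error grows a hundredfold per step while the relative error stays at about 0.002004.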

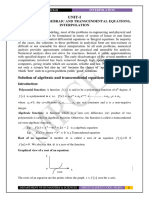

CHAPTER TWO

ROOT FINDING IN ONE DIMENSION

This involves searching for solutions of equations of the form $F(x) = 0$.

The various methods include:

1. Bisection Method

This is the simplest method of finding a root of an equation. Here we need two initial guesses, $x_a$ and $x_b$, which bracket the root.

Let $F_a = F(x_a)$ and $F_b = F(x_b)$ such that $F_a F_b \le 0$ (see Fig. 1).

Figure 1: Graphical representation of the bisection method, showing two initial guesses ($x_a$ and $x_b$) bracketing the root.

Clearly, if $F_a F_b = 0$, then one or both of $x_a$ and $x_b$ must be a root of $F(x) = 0$.

The basic algorithm for the bisection method relies on repeated application of the following:

Let $x_c = \dfrac{x_a + x_b}{2}$.

If $F_c = F(x_c) = 0$, then $x = x_c$ is an exact solution.

Else, if $F_a F_c < 0$, the root lies in the interval $(x_a, x_c)$.

Else, the root lies in the interval $(x_c, x_b)$.

By replacing the interval $(x_a, x_b)$ with either $(x_a, x_c)$ or $(x_c, x_b)$ (whichever brackets the root), the error in our estimate of the solution of $F(x) = 0$ is, on average, halved.

We repeat this interval halving until either the exact root has been found or the interval is smaller than some specified tolerance.

Hence, root bisection is a simple but slowly convergent method for finding a solution of $F(x) = 0$, assuming the function f is continuous. It is based on the intermediate value theorem, which states that if a continuous function f has opposite signs at some $x = a$ and $x = b\ (> a)$, that is, either $f(a) < 0,\ f(b) > 0$ or $f(a) > 0,\ f(b) < 0$, then f must be 0 somewhere on $[a, b]$.

We thus obtain a solution by repeated bisection of the interval, and in each iteration we pick the half which also satisfies that sign condition.

Example:

Given that $F(x) = x - 2.44$, solve $F(x) = 0$ using the method of root bisection.

Solution:

Given that $F(x) = x - 2.44 = 0$, the direct method gives $x = 2.44$.

But by root bisection, let the trial value of x be -1:

| x | F(x) = x - 2.44 |
|---|---|
| -1 (trial value) | -3.44 |
| 0 | -2.44 |
| 1 | -1.44 |
| 2 | -0.44 |
| 3 | +0.56 |

It is clear from the table that the solution lies between x = 2 and x = 3.

Now, choosing x = 2.5, we obtain F(x) = +0.06; we thus discard x = 3, since the root must lie between 2 and 2.5. Bisecting 2 and 2.5, we have x = 2.25 with F(x) = -0.19. Obviously, the answer must now lie between 2.25 and 2.5.

The bisection thus continues until we obtain F(x) very close to zero, with the values of F(x) at the two ends of the interval having opposite signs.

| x | F(x) = x - 2.44 |
|---|---|
| 2.25 | -0.19 |
| 2.375 | -0.065 |
| 2.4375 | -0.0025 |
| 2.50 | +0.06 |

When the above is implemented in a computer program, it may be instructed to stop at, say, $|F(x)| \le 10^{-4}$, since the computer may not get exactly to zero.

Full Algorithm

1. Define F(x).
2. Read $x_1$, $x_2$, values of x such that $F(x_1)F(x_2) < 0$.
3. Read the convergence tolerance, $s = 10^{-6}$, say.
4. Calculate $F(y)$, where $y = (x_1 + x_2)/2$.
5. If $|x_2 - x_1| \le s$, then y is a root. Go to 9.
6. If $|x_2 - x_1| > s$, go to 7.
7. If $F(x_1)F(y) \le 0$, $(x_1, y)$ contains a root; set $x_2 = y$ and return to step 4.
8. If not, $(y, x_2)$ contains a root; set $x_1 = y$ and return to step 4.
9. Write the root, y.

Flowchart for the Root Bisection Method

[Flowchart: Start → Dimension → Define F(x) → Input interval limits $x_1$, $x_2$ and tolerance s → $y = (x_1 + x_2)/2$ → compare $|x_2 - x_1|$ with s → test the sign of $F(x_1)F(y)$ → set $x_2 = y$ or $x_1 = y$ and repeat → Output root]
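The full algorithm above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the author's program: the function name `bisection`, the `max_iter` safeguard and the default tolerance are illustrative choices. The interval test of step 5 and the sign test of step 7 appear directly in the loop.

```python
def bisection(f, x1, x2, tol=1e-6, max_iter=200):
    """Find a root of f in [x1, x2] by repeated interval halving.

    Assumes f is continuous and f(x1) * f(x2) <= 0 (the root is bracketed).
    Stops when the bracketing interval is no longer than tol.
    """
    f1 = f(x1)
    if f1 * f(x2) > 0:
        raise ValueError("f(x1) and f(x2) must have opposite signs")
    for _ in range(max_iter):
        y = (x1 + x2) / 2.0                  # step 4: midpoint
        fy = f(y)
        if fy == 0.0 or abs(x2 - x1) <= tol:
            return y                         # exact root, or interval small enough
        if f1 * fy <= 0:                     # step 7: root lies in (x1, y)
            x2 = y
        else:                                # step 8: root lies in (y, x2)
            x1, f1 = y, fy
    return (x1 + x2) / 2.0


root = bisection(lambda x: x - 2.44, 2.0, 3.0, tol=1e-6)
print(root)   # should be very close to 2.44
```

Applied to $F(x) = x - 2.44$ on [2, 3] it needs about twenty halvings, since each halving divides the interval length by two and $2^{-20} < 10^{-6}$.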

2. The Regula Falsi (False Position) Method

This method is similar to the bisection method in the sense that it requires two initial guesses to bracket the root. However, instead of simply dividing the region in two, a linear interpolation is used to obtain a new point which is (hopefully, but not necessarily) closer to the root than the equivalent estimate for the bisection method. A graphical interpretation of this method is shown in Figure 2.

Figure 2: Root finding by the linear interpolation (regula falsi) method. The two initial guesses $x_a$ and $x_b$ must bracket the root.

The basic algorithm for the method is:

Let

$$x_c = x_a - f_a\,\frac{x_b - x_a}{f_b - f_a} = x_b - f_b\,\frac{x_b - x_a}{f_b - f_a} = \frac{x_a f_b - x_b f_a}{f_b - f_a}$$

If $f_c = f(x_c) = 0$, then $x = x_c$ is an exact solution.

Else, if $f_a f_c < 0$, the root lies in the interval $(x_a, x_c)$.

Else, the root lies in the interval $(x_c, x_b)$.

Because the solution remains bracketed at each step, convergence is guaranteed, as was the case for the bisection method. The method is first order and is exact for linear f.

Note also that the method should not be used near a solution.

Example

Find all real solutions of the equation $x^4 = 2$ by the method of false position.

Solution

Rewriting the equation in the form $f(x) = x^4 - 2 = 0$, let $x_a = 1$ and $x_b = 2$. Then

$$f_a = 1 - 2 = -1, \qquad f_b = 16 - 2 = 14$$

Therefore,

$$x_c = \frac{x_a f_b - x_b f_a}{f_b - f_a} = \frac{(1)(14) - (2)(-1)}{14 - (-1)} = \frac{16}{15} = 1.07$$

$$f_c = (1.07)^4 - 2 = -0.689$$

Now, from the algorithm, $f_c \ne 0$; hence $x_c$ is not the exact solution.

Again, $f_a f_c = (-1)(-0.689) = 0.689 > 0$.

Therefore, the root lies in the interval $(x_c, x_b)$, that is, (1.07, 2). Repeating the procedure on this interval converges to the positive root $x = 2^{1/4} \approx 1.1892$. Since $f(-x) = f(x)$, the other real solution is $x \approx -1.1892$.
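A minimal Python sketch of the false position iteration for $f(x) = x^4 - 2$ is given below. The stopping test on $|f(x_c)|$, the iteration cap, and the function and parameter names are illustrative assumptions. Each step applies $x_c = (x_a f_b - x_b f_a)/(f_b - f_a)$ and keeps whichever sub-interval still brackets the root.

```python
def false_position(f, xa, xb, tol=1e-10, max_iter=200):
    """Regula falsi: keep the root bracketed, but split the interval by linear interpolation."""
    fa, fb = f(xa), f(xb)
    if fa * fb > 0:
        raise ValueError("xa and xb must bracket a root")
    xc = xa
    for _ in range(max_iter):
        xc = (xa * fb - xb * fa) / (fb - fa)   # where the chord crosses the x-axis
        fc = f(xc)
        if abs(fc) <= tol:
            return xc
        if fa * fc < 0:                        # root lies in (xa, xc)
            xb, fb = xc, fc
        else:                                  # root lies in (xc, xb)
            xa, fa = xc, fc
    return xc


def f(x):
    return x**4 - 2


print(false_position(f, 1.0, 2.0), false_position(f, -2.0, -1.0))
```

The two calls should give approximately 1.1892 and -1.1892. For this convex f the end point $x_b = 2$ of the first bracket never moves, which is why the convergence is only linear and several dozen steps are needed for this tolerance.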

3. The Newton-Raphson Method

This is another iteration method for solving equations of the form $f(x) = 0$, where f is assumed to have a continuous derivative $f'$. The method is commonly used because of its simplicity and great speed. The idea is that we approximate the graph of f by suitable tangents. Using an approximate value $x_0$ obtained from the graph of f, we let $x_1$ be the point of intersection of the x-axis and the tangent to the curve of f at $x_0$.

Figure 4: Illustration of the tangents to the curve in the Newton-Raphson method

Then,

$$\tan\beta = f'(x_0) = \frac{f(x_0)}{x_0 - x_1}$$

Hence,

$$x_1 = x_0 - \frac{f(x_0)}{f'(x_0)}$$

In the second step, we compute

$$x_2 = x_1 - \frac{f(x_1)}{f'(x_1)}$$

and, generally,

$$x_{k+1} = x_k - \frac{f(x_k)}{f'(x_k)}$$


Algorithm for the Newton-Raphson method

1. Define f(x).
2. Define f'(x).
3. Read $x_0$ and s (tolerance).
4. k = 0.
5. $x_{k+1} = x_k - f(x_k)/f'(x_k)$.
6. Print k + 1, $x_{k+1}$, $f(x_{k+1})$.
7. If $|x_{k+1} - x_k| < s$, go to 10.
8. k = k + 1.
9. Go to step 5.
10. Print "The root is", $x_{k+1}$.
11. End.

Consider the equation $x^3 + 2x^2 + 2.2x + 0.4 = 0$.

Here,

$$f(x) = x^3 + 2x^2 + 2.2x + 0.4, \qquad f'(x) = 3x^2 + 4x + 2.2$$

Let the initial guess be $x_0 = -0.5$.

Let us now write a FORTRAN program for solving the equation using the Newton-Raphson method.

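C     NEWTON-RAPHSON METHOD FOR F(X) = X**3 + 2X**2 + 2.2X + 0.4 = 0
C     X(0) HOLDS THE INITIAL GUESS, X(K+1) THE (K+1)-TH ITERATE
      DIMENSION X(0:100)
C     STATEMENT FUNCTIONS FOR F AND ITS DERIVATIVE F1
      F(T) = T**3 + 2.0*T*T + 2.2*T + 0.4
      F1(T) = 3.0*T*T + 4.0*T + 2.2
      WRITE (*,*) 'TYPE THE INITIAL GUESS'
      READ (*,*) X(0)
      WRITE (*,*) 'TYPE THE TOLERANCE VALUE'
      READ (*,*) S
      WRITE (*,*) 'ITERATION        X           F(X)'
      K = 0
C     NEWTON STEP: X(K+1) = X(K) - F(X(K))/F'(X(K))
   50 X(K+1) = X(K) - F(X(K)) / F1(X(K))
      WRITE (*,10) K+1, X(K+1), F(X(K+1))
   10 FORMAT (1X, I6, 5X, F10.4, 5X, F10.4)
      IF (ABS(X(K+1) - X(K)) .LE. S) GOTO 100
C     STOP AFTER 100 ITERATIONS SO THAT X(K+1) STAYS WITHIN X(0:100)
      IF (K .GE. 99) GOTO 100
      K = K + 1
      GOTO 50
  100 WRITE (*,15) X(K+1)
   15 FORMAT (1X, 'THE FINAL ROOT IS ', F10.4)
      STOP
      END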

Assignment

If S = 0.00005, manually find the root of the above example after 5 iterations.

CHOOSING THE INITIAL GUESS, $x_0$

In the Newton-Raphson method, we have to start with an initial guess, $x_0$. How do we choose $x_0$?

If f(a) and f(b) are of opposite signs, then there is at least one value of x between a and b such that f(x) = 0. We can start with x = 0 and find f(0), f(1), f(2), .... If there is a number k such that f(k) and f(k + 1) are of opposite signs, then there is at least one root between k and k + 1, so we can choose the initial guess $x_0 = k$ or $x_0 = k + 1$.

Example:

Consider the equation $x^3 - 7x^2 + x + 1 = 0$.

f(0) = 1 (positive)

f(1) = 1 - 7 + 1 + 1 = -4 (negative)

Therefore, there is a root between 0 and 1; hence our initial guess $x_0$ may be taken as 0 or 1.

Example:

Evaluate a real root of $x^3 + 12.1x^2 + 13.1x + 22.2 = 0$ using the Newton-Raphson method, correct to three decimal places.

Solution:

$$f(x) = x^3 + 12.1x^2 + 13.1x + 22.2$$

f(0) = 22.2 (positive).

Now, since all the coefficients are positive, f(1), f(2), ... are all positive, so the equation has no positive root.

We thus search on the negative side:

f(-1) = 20.2 (positive)

f(-2), f(-3), ..., f(-11) are also positive, but f(-12) is negative, so we can choose $x_0 = -11$.

Iteration 1

$$f(x) = x^3 + 12.1x^2 + 13.1x + 22.2, \qquad f'(x) = 3x^2 + 24.2x + 13.1$$

Now, with $x_0 = -11$:

$$f(x_0) = f(-11) = 11.2$$

$$f'(x_0) = f'(-11) = 3(-11)^2 + 24.2(-11) + 13.1 = 109.9$$

Therefore,

$$x_1 = x_0 - \frac{f(x_0)}{f'(x_0)} = -11 - \frac{11.2}{109.9} = -11.1019$$

Iteration 2

$x_1 = -11.1019$

$$f(x_1) = f(-11.1019) = -0.2169, \qquad f'(x_1) = f'(-11.1019) = 114.1906$$

Therefore,

$$x_2 = x_1 - \frac{f(x_1)}{f'(x_1)} = -11.1019 - \frac{(-0.2169)}{114.1906} = -11.100001$$

Iteration 3

$x_2 = -11.100001$

$$f(x_2) = f(-11.100001) = -0.0001131, \qquad f'(x_2) = f'(-11.100001) = 114.1101$$

Therefore,

$$x_3 = x_2 - \frac{f(x_2)}{f'(x_2)} = -11.100001 - \frac{(-0.0001131)}{114.1101} = -11.1000000$$

Now, correct to three decimal places, $x_2 = x_3$, and so the real root is x = -11.100.
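The hand iterations can be checked with a short Python sketch of the Newton-Raphson algorithm, applied to $f(x) = x^3 + 12.1x^2 + 13.1x + 22.2$ (the polynomial for which $f(-11) = 11.2$ and $f'(-11) = 109.9$). This is a minimal illustration, not a translation of the FORTRAN program: the names `newton_raphson`, `tol` and `max_iter` are assumptions. As in step 7 of the algorithm, the loop stops when $|x_{k+1} - x_k| <$ tol.

```python
def newton_raphson(f, fprime, x0, tol=1e-6, max_iter=50):
    """Newton-Raphson iteration x_{k+1} = x_k - f(x_k)/f'(x_k).

    Returns (root, number of iterations); stops when |x_{k+1} - x_k| < tol.
    """
    x = x0
    for k in range(1, max_iter + 1):
        d = fprime(x)
        if d == 0.0:
            raise ZeroDivisionError("f'(x_k) = 0: the Newton step is undefined")
        x_new = x - f(x) / d
        print(f"{k:3d}  {x_new:.7f}  {f(x_new):.7f}")   # iteration, x, f(x)
        if abs(x_new - x) < tol:
            return x_new, k
        x = x_new
    raise RuntimeError("no convergence within max_iter iterations")


def f(x):
    return x**3 + 12.1 * x**2 + 13.1 * x + 22.2


def fprime(x):
    return 3 * x**2 + 24.2 * x + 13.1


root, iterations = newton_raphson(f, fprime, -11.0)
print(f"root = {root:.3f} after {iterations} iterations")
```

Starting from $x_0 = -11$ it should stop after about three iterations at x ≈ -11.1. In fact $f(x) = (x + 11.1)(x^2 + x + 2)$, and the quadratic factor has no real zeros, so -11.1 is the only real root.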

Example 2

Set up a Newton-Raphson iteration for computing the square root x of a given positive number c, and apply it to c = 2.

Solution

We have $x = \sqrt{c}$; hence

$$f(x) = x^2 - c = 0, \qquad f'(x) = 2x$$

The Newton-Raphson formula becomes:

$$x_{k+1} = x_k - \frac{f(x_k)}{f'(x_k)} = x_k - \frac{x_k^2 - c}{2x_k} = \frac{2x_k^2 - x_k^2 + c}{2x_k} = \frac{x_k^2 + c}{2x_k} = \frac{1}{2}\left(x_k + \frac{c}{x_k}\right)$$

Therefore, for c = 2, choosing $x_0 = 1$, we obtain:

$$x_1 = 1.500000, \quad x_2 = 1.416667, \quad x_3 = 1.414216, \quad x_4 = 1.414214$$

Now, $x_4$ is exact to 6 decimal places.
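The square-root iteration is short enough to check directly. Below is a minimal Python sketch (the function name `newton_sqrt` and its parameters are illustrative assumptions) that returns the first four iterates of $x_{k+1} = \frac{1}{2}(x_k + c/x_k)$ for c = 2 and $x_0 = 1$.

```python
def newton_sqrt(c, x0=1.0, n_steps=4):
    """Return the iterates x_1 ... x_n of x_{k+1} = (x_k + c/x_k) / 2 for sqrt(c)."""
    iterates = []
    x = x0
    for _ in range(n_steps):
        x = 0.5 * (x + c / x)      # Newton step for f(x) = x^2 - c
        iterates.append(x)
    return iterates


print([f"{x:.6f}" for x in newton_sqrt(2.0)])
# ['1.500000', '1.416667', '1.414216', '1.414214']
```

The number of correct digits roughly doubles at each step, which is the quadratic convergence that makes Newton-Raphson fast near a simple root.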

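Now, what happens if $f'(x_k) = 0$? The Newton step then requires a division by zero, and the method breaks down. Recall that if f(x) = 0 and f'(x) = 0 at the same point, we have a repeated root (a root of multiplicity greater than one), so f'(x) is close to zero near such a root. For a root of even multiplicity, the sign of f does not change. The method also fails for a complex solution (e.g. $x^2 + 1 = 0$), since real iterates cannot converge to a complex root.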

4. The Secant Method

We obtain the secant method from the Newton-Raphson method by replacing the derivative $f'(x)$ by the difference quotient

$$f'(x_k) \approx \frac{f(x_k) - f(x_{k-1})}{x_k - x_{k-1}}$$

Then, instead of using $x_{k+1} = x_k - \dfrac{f(x_k)}{f'(x_k)}$ (as in Newton-Raphson), we have

$$x_{k+1} = x_k - f(x_k)\,\frac{x_k - x_{k-1}}{f(x_k) - f(x_{k-1})}$$

Geometrically, $x_{k+1}$ is the point where the x-axis is intersected by the secant of f(x) passing through $P_{k-1}$ and $P_k$ in the figure below.

We thus need two starting values, $x_0$ and $x_1$.

[Figure: the curve y = f(x) and the secant through $P_{k-1}$ (at $x_{k-1}$) and $P_k$ (at $x_k$), which cuts the x-axis at $x_{k+1}$]
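A minimal Python sketch of the secant iteration follows. The function name, the tolerance and the iteration cap are illustrative assumptions, and $f(x) = x^4 - 2$ with $x_0 = 1$, $x_1 = 2$ is borrowed from the false position example as a test case. Unlike regula falsi, the secant method does not keep the root bracketed, so convergence is not guaranteed, but near a simple root it typically converges faster (superlinearly).

```python
def secant(f, x0, x1, tol=1e-10, max_iter=100):
    """Secant method: Newton-Raphson with f'(x_k) replaced by a difference quotient."""
    f0, f1 = f(x0), f(x1)
    for _ in range(max_iter):
        if f1 == f0:
            raise ZeroDivisionError("f(x_k) == f(x_{k-1}): the secant is horizontal")
        x2 = x1 - f1 * (x1 - x0) / (f1 - f0)
        if abs(x2 - x1) < tol:
            return x2
        x0, f0 = x1, f1            # keep only the two most recent points
        x1, f1 = x2, f(x2)
    raise RuntimeError("secant method did not converge within max_iter steps")


root = secant(lambda x: x**4 - 2, 1.0, 2.0)
print(root)   # should be close to 2**0.25, about 1.1892
```

Only one new function evaluation is needed per step, and no derivative has to be coded, which is the practical advantage over Newton-Raphson.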

CHAPTER THREE

COMPUTATION OF SOME STANDARD FUNCTIONS

Consider the series for sin x:

$$\sin x = x - \frac{x^3}{3!} + \frac{x^5}{5!} - \cdots$$

For a given value of x, sin x can be evaluated by summing the terms on the right-hand side. Similarly, cos x, $e^x$, etc. can be found from the following series:

$$\cos x = 1 - \frac{x^2}{2!} + \frac{x^4}{4!} - \cdots$$

$$e^x = 1 + x + \frac{x^2}{2!} + \frac{x^3}{3!} + \cdots$$

Example:

Evaluate sin(0.25) correct to five decimal places.

Solution:

Given that x = 0.25 (in radians),

$$\frac{x^3}{3!} = \frac{(0.25)^3}{6} = 0.0026042$$

$$\frac{x^5}{5!} = \frac{(0.25)^5}{120} = 0.0000081$$

$$\frac{x^7}{7!} = \frac{(0.25)^7}{5040} = 0.0000000 \quad \text{(to 7 D)}$$

Therefore,

$$\sin x = x - \frac{x^3}{3!} + \frac{x^5}{5!} = 0.25 - 0.0026042 + 0.0000081 = 0.2474039 = 0.24740 \ \text{(correct to 5 D)}$$
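The summation can be automated by generating each term from the previous one, since the ratio of successive terms of the sine series is $-x^2/\big((n+1)(n+2)\big)$ when the current term contains $x^n$. The sketch below is illustrative: the function name `sin_series` and the stopping tolerance are assumptions, and `math.sin` is used only as a check.

```python
import math


def sin_series(x, tol=1e-10, max_terms=50):
    """Sum sin x = x - x^3/3! + x^5/5! - ... until a term falls below tol."""
    total = 0.0
    term = x            # first term, x^1 / 1!
    n = 1               # power of x in the current term
    for _ in range(max_terms):
        if abs(term) < tol:
            break
        total += term
        term *= -x * x / ((n + 1) * (n + 2))   # next term from the current one
        n += 2
    return total


print(f"series: {sin_series(0.25):.5f}   math.sin: {math.sin(0.25):.5f}")
```

For x = 0.25 only four terms exceed the tolerance, in line with the hand calculation where the $x^7$ term is already negligible at five decimal places.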

1. Taylor's Series Expansion

Let f(x) be a function. We want to write f(x) as a power series about a point $x_0$. That is, we want to write f(x) as:

$$f(x) = c_0 + c_1(x - x_0) + c_2(x - x_0)^2 + \cdots \qquad (1)$$

where $c_0, c_1, c_2, \ldots$ are constants.

We are interested in finding the constants $c_0, c_1, \ldots$, given f(x) and $x_0$.

Therefore, setting $x = x_0$ in equation (1),

$$c_0 = f(x_0)$$

If we differentiate equation (1), we obtain:

$$f'(x) = c_1 + 2c_2(x - x_0) + 3c_3(x - x_0)^2 + \cdots \qquad (2)$$

Therefore,

$$f'(x_0) = c_1 \quad \text{or} \quad c_1 = f'(x_0)$$

Differentiating equation (2), we obtain:

$$f''(x) = 2c_2 + 3(2)c_3(x - x_0) + \cdots$$

Hence,

$$f''(x_0) = 2c_2 \quad \text{or} \quad c_2 = \frac{f''(x_0)}{2!}$$

Proceeding like this, we get:

$$c_3 = \frac{f'''(x_0)}{3!} \quad \text{and} \quad c_4 = \frac{f^{(4)}(x_0)}{4!}$$

In general,

$$c_k = \frac{f^{(k)}(x_0)}{k!}$$

Equation (1) thus becomes:

$$f(x) = f(x_0) + \frac{f'(x_0)}{1!}(x - x_0) + \frac{f''(x_0)}{2!}(x - x_0)^2 + \cdots \qquad (3)$$

This is called the Taylor series expansion about $x_0$.

Taylor's series can be expressed in various forms. Putting $x = x_0 + h$ in equation (3), we get another form of Taylor's series:

$$f(x_0 + h) = f(x_0) + \frac{f'(x_0)}{1!}h + \frac{f''(x_0)}{2!}h^2 + \cdots \qquad (4)$$

Some authors use x in place of h in equation (4), so we get yet another form of Taylor's series:

$$f(x_0 + x) = f(x_0) + \frac{f'(x_0)}{1!}x + \frac{f''(x_0)}{2!}x^2 + \cdots \qquad (5)$$

2. The Maclaurin Series

The Taylor series of equation (5) about $x_0 = 0$ is called the Maclaurin series of f(x), that is,

$$f(x) = f(0) + \frac{f'(0)}{1!}x + \frac{f''(0)}{2!}x^2 + \cdots \qquad (6)$$

3. Binomial Series

Consider

$$f(x) = (1 + x)^n$$

$$f'(x) = n(1 + x)^{n-1}$$

$$f''(x) = n(n - 1)(1 + x)^{n-2}$$

$$f'''(x) = n(n - 1)(n - 2)(1 + x)^{n-3}$$

Now, applying the above to the Maclaurin series and noting that

$$f(0) = 1, \quad f'(0) = n, \quad f''(0) = n(n - 1), \quad f'''(0) = n(n - 1)(n - 2),$$

we obtain:

$$(1 + x)^n = 1 + nx + \frac{n(n - 1)}{2!}x^2 + \frac{n(n - 1)(n - 2)}{3!}x^3 + \cdots$$

This is the binomial series.

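Example

Derive the Maclaurin series for $e^{-x}$ and hence evaluate $e^{-0.2}$ correct to two decimal places.

Solution:

$$f(x) = e^{-x}, \quad f(0) = 1$$

$$f'(x) = -e^{-x}, \quad f'(0) = -1$$

$$f''(x) = e^{-x}, \quad f''(0) = 1$$

$$f'''(x) = -e^{-x}, \quad f'''(0) = -1$$

Now, by Maclaurin's series,

$$f(x) = f(0) + \frac{f'(0)}{1!}x + \frac{f''(0)}{2!}x^2 + \frac{f'''(0)}{3!}x^3 + \cdots$$

That is,

$$e^{-x} = 1 - \frac{x}{1!} + \frac{x^2}{2!} - \frac{x^3}{3!} + \cdots$$

Therefore,

$$e^{-0.2} = 1 - 0.2 + \frac{(0.2)^2}{2} - \frac{(0.2)^3}{6} + \frac{(0.2)^4}{24} - \cdots = 0.8187 = 0.82 \ \text{(to 2 D)}$$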
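A short Python check of the partial sums of the Maclaurin series for $e^{-x}$ at x = 0.2 is sketched below. The function name `exp_neg_series` is an illustrative assumption, and `math.exp` is used only for comparison. Each term is obtained from the previous one by multiplying by $-x/(k+1)$.

```python
import math


def exp_neg_series(x, n_terms):
    """Partial sum of the Maclaurin series e^{-x} = sum_k (-x)^k / k! using n_terms terms."""
    total, term = 0.0, 1.0          # term starts at (-x)^0 / 0! = 1
    for k in range(n_terms):
        total += term
        term *= -x / (k + 1)        # (-x)^{k+1}/(k+1)! from (-x)^k/k!
    return total


for n in range(1, 6):
    print(n, f"{exp_neg_series(0.2, n):.6f}")
print("math.exp(-0.2) =", f"{math.exp(-0.2):.6f}")
```

The partial sums are 1, 0.8, 0.82, 0.818667 and 0.818733, against $e^{-0.2} \approx 0.818731$, so the value to two decimal places (0.82) is settled from the third partial sum onward.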

CHAPTER FOUR

INTERPOLATION

Suppose f(x) is a function whose values at certain points $x_0, x_1, \ldots, x_n$ are known. The values are $f(x_0), f(x_1), \ldots, f(x_n)$. Consider a point x, different from $x_0, x_1, \ldots, x_n$, at which f(x) is not known.

We can find an approximate value of f(x) from the known values. This method of finding f(x) from the known values is called interpolation. We say that we interpolate f(x) from $f(x_0), f(x_1), \ldots, f(x_n)$.

Linear Interpolation

Let $x_0$, $x_1$ be two points and $f_0$, $f_1$ the function values at these two points, respectively. Let x be a point between $x_0$ and $x_1$. We are interested in interpolating f(x) from the values $f(x_0)$ and $f(x_1)$.

Now consider the Taylor series:

$$f(x_1) = f(x_0) + \frac{f'(x_0)}{1!}(x_1 - x_0) + \cdots$$

Considering only the first two terms, we have:

$$f_1 = f_0 + f'(x_0)(x_1 - x_0)$$

Therefore,

$$f'(x_0) = \frac{f_1 - f_0}{x_1 - x_0}$$

[Figure: points $x_0$, x, $x_1$ on the x-axis, with known values $f_0$ and $f_1$ and the unknown value f(x) = ?]

The Taylor series for f(x) about $x_0$ gives

$$f(x) = f(x_0) + \frac{f'(x_0)}{1!}(x - x_0) + \cdots$$

Again considering only the first two terms, we have:

$$f(x) = f(x_0) + f'(x_0)(x - x_0) = f_0 + \frac{f_1 - f_0}{x_1 - x_0}(x - x_0)$$

Now let $\dfrac{x - x_0}{x_1 - x_0}$ be denoted by p. We thus get

$$f(x) = f_0 + (f_1 - f_0)p = f_0 + pf_1 - pf_0 = f_0 - pf_0 + pf_1$$

Therefore,

$$f(x) = (1 - p)f_0 + pf_1$$

This is called the linear interpolation formula. Since x is a point between $x_0$ and $x_1$, p is a non-negative fractional value, i.e. $0 \le p \le 1$.

Example

Consider the following table:

| x | 7 | 19 |
|---|---|---|
| f(x) | 15 | 35 |

Find the value of f(10).

Solution:

$x_0 = 7$, $x_1 = 19$, $f_0 = 15$, $f_1 = 35$, and x = 10.

$$p = \frac{x - x_0}{x_1 - x_0} = \frac{10 - 7}{19 - 7} = \frac{3}{12} = 0.25$$

Therefore,

$$1 - p = 1 - 0.25 = 0.75$$

Hence,

$$f(x) = (1 - p)f_0 + pf_1 = (0.75 \times 15) + (0.25 \times 35) = 11.25 + 8.75$$

f(10) = 20
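The linear interpolation formula translates into a one-line function. A minimal Python sketch (the name `linear_interpolate` is an illustrative assumption) that reproduces the example:

```python
def linear_interpolate(x0, f0, x1, f1, x):
    """Linear interpolation f(x) = (1 - p) f0 + p f1, with p = (x - x0)/(x1 - x0)."""
    p = (x - x0) / (x1 - x0)
    return (1 - p) * f0 + p * f1


print(linear_interpolate(7, 15, 19, 35, 10))  # 20.0
```

At $x = x_0$ (p = 0) and $x = x_1$ (p = 1) the formula returns the tabulated values exactly, as an interpolating line should.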

Lagrange Interpolation

Linear Lagrange interpolation is interpolation by the straight line through $(x_0, f_0)$ and $(x_1, f_1)$.

Thus, by that idea, the linear Lagrange polynomial $p_1$ is the sum $p_1 = L_0 f_0 + L_1 f_1$, with $L_0$ the linear polynomial that is 1 at $x_0$ and 0 at $x_1$. Similarly, $L_1$ is 0 at $x_0$ and 1 at $x_1$.

[Figure: the curve y = f(x) and the straight line $p_1(x)$ through $(x_0, f_0)$ and $(x_1, f_1)$]

Therefore,

$$L_0(x) = \frac{x - x_1}{x_0 - x_1}, \qquad L_1(x) = \frac{x - x_0}{x_1 - x_0}$$

This gives the linear Lagrange polynomial:

$$p_1(x) = L_0 f_0 + L_1 f_1 = \frac{x - x_1}{x_0 - x_1}f_0 + \frac{x - x_0}{x_1 - x_0}f_1$$

Example 1

Compute ln 5.3 from ln 5.0 = 1.6094 and ln 5.7 = 1.7405 by linear Lagrange interpolation, and determine the error from ln 5.3 = 1.6677.

Solution:

$x_0 = 5.0$, $x_1 = 5.7$, $f_0 = \ln 5.0$, $f_1 = \ln 5.7$.

Therefore,

$$L_0(5.3) = \frac{5.3 - 5.7}{5.0 - 5.7} = \frac{-0.4}{-0.7} = 0.57, \qquad L_1(5.3) = \frac{5.3 - 5.0}{5.7 - 5.0} = \frac{0.3}{0.7} = 0.43$$

Hence,

$$\ln 5.3 \approx L_0(5.3)f_0 + L_1(5.3)f_1 = 0.57 \times 1.6094 + 0.43 \times 1.7405 = 1.6658$$

The error is 1.6677 - 1.6658 = 0.0019.

The Quadratic Lagrange Interpolation

This is the interpolation of given points $(x_0, f_0)$, $(x_1, f_1)$, $(x_2, f_2)$ by a second-degree polynomial $p_2(x)$, which, by Lagrange's idea, is:

$$p_2(x) = L_0(x)f_0 + L_1(x)f_1 + L_2(x)f_2$$

with $L_0(x_0) = 1$, $L_1(x_1) = 1$, $L_2(x_2) = 1$ and $L_0(x_1) = L_0(x_2) = 0$, etc. We therefore claim that:

$$L_0(x) = \frac{l_0(x)}{l_0(x_0)} = \frac{(x - x_1)(x - x_2)}{(x_0 - x_1)(x_0 - x_2)}$$

$$L_1(x) = \frac{l_1(x)}{l_1(x_1)} = \frac{(x - x_0)(x - x_2)}{(x_1 - x_0)(x_1 - x_2)}$$

$$L_2(x) = \frac{l_2(x)}{l_2(x_2)} = \frac{(x - x_0)(x - x_1)}{(x_2 - x_0)(x_2 - x_1)}$$

The above relations are valid since the numerator makes $L_k(x_j) = 0$ for $j \ne k$, and the denominator makes $L_k(x_k) = 1$ because it equals the numerator at $x = x_k$.

Example 2

Compute ln 5.3 by quadratic Lagrange interpolation, using the data of Example 1 and ln 7.2 = 1.9741. Compute the error and compare the accuracy with the linear Lagrange case.

Solution:

$$L_0(5.3) = \frac{(x - x_1)(x - x_2)}{(x_0 - x_1)(x_0 - x_2)} = \frac{(5.3 - 5.7)(5.3 - 7.2)}{(5.0 - 5.7)(5.0 - 7.2)} = \frac{(-0.4)(-1.9)}{(-0.7)(-2.2)} = \frac{0.76}{1.54} = 0.4935$$

$$L_1(5.3) = \frac{(x - x_0)(x - x_2)}{(x_1 - x_0)(x_1 - x_2)} = \frac{(5.3 - 5.0)(5.3 - 7.2)}{(5.7 - 5.0)(5.7 - 7.2)} = \frac{(0.3)(-1.9)}{(0.7)(-1.5)} = \frac{-0.57}{-1.05} = 0.5429$$

$$L_2(5.3) = \frac{(x - x_0)(x - x_1)}{(x_2 - x_0)(x_2 - x_1)} = \frac{(5.3 - 5.0)(5.3 - 5.7)}{(7.2 - 5.0)(7.2 - 5.7)} = \frac{(0.3)(-0.4)}{(2.2)(1.5)} = \frac{-0.12}{3.3} = -0.03636$$

Therefore,

$$\ln 5.3 \approx L_0(5.3)f_0 + L_1(5.3)f_1 + L_2(5.3)f_2 = 0.4935 \times 1.6094 + 0.5429 \times 1.7405 + (-0.03636) \times 1.9741$$

$$= 0.7942 + 0.9449 - 0.07177 = 1.6673 \ \text{(4 D)}$$

The error is 1.6677 - 1.6673 = 0.0004.

The above results show that quadratic Lagrange interpolation is more accurate in this case.

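Generally, the Lagrange interpolation polynomial may be written as:

$$f(x) \approx p_n(x) = \sum_{k=0}^{n} L_k(x)f_k = \sum_{k=0}^{n} \frac{l_k(x)}{l_k(x_k)}f_k$$

where $l_k(x) = (x - x_0)\cdots(x - x_{k-1})(x - x_{k+1})\cdots(x - x_n)$, so that

$$L_k(x_j) = \begin{cases} 1 & \text{if } j = k \\ 0 & \text{otherwise} \end{cases}$$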
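The general formula can be evaluated directly by building each $L_k(x)$ as a product over $j \ne k$. The Python sketch below is illustrative (the name `lagrange_interpolate` is an assumption) and reruns Examples 1 and 2. Because the weights are not rounded to two decimals, the linear estimate comes out near 1.6656 rather than the hand value 1.6658, while the quadratic estimate is about 1.6673.

```python
def lagrange_interpolate(xs, fs, x):
    """Evaluate p_n(x) = sum_k L_k(x) f_k, with L_k(x) = l_k(x) / l_k(x_k)."""
    total = 0.0
    for k, (xk, fk) in enumerate(zip(xs, fs)):
        weight = 1.0                         # builds up L_k(x)
        for j, xj in enumerate(xs):
            if j != k:
                weight *= (x - xj) / (xk - xj)
        total += weight * fk
    return total


linear = lagrange_interpolate([5.0, 5.7], [1.6094, 1.7405], 5.3)
quadratic = lagrange_interpolate([5.0, 5.7, 7.2], [1.6094, 1.7405, 1.9741], 5.3)
print(f"linear:    {linear:.4f}  error {1.6677 - linear:.4f}")
print(f"quadratic: {quadratic:.4f}  error {1.6677 - quadratic:.4f}")
```

The double loop costs $O(n^2)$ operations per evaluation point, which is fine for the small tables used here.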

CHAPTER FIVE

INTRODUCTION TO FINITE DIFFERENCES

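Consider the function $f(x) = x^3 - 5x^2 + 6$. The table below illustrates the various finite differences, where $\Delta f_i = f_{i+1} - f_i$, $\Delta^2 f_i = \Delta f_{i+1} - \Delta f_i$, and so on.

| x | f(x) | Δf | Δ²f | Δ³f |
|---|---|---|---|---|
| 0 | 6 | -4 | -4 | 6 |
| 1 | 2 | -8 | 2 | 6 |
| 2 | -6 | -6 | 8 | 6 |
| 3 | -12 | 2 | 14 | 6 |
| 4 | -10 | 16 | 20 | |
| 5 | 6 | 36 | | |
| 6 | 42 | | | |

Notice that a constant (6) occurs in the third forward difference column, $\Delta^3 f$.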
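The difference table above can be generated mechanically. The Python sketch below (the name `difference_table` is an illustrative assumption) repeatedly forms $\Delta f_i = f_{i+1} - f_i$ and shows the constant third differences for $f(x) = x^3 - 5x^2 + 6$.

```python
def difference_table(values):
    """Return [f, Δf, Δ²f, ...], forming forward differences until one entry remains."""
    table = [list(values)]
    while len(table[-1]) > 1:
        prev = table[-1]
        table.append([b - a for a, b in zip(prev, prev[1:])])
    return table


def f(x):
    return x**3 - 5 * x**2 + 6


table = difference_table([f(x) for x in range(7)])
for order, column in enumerate(table):
    print(order, column)
```

The fourth and higher differences then come out as zero, as expected for a cubic.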

It can be shown that, for this table (step h = 1),

$$\Delta^3 f = \frac{d^3y}{dx^3}$$

Therefore,

$$\frac{d^3y}{dx^3} = 6, \qquad \frac{d^2y}{dx^2} = 6x + A, \qquad \frac{dy}{dx} = 3x^2 + Ax + B$$

Hence,

$$y = x^3 + \frac{A}{2}x^2 + Bx + C$$

We now determine the constants.

At x = 0, f(x) = 6 = C. Therefore,

$$f(x) = x^3 + \frac{A}{2}x^2 + Bx + 6$$

At x = 1, f(x) = 2 (from the table). Therefore,

$$2 = 1 + \frac{A}{2} + B + 6 \qquad (1)$$

At x = 2, f(x) = -6. Hence,

$$-6 = 8 + \frac{A}{2}(4) + 2B + 6 \qquad (2)$$

We then solve equations (1) and (2) simultaneously for the other constants, which gives A = -10 and B = 0, recovering $f(x) = x^3 - 5x^2 + 6$.

With unit step (h = 1), the first forward difference is generally taken as an approximation to the first derivative, i.e. $\Delta f \approx f'(x)$ (exact if f is linear). Also, $\Delta^2 f \approx f''(x)$ (exact if f is quadratic). Similarly, $\Delta^3 f \approx f'''(x)$ (exact if f is cubic).

Note also that f(x) will be linear if the constant terms occur in the Δ column, quadratic if they occur in the Δ² column, and cubic if they occur in the Δ³ column.

Now,

$$f'(x) \approx \frac{f_1 - f_0}{x_1 - x_0} = \frac{f_{i+1} - f_i}{x_{i+1} - x_i}$$

Let $x_{i+1} - x_i = h$ = interval. Then

$$f'(x_i) \approx \frac{f_{i+1} - f_i}{h}$$

Now, from the Taylor series expansion, let us on this occasion consider the expansion about a point $x_i$:

$$f_{i+1} = f_i + hf_i^{(1)} + \frac{h^2}{2!}f_i^{(2)} + \frac{h^3}{3!}f_i^{(3)} + \cdots \qquad (1)$$

In this and subsequent equations, we denote the nth derivative evaluated at $x_i$ by $f_i^{(n)}$. Hence,

$$f_{i-1} = f_i - hf_i^{(1)} + \frac{h^2}{2!}f_i^{(2)} - \frac{h^3}{3!}f_i^{(3)} + \cdots \qquad (2)$$

From equations (1) and (2), three different expressions that approximate $f_i^{(1)}$ can be derived.

The first is from equation (1), truncating after the $h^2$ term:

$$f_{i+1} \approx f_i + hf_i^{(1)} + \frac{h^2}{2!}f_i^{(2)}$$

Therefore,

$$\frac{f_{i+1} - f_i}{h} = f_i^{(1)} + \frac{h}{2!}f_i^{(2)}$$

Hence,

$$f_i^{(1)} = \left.\frac{df}{dx}\right|_{x_i} = \frac{f_{i+1} - f_i}{h} - \frac{h}{2!}f_i^{(2)} \qquad (3)$$

The quantity $\dfrac{f_{i+1} - f_i}{h}$ is known as the forward difference, and it is clearly a poor approximation, since it is in error by approximately $\dfrac{h}{2}f_i^{(2)}$.

The second expression is from equation (2), again truncating after the $h^2$ term:

$$f_{i-1} \approx f_i - hf_i^{(1)} + \frac{h^2}{2!}f_i^{(2)}$$

Therefore,

$$\frac{f_i - f_{i-1}}{h} = f_i^{(1)} - \frac{h}{2!}f_i^{(2)}$$

Hence,

$$f_i^{(1)} = \left.\frac{df}{dx}\right|_{x_i} = \frac{f_i - f_{i-1}}{h} + \frac{h}{2!}f_i^{(2)} \qquad (4)$$

Also, the quantity $\dfrac{f_i - f_{i-1}}{h}$ is called the backward difference. The sign of its error is reversed compared with that of the forward difference.

The third expression is obtained by subtracting equation (2) from equation (1); we then have:

$$f_{i+1} - f_{i-1} = hf_i^{(1)} + hf_i^{(1)} + 2\frac{h^3}{3!}f_i^{(3)} + \cdots = 2hf_i^{(1)} + 2h^3\frac{f_i^{(3)}}{3!} + \cdots$$

Hence,

$$f_{i+1} - f_{i-1} = 2h\left[f_i^{(1)} + h^2\frac{f_i^{(3)}}{3!}\right] + \cdots$$

Therefore,

$$\frac{f_{i+1} - f_{i-1}}{2h} = f_i^{(1)} + h^2\frac{f_i^{(3)}}{3!} + \cdots$$

So,

$$f_i^{(1)} = \left.\frac{df}{dx}\right|_{x_i} = \frac{f_{i+1} - f_{i-1}}{2h} - h^2\frac{f_i^{(3)}}{3!} - \cdots \qquad (5)$$

The quantity $\dfrac{f_{i+1} - f_{i-1}}{2h}$ is known as the central difference approximation to $f_i^{(1)}$ and can be seen from equation (5) to be in error by approximately $\dfrac{h^2}{6}f_i^{(3)}$. Note that this is a better approximation than either the forward or the backward difference, since its error is proportional to $h^2$ rather than h.

By a similar procedure, a central difference approximation to $f_i^{(2)}$ can be obtained:

$$f_i^{(2)} = \left.\frac{d^2f}{dx^2}\right|_{x_i} \approx \frac{f_{i+1} - 2f_i + f_{i-1}}{h^2} \qquad (6)$$

The error in this approximation, also known as the second difference of f, is about $\dfrac{h^2}{12}f_i^{(4)}$.

Its error, like that of the central first difference, is of order $h^2$, which is far better than the order-h error of the forward and backward first differences.
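The error estimates in equations (3) to (6) can be observed numerically. The Python sketch below is illustrative: the function names are assumptions, and $f(x) = \sin x$ at x = 1 with h = 0.1 is an arbitrary test case chosen because its derivatives are known exactly. The comments give the leading error term (approximation minus exact value) implied by the Taylor expansions above.

```python
import math


def forward_diff(f, x, h):
    return (f(x + h) - f(x)) / h              # error about +(h/2) f''


def backward_diff(f, x, h):
    return (f(x) - f(x - h)) / h              # error about -(h/2) f''


def central_diff(f, x, h):
    return (f(x + h) - f(x - h)) / (2 * h)    # error about +(h^2/6) f'''


def second_central_diff(f, x, h):
    return (f(x + h) - 2 * f(x) + f(x - h)) / h**2   # error about +(h^2/12) f''''


x, h = 1.0, 0.1
exact_d1, exact_d2 = math.cos(x), -math.sin(x)     # derivatives of sin at x
for name, approx in [("forward", forward_diff(math.sin, x, h)),
                     ("backward", backward_diff(math.sin, x, h)),
                     ("central", central_diff(math.sin, x, h))]:
    print(f"{name:9s} {approx:.6f}  error {approx - exact_d1:+.2e}")
d2 = second_central_diff(math.sin, x, h)
print(f"{'second':9s} {d2:.6f}  error {d2 - exact_d2:+.2e}")
```

The forward and backward errors should be roughly equal in size (about 0.04) and opposite in sign, while the central difference error should be close to $(h^2/6)f'''$, about $-9 \times 10^{-4}$.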

Example:

The following is copied from the tabulation of a second-degree polynomial f(x) at values of x from 1 to 12 inclusive:

2, 2, ?, 8, 14, 22, 32, 46, ?, 74, 92, 112

The entries marked ? were illegible and, in addition, one error was made in transcription. Complete and correct the table.

Solution:

| x | f(x) | Δf | Δ²f |
|---|---|---|---|
| 1 | 2 | 0 | ? |
| 2 | 2 | ? | ? |
| 3 | ? | ? | ? |
| 4 | 8 | 6 | 2 |
| 5 | 14 | 8 | 2 |
| 6 | 22 | 10 | 4 |
| 7 | 32 | 14 | ? |
| 8 | 46 | ? | ? |
| 9 | ? | ? | ? |
| 10 | 74 | 18 | 2 |
| 11 | 92 | 20 | |
| 12 | 112 | | |

Because the polynomial is of second degree, the second differences (which are proportional to $d^2f/dx^2$) should be constant, and clearly this constant should be 2. Hence, the 6th value in the Δ² column should be 2 (not 4), and so should all the ? entries in this column.

Equally, the 7th value in the Δ column should be 12 and not 14. And since all the values in the Δ² column are the constant 2, the first two ? entries in the Δ column are 2 and 4 respectively, and the last two are 14 and 16 respectively. Working this backward to the f(x) column, the first ? = 4, while the 8th value in this column = 44 and not 46. Finally, the last ? in the f(x) column = 58.

The entries should therefore read:

2, 2, 4, 8, 14, 22, 32, 44, 58, 74, 92, 112
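The correction can be checked by rebuilding the table from the constant second difference. This Python sketch assumes, as the solution does, that the first two entries (2 and 2) are correct, so that $\Delta f = 0$ at x = 1, and that $\Delta^2 f = 2$; the name `rebuild_quadratic` is illustrative.

```python
def rebuild_quadratic(f1, d1, second_diff, n):
    """Rebuild n tabulated values from f(1), the first difference and a constant second difference."""
    values, d = [f1], d1
    for _ in range(n - 1):
        values.append(values[-1] + d)   # f(x+1) = f(x) + Δf(x)
        d += second_diff                # Δf(x+1) = Δf(x) + Δ²f
    return values


transcribed = [2, 2, None, 8, 14, 22, 32, 46, None, 74, 92, 112]   # None marks "?"
rebuilt = rebuild_quadratic(f1=2, d1=0, second_diff=2, n=len(transcribed))
mismatches = [(x, t, c) for x, (t, c) in enumerate(zip(transcribed, rebuilt), start=1)
              if t is not None and t != c]
print(rebuilt)      # [2, 2, 4, 8, 14, 22, 32, 44, 58, 74, 92, 112]
print(mismatches)   # [(8, 46, 44)]
```

Exactly one legible entry disagrees with the rebuilt table, which is consistent with the statement that a single transcription error was made.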

CHAPTER SIX

SIMULTANEOUS LINEAR EQUATIONS

Consider a set of N simultaneous linear equations in N variables (unknowns) $x_i$, $i = 1, 2, \ldots, N$. The equations take the general form:

$$\begin{aligned} A_{11}x_1 + A_{12}x_2 + \cdots + A_{1N}x_N &= b_1 \\ A_{21}x_1 + A_{22}x_2 + \cdots + A_{2N}x_N &= b_2 \\ &\;\;\vdots \\ A_{N1}x_1 + A_{N2}x_2 + \cdots + A_{NN}x_N &= b_N \end{aligned} \qquad (1)$$

where the $A_{ij}$ are constants and form the elements of a square matrix A. The $b_i$ are given and form a column vector b. If A is non-singular, equation (1) can be solved for the $x_i$ using the inverse of A, according to the formula $x = A^{-1}b$.

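Systems of Linear Equations

Consider the following system of linear equations:

$$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= a_{1,n+1} \\ a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= a_{2,n+1} \\ &\;\;\vdots \\ a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{nn}x_n &= a_{n,n+1} \end{aligned}$$

A solution to this system of equations is a set of values $x_1, x_2, \ldots, x_n$ which satisfies the above equations.

Consider the matrix:

$$\begin{pmatrix} a_{11} & a_{12} & a_{13} & \cdots & a_{1n} \\ a_{21} & a_{22} & a_{23} & \cdots & a_{2n} \\ \vdots & \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & a_{n3} & \cdots & a_{nn} \end{pmatrix}$$

This is called the coefficient matrix. The vector

$$\begin{pmatrix} a_{1,n+1} \\ \vdots \\ a_{n,n+1} \end{pmatrix}$$

is called the right-hand side vector.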

In some special cases, the solution can be obtained directly.

Case 1

A square matrix is called a diagonal matrix if all its off-diagonal entries are zero. Suppose the coefficient matrix is a diagonal matrix, i.e. it is of the form:

$$\begin{pmatrix} a_{11} & 0 & \cdots & 0 \\ 0 & a_{22} & \cdots & 0 \\ \vdots & & \ddots & \vdots \\ 0 & 0 & \cdots & a_{nn} \end{pmatrix}$$

The equations will then be of the form:

$$a_{11}x_1 = a_{1,n+1}, \quad a_{22}x_2 = a_{2,n+1}, \quad \ldots, \quad a_{nn}x_n = a_{n,n+1}$$

In this case, the solution can be written directly as:

$$x_1 = \frac{a_{1,n+1}}{a_{11}}, \quad x_2 = \frac{a_{2,n+1}}{a_{22}}, \quad \ldots, \quad x_n = \frac{a_{n,n+1}}{a_{nn}}$$

Case 2

A matrix is said to be lower triangular if all the entries above its diagonal are zero. Suppose the coefficient matrix is lower triangular, i.e. it is of the following form:

$$\begin{pmatrix} a_{11} & 0 & 0 & \cdots & 0 \\ a_{21} & a_{22} & 0 & \cdots & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ a_{n1} & a_{n2} & a_{n3} & \cdots & a_{nn} \end{pmatrix}$$

The equations will be of the following form:

$$\begin{aligned} a_{11}x_1 &= a_{1,n+1} \\ a_{21}x_1 + a_{22}x_2 &= a_{2,n+1} \\ a_{31}x_1 + a_{32}x_2 + a_{33}x_3 &= a_{3,n+1} \\ &\;\;\vdots \\ a_{n1}x_1 + a_{n2}x_2 + a_{n3}x_3 + \cdots + a_{nn}x_n &= a_{n,n+1} \end{aligned}$$

From the first equation,

$$x_1 = \frac{a_{1,n+1}}{a_{11}}$$

Substituting $x_1$ into the second equation, we have:

$$x_2 = \frac{1}{a_{22}}\left(a_{2,n+1} - a_{21}x_1\right)$$

Doing the same for $x_3$:

$$x_3 = \frac{1}{a_{33}}\left(a_{3,n+1} - a_{31}x_1 - a_{32}x_2\right)$$

Similarly, we can find $x_4, x_5, \ldots, x_n$. This is called the forward substitution method.

Case 3

Suppose the coefficient matrix is upper triangular. Then the equations will be of the following form:

$$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= a_{1,n+1} \\ a_{22}x_2 + \cdots + a_{2n}x_n &= a_{2,n+1} \\ &\;\;\vdots \\ a_{nn}x_n &= a_{n,n+1} \end{aligned}$$

Starting from the last equation,

$$x_n = \frac{a_{n,n+1}}{a_{nn}}$$

The (n - 1)th equation can now be used to evaluate $x_{n-1}$ thus:

$$x_{n-1} = \frac{1}{a_{n-1,n-1}}\left(a_{n-1,n+1} - a_{n-1,n}x_n\right)$$

In general, after evaluating $x_n, x_{n-1}, \ldots, x_{k+1}$, we evaluate $x_k$ as:

$$x_k = \frac{1}{a_{kk}}\left(a_{k,n+1} - a_{k,k+1}x_{k+1} - \cdots - a_{kn}x_n\right)$$

We can thus evaluate all the $x_i$ values. This is called the backward substitution method.
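Forward and backward substitution translate directly into code. The Python sketch below stores each system as an augmented matrix whose last column holds the right-hand side $a_{i,n+1}$, matching the notation above but with 0-based indices. The function names and the two small 3 × 3 test systems are illustrative assumptions, and every diagonal entry is assumed to be non-zero.

```python
def forward_substitution(aug):
    """Solve a lower-triangular system given as an n x (n+1) augmented matrix.

    Row i holds a_i1 ... a_in followed by the right-hand side a_i,n+1.
    """
    n = len(aug)
    x = [0.0] * n
    for i in range(n):                       # x_1 first, then x_2, ...
        s = aug[i][n] - sum(aug[i][j] * x[j] for j in range(i))
        x[i] = s / aug[i][i]
    return x


def backward_substitution(aug):
    """Solve an upper-triangular system given as an n x (n+1) augmented matrix."""
    n = len(aug)
    x = [0.0] * n
    for k in range(n - 1, -1, -1):           # x_n first, then x_{n-1}, ...
        s = aug[k][n] - sum(aug[k][j] * x[j] for j in range(k + 1, n))
        x[k] = s / aug[k][k]
    return x


lower = [[2.0, 0.0, 0.0, 4.0],
         [1.0, 3.0, 0.0, 5.0],
         [1.0, 1.0, 1.0, 6.0]]
upper = [[1.0, 1.0, 1.0, 6.0],
         [0.0, 3.0, 1.0, 9.0],
         [0.0, 0.0, 2.0, 6.0]]
print(forward_substitution(lower))    # [2.0, 1.0, 3.0]
print(backward_substitution(upper))   # [1.0, 2.0, 3.0]
```

Each unknown needs one pass over the entries already found, so both methods take about $n^2/2$ multiplications.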

Elementary Row Operations

Consider a matrix

$$A = \begin{pmatrix} a_{11} & a_{12} & a_{13} & \cdots & a_{1n} \\ a_{21} & a_{22} & a_{23} & \cdots & a_{2n} \\ \vdots & \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & a_{n3} & \cdots & a_{nn} \end{pmatrix}$$

Operation 1

Multiplying each element of a row by a constant: if the ith row is multiplied by a constant k, we write $R_i \to kR_i$ (read as "$R_i$ becomes $kR_i$").

Operation 2

Multiplying one row by a constant and subtracting it from another row, i.e. $R_i$ can be replaced by $R_i - kR_j$. We write $R_i \to R_i - kR_j$.

Operation 3

Two rows can be exchanged: if $R_i$ and $R_j$ are exchanged, we write $R_i \leftrightarrow R_j$.

When operation 1 is performed, the determinant is multiplied by k.

If operation 2 is performed on a matrix, its determinant value is not affected.

When operation 3 is performed, the sign of its determinant value reverses.

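Now consider a matrix in which all the entries below the diagonal in the first column are zero:

$$A = \begin{pmatrix} a_{11} & a_{12} & a_{13} & \cdots & a_{1n} \\ 0 & a_{22} & a_{23} & \cdots & a_{2n} \\ 0 & a_{32} & a_{33} & \cdots & a_{3n} \\ \vdots & \vdots & \vdots & & \vdots \\ 0 & a_{n2} & a_{n3} & \cdots & a_{nn} \end{pmatrix}$$

Expanding along the first column,

$$|A| = a_{11}\begin{vmatrix} a_{22} & a_{23} & \cdots & a_{2n} \\ a_{32} & a_{33} & \cdots & a_{3n} \\ \vdots & \vdots & & \vdots \\ a_{n2} & a_{n3} & \cdots & a_{nn} \end{vmatrix}$$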

Pivotal Condensation

Consider a matrix

$$A = \begin{pmatrix} a_{11} & a_{12} & a_{13} & \cdots & a_{1n} \\ a_{21} & a_{22} & a_{23} & \cdots & a_{2n} \\ \vdots & \vdots & \vdots & & \vdots \\ a_{n1} & a_{n2} & a_{n3} & \cdots & a_{nn} \end{pmatrix}$$

Also consider the row operation $R_2 \to R_2 - \dfrac{a_{21}}{a_{11}}R_1$ performed on the matrix A; then the $a_{21}$ entry will become zero. Similarly, perform the operation $R_i \to R_i - \dfrac{a_{i1}}{a_{11}}R_1$ for $i = 2, 3, 4, \ldots, n$. Then the entries below the diagonal in the first column will become zero. Note that these operations do not affect the determinant of A.

In the above operation, $a_{ij}$ has now become $a_{ij} - \frac{a_{i1}}{a_{11}}a_{1j}$, that is:

$$a_{ij} \leftarrow a_{ij} - \frac{a_{i1}}{a_{11}}a_{1j}$$

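Therefore, according to the new notation (writing $a_{ij}$ once more for the updated entries), the matrix becomes:

$$A = \begin{pmatrix} a_{11} & a_{12} & a_{13} & \cdots & a_{1n}\\ 0 & a_{22} & a_{23} & \cdots & a_{2n}\\ 0 & a_{32} & a_{33} & \cdots & a_{3n}\\ \vdots & \vdots & \vdots & & \vdots\\ 0 & a_{n2} & a_{n3} & \cdots & a_{nn} \end{pmatrix}$$

and

$$|A| = a_{11}\begin{vmatrix} a_{22} & a_{23} & \cdots & a_{2n}\\ a_{32} & a_{33} & \cdots & a_{3n}\\ \vdots & \vdots & & \vdots\\ a_{n2} & a_{n3} & \cdots & a_{nn} \end{vmatrix}$$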

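Now we can once again repeat the above procedure on the reduced matrix to get the determinant:

$$|A| = a_{11}a_{22}\begin{vmatrix} a_{33} & a_{34} & \cdots & a_{3n}\\ \vdots & \vdots & & \vdots\\ a_{n3} & a_{n4} & \cdots & a_{nn} \end{vmatrix}$$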

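$A$ was an $n \times n$ matrix. In the first step, we condensed it into an $(n-1)\times(n-1)$ matrix. Now it has been further condensed into an $(n-2)\times(n-2)$ matrix. Repeating the above procedure, we can condense the matrix into a $1 \times 1$ matrix. So the determinant is

$$|A| = a_{11}a_{22}a_{33}\cdots a_{nn},$$

where the $a_{kk}$ are the diagonal entries obtained after the row operations.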

Algorithm Development

Let $A$ be the given matrix.

1. Do the row operation $R_i \leftarrow R_i - \frac{a_{i1}}{a_{11}}R_1$ (for $i = 2, 3, 4, \ldots, n$).
This makes all the lower diagonal entries of the first column zero.

2. Do the row operation $R_i \leftarrow R_i - \frac{a_{i2}}{a_{22}}R_2$ (for $i = 3, 4, \ldots, n$).
This also makes all the lower diagonal entries of the second column zero.

3. Do the row operation $R_i \leftarrow R_i - \frac{a_{i3}}{a_{33}}R_3$ (for $i = 4, 5, \ldots, n$).
This makes all the lower diagonal entries of the third column zero.

In general, in order to make the lower diagonal entries of the $k$th column zero,

4. Do the row operation $R_i \leftarrow R_i - \frac{a_{ik}}{a_{kk}}R_k$ (for $i = k+1, k+2, \ldots, n$).

Doing the above operation for $k = 1, 2, 3, \ldots, n-1$ makes all the lower diagonal entries of the matrix zero. Hence, $\det A = a_{11}a_{22}a_{33}\cdots a_{nn}$.

Notice that the following segment will do the required row operation:

ratio = $a_{ik}/a_{kk}$
For $j = 1$ to $n$
    $a_{ij} = a_{ij} - \text{ratio} \times a_{kj}$
Next $j$

This operation has to be repeated for $i = k+1$ to $n$ in order to make the lower diagonal entries of the $k$th column zero.

The complete algorithm is shown below:

1. Read $n$
2. For $i = 1$ to $n$
3. For $j = 1$ to $n$
4. Read $a_{ij}$
5. Next $j$
6. Next $i$
7. For $k = 1$ to $n-1$
8. For $i = k+1$ to $n$
9. ratio = $a_{ik}/a_{kk}$
10. For $j = 1$ to $n$
11. $a_{ij} = a_{ij} - \text{ratio} \times a_{kj}$
12. Next $j$
13. Next $i$
14. Next $k$
15. det = 1
16. For $i = 1$ to $n$
17. det = det $\times\, a_{ii}$
18. Next $i$
19. Print det
20. End.
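A minimal Python sketch of the algorithm above follows. It assumes the matrix is a list of rows with 0-based indices. It also assumes that no pivot $a_{kk}$ becomes zero during the reduction. A zero pivot would need a row exchange (Operation 3, which flips the sign of the determinant), and this sketch does not perform one. The function name `pivotal_condensation_det` is hypothetical.

```python
def pivotal_condensation_det(a):
    """Determinant by reducing a copy of square matrix `a` to upper-triangular form.

    Uses R_i <- R_i - (a_ik / a_kk) R_k for k = 0..n-2 and i = k+1..n-1.
    Assumes every pivot a_kk is nonzero (no row exchanges are performed).
    """
    a = [row[:] for row in a]          # work on a copy, leave the input intact
    n = len(a)
    for k in range(n - 1):
        for i in range(k + 1, n):
            ratio = a[i][k] / a[k][k]
            for j in range(n):
                a[i][j] -= ratio * a[k][j]
    det = 1.0
    for i in range(n):
        det *= a[i][i]                 # product of the diagonal entries
    return det

print(pivotal_condensation_det([[2.0, 1.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 10.0]]))
```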

Practice Questions

1. Write a FORTRAN program that implements the pivotal condensation method to find the determinant of a matrix of any order $n$.

2. Using the pivotal condensation method, find the determinant of:

$$\begin{pmatrix} 1.2 & -2.1 & 3.2 & 4.3\\ -1.4 & -2.6 & 3.0 & 4.1\\ -2.2 & 1.7 & 4.0 & 1.2\\ 1.1 & 3.6 & 5.0 & 4.6 \end{pmatrix}$$

Gauss Elimination Method

Consider the equations:

$$\begin{aligned} a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= a_{1,n+1}\\ a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= a_{2,n+1}\\ &\;\;\vdots\\ a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{nn}x_n &= a_{n,n+1} \end{aligned}$$

This system can be written in matrix form, as an augmented matrix whose last column holds the right-hand sides. It can then be solved using the same row operations that were used for the pivotal condensation method.

The algorithm consists of three major steps:

(i) Read the matrix.

(ii) Reduce it to upper triangular form.

(iii) Use backward substitution to get the solution.

Algorithm:

Read Matrix A
1. Read $n$
2. For $i = 1$ to $n$
3. For $j = 1$ to $n+1$
4. Read $a_{ij}$
5. Next $j$
6. Next $i$

Reduce to Upper Triangular Form
7. For $k = 1$ to $n-1$
8. For $i = k+1$ to $n$
9. ratio = $a_{ik}/a_{kk}$
10. For $j = 1$ to $n+1$
11. $a_{ij} = a_{ij} - \text{ratio} \times a_{kj}$
12. Next $j$
13. Next $i$
14. Next $k$

Backward Substitution
15. $x_n = a_{n,n+1}/a_{nn}$
16. For $k = n-1$ to 1 step $-1$
17. $x_k = a_{k,n+1}$
18. For $j = k+1$ to $n$
19. $x_k = x_k - a_{kj}x_j$
20. Next $j$
21. $x_k = x_k/a_{kk}$
22. Next $k$

Print Answer
23. For $i = 1$ to $n$
24. Print $x_i$
25. Next $i$
26. End
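A compact Python sketch of the three steps (read, reduce, back-substitute) follows. It assumes the augmented matrix is given as a list of n rows of n + 1 numbers and uses 0-based indices. It also assumes that no pivot $a_{kk}$ becomes zero, since the algorithm above performs no row exchanges either. `gauss_eliminate` is a hypothetical name. The sample data are the coefficients of the example that follows.

```python
def gauss_eliminate(aug):
    """Solve n linear equations stored as an n x (n+1) augmented matrix.

    Step (ii): reduce to upper-triangular form with R_i <- R_i - (a_ik/a_kk) R_k.
    Step (iii): backward substitution.
    Assumes nonzero pivots (no row exchanges), as in the algorithm above.
    """
    a = [row[:] for row in aug]       # keep the caller's matrix unchanged
    n = len(a)
    for k in range(n - 1):
        for i in range(k + 1, n):
            ratio = a[i][k] / a[k][k]
            for j in range(n + 1):
                a[i][j] -= ratio * a[k][j]
    x = [0.0] * n
    for k in range(n - 1, -1, -1):
        s = a[k][n]
        for j in range(k + 1, n):
            s -= a[k][j] * x[j]
        x[k] = s / a[k][k]
    return x

# Augmented matrix of the example that follows
example = [[1, 1, 0.5, 1, 3.5],
           [-1, 2, 0, 1, -2],
           [-3, 1, 2, 1, -3],
           [-1, 0, 0, 2, 0]]
print([round(v, 4) for v in gauss_eliminate(example)])
```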

Example:

Solve the following system of equations by the Gauss elimination method:

$$\begin{aligned} x_1 + x_2 + \tfrac{1}{2}x_3 + x_4 &= 3.5\\ -x_1 + 2x_2 + x_4 &= -2\\ -3x_1 + x_2 + 2x_3 + x_4 &= -3\\ -x_1 + 2x_4 &= 0 \end{aligned}$$

Solution: The augmented matrix is:

$$\left(\begin{array}{rrrr|r} 1 & 1 & 0.5 & 1 & 3.5\\ -1 & 2 & 0 & 1 & -2\\ -3 & 1 & 2 & 1 & -3\\ -1 & 0 & 0 & 2 & 0 \end{array}\right)$$

To make the lower diagonal entries of the first column zero, do the following operations:

$$R_2 \leftarrow R_2 + R_1,\qquad R_3 \leftarrow R_3 + 3R_1,\qquad R_4 \leftarrow R_4 + R_1$$

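These will yield:

$$\left(\begin{array}{rrrr|r} 1 & 1 & 0.5 & 1 & 3.5\\ 0 & 3 & 0.5 & 2 & 1.5\\ 0 & 4 & 3.5 & 4 & 7.5\\ 0 & 1 & 0.5 & 3 & 3.5 \end{array}\right)$$

Now do the operations:

$$R_3 \leftarrow R_3 - \frac{4}{3}R_2,\qquad R_4 \leftarrow R_4 - \frac{1}{3}R_2$$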

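These will yield:

$$\left(\begin{array}{rrrr|r} 1 & 1 & 0.5 & 1 & 3.5\\ 0 & 3 & 0.5 & 2 & 1.5\\ 0 & 0 & 2.8333 & 1.3333 & 5.5\\ 0 & 0 & 0.3333 & 2.3333 & 3 \end{array}\right)$$

Now, doing

$$R_4 \leftarrow R_4 - \frac{0.3333}{2.8333}R_3$$

will result in:

$$\left(\begin{array}{rrrr|r} 1 & 1 & 0.5 & 1 & 3.5\\ 0 & 3 & 0.5 & 2 & 1.5\\ 0 & 0 & 2.8333 & 1.3333 & 5.5\\ 0 & 0 & 0 & 2.17647 & 2.35294 \end{array}\right)$$

Now the equations become:

$$\begin{aligned} x_1 + x_2 + \tfrac{1}{2}x_3 + x_4 &= 3.5 && (1)\\ 3x_2 + \tfrac{1}{2}x_3 + 2x_4 &= 1.5 && (2)\\ 2.8333x_3 + 1.3333x_4 &= 5.5 && (3)\\ 2.17647x_4 &= 2.35294 && (4) \end{aligned}$$

From equation (4), $x_4 = 1.0811$.

From equation (3), $2.8333x_3 + 1.3333(1.0811) = 5.5$, so $x_3 = 1.4324$.

Also, from equation (2), $3x_2 + \tfrac{1}{2}(1.4324) + 2(1.0811) = 1.5$, so $x_2 = -0.4595$.

Finally, equation (1) gives, after substituting the $x_4$, $x_3$ and $x_2$ values, $x_1 = 2.1622$.

These values satisfy all four original equations; the exact solution is $x_1 = 80/37$, $x_2 = -17/37$, $x_3 = 53/37$, $x_4 = 40/37$.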

CHAPTER SEVEN

DIFFERENTIAL EQUATIONS

The following are some differential equations:

$$y' = (x^2 + y)e^x$$

$$y'' = y' - x + xy^2$$

$$xy''' + (1 - x^2)yy'' + y = (x^2 - 1)e^x$$

If $y^{(k)}$ is the highest order derivative in a differential equation, the equation is said to be a $k$th order differential equation.

A solution to the differential equation is a function $y$ that satisfies the differential equation.

Example:

Consider the differential equation $y'' = 6x + 4$.

This is a second order differential equation. The function

$$y = x^3 + 2x^2 - 1$$

satisfies the differential equation, since $y' = 3x^2 + 4x$ and $y'' = 6x + 4$. Hence $y = x^3 + 2x^2 - 1$ is a solution to the differential equation.

Numerical Solutions

Consider the equation $y'' = 6x + 4$. A solution is $y = x^3 + 2x^2 - 1$. However, instead of writing the solution as a function of $x$, we can find the numerical values of $y$ for various pivotal values of $x$. The solution from $x = 0$ to $x = 1$ can be expressed as follows:

x:   0      0.2      0.4      0.6      0.8     1.0
y:   -1     -0.912   -0.616   -0.064   0.792   2.0

The values are obtained from the function $y = x^3 + 2x^2 - 1$. This table of numerical values of $y$ is said to be a numerical solution to the differential equation.

The Initial Value Problem

Consider the differential equation $y' = f(x, y)$; $y(x_0) = y_0$.

This is a first order differential equation. Here, the value of $y$ at $x_0$ is $y_0$, i.e. the solution $y$ at $x_0$ is given. We must choose a small increment $h$, i.e.

$$x_1 = x_0 + h,\quad x_2 = x_1 + h,\quad \ldots,\quad x_{i+1} = x_i + h$$

x:   $x_0$   $x_1$       $x_2$       $x_3$       $x_4$
y:   $y_0$   $y_1 = ?$   $y_2 = ?$   $y_3 = ?$   $y_4 = ?$

Let us denote the $y$ values at $x_1, x_2, \ldots$ as $y_1, y_2, \ldots$ respectively. $y_0$ is given, and so we must find $y_1, y_2, \ldots$. A differential equation together with such an initial condition is called an initial value problem.

Euler's Method

Consider the initial value problem $y' = f(x, y)$; $y(x_0) = y_0$.

$y$ is a function of $x$, so we shall write that function as $y(x)$. Using the Taylor series expansion:

$$y(x_0 + h) = y(x_0) + \frac{h}{1!}y'(x_0) + \frac{h^2}{2!}y''(x_0) + \cdots$$

Here, $y(x_0 + h)$ denotes the $y$ value at $x_0 + h$, $y'(x_0)$ denotes the $y'$ value at $x_0$, etc.

Given:

$$y(x_0) = y_0,\qquad y(x_0 + h) = y(x_1) = y_1 \text{ (say)},\qquad y'(x_0) = y' \text{ at } x_0$$

But $y' = f(x, y)$, so

$$y'(x_0) = f(x_0, y_0)$$

Now let $f(x_0, y_0) = f_0$; hence $y'(x_0) = f_0$.

Therefore, the Taylor series expansion up to the first order term gives:

$$y_1 = y_0 + hf_0$$

Similarly, we can derive:

$$y_2 = y_1 + hf_1,\qquad y_3 = y_2 + hf_2$$

In general,

$$y_{i+1} = y_i + hf_i, \quad\text{where } f_i = f(x_i, y_i)$$

This is called Euler's formula for solving an initial value problem.

Algorithm for Euler's method

1. Define $f(x, y)$
2. Read $x_0, y_0, h, n$
3. For $i = 0$ to $n-1$ do
4. $x_{i+1} = x_i + h$
5. $y_{i+1} = y_i + h\,f(x_i, y_i)$
6. Print $x_{i+1}, y_{i+1}$
7. Next $i$
8. End

Assignment: Implement the above in any programming language (FORTRAN or BASIC).

Example:

Solve the initial value problem $y' = x^2 + y^2$; $y(1) = 0.8$; $x = 1(0.5)3$.

Solution:

Given: $f(x, y) = x^2 + y^2$, $x_0 = 1$, $y_0 = 0.8$, $h = 0.5$, $x = 1$ to $3$.

x:   $x_0 = 1$     $x_1 = 1.5$   $x_2 = 2$     $x_3 = 2.5$   $x_4 = 3$
y:   $y_0 = 0.8$   $y_1 = ?$     $y_2 = ?$     $y_3 = ?$     $y_4 = ?$

$y_1 = y_0 + hf_0$, but $f_0 = f(x_0, y_0) = f(1, 0.8) = 1.64$.
Therefore, $y_1 = 0.8 + (0.5)(1.64) = 1.62$.

$y_2 = y_1 + hf_1$, but $f_1 = f(x_1, y_1) = f(1.5, 1.62) = 4.8744$.
Therefore, $y_2 = 1.62 + (0.5)(4.8744) = 4.0572$.

$y_3 = y_2 + hf_2$, but $f_2 = f(x_2, y_2) = f(2, 4.0572) = 20.460872$.
Therefore, $y_3 = 4.0572 + (0.5)(20.460872) = 14.287636$.

$y_4 = y_3 + hf_3$, but $f_3 = f(x_3, y_3) = f(2.5, 14.287636) = 210.38654$.
Therefore, $y_4 = 14.287636 + (0.5)(210.38654) = 119.48091$.

So the numerical solution obtained by Euler's method is:

x:   1     1.5    2        2.5         3
y:   0.8   1.62   4.0572   14.287636   119.48091
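Here is a minimal Python sketch of the Euler algorithm. The function name `euler` and its argument order are assumptions, not part of the notes. Run on the example above, it should reproduce the hand-computed values up to rounding in the last digits.

```python
def euler(f, x0, y0, h, n):
    """Euler's method: y_{i+1} = y_i + h f(x_i, y_i), for n steps of size h."""
    xs, ys = [x0], [y0]
    for i in range(n):
        ys.append(ys[i] + h * f(xs[i], ys[i]))
        xs.append(xs[i] + h)
    return xs, ys

# The worked example: y' = x^2 + y^2, y(1) = 0.8, h = 0.5, four steps to x = 3
xs, ys = euler(lambda x, y: x**2 + y**2, 1.0, 0.8, 0.5, 4)
for x, y in zip(xs, ys):
    print(f"{x:4.1f} {y:14.6f}")
```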

Assignment: Using Euler's method, solve

$$5\frac{dy}{dx} = 3x^3y;\quad y(0) = 1$$

for the interval $0 \le x \le 0.3$, with $h = 0.1$.

Backward Euler's Method

The formula for the backward Euler method is given by:

$$y_{i+1} = y_i + hf_{i+1}, \quad\text{where } f_{i+1} = f(x_{i+1}, y_{i+1})$$

Since the unknown $y_{i+1}$ appears on both sides, the formula is implicit and must be solved for $y_{i+1}$ at each step.

For example, consider the initial value problem:

$$y' = 2x^3y;\quad y(0) = 1;\quad x = 0(0.2)0.4$$

Solution:

$f(x, y) = 2x^3y$, $x_0 = 0$, $y_0 = 1$, $h = 0.2$.

The backward Euler formula is $y_{i+1} = y_i + hf_{i+1}$, i.e.

$$y_{i+1} = y_i + h\left(2x_{i+1}^3y_{i+1}\right)$$

Therefore,

$$y_{i+1} - 2hx_{i+1}^3y_{i+1} = y_i$$

Hence,

$$y_i = y_{i+1}\left(1 - 2hx_{i+1}^3\right)$$

or

$$y_{i+1} = \frac{y_i}{1 - 2hx_{i+1}^3}$$

x:   $x_0 = 0$   $x_1 = 0.2$   $x_2 = 0.4$
y:   $y_0 = 1$   $y_1 = ?$     $y_2 = ?$

Now, put $i = 0$ in the formula:

$$y_1 = \frac{y_0}{1 - 2hx_1^3} = \frac{1}{1 - 2(0.2)(0.2)^3} = 1.0032103$$

Put $i = 1$ in the formula:

$$y_2 = \frac{y_1}{1 - 2hx_2^3} = \frac{1.0032103}{1 - 2(0.2)(0.4)^3} = 1.0295672$$

Therefore, the numerical solution to the problem is:

x:   0   0.2         0.4
y:   1   1.0032103   1.0295672
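Because the backward formula is implicit, a program must solve for $y_{i+1}$ at every step. The Python sketch below does this by plain fixed-point iteration, $y^{(m+1)} = y_i + hf(x_{i+1}, y^{(m)})$. This is an assumed choice, and Newton's method is a common alternative. The iteration converges only when $h\,|\partial f/\partial y| < 1$, which holds for the example above. The function name, tolerance and iteration limit are hypothetical.

```python
def backward_euler(f, x0, y0, h, n, tol=1e-12, max_iter=100):
    """Backward Euler: solve y_{i+1} = y_i + h f(x_{i+1}, y_{i+1}) at each step.

    The implicit equation is solved by fixed-point iteration (an assumed
    choice); it converges when h * |df/dy| < 1.
    """
    xs, ys = [x0], [y0]
    for i in range(n):
        x_next = xs[i] + h
        y_next = ys[i]                          # initial guess: previous value
        for _ in range(max_iter):
            y_new = ys[i] + h * f(x_next, y_next)
            converged = abs(y_new - y_next) < tol
            y_next = y_new
            if converged:
                break
        xs.append(x_next)
        ys.append(y_next)
    return xs, ys

xs, ys = backward_euler(lambda x, y: 2 * x**3 * y, 0.0, 1.0, 0.2, 2)
print([round(y, 7) for y in ys])
```

For this linear example, the iteration settles on $y_i/(1 - 2hx_{i+1}^3)$, the closed form derived above.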

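Euler-Richardson Method

The formula is written as

$$y_{i+1} = y_i + \frac{h}{3}\left[f_i + 2f_{i+\frac{1}{2}}\right]$$

where $f_i = f(x_i, y_i)$ and $f_{i+\frac{1}{2}} = f\left(x_{i+\frac{1}{2}}, y_{i+\frac{1}{2}}\right)$.

Also,

$$x_{i+\frac{1}{2}} = x_i + \frac{h}{2};\qquad y_{i+\frac{1}{2}} = y_i + \frac{h}{2}f_i$$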

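Algorithm

1. Define $f(x, y)$
2. Read $x_0, y_0, h, n$
3. For $i = 0$ to $n-1$ do
4. $x_{i+\frac{1}{2}} = x_i + \frac{h}{2}$
5. $y_{i+\frac{1}{2}} = y_i + \frac{h}{2}f(x_i, y_i)$
6. $x_{i+1} = x_i + h$
7. $y_{i+1} = y_i + \frac{h}{3}\left[f(x_i, y_i) + 2f\left(x_{i+\frac{1}{2}}, y_{i+\frac{1}{2}}\right)\right]$
8. Print $x_{i+1}, y_{i+1}$
9. Next $i$
10. End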

Let us now develop a FORTRAN program for the function $f(x, y) = \frac{1}{2}(1 + x)y^2$, i.e.

$$y' = \frac{1}{2}(1 + x)y^2;\quad y(0) = 1;\quad x = 0(0.1)0.6$$

Hence, $x_0 = 0$, $y_0 = 1$, $h = 0.1$, $n = 6$.

Note: let XM $= x_{i+\frac{1}{2}}$ and YM $= y_{i+\frac{1}{2}}$.

C     PROGRAM FOR EULER-RICHARDSON
C     SOLVES Y' = F(X,Y) WITH THE FORMULA ABOVE; N MUST NOT EXCEED 20
      DIMENSION X(0:20), Y(0:20)
C     STATEMENT FUNCTION F(X,Y) = 0.5*(1+X)*Y**2
      F(XX,YY) = 0.5*(1.0+XX)*YY*YY
      WRITE (*,*) 'ENTER X0, Y0, H, N VALUES'
      READ (*,*) X(0), Y(0), H, N
      WRITE (*,15) X(0), Y(0)
      DO 25 I = 0, N-1
C        MIDPOINT VALUES XM = X(I+1/2), YM = Y(I+1/2)
         XM = X(I) + H/2.0
         YM = Y(I) + H/2.0*F(X(I), Y(I))
         X(I+1) = X(I) + H
         FI = F(X(I), Y(I))
         FM = F(XM, YM)
         Y(I+1) = Y(I) + H/3.0*(FI + 2.0*FM)
         WRITE (*,15) X(I+1), Y(I+1)
   25 CONTINUE
   15 FORMAT (1X, 2F15.5)
      STOP
      END

Taylor's Series Method

Given that $y$ is a function of $x$, it is written as $y(x)$. By Taylor's series expansion:

$$y(x + h) = y(x) + \frac{h}{1!}y'(x) + \frac{h^2}{2!}y''(x) + \cdots$$

so that

$$y(x_i + h) = y_i + \frac{h}{1!}y_i' + \frac{h^2}{2!}y_i'' + \cdots$$

where $y_i' = y'$ at $(x_i, y_i)$ and $y_i'' = y''$ at $(x_i, y_i)$. That is,

$$y(x_{i+1}) = y_{i+1} = y_i + \frac{h}{1!}y_i' + \frac{h^2}{2!}y_i'' + \cdots$$

Let the given initial value problem to be solved be:

$$y' = f(x, y);\quad y(x_0) = y_0$$

Now consider the problem:

$$y' = 4x^3 + 1;\quad y(0) = 1.5;\quad x = 0(0.2)0.8$$

Here,

$$\begin{aligned} y' &= 4x^3 + 1, & y_i' &= 4x_i^3 + 1\\ y'' &= 12x^2, & y_i'' &= 12x_i^2\\ y''' &= 24x, & y_i''' &= 24x_i\\ y^{(4)} &= 24, & y_i^{(4)} &= 24\\ y^{(5)} &= 0, & y_i^{(5)} &= 0 \end{aligned}$$

Therefore, since all derivatives beyond the fourth vanish, Taylor's expansion becomes:

$$y_{i+1} = y_i + h(4x_i^3 + 1) + \frac{h^2}{2}(12x_i^2) + \frac{h^3}{6}(24x_i) + \frac{h^4}{24}(24)$$

Given that $h = 0.2$,

$$y_{i+1} = y_i + 0.8x_i^3 + 0.2 + 0.24x_i^2 + 0.032x_i + 0.0016$$

Hence, by putting $i = 0, 1, 2$ and $3$ respectively, we can evaluate $y_1, y_2, y_3, y_4$.
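The recurrence above can be checked with a short Python sketch, using hypothetical function names. Because $y^{(5)} = 0$, the truncated series is exact here. Every step should therefore agree with the exact solution $y = x^4 + x + 1.5$ (obtained by integrating $y' = 4x^3 + 1$ with $y(0) = 1.5$) up to floating-point error.

```python
def taylor_step(x, y, h):
    """One Taylor-series step for y' = 4x^3 + 1, keeping terms up to h^4."""
    d1 = 4 * x**3 + 1          # y'
    d2 = 12 * x**2             # y''
    d3 = 24 * x                # y'''
    d4 = 24.0                  # y^(4); all higher derivatives are zero
    return y + h * d1 + h**2 / 2 * d2 + h**3 / 6 * d3 + h**4 / 24 * d4

def taylor_solve(x0, y0, h, n):
    """Apply taylor_step n times, starting from (x0, y0)."""
    xs, ys = [x0], [y0]
    for i in range(n):
        ys.append(taylor_step(xs[i], ys[i], h))
        xs.append(x0 + (i + 1) * h)
    return xs, ys

xs, ys = taylor_solve(0.0, 1.5, 0.2, 4)
for x, y in zip(xs, ys):
    print(f"x = {x:.1f}  y = {y:.4f}  exact = {x**4 + x + 1.5:.4f}")
```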

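The Runge-Kutta Methods

Consider the initial value problem $y' = f(x, y)$; $y(x_0) = y_0$.

Since $y$ is a function of $x$, it can be written as $y(x)$. Then, by the mean value theorem,

$$y(x_i + h) = y(x_i) + h\,y'(x_i + \theta h),\quad\text{where } 0 < \theta < 1$$

In our usual notation, this can be written as:

$$y_{i+1} = y_i + h\,f\big(x_i + \theta h,\; y(x_i + \theta h)\big)$$

Now, choosing $\theta = \frac{1}{2}$, we obtain:

$$y_{i+1} = y_i + h\,f\left(x_i + \frac{h}{2},\; y\left(x_i + \frac{h}{2}\right)\right)$$

Since Euler's method with spacing $\frac{h}{2}$ gives $y\left(x_i + \frac{h}{2}\right) \approx y_i + \frac{h}{2}f(x_i, y_i)$, this formula may be expressed as:

$$d_1 = hf(x_i, y_i),\qquad d_2 = hf\left(x_i + \frac{h}{2},\; y_i + \frac{d_1}{2}\right)$$

Therefore,

$$y_{i+1} = y_i + d_2$$

This is called the second order Runge-Kutta formula.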

The third order formula is:

$$d_1 = hf(x_i, y_i)$$

$$d_2 = hf\left(x_i + \frac{h}{2},\; y_i + \frac{d_1}{2}\right)$$

$$d_3 = hf(x_i + h,\; y_i + 2d_2 - d_1)$$

Therefore,

$$y_{i+1} = y_i + \frac{1}{6}(d_1 + 4d_2 + d_3)$$

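The fourth order Runge-Kutta formula is given as:

$$d_1 = hf(x_i, y_i)$$

$$d_2 = hf\left(x_i + \frac{h}{2},\; y_i + \frac{d_1}{2}\right)$$

$$d_3 = hf\left(x_i + \frac{h}{2},\; y_i + \frac{d_2}{2}\right)$$

$$d_4 = hf(x_i + h,\; y_i + d_3)$$

Therefore,

$$y_{i+1} = y_i + \frac{1}{6}(d_1 + 2d_2 + 2d_3 + d_4)$$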
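A minimal Python sketch of the second and fourth order formulas follows. The names `rk2_step`, `rk4_step` and `solve` are hypothetical. The first run applies the second order method to the example that follows. The second run checks the fourth order method on $y' = y$, $y(0) = 1$, whose exact solution at $x = 1$ is $e$.

```python
import math

def rk2_step(f, x, y, h):
    """Second order Runge-Kutta step: y_{i+1} = y_i + d2."""
    d1 = h * f(x, y)
    d2 = h * f(x + h / 2, y + d1 / 2)
    return y + d2

def rk4_step(f, x, y, h):
    """Fourth order Runge-Kutta step with weights 1, 2, 2, 1."""
    d1 = h * f(x, y)
    d2 = h * f(x + h / 2, y + d1 / 2)
    d3 = h * f(x + h / 2, y + d2 / 2)
    d4 = h * f(x + h, y + d3)
    return y + (d1 + 2 * d2 + 2 * d3 + d4) / 6

def solve(step, f, x0, y0, h, n):
    """Apply `step` n times starting from (x0, y0); return the x and y lists."""
    xs, ys = [x0], [y0]
    for i in range(n):
        ys.append(step(f, xs[i], ys[i], h))
        xs.append(x0 + (i + 1) * h)
    return xs, ys

# Second order method on y' = (1 + x^2) y, y(0) = 1, h = 0.2
f = lambda x, y: (1 + x**2) * y
print(solve(rk2_step, f, 0.0, 1.0, 0.2, 3)[1])

# Fourth order method on y' = y, y(0) = 1, ten steps of 0.1 to x = 1
_, ys = solve(rk4_step, lambda x, y: y, 0.0, 1.0, 0.1, 10)
print(ys[-1], math.e)
```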

Example

Solve the following initial value problem using the second order Runge-Kutta method:

$$\frac{dy}{dx} = (1 + x^2)y;\quad y(0) = 1;\quad x = 0(0.2)0.6$$

Solution:

$f(x, y) = (1 + x^2)y$; $x_0 = 0$, $y_0 = 1$, $h = 0.2$.

x:   $x_0 = 0$   $x_1 = 0.2$   $x_2 = 0.4$   $x_3 = 0.6$
y:   $y_0 = 1$   $y_1 = ?$     $y_2 = ?$     $y_3 = ?$

To find $y_1$:

$$d_1 = hf(x_0, y_0) = 0.2(1 + x_0^2)y_0 = 0.2(1)(1) = 0.2$$

$$d_2 = hf\left(x_0 + \frac{h}{2},\; y_0 + \frac{d_1}{2}\right) = hf(0.1, 1.1) = 0.2(1 + 0.01)(1.1) = 0.2222$$

Therefore, $y_1 = y_0 + d_2 = 1 + 0.2222 = 1.2222$.

To find $y_2$:

$$d_1 = hf(x_1, y_1) = 0.2(1 + x_1^2)y_1 = 0.2(1 + 0.04)(1.2222) = 0.2542176$$

$$d_2 = hf\left(x_1 + \frac{h}{2},\; y_1 + \frac{d_1}{2}\right) = hf(0.3, 1.3493088) = 0.2(1 + 0.09)(1.3493088) = 0.2941493$$

Then, $y_2 = y_1 + d_2 = 1.2222 + 0.2941493 = 1.5163493$.

To find $y_3$:

$$d_1 = hf(x_2, y_2) = 0.2(1 + x_2^2)y_2 = 0.2(1 + 0.16)(1.5163493) = 0.3517930$$

$$d_2 = hf\left(x_2 + \frac{h}{2},\; y_2 + \frac{d_1}{2}\right) = hf(0.5, 1.6922458) = 0.2(1 + 0.25)(1.6922458) = 0.4230615$$

Then, $y_3 = y_2 + d_2 = 1.5163493 + 0.4230615 = 1.9394108$.

Assignment

Solve the problem given below using the fourth order Runge-Kutta method:

$$\frac{dy}{dx} = (1 + x^2)y;\quad y(0) = 1;\quad x = 0(0.2)0.6$$

CHAPTER EIGHT

NUMERICAL INTEGRATION

Methods:

1. Trapezoidal Formula

$$I = \int_a^b f(x)\,dx \approx h\left(\frac{y_0 + y_n}{2} + y_1 + y_2 + \cdots + y_{n-1}\right)$$

where $y_i = f(x_i)$ $(i = 0, 1, 2, \ldots, n)$ and $h = \frac{b - a}{n}$.

2. Simpson's Formula (Parabola Formula)

$$I = \int_a^b f(x)\,dx \approx \frac{h}{3}\left[y_0 + y_{2m} + 2(y_2 + y_4 + \cdots + y_{2m-2}) + 4(y_1 + y_3 + \cdots + y_{2m-1})\right]$$

where $h = \frac{b - a}{n} = \frac{b - a}{2m}$.

3. Newton's Formula ($\frac{3}{8}$ rule)

$$I = \int_a^b f(x)\,dx \approx \frac{3h}{8}\left[y_0 + y_{3m} + 2(y_3 + y_6 + \cdots + y_{3m-3}) + 3(y_1 + y_2 + y_4 + y_5 + \cdots + y_{3m-2} + y_{3m-1})\right]$$

where $h = \frac{b - a}{n} = \frac{b - a}{3m}$.
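The three formulas translate directly into Python. In this sketch, the function names are hypothetical and `n` is the number of strips. Simpson's rule therefore requires an even `n = 2m`, and the 3/8 rule requires a multiple of three, `n = 3m`. The three calls at the end evaluate the integrals of the examples that follow.

```python
import math

def trapezoidal(f, a, b, n):
    """Composite trapezoidal rule with n equal strips."""
    h = (b - a) / n
    y = [f(a + i * h) for i in range(n + 1)]
    return h * ((y[0] + y[n]) / 2 + sum(y[1:n]))

def simpson(f, a, b, n):
    """Composite Simpson rule; n = 2m must be even."""
    if n % 2:
        raise ValueError("Simpson's rule needs an even number of strips")
    h = (b - a) / n
    y = [f(a + i * h) for i in range(n + 1)]
    return h / 3 * (y[0] + y[n] + 2 * sum(y[2:n:2]) + 4 * sum(y[1:n:2]))

def newton_three_eighths(f, a, b, n):
    """Composite Newton 3/8 rule; n = 3m must be a multiple of 3."""
    if n % 3:
        raise ValueError("the 3/8 rule needs a multiple of 3 strips")
    h = (b - a) / n
    y = [f(a + i * h) for i in range(n + 1)]
    threes = sum(y[i] for i in range(3, n, 3))              # y3, y6, ..., y_{3m-3}
    others = sum(y[i] for i in range(1, n) if i % 3 != 0)   # y1, y2, y4, y5, ...
    return 3 * h / 8 * (y[0] + y[n] + 2 * threes + 3 * others)

print(trapezoidal(lambda x: math.exp(-x * x), 0.0, 1.0, 10))
print(simpson(lambda x: math.exp(x * x), 0.0, 1.0, 10))
print(newton_three_eighths(lambda x: 1 / (1 + x), 0.0, 0.6, 6))
```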

Example 1

Evaluate the integral below, employing the trapezoidal rule, for $n = 10$:

$$I = \int_0^1 e^{-x^2}\,dx$$

Solution:

Form a table of the integrand function ($h = 0.1$):

i    $x_i$   $x_i^2$   $y_i = e^{-x_i^2}$
0    0.0     0.00      1.0000
1    0.1     0.01      0.9900
2    0.2     0.04      0.9608
3    0.3     0.09      0.9139
4    0.4     0.16      0.8521
5    0.5     0.25      0.7788
6    0.6     0.36      0.6977
7    0.7     0.49      0.6126
8    0.8     0.64      0.5273
9    0.9     0.81      0.4449
10   1.0     1.00      0.3679

Applying the formula

$$I \approx h\left(\frac{y_0 + y_n}{2} + y_1 + y_2 + \cdots + y_{n-1}\right),$$

we note that

$$\frac{1}{2}(y_0 + y_{10}) + \sum_{i=1}^{9} y_i = 0.68395 + 6.7781 \approx 7.4621$$

Therefore,

$$I = \int_0^1 e^{-x^2}\,dx \approx 0.1 \times 7.4621 \approx 0.746$$

Example 2

Compute the integral $I = \int_0^1 e^{x^2}\,dx$ by the Simpson formula, for $n = 10$.

Solution:

Form a table of the function, values of $y_i = e^{x_i^2}$:

i    $x_i$   $x_i^2$   $y_i$    group
0    0.0     0.00      1.0000   i = 0, 10
1    0.1     0.01      1.0101   odd i
2    0.2     0.04      1.0408   even i
3    0.3     0.09      1.0942   odd i
4    0.4     0.16      1.1735   even i
5    0.5     0.25      1.2840   odd i
6    0.6     0.36      1.4333   even i
7    0.7     0.49      1.6323   odd i
8    0.8     0.64      1.8965   even i
9    0.9     0.81      2.2479   odd i
10   1.0     1.00      2.7183   i = 0, 10

Summation: $i = 0, 10$: 3.7183; even $i$: 5.5441; odd $i$: 7.2685.

Applying Simpson's formula:

$$\int_0^1 e^{x^2}\,dx \approx \frac{0.1}{3}\left[3.7183 + 2(5.5441) + 4(7.2685)\right] = \frac{1}{30}(43.8805) = 1.46268 \approx 1.4627$$

Example 3

Compute the following integral using Newton's formula, with $h = 0.1$:

$$I = \int_0^{0.6}\frac{dx}{1 + x}$$

Solution:

If $h = 0.1$, then $n = \frac{b - a}{h} = \frac{0.6 - 0}{0.1} = 6$, so $m = 2$.

Now form a table of the function, values of $y_i = \frac{1}{1 + x_i}$:

i   $x_i$   $1 + x_i$   $y_i$    group
0   0.0     1.00        1.0000   i = 0, 6
1   0.1     1.10        0.9091   i = 1, 2, 4, 5
2   0.2     1.20        0.8333   i = 1, 2, 4, 5
3   0.3     1.30        0.7692   i = 3
4   0.4     1.40        0.7143   i = 1, 2, 4, 5
5   0.5     1.50        0.6667   i = 1, 2, 4, 5
6   0.6     1.60        0.6250   i = 0, 6

Summation: $y_0 + y_6 = 1.6250$; $y_3 = 0.7692$; $y_1 + y_2 + y_4 + y_5 = 3.1234$.

Applying the formula, we obtain:

$$\int_0^{0.6}\frac{dx}{1 + x} \approx \frac{3}{8}(0.1)\left[1.6250 + 2(0.7692) + 3(3.1234)\right] = \frac{3}{8}(0.1)(1.6250 + 1.5384 + 9.3702) \approx 0.47001$$

