International Journal of Information Sciences and Techniques (IJIST) Vol.3, No.1, January 2013

EVALUATING THE OPTIMAL PLACEMENT OF BINARY SENSORS

Stephen P. Emmons and Farhad Kamangar

The University of Texas at Arlington, Arlington, Texas, USA

stephen.emmons@mavs.uta.edu and kamangar@cse.uta.edu

ABSTRACT

Binary sensor arrays have many applications for detecting and tracking the location of an object. Many considerations factor into the optimal design of such a system, including the accuracy of location, the extent of sensor coverage, and the number and size of the sensors used. In this paper, we frame the problem by providing several definitions for sensor coverage and spatial probability for the general case, with special attention to sensor arrays in one dimension as a simplification for the purpose of clarity. We then propose a metric for measuring the overall utility of a given set of choices, which may be used in any algorithm that seeks an optimal outcome by maximizing this utility. Finally, we perform a numerical analysis of several variations of the proposed metric, again in one dimension, to illustrate its fitness for the task.

KEYWORDS

Binary Sensors, Optimization

1. INTRODUCTION

Binary sensor arrays have been used in many applications for detecting the presence of an object and tracking its location. Examples include finding the current location of an RFID tag within a warehouse and tracking it as it moves, detecting the presence of a harmful chemical or radioactive agent, or finding an intruder in a secure facility using motion detectors. In each application, there may be many factors that are involved in designing an optimal sensor array. Some applications may require a high degree of accuracy for the location of an object, but not 100% coverage. Other applications may only be concerned with ensuring that 100% coverage exists, but not with knowing exactly where an object is located. In this study, we propose a technique for balancing many factors that go into the design of a sensor array. We consider the precision with which we can take measurements, the coverage we achieve, and the costs associated with a given set of choices. We start with a brief survey of related work, followed by a precise definition of sensor coverage, the specific characteristics of a one-dimensional sensor array, and an explanation of how we will treat probability. Next we propose a utility function for evaluating the relative merits of various sensor arrays, and analyze several one-dimensional sensor placement schemes using it.

2. RELATED WORK

The problem of optimal sensor placement has been covered extensively in the literature, and many different criteria have been put forth as the basis for defining optimality. Often, the actual placement of sensors is of less importance than the information that may be derived from them under a variety of assumptions. Chakrabarty et al. [4] focused on how to maximize coverage with a minimum of sensors. Their approach imposed a grid on a two- or three-dimensional field and uniquely identified each grid location with a single sensor. Aslam et al. [3] dealt primarily with tracking objects in binary sensor arrays, using techniques for approximating object location based on proximity to nearby sensors. They took a minimalist view, assuming little or no control over the placement of the sensors. Shrivastava et al. [9] [8] looked at how n two-dimensional, omnidirectional binary sensor regions R partition the sensor field into a maximum of $n^2 - n + 2$ localization patches F, where $|F| \geq |R|$. This study did not consider any specific arrangements of the sensors, but instead used a generic sensor density $\rho$, defined in terms of the mean distance among the sensors. They showed that the precision with which the location of an object within the sensing field can be determined is on the order of $1/(\rho R)$, where R here denotes the sensing radius, and that it improves (the uncertainty decreases in size) linearly as either $\rho$ or R increases. Sharif et al. [7] analyzed the cost effectiveness of directional sensors covering a large field. They applied a range of cost functions to sensor arrays of differing sizes in an effort to determine the optimal configuration. Krause et al. [5] delved extensively into the fundamentals of two-dimensional temperature sensor placement, considering variable sensor region size, sensor placement cost, and optimization of sensor placement using information theory. They showed that optimal sensor placement is an NP-hard problem and defined optimality in terms of a single criterion, such as maximal information gain from the system. As a result, they gave special attention to algorithms that reduce the computational complexity of the optimization problem. More recently, Asadzadeh et al. [2] focused on how to maximize the number of unique localization patches found in two-dimensional, omnidirectional sensor arrays, and extended this analysis to directional sensors as well. They did not consider the role of sensor coverage in the sensor arrangement, focusing instead on the internal characteristics of the arrangement alone. The contribution of this paper is to address the question of sensor coverage directly and to combine it with other criteria into an overall measure of the utility of a sensor array.

DOI: 10.5121/ijist.2013.3101

3. DEFINING SENSOR COVERAGE

We define sensor coverage as the portion of a field A over which we array a set of n sensor regions $R = \{r_1, \ldots, r_n\}$. The union of the sensor regions defines the sensed portion of the overall field, $S = \bigcup R$, and the remaining un-sensed portion is ~S, such that $A = S \cup {\sim}S$. Further, an object known to be in A must exist either in S or in ~S. Figure 1 shows an example in two dimensions, where L1 and L2 represent locations that fall inside and outside of S, respectively.

Figure 1: Sensor coverage model

Since sensor regions may overlap, we define a partitioning function $\Pi$ that produces a set of m patches F, where $\Pi(R) = F = \{f_1, \ldots, f_m\}$. Because these patches exactly cover the same portion of A as the sensor regions R, it follows that $S = \bigcup F$. Agarwal and Sharir [1] showed that $m \in O(n^d)$, where d is the dimensionality of A, and that $m \le n^2 - n + 2$ in the two-dimensional case. Therefore, for any set of sensor regions R there is a maximum possible value of m, denoted $m_{max}$. Figure 2 illustrates R and F in two dimensions where n = 2 and m = 3.

Figure 2: Two sensor regions creating three patches

4. SENSOR PLACEMENT IN ONE DIMENSION

Commonly, we see examples of sensor placement in two dimensions, but by considering binary sensor placement in one dimension, we reduce the complexity involved in studying possible scenarios. Given two sensor regions r1 and r2, we see in Figure 3 (a), (b), and (c) how they can overlap to produce one, three, or two patches, respectively.

Figure 3: Two sensors (a) at the same location, (b) partially overlapped, and (c) fully covering the field

These examples let us examine the concept of average sensor density. Shrivastava et al. [9] [8] define sensor density as the number of sensor regions covering a given location. In Figure 3 (c), we see that the total field A is completely covered by S with no overlap among the sensor regions R. This represents a sensor density of 1. Figure 3 (a) and (b) likewise have an average sensor density of 1, but have local sensor densities of 2 where the sensor regions overlap, and 0 where there is no coverage. These examples also show that the maximum number of patches for 2 sensor regions is 3. In the general case, n sensor regions in one dimension have at most 2n distinct endpoints, which bound at most 2n - 1 segments, so the maximum number of patches is $m \le 2n - 1$, consistent with the theoretical limit of $O(n^d)$ for d = 1.
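In one dimension the partition of R into patches can be computed directly: sort the distinct endpoints, treat each segment between consecutive endpoints as a candidate patch, and keep the segments covered by at least one sensor. The Python sketch below is a minimal illustration under our own assumptions (the helper name `patches` and the interval coordinates are hypothetical, not from the paper); it reproduces the patch counts of Figure 3 and checks the bound $m \le 2n - 1$ on random unit-width placements.

```python
import random

def patches(regions):
    """Hypothetical helper: partition the union of 1-D intervals into patches.

    regions is a list of (left, right) intervals. Returns (left, right, cover)
    tuples, where cover is the frozenset of sensor indices covering the patch.
    Crossing any endpoint changes the cover set, so each covered segment
    between consecutive distinct endpoints is its own patch.
    """
    cuts = sorted({x for region in regions for x in region})
    result = []
    for a, b in zip(cuts, cuts[1:]):
        mid = (a + b) / 2
        cover = frozenset(i for i, (l, r) in enumerate(regions) if l <= mid <= r)
        if cover:  # segments covered by no sensor belong to ~S, not to F
            result.append((a, b, cover))
    return result

# Figure 3 with unit-width sensors on a field of width 2 (coordinates assumed).
colocated = [(0.0, 1.0), (0.0, 1.0)]  # (a) same location
partial = [(0.0, 1.0), (0.5, 1.5)]    # (b) partially overlapped
adjacent = [(0.0, 1.0), (1.0, 2.0)]   # (c) fully covering the field

for name, R in [("(a)", colocated), ("(b)", partial), ("(c)", adjacent)]:
    print(name, len(patches(R)))  # prints 1, 3 and 2 patches respectively

# n intervals have at most 2n distinct endpoints, hence at most 2n - 1 patches.
rng = random.Random(0)
for _ in range(1000):
    n = rng.randint(1, 8)
    R = [(x, x + 1.0) for x in (rng.uniform(0.0, 10.0) for _ in range(n))]
    assert len(patches(R)) <= 2 * n - 1
```

Gaps that no sensor covers are dropped, so the patches returned here cover exactly the sensed portion S.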

5. CLARIFYING SENSOR PROBABILITY

The probability of an object being detected by a sensor array may be defined as either temporal or spatial. Temporal probability concerns the likelihood of an object occurring in A over time and, subsequently, being detected in S. For the purpose of this analysis, we focus on spatial probability, which deals with the likelihood that an object known to exist in A falls within S. In the spatial case, the probability that such an object falls in S is simply P(S), and the probability that it falls outside of S, in ~S, is P(~S). Given this, the following are true of S, ~S, and F:

$$P(S) + P({\sim}S) = 1 \tag{1}$$

$$\sum_{i=1}^{m} P(f_i) = P(S) \tag{2}$$

$$\sum_{i=1}^{m} P(f_i \mid S) = 1 \tag{3}$$

These hold regardless of the spatial probability distribution of objects occurring in A. In the general case, we calculate the probability for a region r by integrating the probability density function (pdf) of objects in A over the range defined by r. As an example in one dimension, we calculate P(r) as follows:

$$P(r) = \int_{r_{left}}^{r_{right}} \mathrm{pdf}(x)\,dx$$

We may likewise produce P(S) by summing the probabilities of each patch $f_i$:

$$P(S) = \sum_{i=1}^{m} \int_{f_{i,left}}^{f_{i,right}} \mathrm{pdf}(x)\,dx \tag{4}$$
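Equations (1) through (4) can be checked numerically for a non-uniform distribution. The Python sketch below is a minimal illustration under assumed values: the triangular density, the field [0, 2], the sensor coordinates, and the helper names `patch_bounds` and `integrate` are hypothetical, and each integral is approximated with a simple midpoint rule rather than evaluated in closed form.

```python
def patch_bounds(regions):
    """Hypothetical helper: (left, right) bounds of each sensed 1-D patch."""
    cuts = sorted({x for region in regions for x in region})
    return [(a, b) for a, b in zip(cuts, cuts[1:])
            if any(l <= (a + b) / 2 <= r for l, r in regions)]

def integrate(pdf, a, b, steps=10_000):
    """Midpoint-rule approximation of the integral of pdf over [a, b]."""
    h = (b - a) / steps
    return h * sum(pdf(a + (k + 0.5) * h) for k in range(steps))

def pdf(x):
    """Hypothetical non-uniform density on A = [0, 2]: a triangle peaking at x = 1."""
    return x if x <= 1.0 else 2.0 - x

A = (0.0, 2.0)
R = [(0.0, 1.0), (0.5, 1.5)]  # two partially overlapping unit-width sensors

def in_S(x):
    """True when at least one sensor region covers x."""
    return any(l <= x <= r for l, r in R)

F = patch_bounds(R)
p_S = sum(integrate(pdf, a, b) for a, b in F)                     # eq. (4)
p_S_direct = integrate(lambda x: pdf(x) if in_S(x) else 0.0, *A)  # P(S) over S itself
p_not_S = integrate(lambda x: 0.0 if in_S(x) else pdf(x), *A)     # P(~S)
p_f_given_S = [integrate(pdf, a, b) / p_S for a, b in F]

print(round(p_S, 6), round(p_S_direct, 6))  # 0.875 0.875, matching eq. (2)
print(round(p_S_direct + p_not_S, 6))       # 1.0, as eq. (1) requires
print(round(sum(p_f_given_S), 6))           # 1.0, as eq. (3) requires
```

The midpoint rule is exact for densities that are linear on each step, which is why the triangular density reproduces the closed-form values here; a smoother or steeper density would need more steps or a proper quadrature routine.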

If an object may be found with equal probability throughout A, then the probability of a sub-region is simply the ratio of its size to the overall size of A. To facilitate this, we define the function $\Delta$ to produce a set of scalar values D, where $\Delta(R) = D = \{d_1, \ldots, d_n\}$. Additionally, we define the related function $\delta$ such that for all $r_i \in R$ and $d_i \in D$, $\delta(r_i) = d_i$. In one dimension, $\delta$ simply returns the sensor region width, whereas in two dimensions it returns the region area. With $\delta$, we calculate P(S) and the patch probability P(f) for all $f \in F$ as follows:

$$P(S) = \delta(S) / \delta(A) \tag{5}$$

$$P(f) = \delta(f) / \delta(A) \tag{6}$$

$$P(f \mid S) = \delta(f) / \delta(S) \tag{7}$$

For the remainder of this analysis, we limit ourselves to a uniform spatial distribution.

6. MEASURING SENSOR ARRAY PERFORMANCE

In practice, the engineering design of a sensor system is intended to optimize several objectives such as cost, efficiency, and accuracy. One way to determine whether these objectives are met is to measure each factor and combine the measurements in a utility function. By maximizing such a function, we can find the optimal design choices. We propose a utility function U that is a linear combination of three weighted objective functions, which measure 1) precision, 2) coverage, and 3) the intrinsic properties of the sensors represented by R. Each is paired with a weighting coefficient from $W = \{w_1, w_2, w_3\}$ that balances the relative value of the objectives. In practical situations, the actual values of W depend on factors such as the cost of the sensors, the required precision, and the consequences of missing an event due to lack of coverage.

$$U(A, R, W) = w_1\,\phi(A, R) + w_2\,\gamma(A, R) + w_3\,\kappa(A, R) \tag{8}$$

The structure of U is simple, with the real work taking place in the objective functions $\phi$, $\gamma$, and $\kappa$, as discussed in the following sections.

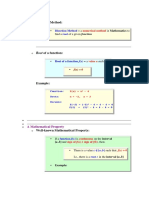

6.1. Considering Sensor Precision

Patches F [2] identify the location of a sensed object in S more precisely than the sensor regions alone, so we may measure the precision of R in terms of F. For this analysis, we examine three definitions of the objective function $\phi$ for measuring precision, labelled $\phi_A$, $\phi_B$, and $\phi_C$. Shrivastava et al. [9] [8] argue for using the Lebesgue norm $L_\infty$ on F as a worst-case measure of precision, so we base $\phi_A$ on this idea. The intuition is that we can reliably expect the location error to be no larger than the size of the largest patch in F. Thus precision increases as $L_\infty$ decreases, where $L_\infty = \max(\Delta(F))$. To calculate $L_\infty$, and for subsequent uses, we need to convert the multi-dimensional regions and sub-regions of A into scalar values. For this and the rest of the objective functions, we want the values to be normalized to the range 0 to 1. Recognizing that $0 \le \max(\Delta(F)) \le \max(\Delta(R))$, we define $\phi_A$ as follows:

$$\phi_A = \frac{\max(\Delta(R)) - \max(\Delta(F))}{\max(\Delta(R))} \tag{9}$$
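Equation (9) is straightforward to evaluate in one dimension, where $\delta$ is simply an interval width. The Python sketch below is a minimal illustration under that assumption; `patch_widths` and `phi_A` are hypothetical names, and the sensor coordinates are chosen only to mirror Figure 3.

```python
def patch_widths(regions):
    """Hypothetical helper: widths of the 1-D patches, i.e. the segments between
    consecutive distinct endpoints that at least one sensor region covers."""
    cuts = sorted({x for region in regions for x in region})
    return [b - a for a, b in zip(cuts, cuts[1:])
            if any(l <= (a + b) / 2 <= r for l, r in regions)]

def phi_A(regions):
    """phi_A from eq. (9): 1 minus the largest patch relative to the largest region."""
    max_r = max(r - l for l, r in regions)  # max(Delta(R))
    max_f = max(patch_widths(regions))      # max(Delta(F)), the L-infinity norm
    return (max_r - max_f) / max_r

print(phi_A([(0.0, 1.0), (0.0, 1.0)]))  # 0.0: co-located, one patch of width 1
print(phi_A([(0.0, 1.0), (0.5, 1.5)]))  # 0.5: three patches of width 0.5
print(phi_A([(0.0, 1.0), (1.0, 2.0)]))  # 0.0: no overlap, two patches of width 1
```

Because only the single largest patch matters, $\phi_A$ ignores how the remaining patches are distributed, which is the worst-case behavior the $L_\infty$ norm implies.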

Next, we turn to the concept of mean squared error to measure the average accuracy for locations detected within R; like $L_\infty$, it decreases as precision increases. With this idea, we define $\phi_B$ using $\delta$ to average the square of each patch size, weighted by patch probability, and scale it as with $\phi_A$:

$$\phi_B = \frac{\max(\Delta(R))^2 - \sum_{i=1}^{m} P(f_i \mid S)\,\delta(f_i)^2}{\max(\Delta(R))^2} \tag{10}$$
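Under the uniform distribution adopted in Section 5, equation (7) gives $P(f_i \mid S) = \delta(f_i)/\delta(S)$, so equation (10) reduces to a probability-weighted mean of squared patch widths. The Python sketch below assumes one dimension and is only an illustration; `patch_widths` and `phi_B` are hypothetical names.

```python
def patch_widths(regions):
    """Hypothetical helper: widths of the 1-D patches, i.e. the segments between
    consecutive distinct endpoints that at least one sensor region covers."""
    cuts = sorted({x for region in regions for x in region})
    return [b - a for a, b in zip(cuts, cuts[1:])
            if any(l <= (a + b) / 2 <= r for l, r in regions)]

def phi_B(regions):
    """phi_B from eq. (10), assuming P(f_i | S) = delta(f_i) / delta(S) (uniform case)."""
    widths = patch_widths(regions)
    size_S = sum(widths)                    # delta(S): the patches exactly cover S
    max_r = max(r - l for l, r in regions)  # max(Delta(R))
    mean_sq = sum((w / size_S) * w ** 2 for w in widths)
    return (max_r ** 2 - mean_sq) / max_r ** 2

print(round(phi_B([(0.0, 1.0), (0.5, 1.5)]), 6))    # 0.75: three patches of width 0.5
print(round(phi_B([(0.0, 1.0), (0.25, 1.25)]), 6))  # 0.6375: patches 0.25, 0.75, 0.25
```

Unlike $\phi_A$, the second arrangement is not scored by its largest patch alone: the two small patches pull the weighted mean down and raise $\phi_B$.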

Note that the full equation for mean squared error would include a constant factor (arising, for example, from squaring half the size of each patch), which is simply removed here to keep the result normalized from 0 to 1. For the definition of $\phi_C$, we look to Shannon entropy [6] as a means of maximizing the information flow from the sensor regions R. Although it is not properly a measure of precision, entropy's measure of information provides a useful objective function $\phi_C$ to contrast with the others. We calculate the entropy $H_S$ by treating F as a set of symbols, each with the probability that an object is detected in that patch given that it lies in S:

$$H_S = -\sum_{i=1}^{m} P(f_i \mid S)\,\log_2 P(f_i \mid S) \tag{11}$$


To understand this, consider the two binary sensors in Figure 2, which may have states 00, 10, 11, and 01, where 1 signifies detecting an object and 0 otherwise. States 01, 11, and 10 represent the patches f1, f2, and f3, respectively. These possible states act as symbols, each with a probability of occurrence. Intuitively, we can imagine $H_S$ reaching its maximum when the arrangement of sensors R results in patches F with an equal likelihood of detecting an object in S. Since $H_S$ naturally increases from 0, we define $\phi_C$ simply as $H_S$ divided by its maximum possible value for any R. This maximum occurs when the sensor regions R are partitioned into $m_{max}$ equally likely patches, so $H_{S,max} = \log_2(m_{max})$ and

$$\phi_C = H_S / H_{S,max} \tag{12}$$
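Equations (11) and (12) can be sketched the same way. In one dimension, Section 4 gives $m_{max} = 2n - 1$, so $H_{S,max} = \log_2(2n - 1)$; the Python sketch below assumes that bound, a uniform distribution, and at least two sensors (for a single sensor $H_{S,max} = 0$ and the ratio is undefined). The helper names are hypothetical.

```python
import math

def patch_widths(regions):
    """Hypothetical helper: widths of the 1-D patches, i.e. the segments between
    consecutive distinct endpoints that at least one sensor region covers."""
    cuts = sorted({x for region in regions for x in region})
    return [b - a for a, b in zip(cuts, cuts[1:])
            if any(l <= (a + b) / 2 <= r for l, r in regions)]

def phi_C(regions):
    """phi_C from eqs. (11)-(12), assuming a uniform distribution and m_max = 2n - 1."""
    n = len(regions)
    if n < 2:
        raise ValueError("phi_C is undefined for fewer than two sensors")
    widths = patch_widths(regions)
    size_S = sum(widths)
    probs = [w / size_S for w in widths]           # P(f_i | S), eq. (7)
    h_s = sum(-p * math.log2(p) for p in probs)    # eq. (11); widths > 0, so p > 0
    return h_s / math.log2(2 * n - 1)              # eq. (12)

colocated = [(0.0, 1.0), (0.0, 1.0)]  # one patch: no information
partial = [(0.0, 1.0), (0.5, 1.5)]    # three equally likely patches: the maximum
adjacent = [(0.0, 1.0), (1.0, 2.0)]   # two equally likely patches

for R in (colocated, partial, adjacent):
    print(round(phi_C(R), 4))  # 0.0, then 1.0, then 0.6309
```

The two-sensor states 01, 11, and 10 of Figure 2 correspond here to the three patches of `partial`, which is why that arrangement reaches $\phi_C = 1$.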

6.2. Considering Sensor Coverage

Our objective function $\gamma$ provides a measure of sensor coverage. Since we want a value ranging from 0 to 1, we conveniently choose the spatial probability that an object known to be in A is also in S, namely $\gamma = P(S)$.

6.3. Considering Intrinsic Sensor-Array Properties

The purpose of our objective function $\kappa$ is to bring characteristics of the sensor array into U, regardless of the specific arrangement of the sensor regions R. Intuitively, the size and number of the sensors must have some impact on our overall engineering design; at the very least, we would expect the cost of the solution to increase with the number of sensors. Since we have limited ourselves to uniform sensor region size, we define $\kappa$ as a simple function of sensor count that is largest for the fewest sensors. To do this, we use $\kappa = 1/n$, which is largest when n = 1 and is undefined (and uninteresting) for a sensor count of 0.
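Combining equation (8) with $\gamma = P(S)$ and $\kappa = 1/n$ gives a small, pluggable utility function. The Python sketch below is a hypothetical illustration, not the authors' implementation: it assumes one dimension, a uniform distribution, and sensor regions that lie entirely inside A, and it uses $\phi_A$ as the default precision objective. Any other precision objective with the same `(field, regions)` signature could be passed instead.

```python
def patch_widths(regions):
    """Hypothetical helper: widths of the 1-D patches, i.e. the segments between
    consecutive distinct endpoints that at least one sensor region covers."""
    cuts = sorted({x for region in regions for x in region})
    return [b - a for a, b in zip(cuts, cuts[1:])
            if any(l <= (a + b) / 2 <= r for l, r in regions)]

def phi_A(field, regions):
    """Precision objective from eq. (9); field is unused in 1-D but kept for the signature."""
    max_r = max(r - l for l, r in regions)
    return (max_r - max(patch_widths(regions))) / max_r

def coverage(field, regions):
    """gamma = P(S) = delta(S) / delta(A), assuming every region lies inside A."""
    return sum(patch_widths(regions)) / (field[1] - field[0])

def sensor_count(field, regions):
    """kappa = 1 / n; raises ZeroDivisionError for n = 0, where kappa is undefined."""
    return 1.0 / len(regions)

def utility(field, regions, weights, phi=phi_A):
    """U(A, R, W) from eq. (8) with a pluggable precision objective phi."""
    w1, w2, w3 = weights
    return (w1 * phi(field, regions)
            + w2 * coverage(field, regions)
            + w3 * sensor_count(field, regions))

A = (0.0, 2.0)
R = [(0.0, 1.0), (0.5, 1.5)]
print(utility(A, R, (1, 1, 0)))  # 1.25: phi_A = 0.5 plus gamma = 0.75
print(utility(A, R, (0, 0, 1)))  # 0.5: kappa alone, 1/n with n = 2
```

Passing the objective as a parameter keeps U itself as simple as equation (8) suggests, with all measurement logic inside the objective functions.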

7. STUDYING SENSOR PLACEMENT

With U, we have a metric for measuring the combined benefits of higher precision, higher coverage, and fewer sensors across many scenarios. While we might use this approach to study changes in either the field A or the weights W for a given sensor array R, our primary concern for the rest of this paper is scenarios where A and W are fixed and only the placement of the sensor regions R varies, the goal being to find arrangements of R with maximal utility. It is important to note that when only sensor placement varies, the sensor count remains constant, as does the value of $\kappa$. Going forward, we therefore consider only precision and coverage, as defined by $\phi$ and $\gamma$. To facilitate our analysis, we derive three variations of the utility function, $U_A$, $U_B$, and $U_C$, based on the three options for $\phi$, choosing weights W = {1, 1, 0} to explicitly eliminate $\kappa$:

$$U_A = \phi_A + \gamma \tag{13}$$

$$U_B = \phi_B + \gamma \tag{14}$$

$$U_C = \phi_C + \gamma \tag{15}$$


Starting with two sensor regions at the same location, as in Figure 3 (a), we may move them apart until they form three equal patches, as in Figure 3 (b), and continue until they are fully separated with no overlap, as in Figure 3 (c). By setting the sensor width to 1 and the width of the overall field to 2, and computing values for $\phi_A$, $\phi_B$, $\phi_C$, and $\gamma$, we can see that they achieve their maximal values in different ways. Figure 4 plots each of these values on the Y-axis against the spacing, from 0 to 1, on the X-axis.

Figure 4: Objective functions for two sensors moving from co-location to full separation

Clearly, from this example, each choice of $\phi$ or $\gamma$ taken by itself may give a different maximal result. The best choice for coverage occurs at full separation of the sensor regions, while the best choices for precision lie elsewhere, with no clear criterion for choosing one or the other. By combining $\phi$ and $\gamma$ to produce our three derived versions of U, as in Figure 5, we see that the overall utility often reaches its maximum at a different spacing than either objective taken separately.

Figure 5: Derived utility functions for two sensors moving from co-location to full separation
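The two-sensor experiment of Figures 4 and 5 can be reproduced in outline with a short sweep. The Python sketch below is our own illustration, not the authors' code: it assumes a uniform distribution, places sensor 1 at [0, 1] and sensor 2 at [s, 1 + s] on a field of width 2, steps the spacing s from 0 to 1 in increments of 0.01 (an assumed resolution), and reports where each of $U_A$, $U_B$, and $U_C$ from equations (13) to (15) peaks. The helper names are hypothetical.

```python
import math

def patch_widths(regions):
    """Hypothetical helper: widths of the 1-D patches, i.e. the segments between
    consecutive distinct endpoints that at least one sensor region covers."""
    cuts = sorted({x for region in regions for x in region})
    return [b - a for a, b in zip(cuts, cuts[1:])
            if any(l <= (a + b) / 2 <= r for l, r in regions)]

def objectives(field, regions):
    """Hypothetical helper returning (phi_A, phi_B, phi_C, gamma) for 1-D regions,
    assuming a uniform distribution and m_max = 2n - 1 (so n >= 2)."""
    widths = patch_widths(regions)
    size_S = sum(widths)                            # delta(S)
    max_r = max(r - l for l, r in regions)          # max(Delta(R))
    probs = [w / size_S for w in widths]            # P(f_i | S), eq. (7)
    phi_a = (max_r - max(widths)) / max_r           # eq. (9)
    mean_sq = sum(p * w ** 2 for p, w in zip(probs, widths))
    phi_b = (max_r ** 2 - mean_sq) / max_r ** 2     # eq. (10)
    h_s = sum(-p * math.log2(p) for p in probs)     # eq. (11)
    phi_c = h_s / math.log2(2 * len(regions) - 1)   # eq. (12)
    gamma = size_S / (field[1] - field[0])          # eq. (5): P(S)
    return phi_a, phi_b, phi_c, gamma

A = (0.0, 2.0)  # field of width 2 with unit-width sensors, as in the text
best = {}       # variant name -> (maximum utility, spacing where it occurs)
for k in range(101):
    s = k / 100                     # assumed step of 0.01 in the spacing
    R = [(0.0, 1.0), (s, 1.0 + s)]  # co-located at s = 0, fully separated at s = 1
    pa, pb, pc, g = objectives(A, R)
    for name, u in (("U_A", pa + g), ("U_B", pb + g), ("U_C", pc + g)):  # eqs. (13)-(15)
        if name not in best or u > best[name][0]:
            best[name] = (u, s)

for name, (u, s) in best.items():
    print(f"{name}: maximum {u:.4f} at spacing {s:.2f}")
```

In this sketch $U_A$ peaks where the three patches are equal (s = 0.5), while $U_B$ and $U_C$ peak at larger spacings, with $U_C$ favoring more coverage than $U_B$. The exact locations depend on the assumed step size and should not be read as the values plotted in Figure 5.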

8. COMPARING EVEN AND RANDOM SPACING

Much of the literature assumes random placement of binary sensors in its analysis. Of course, for well-known or fixed fields A, we may place the sensors in specific locations in an effort to maximize their effectiveness. For this study, we contrast random sensor placement with a continuation of the evenly spaced sensor placement approach from the previous section. To test this idea, we define an overall field width of 100 and sensor regions of uniform width 1, such that $\delta(A) = 100$ and $\delta(r) = 1$ for all $r \in R$. Next, we create scenarios with sensor densities of $\rho$ = 1.0 and 2.0, resulting in n = 100 and 200 sensors, respectively. For each sensor density $\rho$, we then evenly space the sensor regions with spacings from 0 to 1 in increments of 0.001, and also generate 100 random scenarios in which each sensor region may be placed anywhere within A. Finally, we plot our three measures of sensor precision against the percentage coverage of A for each value of $\rho$ in Figure 6 and Figure 7.
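The experimental setup can be sketched as follows. This Python illustration is ours, not the authors' code; the helper names, the random seed, the handful of spacings shown, and two modelling choices are assumptions: "spacing" is taken as the distance between consecutive left endpoints, random left endpoints are drawn uniformly from $[0, \delta(A) - 1]$ so each region lies inside A, and coverage is clipped to A because evenly spaced regions at $\rho = 2.0$ extend past the field edge once the spacing exceeds roughly 0.5. To keep the run short it evaluates a few spacings and five random scenarios per density rather than the full sweep.

```python
import random

def even_placement(n, spacing, width=1.0):
    """Hypothetical helper: n regions whose left endpoints are `spacing` apart."""
    return [(i * spacing, i * spacing + width) for i in range(n)]

def random_placement(n, field_width, rng, width=1.0):
    """Hypothetical helper: left endpoints uniform on [0, field_width - width],
    so every region lies inside A (an assumption about the random scenarios)."""
    return [(x, x + width)
            for x in (rng.uniform(0.0, field_width - width) for _ in range(n))]

def patch_widths(regions):
    """Hypothetical helper: widths of the 1-D patches, i.e. the segments between
    consecutive distinct endpoints that at least one sensor region covers."""
    cuts = sorted({x for region in regions for x in region})
    return [b - a for a, b in zip(cuts, cuts[1:])
            if any(l <= (a + b) / 2 <= r for l, r in regions)]

def coverage(regions, field_width):
    """gamma = delta(S) / delta(A) with S clipped to A = [0, field_width]."""
    cuts = sorted({x for region in regions for x in region})
    covered = 0.0
    for a, b in zip(cuts, cuts[1:]):
        if any(l <= (a + b) / 2 <= r for l, r in regions):
            covered += max(0.0, min(b, field_width) - max(a, 0.0))
    return covered / field_width

FIELD = 100.0            # delta(A) = 100 and delta(r) = 1, as in the text
rng = random.Random(42)  # assumed seed
for rho in (1.0, 2.0):
    n = int(rho * FIELD)  # unit-width sensors, so n = rho * delta(A)
    even = [round(coverage(even_placement(n, s), FIELD), 4) for s in (0.0, 0.25, 0.5, 1.0)]
    rand = [coverage(random_placement(n, FIELD, rng), FIELD) for _ in range(5)]
    print(rho, even, round(min(rand), 4), round(max(rand), 4))

print(len(patch_widths(even_placement(100, 0.01))))  # 199 = 2n - 1 patches at spacing 1/n
```

At zero spacing all regions are co-located, so coverage is $\max(\Delta(R))/\delta(A) = 1/100$; at a spacing of $1/n$ the sketch yields $2n - 1$ equal patches, the one-dimensional maximum from Section 4.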

Figure 6: Plot of precision objectives against percent coverage of A with density $\rho$ = 1.0, indicating the maximum utility U for each measure

Figure 7: Plot of precision objectives against percent coverage of A with density $\rho$ = 2.0, indicating the maximum utility U for each measure

We are able to make several important observations from these numerical studies. Perhaps the most significant is that even spacing produces better results than random spacing in almost all cases, and that some evenly spaced placement option is always superior to the best random result. As we might expect, the random results tend to cluster around an average coverage range that increases with $\rho$. By contrast, the even-spacing results for each variation of $\phi$ display a pattern characteristic of their underlying approach to measurement. Another important observation is the location of the maximum utility U. For example, $U_B$ in Figure 6 achieves its maximum with only 70% coverage, given W = {1, 1, 0}. With even spacing, all measures display low values at the smallest possible coverage, where the spacing is 0, all sensor regions R are co-located, and the coverage is $\max(\Delta(R)) / \delta(A) = 0.01$. The measures quickly reach their maximum at a spacing of $\max(\Delta(R)) / n$, where dividing the uniform sensor size by the sensor count produces exactly $2n - 1 = m_{max}$ patches of equal size. When looking specifically at objective function $\phi_A$, we see that even spacing shows a linear decrease in precision as the largest patch increases in size to $\delta(A)/n$ when R achieves full coverage. However, multiple evaluations using random spacing almost always produced at least one patch based on an isolated sensor region such that $\max(\Delta(F)) = 1$, effectively making all random results the same. With even spacing, the result is not very different in practical terms, since the mostly linear relationship between $\phi_A$ and $\gamma$ leads to choosing either the maximum-precision or the maximum-coverage option, and which choice is made is highly sensitive to the weights W used.

Both $\phi_B$ and $\phi_C$ display an interesting pattern of local minima and maxima for the even-spacing scenarios. By closely inspecting the data, we see that the local minima occur when sensor boundaries align such that the number of patches generated for the arrangement drops significantly and/or the patch sizes have high variance. Despite the differences in calculation, both approaches generate local minima at the same spacing intervals, with local maxima often at different spacing intervals. $\phi_B$, based on the idea of average accuracy, generally decreases as coverage increases despite the local fluctuations, which we would intuitively expect, since the patch sizes are generally getting larger and precision must therefore suffer. On the other hand, $\phi_C$, based on entropy, suggests that many spacing choices may result in near-maximal information flow. Not all of the local maxima displayed for $\phi_C$ are in fact equal, as closely inspecting the data reveals, but they are nevertheless quite close for very different even-spacing configurations. As a result, the spacing whose local maximum for $\phi_C$ is also closest to full coverage tends to be selected as having the maximum utility for the $U_C$ variant. Intuitively, $U_C$ leads us to a desirable outcome where coverage is high, if not full, but the best choice takes into consideration the performance of that coverage as a source of information. Overall, the $U_A$ variant of utility does not effectively assist in balancing the considerations of precision and coverage, while both $U_B$ and $U_C$ lead to maximum results that balance both.

9. CONCLUSIONS AND FUTURE WORK

By studying the utility function U, we find that the variations using mean squared error ($\phi_B$) and entropy ($\phi_C$) show promising results as a way to balance the competing factors of precision and coverage in the design of a sensor array. Additional work is needed to apply this method to specific sensor array designs, to explore different weighting factors W, and especially to reintroduce the objective function $\kappa$ by using a non-zero $w_3$. Other considerations for future study include non-uniform sensor size in the definition of $\kappa$, non-uniform spatial probability distributions of objects within $\phi$ and $\gamma$, and the role of temporal probability in the overall definition of U.

REFERENCES

[1] P. Agarwal and M. Sharir. Arrangements and their Applications, pages 49-119. Elsevier Science B. V., 1999.

[2] P. Asadzadeh, L. Kulik, E. Tanin, and A. Wirth. On optimal arrangements of binary sensors. In COSIT 2011, pages 168-187. Springer-Verlag Berlin Heidelberg, 2011.

[3] J. Aslam, Z. Butler, F. Constantin, V. Crespi, G. Cybenko, and D. Rus. Tracking a moving object with a binary sensor network. In SenSys '03, pages 150-161. ACM, November 2003.

[4] K. Chakrabarty, S. Iyengar, H. Qi, and E. Cho. Coding theory framework for target location in distributed sensor networks. In Information Technology: Coding and Computing, pages 130-134, Las Vegas, NV, April 2001.

[5] A. Krause, A. Singh, and C. Guestrin. Near-optimal sensor placements in Gaussian processes: Theory, efficient algorithms and empirical studies. Journal of Machine Learning Research, 9:235-284, 2008.

[6] C. Shannon. A mathematical theory of communication. Bell System Technical Journal, 27:379-423 and 623-656, July and October 1948.

[7] B. Sharif and F. Kamalabadi. Optimal sensor array configuration in remote image formation. IEEE Trans. Image Process., 17(2):155-166, February 2008.

[8] N. Shrivastava, R. Mudumbai, U. Madhow, and S. Suri. Target tracking with binary proximity sensors: Fundamental limits, minimal descriptions, and algorithms. In SenSys '06, pages 251-264. ACM, November 2006.

[9] N. Shrivastava, R. Mudumbai, U. Madhow, and S. Suri. Target tracking with binary proximity sensors. ACM Transactions on Sensor Networks, 5(4):30:1-30:33, November 2009.

Authors

Stephen P. Emmons is a Ph.D. candidate in Computer Science and Engineering at the University of Texas at Arlington. He has had a career developing commercial software products for technical illustration, 3D solids modelling, and wireless sensor networking spanning over 30 years.

Professor Farhad Kamangar received his Ph.D. in electrical engineering from the University of Texas at Arlington in 1980 and M.S. in electrical engineering from UTA in 1977. He received his B.S. from the University of Tehran, Iran, in 1975. His research interests include image processing, robotics, signal processing, machine intelligence and computer graphics.


- Sparse Signal Reconstruction Using Basis Pursuit AlgorithmDokument5 SeitenSparse Signal Reconstruction Using Basis Pursuit AlgorithmdwipradNoch keine Bewertungen

- Versatile Medical Image Denoising AlgorithmDokument22 SeitenVersatile Medical Image Denoising AlgorithmSrinivas Kiran GottapuNoch keine Bewertungen

- Energy Detection Based Spectrum Sensing For Sensing Error Minimization in Cognitive Radio NetworksDokument5 SeitenEnergy Detection Based Spectrum Sensing For Sensing Error Minimization in Cognitive Radio NetworksmalebrancheNoch keine Bewertungen

- Geostatistical AnalystDokument18 SeitenGeostatistical AnalystauankNoch keine Bewertungen

- Measurement Design For Detecting Sparse SignalsDokument29 SeitenMeasurement Design For Detecting Sparse SignalsKlausNoch keine Bewertungen

- Energy Efficient and Scheduling Techniques For Increasing Life Time in Wireless Sensor NetworksDokument8 SeitenEnergy Efficient and Scheduling Techniques For Increasing Life Time in Wireless Sensor NetworksIAEME PublicationNoch keine Bewertungen

- Algorithms For Location Estimation Based On RSSI SamplingDokument15 SeitenAlgorithms For Location Estimation Based On RSSI SamplingLukmanulhakim MalawatNoch keine Bewertungen

- Mostly-Sleeping Wireless Sensor Networks: Connectivity, k -Coverage, and α-LifetimeDokument10 SeitenMostly-Sleeping Wireless Sensor Networks: Connectivity, k -Coverage, and α-Lifetimeaksagar22Noch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- International Journal of Network Security & Its Applications (IJNSA) - ERA, WJCI IndexedDokument2 SeitenInternational Journal of Network Security & Its Applications (IJNSA) - ERA, WJCI IndexedAIRCC - IJNSANoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- International Journal of Network Security & Its Applications (IJNSA) - ERA, WJCI IndexedDokument2 SeitenInternational Journal of Network Security & Its Applications (IJNSA) - ERA, WJCI IndexedAIRCC - IJNSANoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Lexical-Semantic Meanings and Stylistic Features of Causative Verbs in Uzbek LanguageDokument6 SeitenLexical-Semantic Meanings and Stylistic Features of Causative Verbs in Uzbek LanguageMandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- The Meaning of Causation in Linguocognitive AspectDokument5 SeitenThe Meaning of Causation in Linguocognitive AspectMandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- Call For Paper - International Journal of Information Science & Techniques (IJIST)Dokument2 SeitenCall For Paper - International Journal of Information Science & Techniques (IJIST)Mandy DiazNoch keine Bewertungen

- AVL TreeDokument36 SeitenAVL TreesalithakkNoch keine Bewertungen

- Fourier Series and Fourier TransformDokument3 SeitenFourier Series and Fourier TransformJohn FuerzasNoch keine Bewertungen

- CPM PertDokument21 SeitenCPM Pertkabina goleNoch keine Bewertungen

- Applied Sciences: Nonlinear Control Design of A Half-Car Model Using Feedback Linearization and An LQR ControllerDokument17 SeitenApplied Sciences: Nonlinear Control Design of A Half-Car Model Using Feedback Linearization and An LQR ControllerAntônio Luiz MaiaNoch keine Bewertungen

- Data Structures and Algorithms Lab Journal - Lab 1Dokument11 SeitenData Structures and Algorithms Lab Journal - Lab 1MZ MalikNoch keine Bewertungen

- Lec 09-Left Recursion RemovalDokument23 SeitenLec 09-Left Recursion RemovalShaheryar KhattakNoch keine Bewertungen

- CS Practical File 2Dokument36 SeitenCS Practical File 2Nikhil JohariNoch keine Bewertungen

- Assignment 2: SHOW ALL WORK, Clearly and in OrderDokument10 SeitenAssignment 2: SHOW ALL WORK, Clearly and in OrderMatt SguegliaNoch keine Bewertungen

- Comparison Study of Different Structures of PID ControllersDokument9 SeitenComparison Study of Different Structures of PID Controllers賴明宏Noch keine Bewertungen

- Zhang - A Novel Dynamic Wind Farm Wake Model Based On Deep LearningDokument13 SeitenZhang - A Novel Dynamic Wind Farm Wake Model Based On Deep LearningbakidokiNoch keine Bewertungen

- WEEK 5 SOLUTION METHODS FOR SIMULTANEOUS NLEs 2Dokument35 SeitenWEEK 5 SOLUTION METHODS FOR SIMULTANEOUS NLEs 2Demas JatiNoch keine Bewertungen

- F5 SteganographyDokument14 SeitenF5 SteganographyRatnakirti RoyNoch keine Bewertungen

- ME5107: Numerical Methods in Thermal EngineeringDokument21 SeitenME5107: Numerical Methods in Thermal EngineeringHemantNoch keine Bewertungen

- 1 2 Analyzing Graphs of Functions and RelationsDokument40 Seiten1 2 Analyzing Graphs of Functions and RelationsDenise WUNoch keine Bewertungen

- 1 - 8 Find The General Solution of Each Equation: Exercises B-4.1Dokument3 Seiten1 - 8 Find The General Solution of Each Equation: Exercises B-4.1Asad WadudNoch keine Bewertungen

- Experiment # 2: Communication Signals: Generation and Interpretation ObjectiveDokument19 SeitenExperiment # 2: Communication Signals: Generation and Interpretation ObjectiveSyed F. JNoch keine Bewertungen

- Cc-Lec Individual Assignment Week 8 Quality ControlDokument4 SeitenCc-Lec Individual Assignment Week 8 Quality ControlJ Pao Bayro LacanilaoNoch keine Bewertungen

- Time Value of Money Quiz ReviewerDokument3 SeitenTime Value of Money Quiz ReviewerAra FloresNoch keine Bewertungen

- Chapter - 5part1 DIGITAL ELECTRONICSDokument20 SeitenChapter - 5part1 DIGITAL ELECTRONICSNUR SYAFIQAH l UTHMNoch keine Bewertungen

- Intelligent Waste Classification System Using CNNDokument14 SeitenIntelligent Waste Classification System Using CNNkowsalya.cs21Noch keine Bewertungen

- 55646530njtshenye Assignment 4 Iop2601 1Dokument5 Seiten55646530njtshenye Assignment 4 Iop2601 1nthabisengjlegodiNoch keine Bewertungen

- Bisection MethodDokument15 SeitenBisection MethodSohar AlkindiNoch keine Bewertungen

- Algorithmics Assignment Page 1 of 2Dokument2 SeitenAlgorithmics Assignment Page 1 of 2Yong Xiang LewNoch keine Bewertungen

- The Intuition Behind PCA: Machine Learning AssignmentDokument11 SeitenThe Intuition Behind PCA: Machine Learning AssignmentPalash GhoshNoch keine Bewertungen

- AZ4030-Subject - 3 KS 04 - 3 KE 04 - Data Structures - Year - B.E. Third Semester (Computer Science & Engineering) (CBCS) Winter 2020Dokument7 SeitenAZ4030-Subject - 3 KS 04 - 3 KE 04 - Data Structures - Year - B.E. Third Semester (Computer Science & Engineering) (CBCS) Winter 2020Mickey MouseNoch keine Bewertungen

- 9 HTK TutorialDokument17 Seiten9 HTK TutorialAndrea FieldsNoch keine Bewertungen

- Energy Consumption ForecastingDokument39 SeitenEnergy Consumption ForecastingRao ZaeemNoch keine Bewertungen

- Copie de OSSU CS TimelineDokument8 SeitenCopie de OSSU CS TimelineAbdelmoumene MidounNoch keine Bewertungen

- Proof To Shannon's Source Coding TheoremDokument5 SeitenProof To Shannon's Source Coding TheoremDushyant PathakNoch keine Bewertungen

- Regression Analysis Research Paper TopicsDokument4 SeitenRegression Analysis Research Paper Topicsfvg4mn01100% (1)