
Linda Westfall is the President of The Westfall Team, which provides Software Metrics and Software Quality Engineering training and consulting services. Prior to starting her own business, Linda was the Senior Manager of Quality Metrics and Analysis at DSC Communications, where her team designed and implemented a corporate-wide metrics program. Linda has over twenty years of experience in real-time software engineering, quality and metrics. She has worked as a Software Engineer, Systems Analyst, Software Process Engineer and Manager of Production Software. Very active professionally, Linda Westfall is Chair of the American Society for Quality (ASQ) Software Division. She has also served as the Software Division's Program Chair and Certification Chair and on the ASQ National Certification Board.

Software Customer Satisfaction

Linda Westfall The Westfall Team

Abstract

Satisfying our customers is essential to staying in business in this modern world of global competition. We must satisfy and even delight our customers with the value of our software products and services to gain their loyalty and repeat business. Customer satisfaction is therefore a primary goal of process improvement programs. So how satisfied are our customers? One of the best ways to find out is to ask them using Customer Satisfaction Surveys. These surveys can provide management with the information they need to determine their customers' level of satisfaction with their software products and with the services associated with those products. Software engineers and other members of the technical staff can use the survey information to identify opportunities for ongoing process improvements and to monitor the impact of those improvements. This paper includes details on designing your own software customer satisfaction questionnaire, tracking survey results and example reports that turn survey data into useful information.

Focusing on Key Customer Quality Requirements

When creating a Customer Satisfaction Survey, our first objective is to get the customer to participate. If the survey deals with issues that the customer cares about, they are more likely to participate. We also want the survey to be short and easy to complete, which further increases the chance of a response. If the survey is long and detailed, the recipient is more likely to set it aside to complete later, only to have it disappear into the stacks of other papers on their desk. Therefore, the first step in creating a Customer Satisfaction Survey is to focus on the customer's key quality requirements.

When determining this list of key quality requirements, it can be helpful to start by looking to the software quality literature and selecting those factors that are relevant to your specific products or services.
For example, in his book Practical Software Metrics for Project Management and Process Improvement, Bob Grady discusses the FURPS+ quality model used at Hewlett-Packard. The elements of the FURPS+ model include Functionality, Usability, Reliability, Performance and Supportability. The plus (+) extends the acronym to include quality components that are specific to individual products. A second example is the ISO 9126 standard, Information Technology - Software Product Evaluation - Quality Characteristics and Guidelines for Their Use, which defines six quality characteristics for software product evaluation:

- Functionality
- Reliability
- Usability
- Efficiency
- Maintainability
- Portability

In his book Measuring Customer Satisfaction, Bob Hayes has an example of a support survey based on the quality requirements of availability, responsiveness, timeliness, completeness, and professionalism. These general lists from the literature can be tailored to match the quality requirements of a specific software product or service. For example, if your software product has extensive user interfaces and is sold internationally, the ability to easily change the product to meet the needs of

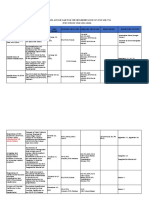

languages other than English may be a key quality requirement. An excellent source of information to use when making a tailoring decision is the people who created the software product or who provide the software services. They can have unique insight into their job functions and how they relate to meeting the customer's requirements. Another mechanism for determining the customer's quality requirements is the Critical Incident Approach describes by Bob Hayes in Measuring Customer Satisfaction. In this approach, customers are interviewed and each interviewee is asked to describe 5-10 positive and 5-10 negative encounters with the product or service that they have personally encountered in the past. The incidents are then used to generate categories of satisfaction items based on shared common words used in the incident description. For example, both positive and negative statements about how long they had to wait for help when they phoned the technical service support line would be grouped together into a length of wait for service category. These satisfaction items are then used to discover key customer quality requirements. For example, the "length of wait for service" item could be combined with the "ability to schedule a convenient field representative service call appointment" item and the "number of people transferred to" item, then summarized as the quality requirement called Availability of Service. Creating the Questionnaire After selecting the key quality requirements that will be the focus of the survey, the next step is to create the survey questionnaire. The questionnaire should start with an introduction that briefly states the purpose of the survey and includes the instructions for completing the survey. Figure 1 illustrates an example of a Software Customer Satisfaction Survey. The introduction is followed by the list of questions. 
This survey has two questions for each of the quality requirements of functionality (questions 9 & 10), usability (questions 7 & 8), initial reliability (questions 3 & 4), long-term reliability (questions 5 & 6), technical support (questions 11 & 12), installation (questions 1 & 2), documentation (questions 13 & 14) and training (questions 15 & 16). This adds redundancy to the questionnaire, but it also adds a level of reliability to the survey results. Just as we would not try to determine a person's actual math aptitude by asking them a single math question, asking a single question about each quality requirement reduces the reliability of predicting the actual satisfaction level from the measured level. The questionnaire also has two questions that judge the customer's overall satisfaction, one for the software product and one for the support services.

The questionnaire in Figure 1 uses a scale of 1 to 5 to measure the customer satisfaction level. We could have simply asked the question "Are you satisfied or dissatisfied?" However, from a statistical perspective, scales with only two response options are less reliable than scales with five response options. Studies have shown that reliability seems to level off after five scale points, so further refinement of the scale does not add much value. Note that this example also asks the customer to rank the importance of each item. I will discuss the use of the importance index later in this paper.

In addition to the basic questions on the questionnaire, demographic information should be gathered to aid in the detailed analysis of survey results (not shown in Figure 1). Again, the questions included in the demographic section should be tailored for individual organizations.
For example, the demographic information on a survey for a provider of large software systems that are used by multiple individuals within a customer's organization might include:

- Product being surveyed
- Current software release being used
- Function/role of the individual completing the survey (e.g., purchaser, user/operator, installer, analyst, engineer/maintainer)
- Experience level of the individual completing the survey with this software product (e.g., less than 6 months, 6 months - 2 years, 3 - 5 years, 5-10 years, more than 10 years)

Figure 1: Example - Software Customer Satisfaction Survey

The ABC Software Company is committed to quality and customer satisfaction. We would like to know your opinion of our XYZ software product. Please indicate your level of satisfaction with and the importance to you of each of the following characteristics of our product.

On a scale of 1 to 5, circle the appropriate number that indicates how satisfied you are with each of the following items. A score of 1 being very dissatisfied (VD) and 5 being very satisfied (VS).

On a scale of 1 to 5, circle the appropriate number that indicates the importance to you of each of the following items. A score of 1 being very unimportant (VU) and 5 being very important (VI). Note that items 17 & 18 do not have importance scores since they are overall satisfaction items.

In the Comment section after each question, please include reasons for your satisfaction or dissatisfaction with this item including specific examples where possible.

| # | Item | Satisfaction (VD 1 to 5 VS) | Importance (VU 1 to 5 VI) | Comments |
|---|---|---|---|---|
| 1 | Ease of installation of the software | 1 2 3 4 5 | 1 2 3 4 5 | |
| 2 | Completeness and accuracy of installation instructions | 1 2 3 4 5 | 1 2 3 4 5 | |
| 3 | Ability of the initially delivered software to function without errors or problems | 1 2 3 4 5 | 1 2 3 4 5 | |
| 4 | Ability of the initially delivered software to function without crashes or service interruptions | 1 2 3 4 5 | 1 2 3 4 5 | |
| 5 | Long term ability of the software to function without errors or problems | 1 2 3 4 5 | 1 2 3 4 5 | |
| 6 | Long term ability of the software to function without crashes or service interruptions | 1 2 3 4 5 | 1 2 3 4 5 | |
| 7 | Ability of the user to easily perform required tasks using the software | 1 2 3 4 5 | 1 2 3 4 5 | |
| 8 | User friendliness of the software | 1 2 3 4 5 | 1 2 3 4 5 | |
| 9 | Completeness of the software in providing all of the functions I need to do my job | 1 2 3 4 5 | 1 2 3 4 5 | |
| 10 | Technical leadership of the functionality of this product compared to other similar products | 1 2 3 4 5 | 1 2 3 4 5 | |
| 11 | Availability of the technical support | 1 2 3 4 5 | 1 2 3 4 5 | |
| 12 | Ability of technical support to solve my problems | 1 2 3 4 5 | 1 2 3 4 5 | |
| 13 | Completeness of the user documentation | 1 2 3 4 5 | 1 2 3 4 5 | |
| 14 | Usefulness of the user documentation | 1 2 3 4 5 | 1 2 3 4 5 | |
| 15 | Completeness of the training | 1 2 3 4 5 | 1 2 3 4 5 | |
| 16 | Usefulness of the training | 1 2 3 4 5 | 1 2 3 4 5 | |
| 17 | Overall, how satisfied are you with the XYZ software product? | 1 2 3 4 5 | | |
| 18 | Overall, how satisfied are you with the XYZ software product's support services? | 1 2 3 4 5 | | |

Finally, some of the most valuable information that comes from a Customer Satisfaction Survey may come to us not from the questions themselves but from the "comments" section. I recommend that each question include a "comments" section. This gives the respondee the opportunity to write down their comments as they are thinking about that specific question. Figure 1 demonstrates the placement of the comment areas, but on a real questionnaire more space would be provided for actual comments. I have found the following benefits from having a comment section for each question:

- Comments are more specific.
- The volume of comments received is greater.
- Comments are easier to interpret since they are in a specific context.

The last step in creating the questionnaire is to test it by conducting a pilot survey with a small group of customers. The pilot should test the questionnaire at the question level, ensuring that each question produces a reasonable distribution, that the meaning the customer places on each question matches the intended meaning and that each question is not ambiguous, overly restrictive or overly general. The pilot should also take a macro view of testing the questionnaire, looking for problems with flow and sequence of the questions, question order or grouping that induces a bias, and issues with the time, length and format of the questionnaire. Terry Vavra's book, Improving Your Measurement of Customer Satisfaction, provides information on testing for and avoiding these mistakes.

Who to Ask?

The responses to our surveys may be very dependent on the role the respondee has in relationship to our software product. For example, if we again look at large software systems for large multi-user companies, the list of individual customer stakeholders might include:

- Purchasing
- Analysts
- Installers
- Users/Operators
- Engineers/Maintainers

If, however, we are looking at shrink-wrapped software, we might be more interested in customer groups by personal vs. business use or by whether they are using the product on a stand-alone PC or on a network.

In their book, Exploring Requirements, Donald Gause and Gerald Weinberg outline a set of steps for determining user constituencies. The first step is to brainstorm a list of all possible users. This includes any group that is affected by or affects the product. The second step is to reduce this list by classifying each group as friendly, unfriendly or one to ignore. For example, users who are trying to obtain unauthorized information from the system would be classified as unfriendly. Typically, for the purposes of Customer Satisfaction Surveys, we want to focus our efforts on those groups classified as friendly.

There are several ways of dealing with this diversity in Customer Satisfaction Surveys. First, you may want to sample from your entire customer population and simply ask demographic questions like those above to help analyze the responses by customer group. Second, you may want to limit your survey to only one customer group. For example, if you notice that your sales have fallen in a particular market, you may want to survey only customers in that market.

When sending questionnaires to a sample set of customers, your goal is to generalize the responses into information about the entire population of customers. In order to do this, you need to use random sampling techniques when selecting the sample. If you need to ensure that you have adequate coverage of all customer groups, you may need to use more sophisticated selection techniques like stratified sampling. Both Bob Hayes' and Terry Vavra's books discuss sampling techniques.

Designing a Customer Satisfaction Database

Conducting a Customer Satisfaction Survey results in the accumulation of large amounts of data. Every item on the survey will have two responses (i.e., satisfaction level and importance) and potentially a verbose response to the "comment" area. Multiply that by the number of questions and by the number of respondees. Add the demographic data and the volume of data can become huge. I highly recommend that an automated database be created to record and manipulate this data. A well-designed database will also allow for easy data analysis and reporting of summarized information.

The following paragraphs describe the basic record structure in an example relational customer satisfaction database. For smaller, simple surveys, this database could be implemented using a spreadsheet; however, for large amounts of data I recommend that a database tool be used to implement the database.

Figure 2 illustrates the relationships between the records in an example database. There would be one customer record for each of the company's major customers (e.g., a supplier of telecommunications equipment might have major customers of Sprint, Bell South and Verizon).

Figure 2: Example Customer Satisfaction Survey Database Design

Customer Record
(The customer record is not necessary if each respondee is a unique customer)

- A unique customer identifier
- Demographic information about the customer (e.g., role, location, products purchased by the customer, sales volume)

Survey Record

- A unique survey identifier
- Demographic information about the respondee who completed the survey (e.g., name, role and location, experience level with the product)
- Demographic information about the product being surveyed (e.g., product name and type, software release identifier)
- Software product associated with this survey
- Date of the survey

Response Record

- A unique response identifier
- Score for the satisfaction level
- Score for the importance level

Question Record

- A unique question identifier
- Question text

Comment Record

- A unique comment identifier
- Comment text

A survey record is created for each Customer Satisfaction Survey returned or interview completed. Multiple individuals at each company could complete one or more surveys, so there is a one-to-many relationship between a customer record and survey records. There is a response record for each question asked on the survey, creating a one-to-many relationship between the survey and response records, and each response record is linked to exactly one question record. This design allows different questions to be asked of different survey participants (e.g., installers might be asked a different set of questions than users) and provides the flexibility to modify the questions over time without redesigning the database. The response record also has a one-to-one relationship with a comment record if text was entered in the comment portion of the questionnaire.
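The record structure described above could be sketched as a small relational schema. This is only an illustration under stated assumptions: the table and column names, the use of SQLite through Python's standard `sqlite3` module, and the sample rows are all hypothetical and are not taken from the example database in Figure 2.

```python
import sqlite3

# Hypothetical schema mirroring the five record types described in the text.
SCHEMA = """
CREATE TABLE customer (
    customer_id INTEGER PRIMARY KEY,
    name        TEXT NOT NULL,
    location    TEXT
);
CREATE TABLE question (
    question_id INTEGER PRIMARY KEY,
    text        TEXT NOT NULL
);
CREATE TABLE survey (
    survey_id        INTEGER PRIMARY KEY,
    customer_id      INTEGER REFERENCES customer(customer_id),
    respondee_role   TEXT,
    product          TEXT,
    software_release TEXT,
    survey_date      TEXT
);
CREATE TABLE response (
    response_id  INTEGER PRIMARY KEY,
    survey_id    INTEGER NOT NULL REFERENCES survey(survey_id),
    question_id  INTEGER NOT NULL REFERENCES question(question_id),
    satisfaction INTEGER CHECK (satisfaction BETWEEN 1 AND 5),
    importance   INTEGER CHECK (importance BETWEEN 1 AND 5)  -- NULL for overall items
);
CREATE TABLE comment (
    comment_id  INTEGER PRIMARY KEY,
    response_id INTEGER NOT NULL UNIQUE REFERENCES response(response_id),
    text        TEXT NOT NULL
);
"""


def create_database(path=":memory:"):
    """Create an empty survey database and return the open connection."""
    conn = sqlite3.connect(path)
    conn.execute("PRAGMA foreign_keys = ON")  # SQLite leaves this off by default
    conn.executescript(SCHEMA)
    return conn


def add_response(conn, survey_id, question_id, satisfaction,
                 importance=None, comment=None):
    """Store one response and, if text was entered, its comment record."""
    cur = conn.execute(
        "INSERT INTO response (survey_id, question_id, satisfaction, importance) "
        "VALUES (?, ?, ?, ?)",
        (survey_id, question_id, satisfaction, importance),
    )
    response_id = cur.lastrowid
    if comment:
        conn.execute(
            "INSERT INTO comment (response_id, text) VALUES (?, ?)",
            (response_id, comment),
        )
    return response_id


# Hypothetical sample data for one returned survey.
conn = create_database()
conn.execute("INSERT INTO customer (customer_id, name) VALUES (1, 'Example Customer')")
conn.executemany(
    "INSERT INTO question (question_id, text) VALUES (?, ?)",
    [(1, "Ease of installation of the software"),
     (2, "Completeness and accuracy of installation instructions")],
)
conn.execute(
    "INSERT INTO survey (survey_id, customer_id, respondee_role, product, "
    "software_release, survey_date) VALUES (1, 1, 'installer', 'XYZ', '2.0', '2001-03-31')"
)
add_response(conn, 1, 1, 4, 5, comment="Hypothetical comment text.")
add_response(conn, 1, 2, 3, 4)
```

Because questions live in their own table, a new or reworded question becomes a new row rather than a schema change, which is the flexibility the design is meant to provide.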

Reporting Survey Results - Turning Data Into Information

As mentioned above, conducting a Customer Satisfaction Survey results in the accumulation of large amounts of data. The trick is to convert this data into information that can be used by managers and members of the technical staff. I have found three reports to be very useful. The first report summarizes the current satisfaction/importance level for each key quality requirement. The second report examines the distribution of the detailed response data. The third report trends the satisfaction level for each key quality requirement over time.

Summary of Current Satisfaction Levels

Figure 3 illustrates an example of the first type of metric report, which summarizes the survey results and indicates the current customer satisfaction level with each of the quality requirements. To produce this report, the survey responses to all questions related to a given quality requirement are averaged for satisfaction level and for importance. For each requirement, these averaged values are plotted as a numbered bubble on an x-y graph. The number corresponds to the requirement number on the left. The dark blue area on this graph indicates the long-term goal of having an average satisfaction score of better than 4 for all quality requirements. The lighter blue area indicates a shorter-term goal of having an average score better than 3. Green bubbles indicate scores that meet the long-term goal, yellow bubbles indicate scores that meet the short-term goal and red bubbles indicate scores outside the goal. This report allows the user to quickly identify quality requirements that are candidates for improvement efforts.
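The averaging and goal bands described above could be computed along the following lines. This is a minimal sketch: the question-to-requirement mapping follows the example survey in Figure 1, while the function names, the tuple layout of a response and the sample scores are assumptions made for illustration.

```python
from statistics import mean

# Question numbers for each quality requirement in the Figure 1 example survey.
REQUIREMENT_QUESTIONS = {
    "Installation": (1, 2),
    "Initial software reliability": (3, 4),
    "Long term reliability": (5, 6),
    "Usability of software": (7, 8),
    "Functionality of software": (9, 10),
    "Technical support": (11, 12),
    "Documentation": (13, 14),
    "Training": (15, 16),
}


def goal_zone(average_satisfaction):
    """Colour of the bubble: long-term goal > 4, short-term goal > 3."""
    if average_satisfaction > 4:
        return "green"
    if average_satisfaction > 3:
        return "yellow"
    return "red"


def summarize(responses):
    """Average satisfaction and importance per requirement.

    responses: sequence of (question_number, satisfaction, importance) tuples
    (a hypothetical layout). Returns {requirement: (satisfaction, importance, zone)}.
    """
    responses = list(responses)
    summary = {}
    for requirement, questions in REQUIREMENT_QUESTIONS.items():
        rows = [r for r in responses if r[0] in questions]
        if not rows:
            continue  # no answers for this requirement
        satisfaction = mean(r[1] for r in rows)
        importance = mean(r[2] for r in rows)
        summary[requirement] = (satisfaction, importance, goal_zone(satisfaction))
    return summary


# Hypothetical responses from a single respondee.
sample = [(1, 5, 4), (2, 4, 4), (3, 2, 5), (4, 3, 5)]
report = summarize(sample)
```

Note that the zone depends only on the satisfaction average; importance is carried along so it can be plotted on the other axis.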

[Bubble chart: Customer satisfaction survey results. Axes: Satisfaction and Importance, with shaded Goal regions. Numbered bubbles: 1. Installation; 2. Initial software reliability; 3. Long term reliability; 4. Usability of software; 5. Functionality of software; 6. Technical support; 7. Documentation; 8. Training]

Figure 3: Example of Summary Report for Current Satisfaction Levels

Note that the long and short-term goals do not take importance into consideration. Our goal is to increase customer satisfaction, not to increase the importance of any quality requirement in the eyes of the customer. If the importance level of a requirement is low, then one of two things may be true. First, we may have misjudged the importance level of that requirement to the customer and it may not be a key quality requirement. In this case we may want to consider dropping it from our future surveys. On the other hand, the requirement could be important but just not high on the customer's priorities at this time. So how do we tell the difference? We do this by running a correlation analysis between the overall satisfaction score and the corresponding individual scores for that requirement. This will validate the importance of the quality dimension in predicting the overall customer satisfaction. Bob Hayes' book, Measuring Customer Satisfaction, discusses this correlation analysis in greater detail.

From the report in Figure 3, it is possible to quickly identify Initial Software Reliability (bubble 2) and Documentation (bubble 7) as primary opportunities to improve customer satisfaction. By polling importance as well as satisfaction level in our survey, we can see that even though Documentation has a poorer satisfaction level, Initial Software Reliability is much more important to our customers and therefore would probably be given a higher priority.

Detailed Data Analysis

Figure 4 illustrates an example of the second type of metrics report, which shows the distribution of satisfaction scores for three questions. Graphs where the scores are tightly clustered around the mean (question A) indicate a high level of agreement amongst the customers on their satisfaction level. Distributions that are widely spread (question B) and particularly bi-modal distributions (question C) are candidates for further detailed analysis.

When analyzing the current satisfaction level, the reports in Figures 3 and 4 can be produced for various sets of demographic data. For example, versions of these graphs can be produced for each customer, each software release or each respondee role. These results could then be compared with each other and with overall satisfaction levels to determine if the demographics had any impact on the results. For example:

- Is there a particular customer who is unhappy with our technical support?
- Has the most recent release of the software increased customer satisfaction with our software's functionality?
- Are the installers dissatisfied with our documentation while the users are happy with it?

This type of analysis can require detailed detective work and creativity in deciding what combinations to examine. However, having an automated database and reporting mechanism makes it easy to perform the various extractions needed for this type of investigation.
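One such extraction, comparing each demographic group with the overall satisfaction level, could look roughly like this. The record layout (a dict with a `satisfaction` score plus demographic fields such as `role`) and the sample scores are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def mean_by_group(records, group_key):
    """Average satisfaction for each value of one demographic field."""
    groups = defaultdict(list)
    for record in records:
        groups[record[group_key]].append(record["satisfaction"])
    return {group: mean(scores) for group, scores in groups.items()}


def difference_from_overall(records, group_key):
    """How far each group's average sits above (+) or below (-) the overall average."""
    overall = mean(record["satisfaction"] for record in records)
    return {
        group: group_mean - overall
        for group, group_mean in mean_by_group(records, group_key).items()
    }


# Hypothetical documentation scores, tagged with the respondee's role.
documentation_scores = [
    {"role": "installer", "satisfaction": 2},
    {"role": "installer", "satisfaction": 2},
    {"role": "user", "satisfaction": 4},
    {"role": "user", "satisfaction": 4},
]
by_role = mean_by_group(documentation_scores, "role")
gaps = difference_from_overall(documentation_scores, "role")
```

With these sample values the overall average of 3 hides the split between installers and users, which is the kind of pattern the demographic breakdown is meant to surface.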

[Three histograms of satisfaction scores, titled Question A, Question B and Question C; vertical axis from 0 to 40]

Figure 4: Example of Question Response Distribution Report for Current Satisfaction Levels
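The distributions behind a report like Figure 4 could be tabulated and roughly classified as follows. This is a sketch only: the spread threshold of 1.0 and the simple peak-counting test for bi-modality are assumptions chosen for illustration, not a method taken from the paper, and the sample scores are invented.

```python
from collections import Counter
from statistics import pstdev


def distribution(scores):
    """Number of responses at each scale point 1..5."""
    counts = Counter(scores)
    return [counts.get(point, 0) for point in range(1, 6)]


def classify(scores, spread_threshold=1.0):
    """Label a question's responses as clustered, widely spread or bi-modal.

    A bar counts as a peak when it is strictly higher than both neighbours
    (a crude heuristic; plateaus are not detected as peaks).
    """
    bars = distribution(scores)
    peaks = [
        i for i in range(5)
        if bars[i] > 0
        and (i == 0 or bars[i] > bars[i - 1])
        and (i == 4 or bars[i] > bars[i + 1])
    ]
    if len(peaks) >= 2:
        return "bi-modal"
    if pstdev(scores) > spread_threshold:
        return "widely spread"
    return "tightly clustered"


# Hypothetical score sets shaped like questions A, B and C.
question_a = [2] * 1 + [3] * 5 + [4] * 30 + [5] * 4
question_b = [1] * 8 + [2] * 8 + [3] * 8 + [4] * 8 + [5] * 8
question_c = [1] * 10 + [2] * 2 + [4] * 3 + [5] * 12
```

Questions flagged as widely spread or bi-modal are the ones worth re-running by customer, release or respondee role.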

The "comment" data can also be very valuable when analyzing the survey data for root causes of customer dissatisfaction. This is especially true if there are recurring themes in the comments, for example, if one particular feature is mentioned repeatedly in the Functionality comments or if multiple customers mention the unavailability of technical support on weekends.

Satisfaction Level Trends Over Time

Another way to summarize the results of our satisfaction surveys is to look at trends over time. Figure 5 illustrates an example of the third type of metric report, which trends the satisfaction level for Features Promised vs. Delivered based on quarterly surveys conducted over a period of 18 months. Again, the dark and light blue areas on this graph indicate the long and short-term satisfaction level goals. This particular graph has a single line indicating the overall satisfaction level for the product. However, when analyzing these trends, the demographic data can be used to create multi-line graphs where each line represents a separate classification (e.g., a customer, a software release or a respondee role). This allows for easy comparisons to determine if the demographics had any impact on the trends.
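The data for such a multi-line trend graph could be assembled as sketched below. The quarter labels follow the axis of Figure 5; the record layout, the function name and the sample scores are assumptions for illustration.

```python
from collections import defaultdict
from statistics import mean

# Quarter labels in chronological order, as they appear on the Figure 5 axis.
QUARTERS = ["3Q00", "4Q00", "1Q01", "2Q01", "3Q01", "4Q01"]


def trend_lines(records, quarters=QUARTERS):
    """Average satisfaction per quarter for each classification.

    records: sequence of (quarter, classification, satisfaction) tuples
    (a hypothetical layout). Returns {classification: [mean or None, ...]}
    with one entry per quarter; None marks a quarter with no responses.
    """
    scores = defaultdict(lambda: defaultdict(list))
    for quarter, classification, satisfaction in records:
        scores[classification][quarter].append(satisfaction)
    return {
        classification: [
            mean(by_quarter[q]) if by_quarter[q] else None for q in quarters
        ]
        for classification, by_quarter in scores.items()
    }


# Hypothetical responses tagged with the respondee's role.
sample = [
    ("3Q00", "user", 3), ("3Q00", "user", 4), ("4Q00", "user", 4),
    ("3Q00", "installer", 2),
]
lines = trend_lines(sample)
```

Passing the same label for every record (for example `"overall"`) yields the single-line version of the graph.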

[Line chart: Features Promised vs. Delivered. Vertical axis: Satisfaction Level, 1 to 5, with shaded Goal regions. Horizontal axis: 3Q00, 4Q00, 1Q01, 2Q01, 3Q01, 4Q01]

Figure 5: Example of Reporting Satisfaction Level Trends Over Time

One note of caution is that to trend the results over time, the survey instrument must remain unchanged in the area being trended. Any rewording of a question can have a major impact on results, and historic responses from before the change should not be used in the trend.

The primary purpose of trend analysis is to determine if the improvements we have made to our products, services or processes had an impact on the satisfaction level of our customers. It should be remembered, however, that satisfaction is a trailing indicator. Customers have long memories; the dismal initial quality of a software version three releases back may still impact their perception of our product even if the last two versions have been superior. We should not get discouraged if we do not see the dramatic jumps in satisfaction we might expect with dramatic jumps in quality.

Customer Satisfaction is a subjective measure. It is a measure of perception, not reality, although when it comes to a happy customer, perception is more important than reality. One phenomenon that I have noticed is that as our products, services and processes have improved, the expectations of our customers have increased. They continue to demand bigger, better, faster. This can result in a flat trend even though we are continuously improving or, worse still, a declining graph because we are not keeping up with the increases in our customers' expectations. Even though this can be discouraging, it is valuable information that we need to know in the very competitive world of software.

REFERENCES:

Bob E. Hayes, Measuring Customer Satisfaction: Survey Design, Use, and Statistical Analysis Methods, 2nd Edition, ASQ Quality Press, Milwaukee, Wisconsin, 1998.

Terry G. Vavra, Improving Your Measurement of Customer Satisfaction: A Guide to Creating, Conducting, Analyzing, and Reporting Customer Satisfaction Measurement, ASQ Quality Press, Milwaukee, Wisconsin, 1997.

Donald C. Gause & Gerald M. Weinberg, Exploring Requirements: Quality Before Design, Dorset House Publishing, New York, New York, 1989.

Robert Grady, Practical Software Metrics for Project Management and Process Improvement, PTR Prentice Hall, Englewood Cliffs, New Jersey, 1992.

Norman Fenton, Robin Whitty & Yoshinori Iizuka, Software Quality Assurance and Measurement: A Worldwide Perspective, International Thomson Computer Press, London, England, 1995.

Das könnte Ihnen auch gefallen

- Software As A Secure Service A Complete Guide - 2020 EditionVon EverandSoftware As A Secure Service A Complete Guide - 2020 EditionNoch keine Bewertungen

- Customer Satisfaction SurveysDokument9 SeitenCustomer Satisfaction Surveysajjdev7101542100% (1)

- Windows Mobile Software Development A Complete Guide - 2020 EditionVon EverandWindows Mobile Software Development A Complete Guide - 2020 EditionNoch keine Bewertungen

- Mobile Backend As A Service A Complete Guide - 2020 EditionVon EverandMobile Backend As A Service A Complete Guide - 2020 EditionNoch keine Bewertungen

- Software Reliability Testing A Complete Guide - 2020 EditionVon EverandSoftware Reliability Testing A Complete Guide - 2020 EditionNoch keine Bewertungen

- Software Product Line A Complete Guide - 2020 EditionVon EverandSoftware Product Line A Complete Guide - 2020 EditionNoch keine Bewertungen

- Mobile Application Development Platform A Complete Guide - 2019 EditionVon EverandMobile Application Development Platform A Complete Guide - 2019 EditionNoch keine Bewertungen

- Mobile Service Level Management Software A Complete Guide - 2020 EditionVon EverandMobile Service Level Management Software A Complete Guide - 2020 EditionNoch keine Bewertungen

- Application Platform As A Service A Complete Guide - 2020 EditionVon EverandApplication Platform As A Service A Complete Guide - 2020 EditionNoch keine Bewertungen

- Customer Service Software A Complete Guide - 2020 EditionVon EverandCustomer Service Software A Complete Guide - 2020 EditionNoch keine Bewertungen

- Software As Service A Complete Guide - 2020 EditionVon EverandSoftware As Service A Complete Guide - 2020 EditionNoch keine Bewertungen

- Mobile Enterprise Application Platform A Complete Guide - 2020 EditionVon EverandMobile Enterprise Application Platform A Complete Guide - 2020 EditionNoch keine Bewertungen

- Application Software Market A Complete Guide - 2019 EditionVon EverandApplication Software Market A Complete Guide - 2019 EditionNoch keine Bewertungen

- Software Market Applications A Complete Guide - 2020 EditionVon EverandSoftware Market Applications A Complete Guide - 2020 EditionNoch keine Bewertungen

- Maintenance Of Software A Complete Guide - 2020 EditionVon EverandMaintenance Of Software A Complete Guide - 2020 EditionNoch keine Bewertungen

- Software Plus Services A Complete Guide - 2020 EditionVon EverandSoftware Plus Services A Complete Guide - 2020 EditionNoch keine Bewertungen

- Live Support Software A Complete Guide - 2020 EditionVon EverandLive Support Software A Complete Guide - 2020 EditionNoch keine Bewertungen

- Mobile And Endpoint A Complete Guide - 2019 EditionVon EverandMobile And Endpoint A Complete Guide - 2019 EditionNoch keine Bewertungen

- Data Quality Issues In Financial Services A Complete Guide - 2020 EditionVon EverandData Quality Issues In Financial Services A Complete Guide - 2020 EditionNoch keine Bewertungen

- Firmware As A Service A Complete Guide - 2020 EditionVon EverandFirmware As A Service A Complete Guide - 2020 EditionNoch keine Bewertungen

- Mobile App Development Platforms A Complete Guide - 2019 EditionVon EverandMobile App Development Platforms A Complete Guide - 2019 EditionNoch keine Bewertungen
