
Chapter 2 Generating Random Numbers with Specified Distributions

Simulation and valuation of finance instruments require numbers with specified distributions. For example, in Section 1.6 we have used numbers $Z$ drawn from a standard normal distribution, $Z \sim \mathcal{N}(0, 1)$. If possible the numbers should be random. But the generation of "random numbers" by digital computers, after all, is done in a deterministic and entirely predictable way. If this point is to be stressed, one uses the term pseudo-random.¹

Computer-generated random numbers mimic the properties of true random numbers as much as possible. This is discussed for uniformly distributed numbers in Section 2.1. Suitable transformations generate normally distributed numbers (Sections 2.2, 2.3). Section 2.3 includes the vector case, where normally distributed numbers are calculated with prescribed correlation.

Another approach is to dispense with randomness and to generate quasi-random numbers, which aim at avoiding one disadvantage of random numbers, namely, the potential lack of equidistributedness. The resulting low-discrepancy numbers will be discussed in Section 2.5. These numbers are used for the deterministic Monte Carlo integration (Section 2.4).

Definition 2.1 (sample from a distribution)
A sequence of numbers is called a sample from $F$ if the numbers are independent realizations of a random variable with distribution function $F$.

If $F$ is the uniform distribution over the interval $[0, 1)$ or $[0, 1]$, then we call the samples from $F$ uniform deviates (variates), notation $\sim \mathcal{U}[0, 1]$. If $F$ is the standard normal distribution then we call the samples from $F$ standard normal deviates (variates); as notation we use $\sim \mathcal{N}(0, 1)$. The basis of the random-number generation is to draw uniform deviates.

¹ Since in our context the predictable origin is clear we omit the modifier "pseudo," and hereafter use the term "random number." Similarly we talk about randomness of these numbers when we mean apparent randomness.

R.U. Seydel, Tools for Computational Finance, Universitext,

DOI 10.1007/978-1-4471-2993-6_2, Springer-Verlag London Limited 2012


2.1 Uniform Deviates

A standard approach to calculate uniform deviates is provided by linear congruential generators.

2.1.1 Linear Congruential Generators

Choose integers $M$, $a$, $b$, with $a, b < M$, $a \neq 0$. For $N_0 \in \mathbb{N}$ a sequence of integers $N_i$ is defined by

Algorithm 2.2 (linear congruential generator)
Choose $N_0$.
For $i = 1, 2, \ldots$ calculate
$$N_i = (a N_{i-1} + b) \bmod M \qquad (2.1)$$

The modulo congruence $N = Y \bmod M$ between two numbers $N$ and $Y$ is an equivalence relation [Gen98]. The number $N_0$ is called the seed. Numbers $U_i \in [0, 1)$ are defined by
$$U_i = N_i / M , \qquad (2.2)$$
and will be taken as uniform deviates. Whether the numbers $U_i$ are suitable will depend on the choice of $M$, $a$, $b$ and will be discussed next.

Properties 2.3 (periodicity)
(a) $N_i \in \{0, 1, \ldots, M-1\}$
(b) The $N_i$ are periodic with period $\le M$.
(Because there are not $M + 1$ different $N_i$. So two in $\{N_0, \ldots, N_M\}$ must be equal, $N_i = N_{i+p}$ with $p \le M$.)

Obviously, some peculiarities must be excluded. For example, $N = 0$ must be ruled out in case $b = 0$, because otherwise $N_i = 0$ would repeat. In case $a = 1$ the generator settles down to $N_n = (N_0 + nb) \bmod M$. This sequence is too easily predictable. Various other properties and requirements are discussed in the literature, in particular in [Knu95]. In case the period is $M$, the numbers $U_i$ are distributed "evenly" when exactly $M$ numbers are needed. Then each grid point on a mesh on $[0, 1]$ with mesh size $\frac{1}{M}$ is occupied once.

After these observations we start searching for good choices of $M$, $a$, $b$. There are numerous possible choices with bad properties. For serious computations we recommend relying on suggestions from the literature. [PrTVF92] presents a table of "quick and dirty" generators, for example, $M = 244944$, $a = 1597$, $b = 51749$. Criteria are needed to decide which of the many possible generators are recommendable.
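Algorithm 2.2 and Property 2.3 can be tried out directly. The following Python sketch is only an illustration: the function names `lcg` and `lcg_period` are our own, and the period is found by brute force rather than by the number-theoretic criteria from the literature.

```python
from itertools import islice


def lcg(seed, a, b, m):
    """Yield U_i = N_i / M with N_i = (a * N_{i-1} + b) mod M, for i = 1, 2, ..."""
    n = seed
    while True:
        n = (a * n + b) % m
        yield n / m


def lcg_period(seed, a, b, m):
    """Brute-force cycle length of the integer sequence N_i started at the seed."""
    first_seen = {}
    n, i = seed, 0
    while n not in first_seen:
        first_seen[n] = i
        n = (a * n + b) % m
        i += 1
    return i - first_seen[n]


# "Quick and dirty" parameters quoted in the text from [PrTVF92].
M, A, B = 244944, 1597, 51749
first_five = list(islice(lcg(1, A, B, M), 5))
period = lcg_period(1, A, B, M)
```

For this parameter set the search should report the full period $M$, whereas a small generator such as $N_i = 2N_{i-1} \bmod 11$ only reaches period 10 because $N = 0$ is excluded.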


2.1.2 Quality of Generators

What are good random numbers? A practical answer is the requirement that

the numbers should meet all aims, or rather pass as many tests as possible.

The requirements on good number generators can roughly be divided into

three groups.

The first requirement is that of a large period. In view of Property 2.3 the number $M$ must be as large as possible, because a small set of numbers makes the outcome easier to predict, in contrast to randomness. This leads to selecting $M$ close to the largest integer machine number. But a period $p$ close to $M$ is only achieved if $a$ and $b$ are chosen properly. Criteria for relations among $M$, $p$, $a$, $b$ have been derived by number-theoretic arguments. This is outlined in [Rip87], [Knu95]. For 32-bit computers, a common choice has been $M = 2^{31} - 1$, $a = 16807$, $b = 0$.

A second group of requirements are the statistical tests that check whether the numbers are distributed as intended. The simplest of such tests evaluates the sample mean $\hat\mu$ and the sample variance $\hat s^2$ (B1.11) of the calculated random variates, and compares them to the desired values of $\mu$ and $\sigma^2$. (Recall $\mu = 1/2$ and $\sigma^2 = 1/12$ for the uniform distribution.) Another simple test is to check correlations. For example, it would not be desirable if small numbers are likely to be followed by small numbers.

A slightly more involved test checks how well the probability distribution is approximated. This works for general distributions (see Exercise 2.14). Here we briefly summarize an approach for uniform deviates. Calculate $j$ samples from a random number generator, and investigate how the samples distribute on the unit interval. To this end, divide the unit interval into subintervals of equal length $\Delta U$, and denote by $j_k$ the number of samples that fall into the $k$th subinterval
$$k \Delta U \le U < (k + 1) \Delta U .$$
Then $j_k / j$ should be close to the desired probability, which for this setup is $\Delta U$. Hence a plot of the quotients
$$\frac{j_k}{j \Delta U} \quad \text{for all } k$$
against $k \Delta U$ should be a good approximation of 1, the density of the uniform distribution. This procedure is just the simplest test; for more ambitious tests, consult [Knu95].
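The quotient test just described takes only a few lines. The sketch below is an illustration under assumptions of our own: it tests Python's built-in generator with an arbitrarily chosen seed and 20 subintervals, and the helper name `bin_quotients` is hypothetical.

```python
import random


def bin_quotients(samples, bins):
    """Return the quotients j_k / (j * dU) for equal-width bins on [0, 1)."""
    d_u = 1.0 / bins
    counts = [0] * bins
    for u in samples:
        k = min(int(u * bins), bins - 1)  # index k with k*dU <= u < (k+1)*dU
        counts[k] += 1
    j = len(samples)
    return [j_k / (j * d_u) for j_k in counts]


rng = random.Random(12345)  # fixed seed, chosen arbitrarily
samples = [rng.random() for _ in range(100_000)]
quotients = bin_quotients(samples, bins=20)
largest_deviation = max(abs(q - 1.0) for q in quotients)
```

A small `largest_deviation` indicates that the quotients approximate the uniform density 1; how small is "small enough" is a statistical question that this sketch does not answer.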

The third group of tests is to check how well the random numbers distribute in higher-dimensional spaces. This issue of the lattice structure is discussed next. We derive a priori analytical results on where the random numbers produced by Algorithm 2.2 are distributed.


2.1.3 Random Vectors and Lattice Structure

Random numbers $N_i$ can be arranged in $m$-tuples $(N_i, N_{i+1}, \ldots, N_{i+m-1})$ for $i \ge 1$. Then the tuples or the corresponding points $(U_i, \ldots, U_{i+m-1}) \in [0, 1)^m$ are analyzed with respect to correlation and distribution. The sequences defined by the generator of Algorithm 2.2 lie on $(m-1)$-dimensional hyperplanes. This statement is trivial since it holds for the $M$ parallel planes through $U = i/M$, $i = 0, \ldots, M-1$. But if all points fall on only a small number of parallel hyperplanes (with large empty gaps in between), then the generator would be impractical in many applications. Next we analyze whether such unfavorable planes exist for the generator, restricting ourselves to the case $m = 2$.

For $m = 2$ the hyperplanes are straight lines, and are defined by $z_0 N_{i-1} + z_1 N_i = \lambda$, with parameters $z_0$, $z_1$, $\lambda$. The modulus operation can be written
$$N_i = (a N_{i-1} + b) \bmod M = a N_{i-1} + b - kM \quad \text{for } kM \le a N_{i-1} + b < (k + 1)M ,$$
$k$ an integer, $k = k(i)$. A side calculation for arbitrary $z_0$, $z_1$ shows
$$\begin{aligned}
z_0 N_{i-1} + z_1 N_i &= z_0 N_{i-1} + z_1 (a N_{i-1} + b - kM) \\
&= N_{i-1} (z_0 + a z_1) + z_1 b - z_1 k M \\
&= M \underbrace{\left( N_{i-1} \frac{z_0 + a z_1}{M} - z_1 k \right)}_{=: c} + z_1 b .
\end{aligned}$$

We divide by $M$ and obtain the equation of a straight line in the $(U_{i-1}, U_i)$-plane, namely,
$$z_0 U_{i-1} + z_1 U_i = c + z_1 b M^{-1} , \qquad (2.3)$$
one line for each parameter $c$. The points calculated by Algorithm 2.2 lie on these straight lines. To eliminate the seed we take $i > 1$. For each tuple $(z_0, z_1)$, the equation (2.3) defines a family of parallel straight lines, one for each number out of the finite set of $c$'s. The question is whether there exists a tuple $(z_0, z_1)$ such that only few of the straight lines cut the square $[0, 1)^2$. In this case wide areas of the square would be free of random points, which violates the requirement of a "uniform" distribution of the points. The minimum number of parallel straight lines (hyperplanes) cutting the square, or equivalently the maximum distance between them, serve as measures of the equidistributedness. We now analyze the number of straight lines, searching for the worst case.

For integers $(z_0, z_1)$ satisfying
$$z_0 + a z_1 = 0 \bmod M \qquad (2.4)$$
the parameter $c$ is integer. By solving (2.3) for $c = z_0 U_{i-1} + z_1 U_i - z_1 b M^{-1}$ and applying $0 \le U < 1$ we obtain the maximal interval $I_c$ such that for each integer $c \in I_c$ its straight line cuts or touches the square $[0, 1)^2$. We count how many such $c$'s exist, and have the information we need. For some constellations of $a$, $M$, $z_0$ and $z_1$ it may be possible that the points $(U_{i-1}, U_i)$ lie on very few of these straight lines!

Example 2.4 $N_i = 2 N_{i-1} \bmod 11$ (that is, $a = 2$, $b = 0$, $M = 11$)
We choose $z_0 = -2$, $z_1 = 1$, which is one tuple satisfying (2.4), and investigate the family (2.3) of straight lines
$$-2 U_{i-1} + U_i = c$$
in the $(U_{i-1}, U_i)$-plane. For $U_i \in [0, 1)$ we have $-2 < c < 1$. In view of (2.4) $c$ is integer and so only the two integers $c = -1$ and $c = 0$ remain. The two corresponding straight lines cut the interior of $[0, 1)^2$. As Figure 2.1 illustrates, the points generated by the algorithm form a lattice. All points on the lattice lie on these two straight lines. The figure lets us discover also other parallel straight lines such that all points are caught (for other tuples $z_0$, $z_1$). The practical question is: What is the largest gap? (see Exercise 2.1)

Fig. 2.1. The points $(U_{i-1}, U_i)$ of Example 2.4 (both axes range from 0 to 1)


Example 2.5 $N_i = (1229 N_{i-1} + 1) \bmod 2048$
The requirement of equation (2.4),
$$\frac{z_0 + 1229 z_1}{2048} \quad \text{integer},$$
is satisfied by $z_0 = -1$, $z_1 = 5$, because
$$-1 + 1229 \cdot 5 = 6144 = 3 \cdot 2048 .$$
For $c$ from (2.3) and $U_i \in [0, 1)$ we have
$$-1 - \frac{5}{2048} < c < 5 - \frac{5}{2048} .$$
All points $(U_{i-1}, U_i)$ lie on only six straight lines, with $c \in \{-1, 0, 1, 2, 3, 4\}$, see Figure 2.2. On the "lowest" straight line ($c = -1$) there is only one point. The distance between straight lines measured along the vertical $U_i$ axis is $\frac{1}{z_1} = \frac{1}{5}$.

Fig. 2.2. The points $(U_{i-1}, U_i)$ of Example 2.5 (both axes range from 0 to 1)
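The claims of Example 2.5 can be checked by enumerating one full cycle of the generator. The following Python sketch is a verification aid of our own (the function name `line_parameters` is hypothetical); it evaluates $c = (z_0 N_{i-1} + z_1 N_i - z_1 b)/M$ from (2.3) in integer arithmetic.

```python
from collections import Counter

M, A, B = 2048, 1229, 1      # generator of Example 2.5
Z0, Z1 = -1, 5               # tuple satisfying (2.4)


def line_parameters(seed=0):
    """Count, for each integer c, the pairs (N_{i-1}, N_i) on line c of (2.3)."""
    counts = Counter()
    prev = seed
    for _ in range(M):                               # one full cycle
        cur = (A * prev + B) % M
        numerator = Z0 * prev + Z1 * cur - Z1 * B    # equals c * M
        assert numerator % M == 0                    # c is an integer
        counts[numerator // M] += 1
        prev = cur
    return counts


points_per_line = line_parameters()
```

The keys of `points_per_line` are the occupied values of $c$, and the entry for $c = -1$ counts the single point on the lowest line.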

Higher-dimensional vectors ($m > 2$) are analyzed analogously. The generator called RANDU
$$N_i = a N_{i-1} \bmod M , \quad \text{with } a = 2^{16} + 3, \; M = 2^{31}$$
may serve as example. Its random points in the cube $[0, 1)^3$ lie on only 15 planes (see Exercise 2.2). For many applications this must be seen as a severe defect.
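The plane structure of RANDU can be observed numerically. The sketch below relies on a relation that is not stated in the text above but follows from $a^2 = 2^{32} + 6 \cdot 2^{16} + 9 \equiv 6a - 9 \pmod{2^{31}}$, namely $9 N_i - 6 N_{i+1} + N_{i+2} \equiv 0 \pmod{M}$. Dividing by $M$ gives $9 U_i - 6 U_{i+1} + U_{i+2} = c$ with integer $c$, and $0 \le U < 1$ leaves only $-5 \le c \le 9$. The seed and the number of triples are arbitrary choices.

```python
M = 2**31
A = 2**16 + 3


def randu_plane_indices(seed=1, triples=50_000):
    """Collect c = (9*N_i - 6*N_{i+1} + N_{i+2}) / M over consecutive triples."""
    indices = set()
    n0 = seed
    n1 = (A * n0) % M
    for _ in range(triples):
        n2 = (A * n1) % M
        numerator = 9 * n0 - 6 * n1 + n2
        assert numerator % M == 0      # every triple lies on one of the planes
        indices.add(numerator // M)
        n0, n1 = n1, n2
    return indices


planes = randu_plane_indices()
```

The set `planes` can never contain more than 15 distinct values, in line with the count quoted above.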

In Example 2.4 we asked what the maximum gap between the parallel straight lines is. In other words, we have searched for strips of maximum size in which no point $(U_{i-1}, U_i)$ falls. Alternatively we can directly analyze the lattice formed by consecutive points. For illustration consider again Figure 2.1. We follow the points starting with $(\frac{1}{11}, \frac{2}{11})$. By vectorwise adding an appropriate multiple of $(1, a) = (1, 2)$ the next two points are obtained. Proceeding in this way one has to take care that upon leaving the unit square each component with value $\ge 1$ must be reduced to $[0, 1)$ to observe mod $M$. The reader may verify this with Example 2.4 and number the points of the lattice in Figure 2.1 in the correct sequence. In this way the lattice can be defined. This process of defining the lattice can be generalized to higher dimensions $m > 2$ (see Exercise 2.3).

A disadvantage of the linear congruential generators of Algorithm 2.2 is the boundedness of the period by $M$ and hence by the word length of the computer. The situation can be improved by shuffling the random numbers in a random way. For practical purposes, the period gets close enough to infinity. (The reader may test this on Example 2.5.) For practical advice we refer to [PrTVF92].

2.1.4 Fibonacci Generators

The original Fibonacci recursion motivates trying the formula
$$N_{i+1} := (N_i + N_{i-1}) \bmod M .$$
It turns out that this first attempt of a three-term recursion is not suitable for generating random numbers (see Exercise 2.15). The modified approach
$$N_{i+1} := (N_{i-\nu} - N_{i-\mu}) \bmod M \qquad (2.5)$$
for suitable $\nu, \mu \in \mathbb{N}$ is called lagged Fibonacci generator. For many choices of $\nu$, $\mu$ the approach (2.5) leads to recommendable generators.

Example 2.6
$$U_i := U_{i-17} - U_{i-5} ,$$
in case $U_i < 0$ set $U_i := U_i + 1.0$

The recursion of Example 2.6 immediately produces floating-point numbers $U_i \in [0, 1)$. This generator requires a prologue in which 17 initial $U$'s are generated by means of another method. The generator can be run with varying lags $\nu$, $\mu$. [KaMN89] recommends

Algorithm 2.7 (Fibonacci generator)
Repeat: $\zeta := U_i - U_j$
    if $\zeta < 0$, set $\zeta := \zeta + 1$
    $U_i := \zeta$
    $i := i - 1$
    $j := j - 1$
    if $i = 0$, set $i := 17$
    if $j = 0$, set $j := 17$
Initialization: Set $i = 17$, $j = 5$, and calculate $U_1, \ldots, U_{17}$ with a congruential generator, for instance with $M = 714025$, $a = 1366$, $b = 150889$. Set the seed $N_0$ = your favorite dream number, possibly inspired by the system clock of your computer.
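The steps of Algorithm 2.7 translate almost literally into code. The following Python sketch is one possible rendering: the class name `FibonacciGenerator`, the 1-based storage with a dummy entry at index 0, and the seed are our own choices.

```python
class FibonacciGenerator:
    """Lagged Fibonacci generator following the steps of Algorithm 2.7."""

    def __init__(self, seed):
        m, a, b = 714025, 1366, 150889   # congruential generator for the prologue
        n = seed % m
        self.u = [0.0] * 18              # u[1..17] are used; u[0] is a dummy
        for k in range(1, 18):
            n = (a * n + b) % m
            self.u[k] = n / m
        self.i, self.j = 17, 5

    def next(self):
        zeta = self.u[self.i] - self.u[self.j]
        if zeta < 0:
            zeta += 1.0
        self.u[self.i] = zeta
        self.i -= 1
        self.j -= 1
        if self.i == 0:
            self.i = 17
        if self.j == 0:
            self.j = 17
        return zeta


gen = FibonacciGenerator(seed=20120101)  # arbitrary seed
values = [gen.next() for _ in range(10_000)]
sample_mean = sum(values) / len(values)
```

Plotting consecutive pairs from `values` would give a picture of the kind described for Figure 2.3.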

Figure 2.3 depicts 10000 random points calculated by means of Algorithm 2.7. Visual inspection suggests that the points are not arranged in some apparent structure. The points appear to be sufficiently random. But the generator provided by Example 2.6 is not sophisticated enough for ambitious applications; its pseudo-random numbers are rather correlated.

A generator of uniform deviates that can be highly recommended is the Mersenne twister [MaN98]; it has a truly remarkably long period.

2.2 Extending to Random Variables From Other Distributions

Frequently normal variates are needed. Their generation is based on uniform deviates. The simplest strategy is to calculate
$$X := \sum_{i=1}^{12} U_i - 6, \quad \text{for } U_i \sim \mathcal{U}[0, 1] .$$
$X$ has expectation 0 and variance 1. The Central Limit Theorem (Appendix B1) assures that $X$ is approximately normally distributed (see Exercise 2.4). But this crude attempt is not satisfying. Better methods calculate nonuniformly distributed random variables, for example, by a suitable transformation out of a uniformly distributed random variable [Dev86]. But the most obvious approach inverts the distribution function.
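The crude sum-of-twelve strategy is easy to test empirically. The sketch below is an illustration with assumptions of our own: Python's built-in generator supplies the uniform deviates, and the seed and sample size are arbitrary.

```python
import random
import statistics


def crude_normal(rng):
    """X = U_1 + ... + U_12 - 6 with U_i ~ U[0, 1]; only approximately N(0, 1)."""
    return sum(rng.random() for _ in range(12)) - 6.0


rng = random.Random(2024)   # fixed seed, chosen arbitrarily
xs = [crude_normal(rng) for _ in range(20_000)]
mean = statistics.fmean(xs)
variance = statistics.pvariance(xs)
```

One reason the attempt is unsatisfying is visible from the construction: $X$ can never leave the interval $[-6, 6]$, so the tails of the normal distribution are cut off.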

Fig. 2.3. 10000 (pseudo-)random points $(U_{i-1}, U_i)$, calculated with Algorithm 2.7 (both axes range from 0 to 1)

2.2.1 Inversion

The following theorem is the basis for inversion methods.

Theorem 2.8 (inversion)
Suppose $U \sim \mathcal{U}[0, 1]$ and let $F$ be a continuous strictly increasing distribution function. Then $F^{-1}(U)$ is a sample from $F$.

Proof: Let $P$ denote the underlying probability. $U \sim \mathcal{U}[0, 1]$ means $P(U \le \xi) = \xi$ for $0 \le \xi \le 1$. Consequently
$$P(F^{-1}(U) \le x) = P(U \le F(x)) = F(x) .$$


Application

Following Theorem 2.8, the inversion method takes uniform deviates $u \sim \mathcal{U}[0, 1]$ and sets $x = F^{-1}(u)$ (see Exercises 2.5, 2.16). To judge the inversion method we consider the normal distribution as the most important example. Neither for its distribution function $F$ nor for its inverse $F^{-1}$ there is a closed-form expression (see Exercise 1.3). So numerical methods are used. We discuss two approaches.

Numerical inversion means to calculate iteratively a solution $x$ of the equation $F(x) = u$ for prescribed $u$. This iteration requires tricky termination criteria, in particular when $x$ is large. Then we are in the situation $u \approx 1$, where tiny changes in $u$ lead to large changes in $x$ (Figure 2.4). The approximation of the solution $x$ of $F(x) - u = 0$ can be calculated with bisection, or Newton's method, or the secant method (see Appendix C1).
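As an illustration of numerical inversion, the following Python sketch solves $F(x) = u$ by bisection for the standard normal distribution. The choices made here are assumptions of this sketch, not prescriptions of the text: $F$ is evaluated through `math.erf`, the search bracket is $[-10, 10]$, and the iteration stops once the bracket is shorter than `tol`.

```python
import math


def normal_cdf(x):
    """Standard normal distribution function F(x), via the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def normal_inverse_bisection(u, lo=-10.0, hi=10.0, tol=1e-10):
    """Solve F(x) = u for x by bisection; requires F(lo) < u < F(hi)."""
    if not normal_cdf(lo) < u < normal_cdf(hi):
        raise ValueError("u is outside the range covered by [lo, hi]")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if normal_cdf(mid) < u:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


x_median = normal_inverse_bisection(0.5)
x_975 = normal_inverse_bisection(0.975)
```

For $u$ very close to 1 this simple version loses accuracy, because $F$ computed in floating point becomes indistinguishable from 1; this is one face of the termination difficulty mentioned above.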

Alternatively the inversion $x = F^{-1}(u)$ can be approximated by a suitably constructed function $G(u)$,
$$G(u) \approx F^{-1}(u) .$$
Then only $x = G(u)$ needs to be evaluated. Constructing such an approximation formula $G$, it is important to realize that $F^{-1}(u)$ has "vertical" tangents at $u = 1$ (horizontal in Figure 2.4). This pole behavior must be reproduced correctly by the approximating function $G$. This suggests using rational approximation (see Appendix C1). For the Gaussian distribution one incorporates the point symmetry with respect to $(u, x) = (\frac{1}{2}, 0)$, and the pole at $u = 1$ (and hence at $u = 0$) in the ansatz for $G$ (see Exercise 2.6). Rational approximation of $F^{-1}(u)$ with a sufficiently large number of terms leads to high accuracy [Moro95]. The formulas are given in Appendix D2.

Fig. 2.4. Normal distribution; small changes in $u$ can lead to large changes in $x$ (sketch of $u = F(x)$, with $x$ on the horizontal axis and $u$ on the vertical axis, marked at $1/2$ and $1$)

2.2.2 Transformations in $\mathbb{R}^1$

Another class of methods uses transformations between random variables.

We start the discussion with the scalar case. If we have a random variable X

with known density and distribution, what can we say about the density and

distribution of a transformed h(X)?

Theorem 2.9
Suppose $X$ is a random variable with density $f(x)$ and distribution $F(x)$. Further assume $h : S \to B$ with $S, B \subseteq \mathbb{R}$, where $S$ is the support² of $f(x)$, and let $h$ be strictly monotonous.
(a) Then $Y := h(X)$ is a random variable. Its distribution $F_Y$ is
$$F_Y(y) = F(h^{-1}(y)) \quad \text{in case } h' > 0 ,$$
$$F_Y(y) = 1 - F(h^{-1}(y)) \quad \text{in case } h' < 0 .$$
(b) If $h^{-1}$ is absolutely continuous then for almost all $y$ the density of $h(X)$ is
$$f(h^{-1}(y)) \left| \frac{dh^{-1}(y)}{dy} \right| . \qquad (2.6)$$

Proof:
(a) For $h' > 0$ we have $P(h(X) \le y) = P(X \le h^{-1}(y)) = F(h^{-1}(y))$.
(b) For absolutely continuous $h^{-1}$ the density of $Y = h(X)$ is equal to the derivative of the distribution function almost everywhere. Evaluating the derivative $\frac{dF(h^{-1}(y))}{dy}$ with the chain rule implies the assertion. The absolute value in (2.6) is necessary such that a positive density comes out in case $h' < 0$. (See for instance [Fisz63], 2.4 C.)

Application

Since we are able to calculate uniform deviates, we start from $X \sim \mathcal{U}[0, 1]$ with $f$ being the density of the uniform distribution,
$$f(x) = 1 \text{ for } 0 \le x \le 1, \text{ otherwise } f = 0 .$$
Here the support $S$ is the unit interval. What we need are random numbers $Y$ matching a prespecified target density $g(y)$. It remains to find a transformation $h$ such that the density in (2.6) is identical to $g(y)$,
$$1 \cdot \left| \frac{dh^{-1}(y)}{dy} \right| = g(y) .$$
Then we only evaluate $h(X)$.

² $f$ is zero outside $S$. (In this section, $S$ is no asset price.)

Example 2.10 (exponential distribution)
The exponential distribution with parameter $\lambda > 0$ has the density
$$g(y) = \begin{cases} \lambda e^{-\lambda y} & \text{for } y \ge 0 \\ 0 & \text{for } y < 0 . \end{cases}$$
Here the range $B$ consists of the nonnegative real numbers. The aim is to generate an exponentially distributed random variable $Y$ out of a $\mathcal{U}[0, 1]$-distributed random variable $X$. To this end we define the monotonous transformation from the unit interval $S = [0, 1]$ into $B$ by the decreasing function
$$y = h(x) := -\frac{1}{\lambda} \log x$$
with the inverse function $h^{-1}(y) = e^{-\lambda y}$ for $y \ge 0$. For this $h$ verify
$$f(h^{-1}(y)) \left| \frac{dh^{-1}(y)}{dy} \right| = 1 \cdot \left| (-\lambda) e^{-\lambda y} \right| = \lambda e^{-\lambda y} = g(y)$$
as density of $h(X)$. Hence $h(X)$ is distributed exponentially.
Application:
In case $U_1, U_2, \ldots$ are nonzero uniform deviates, the numbers $h(U_i)$
$$-\frac{1}{\lambda} \log(U_1), \; -\frac{1}{\lambda} \log(U_2), \; \ldots$$
are distributed exponentially. (see Exercise 2.17)
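The transformation of Example 2.10 is a one-liner in practice. In the following Python sketch the function name `exponential_deviate`, the seed, and the value $\lambda = 2$ are illustrative assumptions; the shift `1.0 - rng.random()` is our way of guaranteeing a nonzero uniform deviate.

```python
import math
import random


def exponential_deviate(lam, rng):
    """Return h(U) = -log(U) / lam for a nonzero uniform deviate U."""
    u = 1.0 - rng.random()      # random() lies in [0, 1), so u lies in (0, 1]
    return -math.log(u) / lam


rng = random.Random(7)          # fixed seed, chosen arbitrarily
lam = 2.0
ys = [exponential_deviate(lam, rng) for _ in range(20_000)]
sample_mean = sum(ys) / len(ys)  # should be close to 1 / lam
```

Since the exponential distribution with parameter $\lambda$ has mean $1/\lambda$, comparing `sample_mean` with $1/\lambda$ gives a first plausibility check.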

Attempt to Generate a Normal Distribution

Starting from the uniform distribution ($f = 1$) a transformation $y = h(x)$ is searched such that its density equals that of the standard normal distribution,
$$1 \cdot \left| \frac{dh^{-1}(y)}{dy} \right| = \frac{1}{\sqrt{2\pi}} \exp\left( -\frac{1}{2} y^2 \right) .$$
This is a differential equation for $h^{-1}$ without analytical solution. As we will see, a transformation can be applied successfully in $\mathbb{R}^2$. To this end we need a generalization of the scalar transformation of Theorem 2.9 into $\mathbb{R}^n$.

2.2.3 Transformations in $\mathbb{R}^n$

The generalization of Theorem 2.9 to the vector case is

Theorem 2.11
Suppose $X$ is a random variable in $\mathbb{R}^n$ with density $f(x) > 0$ on the support $S$. The transformation $h : S \to B$, $S, B \subseteq \mathbb{R}^n$ is assumed to be invertible and the inverse be continuously differentiable on $B$. $Y := h(X)$ is the transformed random variable. Then $Y$ has the density
$$f(h^{-1}(y)) \left| \frac{\partial(x_1, \ldots, x_n)}{\partial(y_1, \ldots, y_n)} \right| , \quad y \in B, \qquad (2.7)$$
where $x = h^{-1}(y)$ and $\frac{\partial(x_1, \ldots, x_n)}{\partial(y_1, \ldots, y_n)}$ is the determinant of the Jacobian matrix of all first-order derivatives of $h^{-1}(y)$.
(Theorem 4.2 in [Dev86])

2.3 Normally Distributed Random Variables

In this section the focus is on applying the transformation method in $\mathbb{R}^2$ to generate Gaussian random numbers. We describe the classical approach of Box and Muller. Inversion is one of several valid alternatives.³

2.3.1 Method of Box and Muller

To apply Theorem 2.11 we start with the unit square $S := [0, 1]^2$ and the density (2.7) of the bivariate uniform distribution. The transformation is
$$\begin{cases} y_1 = \sqrt{-2 \log x_1} \, \cos 2\pi x_2 =: h_1(x_1, x_2) \\ y_2 = \sqrt{-2 \log x_1} \, \sin 2\pi x_2 =: h_2(x_1, x_2) , \end{cases} \qquad (2.8)$$

$h(x)$ is defined on $[0, 1]^2$ with values in $\mathbb{R}^2$. The inverse function $h^{-1}$ is given by
$$\begin{cases} x_1 = \exp\left\{ -\frac{1}{2} (y_1^2 + y_2^2) \right\} \\ x_2 = \frac{1}{2\pi} \arctan \frac{y_2}{y_1} \end{cases}$$

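where we take the main branch of arctan. The determinant of the Jacobian matrix is
$$\begin{aligned}
\frac{\partial(x_1, x_2)}{\partial(y_1, y_2)} &= \det \begin{pmatrix} \frac{\partial x_1}{\partial y_1} & \frac{\partial x_1}{\partial y_2} \\ \frac{\partial x_2}{\partial y_1} & \frac{\partial x_2}{\partial y_2} \end{pmatrix} \\
&= \frac{1}{2\pi} \exp\left\{ -\frac{1}{2} (y_1^2 + y_2^2) \right\} \left( -y_1 \frac{1}{1 + \frac{y_2^2}{y_1^2}} \frac{1}{y_1} - y_2 \frac{1}{1 + \frac{y_2^2}{y_1^2}} \frac{y_2}{y_1^2} \right) \\
&= -\frac{1}{2\pi} \exp\left\{ -\frac{1}{2} (y_1^2 + y_2^2) \right\} .
\end{aligned}$$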

This shows that $\left| \frac{\partial(x_1, x_2)}{\partial(y_1, y_2)} \right|$ is the density (2.7) of the bivariate standard normal distribution. Since this density is the product of the two one-dimensional densities,
$$\left| \frac{\partial(x_1, x_2)}{\partial(y_1, y_2)} \right| = \left[ \frac{1}{\sqrt{2\pi}} \exp\left( -\frac{1}{2} y_1^2 \right) \right] \cdot \left[ \frac{1}{\sqrt{2\pi}} \exp\left( -\frac{1}{2} y_2^2 \right) \right] ,$$
the two components of the vector $y$ are independent. So, when the components of the vector $X$ are $\sim \mathcal{U}[0, 1]$, the vector $h(X)$ consists of two independent standard normal variates. Let us summarize the application of this transformation:

³ See also the Notes on this section.

Algorithm 2.12 (Box-Muller)
(1) generate $U_1 \sim \mathcal{U}[0, 1]$ and $U_2 \sim \mathcal{U}[0, 1]$.
(2) $\theta := 2\pi U_2$, $\rho := \sqrt{-2 \log U_1}$
(3) $Z_1 := \rho \cos\theta$ is a normal variate (as well as $Z_2 := \rho \sin\theta$).

The variables $U_1$, $U_2$ stand for the components of $X$. Each application of the algorithm provides two standard normal variates. Note that a line structure in $[0, 1]^2$ as in Example 2.5 is mapped to curves in the $(Z_1, Z_2)$-plane. This underlines the importance of excluding an evident line structure.
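A direct rendering of Algorithm 2.12 might look as follows. This Python sketch makes assumptions of its own: Python's built-in generator provides the uniform deviates, the seed is arbitrary, and $U_1$ is shifted into $(0, 1]$ so that the logarithm stays finite.

```python
import math
import random


def box_muller(rng):
    """One application of Algorithm 2.12; returns the pair (Z1, Z2)."""
    u1 = 1.0 - rng.random()     # shift to (0, 1] so that log(u1) is finite
    u2 = rng.random()
    theta = 2.0 * math.pi * u2
    rho = math.sqrt(-2.0 * math.log(u1))
    return rho * math.cos(theta), rho * math.sin(theta)


rng = random.Random(42)         # fixed seed, chosen arbitrarily
pairs = [box_muller(rng) for _ in range(10_000)]
zs = [z for pair in pairs for z in pair]
mean = sum(zs) / len(zs)
variance = sum((z - mean) ** 2 for z in zs) / len(zs)
```

The sample mean and variance of `zs` should be close to 0 and 1, which is a necessary but by no means sufficient check of normality.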

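Fig. 2.5. Transformations of the Box-Muller-Marsaglia approach, schematically (diagram: Marsaglia's transformation takes $(V_1, V_2)$ in the disk $\mathcal{D}$ to $(x_1, x_2)$ in the square $S$, which the Box-Muller transformation $h$ maps to $(y_1, y_2)$ in $\mathbb{R}^2$)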

2.3.2 Variant of Marsaglia

The variant of Marsaglia prepares the input in Algorithm 2.12 such that trigonometric functions are avoided. For $U \sim \mathcal{U}[0, 1]$ we have $V := 2U - 1 \sim \mathcal{U}[-1, 1]$. (Temporarily we misuse also the financial variable $V$ for local purposes.) Two values $V_1$, $V_2$ calculated in this way define a point in the $(V_1, V_2)$-plane. Only points within the unit disk are accepted:
$$\mathcal{D} := \{ (V_1, V_2) \mid V_1^2 + V_2^2 < 1 \} ; \quad \text{accept only } (V_1, V_2) \in \mathcal{D} .$$
In case of rejection both values $V_1$, $V_2$ must be rejected. As a result, the surviving $(V_1, V_2)$ are uniformly distributed on $\mathcal{D}$ with density $f(V_1, V_2) = \frac{1}{\pi}$ for $(V_1, V_2) \in \mathcal{D}$. A transformation from the disk $\mathcal{D}$ into the unit square $S := [0, 1]^2$ is defined by
$$\begin{pmatrix} x_1 \\ x_2 \end{pmatrix} = \begin{pmatrix} V_1^2 + V_2^2 \\ \frac{1}{2\pi} \arg((V_1, V_2)) \end{pmatrix} .$$
That is, the Cartesian coordinates $V_1$, $V_2$ on $\mathcal{D}$ are mapped to the squared radius and the normalized angle.⁴ For illustration, see Figure 2.5. These "polar coordinates" $(x_1, x_2)$ are uniformly distributed on $S$ (see Exercise 2.7).

Application

For input in (2.8) use $V_1^2 + V_2^2$ as $x_1$ and $\frac{1}{2\pi} \arctan \frac{V_2}{V_1}$ as $x_2$. With these variables the relations
$$\cos 2\pi x_2 = \frac{V_1}{\sqrt{V_1^2 + V_2^2}} , \quad \sin 2\pi x_2 = \frac{V_2}{\sqrt{V_1^2 + V_2^2}}$$
hold, which means that it is no longer necessary to evaluate trigonometric functions. The resulting algorithm of Marsaglia has modified the Box-Muller method by constructing input values $x_1$, $x_2$ in a clever way.

Algorithm 2.13 (polar method)
(1) Repeat: generate $U_1, U_2 \sim \mathcal{U}[0, 1]$; $V_1 := 2U_1 - 1$, $V_2 := 2U_2 - 1$, until $W := V_1^2 + V_2^2 < 1$.
(2) $Z_1 := V_1 \sqrt{-2 \log(W)/W}$
    $Z_2 := V_2 \sqrt{-2 \log(W)/W}$
are both standard normal variates.
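Algorithm 2.13 can be sketched in Python as follows. The extra guard against $W = 0$ and the bookkeeping of the number of attempts are additions of this sketch, and the uniform deviates come from Python's built-in generator with an arbitrary seed.

```python
import math
import random


def polar_method(rng):
    """One application of Algorithm 2.13; returns (Z1, Z2, number of attempts)."""
    attempts = 0
    while True:
        attempts += 1
        v1 = 2.0 * rng.random() - 1.0
        v2 = 2.0 * rng.random() - 1.0
        w = v1 * v1 + v2 * v2
        if 0.0 < w < 1.0:       # w == 0 is excluded too, to keep log(w) finite
            break
    factor = math.sqrt(-2.0 * math.log(w) / w)
    return v1 * factor, v2 * factor, attempts


rng = random.Random(99)         # fixed seed, chosen arbitrarily
results = [polar_method(rng) for _ in range(10_000)]
zs = [z for z1, z2, _ in results for z in (z1, z2)]
mean = sum(zs) / len(zs)
variance = sum((z - mean) ** 2 for z in zs) / len(zs)
acceptance = len(results) / sum(n for _, _, n in results)
```

The observed `acceptance` rate should be close to the area ratio of disk and square, $\pi/4$.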

The probability that $W < 1$ holds is given by the ratio of the areas, $\pi/4 = 0.785\ldots$ So in about 21% of all $\mathcal{U}[0, 1]$ drawings the $(V_1, V_2)$-tuple is rejected because of $W \ge 1$. Nevertheless the saving of the trigonometric evaluations makes Marsaglia's polar method more efficient than the Box-Muller method. Figure 2.6 illustrates normally distributed random numbers (see Exercise 2.8).

⁴ $\arg((V_1, V_2)) = \arctan(V_2 / V_1)$ with the proper branch

Fig. 2.6. 10000 numbers $\sim \mathcal{N}(0, 1)$ (values entered horizontally, on an axis from $-4$ to $4$, and separated vertically with distance $10^{-4}$)

2.3.3 Correlated Random Variables

The above algorithms provide independent normal deviates. In many applications random variables are required that depend on each other in a prescribed way. Let us first recall the general $n$-dimensional density function.

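Multivariate normal distribution (notations):
$$X = (X_1, \ldots, X_n), \quad \mu = E X = (E X_1, \ldots, E X_n)$$
The covariance matrix (B1.8) of $X$ is denoted $\Sigma$, and has elements
$$\Sigma_{ij} = (\mathrm{Cov} X)_{ij} := E\left( (X_i - \mu_i)(X_j - \mu_j) \right) , \quad \sigma_i^2 = \Sigma_{ii} ,$$
for $i, j = 1, \ldots, n$. Using this notation, the correlation coefficients are
$$\rho_{ij} := \frac{\Sigma_{ij}}{\sigma_i \sigma_j} \quad (\Rightarrow \rho_{ii} = 1) , \qquad (2.9)$$
which set up the correlation matrix. The correlation matrix is a scaled version of $\Sigma$. The density function $f(x_1, \ldots, x_n)$ corresponding to $\mathcal{N}(\mu, \Sigma)$ is
$$f(x) = \frac{1}{(2\pi)^{n/2}} \frac{1}{(\det \Sigma)^{1/2}} \exp\left\{ -\frac{1}{2} (x - \mu)^{tr} \Sigma^{-1} (x - \mu) \right\} . \qquad (2.10)$$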

By theory, a covariance matrix (or correlation matrix) $\Sigma$ is symmetric, and positive semidefinite. If in practice a matrix $\tilde\Sigma$ is corrupted by insufficient data, a close matrix $\Sigma$ can be calculated with the features of a covariance matrix [Hig02]. In case $\det \Sigma \neq 0$ the matrix $\Sigma$ is positive definite, which we assume now.

Below we shall need a factorization of $\Sigma$ into $\Sigma = AA^{tr}$. From numerical mathematics we know that for symmetric positive definite matrices $\Sigma$ the Cholesky decomposition $\Sigma = LL^{tr}$ exists, with a lower triangular matrix $L$ (see Appendix C1). There are numerous factorizations $\Sigma = AA^{tr}$ other than Cholesky. A more involved factorization of $\Sigma$ is the principal component analysis, which is based on eigenvectors (see Exercise 2.18).

Transformation

Suppose $Z \sim \mathcal{N}(0, I)$ and $x = Az$, $A \in \mathbb{R}^{n \times n}$, where $z$ is a realization of $Z$, $0$ is the zero vector, and $I$ the identity matrix. We see from
$$\exp\left\{ -\frac{1}{2} z^{tr} z \right\} = \exp\left\{ -\frac{1}{2} (A^{-1} x)^{tr} (A^{-1} x) \right\} = \exp\left\{ -\frac{1}{2} x^{tr} A^{-tr} A^{-1} x \right\}$$
and from $dx = |\det A| \, dz$ that
$$\frac{1}{|\det A|} \exp\left\{ -\frac{1}{2} x^{tr} (AA^{tr})^{-1} x \right\} dx = \exp\left\{ -\frac{1}{2} z^{tr} z \right\} dz$$
holds for arbitrary nonsingular matrices $A$. To complete the transformation, we need a matrix $A$ such that $\Sigma = AA^{tr}$. Then $|\det A| = (\det \Sigma)^{1/2}$, and the densities with respect to $x$ and $z$ are converted correctly. In view of the general density $f(x)$ recalled in (2.10), $AZ$ is normally distributed with $AZ \sim \mathcal{N}(0, AA^{tr})$, and hence the factorization $\Sigma = AA^{tr}$ implies
$$AZ \sim \mathcal{N}(0, \Sigma) .$$
Finally, translation with vector $\mu$ implies
$$\mu + AZ \sim \mathcal{N}(\mu, \Sigma) . \qquad (2.11)$$

Application

Suppose we need a normal variate $X \sim \mathcal{N}(\mu, \Sigma)$ for given mean vector $\mu$ and covariance matrix $\Sigma$. This is most conveniently based on the Cholesky decomposition of $\Sigma$. Accordingly, the desired random variable can be calculated with the following algorithm:

Algorithm 2.14 (correlated random variable)
(1) Calculate the Cholesky decomposition $AA^{tr} = \Sigma$
(2) Calculate $Z \sim \mathcal{N}(0, I)$ componentwise by $Z_i \sim \mathcal{N}(0, 1)$, $i = 1, \ldots, n$, for instance, with Marsaglia's polar algorithm
(3) $\mu + AZ$ has the desired distribution $\sim \mathcal{N}(\mu, \Sigma)$
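The three steps of Algorithm 2.14 can be sketched in plain Python. Several things here are assumptions of the sketch rather than part of the algorithm: the helper names `cholesky` and `correlated_normal`, the use of `random.gauss` in step (2) instead of the polar method, and the illustrative two-dimensional values $\sigma_1 = 0.3$, $\sigma_2 = 0.2$, $\rho = 0.85$ with zero mean.

```python
import math
import random


def cholesky(sigma):
    """Lower triangular L with L L^T = sigma (sigma symmetric positive definite)."""
    n = len(sigma)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sigma[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(s) if i == j else s / L[j][j]
    return L


def correlated_normal(mu, L, rng):
    """Return mu + L z with z ~ N(0, I); step (2) uses random.gauss for brevity."""
    z = [rng.gauss(0.0, 1.0) for _ in mu]
    return [m + sum(L[i][k] * z[k] for k in range(i + 1)) for i, m in enumerate(mu)]


# Illustrative values (assumed): sigma_1 = 0.3, sigma_2 = 0.2, rho = 0.85
s1, s2, rho = 0.3, 0.2, 0.85
sigma = [[s1 * s1, rho * s1 * s2], [rho * s1 * s2, s2 * s2]]
L = cholesky(sigma)
rng = random.Random(5)          # fixed seed, chosen arbitrarily
xs = [correlated_normal([0.0, 0.0], L, rng) for _ in range(20_000)]
```

The sample correlation of the two components of `xs` should then be close to the prescribed $\rho$.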

Special case $n = 2$: In this case, in view of (2.9), only one correlation number is involved, namely, $\rho := \rho_{12} = \rho_{21}$, and the covariance matrix must be of the form
$$\Sigma = \begin{pmatrix} \sigma_1^2 & \rho \sigma_1 \sigma_2 \\ \rho \sigma_1 \sigma_2 & \sigma_2^2 \end{pmatrix} . \qquad (2.12)$$
In this two-dimensional situation it makes sense to carry out the Cholesky decomposition analytically (see Exercise 2.9). Figure 2.7 illustrates a highly correlated two-dimensional situation, with $\rho = 0.85$. An example based on (2.12) is (3.28).

Fig. 2.7. Simulation of a correlated vector process with two components, and $\mu = 0.05$, $\sigma_1 = 0.3$, $\sigma_2 = 0.2$, $\rho = 0.85$, $\Delta t = 1/250$ (horizontal axis from 0 to 1, vertical axis from 25 to 55)

2.4 Monte Carlo Integration

A classical application of random numbers is the Monte Carlo integration.

The discussion in this section will serve as background for Quasi Monte Carlo,

a topic of the following Section 2.5.

Let us begin with the one-dimensional situation. Assume a probability distribution with density $g$. Then the expectation of a function $f$ is
$$E(f) = \int_{-\infty}^{\infty} f(x) g(x) \, dx ,$$
compare (B1.4). For a definite integral on an interval $\mathcal{D} = [a, b]$, we use the uniform distribution with density
$$g = \frac{1}{b - a} \cdot 1_{\mathcal{D}} = \frac{1}{\lambda_1(\mathcal{D})} \cdot 1_{\mathcal{D}} ,$$
where $\lambda_1(\mathcal{D})$ denotes the length of the interval $\mathcal{D}$. This leads to
$$E(f) = \frac{1}{\lambda_1(\mathcal{D})} \int_a^b f(x) \, dx ,$$
or
$$\int_a^b f(x) \, dx = \lambda_1(\mathcal{D}) \cdot E(f) .$$
This equation is the basis of Monte Carlo integration. It remains to approximate $E(f)$. For independent samples $x_i \sim \mathcal{U}[a, b]$ the law of large numbers (see Appendix B1) establishes the estimator
$$\frac{1}{N} \sum_{i=1}^{N} f(x_i)$$
as approximation to $E(f)$. The approximation improves as the number of trials $N$ goes to infinity; the error is characterized by the Central Limit Theorem.

This principle of the Monte Carlo integration extends to the higher-dimensional case. Let $\mathcal{D} \subseteq \mathbb{R}^m$ be a domain on which the integral

$$\int_{\mathcal{D}} f(x)\,dx$$

is to be calculated. For example, $\mathcal{D} = [0, 1]^m$. Such integrals occur in finance, for example, when mortgage-backed securities (CMO, collateralized mortgage obligations) are valuated [CaMO97]. The classical or stochastic Monte Carlo integration draws random samples $x_1, ..., x_N \in \mathcal{D}$ which should be independent and uniformly distributed. Then

$$\theta_N := \lambda_m(\mathcal{D})\,\frac{1}{N}\sum_{i=1}^{N} f(x_i) \qquad (2.13)$$

is an approximation of the integral. Here $\lambda_m(\mathcal{D})$ is the volume of $\mathcal{D}$ (or the m-dimensional Lebesgue measure [Nie92]). We assume $\lambda_m(\mathcal{D})$ to be finite. From the law of large numbers follows convergence of $\theta_N$ to $\lambda_m(\mathcal{D})E(f) = \int_{\mathcal{D}} f(x)\,dx$ for $N \to \infty$. The variance of the error

$$\delta_N := \int_{\mathcal{D}} f(x)\,dx - \theta_N$$


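satisfies

$$\mathrm{Var}(\delta_N) = E(\delta_N^2) - (E(\delta_N))^2 = \frac{\sigma^2(f)}{N}\,(\lambda_m(\mathcal{D}))^2, \qquad (2.14a)$$

with the variance of f

$$\sigma^2(f) := \int_{\mathcal{D}} f(x)^2\,dx - \left(\int_{\mathcal{D}} f(x)\,dx\right)^2. \qquad (2.14b)$$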

Hence the standard deviation of the error $\delta_N$ tends to 0 with the order $O(N^{-1/2})$. This result follows from the Central Limit Theorem or from other arguments (→ Exercise 2.10). The deficiency of the order $O(N^{-1/2})$ is the slow convergence (Exercise 2.11 and the second column in Table 2.1). To reach an absolute error of the order $\varepsilon$, equation (2.14a) tells that the sample size is $N = O(\varepsilon^{-2})$. To improve the accuracy by a factor of 10, the costs (that is the number of trials, N) increase by a factor of 100. Another disadvantage is the lack of a genuine error bound. The probabilistic error of (2.14) does not rule out the risk that the result may be completely wrong. The $\sigma^2(f)$ in (2.14b) is not known and must be approximated, which adds to the uncertainty of the error. And the Monte Carlo integration responds sensitively to changes of the initial state of the used random-number generator. This may be explained by the potential clustering of random points.
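The probabilistic error can be estimated along with the integral. The following sketch assumes the unit cube $[0,1]^m$ as domain (volume 1) and replaces the unknown $\sigma^2(f)$ by the sample variance of the values $f(x_i)$, which is only an approximation; the name `mc_integrate_cube` is hypothetical.

```python
import math
import random


def mc_integrate_cube(f, m, n, seed=0):
    """Monte Carlo estimate of the integral of f over [0, 1]^m.

    Returns (theta, std_err) with std_err = s / sqrt(n), where s^2 is the
    sample variance of the f(x_i); s^2 only approximates sigma^2(f).
    """
    rng = random.Random(seed)
    total = 0.0
    total_sq = 0.0
    for _ in range(n):
        x = [rng.random() for _ in range(m)]
        y = f(x)
        total += y
        total_sq += y * y
    theta = total / n  # the volume of the unit cube is 1
    sample_var = (total_sq - n * theta * theta) / (n - 1)
    return theta, math.sqrt(max(sample_var, 0.0) / n)


def product_integrand(x):
    """Hypothetical test integrand prod_j (2 x_j); its exact integral is 1."""
    p = 1.0
    for t in x:
        p *= 2.0 * t
    return p


for n in (1000, 4000, 16000):
    print(n, mc_integrate_cube(product_integrand, 3, n))
```

Quadrupling N should roughly halve the reported standard error, in line with the order $O(N^{-1/2})$.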

In many applications the above deficiencies are balanced by two good features of Monte Carlo integration: A first advantage is that the order $O(N^{-1/2})$ of the error holds independently of the dimension m. Another good feature is that the integrands f need not be smooth, square integrability suffices ($f \in L^2$, see Appendix C3).

So far we have described the basic version of Monte Carlo integration, stressing the slow decline of the probabilistic error with growing N. The variance of the error $\delta_N$ can also be diminished by decreasing the numerator in (2.14a). This variance of the problem can be reduced by suitable methods. (We will come back to this issue in Section 3.5.4.)

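We conclude the excursion into the stochastic Monte Carlo integration with the variant for those cases in which $\lambda_m(\mathcal{D})$ is hard to calculate. For $\mathcal{D} \subseteq [0, 1]^m$ and $x_1, ..., x_N \sim \mathcal{U}[0, 1]^m$ use

$$\int_{\mathcal{D}} f(x)\,dx \approx \frac{1}{N}\sum_{\substack{i=1 \\ x_i \in \mathcal{D}}}^{N} f(x_i). \qquad (2.15)$$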

For the integral (1.50) with density $f_{\mathrm{GBM}}$ see Section 3.5.
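The variant (2.15) for domains inside the unit cube can be sketched as follows. The names `mc_integrate_subdomain` and `in_domain` are hypothetical; the quarter disc used as example domain is an assumption chosen because its area $\pi/4$ is known.

```python
import math
import random


def mc_integrate_subdomain(f, in_domain, m, n, seed=0):
    """Approximate the integral of f over D, a subset of [0, 1]^m, via (2.15).

    Points are drawn uniformly from the unit cube; only those for which
    in_domain(x) is true contribute, but the sum is still divided by n.
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x = [rng.random() for _ in range(m)]
        if in_domain(x):
            total += f(x)
    return total / n


def in_quarter_disc(x):
    """Indicator of the quarter disc x_1^2 + x_2^2 <= 1 inside [0, 1]^2."""
    return x[0] ** 2 + x[1] ** 2 <= 1.0


# With f = 1 the estimate approximates the area pi/4 of the quarter disc.
area = mc_integrate_subdomain(lambda x: 1.0, in_quarter_disc, 2, 50000)
print(area, math.pi / 4)
```

The volume of the domain is never needed explicitly; the fraction of points that fall into the domain accounts for it.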


2.5 Sequences of Numbers with Low Discrepancy

One difficulty with random numbers is that they may fail to distribute uniformly. Here, "uniform" is not meant in the stochastic sense of a distribution $\mathcal{U}[0, 1]$, but has the meaning of an equidistributedness that avoids extreme clustering or holes. The aim is to generate numbers for which the deviation from uniformity is minimal. This deviation is called "discrepancy." Another objective is to obtain good convergence for some important applications.

2.5.1 Discrepancy

The bad convergence behavior of the stochastic Monte Carlo integration is not inevitable. For example, for m = 1 and $\mathcal{D} = [0, 1]$ an equidistant x-grid with mesh size 1/N leads to a formula (2.13) that resembles the trapezoidal sum ((C1.2) in Appendix C1). For smooth f, the order of the error is at least $O(N^{-1})$. (Why?) But such a grid-based evaluation procedure is somewhat inflexible because the grid must be prescribed in advance and the number N that matches the desired accuracy is unknown beforehand. In contrast, the free placing of sample points with Monte Carlo integration can be performed until some termination criterion is met. It would be desirable to find a compromise in placing sample points such that the fineness advances but clustering is avoided. The sample points should fill the integration domain $\mathcal{D}$ as uniformly as possible. To this end we require a measure of the equidistributedness.

Let $Q \subseteq [0, 1]^m$ be an arbitrary axially parallel m-dimensional rectangle in the unit cube $[0, 1]^m$ of $\mathbb{R}^m$. That is, Q is a product of m intervals. Suppose a set of points $x_1, ..., x_N \in [0, 1]^m$. The decisive idea behind discrepancy is that for an evenly distributed point set, the fraction of the points lying within the rectangle Q should correspond to the volume of the rectangle (see Figure 2.8). Let # denote the number of points, then the goal is

$$\frac{\#\text{ of } x_i \in Q}{\#\text{ of all points in } [0, 1]^m} \approx \frac{\mathrm{vol}(Q)}{\mathrm{vol}([0, 1]^m)}$$

for as many rectangles as possible. This leads to the following definition:

Definition 2.15 (discrepancy)

The discrepancy of the point set $\{x_1, ..., x_N\} \subset [0, 1]^m$ is

$$D_N := \sup_Q \left| \frac{\#\text{ of } x_i \in Q}{N} - \mathrm{vol}(Q) \right|.$$

Analogously the variant $D_N^*$ (star discrepancy) is obtained when the set of rectangles is restricted to those $Q^*$, for which one corner is the origin:


Fig. 2.8 On the idea of discrepancy

Table 2.1 Comparison of different convergence rates to zero

N       1/sqrt(N)    sqrt(log log N / N)   (log N)/N    (log N)^2/N   (log N)^3/N
10^1    .31622777    .28879620             .23025851    .53018981     1.22080716
10^2    .10000000    .12357911             .04605170    .21207592     .97664572
10^3    .03162278    .04396186             .00690776    .04771708     .32961793
10^4    .01000000    .01490076             .00092103    .00848304     .07813166
10^5    .00316228    .00494315             .00011513    .00132547     .01526009
10^6    .00100000    .00162043             .00001382    .00019087     .00263694
10^7    .00031623    .00052725             .00000161    .00002598     .00041874
10^8    .00010000    .00017069             .00000018    .00000339     .00006251
10^9    .00003162    .00005506             .00000002    .00000043     .00000890

$$Q^* = \prod_{i=1}^{m} [0, y_i)$$

where $y \in \mathbb{R}^m$ denotes the corner diagonally opposite the origin.

The more evenly the points of a sequence are distributed, the closer the discrepancy $D_N$ is to zero. Here $D_N$ refers to the first N points of a sequence of points $(x_i)$, $i \ge 1$. The discrepancies $D_N$ and $D_N^*$ satisfy (→ Exercise 2.12b)

$$D_N^* \le D_N \le 2^m D_N^*.$$
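For m = 1 the star discrepancy can be computed exactly, which may help to get a feeling for the definition. The sketch below assumes the known one-dimensional closed form $D_N^* = \frac{1}{2N} + \max_i |x_{(i)} - \frac{2i-1}{2N}|$ for the sorted points $x_{(1)} \le ... \le x_{(N)}$; this formula is not stated in the text above, and the function name `star_discrepancy_1d` is hypothetical.

```python
import random


def star_discrepancy_1d(points):
    """Star discrepancy D*_N of points in [0, 1] for m = 1.

    Uses the closed form 1/(2N) + max_i |x_(i) - (2i - 1)/(2N)|
    for the sorted points x_(1) <= ... <= x_(N).
    """
    xs = sorted(points)
    n = len(xs)
    return 1.0 / (2 * n) + max(
        abs(x - (2 * i - 1) / (2 * n)) for i, x in enumerate(xs, start=1)
    )


rng = random.Random(0)
for n in (100, 1000, 10000):
    sample = [rng.random() for _ in range(n)]
    print(n, star_discrepancy_1d(sample))
```

For pseudo-random samples the printed values should decay roughly like $N^{-1/2}$, much slower than the $1/(2N)$ attained by equidistant midpoints.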


The discrepancy allows one to find a deterministic bound on the error $\delta_N$ of the Monte Carlo integration,

$$|\delta_N| \le v(f)\,D_N^*; \qquad (2.16)$$

here v(f) is the variation of the function f with $v(f) < \infty$, and the domain of integration is $\mathcal{D} = [0, 1]^m$ [Nie92], [TrW92], [MoC94]. This result is known as Theorem of Koksma and Hlawka. The bound in (2.16) underlines the importance of finding numbers $x_1, ..., x_N$ with small value of the discrepancy $D_N$. After all, a set of N randomly chosen points satisfies

$$E(D_N) = O\left(\sqrt{\frac{\log\log N}{N}}\right).$$

This is in accordance with the $O(N^{-1/2})$ law. The order of magnitude of these numbers is shown in Table 2.1 (third column).

Definition 2.16 (low-discrepancy point sequence)

A sequence of points or numbers $x_1, x_2, ..., x_N, ... \in [0, 1]^m$ is called low-discrepancy sequence if

$$D_N = O\left(\frac{(\log N)^m}{N}\right). \qquad (2.17)$$

Deterministic sequences of numbers satisfying (2.17) are also called quasi-random numbers, although they are fully deterministic. Table 2.1 reports on the orders of magnitude. Since log(N) grows only modestly, a low discrepancy essentially means $D_N \approx O(N^{-1})$ as long as the dimension m is small. The equation (2.17) expresses some dependence on the dimension m, contrary to Monte Carlo methods. But the dependence on m in (2.17) is less stringent than with classical quadrature.
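The entries of Table 2.1 are easily recomputed, which also makes it possible to look at other values of N or higher powers of log N. The following sketch assumes natural logarithms; the function name `convergence_rates` is hypothetical.

```python
import math


def convergence_rates(n):
    """Return the five rate expressions of Table 2.1 for a given N (natural log)."""
    log_n = math.log(n)
    return (
        1.0 / math.sqrt(n),
        math.sqrt(math.log(log_n) / n),
        log_n / n,
        log_n ** 2 / n,
        log_n ** 3 / n,
    )


for k in range(1, 10):
    row = convergence_rates(10 ** k)
    print(f"10^{k}", " ".join(f"{value:.8f}" for value in row))
```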

2.5.2 Examples of Low-Discrepancy Sequences

In the one-dimensional case (m = 1) the point set

$$x_i = \frac{2i - 1}{2N}, \quad i = 1, ..., N \qquad (2.18)$$

has the value $D_N^* = \frac{1}{2N}$; this value can not be improved (Exercise 2.12c). The monotonous sequence (2.18) can be applied only when a reasonable N is known and fixed; for $N \to \infty$ the $x_i$ would be newly placed and an integrand f evaluated again. Since N is large, it is essential that the previously calculated results can be used when N is growing. This means that the points $x_1, x_2, ...$ must be placed "dynamically" so that they are preserved and the fineness improves when N grows. This is achieved by the sequence


$$\frac{1}{2}, \frac{1}{4}, \frac{3}{4}, \frac{1}{8}, \frac{5}{8}, \frac{3}{8}, \frac{7}{8}, \frac{1}{16}, ...$$

This sequence is known as van der Corput sequence. To motivate such a dynamical placing of points imagine that you are searching for some item in the interval [0, 1] (or in the cube $[0, 1]^m$). The searching must be fast and successful, and is terminated as soon as the object is found. This defines N dynamically by the process.

The formula that defines the van der Corput sequence can be formulated as algorithm. Let us study an example, say, $x_6 = \frac{3}{8}$. The index i = 6 is written as binary number

$$6 = (110)_2 =: (d_2\, d_1\, d_0)_2 \quad \text{with } d_i \in \{0, 1\}.$$

Then reverse the binary digits and put the radix point in front of the sequence:

$$(.\,d_0\, d_1\, d_2)_2 = \frac{d_0}{2} + \frac{d_1}{2^2} + \frac{d_2}{2^3} = \frac{1}{2^2} + \frac{1}{2^3} = \frac{3}{8}$$

If this is done for all indices i = 1, 2, 3, ... the van der Corput sequence $x_1, x_2, x_3, ...$ results. These numbers can be defined with the following function:

Definition 2.17 (radical-inverse function)

For i = 1, 2, ... let j be given by the expansion in base b (integer $\ge 2$)

$$i = \sum_{k=0}^{j} d_k b^k,$$

with digits $d_k \in \{0, 1, ..., b - 1\}$, which depend on b, i. Then the radical-inverse function is defined by

$$\phi_b(i) := \sum_{k=0}^{j} d_k b^{-k-1}.$$

The function $\phi_b(i)$ is the digit-reversed fraction of i. This mapping may be seen as reflecting with respect to the radix point. To each index i a rational number $\phi_b(i)$ in the interval 0 < x < 1 is assigned. Every time the number of digits j increases by one, the mesh becomes finer by a factor 1/b. This means that the algorithm fills all mesh points on the sequence of meshes with increasing fineness (→ Exercise 2.13). The above classical van der Corput sequence is obtained by

$$x_i := \phi_2(i).$$
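The radical-inverse function of Definition 2.17 can be sketched as follows. This is one possible formulation, not necessarily the one intended by Exercise 2.13; the function name `radical_inverse` is hypothetical, and exact rational arithmetic via `fractions.Fraction` is a choice made here so that the results can be compared with the fractions listed above.

```python
from fractions import Fraction


def radical_inverse(i, b=2):
    """Digit-reversed fraction of the integer i >= 0 in base b, as exact Fraction."""
    result = Fraction(0)
    weight = Fraction(1, b)  # weight b^(-k-1) of the digit d_k, starting with k = 0
    while i > 0:
        i, digit = divmod(i, b)  # peel off the lowest digit d_k
        result += digit * weight
        weight /= b
    return result


# First eight members of the van der Corput sequence (base 2).
print([str(radical_inverse(i, 2)) for i in range(1, 9)])
# prints ['1/2', '1/4', '3/4', '1/8', '5/8', '3/8', '7/8', '1/16']
```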

The radical-inverse function can be applied to construct points $x_i$ in the m-dimensional cube $[0, 1]^m$. The simplest construction is the Halton sequence.


Definition 2.18 (Halton sequence)

Let $p_1, ..., p_m$ be pairwise prime integers. The Halton sequence is defined as the sequence of vectors

$$x_i := (\phi_{p_1}(i), ..., \phi_{p_m}(i)), \quad i = 1, 2, ...$$

Usually one takes $p_1, ..., p_m$ as the first m prime numbers. Figure 2.9 shows for m = 2 and $p_1 = 2$, $p_2 = 3$ the first 10000 Halton points. Compared to the pseudo-random points of Figure 2.3, the Halton points are distributed more evenly.
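The construction of Halton points can be sketched as follows, here in floating-point arithmetic. The function names `radical_inverse` and `halton` are hypothetical; the default bases 2 and 3 correspond to the setting of Figure 2.9.

```python
def radical_inverse(i, b):
    """Digit-reversed fraction of the integer i >= 0 in base b (floating point)."""
    result = 0.0
    weight = 1.0 / b
    while i > 0:
        i, digit = divmod(i, b)
        result += digit * weight
        weight /= b
    return result


def halton(i, bases=(2, 3)):
    """i-th Halton point (i = 1, 2, ...) for the given pairwise prime bases."""
    return tuple(radical_inverse(i, p) for p in bases)


# The 10000 points of the two-dimensional setting with bases 2 and 3.
points = [halton(i) for i in range(1, 10001)]
print(points[:3])
```

Plotting `points` with any plotting tool should reproduce the even filling of the unit square described above.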

Fig. 2.9. 10000 Halton points from Definition 2.18, with $p_1 = 2$, $p_2 = 3$ (both axes range from 0 to 1)

Halton sequences $x_i$ of Definition 2.18 are easily constructed, but fail to be uniform when the dimension m is high, see Section 5.2 in [Gla04]. Then correlations between the radical-inverse functions for different dimensions are


observed. This problem can be cured with a simple modification of the Halton sequence, namely, by using only every l-th Halton number [KoW97]. The leap l is a prime different from all bases $p_1, ..., p_m$. The result is the "Halton sequence leaped"

$$x_k := (\phi_{p_1}(lk), ..., \phi_{p_m}(lk)), \quad k = 1, 2, ... \qquad (2.19)$$

This modification has shown good performance for dimensions at least up to m = 400. As reported in [KoW97], l = 409 is one example of a good leap value.
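The leaped variant (2.19) differs from the plain Halton sequence only in the index that is fed into the radical-inverse function. A minimal sketch, with hypothetical function names and the leap value 409 taken from the report quoted above:

```python
def radical_inverse(i, b):
    """Digit-reversed fraction of the integer i >= 0 in base b (floating point)."""
    result = 0.0
    weight = 1.0 / b
    while i > 0:
        i, digit = divmod(i, b)
        result += digit * weight
        weight /= b
    return result


def halton_leaped(k, bases, leap=409):
    """k-th point (k = 1, 2, ...) of the Halton sequence leaped, see (2.19).

    The leap is assumed to be a prime different from all bases.
    """
    return tuple(radical_inverse(leap * k, p) for p in bases)


bases = (2, 3, 5, 7, 11)  # first five primes, an example choice
print([halton_leaped(k, bases) for k in range(1, 4)])
```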

Other sequences have been constructed out of the van der Corput sequence. These include the sequences developed by Sobol, Faure and Niederreiter, see [Nie92], [MoC94], [PrTVF92]. All these sequences are of low discrepancy, with

$$N \cdot D_N^* \le C_m (\log N)^m + O\left((\log N)^{m-1}\right).$$

The Table 2.1 shows how fast the relevant terms $(\log N)^m / N$ tend to zero. If m is large, extremely large values of the denominator N are needed before the terms become small. But it is assumed that the bounds are unrealistically large and overestimate the real error. For the Halton sequence in case m = 2 the constant is $C_2 = 0.2602$.

Quasi Monte Carlo (QMC) methods approximate the integrals with the arithmetic mean $\theta_N$ of (2.13), but use low-discrepancy numbers $x_i$ instead of random numbers. QMC is a deterministic method. Practical experience with low-discrepancy sequences is better than might be expected from the bounds known so far. This also holds for the bound (2.16) by Koksma and Hlawka; apparently a large class of functions f satisfy $|\delta_N| \ll v(f)\,D_N^*$, see [SpM94].
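A small experiment may illustrate the difference between QMC and stochastic Monte Carlo. The sketch below is an illustration under stated assumptions: the integrand $x_1 x_2$ on $[0,1]^2$ (exact integral 1/4) is a hypothetical test case, the Halton bases are 2 and 3, and Python's built-in generator supplies the pseudo-random points; all function names are hypothetical.

```python
import random


def radical_inverse(i, b):
    """Digit-reversed fraction of the integer i >= 0 in base b (floating point)."""
    result = 0.0
    weight = 1.0 / b
    while i > 0:
        i, digit = divmod(i, b)
        result += digit * weight
        weight /= b
    return result


def qmc_mean(f, bases, n):
    """Arithmetic mean of f over the first n Halton points (deterministic)."""
    total = 0.0
    for i in range(1, n + 1):
        total += f([radical_inverse(i, p) for p in bases])
    return total / n


def mc_mean(f, m, n, seed=0):
    """Arithmetic mean of f over n pseudo-random points in [0, 1)^m."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        total += f([rng.random() for _ in range(m)])
    return total / n


def integrand(x):
    """Hypothetical test integrand x_1 * x_2 with exact integral 1/4."""
    return x[0] * x[1]


for n in (256, 1024, 4096):
    qmc_err = abs(qmc_mean(integrand, (2, 3), n) - 0.25)
    mc_err = abs(mc_mean(integrand, 2, n, seed=1) - 0.25)
    print(n, qmc_err, mc_err)
```

The QMC column is reproducible without any seed, whereas the Monte Carlo column changes with `seed`; a single run does not prove which method is better for a given N.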

Notes and Comments

on Section 2.1:

The linear congruential method is sometimes called Lehmer generator. Easily

accessible and popular generators are RAN1 and RAN2 from [PrTVF92].

Further references on linear congruential generators are [Mar68], [Rip87],

[Nie92], [LEc99]. Example 2.4 is from [Fis96], and Example 2.5 from [Rip87].

Nonlinear congruential generators are of the form

$$N_i = f(N_{i-1}) \bmod M.$$

Hints on the algorithmic implementation are found in [Gen98]. Generally it is

advisable to run the generator in integer arithmetic in order to avoid rounding

errors that may spoil the period, see [Lehn02]. For Fibonacci generators we


refer to [Bre94]. The version of (2.5) is a subtractive generator. Additive

versions (with a plus sign instead of the minus sign) are used as well [Knu95],

[Gen98]. The codes in [PrTVF92] are recommendable. For simple statistical

tests with illustrations see [Hig04].

There are multiplicative Fibonacci generators of the form

$$N_{i+1} := N_{i-\nu}\, N_{i-\mu} \bmod M.$$

Hints on parallelization are given in [Mas99]. For example, parallel Fibonacci generators are obtained by different initializing sequences. Note that computer systems and software packages often provide built-in random number generators. But often these generators are not clearly specified, and should be handled with care.

on Sections 2.2, 2.3:

The inversion result of Theorem 2.8 can be formulated placing less or no restrictions on F, see [Rip87], p. 59, [Dev86], p. 28, or [Lan99], p. 270. There are numerous other methods to calculate normal and non-normal variates; for a detailed overview with many references see [Dev86]. The Box-Muller approach was suggested in [BoM58]. Marsaglia's modification was published in a report quoted in [MaB64]. Several algorithms are based on the rejection method [Dev86], [Fis96]. Fast algorithms include the ziggurat generator, which works with precomputed tables [MaT00], and the Wallace algorithm [Wal96], which works with a pool of random numbers and suitable transformations. Platform-dependent implementation details place emphasis on the one or the other advantage. A survey on Gaussian random number generators is [ThLLV07]. For simulating Lévy processes, see [ConT04]. For singular symmetric positive semidefinite matrices $\Sigma$ ($x^{tr}\Sigma x \ge 0$ for all x), the Cholesky decomposition can be cured, see [GoV96], or [Gla04].

on Section 2.4:

The bounds on errors of the Monte Carlo integration refer to arbitrary functions f; for smooth functions better bounds can be expected. In the one-dimensional case the variation is defined as the supremum of $\sum_j |f(t_j) - f(t_{j-1})|$ over all partitions, see Section 1.6.2. This definition can be generalized to higher-dimensional cases. A thorough discussion is [Nie78], [Nie92]. An advanced application of Monte Carlo integration uses one or more methods of reduction of variance, which allow one to improve the accuracy in many cases [HaH64], [Rub81], [Nie92], [PrTVF92], [Fis96], [Kwok98], [Lan99]. For example, the integration domain can be split into subsets (stratified sampling) [RiW03]. Another technique is used when for a control variate g with $g \approx f$ the exact integral is known. Then f is replaced by (f − g) + g and Monte Carlo integration is applied to f − g. Another alternative, the method of antithetic variates, will be described in Section 3.5.4 together with the control-variate technique.
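The control-variate idea can be sketched in one dimension. The example is an assumption chosen for illustration: $f(x) = e^x$ on [0, 1] with the control variate $g(x) = 1 + x$, whose exact integral is 3/2; the function names are hypothetical.

```python
import math
import random


def mc_plain(f, n, seed=0):
    """Plain Monte Carlo estimate of the integral of f over [0, 1]."""
    rng = random.Random(seed)
    return sum(f(rng.random()) for _ in range(n)) / n


def mc_control_variate(f, g, g_integral, n, seed=0):
    """Estimate the integral of f over [0, 1] as g_integral + MC estimate of f - g."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n):
        x = rng.random()
        total += f(x) - g(x)  # small values if g is close to f
    return g_integral + total / n


def control(x):
    """Control variate g(x) = 1 + x with exact integral 3/2 on [0, 1]."""
    return 1.0 + x


exact = math.e - 1.0
print(abs(mc_plain(math.exp, 20000) - exact))
print(abs(mc_control_variate(math.exp, control, 1.5, 20000) - exact))
```

Since f − g varies much less than f in this example, the second estimate has a smaller variance for the same N.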


on Section 2.5:

Besides the supremum discrepancy of Definition 2.15 the $L^2$-analogy of an integral version is used. Hints on speed and preliminary comparison are found in [MoC94]. For application on high-dimensional integrals see [PaT95]. For large values of the dimension m, the error (2.17) takes large values, which might suggest discarding its use. But the notion of an effective dimension and practical results give a favorable picture at least for CMO applications of order m = 360 [CaMO97]. The error bound of Koksma and Hlawka (2.16) is not necessarily recommendable for practical use, see the discussion in [SpM94]. The analogy of the equidistant lattice in (2.18) in higher-dimensional space has unfavorable values of the discrepancy, $D_N = O\left(\frac{1}{\sqrt[m]{N}}\right)$. For m > 2 this is worse than Monte Carlo, compare [Rip87]. Monte Carlo does not take advantage of smoothness of integrands. In the case of smooth integrands, sparse-grid approaches are highly competitive. These most refined quadrature methods moderate the curse of the dimension, see [GeG98], [GeG03], [Rei04].

Van der Corput sequences can be based also on other bases. Halton's paper is [Hal60]. Computer programs that generate low-discrepancy numbers are available. For example, Sobol numbers are calculated in [PrTVF92] and Sobol- and Faure numbers in the computer program FINDER [PaT95] and in [Tez95]. At the current state of the art it is open which point set has the smallest discrepancy in the m-dimensional cube. There are generalized Niederreiter sequences, which include Sobol- and Faure sequences as special cases [Tez95]. In several applications deterministic Monte Carlo seems to be superior to stochastic Monte Carlo [PaT96]. A comparison based on finance applications has shown good performance of Sobol numbers; in [Jon11] Sobol numbers are outperformed by Halton sequences leaped (2.19). [NiJ95] and Chapter 5 in [Gla04] provide more discussion and many references.

Besides volume integration, Monte Carlo is needed to integrate over possibly high-dimensional probability distributions. Drawing samples from the required distribution can be done by running a cleverly constructed Markov chain. This kind of method is called Markov Chain Monte Carlo (MCMC). That is, a chain of random variables $X_0, X_1, X_2, ...$ is constructed where for given $X_j$ the next state $X_{j+1}$ does not depend on the history of the chain $X_0, X_1, X_2, ..., X_{j-1}$. By suitable construction criteria, convergence to any chosen target distribution is obtained. For MCMC we refer to the literature, for example to [GiRS96], [Lan99], [Beh00], [Tsay02], [Hag02].


Exercises

Exercise 2.1

Consider the random number generator $N_i = 2N_{i-1} \bmod 11$. For $(N_{i-1}, N_i) \in \{0, 1, ..., 10\}^2$ and integer tuples with $z_0 + 2z_1 = 0 \bmod 11$ the equation

$$z_0 N_{i-1} + z_1 N_i = 0 \bmod 11$$

defines families of parallel straight lines, on which all points $(N_{i-1}, N_i)$ lie. These straight lines are to be analyzed. For which of the families of parallel straight lines are the gaps maximal?

Exercise 2.2 Deficient Random Number Generator

For some time the generator

$$N_i = aN_{i-1} \bmod M, \quad \text{with } a = 2^{16} + 3,\; M = 2^{31}$$

was in wide use. Show for the sequence $U_i := N_i / M$

$$U_{i+2} - 6U_{i+1} + 9U_i \text{ is integer!}$$

What does this imply for the distribution of the triples $(U_i, U_{i+1}, U_{i+2})$ in the unit cube?
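Before attempting the proof, the claim can be checked numerically in exact integer arithmetic. This is only an experiment, not a solution of the exercise; the function names and the seed $N_0 = 1$ are assumptions.

```python
A = 2 ** 16 + 3
M = 2 ** 31


def generator(seed, count):
    """Return N_1, ..., N_count of N_i = A * N_{i-1} mod M, starting from N_0 = seed."""
    values = []
    n = seed
    for _ in range(count):
        n = (A * n) % M
        values.append(n)
    return values


def plane_indices(values):
    """Integers k_i = (N_{i+2} - 6 N_{i+1} + 9 N_i) / M for consecutive triples."""
    indices = []
    for n0, n1, n2 in zip(values, values[1:], values[2:]):
        combo = n2 - 6 * n1 + 9 * n0
        if combo % M != 0:
            raise ValueError("combination is not a multiple of M")
        indices.append(combo // M)
    return indices


ks = plane_indices(generator(seed=1, count=10000))
print(sorted(set(ks)))  # the distinct integer values that occur
```

Working with the integers $N_i$ instead of the floating-point numbers $U_i$ avoids rounding errors in the check.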

Exercise 2.3 Lattice of the Linear Congruential Generator

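a) Show by induction over j

$$N_{i+j} - N_j = a^j (N_i - N_0) \bmod M$$

b) Show for integer $z_0, z_1, ..., z_{m-1}$

$$\begin{pmatrix} N_i \\ N_{i+1} \\ \vdots \\ N_{i+m-1} \end{pmatrix} - \begin{pmatrix} N_0 \\ N_1 \\ \vdots \\ N_{m-1} \end{pmatrix} = (N_i - N_0) \begin{pmatrix} 1 \\ a \\ \vdots \\ a^{m-1} \end{pmatrix} + M \begin{pmatrix} z_0 \\ z_1 \\ \vdots \\ z_{m-1} \end{pmatrix} = \begin{pmatrix} 1 & 0 & \cdots & 0 \\ a & M & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ a^{m-1} & 0 & \cdots & M \end{pmatrix} \begin{pmatrix} z_0 \\ z_1 \\ \vdots \\ z_{m-1} \end{pmatrix}$$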

Exercise 2.4 Coarse Approximation of Normal Deviates

Let $U_1, U_2, ...$ be independent random numbers $\sim \mathcal{U}[0, 1]$, and

$$X_k := \sum_{i=k}^{k+11} U_i - 6.$$

Calculate mean and variance of the $X_k$.


Exercise 2.5 Cauchy-Distributed Random Numbers

A Cauchy-distributed random variable has the density function

$$f_c(x) := \frac{c}{\pi}\,\frac{1}{c^2 + x^2}.$$

Show that its distribution function $F_c$ and its inverse $F_c^{-1}$ are

$$F_c(x) = \frac{1}{\pi}\arctan\frac{x}{c} + \frac{1}{2}, \qquad F_c^{-1}(y) = c\,\tan\left(\pi\left(y - \tfrac{1}{2}\right)\right).$$

How can this be used to generate Cauchy-distributed random numbers out of uniform deviates?
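For experimenting with the stated inverse, the following sketch may serve as a starting point. It applies the inversion method with Python's built-in uniform generator; the function names are hypothetical, and the derivation asked for in the exercise is not replaced by this code.

```python
import math
import random


def cauchy_from_uniform(u, c=1.0):
    """Map u in (0, 1) to a Cauchy variate via the inverse distribution function."""
    return c * math.tan(math.pi * (u - 0.5))


def cauchy_sample(n, c=1.0, seed=0):
    """Draw n Cauchy-distributed numbers by inversion of uniform deviates."""
    rng = random.Random(seed)
    return [cauchy_from_uniform(rng.random(), c) for _ in range(n)]


sample = cauchy_sample(20000, c=2.0)
# About half of the sample should fall into [-c, c], since F_c(c) - F_c(-c) = 1/2.
print(sum(1 for x in sample if abs(x) <= 2.0) / len(sample))
```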

Exercise 2.6 Inverting the Normal Distribution

Suppose F(x) is the standard normal distribution function. Construct a rough approximation G(u) to $F^{-1}(u)$ for $0.5 \le u < 1$ as follows:

a) Construct a rational function G(u) (→ Appendix C1) with correct asymptotic behavior, point symmetry with respect to (u, x) = (0.5, 0), using only one parameter.

b) Fix the parameter by interpolating a given point $(x_1, F(x_1))$.

c) What is a simple criterion for the error of the approximation?

Exercise 2.7 Uniform Distribution

For the uniformly distributed random variables $(V_1, V_2)$ on $[-1, 1]^2$ consider the transformation

$$\begin{pmatrix} X_1 \\ X_2 \end{pmatrix} = \begin{pmatrix} V_1^2 + V_2^2 \\ \frac{1}{2\pi}\arg((V_1, V_2)) \end{pmatrix}$$

where $\arg((V_1, V_2))$ denotes the corresponding angle. Show that $(X_1, X_2)$ is distributed uniformly.

Exercise 2.8 Programming Assignment: Normal Deviates

a) Write a computer program that implements the Fibonacci generator

$$U_i := U_{i-17} - U_{i-5}$$
$$U_i := U_i + 1 \quad \text{in case } U_i < 0$$

in the form of Algorithm 2.7.

Tests: Visual inspection of 10000 points in the unit square.

b) Write a computer program that implements Marsaglia's Polar Algorithm (Algorithm 2.13). Use the uniform deviates from a).

Tests:

1.) For a sample of 5000 points calculate estimates of mean and variance.


2.) For the discretized SDE

$$\Delta x = 0.1\,\Delta t + Z\sqrt{\Delta t}, \quad Z \sim \mathcal{N}(0, 1)$$

calculate some trajectories for $0 \le t \le 1$, $\Delta t = 0.01$, $x_0 = 0$.

Exercise 2.9 Correlated Distributions

Suppose we need a two-dimensional random variable $(X_1, X_2)$ that must be normally distributed with mean 0, and given variances $\sigma_1^2$, $\sigma_2^2$ and prespecified correlation $\rho$. How is $X_1, X_2$ obtained out of $Z_1, Z_2 \sim \mathcal{N}(0, 1)$?

Exercise 2.10 Error of the Monte Carlo Integration

The domain for integration is $Q = [0, 1]^m$. For

$$\theta_N := \frac{1}{N}\sum_{i=1}^{N} f(x_i), \qquad E(f) := \int f\,dx, \qquad g := f - E(f)$$

and $\sigma^2(f)$ from (2.14b) show

a) $E(g) = 0$

b) $\sigma^2(g) = \sigma^2(f)$

c) $\sigma^2(\delta_N) = E(\delta_N^2) = \frac{1}{N^2}\int \left(\sum g(x_i)\right)^2 dx = \frac{1}{N}\sigma^2(f)$

Hint on (c): When the random points $x_i$ are i.i.d. (independent identical distributed), then also $f(x_i)$ and $g(x_i)$ are i.i.d. A consequence is $\int g(x_i)g(x_j)\,dx = 0$ for $i \ne j$.

Exercise 2.11 Experiment on Monte Carlo Integration

To approximate the integral

$$\int_0^1 f(x)\,dx$$

calculate a Monte Carlo sum

$$\frac{1}{N}\sum_{i=1}^{N} f(x_i)$$

for $f(x) = 5x^4$ and, for example, N = 100000 random numbers $x_i \sim \mathcal{U}[0, 1]$. The absolute error behaves like $cN^{-1/2}$. Compare the approximation with the exact integral for several N and seeds to obtain an estimate of c.

Exercise 2.12 Bounds on the Discrepancy

(Compare Definition 2.15) Show

a) $0 \le D_N \le 1$,

b) $D_N^* \le D_N \le 2^m D_N^*$ (show this at least for $m \le 2$),

c) $D_N^* \ge \frac{1}{2N}$ for m = 1.


Exercise 2.13 Algorithm for the Radical-Inverse Function

Use the idea

$$i = \left(d_k b^{k-1} + ... + d_1\right) b + d_0$$

to formulate an algorithm that obtains $d_0, d_1, ..., d_k$ by repeated division by b. Reformulate $\phi_b(i)$ from Definition 2.17 into the form

$$\phi_b(i) = z / b^{j+1}$$

such that the result is represented as rational number. The numerator z should be calculated in the same loop that establishes the digits $d_0, ..., d_k$.

Exercise 2.14 Testing the Distribution

Let X be a random variate with density f and let $a_1 < a_2 < ... < a_l$ define a partition of the support of f into subintervals, including the unbounded intervals $x < a_1$ and $x > a_l$. Recall from (B1.1), (B1.2) that the probability of a realization of X falling into $a_k \le x < a_{k+1}$ is given by

$$p_k := \int_{a_k}^{a_{k+1}} f(x)\,dx, \quad k = 1, 2, ..., l - 1,$$

which can be approximated by $(a_{k+1} - a_k)\, f\left(\frac{a_k + a_{k+1}}{2}\right)$. Perform a sample of j realizations $x_1, ..., x_j$ of a random number generator, and denote $j_k$ the number of samples falling into $a_k \le x < a_{k+1}$. For normal variates with density f from (B1.9) design an algorithm that performs a simple statistical test of the quality of the $x_1, ..., x_j$.

Hints: See Section 2.1 for the special case of uniform variates. Argue for what choices of $a_1$ and $a_l$ the probabilities $p_0$ and $p_l$ may be neglected. Think about a reasonable relation between l and j.

Exercise 2.15 Quality of Fibonacci-Generated Numbers

Analyze and visualize the planes in the unit cube, on which all points fall that are generated by the Fibonacci recursion

$$U_{i+1} := (U_i + U_{i-1}) \bmod 1.$$

Exercise 2.16

Use the inversion method and uniformly distributed $U \sim \mathcal{U}[0, 1]$ to calculate a stochastic variable X with distribution

$$F(x) = 1 - e^{-2x(x-a)}, \quad x \ge a.$$

Exercise 2.17 Time-Changed Wiener Process

For a time-changing function $\tau(t)$ set $\tau_j := \tau(j\,\Delta t)$ for some time increment $\Delta t$.

a) Argue why Algorithm 1.8 changes to $W_{\tau_j} = W_{\tau_{j-1}} + Z\sqrt{\tau_j - \tau_{j-1}}$ (last line).


110

120

130

140

150

160

170

180

34

36

38

40

42

44

46

48

50

52

60

70

80

90

100

110

S1

S2

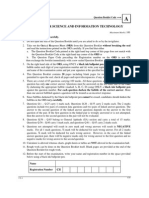

Fig. 2.10. Prices of the DAX assets Allianz (S1), BMW (S2), and HeidelbergCe-

ment; 500 trading days from Nov 5, 2005; eigenvalues of the covariance matrix are

400.8, 25.8, 2.73; eigenvectors centered at the mean point and scaled by

are

shown, and the plane spanned by v

(1)

, v

(2)

.

b) Let $\tau_j$ be the exponentially distributed jump instances of a Poisson experiment, see Section 1.9 and Property 1.20e. How should the jump intensity $\lambda$ be chosen such that the expectation of the $\Delta\tau$ is $\Delta t$? Implement and test the algorithm, and visualize the results. Experiment with several values of the jump intensity $\lambda$.

Exercise 2.18 Spectral Decomposition of a Covariance Matrix

For symmetric positive definite $n \times n$ matrices $\Sigma$ there exists a set of orthonormal eigenvectors $v^{(1)}, ..., v^{(n)}$ and eigenvalues $\lambda_1 \ge ... \ge \lambda_n > 0$ such that

$$\Sigma v^{(j)} = \lambda_j v^{(j)}, \quad j = 1, ..., n.$$

Arrange the n eigenvector columns into the $n \times n$ matrix $B := (v^{(1)}, ..., v^{(n)})$, and the eigenvalues into the diagonal matrices $\Lambda := \mathrm{diag}(\lambda_1, ..., \lambda_n)$ and $\Lambda^{1/2} := \mathrm{diag}(\sqrt{\lambda_1}, ..., \sqrt{\lambda_n})$.

a) Show $\Sigma B = B\Lambda$.

b) Show that

$$A := B\Lambda^{1/2}$$


factorizes $\Sigma$ in the sense $\Sigma = AA^{tr}$.

c) Show

$$AZ = \sum_{j=1}^{n} \sqrt{\lambda_j}\, Z_j\, v^{(j)}$$

d) And the reversal of Section 2.3.3 holds: For a random vector $X \sim \mathcal{N}(0, \Sigma)$ the transformed random vector $A^{-1}X$ has uncorrelated components: Show $\mathrm{Cov}(A^{-1}X) = I$ and $\mathrm{Cov}(B^{-1}X) = \Lambda$.

e) For the $2 \times 2$ matrix

$$\Sigma = \begin{pmatrix} 5 & 1 \\ 1 & 10 \end{pmatrix}$$

calculate the Cholesky decomposition and $B\Lambda^{1/2}$.

Hint: The above is the essence of the principal component analysis. Here $\Sigma$ represents a covariance matrix or a correlation matrix. (For an example see Figure 2.10.) The matrix B and the eigenvalues in $\Lambda$ reveal the structure of the data. B defines a linear transformation of the data to a rectangular coordinate system, and the eigenvalues $\lambda_j$ measure the corresponding variances. In case $\lambda_{k+1} \ll \lambda_k$ for some index k, the sum in c) can be truncated after the kth term in order to reduce the dimension. The computation of B and $\Lambda$ (and hence A) is costly, but a dominating $\lambda_1$ allows for a simple approximation of $v^{(1)}$ by the power method.
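The power method mentioned in the hint can be sketched in a few lines. This is a minimal illustration, assuming a symmetric matrix with a dominating eigenvalue and a start vector that is not orthogonal to $v^{(1)}$; the function name `power_method` and the fixed iteration count are hypothetical choices.

```python
import math


def power_method(matrix, iterations=200):
    """Approximate the dominant eigenpair of a symmetric matrix by power iteration."""
    n = len(matrix)
    v = [1.0] * n  # start vector; assumed not orthogonal to v^(1)
    for _ in range(iterations):
        w = [sum(matrix[r][c] * v[c] for c in range(n)) for r in range(n)]
        norm = math.sqrt(sum(t * t for t in w))
        v = [t / norm for t in w]  # normalize to unit length
    # Rayleigh quotient v^T Sigma v (v has unit length)
    sv = [sum(matrix[r][c] * v[c] for c in range(n)) for r in range(n)]
    lam = sum(v[r] * sv[r] for r in range(n))
    return lam, v


sigma = [[5.0, 1.0], [1.0, 10.0]]  # the matrix of part e)
lam1, v1 = power_method(sigma)
print(lam1, v1)
```

The convergence speed depends on the ratio $\lambda_2 / \lambda_1$; the closer this ratio is to zero, the fewer iterations are needed.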


http://www.springer.com/978-1-4471-2992-9
