Technology Bytes

"Any sufficiently advanced technology is indistinguishable from magic."
Arthur C. Clarke, "Technology and the Future," in Report on Planet Three and Other Speculations (Harper & Row, 1972)

Although most of the examples and exercises in this book can be done without the aid of technology, there are some that are intended to be done with a matrix-capable calculator or a computer algebra system. These are clearly marked with the symbol CAS. If you have access to such technology, it is also useful for checking hand calculations and for doing computations once you have mastered the basic techniques. In this section, you will see what selected examples from each chapter look like when done using the computer algebra systems Maple, Mathematica, and MATLAB. By imitating these examples (and making use of the extensive built-in help facilities of each of the systems), you should be able to exploit the power of these systems to help you with the exercises throughout this book.

Maple

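In order to use Maple to do linear algebra, you must first load the linear algebra package. The commands used in this section (vector, evalm, dotprod, linsolve, and so on) belong to the linalg package, which is loaded with the command

> with(linalg):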

The package must be loaded for each new Maple session and each new worksheet.

Chapter 1: Vectors

A calculation that results in a vector or matrix must be performed using the command evalm. Observe that ending an input statement with a colon suppresses the output, while ending an input statement with a semicolon displays the output.

Example 1.6

> u:=vector([1,0,-1]):
> v:=vector([2,-3,1]):
> w:=vector([5,-4,0]):
> evalm(3*u+2*v-w);

[2, -2, -1]

Example 1.15

> u:=vector([1,2,-3]): v:=vector([-3,5,2]):
> dotprod(u,v);

1

Appendix E

If v is a vector, the Maple command norm(v,2) gives its (Euclidean) length.

Example 1.17

> norm(vector([2,3]),2);

$\sqrt{13}$

The cross product of vectors in $\mathbb{R}^3$ is computed using the command crossprod.

> u:=vector([1,2,3]): v:=vector([-1,1,5]):
> crossprod(u,v);

[7, -8, 3]

All linear algebra commands can be performed symbolically as well as numerically.

> u:=vector([u1,u2,u3]): v:=vector([v1,v2,v3]):
> evalm(u+3*v);

[u1 + 3 v1, u2 + 3 v2, u3 + 3 v3]

> dotprod(u,v);

$u1\,\overline{(v1)} + u2\,\overline{(v2)} + u3\,\overline{(v3)}$

What are the bars? Maple is assuming that the vectors may have entries that are complex numbers, in which case this is the version of the dot product that is needed. (See Exploration: Vectors and Matrices with Complex Entries in Chapter 7.) Use the following command to obtain the real dot product symbolically:

> dotprod(u,v,'orthogonal');

u1 v1 + u2 v2 + u3 v3

> norm(u,2);

$\sqrt{u1^2 + u2^2 + u3^2}$

> crossprod(u,v);

[u2 v3 - u3 v2, u3 v1 - u1 v3, u1 v2 - u2 v1]

Maple can also perform modular arithmetic.

Example 1.12

> 3548 mod 3;

2

Example 1.14

> u:=vector([2,2,0,1,2]): v:=vector([1,2,2,2,1]):
> dotprod(u,v) mod 3;

1

Example 1.40

> c:=vector([3,1,3,1,3,1,3,1,3,1,3,1]):
> v:=vector([0,7,4,9,2,7,0,2,0,9,4,6]):
> dotprod(c,v) mod 10;

0

To perform modular arithmetic calculations where the result is a vector or matrix, you need to first define a procedure called matmod.

> matmod:=proc(A,p):
> map(`mod`,evalm(A),p):
> end;

matmod := proc(A, p) map(`mod`, evalm(A), p) end proc

> u:=vector([2,2,0,1,2]): v:=vector([1,2,2,2,1]):
> matmod(u+v,3);

[0, 1, 2, 0, 0]
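If no computer algebra system is at hand, the Chapter 1 results can be cross-checked with a few lines of plain Python. This is only a minimal sketch: the helper names dot, cross, and vec_mod are illustrative and do not come from the text or from any of the three systems.

```python
# Cross-check of the Chapter 1 examples using plain Python lists.
# dot, cross, and vec_mod are illustrative helper names (assumptions).

def dot(u, v):
    """Real dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def cross(u, v):
    """Cross product of two vectors in R^3."""
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]

def vec_mod(v, p):
    """Reduce every entry of v modulo p (the role matmod plays in Maple)."""
    return [a % p for a in v]

u, v, w = [1, 0, -1], [2, -3, 1], [5, -4, 0]
combo = [3 * a + 2 * b - c for a, b, c in zip(u, v, w)]    # Example 1.6
print(combo)
print(dot([1, 2, -3], [-3, 5, 2]))                          # Example 1.15
print(cross([1, 2, 3], [-1, 1, 5]))
print(dot([2, 2, 0, 1, 2], [1, 2, 2, 2, 1]) % 3)            # Example 1.14
print(vec_mod([a + b for a, b in zip([2, 2, 0, 1, 2], [1, 2, 2, 2, 1])], 3))
```

The printed values should agree with the Maple outputs shown above.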

Chapter 2: Systems of Linear Equations

Example 2.6

> A:=matrix(3,3,[1,-1,-1,3,-3,2,2,-1,1]);

     [1  -1  -1]
A := [3  -3   2]
     [2  -1   1]

> b:=vector([2,16,9]);

b := [2, 16, 9]

> linsolve(A,b);

[3, -1, 2]

Elementary row operations can be performed individually. To construct an augmented matrix, use the augment command.

> Ab:=augment(A,b);

      [1  -1  -1   2]
Ab := [3  -3   2  16]
      [2  -1   1   9]

> addrow(Ab,1,2,-3);

[1  -1  -1   2]
[0   0   5  10]
[2  -1   1   9]

This command adds -3 times row 1 to row 2 (putting the result in row 2).

> swaprow(%,2,3);

[1  -1  -1   2]
[2  -1   1   9]
[0   0   5  10]

This command swaps rows 2 and 3 of the previous output (denoted by %).

> mulrow(%,3,1/5);

[1  -1  -1  2]
[2  -1   1  9]
[0   0   1  2]

This command multiplies row 3 of the last result by 1/5.

There are two other commands that can be useful in solving a linear system directly: rref and gaussjord.

Examples 2.11 and 2.13

> A:=matrix(3,4,[1,-1,-1,2,2,-2,-1,3,-1,1,-1,0]):
> b:=vector([1,3,-3]):
> Ab:=augment(A,b);

      [ 1  -1  -1  2   1]
Ab := [ 2  -2  -1  3   3]
      [-1   1  -1  0  -3]

> rref(Ab);

[1  -1  0   1  2]
[0   0  1  -1  1]
[0   0  0   0  0]

> gaussjord(Ab);

[1  -1  0   1  2]
[0   0  1  -1  1]
[0   0  0   0  0]

Applying linsolve to this example produces the following:

> linsolve(A,b);

[_t2, _t2 - 3 + _t1, _t1, -1 + _t1]

Although this solution looks different from the solution to Example 2.11, the substitutions

s = _t2 - 3 + _t1 and t = -1 + _t1

show that they are equivalent.

You can combine the procedure matmod with the above techniques to handle systems over $\mathbb{Z}_p$.

Example 2.16

> A:=matrix(3,3,[1,2,1,1,0,1,0,1,2]): b:=vector([0,2,1]):
> Ab:=augment(A,b);

      [1  2  1  0]
Ab := [1  0  1  2]
      [0  1  2  1]

> matmod(rref(Ab),3);

[1  0  0  1]
[0  1  0  2]
[0  0  1  1]

> matmod(linsolve(A,b),3);

[1, 2, 1]
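Example 2.16 can also be carried out with every step done directly modulo p, rather than by reducing a rational answer afterward. The following Python sketch performs Gauss-Jordan elimination over $\mathbb{Z}_p$ for a prime p. The function name rref_mod is an assumption made for illustration, and the modular inverse via pow(x, -1, p) requires Python 3.8 or later.

```python
def rref_mod(M, p):
    """Reduced row echelon form of M over Z_p, assuming p is prime."""
    A = [[x % p for x in row] for row in M]
    rows, cols = len(A), len(A[0])
    r = 0  # index of the next pivot row
    for c in range(cols):
        # Find a row at or below r with a nonzero entry in column c.
        pivot = next((i for i in range(r, rows) if A[i][c] != 0), None)
        if pivot is None:
            continue
        A[r], A[pivot] = A[pivot], A[r]
        inv = pow(A[r][c], -1, p)            # multiplicative inverse mod p
        A[r] = [(x * inv) % p for x in A[r]]
        # Clear the rest of column c.
        for i in range(rows):
            if i != r and A[i][c] != 0:
                f = A[i][c]
                A[i] = [(x - f * y) % p for x, y in zip(A[i], A[r])]
        r += 1
        if r == rows:
            break
    return A

Ab = [[1, 2, 1, 0], [1, 0, 1, 2], [0, 1, 2, 1]]   # augmented matrix of Example 2.16
R = rref_mod(Ab, 3)
for row in R:
    print(row)
```

The last column of the result holds the solution [1, 2, 1], in agreement with the Maple output.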

Chapter 3: Matrices

All of the basic matrix operations are built into Maple, as the following examples illustrate.

> A:=matrix(2,2,[2,-1,3,4]); B:=matrix(2,2,[1,2,0,1]);

A := [2  -1]
     [3   4]

B := [1  2]
     [0  1]

> evalm(A-2*B);

[0  -5]
[3   2]

To evaluate the matrix $A^2$, use the command

> evalm(A^2);

[ 1  -6]
[18  13]

Use the command &* for matrix multiplication.

> evalm(A&*B);

[2   3]
[3  10]

> transpose(A);

[ 2  3]
[-1  4]

> inverse(A);

[ 4/11  1/11]
[-3/11  2/11]

> det(A);

11

Like vector operations, matrix operations can be performed symbolically in Maple.

> A:=matrix(2,2,[a,b,c,d]);

A := [a  b]
     [c  d]

> evalm(A^2);

[a^2 + bc   ab + bd ]
[ca + dc    bc + d^2]

> det(A);

ad - bc

> inverse(A);

[ d/(ad - bc)  -b/(ad - bc)]
[-c/(ad - bc)   a/(ad - bc)]

The matmod procedure, as defined for the examples in Chapter 1, is used for matrix calculations involving modular arithmetic.

> A:=matrix(2,2,[2,-1,3,4]):
> A3:=matmod(A,3);

A3 := [2  2]
      [0  1]

> matmod(inverse(A3),3);

[2  2]
[0  1]

So the matrix A3 is its own inverse modulo 3.

Another built-in function of Maple will determine the rank of a matrix:

> rank(A);

2

Maple can produce bases for the row, column, and null spaces of a matrix.

Examples 3.45, 3.47, and 3.48

> A:=matrix(4,5,[1,1,3,1,6,2,-1,0,1,-1,-3,2,1,-2,1,4,1,6,1,3]):
> rowspace(A);

{[0, 0, 0, 1, 4], [0, 1, 2, 0, 3], [1, 0, 1, 0, -1]}

> colspace(A);

{[1, 0, 0, 1], [0, 1, 0, 6], [0, 0, 1, 3]}

> nullspace(A);

{[0, -5, 1, -4, 1], [1, -3, 0, -4, 1]}

The bases given above for the column and null spaces of A are not the same as in the text. Can you show that they are equivalent?

You can define linear transformations in Maple.

Examples 3.55 and 3.60

> T:=(x,y)->[x,2*x-y,3*x+4*y]:
> T(1,-1);

[1, 3, -1]

> S:=(u,v,w)->[2*u+w,3*v-w,u-v,u+v+w]:
> T(x,y);

[x, 2 x - y, 3 x + 4 y]

To compute S(T(x,y)), you have to pick out the components of the previous output. These are known to Maple as %[1], %[2], and %[3].

> S(%[1],%[2],%[3]);

[5 x + 4 y, 3 x - 7 y, -x + y, 6 x + 3 y]

Maple has a built-in command for the LU factorization of a matrix.

Example 3.33

> A:=matrix(3,3,[2,1,3,4,-1,3,-2,5,5]);

     [ 2   1  3]
A := [ 4  -1  3]
     [-2   5  5]

> LUdecomp(A,L='L',U='U'):
> L:=evalm(L); U:=evalm(U);

     [ 1   0  0]
L := [ 2   1  0]
     [-1  -2  1]

     [2   1   3]
U := [0  -3  -3]
     [0   0   2]

To check that this result is correct, compute

> evalm(L&*U);

[ 2   1  3]
[ 4  -1  3]
[-2   5  5]

which is A, as expected.
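To see what an LU routine does internally, the factorization of Example 3.33 can be reproduced by Gaussian elimination without row interchanges. The Python sketch below uses exact fractions. The function names lu_no_pivot and matmul are illustrative assumptions, and the routine simply stops if it meets a zero pivot, which is the case where a row interchange (and hence a permutation matrix) would be needed.

```python
from fractions import Fraction

def lu_no_pivot(A):
    """Return (L, U) with A = LU, L unit lower triangular, U upper triangular.

    Uses Gaussian elimination with no row interchanges, so it fails
    on a zero pivot instead of permuting rows.
    """
    n = len(A)
    U = [[Fraction(x) for x in row] for row in A]
    L = [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]
    for k in range(n):
        if U[k][k] == 0:
            raise ZeroDivisionError("zero pivot: a row interchange would be needed")
        for i in range(k + 1, n):
            m = U[i][k] / U[k][k]          # multiplier, stored in L
            L[i][k] = m
            U[i] = [a - m * b for a, b in zip(U[i], U[k])]
    return L, U

def matmul(A, B):
    """Matrix product of two lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

A = [[2, 1, 3], [4, -1, 3], [-2, 5, 5]]   # matrix of Example 3.33
L, U = lu_no_pivot(A)
print(L)
print(U)
print(matmul(L, U) == A)
```

The multipliers recorded in L are exactly the entries below the diagonal in the Maple output.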

Chapter 4: Eigenvalues and Eigenvectors

Eigenvalues, eigenvectors, and the characteristic polynomial are all included in the linalg package.

Example 4.18

> A:=matrix(3,3,[0,1,0,0,0,1,2,-5,4]) :

The characteristic polynomial of A can be produced using the command

> c:=charpoly(A,lambda);

c := λ^3 - 4 λ^2 + 5 λ - 2

(Replacing lambda by x would give the characteristic polynomial as a function of x.) You can now factor this polynomial to find its zeros (the eigenvalues of A):

> factor(c);

(λ - 2)(λ - 1)^2

Or, you can solve the characteristic equation directly, if you like:

> solve(c=0,lambda);

2, 1, 1

If all you require are the eigenvalues of A, you can use

> eigenvals(A);

2, 1, 1

The command eigenvects gives the eigenvalues of a matrix, their algebraic multiplicities, and a basis for each corresponding eigenspace.

> eigenvects(A);

[1, 2, {[1, 1, 1]}], [2, 1, {[1, 2, 4]}]

For a diagonalizable matrix A, you can find a matrix P such that $P^{-1}AP$ is diagonal.

Example 4.26

> A:=matrix(3,3,[-1,0,1,3,0,-3,1,0,-1]):
> eig:=eigenvects(A);

eig := [0, 2, {[0, 1, 0], [1, 0, 1]}], [-2, 1, {[-1, 3, 1]}]

Now you need to extract the three eigenvectors.

> p1:=eig[1,3,1];

p1 := [0, 1, 0]

This command tells Maple that the first vector you want is inside the first set of brackets, in the third entry (which is {[0, 1, 0], [1, 0, 1]}), and is the first vector in this set. The commands for the second and third eigenvectors are similar:

> p2:=eig[1,3,2]; p3:=eig[2,3,1];

p2 := [1, 0, 1]
p3 := [-1, 3, 1]

Next, you build the matrix P with these three eigenvectors as its columns:

> P:=augment(p1,p2,p3);

     [0  1  -1]
P := [1  0   3]
     [0  1   1]

To check that this matrix is correct, compute

> evalm(inverse(P)&*A&*P);

[0  0   0]
[0  0   0]
[0  0  -2]

which is, as expected, a diagonal matrix displaying the eigenvalues of A.

When approximating eigenvalues (or other numerical results) in Maple, use the floating-point evaluation command, evalf.

> A:=matrix(2,2,[1,2,3,4]):
> eigenvals(A);

5/2 + (1/2)√33, 5/2 - (1/2)√33

> evalf(eigenvals(A));

5.372281323, -.372281323

Maple can also handle matrix exponentials.

Example 4.47

> A:=matrix(2,2,[1,2,3,2]):
> exponential(A);

[(3/5) e^(-1) + (2/5) e^4    (2/5) e^4 - (2/5) e^(-1)]
[(3/5) e^4 - (3/5) e^(-1)    (2/5) e^(-1) + (3/5) e^4]

Chapter 5: Orthogonality

Maple includes the Gram-Schmidt Process as a built-in procedure. It can be used to convert a basis for a subspace of $\mathbb{R}^n$ into an orthogonal or an orthonormal basis for that subspace.

Example 5.13

> x1:=vector([1,-1,-1,1]):
> x2:=vector([2,1,0,1]):
> x3:=vector([2,2,1,2]):
> GramSchmidt([x1,x2,x3]);

[[1, -1, -1, 1], [3/2, 3/2, 1/2, 1/2], [-1/2, 0, 1/2, 1]]

To produce an orthonormal basis, add the option normalized.

> GramSchmidt([x1,x2,x3], normalized);

[[1/2, -1/2, -1/2, 1/2], [(3/10)√5, (3/10)√5, (1/10)√5, (1/10)√5], [-(1/6)√3√2, 0, (1/6)√3√2, (1/3)√3√2]]

The QR factorization of a matrix is performed in Maple using the command QRdecomp, which returns the upper triangular matrix R. To obtain the orthogonal matrix Q, you must instruct Maple to produce it. The option fullspan=false ensures that the dimensions of Q agree with those of A and that R is a square matrix.

Example 5.15

> A:=matrix(4,3,[1,2,2,-1,1,2,-1,0,1,1,1,2]):
> R:=QRdecomp(A, Q='Q', fullspan=false);

     [2   1    1/2     ]
R := [0   √5   (3/2)√5 ]
     [0   0    (1/2)√6 ]

> Q:=evalm(Q);

     [ 1/2   (3/10)√5   -(1/6)√6]
Q := [-1/2   (3/10)√5    0      ]
     [-1/2   (1/10)√5    (1/6)√6]
     [ 1/2   (1/10)√5    (1/3)√6]

You can check that this result is correct by verifying that A = QR:

> evalm(Q&*R);

[ 1  2  2]
[-1  1  2]
[-1  0  1]
[ 1  1  2]
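The orthogonal basis of Example 5.13, whose normalized vectors form the columns of Q in Example 5.15, can be reproduced exactly with a short Python sketch of the classical Gram-Schmidt Process. The names dot and gram_schmidt are illustrative assumptions, and the input vectors are assumed to be linearly independent (otherwise a zero vector would appear and the division would fail).

```python
from fractions import Fraction

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors):
    """Classical Gram-Schmidt without normalization, in exact arithmetic."""
    basis = []
    for x in vectors:
        x = [Fraction(a) for a in x]
        v = list(x)
        for b in basis:
            coeff = dot(x, b) / dot(b, b)   # projection coefficient of x onto b
            v = [vi - coeff * bi for vi, bi in zip(v, b)]
        basis.append(v)
    return basis

x1, x2, x3 = [1, -1, -1, 1], [2, 1, 0, 1], [2, 2, 1, 2]
basis = gram_schmidt([x1, x2, x3])
for v in basis:
    print([str(a) for a in v], "squared length:", dot(v, v))
```

The squared lengths 4, 5, and 3/2 are the squares of the diagonal entries 2, √5, and (1/2)√6 of R.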

Chapter 6: Vector Spaces

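You can adapt Maple's matrix commands for use in finding bases for finite-dimensional vector spaces or testing vectors for linear independence. The key is to use the isomorphism between an n-dimensional vector space and $\mathbb{R}^n$.

Example 6.26

To test the set $\{1 + x,\ x + x^2,\ 1 + x^2\}$ for linear independence in $\mathscr{P}_2$, use the standard isomorphism of $\mathscr{P}_2$ with $\mathbb{R}^3$. This gives the correspondence

$$1 + x \leftrightarrow \begin{bmatrix} 1 \\ 1 \\ 0 \end{bmatrix}, \quad x + x^2 \leftrightarrow \begin{bmatrix} 0 \\ 1 \\ 1 \end{bmatrix}, \quad 1 + x^2 \leftrightarrow \begin{bmatrix} 1 \\ 0 \\ 1 \end{bmatrix}$$

Now build a matrix with these vectors as its columns:

> A:=matrix(3,3,[1,0,1,1,1,0,0,1,1]);

     [1  0  1]
A := [1  1  0]
     [0  1  1]

> rank(A);

3

Since rank(A) = 3, the columns of A are linearly independent. Hence, the set $\{1 + x,\ x + x^2,\ 1 + x^2\}$ is also linearly independent, by Theorem 6.7.

Example 6.44

Extend the set $\{1 + x,\ 1 - x\}$ to $S = \{1 + x,\ 1 - x,\ 1,\ x,\ x^2\}$ and use the standard isomorphism of $\mathscr{P}_2$ with $\mathbb{R}^3$ to obtain

$$1 + x \leftrightarrow \begin{bmatrix} 1 \\ 1 \\ 0 \end{bmatrix}, \quad 1 - x \leftrightarrow \begin{bmatrix} 1 \\ -1 \\ 0 \end{bmatrix}, \quad 1 \leftrightarrow \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}, \quad x \leftrightarrow \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}, \quad x^2 \leftrightarrow \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix}$$

Form a matrix with these vectors as its columns and find its reduced echelon form: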

> A:=matrix(3,5,[1,1,1,0,0,1,-1,0,1,0,0,0,0,0,1]);

     [1   1  1  0  0]
A := [1  -1  0  1  0]
     [0   0  0  0  1]

> rref(A);

[1  0  1/2   1/2  0]
[0  1  1/2  -1/2  0]
[0  0   0     0   1]

Since the leading 1s are in columns 1, 2, and 5, these are the vectors to use for a basis. Accordingly, $\{1 + x,\ 1 - x,\ x^2\}$ is a basis for $\mathscr{P}_2$ that contains $1 + x$ and $1 - x$.

Many questions about a linear transformation between finite-dimensional vector spaces can be handled by using the matrix of the linear transformation.

Example 6.68

The matrix of

$$T\begin{bmatrix} a & b \\ b & c \end{bmatrix} = (a - b) + (b - c)x + (c - a)x^2$$

with respect to the basis

$$\mathcal{B} = \left\{ \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix}, \begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix}, \begin{bmatrix} 0 & 0 \\ 0 & 1 \end{bmatrix} \right\}$$

of W and the standard basis $\mathcal{C} = \{1, x, x^2\}$ of $\mathscr{P}_2$ is

$$A = \begin{bmatrix} 1 & -1 & 0 \\ 0 & 1 & -1 \\ -1 & 0 & 1 \end{bmatrix}$$

(Check this.) By Exercises 40 and 41 in Section 6.6, the rank and nullity of T are the same as those of A.

> A:=matrix(3,3,[1,-1,0,0,1,-1,-1,0,1]):
> rank(A);

2

It now follows from the Rank Theorem that nullity(T) = 3 - rank(T) = 3 - 2 = 1. If you want to construct bases for the kernel and range of T, you can proceed as follows:

> nullspace(A);

{[1, 1, 1]}

This is the coordinate vector of a matrix with respect to the basis $\mathcal{B}$ of W. Hence, the matrix is

$$1 \cdot \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix} + 1 \cdot \begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix} + 1 \cdot \begin{bmatrix} 0 & 0 \\ 0 & 1 \end{bmatrix} = \begin{bmatrix} 1 & 1 \\ 1 & 1 \end{bmatrix}$$

so a basis for ker(T) is $\left\{ \begin{bmatrix} 1 & 1 \\ 1 & 1 \end{bmatrix} \right\}$.

> colspace(A);

{[0, 1, -1], [1, 0, -1]}

This time you have coordinate vectors with respect to the basis $\mathcal{C}$ of $\mathscr{P}_2$, so a basis for range(T) is $\{x - x^2,\ 1 - x^2\}$.

Chapter 7: Distance and Approximation

Computations involving the inner product $\langle f, g \rangle = \int_a^b f(x)g(x)\,dx$ in $\mathscr{C}[a, b]$ are easy to do in Maple.

Example 7.6

> f(x):=x: g(x):=3*x-2:
> int(f(x)^2, x=0..1);

1/3

> int((f(x)-g(x))^2, x=0..1);

4/3

> int(f(x)*g(x),x=0..1);

0

The various norms discussed in Section 7.2 are built into Maple. The format of the command is norm(v,n), where v is a vector and n is a positive integer or the word infinity. Use n=1 for the sum norm, n=2 for the Euclidean norm, and n=infinity for the max norm.

> v:=vector([1,-2,5]):
> norm(v,1);

8

> norm(v,2);

$\sqrt{30}$

> norm(v,infinity);

5

If the command norm is applied to a matrix, you get the induced operator norm.

Example 7.19

> A:=matrix(3,3,[1,-3,2,4,-1,-2,-5,1,3]):
> norm(A,1);

10

> norm(A,infinity);

9

The condition number of a square matrix A can be obtained with the command cond(A,n), where n is one of 1, 2, infinity, or frobenius.

Example 7.21

> A:=matrix(2,2,[1,1,1,1.0005]):
> cond(A,1); cond(A,2); cond(A,infinity); cond(A,frobenius);

8004.000500
8002.000373
8004.000500
8002.000501

Maple can perform least squares approximation in one step.

Example 7.26

> A:=matrix(3,2,[1,1,1,2,1,3]): b:=vector([2,2,4]):
> leastsqrs(A,b);

[2/3, 1]
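When A has linearly independent columns, the least squares solution is the solution of the normal equations $A^TA\,\mathbf{x} = A^T\mathbf{b}$. The Python sketch below forms and solves them exactly for Example 7.26. The names solve and least_squares are illustrative assumptions, and solve assumes its coefficient matrix is square and invertible.

```python
from fractions import Fraction

def solve(M, rhs):
    """Solve M x = rhs by Gauss-Jordan elimination; M must be square and invertible."""
    n = len(M)
    aug = [[Fraction(x) for x in row] + [Fraction(r)] for row, r in zip(M, rhs)]
    for c in range(n):
        # Pick a row with a nonzero entry in column c (StopIteration if M is singular).
        pivot = next(i for i in range(c, n) if aug[i][c] != 0)
        aug[c], aug[pivot] = aug[pivot], aug[c]
        p = aug[c][c]
        aug[c] = [x / p for x in aug[c]]
        for i in range(n):
            if i != c:
                f = aug[i][c]
                aug[i] = [x - f * y for x, y in zip(aug[i], aug[c])]
    return [row[n] for row in aug]

def least_squares(A, b):
    """Least squares solution of Ax = b via the normal equations."""
    cols = list(zip(*A))
    AtA = [[sum(x * y for x, y in zip(ci, cj)) for cj in cols] for ci in cols]
    Atb = [sum(x * y for x, y in zip(ci, b)) for ci in cols]
    return solve(AtA, Atb)

x = least_squares([[1, 1], [1, 2], [1, 3]], [2, 2, 4])   # Example 7.26
print([str(v) for v in x])
```

The result agrees with the output of leastsqrs above.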

If A does not have linearly independent columns, then there is not a unique least squares solution of Ax = b. The additional argument optimize tells Maple to produce the least squares solution of minimal (Euclidean) length.

Example 7.40

> A:=matrix(2,2,[1,1,1,1]): b:=vector([0,1]):
> leastsqrs(A,b);

[1/2 - _t1, _t1]

> leastsqrs(A,b,optimize);

[1/4, 1/4]

The singular value decomposition is also built into Maple.

Example 7.34(b)

> A:=matrix(3,2,[1,1,1,0,0,1]):
> sv:=singularvals(transpose(A));

sv := [√3, 1]

This command produces the exact singular values of A. Their numerical approximations are given by

> evalf(sv);

[1.732050808, 1.]

The same result is obtained with

> sv:=evalf(Svd(A,U,V));

sv := [1.732050808, 1.000000000]

(Note that evalf must be used.) This time, the orthogonal matrices U and V are computed (numerically) as well.

> U:=evalm(U); V:=evalm(V);

     [.8164965809   .3513817914 10^(-16)   -.5773502692]
U := [.4082482905   .7071067812             .5773502692]
     [.4082482905  -.7071067812             .5773502692]

V := [.7071067812   .7071067812]
     [.7071067812  -.7071067812]

(Note that the signs of some of the entries differ from those in the solution in the text. This is not surprising, since the SVD is not unique. Also notice that $u_{12} = 0.3513817914 \times 10^{-16} \approx 0$.) You can easily verify that you have an SVD of A by computing $U^TAV$.

> evalm(transpose(U)&*A&*V);

[1.732050807        0      ]
[     0        1.000000000 ]
[     0             0      ]

Correct! The pseudoinverse is not a built-in function in Maple, but it is not hard to construct.

Example 7.39(b)

> A:=matrix(3,2,[1,1,1,0,0,1]): sv:=evalf(Svd(A,U,V)):
> d:=diag(sv[1],sv[2]);

d := [1.732050808        0      ]
     [     0        1.000000000 ]

> Splus:=augment(inverse(d),[0,0]);

Splus := [.5773502690        0        0]
         [     0        1.000000000   0]

> Aplus:=evalm(V&*Splus&*transpose(U));

Aplus := [.3333333332   .6666666666  -.3333333334]
         [.3333333332  -.3333333334   .6666666666]
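One way to confirm that a candidate matrix really is the pseudoinverse is to test the four Penrose conditions: $AA^+A = A$, $A^+AA^+ = A^+$, and both $AA^+$ and $A^+A$ symmetric. The Python sketch below checks them exactly for the rational matrix that the decimals above approximate. The names matmul, transpose, and is_pseudoinverse are illustrative assumptions.

```python
from fractions import Fraction

def matmul(A, B):
    """Matrix product of two lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(M):
    """Transpose of a list of rows."""
    return [list(col) for col in zip(*M)]

def is_pseudoinverse(A, X):
    """True if X satisfies all four Penrose conditions for the real matrix A."""
    AX, XA = matmul(A, X), matmul(X, A)
    return (matmul(AX, A) == A and matmul(XA, X) == X
            and transpose(AX) == AX and transpose(XA) == XA)

A = [[1, 1], [1, 0], [0, 1]]                       # matrix of Example 7.39(b)
third = Fraction(1, 3)
A_plus = [[third, 2 * third, -third],
          [third, -third, 2 * third]]              # exact form of Aplus
print(is_pseudoinverse(A, A_plus))
```

Because the arithmetic is exact, the check does not depend on a rounding tolerance.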

Mathematica

In order to use Mathematica to do linear algebra, you must first load the appropriate linear algebra package. For basic vector and matrix operations, this is done with the command

<<LinearAlgebra`MatrixManipulation`

The package must be loaded for each new Mathematica session and each new notebook.

Chapter 1: Vectors

Vectors are entered as lists, delimited by braces. A semicolon is used to suppress the output of an input statement.

Example 1.6

In[1]:= u={1,0,-1}; v={2,-3,1}; w={5,-4,0};
In[2]:= 3*u+2*v-w
Out[2]= {2,-2,-1}

Example 1.15

In[1]:= u={1,2,-3}; v={-3,5,2};
In[2]:= u.v
Out[2]= 1

To find the (Euclidean) length of a vector, use the function Norm.

Example 1.17

In[1]:= Norm[{2,3}]
Out[1]= √13

The cross product of vectors in $\mathbb{R}^3$ is computed using the command Cross.

In[1]:= u={1,2,3}; v={-1,1,5};
In[2]:= Cross[u,v]
Out[2]= {7,-8,3}

All linear algebra commands can be performed symbolically as well as numerically.

In[1]:= u={u1,u2,u3}; v={v1,v2,v3};
In[2]:= u+3*v
Out[2]= {u1+3 v1, u2+3 v2, u3+3 v3}

In[1]:= u.v
Out[1]= u1 v1+u2 v2+u3 v3

In[1]:= Norm[u]
Out[1]= √(u1^2 + u2^2 + u3^2)

In[1]:= Cross[u,v]
Out[1]= {-u3 v2+u2 v3, u3 v1-u1 v3, -u2 v1+u1 v2}

Mathematica can also perform modular arithmetic.

Example 1.12

In[1]:= Mod[3548, 3]
Out[1]= 2

Example 1.14

In[1]:= u={2,2,0,1,2}; v={1,2,2,2,1};
In[2]:= Mod[u.v, 3]
Out[2]= 1

Example 1.40

In[1]:= c={3,1,3,1,3,1,3,1,3,1,3,1}; v={0,7,4,9,2,7,0,2,0,9,4,6};
In[2]:= Mod[c.v, 10]
Out[2]= 0

The same syntax is used to perform modular arithmetic calculations in which the result is a vector or matrix.

In[1]:= u={2,2,0,1,2}; v={1,2,2,2,1};
In[2]:= Mod[u+v, 3]
Out[2]= {0,1,2,0,0}

Chapter 2: Systems of Linear Equations

Example 2.6

In[1]:= Solve[{x-y-z==2,3*x-3*y+2*z==16,2*x-y+z==9},{x,y,z}]
Out[1]= {{x -> 3, y -> -1, z -> 2}}

Alternatively, you can have Mathematica perform the same calculations using matrices and vectors:

In[1]:= A={{1,-1,-1},{3,-3,2},{2,-1,1}}; b={{2},{16},{9}};
In[2]:= LinearSolve[A,b]
Out[2]= {{3},{-1},{2}}

To perform elementary row operations individually, begin by entering the augmented matrix:

In[1]:= A={{1,-1,-1,2},{3,-3,2,16},{2,-1,1,9}};
In[2]:= A[[2]]=A[[2]]-3*A[[1]];
In[3]:= MatrixForm[A]
Out[3]=
[1  -1  -1   2]
[0   0   5  10]
[2  -1   1   9]

This command adds -3 times row 1 to row 2 (putting the result in row 2). The command MatrixForm displays the result as a matrix, rather than as a list of lists.

In[1]:= ReplacePart[A,A,3,2]; ReplacePart[%,A,2,3]//MatrixForm
Out[1]=
[1  -1  -1   2]
[2  -1   1   9]
[0   0   5  10]

This command swaps rows 2 and 3 of the previous output (in two steps).

In[1]:= A[[3]]=1/5*%[[3]]; MatrixForm[A]
Out[1]=
[1  -1  -1  2]
[2  -1   1  9]
[0   0   1  2]

This command multiplies row 3 of the last result by 1/5 and stores the answer back in A.

Another command that can be useful in solving a linear system directly is RowReduce.

Examples 2.11 and 2.13

In[1]:= Ab={{1,-1,-1,2,1},{2,-2,-1,3,3},{-1,1,-1,0,-3}};
In[2]:= RowReduce[Ab]//MatrixForm
Out[2]=
[1  -1  0   1  2]
[0   0  1  -1  1]
[0   0  0   0  0]

To see the solutions explicitly, use Solve rather than LinearSolve:

In[1]:= Solve[{w-x-y+2z==1,2*w-2*x-y+3*z==3,-w+x-y==-3},{w,x,y,z}]
Out[1]= {{w -> 2+x-z, y -> 1+z}}

You can solve systems over $\mathbb{Z}_p$ by specifying the modulus.

Example 2.16

In[1]:= A={{1,2,1},{1,0,1},{0,1,2}}; b={{0},{2},{1}};
In[2]:= LinearSolve[A,b,Modulus->3]
Out[2]= {{1},{2},{1}}

Chapter 3: Matrices

Mathematica handles matrices as lists of lists. A matrix is input as a list whose elements are themselves lists containing the entries of the rows of the matrix. To display the result of a matrix calculation as a matrix, use the command MatrixForm.

In[1]:= A={{2,-1},{3,4}}; B={{1,2},{0,1}};
In[2]:= A-2*B//MatrixForm
Out[2]=
[0  -5]
[3   2]

To evaluate the matrix $A^2$, use the command

In[3]:= MatrixPower[A,2]//MatrixForm
Out[3]=
[ 1  -6]
[18  13]

(Do not use A^2; it squares all the entries of A individually.) Use a period for matrix multiplication. (The command A*B will give an elementwise product instead.)

In[4]:= A.B//MatrixForm
Out[4]=
[2   3]
[3  10]

In[5]:= Transpose[A]//MatrixForm
Out[5]=
[ 2  3]
[-1  4]

In[6]:= Inverse[A]//MatrixForm
Out[6]=
[ 4/11  1/11]
[-3/11  2/11]

In[7]:= Det[A]
Out[7]= 11

Like vector operations, matrix operations can be performed symbolically in Mathematica.

In[1]:= A={{a,b},{c,d}};
In[2]:= MatrixPower[A,2]//MatrixForm
Out[2]=
[a^2 + bc   ab + bd ]
[ca + dc    bc + d^2]

In[3]:= Det[A]
Out[3]= -bc+ad

In[4]:= Inverse[A]//MatrixForm
Out[4]=
[ d/(ad-bc)  -b/(ad-bc)]
[-c/(ad-bc)   a/(ad-bc)]

The Mod and Modulus commands are used for matrix calculations involving modular arithmetic.

In[1]:= A={{2,-1},{3,4}};
In[2]:= A3=Mod[A,3]
Out[2]= {{2,2},{0,1}}

Do not use the MatrixForm command here, since you are going to perform another calculation on the result.

In[3]:= Inverse[A3, Modulus->3]
Out[3]= {{2,2},{0,1}}

So the matrix A3 is its own inverse modulo 3.

Mathematica can produce a basis for the null space of a matrix.

Examples 3.45, 3.47, and 3.48

In[1]:= A={{1,1,3,1,6},{2,-1,0,1,-1},{-3,2,1,-2,1},{4,1,6,1,3}};
In[2]:= NullSpace[A]
Out[2]= {{1,-3,0,-4,1},{-1,-2,1,0,0}}

To find bases for the row and column spaces of A, use the RowReduce command.

In[3]:= R=RowReduce[A]
Out[3]= {{1,0,1,0,-1},{0,1,2,0,3},{0,0,0,1,4},{0,0,0,0,0}}

From this output you see that the nonzero rows form a basis for the row space of A, and the columns of A corresponding to the positions of the leading 1s (columns 1, 2, and 4) give a basis for the column space of A. You can extract these as follows:

In[4]:= TakeRows[R,3]
Out[4]= {{1,0,1,0,-1},{0,1,2,0,3},{0,0,0,1,4}}

In[5]:= {TakeColumns[A,{1}], TakeColumns[A,{2}], TakeColumns[A,{4}]}
Out[5]= {{{1},{2},{-3},{4}},{{1},{-1},{2},{1}},{{1},{1},{-2},{1}}}

You can define linear transformations in Mathematica.

Examples 3.55 and 3.60

In[1]:= T[x_,y_]:={x,2*x-y,3*x+4*y}
In[2]:= T[1,-1]
Out[2]= {1,3,-1}

In[3]:= S[u_,v_,w_]:={2*u+w,3*v-w,u-v,u+v+w}
In[4]:= T[x,y]
Out[4]= {x,2 x-y,3 x+4 y}

To compute S(T(x,y)), you have to pick out the components of the previous output. These are known to Mathematica as %[[1]], %[[2]], and %[[3]].

In[5]:= S[%[[1]],%[[2]],%[[3]]]
Out[5]= {5 x+4 y,-3 x+3 (2 x-y)-4 y,-x+y,6 x+3 y}

In[6]:= Simplify[%]
Out[6]= {5 x+4 y,3 x-7 y,-x+y,3 (2 x+y)}

Mathematica has a built-in command for the LU factorization of a matrix.

Example 3.33

In[1]:= A={{2,1,3},{4,-1,3},{-2,5,5}};
In[2]:= {LU,P,cond}=LUDecomposition[A]
Out[2]= {{{2,1,3},{2,-3,-3},{-1,-2,2}},{1,2,3},1}

The term {{2,1,3},{2,-3,-3},{-1,-2,2}} contains the matrices L and U combined into a single matrix that leaves out the diagonal 1s of L. Mathematica's help facility gives the procedures Upper and Lower, which will extract L and U:

In[3]:= Upper[LU_?MatrixQ]:=LU*Table[If[i<=j,1,0],{i,Length[LU]},{j,Length[LU]}]
In[4]:= Lower[LU_?MatrixQ]:=LU-Upper[LU]+IdentityMatrix[Length[LU]]
In[5]:= MatrixForm[L=Lower[LU]]
Out[5]=
[ 1   0  0]
[ 2   1  0]
[-1  -2  1]

In[6]:= MatrixForm[U=Upper[LU]]
Out[6]=
[2   1   3]
[0  -3  -3]
[0   0   2]

To check that this result is correct, compute

In[7]:= MatrixForm[L.U]
Out[7]=
[ 2   1  3]
[ 4  -1  3]
[-2   5  5]

which is A, as expected.
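The idea behind Upper and Lower is independent of Mathematica: the strictly lower part of the packed matrix belongs to L (which gets 1s on its diagonal), and everything on or above the diagonal belongs to U. A minimal Python sketch of the same unpacking follows. The function names split_packed_lu and matmul are illustrative assumptions, and the permutation vector {1,2,3} returned above is taken to mean that no rows were interchanged.

```python
def split_packed_lu(packed):
    """Split a packed LU matrix into unit lower triangular L and upper triangular U."""
    n = len(packed)
    L = [[packed[i][j] if j < i else (1 if i == j else 0) for j in range(n)]
         for i in range(n)]
    U = [[packed[i][j] if j >= i else 0 for j in range(n)] for i in range(n)]
    return L, U

def matmul(A, B):
    """Matrix product of two lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

packed = [[2, 1, 3], [2, -3, -3], [-1, -2, 2]]   # first part of Out[2] above
L, U = split_packed_lu(packed)
print(L)
print(U)
print(matmul(L, U))
```

The product printed on the last line should be the original matrix A of Example 3.33.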

Chapter 4: Eigenvalues and Eigenvectors

Eigenvalues and eigenvectors are included in Mathematica.

Example 4.18

In[1]:= A={{0,1,0},{0,0,1},{2,-5,4}};

If all you require are the eigenvalues of A, you can use

In[2]:= Eigenvalues[A]
Out[2]= {1,1,2}

The command Eigenvectors gives the corresponding eigenvectors.

In[3]:= Eigenvectors[A]
Out[3]= {{1,1,1},{0,0,0},{1,2,4}}

Entering Eigensystem combines the two previous calculations.

In[4]:= Eigensystem[A]
Out[4]= {{1,1,2},{{1,1,1},{0,0,0},{1,2,4}}}

For a diagonalizable matrix A, you can find a matrix P such that $P^{-1}AP$ is diagonal.

Example 4.26

In[1]:= A={{-1,0,1},{3,0,-3},{1,0,-1}};
In[2]:= {evalues,evectors}=Eigensystem[A]
Out[2]= {{-2,0,0},{{-1,3,1},{1,0,1},{0,1,0}}}

Now you need to extract the three eigenvectors and put them into the columns of P.

In[3]:= MatrixForm[P = Transpose[evectors]]
Out[3]=
[-1  1  0]
[ 3  0  1]
[ 1  1  0]

The corresponding diagonal matrix is created with the command

In[4]:= MatrixForm[Diag=DiagonalMatrix[evalues]]
Out[4]=
[-2  0  0]
[ 0  0  0]
[ 0  0  0]

To check that the matrix is correct, compute

In[5]:= P.Diag.Inverse[P]//MatrixForm
Out[5]=
[-1  0   1]
[ 3  0  -3]
[ 1  0  -1]

As expected, this is A!

When approximating eigenvalues (or other numerical results) in Mathematica, use the floating-point evaluation command, N.

In[1]:= A=N[{{1,2},{3,4}}]
Out[1]= {{1.,2.},{3.,4.}}

In[2]:= Eigenvalues[A]
Out[2]= {5.37228,-0.372281}

Mathematica can also handle matrix exponentials.

Example 4.47

In[1]:= A={{1,2},{3,2}};
In[2]:= MatrixExp[A]//MatrixForm
Out[2]=
[(3+2E^5)/(5E)      2(-1+E^5)/(5E)]
[3(-1+E^5)/(5E)     (2+3E^5)/(5E) ]

Chapter 5: Orthogonality

Mathematica includes the Gram-Schmidt Process as a built-in procedure. To use it, you must first load the orthogonalization package.

In[1]:= <<LinearAlgebra`Orthogonalization`

Example 5.13

In[2]:= x1={1,-1,-1,1}; x2={2,1,0,1}; x3={2,2,1,2};
In[3]:= GramSchmidt[{x1,x2,x3}]
Out[3]= {{1/2, -1/2, -1/2, 1/2}, {3/(2√5), 3/(2√5), 1/(2√5), 1/(2√5)}, {-1/√6, 0, 1/√6, √(2/3)}}

The default is an orthonormal basis; to obtain an orthogonal basis only, use the option Normalized->False.

In[4]:= GramSchmidt[{x1,x2,x3}, Normalized->False]
Out[4]= {{1,-1,-1,1}, {3/2, 3/2, 1/2, 1/2}, {-1/2, 0, 1/2, 1}}

The QR factorization of a matrix is performed in Mathematica using the command QRDecomposition, which returns an orthogonal matrix Q and an upper triangular matrix R such that $Q^TR = A$.

Example 5.15

In[1]:= A={{1,2,2},{-1,1,2},{-1,0,1},{1,1,2}};
In[2]:= {Q,R}=QRDecomposition[A];
In[3]:= Map[MatrixForm,{Q,R}]
Out[3]=
Q:
[ 1/2      -1/2      -1/2      1/2    ]
[ 3/(2√5)   3/(2√5)   1/(2√5)  1/(2√5)]
[-1/√6      0         1/√6     √(2/3) ]

R:
[2   1    1/2    ]
[0   √5   3√5/2  ]
[0   0    √(3/2) ]

You can check that this result is correct by verifying that $Q^TR = A$:

In[4]:= Transpose[Q].R//MatrixForm
Out[4]=
[ 1  2  2]
[-1  1  2]
[-1  0  1]
[ 1  1  2]

Chapter 6: Vector Spaces

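You can adapt Mathematica's matrix commands for use in finding bases for finite-dimensional vector spaces or testing vectors for linear independence. The key is to use the isomorphism between an n-dimensional vector space and $\mathbb{R}^n$.

Example 6.26

To test the set $\{1 + x,\ x + x^2,\ 1 + x^2\}$ for linear independence in $\mathscr{P}_2$, use the standard isomorphism of $\mathscr{P}_2$ with $\mathbb{R}^3$. This gives the correspondence

$$1 + x \leftrightarrow \begin{bmatrix} 1 \\ 1 \\ 0 \end{bmatrix}, \quad x + x^2 \leftrightarrow \begin{bmatrix} 0 \\ 1 \\ 1 \end{bmatrix}, \quad 1 + x^2 \leftrightarrow \begin{bmatrix} 1 \\ 0 \\ 1 \end{bmatrix}$$

Now build a matrix with these vectors as its columns:

In[1]:= A={{1,0,1},{1,1,0},{0,1,1}};
In[2]:= RowReduce[A]
Out[2]= {{1,0,0},{0,1,0},{0,0,1}}

Since the reduced row echelon form of A is I, you know that the columns of A are linearly independent. Hence, the set $\{1 + x,\ x + x^2,\ 1 + x^2\}$ is also linearly independent, by Theorem 6.7.

Example 6.44

Extend the set $\{1 + x,\ 1 - x\}$ to $S = \{1 + x,\ 1 - x,\ 1,\ x,\ x^2\}$ and use the standard isomorphism of $\mathscr{P}_2$ with $\mathbb{R}^3$ to obtain

$$1 + x \leftrightarrow \begin{bmatrix} 1 \\ 1 \\ 0 \end{bmatrix}, \quad 1 - x \leftrightarrow \begin{bmatrix} 1 \\ -1 \\ 0 \end{bmatrix}, \quad 1 \leftrightarrow \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}, \quad x \leftrightarrow \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}, \quad x^2 \leftrightarrow \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix}$$

Form a matrix with these vectors as its columns and find its reduced echelon form: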

In[1]:= MatrixForm[A={{1,1,1,0,0},{1,-1,0,1,0},{0,0,0,0,1}}]
Out[1]=
[1   1  1  0  0]
[1  -1  0  1  0]
[0   0  0  0  1]

In[2]:= RowReduce[A]//MatrixForm
Out[2]=
[1  0  1/2   1/2  0]
[0  1  1/2  -1/2  0]
[0  0   0     0   1]

Since the leading 1s are in columns 1, 2, and 5, these are the vectors to use for a basis. Accordingly, $\{1 + x,\ 1 - x,\ x^2\}$ is a basis for $\mathscr{P}_2$ that contains $1 + x$ and $1 - x$.

Many questions about a linear transformation between finite-dimensional vector spaces can be handled by using the matrix of the linear transformation.

Example 6.68

The matrix of

$$T\begin{bmatrix} a & b \\ b & c \end{bmatrix} = (a - b) + (b - c)x + (c - a)x^2$$

with respect to the basis

$$\mathcal{B} = \left\{ \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix}, \begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix}, \begin{bmatrix} 0 & 0 \\ 0 & 1 \end{bmatrix} \right\}$$

of W and the standard basis $\mathcal{C} = \{1, x, x^2\}$ of $\mathscr{P}_2$ is

$$A = \begin{bmatrix} 1 & -1 & 0 \\ 0 & 1 & -1 \\ -1 & 0 & 1 \end{bmatrix}$$

(Check this.) By Exercises 40 and 41 in Section 6.6, the rank and nullity of T are the same as those of A.

In[1]:= A={{1,-1,0},{0,1,-1},{-1,0,1}};
In[2]:= RowReduce[A]
Out[2]= {{1,0,-1},{0,1,-1},{0,0,0}}

You can see that the rank is 2. It now follows from the Rank Theorem that nullity(T) = 3 - rank(T) = 3 - 2 = 1. If you want to construct bases for the kernel and range of T, you can proceed as follows:

In[3]:= NullSpace[A]
Out[3]= {{1,1,1}}

This is the coordinate vector of a matrix with respect to the basis $\mathcal{B}$ of W. Hence, the matrix is

$$1 \cdot \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix} + 1 \cdot \begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix} + 1 \cdot \begin{bmatrix} 0 & 0 \\ 0 & 1 \end{bmatrix} = \begin{bmatrix} 1 & 1 \\ 1 & 1 \end{bmatrix}$$

so a basis for ker(T) is $\left\{ \begin{bmatrix} 1 & 1 \\ 1 & 1 \end{bmatrix} \right\}$. The first two columns of A form a basis for the column space of A, since the leading 1s are in those columns. These correspond to a basis for the range of T. This time you have coordinate vectors with respect to the basis $\mathcal{C}$ of $\mathscr{P}_2$, so a basis for range(T) is $\{1 - x^2,\ x - x^2\}$.

Chapter 7: Distance and Approximation

Computations involving the inner product $\langle f, g \rangle = \int_a^b f(x)g(x)\,dx$ in $\mathscr{C}[a, b]$ are easy to do in Mathematica.

Example 7.6

In[1]:= f=x; g=3*x-2;
In[2]:= Integrate[f^2,{x,0,1}]
Out[2]= 1/3

In[3]:= Integrate[(f-g)^2,{x,0,1}]
Out[3]= 4/3

In[4]:= Integrate[f*g,{x,0,1}]
Out[4]= 0

For vector norms, Mathematica has the following:

In[1]:= u={1,-2,5};
In[2]:= Norm[u]
Out[2]= √30

In[3]:= SumNorm[x_]:=Apply[Plus,Map[Abs,x]]
In[4]:= SumNorm[u]
Out[4]= 8

In[5]:= MaxNorm[x_]:=Apply[Max,Map[Abs,x]]
In[6]:= MaxNorm[u]
Out[6]= 5

You can perform least squares approximation by having Mathematica solve the normal equations. Example 7.26

In[1]:= A={{1,1},{1,2},{1,3}}; b={{2},{2},{4}};
In[2]:= LinearSolve[Transpose[A].A,Transpose[A].b]
Out[2]= {{2/3},{1}}

If A does not have linearly independent columns, then there is not a unique least squares solution of Ax = b. In this case, the version of least squares that uses the pseudoinverse should be used.

Example 7.40

In[1]:= A={{1,1},{1,1}}; b={{0},{1}};
In[2]:= PseudoInverse[A].b
Out[2]= {{1/4},{1/4}}

The singular value decomposition is also built into Mathematica. It can be used only on numerical matrices. One way to ensure that a matrix is treated numerically is to make sure that some of its entries include a decimal point.

Example 7.34(b)

In[1]:= A={{1.,1.},{1.,0},{0,1.}};
In[2]:= {U,S,V}=SingularValueDecomposition[A]
Out[2]= {{{0.816497,0.408248,0.408248}, {2.33321 x 10^-16,0.707107,-0.707107}}, {1.73205,1.}, {{0.707107,0.707107}, {0.707107,-0.707107}}}

This command gives a full SVD of A: The first entry in the list of lists is the matrix U, which is followed by a list containing the singular values, and finally V. To see these as matrices, use the command

In[3]:= Map[MatrixForm,{U,DiagonalMatrix[S],V}]
Out[3]=
U:
[0.816497           0.408248   0.408248]
[2.33321 x 10^-16   0.707107  -0.707107]

DiagonalMatrix[S]:
[1.73205  0 ]
[0        1.]

V:
[0.707107   0.707107]
[0.707107  -0.707107]

Notice that Mathematica always returns the singular values in a square diagonal matrix and that the matrices U and V are not necessarily square. This is different from the way the text presents these values. However, the factorization has the same property:

In[4]:= MatrixForm[Transpose[U].DiagonalMatrix[S].V]
Out[4]=
[ 1.                  1.               ]
[ 1.                  7.60364 x 10^-16 ]
[-3.17341 x 10^-16    1.               ]

which, as expected, is A. [The (2, 2) and (3, 1) entries are effectively zeros.]

As we have already noted, the pseudoinverse is a built-in function in Mathematica.

Example 7.39(b)

In[1]:= A={{1,1},{1,0},{0,1}};
In[2]:= PseudoInverse[A]
Out[2]= {{1/3, 2/3, -1/3}, {1/3, -1/3, 2/3}}

MATLAB

MATLAB stands for Matrix Laboratory. As its name suggests, it is designed to handle matrix (and vector) calculations, which it does with ease.

Chapter 1: Vectors

MATLAB distinguishes between row and column vectors. The entries of a row vector are separated by spaces or commas, while the entries of a column vector are separated by semicolons:

    v=[1 2 3], w=[1;2;3]
    v =
         1     2     3
    w =
         1
         2
         3

Example 1.6

    u=[1 0 -1]; v=[2 -3 1]; w=[5 -4 0];
    3*u+2*v-w
    ans =
         2    -2    -1

A semicolon after an input statement tells MATLAB to suppress the output. Thus, in the above example, the vectors u, v, and w were not displayed.

Example 1.15

    u=[1 2 -3]; v=[-3 5 2];
    dot(u,v)
    ans =
         1

Example 1.17

    norm([2 3])
    ans =
        3.6056

If MATLAB's symbolic math toolbox is installed on your system, you can also obtain exact answers.

    sym(norm([2 3]))
    ans =
    sqrt(13)

The cross product of vectors in $\mathbb{R}^3$ is computed using the command cross.

    u=[1 2 3]; v=[-1 1 5];
    cross(u, v)
    ans =
         7    -8     3

MATLAB can also perform modular arithmetic.

Example 1.12

    mod(3548, 3)
    ans =
         2

Example 1.14

    u=[2 2 0 1 2]; v=[1 2 2 2 1];
    mod(dot(u, v), 3)
    ans =
         1

Example 1.40

    c=[3 1 3 1 3 1 3 1 3 1 3 1];
    v=[0 7 4 9 2 7 0 2 0 9 4 6];
    mod(dot(c, v), 10)
    ans =
         0

The same syntax is used to perform modular arithmetic calculations in which the result is a vector or matrix.

    u=[2 2 0 1 2]; v=[1 2 2 2 1];
    mod(u+v, 3)
    ans =
         0     1     2     0     0

Chapter 2: Systems of Linear Equations

Matrices are entered in MATLAB the way they are written by hand: row by row and column by column. The entries in each row are separated by spaces or commas, and a semicolon is used to indicate the end of a row.

Example 2.6

    A=[1 -1 -1; 3 -3 2; 2 -1 1]
    A =
         1    -1    -1
         3    -3     2
         2    -1     1
    b=[2; 16; 9]
    b =
         2
        16
         9
    linsolve(A, b)
    ans =
    [ 3]
    [-1]
    [ 2]

The following syntax does the same thing as that above:

    A\b
    ans =
        3.0000
       -1.0000
        2.0000

You can also obtain the solution of a system from the reduced row echelon form of its augmented matrix. (This is necessary if the system has infinitely many or no solutions, since the commands above do not handle these cases.)

    Ab=[1 -1 -1 2; 3 -3 2 16; 2 -1 1 9];
    rref(Ab)
    ans =
         1     0     0     3
         0     1     0    -1
         0     0     1     2

If you like, you can ask MATLAB to walk you through the row reduction process. (Keep in mind, though, that MATLAB uses partial pivoting strategies.) This is done with the command

rrefmovie(Ab)

Use MATLAB's mod command to handle systems over $\mathbb{Z}_p$.

Example 2.16

    A=[1 2 1; 1 0 1; 0 1 2]; b=[0; 2; 1];
    mod(A\b, 3)
    ans =
         1
         2
         1

This approach sometimes needs a little help, however. Suppose you need to solve the system $Ax = b$, where

$$A = \begin{bmatrix} 1 & 1 & 2 \\ 3 & 0 & 1 \\ 2 & 2 & 3 \end{bmatrix} \quad \text{and} \quad b = \begin{bmatrix} 3 \\ 3 \\ 2 \end{bmatrix}$$

over $\mathbb{Z}_5$. You obtain

    A=[1 1 2;3 0 1;2 2 3]; b=[3;3;2];
    sol=mod(A\b,5)
    sol =
        14/3
         1/3
         4

This is not what you want, since the solution is not a vector with entries in $\mathbb{Z}_5$. In this case, you need to use the following theorem, due to Euler:

Theorem E.1

If p is prime, then for all $k \neq 0$ in $\mathbb{Z}_p$,

$$k^{p-1} = 1$$

In this example, $p = 5$, so $3^{5-1} = 3^4 = 1$ in $\mathbb{Z}_5$. Therefore, multiplying the solution by $3^4 = 1$ will not change it but will get rid of the fractions.

    mod(sol*(3^4),5)
    ans =
         3
         2
         4

Chapter 3: Matrices

All of the basic matrix operations are built into MATLAB, as the following examples illustrate.

    A=[2 -1;3 4]; B=[1 2;0 1];
    A-2*B
    ans =
         0    -5
         3     2

To evaluate the matrix $A^2$, use the command

    A^2
    ans =
         1    -6
        18    13

The same calculation is done by mpower(A,2). Use the command * for matrix multiplication.

    A*B
    ans =
         2     3
         3    10

    transpose(A)
    ans =
         2     3
        -1     4

A more compact way to take the transpose of a matrix A is to type A'.

    inv(A)
    ans =
        4/11    1/11
       -3/11    2/11

Entering A^(-1) does the same thing.

    det(A)
    ans =
        11

Like vector operations, matrix operations can be performed symbolically in MATLAB.

    A=sym('[a b; c d]')
    A =
    [a, b]
    [c, d]
    A^2
    ans =
    [a^2+b*c, a*b+b*d]
    [c*a+d*c, b*c+d^2]
    det(A)
    ans =
    a*d-b*c
    inv(A)
    ans =
    [d/(a*d-b*c), -b/(a*d-b*c)]
    [-c/(a*d-b*c), a/(a*d-b*c)]

For matrix calculations involving modular arithmetic, proceed as with systems of linear equations.

    A=[2 -1; 3 4];
    A3=mod(A, 3)
    A3 =
         2     2
         0     1

    mod(inv(A3), 3)
    ans =
        1/2     2
         0      1

This is not a matrix over $\mathbb{Z}_3$, but Euler's Theorem tells us that $2^{3-1} = 2^2 = 1$ in $\mathbb{Z}_3$. Therefore, multiplying the last answer by $2^2$ mod 3 will give the inverse of A3 over $\mathbb{Z}_3$:

    mod(ans*(2^2),3)
    ans =
         2     2
         0     1
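The role of Theorem E.1 can be made explicit by carrying out the whole elimination inside $\mathbb{Z}_p$, so that fractions never appear. The following is a minimal sketch in plain Python (not one of the systems covered in this appendix). The name inverse_mod_p is a hypothetical helper, and the sketch assumes that p is prime: each division by a pivot k is replaced by multiplication by $k^{p-2}$, which is the inverse of k because $k^{p-1} = 1$.

```python
def inverse_mod_p(A, p):
    """Invert a square integer matrix over Z_p (p prime) by Gauss-Jordan elimination."""
    n = len(A)
    # Augment A with the identity matrix, reducing every entry modulo p.
    M = [[A[i][j] % p for j in range(n)] + [int(i == j) for j in range(n)]
         for i in range(n)]
    for col in range(n):
        # Choose a row at or below the diagonal with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if M[r][col] != 0), None)
        if pivot is None:
            raise ValueError("matrix is not invertible modulo p")
        M[col], M[pivot] = M[pivot], M[col]
        # By Theorem E.1, k^(p-2) is the multiplicative inverse of k in Z_p.
        inv = pow(M[col][col], p - 2, p)
        M[col] = [(inv * x) % p for x in M[col]]
        # Clear the rest of the column.
        for r in range(n):
            if r != col and M[r][col] != 0:
                factor = M[r][col]
                M[r] = [(x - factor * y) % p for x, y in zip(M[r], M[col])]
    # The right half of the augmented matrix is now the inverse.
    return [row[n:] for row in M]

A = [[2, -1], [3, 4]]
print(inverse_mod_p(A, 3))
```

For this matrix the function returns [[2, 2], [0, 1]], in agreement with the MATLAB computation.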

So A3 is its own inverse modulo 3. Another built-in function of MATLAB will determine the rank of a matrix.

    rank(A)
    ans =
         2

MATLAB can produce bases for the row, column, and null spaces of a matrix.

Examples 3.45, 3.47, and 3.48

    A=[1 1 3 1 6; 2 -1 0 1 -1; -3 2 1 -2 1; 4 1 6 1 3];
    rref(A)
    ans =
         1     0     1     0    -1
         0     1     2     0     3
         0     0     0     1     4
         0     0     0     0     0

From this matrix you can obtain a basis for row(A) by taking the nonzero rows.

    colspace(sym(A))
    ans =
    [1, 0, 0]
    [0, 1, 0]
    [0, 0, 1]
    [1, 6, 3]

The basis given above for the column space of A is not the same as in the text. Can you show that the two are equivalent?

    null(sym(A))
    ans =
    [-1,  1]
    [-2, -3]
    [ 1,  0]
    [ 0, -4]
    [ 0,  1]

The same result arises from

    null(A, 'r')
    ans =
        -1     1
        -2    -3
         1     0
         0    -4
         0     1

The 'r' tells MATLAB to give the result in rational arithmetic. Without it, you get a numerical answer. [In fact, MATLAB gives an orthonormal basis for null(A).]

    null(A)
    ans =
       -0.5413    0.1443
       -0.5867   -0.6327
        0.4421    0.0400
        0.3968   -0.7371
       -0.0992    0.1843

MATLAB has a built-in command for the LU factorization of a matrix.

Example 3.33

    A=[2 1 3; 4 -1 3; -2 5 5];
    [L, U]=lu(A)
    L =
        0.5000    0.3333    1.0000
        1.0000         0         0
       -0.5000    1.0000         0
    U =
        4.0000   -1.0000    3.0000
             0    4.5000    6.5000
             0         0   -0.6667

To check that this result is correct, compute

    L*U
    ans =
        2.0000    1.0000    3.0000
        4.0000   -1.0000    3.0000
       -2.0000    5.0000    5.0000

which is A, as expected.
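The factor L returned by the two-output form of lu is a row-permuted lower triangular matrix, because MATLAB uses partial pivoting and folds the row interchanges into L. The following plain-Python sketch (Python is not one of the systems covered here, and lu_partial_pivot is a hypothetical helper name) keeps the permutation separate and works in exact fractions, which may make the pivoting easier to follow.

```python
from fractions import Fraction

def lu_partial_pivot(A):
    """Return (perm, L, U): L is unit lower triangular, U is upper triangular,
    and row perm[i] of A equals row i of the product L*U."""
    n = len(A)
    U = [[Fraction(x) for x in row] for row in A]
    L = [[Fraction(0)] * n for _ in range(n)]
    perm = list(range(n))
    for k in range(n):
        # Partial pivoting: pick the row with the largest entry (in absolute value).
        p = max(range(k, n), key=lambda r: abs(U[r][k]))
        if U[p][k] == 0:
            raise ValueError("matrix is singular")
        # Interchange rows k and p in U, in the multipliers stored so far, and in perm.
        U[k], U[p] = U[p], U[k]
        L[k], L[p] = L[p], L[k]
        perm[k], perm[p] = perm[p], perm[k]
        L[k][k] = Fraction(1)
        for r in range(k + 1, n):
            L[r][k] = U[r][k] / U[k][k]          # multiplier for row r
            U[r] = [u - L[r][k] * v for u, v in zip(U[r], U[k])]
    return perm, L, U

A = [[2, 1, 3], [4, -1, 3], [-2, 5, 5]]
perm, L, U = lu_partial_pivot(A)
print(perm)
print(L)
print(U)
```

Here U agrees with the MATLAB factor (its last diagonal entry is -2/3), and undoing the permutation on the rows of L reproduces the L shown by MATLAB.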

Chapter 4: Eigenvalues and Eigenvectors

MATLAB can handle eigenvalues, eigenvectors, and the characteristic polynomial. Example 4.18

A=[0 1 0; 0 0 1; 2 -5 4];

The characteristic polynomial of A can be produced using the command

    c=poly(A)
    c =
        1.0000   -4.0000    5.0000   -2.0000

This command gives the coefficients of the characteristic polynomial in order of decreasing powers of x. To see the actual polynomial, convert this result into standard form:

    poly2sym(c)
    ans =
    x^3-4*x^2+5*x-2

To solve the characteristic equation (in order to obtain the eigenvalues of A), ask for the roots of c.

    roots(c)
    ans =
       2.0000
       1.0000 + 0.0000i
       1.0000 - 0.0000i

If all you require are the eigenvalues of A, you can use

    eig(A)
    ans =
       1.0000 + 0.0000i
       1.0000 - 0.0000i
       2.0000
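As an independent check that the eigenvalues 1, 1, and 2 account for the coefficient vector c, you can multiply out (x - 1)(x - 1)(x - 2) and evaluate the polynomial at each root. The sketch below is in plain Python (not one of the systems covered here); poly_mul and horner are illustrative helper names, and coefficients are listed in decreasing powers of x, as in the output of poly.

```python
def poly_mul(p, q):
    """Multiply two polynomials given as coefficient lists (decreasing powers)."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def horner(coeffs, x):
    """Evaluate a polynomial at x by Horner's rule."""
    value = 0
    for coefficient in coeffs:
        value = value * x + coefficient
    return value

c = [1, -4, 5, -2]            # x^3 - 4x^2 + 5x - 2

# Rebuild the polynomial from its roots 1, 1, 2.
product = [1]
for root in (1, 1, 2):
    product = poly_mul(product, [1, -root])

print(product)                # same coefficients as c
print(horner(c, 1), horner(c, 2))
```

Both evaluations give 0, and the expanded product has the same coefficients as c.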

To see both the eigenvalues and the bases for the corresponding eigenspaces, use the longer form:

    [P,D]=eig(A,'nobalance')
    P =
        0.5774    0.5774   -0.2182
        0.5774    0.5774   -0.4364
        0.5774    0.5774   -0.8729
    D =
        1.0000         0         0
             0    1.0000         0
             0         0    2.0000

Here, the columns of P are eigenvectors of A corresponding to the eigenvalues of A, which appear on the diagonal of D. For a diagonalizable matrix A, use the same syntax to find a matrix P such that $P^{-1}AP$ is diagonal.

Example 4.26

    A=[-1 0 1; 3 0 -3; 1 0 -1];
    [P,D]=eig(A,'nobalance')
    P =
        0.3015    0.3015   -0.0134
        0.9045   -0.9045   -0.9998
        0.3015   -0.3015   -0.0134
    D =
       -0.0000         0         0
             0   -2.0000         0
             0         0    0.0000

There are three linearly independent eigenvectors, so A is diagonalizable. Thus, $PDP^{-1}$ should equal A.

    P*D*inv(P)
    ans =
       -1.0000    0.0000    1.0000
        3.0000    0.0000   -3.0000
        1.0000   -0.0000   -1.0000

Bingo! MATLAB can also handle matrix exponentials using the command expm.

Example 4.47

    A=[1 2; 3 2];
    expm(A)
    ans =
       22.0600   21.6921
       32.5382   32.9060

If the symbolic math toolbox is installed, you can get this result exactly.

    expm(sym(A))
    ans =
    [ 3/5*exp(-1)+2/5*exp(4), 2/5*exp(4)-2/5*exp(-1)]
    [ 3/5*exp(4)-3/5*exp(-1), 2/5*exp(-1)+3/5*exp(4)]

Chapter 5: Orthogonality

MATLAB has a built-in procedure, orth, that converts a basis for a subspace of $\mathbb{R}^n$ into an orthonormal basis for that subspace. You accomplish this by putting the given basis vectors into the columns of a matrix and then using the command orth. (orth obtains its basis from the singular value decomposition rather than from the Gram-Schmidt Process, so the vectors it returns generally differ from those produced by hand in the text.)

Example 5.13

    x1=[1; -1; -1; 1]; x2=[2; 1; 0; 1]; x3=[2; 2; 1; 2];
    A=[x1 x2 x3]
    A =
         1     2     2
        -1     1     2
        -1     0     1
         1     1     2
    Q=orth(A)
    Q =
        0.6701   -0.3781   -0.5241
        0.4806    0.6444   -0.2321
        0.1609    0.6018    0.2805
        0.5423   -0.2823    0.7700

You can check that these vectors are orthonormal by verifying that $Q^TQ = I$.

    Q'*Q
    ans =
        1.0000    0.0000   -0.0000
        0.0000    1.0000    0.0000
       -0.0000    0.0000    1.0000
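If you want the vectors that the Gram-Schmidt Process itself produces for Example 5.13, the following plain-Python sketch may help (Python is not one of the systems covered here, and gram_schmidt is a hypothetical helper name). It works in exact fractions and returns an orthogonal basis without normalizing, so the output can be compared with a hand calculation.

```python
from fractions import Fraction

def dot(u, v):
    """Dot product of two vectors of equal length."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors):
    """Classical Gram-Schmidt: return an orthogonal (not normalized) basis
    for the span of the given vectors."""
    basis = []
    for x in vectors:
        v = [Fraction(a) for a in x]
        for w in basis:
            # Subtract the projection of x onto the earlier basis vector w.
            coeff = dot(x, w) / dot(w, w)
            v = [a - coeff * b for a, b in zip(v, w)]
        if any(v):               # skip vectors that depend on earlier ones
            basis.append(v)
    return basis

x1 = [1, -1, -1, 1]
x2 = [2, 1, 0, 1]
x3 = [2, 2, 1, 2]
basis = gram_schmidt([x1, x2, x3])
for v in basis:
    print(v)
```

The three vectors produced are (1, -1, -1, 1), (3/2, 3/2, 1/2, 1/2), and (-1/2, 0, 1/2, 1); dividing each by its length gives an orthonormal basis.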

MATLAB can also perform the QR factorization of a matrix. It returns the upper triangular matrix R. Example 5.15

    A=[1 2 2; -1 1 2; -1 0 1; 1 1 2];
    qr(A)
    ans =
       -2.0000   -1.0000   -0.5000
       -0.5000   -2.2361   -3.3541
       -0.0000    0.4472   -1.2247
        0.5000         0    0.9526

To obtain the orthonormal matrix Q, you must use a longer form. The command [Q,R] = qr(A,0) ensures that the dimensions of Q agree with those of A and that R is a square matrix.

    [Q,R]=qr(A,0)
    Q =
       -0.5000   -0.6708    0.4082
        0.5000   -0.6708   -0.0000
        0.5000   -0.2236   -0.4082
       -0.5000   -0.2236   -0.8165
    R =
       -2.0000   -1.0000   -0.5000
             0   -2.2361   -3.3541
             0         0   -1.2247

You can check that this result is correct by verifying that A = QR:

    Q*R
    ans =
        1.0000    2.0000    2.0000
       -1.0000    1.0000    2.0000
       -1.0000    0.0000    1.0000
        1.0000    1.0000    2.0000

(The command [Q,R] = qr(A) produces the modified QR factorization discussed in the text.)

Chapter 6: Vector Spaces

You can adapt MATLAB's matrix commands for use in finding bases for finite-dimensional vector spaces or testing vectors for linear independence. The key is to use the isomorphism between an n-dimensional vector space and $\mathbb{R}^n$.

Example 6.26 To test the set $\{1 + x,\ x + x^2,\ 1 + x^2\}$ for linear independence in $\mathcal{P}_2$, use the standard isomorphism of $\mathcal{P}_2$ with $\mathbb{R}^3$. This gives the correspondence

$$1 + x \leftrightarrow \begin{bmatrix} 1 \\ 1 \\ 0 \end{bmatrix}, \quad x + x^2 \leftrightarrow \begin{bmatrix} 0 \\ 1 \\ 1 \end{bmatrix}, \quad 1 + x^2 \leftrightarrow \begin{bmatrix} 1 \\ 0 \\ 1 \end{bmatrix}$$

Now build a matrix with these vectors as its columns:

    A=[1 0 1;1 1 0;0 1 1]
    A =
         1     0     1
         1     1     0
         0     1     1
    rank(A)
    ans =
         3

Since rank(A) = 3, the columns of A are linearly independent. Hence, the set $\{1 + x,\ x + x^2,\ 1 + x^2\}$ is also linearly independent, by Theorem 6.7.

Example 6.44 Extend the set $\{1 + x,\ 1 - x\}$ to $S = \{1 + x,\ 1 - x,\ 1,\ x,\ x^2\}$ and use the standard isomorphism of $\mathcal{P}_2$ with $\mathbb{R}^3$ to obtain

$$1 + x \leftrightarrow \begin{bmatrix} 1 \\ 1 \\ 0 \end{bmatrix}, \quad 1 - x \leftrightarrow \begin{bmatrix} 1 \\ -1 \\ 0 \end{bmatrix}, \quad 1 \leftrightarrow \begin{bmatrix} 1 \\ 0 \\ 0 \end{bmatrix}, \quad x \leftrightarrow \begin{bmatrix} 0 \\ 1 \\ 0 \end{bmatrix}, \quad x^2 \leftrightarrow \begin{bmatrix} 0 \\ 0 \\ 1 \end{bmatrix}$$

Form a matrix with these vectors as its columns and find its reduced echelon form:

    A=[1 1 1 0 0;1 -1 0 1 0;0 0 0 0 1]
    A =
         1     1     1     0     0
         1    -1     0     1     0
         0     0     0     0     1
    rref(A)
    ans =
        1.0000         0    0.5000    0.5000         0
             0    1.0000    0.5000   -0.5000         0
             0         0         0         0    1.0000
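The row reduction for Example 6.44 can also be traced with a short plain-Python sketch in exact fractions (Python is not one of the systems covered here; the function name rref mirrors the MATLAB command, but the return value with a list of pivot columns is an assumption of this sketch). Note that Python counts columns from 0, so pivot indices 0, 1, 4 correspond to columns 1, 2, and 5.

```python
from fractions import Fraction

def rref(A):
    """Return (R, pivots): the reduced row echelon form of A and the
    zero-based indices of its pivot columns."""
    R = [[Fraction(x) for x in row] for row in A]
    rows, cols = len(R), len(R[0])
    pivots = []
    r = 0                                   # next pivot row
    for c in range(cols):
        if r == rows:
            break
        # Find a row at or below r with a nonzero entry in column c.
        p = next((i for i in range(r, rows) if R[i][c] != 0), None)
        if p is None:
            continue                        # no pivot in this column
        R[r], R[p] = R[p], R[r]
        pivot_value = R[r][c]
        R[r] = [x / pivot_value for x in R[r]]   # create the leading 1
        for i in range(rows):
            if i != r and R[i][c] != 0:
                factor = R[i][c]
                R[i] = [x - factor * y for x, y in zip(R[i], R[r])]
        pivots.append(c)
        r += 1
    return R, pivots

A = [[1, 1, 1, 0, 0], [1, -1, 0, 1, 0], [0, 0, 0, 0, 1]]
R, pivots = rref(A)
print(R)
print(pivots)
```

The number of pivots is the rank, so the same function also settles the independence question in Example 6.26: the 3 x 3 matrix there has three pivot columns.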

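Since the leading 1s are in columns 1, 2, and 5, these are the vectors to use for a basis. Accordingly, $\{1 + x,\ 1 - x,\ x^2\}$ is a basis for $\mathcal{P}_2$ that contains $1 + x$ and $1 - x$.

Many questions about a linear transformation between finite-dimensional vector spaces can be handled by using the matrix of the linear transformation.

Example 6.68 The matrix of

$$T\begin{bmatrix} a & b \\ b & c \end{bmatrix} = (a - b) + (b - c)x + (c - a)x^2$$

with respect to the basis

$$\mathcal{B} = \left\{ \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix}, \begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix}, \begin{bmatrix} 0 & 0 \\ 0 & 1 \end{bmatrix} \right\}$$

of W and the standard basis $\mathcal{C} = \{1, x, x^2\}$ of $\mathcal{P}_2$ is

$$A = \begin{bmatrix} 1 & -1 & 0 \\ 0 & 1 & -1 \\ -1 & 0 & 1 \end{bmatrix}$$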

(Check this.) By Exercises 40 and 41 in Section 6.6, the rank and nullity of T are the same as those of A.

    A=[1 -1 0;0 1 -1;-1 0 1];
    rank(A)
    ans =
         2

It now follows from the Rank Theorem that nullity(T) = 3 - rank(T) = 3 - 2 = 1. If you want to construct bases for the kernel and range of T, you can proceed as follows:

    null(A,'r')
    ans =
         1
         1
         1

This is the coordinate vector of a matrix with respect to the basis $\mathcal{B}$ of W. Hence, the matrix is

$$1 \cdot \begin{bmatrix} 1 & 0 \\ 0 & 0 \end{bmatrix} + 1 \cdot \begin{bmatrix} 0 & 1 \\ 1 & 0 \end{bmatrix} + 1 \cdot \begin{bmatrix} 0 & 0 \\ 0 & 1 \end{bmatrix} = \begin{bmatrix} 1 & 1 \\ 1 & 1 \end{bmatrix}$$

so a basis for ker(T) is $\left\{ \begin{bmatrix} 1 & 1 \\ 1 & 1 \end{bmatrix} \right\}$.

    A=[1 -1 0; 0 1 -1; -1 0 1];
    rref(A)
    ans =
         1     0    -1
         0     1    -1
         0     0     0

Since the leading 1s are in columns 1 and 2, these columns in the original matrix form a basis for col(A). That is,

$$\operatorname{col}(A) = \operatorname{span}\left( \begin{bmatrix} 1 \\ 0 \\ -1 \end{bmatrix}, \begin{bmatrix} -1 \\ 1 \\ 0 \end{bmatrix} \right)$$

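This time you have coordinate vectors with respect to the basis $\mathcal{C}$ of $\mathcal{P}_2$, so a basis for range(T) is $\{x - x^2,\ 1 - x^2\}$. (Here $x - x^2$ is the sum of the polynomials $1 - x^2$ and $-1 + x$ that correspond to columns 1 and 2 of A.)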

Chapter 7: Distance and Approximation

Computations involving the inner product $\langle f, g \rangle = \int_a^b f(x)g(x)\,dx$ in $\mathcal{C}[a, b]$ can be done using MATLAB's symbolic math toolbox. Symbolic expressions are defined by placing the expression in single quotes and using the sym command.

Example 7.6

    f=sym('x'); g=sym('3*x-2');

The command int(F,a,b) computes $\int_a^b F(x)\,dx$.

    int(f^2,0,1)
    ans =
    1/3
    int((f-g)^2,0,1)
    ans =
    4/3
    int(f*g,0,1)
    ans =
    0
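For polynomials, the three integrals in Example 7.6 can be checked exactly without a symbolic toolbox. The sketch below is in plain Python (not one of the systems covered here); the helper names poly_mul, integrate, and inner are assumptions of this sketch, and a polynomial is stored as a list of coefficients in increasing powers of x.

```python
from fractions import Fraction

def poly_mul(p, q):
    """Multiply two polynomials given as coefficient lists (increasing powers)."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def integrate(p, a, b):
    """Exact integral of the polynomial p over the interval [a, b]."""
    def antiderivative_at(x):
        return sum(Fraction(c, k + 1) * x ** (k + 1) for k, c in enumerate(p))
    return antiderivative_at(Fraction(b)) - antiderivative_at(Fraction(a))

def inner(p, q, a=0, b=1):
    """Inner product of p and q: the integral of p(x)q(x) over [a, b]."""
    return integrate(poly_mul(p, q), a, b)

f = [0, 1]                                   # f(x) = x
g = [-2, 3]                                  # g(x) = 3x - 2
diff = [fc - gc for fc, gc in zip(f, g)]     # f(x) - g(x) = 2 - 2x

print(inner(f, f), inner(diff, diff), inner(f, g))
```

The three values are 1/3, 4/3, and 0, matching the output of int.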

The various norms discussed in Section 7.2 are built into MATLAB. The format of the command is norm(v,n), where v is a vector and n is a positive integer or the expression inf. Use n=1 for the sum norm, n=2 for the Euclidean norm, and n=inf for the max norm. Typing norm(v) is the same as typing norm(v,2).

    v=[1 -2 5];
    norm(v,1)
    ans =
         8
    norm(v)
    ans =
        5.4772
    norm(v,inf)
    ans =
         5

If the command norm is applied to a matrix, you get the induced operator norm.

Example 7.19

    A=[1 -3 2; 4 -1 -2; -5 1 3];
    norm(A,1)
    ans =
        10
    norm(A,inf)
    ans =
         9

The condition number of a square matrix A can be obtained with the command cond(A,n), where n is equal to 1, 2, or inf.

Example 7.21

    A=[1 1; 1 1.0005];
    c1=cond(A,1), c2=cond(A), cinf=cond(A,inf)
    c1 =
       8.0040e+03
    c2 =
       8.0020e+03
    cinf =
       8.0040e+03
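The 1-norm and infinity-norm of a matrix, and the corresponding condition numbers, are simple enough to compute directly from their definitions. The following plain-Python sketch (Python is not one of the systems covered here; all function names are illustrative) reproduces Examples 7.19 and 7.21 in exact arithmetic. It covers only the 2 x 2 case for the inverse and does not attempt the 2-norm condition number that cond(A) returns.

```python
from fractions import Fraction

def norm_1(A):
    """Induced 1-norm: the largest absolute column sum."""
    return max(sum(abs(x) for x in col) for col in zip(*A))

def norm_inf(A):
    """Induced infinity-norm: the largest absolute row sum."""
    return max(sum(abs(x) for x in row) for row in A)

def inverse_2x2(A):
    """Inverse of a 2x2 matrix (pass Fraction entries for exact results)."""
    (a, b), (c, d) = A
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]

def cond(A, norm):
    """Condition number of a 2x2 matrix with respect to the given norm."""
    return norm(A) * norm(inverse_2x2(A))

A = [[1, -3, 2], [4, -1, -2], [-5, 1, 3]]
print(norm_1(A), norm_inf(A))

B = [[Fraction(1), Fraction(1)], [Fraction(1), Fraction("1.0005")]]
print(float(cond(B, norm_1)), float(cond(B, norm_inf)))
```

The norms are 10 and 9, and both condition numbers equal 8004.0005 exactly, which MATLAB displays as 8.0040e+03.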

MATLAB can perform least squares approximation in one step. If the system Ax = b has a nonsquare coefficient matrix A, then the command A\b returns the least squares solution of the system.

Example 7.26

    A=[1 1; 1 2; 1 3]; b=[2; 2; 4];
    A\b
    ans =
        0.6667
        1.0000

The singular value decomposition is also built into MATLAB.

Example 7.34(b)

    A=[1 1; 1 0; 0 1];
    svd(A)
    ans =
        1.7321
        1.0000

This command produces a vector containing numerical approximations to the singular values of A. If you want to see the matrices $U$, $\Sigma$, and $V$, then you must enter

    [U,S,V] = svd(A)
    U =
        0.8165    0.0000   -0.5774
        0.4082   -0.7071    0.5774
        0.4082    0.7071    0.5774
    S =
        1.7321         0
             0    1.0000
             0         0
    V =
        0.7071   -0.7071
        0.7071    0.7071

You can easily verify that you have an SVD of A by computing $U\Sigma V^T$.

    U*S*V'
    ans =
        1.0000    1.0000
        1.0000   -0.0000
        0.0000    1.0000

As expected, the result is A. The pseudoinverse is a built-in function in MATLAB.

Example 7.39(b)

    A=[1 1; 1 0; 0 1];
    pinv(A)
    ans =
        0.3333    0.6667   -0.3333
        0.3333   -0.3333    0.6667
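When A has linearly independent columns, its pseudoinverse is $(A^TA)^{-1}A^T$, so the decimals above can be checked against exact fractions. Here is a minimal sketch in plain Python (not one of the systems covered here); pinv_two_columns is a hypothetical helper that handles only matrices with exactly two columns, and it deliberately refuses a matrix with dependent columns, for which this formula does not apply.

```python
from fractions import Fraction

def pinv_two_columns(A):
    """Pseudoinverse (A^T A)^(-1) A^T of a matrix with two independent columns."""
    At = [list(col) for col in zip(*A)]          # rows of At are the columns of A
    # Gram matrix A^T A: entry (i, j) is the dot product of columns i and j of A.
    G = [[sum(x * y for x, y in zip(r, c)) for c in At] for r in At]
    det = G[0][0] * G[1][1] - G[0][1] * G[1][0]
    if det == 0:
        raise ValueError("columns of A are linearly dependent")
    G_inv = [[Fraction(G[1][1], det), Fraction(-G[0][1], det)],
             [Fraction(-G[1][0], det), Fraction(G[0][0], det)]]
    # Multiply (A^T A)^(-1) by A^T.
    return [[sum(g * a for g, a in zip(row, col)) for col in zip(*At)]
            for row in G_inv]

A = [[1, 1], [1, 0], [0, 1]]
for row in pinv_two_columns(A):
    print(row)
```

The rows are (1/3, 2/3, -1/3) and (1/3, -1/3, 2/3), which round to the entries returned by pinv.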

Using the SVD, you can find minimum length least squares solutions of linear systems.

Example 7.40

    A=[1 1; 1 1]; b=[0; 1];
    pinv(A)*b
    ans =
        0.2500
        0.2500

MATLAB can apply the SVD to achieve digital image compression. The following procedure will convert a grayscale image called file.ext (where file is the name of the file and ext is the file format: JPEG, GIF, TIFF, etc.) into a matrix and then use the SVD to produce rank k approximations to the original for any value of k up to the rank of the matrix. The following example uses k = 12.

    A = imread('file.ext');
    A = double(A);
    figure(1); colormap(gray); imagesc(A);
    [U,S,V] = svd(A);
    k = 12;
    Ak = U(:, 1:k)*S(1:k, 1:k)*V(:, 1:k)';
    figure(2); colormap(gray); imagesc(Ak);
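In matrix terms, the line that defines Ak keeps only the first k terms of the outer product form of the SVD. As a sketch, writing $\mathbf{u}_i$ and $\mathbf{v}_i$ for the columns of U and V, $\sigma_i$ for the singular values, and assuming the image matrix is $m \times n$ (symbols introduced here for illustration):

```latex
A_k \;=\; \sum_{i=1}^{k} \sigma_i\, \mathbf{u}_i \mathbf{v}_i^{T}
    \;=\; U_k\, \Sigma_k\, V_k^{T},
\qquad
\text{storage: } k(m + n + 1) \text{ numbers instead of } mn .
```

Here $U_k$ and $V_k$ consist of the first k columns of U and V, and $\Sigma_k$ is the leading $k \times k$ block of S, exactly as in the MATLAB expression U(:, 1:k)*S(1:k, 1:k)*V(:, 1:k)'. The compression comes from storing these three factors rather than the full matrix, which pays off when k is small compared with m and n.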

Das könnte Ihnen auch gefallen

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (121)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (588)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (266)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (400)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5794)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2259)

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (345)

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

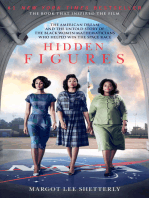

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (895)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (74)

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- Physics Formula SheetDokument4 SeitenPhysics Formula Sheethitachi hadiNoch keine Bewertungen

- Panel Data EconometricsDokument399 SeitenPanel Data EconometricsNuur AhmedNoch keine Bewertungen

- Julian SchwingerDokument23 SeitenJulian SchwingerPedro FigueroaNoch keine Bewertungen

- 10 Dichotomous or Binary ResponsesDokument74 Seiten10 Dichotomous or Binary ResponsesEdwin Johny Asnate SalazarNoch keine Bewertungen

- L 83Dokument16 SeitenL 83Edwin Johny Asnate SalazarNoch keine Bewertungen

- Using SPSS For One Sample Tests - Page 1Dokument4 SeitenUsing SPSS For One Sample Tests - Page 1Edwin Johny Asnate SalazarNoch keine Bewertungen

- Appendix A: Exercises A N NDokument5 SeitenAppendix A: Exercises A N NEdwin Johny Asnate SalazarNoch keine Bewertungen

- Example 2: Role-Play (Intermedíate To Upper Intermedíate) : TpgjhjngspeakingDokument3 SeitenExample 2: Role-Play (Intermedíate To Upper Intermedíate) : TpgjhjngspeakingEdwin Johny Asnate SalazarNoch keine Bewertungen

- Using V O A Speci To Improve Adva Learners' Product High Frequency 1Dokument1 SeiteUsing V O A Speci To Improve Adva Learners' Product High Frequency 1Edwin Johny Asnate SalazarNoch keine Bewertungen

- Survey Data Analysis in Stata: Jeff PitbladoDokument47 SeitenSurvey Data Analysis in Stata: Jeff PitbladoEdwin Johny Asnate SalazarNoch keine Bewertungen

- IBM SPSS SamplePower 3 Easy Interface ManualDokument12 SeitenIBM SPSS SamplePower 3 Easy Interface ManualEdwin Johny Asnate SalazarNoch keine Bewertungen

- Using Stata For One Sample Tests: Ztesti 36 8.7 .3 8.6, LevelDokument7 SeitenUsing Stata For One Sample Tests: Ztesti 36 8.7 .3 8.6, LevelEdwin Johny Asnate SalazarNoch keine Bewertungen

- Work Chapter TestDokument1 SeiteWork Chapter TestEpay Castañares LascuñaNoch keine Bewertungen

- Faraday's Law's of Electromagnetic Induction - First Law, Second Law - D&E NotesDokument3 SeitenFaraday's Law's of Electromagnetic Induction - First Law, Second Law - D&E NotesKiran KarthikNoch keine Bewertungen

- Assignment 3 EMTDokument3 SeitenAssignment 3 EMTelen19111076 KFUEITNoch keine Bewertungen

- NCERT Solutions For Class 12 Maths Chapter 3 MatricesDokument53 SeitenNCERT Solutions For Class 12 Maths Chapter 3 MatricesProphetNoch keine Bewertungen

- Interband TransitionDokument6 SeitenInterband TransitionNawaz KhanNoch keine Bewertungen

- Chapter 12.1Dokument19 SeitenChapter 12.1Meera PatelNoch keine Bewertungen

- Preliminary Concepts: 1.1 1.1.1 Historical PerspectiveDokument43 SeitenPreliminary Concepts: 1.1 1.1.1 Historical Perspectivegeo angNoch keine Bewertungen

- 2005QUA1HW5Dokument13 Seiten2005QUA1HW5afaelNoch keine Bewertungen

- Bec 9 - Science: Third QuarterDokument12 SeitenBec 9 - Science: Third QuarterEren YaegerrNoch keine Bewertungen

- Dynamic Model of Robot Manipulators: Claudio MelchiorriDokument65 SeitenDynamic Model of Robot Manipulators: Claudio MelchiorriSaxenaNoch keine Bewertungen

- Soalan DifferentiationDokument9 SeitenSoalan DifferentiationKhairuddin MuhamadNoch keine Bewertungen

- Inner Product Spaces, Orthogonality: Supplementary ProblemsDokument6 SeitenInner Product Spaces, Orthogonality: Supplementary ProblemsDarwinNoch keine Bewertungen

- CO1-I-1 (Part - 1)Dokument46 SeitenCO1-I-1 (Part - 1)Sivateja Nallamothu100% (1)

- MATH858D Markov Chains: Maria CameronDokument44 SeitenMATH858D Markov Chains: Maria CameronadelNoch keine Bewertungen

- 1 The Paramagnet To Ferromagnet Phase TransitionDokument7 Seiten1 The Paramagnet To Ferromagnet Phase TransitionYuyun Tri MulyaniNoch keine Bewertungen

- Cavity Optomagnonics: Silvia Viola KusminskiyDokument51 SeitenCavity Optomagnonics: Silvia Viola KusminskiyRaghvendra PratapNoch keine Bewertungen

- Important Questions For CBSE Class 12 Maths Chapter 3Dokument13 SeitenImportant Questions For CBSE Class 12 Maths Chapter 3todiwov781Noch keine Bewertungen

- Vector Calculus Independent Study Unit 6: Double and Triple IntegralsDokument3 SeitenVector Calculus Independent Study Unit 6: Double and Triple IntegralsAdil ShafiqNoch keine Bewertungen

- 1-1 Linear AlgebraDokument17 Seiten1-1 Linear AlgebraBhagvat prasadNoch keine Bewertungen

- Worksheet C: Raphs of UnctionsDokument2 SeitenWorksheet C: Raphs of UnctionsBONGLAV JemasonNoch keine Bewertungen

- 05 Compton ScatteringDokument36 Seiten05 Compton ScatteringrevnaldNoch keine Bewertungen

- Electric Charges and Fields Fomulae Sheet NewDokument2 SeitenElectric Charges and Fields Fomulae Sheet NewSuresh JangraNoch keine Bewertungen

- Morton Symmetric Product of The CircleDokument5 SeitenMorton Symmetric Product of The Circlevoyager7677Noch keine Bewertungen

- Vector Electricity and MagnetismDokument104 SeitenVector Electricity and MagnetismLyka LasilasNoch keine Bewertungen

- A High Flux Source of Cold Strontium Atoms: 1a 1 1 1 2 1 1b 1c 123dDokument12 SeitenA High Flux Source of Cold Strontium Atoms: 1a 1 1 1 2 1 1b 1c 123dJoel AuccapucllaNoch keine Bewertungen

- GRP 1Dokument7 SeitenGRP 1AKASH YATI BHARDWAJNoch keine Bewertungen

- MetII PDFDokument8 SeitenMetII PDFMadara SantosNoch keine Bewertungen

- Black Hole IntroDokument14 SeitenBlack Hole Introangel99battelNoch keine Bewertungen