Frontiers in Sensors (FS) Volume 1 Issue 1, January 2013

www.seipub.org/fs/

Modeling and Simulation of 3D Laser Range Scanner With Generic Interface for Robotics Applications

Saso Koceski*1, Natasa Koceska2

1,2 Faculty of Computer Science, University Goce Delcev Stip
st. Krste Misirkov n.10-A, 2000 Stip, Macedonia

*1 saso.koceski@ugd.edu.mk; 2 natasa.koceska@ugd.edu.mk

Abstract

The aim of this work is to describe the physics-based simulation model of a custom 3D range laser scanner, together with a generic interface which allows client applications to interact seamlessly with instances of the physical and virtual 3D range scanner. The physics-based virtual model of the 3D range scanner is realized in the rigid body dynamics environment provided by the Open Dynamics Engine (ODE) library. In order to simulate the stepper motor used in the real scanner, the joints are virtually constrained and motorized with the parameters obtained from the real device. The ray-cast method is used for modeling the rays. The incidence angle and the surface properties of the object hit by the rays are also taken into consideration, as is the sensor noise that represents the uncertainty of the measurement. We have also tried to mimic the internal sensor control logic. In order to verify the developed model and the interface architecture, a simple client application for 3D data acquisition was developed and connected through the same interface to both the physical and the virtual device. The results of the evaluation are also reported and discussed.

Keywords

Modeling; Simulation; 3D Range Scanner; Rigid Body Dynamics; Ray-cast Method

Introduction

During the last two decades, robots have attracted substantially increasing attention. Robots participate in meaningful and intelligent interactions with other entities: inanimate objects, human users, or other robots. In order to perform the assigned tasks reliably, a robot first needs to be able to sense its environment accurately. Sensing is the only way through which the robot can assess the status of its environment, and its own status within it. Moreover, in many practical applications, robots inevitably have to operate in three-dimensional (3D) environments for various tasks, such as navigation, obstacle avoidance, object recognition and manipulation. Therefore, in the field of robotics, there is an increasing need for accurate and low-cost vision sensors for three-dimensional environment perception. Compared to 2D vision systems, 3D visual sensors can provide direct geometric information about the environment. 3D laser range scanners enjoy rising popularity and are widely used for these purposes.

Many different approaches can be used to build 3D scanning devices. Some of them are based on passive vision: Narvaez and Ramirez (2012), for example, present a 3D scanner based on two web cams; Lv and Zhang (2011) have constructed an easy-to-use 3D scanner based on binocular stereo vision using one hand-held line scanner and two cameras. On the other hand, numerous solutions for building 3D scanners based on active vision are reported in the literature. Früh and Zakhor (2004) present a system to map cities in 3D using two 2D laser scanners mounted on a truck, one vertically and one horizontally. Thrun et al. (2000) also use two laser range scanners to obtain 3D models of underground mines. The same setup has been used by Hähnel et al. (2003) in indoor and outdoor applications. A different approach is presented by Kohlhepp et al. (2003), who use a 2D laser scanner that points forward and rotates around the front axis. In a similar way, a rotating 2D laser setup is chosen by Wulf et al. (2004), where the scanner rotates around the vertical axis and is mounted either vertically or pointing upwards. Each of these approaches comes with its own limitations, advantages and elevated costs.

Development of an accurate virtual model of a 3D range scanner sensor with off-line programming capabilities (like Easy-Rob and RobotWorks, 2012), together with robot models, will enable fast and cheap testing of different scenarios and algorithms before implementing them in a real environment. Virtual models and simulation tools are often of significant importance in order to reduce development time and avoid damage due to control algorithm failure. Most of the past research in mobile robot simulation has focused on simulating the structure, kinematics and dynamics of the robot, without considering sensor modeling. Recently, several robot simulators which include range scanner models have been developed. Many of these simulators are designed for special robots, and only a few of them offer the possibility of designing one's own robots. Some of these are restricted to a two-dimensional environment (Gerkey et al., 2003; Koenig and Howard, 2004), while plenty of 3D simulators offer 3D range scanner models (Monajjemi et al., 2011; Pinciroli et al., 2011; Schmits et al., 2011; Balaguer et al., 2008; Michel, 2004; Gonçalves, 2008; Pereira, 2012). The sensor models used in these simulators are too generalized, and they typically fail to take into account the sensor dynamics and its specific properties, such as the dependency on surface properties, the angle of incidence, and the uncertainty of the measurement.

In this work, we present a novel approach to creating a physics-based simulation model of an accurate, low-cost 3D laser scanner developed in our laboratory, which is planned to be used in different mobile robotics applications. It is based on one 2D range sensor which rotates around a horizontal axis by means of one stepper motor. The scanner model is realized in the rigid body dynamics environment provided by the Open Dynamics Engine (ODE) library (Smith, 2012). This library currently uses a first-order Euler method to solve the equations of motion. The library uses the Lagrange multipliers technique and adopts a simplified Coulomb friction model.

In the modeling of the 2D range sensor, the uncertainty of the measurement, the dependency on the incidence angle and the surface properties of the object from which the beam is reflected are considered. In order to achieve more realistic behavior of the simulation model, we have also tried to mimic the stepper motor driver board and the internal range sensor control logic. This means encoding range sensor commands in the 2D sensor's protocol, so that the data are fed into the very same functions. A novel architecture of a generic 3D range scanner interface, which allows client applications to interact seamlessly with instances of the physical and virtual 3D range scanner model, is also proposed.

The rest of the paper is organized as follows: first, we explain the design of the physical 3D range scanner; in the next chapter the physics-based virtual model of the scanner is presented, followed by a chapter in which the developed application interface is explained. The experimental evaluation of the proposed simulation model and the conclusions derived are presented in the last two chapters.

Design of the 3D Range Scanner Prototype

The developed 3D range laser scanner prototype is built on the basis of the 2D Time-Of-Flight (TOF) Laser Radar (LADAR) LD-OEM1000 manufactured by SICK (SICK, 2012). The 2D laser range finder is attached to a plate and placed in an aluminum frame, so that it can be rotated (Figure 1). On top of the plate, a mount for a camera is foreseen. The rotation axis is horizontal (pitch). In order to have a balanced pivot, the construction is made so that the weight of the moving part lies along the pivot axis and the pivot is supported at both ends. A stepper motor (code RS 440-442) from TECO ELECTRO DEVICES, which rotates the movable part, is connected on the left side. The motor has a step angle of 1.8° with an accuracy of 5%, a detent torque of 30 mNm and a holding torque of 500 mNm. In order to enable higher holding torque and higher angular resolution, an additional worm gear (series VF 30 P from Bonfiglioli) with a transmission ratio of 20 is added.

FIG. 1 DEVELOPED PROTOTYPE AND THE SCHEMA OF ITS STRUCTURE
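As a worked illustration of the effect of the gear stage, the following minimal Python sketch computes the angular step of the plate and an idealized holding torque from the figures quoted above (1.8° motor step, transmission ratio 20, 500 mNm holding torque). The function names are hypothetical, the half-step option assumes the motor step is simply halved, and the torque figure assumes a lossless gear, which the paper does not state.

```python
MOTOR_STEP_DEG = 1.8          # full-step angle of the stepper motor
GEAR_RATIO = 20               # worm gear transmission ratio
HOLDING_TORQUE_MNM = 500.0    # motor holding torque in mNm


def output_step_deg(half_step=False):
    """Angular step of the plate for one motor step (full or half step)."""
    motor_step = MOTOR_STEP_DEG / 2 if half_step else MOTOR_STEP_DEG
    return motor_step / GEAR_RATIO


def ideal_output_holding_torque_nm():
    """Holding torque at the plate in Nm, ASSUMING a lossless gear stage."""
    return HOLDING_TORQUE_MNM * GEAR_RATIO / 1000.0


if __name__ == "__main__":
    print(output_step_deg())                 # plate step in full-step mode
    print(output_step_deg(half_step=True))   # plate step in half-step mode
    print(ideal_output_holding_torque_nm())
```

Under these assumptions one full motor step moves the plate by about 0.09°, and the gear multiplies the holding torque to roughly 10 Nm before losses.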

The 3D laser range finder uses only standard computer interfaces. The stepper motor is connected directly to the parallel port. For the LADAR, the CAN data interface was chosen (instead of RS-232 or RS-422) because it enables a high data acquisition rate, up to 1 Mbit/s, and can easily be connected to a USB port via a low-cost CAN-to-USB adaptor. This permits the developed prototype to be used with any computer and mobile platform.

The LADAR measures its environment in two-dimensional polar coordinates. When a measuring beam strikes an object, the position is determined in terms of distance and direction. The LADAR range depends on the surface properties of the scanned object and on the angle of incidence, and can vary from 0.5 m up to 250 m. A field of 360° (h) x 118.8° (v) is scanned with selectable horizontal (multiples of 0.125°) and vertical (multiples of 0.09°) resolutions. The scanner head rotates with a programmable frequency of 5 to 20 Hz.

Development of the 3D Range Scanner Physics-Based Virtual Model

In the creation of the virtual 3D range scanner model, the goal is to mimic the physical scanner's behavior as completely as possible and to be able to use the same flow of information as in the physical model. Thus, we have to implement the following parts: the 2D range sensor and its control unit; the stepper motor and its drive board; and the Encode/Decode component.

The 3D range scanner virtual model is developed in the rigid body dynamics environment provided by ODE. This environment allows fairly realistic modeling of physical interactions. The developed simulation model closely matches the real 3D range scanner in many aspects. All parts that constitute the scanner are modeled as rigid bodies. Each of them has diverse attributes describing its appearance (e.g. color, texture) and physical behavior (e.g. mass, geometrical dimensions, center of mass and moment of inertia). ODE provides several basic geometries: Box, Cylinder, Capped-Cylinder and Sphere. This set has been extended by the so-called ComplexShape. A body of that class has a graphical representation based on a number of geometric primitives and a physical representation based on a set of basic bodies which approximate the shape. The LD-OEM1000 sensor has been modeled as a single body with the same position of the center of mass and the same moment of inertia with respect to the three spatial axes as its real counterpart. The virtual sensor is graphically represented as a complex shape composed of several basic geometries, boxes and cylinders (Figure 2). Some approximation is made in its geometrical modeling. For instance, although the head of the sensor is not exactly cylindrical, we did not consider alternatives such as meshes, since they are computationally more expensive. The same approach is used for modeling the other parts of the 3D scanner.

FIG. 2 VIRTUAL 3D RANGE SCANNER MODEL DEVELOPED AS RIGID BODY

For modeling the stepper motor functionality, the ODE angular motor is used in conjunction with ball-socket and hinge joints. By attaching these joints between the frame and the support plate, angular velocity can be controlled on up to three axes, allowing torque motors and stops to be set for rotation about those axes. In our case the movements are limited to rotation around the horizontal axis. The rotary motor operates by applying a torque to the hinge joint in the dynamics simulator. The amount of torque T applied to the hinge axis is based on the configuration of the motor and the voltage applied to the motor, using equation 1.

$$T = G k_t \, \frac{V - \omega G / k_v}{R} \qquad (1)$$
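A minimal Python sketch of equation 1 is given here for illustration. It assumes the geared DC motor reading of the formula, in which the back-EMF term is the output shaft speed times the gear ratio divided by the back-EMF constant. The function name and the numeric values in the usage lines are hypothetical and are not parameters of the real device.

```python
def motor_torque(voltage, omega, k_t, k_v, resistance, gear_ratio=1.0):
    """Output-shaft torque according to equation 1.

    voltage     V   : voltage applied to the motor
    omega       w   : current angular velocity of the output shaft
    k_t             : torque constant of the motor
    k_v             : back-EMF constant (speed per volt)
    resistance  R   : internal motor resistance
    gear_ratio  G   : motor turns per output shaft turn
    All quantities are assumed to be given in mutually consistent units.
    """
    back_emf = omega * gear_ratio / k_v          # voltage lost to back-EMF
    current = (voltage - back_emf) / resistance  # armature current
    return gear_ratio * k_t * current


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    print(motor_torque(12.0, 0.0, k_t=0.05, k_v=10.0, resistance=2.0, gear_ratio=20.0))
    print(motor_torque(12.0, 6.0, k_t=0.05, k_v=10.0, resistance=2.0, gear_ratio=20.0))
```

With these illustrative values the torque is largest at standstill and falls to zero at the no-load speed, where the back-EMF equals the applied voltage.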

Here V is the voltage applied to the motor, $k_t$ is the torque constant of the motor, $k_v$ is the back-EMF constant, R is the internal motor resistance, G is the gear head ratio of motor turns to output shaft turns (if a gear head is attached), and $\omega$ is the current angular velocity of the output shaft. Once the torque is computed, it is passed to the hinge joint in ODE for correct dynamics simulation during the next simulation step. In the simulator the speeds are changed by setting the desired angular speed and the maximum torque of the motor connecting the plate with the frame. The desired speed is reached in the shortest time possible, i.e. by applying the maximum torque, and this speed is maintained afterward.

ODE's ray collision primitives are used to simulate the laser beams. We define a ray that simulates the beam with the following characteristics: the start position, the ray direction, the minimum threshold (Dmin), corresponding to the minimum measurable distance of the sensor, and the maximum threshold (Dmax), corresponding to the maximum measurable distance of the sensor. In our case, experimental tests gave a value of Dmin = 0.9 m for the minimal threshold at which the sensor measures correctly, and we have set Dmax = 30 m (which satisfies the needs of our applications). Rays are generated from the ray source. The first intersection of these rays with the objects in the environment is returned as the distance information. In the simulation, instead of the time of flight, the distance is obtained directly using low-level functions. The principle of the range scan is also similar. At each simulation step only a single planar scan is made with the scanner. Thus, 3D scans need several simulation steps to move the motor to the required positions.

In the modeling of the range sensor, the dependency on the incidence angle and on the surface properties of the object from which the rays are reflected should also be considered. In general, reflections can be modeled as a combination of specular and diffuse reflections. Perfect specular reflections (as would occur on a perfect mirror) obey the specular reflection law, which states that the angle of incidence equals the angle of reflection. The beam is not widened in the process and continues without any beam divergence. In contrast, diffuse reflection spreads the incoming light over the entire hemisphere surrounding the surface of incidence. In the implemented model, the reflection properties of the objects in the environment are computed following Lambert's cosine law, which models diffuse reflections. Specular reflections are neglected, since situations in which perfect specular reflections return to the sensor in the setup described above are rare.

It is assumed that the emitter generates a laser beam of intensity $I_0$ which does not display any beam divergence, and that at the target object it is scattered homogeneously in all backward directions, i.e. the reflected flux is distributed equally over a hemisphere (Lambertian surface). The detector is co-located with the emitter, faces the same direction and is assumed to have a circular receptor surface of diameter d. Hence the reflected light can be thought of as originating from a Lambertian point source located at the point of incidence. An illustration of the sensor model is shown in Figure 3.

FIG. 3 SENSOR MODEL

Lambert's law states that the light intensity $dI$ emitted into an infinitesimally small solid angle $d\Omega$ at angle $\theta$ from the surface normal is

$$dI = I_N \cos(\theta)\, d\Omega \qquad (2)$$

with $I_N$ the luminous intensity, which corresponds to the intensity emitted in the direction of the surface normal at the point of incidence of the laser beam. $I_N$ is the maximal intensity reflected in any direction by the Lambertian point source. The total light intensity reflected at the point of incidence is

$$I = \int_0^{\pi/2} I_N \cos(\theta)\, 2\pi \sin(\theta)\, d\theta \qquad (3)$$

In equation 3, the solid angle $d\Omega$ has been replaced by the solid angle $2\pi \sin(\theta)\, d\theta$, which corresponds to a spherical segment (zone) of aperture angle $d\theta$. Integrating from 0 to $\pi/2$ covers the entire hemisphere of reflectance. The total intensity of the reflected light is related to the emitted intensity by

$$I = r I_0 \qquad (4)$$

with $r$ being the coefficient of reflection ($0 \le r \le 1$), the only modeled optical material property of the environment. Solving equation 3 for $I_N$ and using equation 4 yields

$$I_N = \frac{r I_0}{\pi} \qquad (5)$$
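For readers who want the intermediate step, the integral in equation 3 can be evaluated in closed form. The short derivation below is added for clarity and uses only the symbols already defined in the text:

$$I = 2\pi I_N \int_0^{\pi/2} \cos(\theta)\sin(\theta)\, d\theta = 2\pi I_N \left[ \tfrac{1}{2}\sin^2(\theta) \right]_0^{\pi/2} = \pi I_N$$

Equating this with equation 4 gives $\pi I_N = r I_0$, which is equation 5.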

The light intensity $I_{rs}$ received at the sensor depends on the solid angle $\Omega$ covered by the receptive surface in relation to the surface of a hemisphere of radius $l$, which is the total surface into which the light is emitted:

$$\Omega(l, d) = \frac{\pi d^2 / 4}{4 \pi l^2 / 2} = \frac{d^2}{8 l^2} \qquad (6)$$
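The pieces introduced so far (Lambert's law, equation 5 and equation 6) can be combined numerically. The Python sketch below is a minimal illustration under those assumptions and is not the authors' implementation. The function names and the detection threshold argument `i_min` are hypothetical, and `l` and `d` must be given in the same length unit.

```python
import math


def solid_angle_fraction(l, d):
    """Equation 6: receptor disc area over the hemisphere area of radius l."""
    return (math.pi * d ** 2 / 4.0) / (2.0 * math.pi * l ** 2)


def received_intensity(r, i0, theta, l, d):
    """Intensity reaching the receptor from a Lambertian point source.

    r     : coefficient of reflection, 0 <= r <= 1
    i0    : emitted beam intensity
    theta : angle between the surface normal and the beam, in radians
    """
    i_n = r * i0 / math.pi                      # equation 5
    return i_n * math.cos(theta) * solid_angle_fraction(l, d)


def range_reading(distance, r, theta, i0, d, i_min):
    """Return the distance if enough light comes back, otherwise None.

    i_min is a hypothetical detection threshold in the same units as i0.
    """
    if received_intensity(r, i0, theta, distance, d) < i_min:
        return None
    return distance
```

The returned intensity falls with the square of the distance and with the cosine of the incidence angle, so a dark or strongly tilted surface drops below any fixed threshold at a shorter range than a bright, frontal one.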

In equation 5, the surface of the sensor is approximated by a (planar) circular surface. Combining Lamberts cosine law with equation 4 and equation 5 yields the light intensity received at the

10

Frontie rs in Sensors (FS) Volume 1 Issue 1, January 2013

www.se ipub.org/fs/

sensor:

I rs = I N cos( ) = rI 0

LD-OEM does not request any data.

cos( )

d2 8l 2

(7)

When generating the sensor output, it is assumed that if sufficient light intensity is sensed, a correct distance value can be determined. If the intensity drops below a threshold, no output is generated. The sensor response model described above has been validated using data contained in the technical specifications provided by the sensor manufacturer (SICK, 2012), which relates minimum required reflectance values to target range, supposing that a full spot strike on the object. A good agreement between the model and the technical specifications data are found for range values higher than 0.5 meters using a value of Irs min = 0.00016 [photons/(scm 2sr)] (specifying I0=1[photons/(scm 2sr)] and d=4cm). The fact that only one free-parameter (Irs min) is required to fit data for four different values of visibility indicates that the physical basis of the model is reasonable at range values higher than 0.5 meters. We also modeled the sensor noise that represents the uncertainty on the measurement. We approximate this error by a Gaussian law. The noise is then defined by:

N = G + m

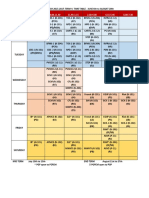

Consequently, requests are sent by the host whereas responses are sent by the LD-OEM. The remaining of the high byte determines the service group and the low byte defines the service number (Table 1).

TABLE 1 SERVICE CODE STRUCTURE Bit 15 Response bit Bit 148 Service group 0 ... 127 Bit 70 Service number 0 ... 255

LD-OEM provides the following services: Status Services Configuration Services Measurement Services Working Services Maintenance Services Routing Services File Services Monitor Services Application Services Adjust Services Special Services

(8)

Where is the standard deviation, m the mean of the Gaussian law, and G a Gaussian variable that can be computed by:

G = 2 ln( R1 ) cos(2R2 )

(9)

where R1 and R2 are two variables uniform in law on [0,1]. The implementation of the LD-OEM control unit is based on the sensors host protocol. The host protocol provides a set of commands to control the LD-OEM. This set of commands is divided into different service groups. When the LD-OEM has received a host protocol command, it answers with a certain response. The LD-OEM responds when the service has been processed. Generally, this takes less than one second. An exception is the service TRANS_ROTATE, which can take several seconds. In order to determine the kind of service of a host request the service codes are used. It can be seen as the command. Its data format is WORD. Th e most significant bit defines whether the code is a request or a response. For a request the most significant bit is set to zero and for a response it is set to one. Usually the
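The bit layout of Table 1 can be illustrated with a short Python sketch that packs and unpacks a 16-bit service code. The function names are hypothetical, and the group and number values used in the usage lines are arbitrary examples, not actual LD-OEM service identifiers.

```python
RESPONSE_BIT = 0x8000  # bit 15: 0 = request, 1 = response


def encode_service_code(group, number, response=False):
    """Pack a service group (0..127) and service number (0..255) into a WORD."""
    if not 0 <= group <= 127:
        raise ValueError("service group must be in 0..127")
    if not 0 <= number <= 255:
        raise ValueError("service number must be in 0..255")
    code = (group << 8) | number
    return code | RESPONSE_BIT if response else code


def decode_service_code(code):
    """Split a 16-bit service code into its three fields."""
    return {
        "response": bool(code & RESPONSE_BIT),
        "group": (code >> 8) & 0x7F,
        "number": code & 0xFF,
    }


if __name__ == "__main__":
    request = encode_service_code(group=1, number=2)   # arbitrary example values
    print(hex(request), decode_service_code(request))
    print(decode_service_code(request | RESPONSE_BIT))
```

Because the response bit is the only difference between a request and its answer, a decoder can match a response to the pending request by comparing the lower 15 bits.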

The control unit's encoder/decoder component, which is responsible for translating the input and output messages, is capable of processing the first four groups of services in the current implementation. A service request sent with missing or invalid parameters leads to a response whose return value indicates that the request was invalid. An invalid command request is answered by a SERVICE_FAILURE (FF00h).

The stepper motor drive board is implemented as a separate unit which continuously reads the LPT port status. It accepts three types of signals related to the movement of the stepper motor:

Direction (which corresponds to the LPT DATA0 line): according to the level of this signal, the stepper motor rotates clockwise or anticlockwise.

Clock (which corresponds to the LPT DATA1 line): the impulse which commands the execution of one motor step. The frequency of the square pulses determines the velocity of the stepper motor.

Mode (which corresponds to the LPT DATA2 line): when this signal is held at low level, the stepper motor works in one-phase-on mode, performing 200 steps per revolution. Otherwise, the motor works in half-step mode, performing 400 steps per revolution.
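The behavior of such a drive board can be sketched in a few lines of Python. This is a minimal illustration and not the authors' implementation: the class name is hypothetical, and it assumes that a step is executed on the rising edge of the clock line and that a high direction level means positive rotation, neither of which is specified in the text.

```python
DIR_BIT = 0x01     # LPT DATA0: direction
CLOCK_BIT = 0x02   # LPT DATA1: step clock
MODE_BIT = 0x04    # LPT DATA2: low = one-phase-on, high = half-step
GEAR_RATIO = 20    # worm gear between motor and plate


class VirtualDriveBoard:
    """Tracks the motor angle from successive values of the LPT data byte."""

    def __init__(self):
        self.motor_angle_deg = 0.0
        self._last_clock = 0

    def write(self, data_byte):
        clock = 1 if data_byte & CLOCK_BIT else 0
        if clock and not self._last_clock:                  # ASSUMED: rising edge steps
            step = 0.9 if data_byte & MODE_BIT else 1.8     # 400 or 200 steps per turn
            sign = 1 if data_byte & DIR_BIT else -1         # ASSUMED: high = positive
            self.motor_angle_deg += sign * step
        self._last_clock = clock

    @property
    def plate_angle_deg(self):
        """Angle of the scanner plate behind the gear stage."""
        return self.motor_angle_deg / GEAR_RATIO


if __name__ == "__main__":
    board = VirtualDriveBoard()
    for byte in (0x03, 0x01, 0x03, 0x01):   # two full steps in the positive direction
        board.write(byte)
    print(board.motor_angle_deg, board.plate_angle_deg)
```

The pulse frequency seen by `write` sets the rotation speed, which is how the clock line controls the motor velocity in the description above.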

3D Range Scanner Interface Architecture

The context diagram of the 3D range scanner's interface architecture is shown in Figure 4. The architecture allows different client applications to be connected to the 3D range scanner instances, physical or virtual, through a single interface. This implies that one part of the interface should be able to handle different application requests, and thus needs to manage various implementation issues. The interface is composed of several functional modules, each of which addresses specific issues: message Encoders/Decoders, which also check and report errors; external and internal message handlers, which act on predefined client and sensor messages; and communication components.

FIG. 4 CONTEXT DIAGRAM OF THE SYSTEM INCLUDING THE 3D RANGE SCANNER'S INTERFACE ARCHITECTURE

The top-level (level 1) data-flow diagram of the system is shown in Figure 5.

[Figure 5: data-flow diagram. Client (application logic, client messages encoder/decoder) <-> Interface (FEM encoder/decoder, FEMH, BEMH, BEM encoder/decoder) <-> 3D Range Sensor (LIDAR messages encoder/decoder, LIDAR control unit, step motor driver board, sensor measurement unit, step motor).]

FIG. 5 FIRST LEVEL DATA-FLOW DIAGRAM OF THE MAIN SYSTEM COMPONENTS

Message Encoders/Decoders

The Encoders/Decoders are responsible for transforming messages into the proper format, between the client application and the Front-End Message Handlers on one hand, and between the Back-End Message Handlers and the different 3D range scanner instances (physical or virtual) on the other. One or more are needed by client applications and one is normally needed per device. Encoders/Decoders are also responsible for error handling and error reporting.

Front-End and Back-End Message Handlers

The Front-End Message Handler (FEMH) is responsible for handling incoming requests from the user and, if necessary, redirects them to a Back-End Message Handler (BEMH). Depending on the type of the request received, the FEMH instance decides whether to read already obtained sensor data or to ask the BEMH to process the request, i.e. to pass the request on to the sensor. An FEMH is application specific, i.e. it handles requests from and responds to a specific user application. The BEMH routes requests to the sensor. Each sensor, physical or virtual, is handled by a BEMH instance. Thus, there are as many BEMH instances as sensors attached to the interface. When the BEMH instance gets the response back from the sensor, it redirects the response to the proper FEMH instance, which subsequently responds to the user. When the handler instances are created, unique identifiers are assigned to them and registered by the interface; these identifiers are preserved during the current working session.

Communication Units

The aim of the communication units in the system is to enable message delivery via a physical device between the sender's encoder and the recipient's decoder. Communication units play a very important role in mobile robotics applications, where sensors and user logic usually reside at different locations. Various communication components exist in the system. In our case the front-end communication unit is based on the TCP/IP protocol, while the back-end unit is based on the CAN communication protocol for the range sensor and on another simple protocol for the stepper motor, based on the three types of signals described before. In both cases an implementation is necessary in the Encode/Decode components. Some specific issues concerning the CAN protocol are reported in the following.

Experimental Work

In order to verify both the 3D range scanner model and the proposed interface architecture, a simple client application that can control the 3D range scanner and collect 3D data from the environment was developed. The simulator, scanner interface modules and client application are implemented in the C and C++ programming languages using the ODE library, on a PC with an AMD Athlon 64 processor at 2.4 GHz with 1 GB of RAM, an NVIDIA GeForce FX5500 with 256 MB of memory, and the Windows XP operating system. The visualization is done using OpenGL. The application is connected first to the physical and then to the virtual 3D range scanner instance.

A simple scene (Figure 6) is created in the real world and scanned. The scanner was placed on a wheeled wooden cart at a distance of 4180 mm from a large wall. Several simply shaped objects were placed in front of the wall and scanned with a horizontal apex angle of 20°, a vertical apex angle of 21.6°, a horizontal resolution of 0.25° and a vertical resolution of 0.18°. The simulation environment and all objects in it are defined using XML files, which are parsed to construct the virtual world. This reduces the task of loading a scene to a single line of code and allows much more complex environments and models to be created easily. XML files can also be easily extended with additional user parameters. The virtual scanner is configured with the same set of parameters. In both cases the same sequence of commands is sent: INITIALIZE, to initialize the 2D sensor; MOVEMOTOR (with attributes which indicate the number of steps and the direction), to move the 2D sensor to the low vertical apex position; ROTATE, to start the rotation of the 2D sensor head; MEASURE, to start the acquisition process; GET2DPROFILE, to acquire one 2D profile; MOVEMOTOR (with attributes which indicate one step forward in the scanning direction); IDLE, to exit the acquisition mode; and MOVEMOTOR, to return the LADAR to the initial position.

FIG. 6 REAL 3D RANGE SCANNER FACING AGAINST A CARTON CYLINDRICAL OBJECT IN THE UNIVERSITY CORRIDOR

The same scene is also virtually modeled (Figure 7) using ODE.

FIG. 7 VIRTUAL 3D RANGE SCANNER MODEL FACING AGAINST A VIRTUAL CYLINDRICAL OBJECT
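The acquisition sequence used for both scanner instances can be sketched as a command list generator. The Python sketch below is illustrative only: the tuple format and the direction labels are hypothetical, the plate step of 0.09° assumes full-step operation through the gear of ratio 20, and it further assumes that the scan is symmetric about the initial position and that GET2DPROFILE and the one-increment MOVEMOTOR are repeated for every profile.

```python
def scan_command_sequence(vertical_apex_deg=21.6, vertical_res_deg=0.18,
                          step_deg=0.09):
    """Build the list of commands for one 3D scan (hypothetical tuple format)."""
    steps_per_profile = round(vertical_res_deg / step_deg)      # motor steps between profiles
    n_profiles = round(vertical_apex_deg / vertical_res_deg) + 1
    steps_to_low = round((vertical_apex_deg / 2) / step_deg)    # ASSUMED symmetric scan

    commands = [
        ("INITIALIZE",),
        ("MOVEMOTOR", steps_to_low, "down"),   # go to the low vertical apex position
        ("ROTATE",),
        ("MEASURE",),
    ]
    for i in range(n_profiles):
        commands.append(("GET2DPROFILE",))
        if i < n_profiles - 1:
            commands.append(("MOVEMOTOR", steps_per_profile, "up"))
    commands.append(("IDLE",))
    commands.append(("MOVEMOTOR", steps_to_low, "down"))  # back to the initial position
    return commands


if __name__ == "__main__":
    cmds = scan_command_sequence()
    print(len(cmds), cmds[:5])
```

Because the same list is sent through the interface in both cases, the physical and the virtual scanner acquire the same number of profiles at the same plate angles under these assumptions.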

The typical flow of information through the interface during the acquisition process is the following. The client application sends a request to the desired 3D range scanner using some protocol (e.g. TCP/IP) or a direct connection. The interface extracts and identifies the client from the request and calls the proper FEMH instance. Depending on the request, the FEMH instance decides whether it is able to get the information immediately from the scanner's BEMH instance or whether it needs to ask the scanner's BEMH instance to pass the request on to the 3D range scanner. When the 3D range scanner's BEMH instance gets the response back from the 3D range scanner, it redirects the response to the proper FEMH instance, which in turn responds to the client.
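The request flow described above can be sketched with three small Python classes. This is a minimal illustration, not the authors' C/C++ implementation: all class and method names are hypothetical, the scanner is represented by any callable, and keeping already obtained data in a dictionary on the BEMH is an assumption made for the sketch.

```python
class BEMH:
    """Back-end handler: one instance per scanner, physical or virtual."""

    def __init__(self, scanner):
        self.scanner = scanner   # any callable mapping a request to a response
        self.cache = {}          # ASSUMED store of already obtained sensor data

    def process(self, request):
        response = self.scanner(request)   # pass the request on to the scanner
        self.cache[request] = response
        return response


class FEMH:
    """Front-end handler: one instance per client application."""

    def __init__(self, bemh):
        self.bemh = bemh

    def handle(self, request, use_cached=False):
        if use_cached and request in self.bemh.cache:
            return self.bemh.cache[request]    # read already obtained data
        return self.bemh.process(request)      # otherwise ask the scanner


class Interface:
    """Single point of contact that maps each client to its FEMH."""

    def __init__(self):
        self.femh_by_client = {}

    def register(self, client_id, bemh):
        self.femh_by_client[client_id] = FEMH(bemh)

    def request(self, client_id, request, use_cached=False):
        return self.femh_by_client[client_id].handle(request, use_cached)
```

Swapping the callable passed to `BEMH` is all that distinguishes a physical from a virtual scanner in this sketch, which mirrors the single-interface idea of the architecture.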

Figure 8 presents the point cloud obtained by scanning the cylindrical object in the real environment. Local errors, which can be referred to as noise, stayed within the range of -15 to 15 mm. Figure 9 shows the scan output of the virtual cylindrical object. In the virtual model, Gaussian distributed noise with zero mean and a standard deviation of 0.15 cm was added to each distance measurement. It can be observed that the scan output looks very realistic. A cylinder was fitted to the data in both cases. For the real scan, the fitted cylinder has a diameter of 196 mm, and for the virtual scan it has a diameter of 197 mm. In both cases the length of the cylinder was 1 m. These results are comparable with the dimensions of the real cylindrical object, which has a diameter of 195 mm and a length of 0.998 m. One can also observe the absence of mixed pixels in the simulated scan at the top of the cylinder.

Conclusions and Future Work

This paper proposed a physics-based simulation model of a custom 3D range laser scanner. Experimental work was carried out in order to compare the scanner model against the real one. The results show good correspondence between the two measurements. The sensor and the simulation environment, with all their properties, are defined parametrically in XML format, which enables easy extension of the concept to other 3D scanner devices. The developed scanner interface, on the other hand, enables seamless integration with physical and virtual sensors. This gives client applications a single point of contact with both types of sensors, which is important for our future development. However, the developed model currently does not consider the reflectance of the scanned objects and environmental conditions (e.g. temperature and humidity, which influence the measurements). Our future research will therefore focus on this topic. Building a complete and flexible mobile robot simulator and modeling other types of proximity sensors are also foreseen.

FIG. 8 REAL SCANNER READINGS IN THE FORM OF POINT CLOUD

FIG. 9 SIMULATED SCANNER READINGS IN THE FORM OF POINT CLOUD

REFERENCES

Balaguer, Benjamin, and Stefano Carpin. "Where Am I? A Simulated GPS Sensor for Outdoor Robotic Applications." Simulation, Modeling, and Programming for Autonomous Robots (2008): 222-233.

Easy-Rob, 3D Robot Simulation Tool, website http://www.easy-rob.de/ (2012)

Früh, Christian, and Avideh Zakhor. "An automated method for large-scale, ground-based city model acquisition." International Journal of Computer Vision, 60:5-24, October 2004.

Gerkey, Brian P, Richard T Vaughan, and Andrew Howard. 2003. "The Player/Stage project: Tools for multi-robot and distributed sensor systems." In Advanced Robotics, ed. U Nunes, A T de Almeida, A K Bejczy, K Kosuge, and J A T Machado, 26:317-323.

Gonçalves, José, José Lima, Hélder Oliveira, and Paulo Costa. "Sensor and actuator modeling of a realistic wheeled mobile robot simulator." In Emerging Technologies and Factory Automation, 2008. ETFA 2008. IEEE International Conference on, pp. 980-985. IEEE, 2008.

Hähnel, Dirk, Wolfram Burgard, and Sebastian Thrun. "Learning compact 3D models of indoor and outdoor environments with a mobile robot." Robotics and Autonomous Systems 44, no. 1 (2003): 15-27.

Koenig, N, and A Howard. 2004. "Design and use paradigms for Gazebo, an open-source multi-robot simulator." 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) (IEEE Cat. No.04CH37566) 3: 2149-2154.

Kohlhepp, Peter, Marcus Walther, and Peter Steinhaus. "Schritthaltende 3D-Kartierung und Lokalisierung für mobile Inspektionsroboter." Proceedings of the Autonome Mobile Systeme 18 (2003).

Lv, Zhihua, and Zhiyi Zhang. "Build 3D laser scanner based on binocular stereo vision." In Intelligent Computation Technology and Automation (ICICTA), 2011 International Conf. on, vol. 1, pp. 600-603. IEEE, 2011.

Michel, Olivier. 2004. "Webots(TM): Professional Mobile Robot Simulation." International Journal of Advanced Robotic Systems 1, no. 1: 39-42.

Monajjemi, Valiallah, Ali Koochakzadeh, and Saeed Ghidary. "grSim - RoboCup Small Size Robot Soccer Simulator." RoboCup 2011: Robot Soccer World Cup XV (2012): 450-460.

Narvaez, Astrid, and Esmitt Ramirez. "A Simple 3D Scanner Based On Passive Vision for Geometry Reconstruction." Latin America Transactions, IEEE (Revista IEEE America Latina) 10, no. 5 (2012): 2125-2131.

Pereira, José LF, and Rosaldo JF Rossetti. "An integrated architecture for autonomous vehicles simulation." In Proceedings of the 27th Annual ACM Symposium on Applied Computing, pp. 286-292. ACM, 2012.

Pinciroli, Carlo, Vito Trianni, Rehan O'Grady, Giovanni Pini, Arne Brutschy, Manuele Brambilla, Nithin Mathews et al. "ARGoS: a modular, multi-engine simulator for heterogeneous swarm robotics." In Intelligent Robots and Systems (IROS), 2011 IEEE/RSJ International Conference on, pp. 5027-5034. IEEE, 2011.

RobotWorks - A Robotics Interface and Trajectory Generator for SolidWorks, website http://www.robotworks-eu.com/ (2008)

Schmits, Tijn, and Arnoud Visser. "An omnidirectional camera simulation for the USARSim World." RoboCup 2008: Robot Soccer World Cup XII (2009): 296-307.

SICK, http://www.sick.com (2012)

Smith, R.: Open Dynamics Engine ODE, http://www.ode.org (2012)

Thrun, Sebastian, Wolfram Burgard, and Dieter Fox. "A real-time algorithm for mobile robot mapping with applications to multi-robot and 3D mapping." In Robotics and Automation, 2000. Proceedings. ICRA'00. IEEE International Conference on, vol. 1, pp. 321-328. IEEE, 2000.

Wulf, Oliver, Kai O. Arras, Henrik I. Christensen, and Bernardo Wagner. "2D mapping of cluttered indoor environments by means of 3D perception." In Robotics and Automation, 2004. Proceedings. ICRA'04. 2004 IEEE International Conference on, vol. 4, pp. 4204-4209. IEEE, 2004.
