CH 8

Uploaded by Raghu Gunasekaran

Chapter 8: Adaptive Networks

Introduction (8.1)

Architecture (8.2)

Backpropagation for Feedforward Networks (8.3)

Introduction (8.1)

Almost all neural network paradigms with supervised learning capabilities are unified under the framework of adaptive networks.

Nodes are processing units. Causal relationships between connected nodes are expressed by links.

All or some of the nodes are adaptive, which means that the outputs of these nodes depend on modifiable parameters pertaining to these nodes.

Introduction (8.1) (cont.)

A learning rule specifies how these parameters (or weights) should be updated to minimize a predefined error measure.

The error measure computes the discrepancy between the network's actual output and a desired output.

The steepest descent method is used as the basic learning rule. Because the gradient it needs is obtained by propagating derivative information backward through the network, it is also called backpropagation.

Architecture (8.2)

Definition: An adaptive network is a network structure whose overall input-output behavior is determined by a collection of modifiable parameters.

The configuration of an adaptive network is composed of a set of nodes connected by directed links.

Each node performs a static node function on its incoming signals to generate a single node output.

Each link specifies the direction of signal flow from one node to another.

A feedforward adaptive network in layered representation

Example 1: Parameter sharing in an adaptive network

A single node

Parameter sharing problem

Architecture (8.2) (cont.)

There are two types of adaptive networks:

Feedforward (an acyclic directed graph, as in Fig. 8.1)

Recurrent (contains at least one directed cycle)

A recurrent adaptive network


Architecture (8.2) (cont.)

Static Mapping

A feedforward adaptive network is a static mapping between its input and output spaces.

This mapping may be linear or highly nonlinear.

Our goal is to construct a network that achieves a desired nonlinear mapping.

Architecture (8.2) (cont.)

Static Mapping (cont.)

This nonlinear mapping is regulated by a data set consisting of desired input-output pairs of a target system to be modeled. This data set is called the training data set.

The procedures that adjust the parameters to improve the network's performance are called learning rules.

Architecture (8.2) (cont.)

Static Mapping (cont.)

Example 2: An adaptive network with a single linear node

$$x_3 = f_3(x_1, x_2; a_1, a_2, a_3) = a_1 x_1 + a_2 x_2 + a_3$$

where $x_1$ and $x_2$ are inputs, and $a_1$, $a_2$, and $a_3$ are modifiable parameters.

A linear single-node adaptive network


Architecture (8.2) (cont.)

Static Mapping (cont.)

The parameters of this linear node can be identified with the linear least-squares estimation method of Chapter 5.
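As a rough illustration of least-squares identification for the single linear node, the sketch below solves the normal equations for $a_1$, $a_2$, $a_3$ in plain Python. The helper names (`solve_linear_system`, `fit_linear_node`) and the training data are hypothetical, and the normal-equation route is only one way to carry out the estimate; it is not necessarily the formulation used in Chapter 5.

```python
def solve_linear_system(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution.
    z = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * z[j] for j in range(i + 1, n))
        z[i] = (M[i][n] - s) / M[i][i]
    return z


def fit_linear_node(samples):
    """Least-squares estimate of (a1, a2, a3) from (x1, x2, desired x3) triples."""
    rows = [[x1, x2, 1.0] for x1, x2, _ in samples]  # trailing 1 carries a3
    targets = [d for _, _, d in samples]
    # Normal equations: (R^T R) a = R^T d
    RtR = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    Rtd = [sum(r[i] * d for r, d in zip(rows, targets)) for i in range(3)]
    return solve_linear_system(RtR, Rtd)


# Hypothetical noise-free training data generated with a1=2, a2=-1, a3=0.5.
points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0), (2.0, 3.0)]
samples = [(x1, x2, 2.0 * x1 - 1.0 * x2 + 0.5) for x1, x2 in points]
a1, a2, a3 = fit_linear_node(samples)
print(a1, a2, a3)
```

Because the node is linear in its parameters, no iterative learning rule is needed here; the estimate is obtained in one step.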

Example 3: Perceptron network

$$x_3 = f_3(x_1, x_2; a_1, a_2, a_3) = a_1 x_1 + a_2 x_2 + a_3$$

and

$$x_4 = f_4(x_3) = \begin{cases} 1 & \text{if } x_3 \ge 0 \\ 0 & \text{if } x_3 < 0 \end{cases}$$

($f_4$ is called a step function)

A nonlinear single-node adaptive network


Architecture (8.2) (cont.)

The step function is discontinuous at one point (the origin) and flat at all other points, so it is not suitable for derivative-based learning procedures. A sigmoidal function is used instead.

The sigmoidal function takes values between 0 and 1 and is expressed as:

$$x_4 = f_4(x_3) = \frac{1}{1 + e^{-x_3}}$$

The sigmoidal function is the building block of the multilayer perceptron.
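The difference between the two node functions can be checked numerically. The following minimal sketch (function names are illustrative, not from the slides) estimates the slope of the step function by central differences and compares it with the sigmoid's derivative, which has the known closed form $s(1 - s)$ for $s = f_4(x_3)$.

```python
import math


def step(x3):
    """Step function: 1 for x3 >= 0, otherwise 0."""
    return 1.0 if x3 >= 0 else 0.0


def sigmoid(x3):
    """Sigmoidal function 1 / (1 + exp(-x3))."""
    return 1.0 / (1.0 + math.exp(-x3))


def sigmoid_derivative(x3):
    """Closed-form derivative of the sigmoid: s * (1 - s)."""
    s = sigmoid(x3)
    return s * (1.0 - s)


def numeric_derivative(f, x, h=1e-6):
    """Central-difference estimate of df/dx at x."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


for x3 in (-2.0, 0.5, 3.0):
    # The step function has zero slope away from the origin,
    # so it gives a gradient-based learning rule nothing to work with.
    print(x3, numeric_derivative(step, x3), sigmoid_derivative(x3))
```

Away from the origin the step function's slope is exactly zero, while the sigmoid's slope is positive everywhere, which is what a steepest descent rule relies on.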

Architecture (8.2) (cont.)

Example 4: A multilayer perceptron

$$x_7 = \frac{1}{1 + \exp[-(w_{4,7} x_4 + w_{5,7} x_5 + w_{6,7} x_6 + t_7)]}$$

where $x_4$, $x_5$, and $x_6$ are the outputs of nodes 4, 5, and 6, respectively, and the set of parameters of node 7 is $\{w_{4,7}, w_{5,7}, w_{6,7}, t_7\}$.
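The node function of node 7 translates directly into code. This is a minimal sketch; the function name `node7_output` and all numeric values are made up for illustration only.

```python
import math


def node7_output(x4, x5, x6, w47, w57, w67, t7):
    """Output of node 7: a sigmoid of the weighted sum of x4, x5, x6 plus t7."""
    net = w47 * x4 + w57 * x5 + w67 * x6 + t7
    return 1.0 / (1.0 + math.exp(-net))


# Hypothetical outputs of nodes 4, 5, 6 and hypothetical parameters of node 7.
x7 = node7_output(0.2, 0.9, 0.5, w47=1.0, w57=-2.0, w67=0.5, t7=0.1)
print(x7)
```

The four arguments `w47`, `w57`, `w67`, and `t7` are exactly the modifiable parameters of node 7 that a learning rule would adjust.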

A 3-3-2 neural network

Backpropagation for Feedforward Networks (8.3)

Backpropagation is the basic learning rule for adaptive networks.

It is based on the steepest descent method discussed in Chapter 6.

It is a recursive computation of the gradient vector, in which each element is the derivative of an error measure with respect to a parameter.

The procedure of finding the gradient vector in a network structure is referred to as backpropagation because the gradient vector is calculated in the direction opposite to the flow of the output of each node.

Backpropagation for Feedforward Networks (8.3) (cont.)

Once the gradient is computed, regression techniques are used to update the parameters (the weights on the links).

Notation:

Assume the network has layers l = 0, 1, ..., L, where layer 0 is the input layer and layer L is the output layer

N(l) represents the number of nodes in layer l

$x_{l,i}$ represents the output of node i in layer l (i = 1, ..., N(l))

$f_{l,i}$ represents the function of node i in layer l

Backpropagation for Feedforward Networks (8.3) (cont.)

Principle

Since the output of a node depends on the incoming signals and the parameter set of the node, we can write:

$$x_{l,i} = f_{l,i}(x_{l-1,1}, x_{l-1,2}, \ldots, x_{l-1,N(l-1)}, \alpha, \beta, \gamma, \ldots)$$

where $\alpha$, $\beta$, $\gamma$, ... are the parameters of this node.

A layered representation


Backpropagation for Feedforward Networks (8.3) (cont.)

Principle (cont.)

Assume that the training set has P patterns. We define an error measure for the p-th pattern as:

$$E_p = \sum_{k=1}^{N(L)} (d_k - x_{L,k})^2$$

where $d_k$ is the k-th component of the p-th desired output vector and $x_{L,k}$ is the k-th component of the predicted output vector produced by presenting the p-th input vector to the network.

The task is to minimize an overall error measure defined as:

$$E = \sum_{p=1}^{P} E_p$$
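The two error measures can be written as a pair of short functions. This is only a sketch with made-up desired and predicted output vectors; the names `pattern_error` and `overall_error` are not from the slides.

```python
def pattern_error(desired, predicted):
    """Error for one pattern: sum of squared differences over the output nodes."""
    return sum((d - x) ** 2 for d, x in zip(desired, predicted))


def overall_error(desired_set, predicted_set):
    """Overall error: sum of the per-pattern errors over all P patterns."""
    return sum(pattern_error(d, x) for d, x in zip(desired_set, predicted_set))


# Hypothetical data: P = 2 patterns, N(L) = 2 output nodes.
desired = [[1.0, 0.0], [0.0, 1.0]]
predicted = [[0.5, 0.5], [0.0, 0.5]]
E = overall_error(desired, predicted)
print(E)
```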

Backpropagation for Feedforward Networks (8.3) (cont.)

Principle (cont.)

To use steepest descent to minimize E, we have to compute $\nabla E$ (the gradient vector).

Causal relationships:

Change in parameter $\alpha$ → Change in outputs of nodes containing $\alpha$ → Change in network's output → Change in error measure

Backpropagation for Feedforward Networks (8.3) (cont.)

Principle (cont.)

Therefore, the basic concept in calculating the gradient vector is to pass a form of derivative information starting from the output layer and going backward, layer by layer, until the input layer is reached.

Let us define

$$\epsilon_{l,i} = \frac{\partial^+ E_p}{\partial x_{l,i}}$$

as the error signal on node i in layer l (an ordered derivative).

Backpropagation for Feedforward Networks (8.3) (cont.)

Principle (cont.)

Example: Ordered derivatives and ordinary partial derivatives

Consider the following adaptive network:

$$y = f(x)$$

$$z = g(x, y)$$

Ordered derivatives & ordinary partial derivatives

Backpropagation for Feedforward Networks (8.3) (cont.)

For the ordinary partial derivative, x and y are assumed independent, without regard to the fact that y = f(x):

$$\frac{\partial z}{\partial x} = \frac{\partial g(x, y)}{\partial x}$$

For the ordered derivative, we take the indirect causal relationship into account:

$$\frac{\partial^+ z}{\partial x} = \frac{\partial g(x, f(x))}{\partial x} = \left. \frac{\partial g(x, y)}{\partial x} \right|_{y=f(x)} + \left. \frac{\partial g(x, y)}{\partial y} \right|_{y=f(x)} \cdot \frac{\partial f}{\partial x}$$

The gradient vector is defined as the derivative of the error measure with respect to the parameter variables. If $\alpha$ is a parameter of the i-th node at layer l, we can write:

$$\frac{\partial^+ E_p}{\partial \alpha} = \frac{\partial^+ E_p}{\partial x_{l,i}} \cdot \frac{\partial f_{l,i}}{\partial \alpha} = \epsilon_{l,i} \frac{\partial f_{l,i}}{\partial \alpha}$$

where $\epsilon_{l,i}$ is computed through the indirect causal relationship.
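To make the distinction between the ordinary partial derivative and the ordered derivative concrete, the sketch below picks two hypothetical functions, $f(x) = x^2$ and $g(x, y) = xy$ (an arbitrary choice for illustration), and evaluates both derivatives at $x = 2$. The ordinary partial derivative freezes y, while the ordered derivative lets y follow x through f.

```python
def f(x):
    """Hypothetical inner node function: y = x^2."""
    return x ** 2


def g(x, y):
    """Hypothetical outer node function: z = x * y."""
    return x * y


def ordinary_partial(x, h=1e-6):
    """dz/dx with y held fixed at f(x), ignoring that y depends on x."""
    y = f(x)
    return (g(x + h, y) - g(x - h, y)) / (2.0 * h)


def ordered_derivative(x, h=1e-6):
    """d+z/dx, where y is recomputed from x so the indirect path counts."""
    return (g(x + h, f(x + h)) - g(x - h, f(x - h))) / (2.0 * h)


def ordered_by_chain_rule(x):
    """Same ordered derivative via the direct term plus the indirect term."""
    y = f(x)
    dg_dx = y          # partial of x*y with respect to x
    dg_dy = x          # partial of x*y with respect to y
    df_dx = 2.0 * x    # derivative of x^2
    return dg_dx + dg_dy * df_dx


x = 2.0
print(ordinary_partial(x), ordered_derivative(x), ordered_by_chain_rule(x))
```

For these functions the ordinary partial derivative is $x^2$ and the ordered derivative is $3x^2$; the difference $2x^2$ is the contribution of the indirect path through y.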

Backpropagation for Feedforward Networks (8.3) (cont.)

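The derivative of the overall error measure E with respect to $\alpha$ is:

$$\frac{\partial^+ E}{\partial \alpha} = \sum_{p=1}^{P} \frac{\partial^+ E_p}{\partial \alpha}$$

Using a steepest descent scheme, the update for $\alpha$ is:

$$\alpha_{\text{next}} = \alpha_{\text{now}} - \eta \frac{\partial^+ E}{\partial \alpha}$$

where $\eta$ is the learning rate.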

Backpropagation for Feedforward Networks (8.3) (cont.)

Example 8.6: Adaptive network and its error-propagation model

In order to calculate the error signals at internal nodes, an error-propagation network is built.

Error propagation model


Backpropagation for Feedforward Networks (8.3) (cont.)

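$$\epsilon_9 = \frac{\partial^+ E_p}{\partial x_9} = \frac{\partial E_p}{\partial x_9} = -2(d_9 - x_9)$$

$$\epsilon_8 = \frac{\partial^+ E_p}{\partial x_8} = \frac{\partial E_p}{\partial x_8} = -2(d_8 - x_8)$$

$$\epsilon_7 = \frac{\partial^+ E_p}{\partial x_7} = \frac{\partial^+ E_p}{\partial x_8} \cdot \frac{\partial f_8}{\partial x_7} + \frac{\partial^+ E_p}{\partial x_9} \cdot \frac{\partial f_9}{\partial x_7} = \epsilon_8 \frac{\partial f_8}{\partial x_7} + \epsilon_9 \frac{\partial f_9}{\partial x_7}$$

where the derivatives $\partial f_8 / \partial x_7$ and $\partial f_9 / \partial x_7$ are denoted $w_{8,7}$ and $w_{9,7}$, respectively.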
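The error signals can be checked on a toy network. In the sketch below only the topology (node 7 feeding the output nodes 8 and 9) follows the example; the node functions `f7`, `f8`, `f9`, their parameters `a`, `b`, `c`, and all numeric values are assumptions chosen for illustration. The gradient obtained from the error signals is compared against a finite-difference estimate.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


# Hypothetical node functions (not taken from Example 8.6).
def f7(x6, a):
    return sigmoid(a * x6)


def f8(x7, b):
    return b * x7


def f9(x7, c):
    return sigmoid(c * x7)


def pattern_error(x6, a, b, c, d8, d9):
    """E_p = (d8 - x8)^2 + (d9 - x9)^2 after a forward pass."""
    x7 = f7(x6, a)
    return (d8 - f8(x7, b)) ** 2 + (d9 - f9(x7, c)) ** 2


def backprop_gradient_a(x6, a, b, c, d8, d9):
    """Ordered derivative of E_p with respect to a, via error signals."""
    # Forward pass.
    x7 = f7(x6, a)
    x8 = f8(x7, b)
    x9 = f9(x7, c)
    # Error signals at the output nodes.
    eps8 = -2.0 * (d8 - x8)
    eps9 = -2.0 * (d9 - x9)
    # Error signal at node 7: weighted sum of the downstream error signals.
    df8_dx7 = b
    df9_dx7 = c * x9 * (1.0 - x9)
    eps7 = eps8 * df8_dx7 + eps9 * df9_dx7
    # Gradient for parameter a of node 7: error signal times df7/da.
    df7_da = x6 * x7 * (1.0 - x7)
    return eps7 * df7_da


# Hypothetical input, parameters, and desired outputs.
args = dict(x6=0.8, b=1.5, c=-0.7, d8=1.0, d9=0.0)
a = 0.5
h = 1e-6
numeric = (pattern_error(a=a + h, **args) - pattern_error(a=a - h, **args)) / (2.0 * h)
analytic = backprop_gradient_a(a=a, **args)
print(analytic, numeric)
```

Agreement between the two numbers is a common sanity check for a hand-derived backward pass.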

Backpropagation for Feedforward Networks (8.3) (cont.)

There are two types of learning algorithms:

Batch learning (off-line learning): the update for $\alpha$ takes place after the whole training data set has been presented (one epoch)

On-line learning (pattern-by-pattern learning): the parameters $\alpha$ are updated immediately after each input-output pair has been presented
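The two learning modes differ only in where the parameter update sits relative to the loop over patterns. The sketch below applies both to a single linear node $x_3 = a_1 x_1 + a_2 x_2 + a_3$ with the squared error $(d - x_3)^2$ per pattern. The training data, the learning rate `eta`, and the number of epochs are hypothetical values chosen so that plain steepest descent converges on this small problem.

```python
def node_output(params, x1, x2):
    a1, a2, a3 = params
    return a1 * x1 + a2 * x2 + a3


def pattern_gradient(params, x1, x2, d):
    """Gradient of (d - x3)^2 with respect to (a1, a2, a3) for one pattern."""
    err = d - node_output(params, x1, x2)
    return [-2.0 * err * x1, -2.0 * err * x2, -2.0 * err]


def train_batch(data, eta=0.05, epochs=500):
    """Batch learning: one update per epoch, using the summed gradient."""
    params = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        total = [0.0, 0.0, 0.0]
        for x1, x2, d in data:
            grad = pattern_gradient(params, x1, x2, d)
            total = [t + g for t, g in zip(total, grad)]
        params = [p - eta * t for p, t in zip(params, total)]
    return params


def train_online(data, eta=0.05, epochs=500):
    """On-line learning: one update immediately after each pattern."""
    params = [0.0, 0.0, 0.0]
    for _ in range(epochs):
        for x1, x2, d in data:
            grad = pattern_gradient(params, x1, x2, d)
            params = [p - eta * g for p, g in zip(params, grad)]
    return params


# Hypothetical noise-free training set generated with a1=2, a2=-1, a3=0.5.
data = [(x1, x2, 2.0 * x1 - 1.0 * x2 + 0.5)
        for x1, x2 in [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]]
batch_params = train_batch(data)
online_params = train_online(data)
print(batch_params, online_params)
```

On this noise-free data both modes approach the same parameters; with noisy data or a larger learning rate their trajectories can differ noticeably.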

Das könnte Ihnen auch gefallen

- Gmail - Pega RPA - Open Span Q&ADokument15 SeitenGmail - Pega RPA - Open Span Q&ARaghu GunasekaranNoch keine Bewertungen

- InvoiceDokument2 SeitenInvoiceRaghu GunasekaranNoch keine Bewertungen

- Opening LatestDokument16 SeitenOpening LatestRaghu GunasekaranNoch keine Bewertungen

- Duties & Responsibilities On Freshers Drive - 09.08.2014Dokument2 SeitenDuties & Responsibilities On Freshers Drive - 09.08.2014Raghu GunasekaranNoch keine Bewertungen

- Opening LatestDokument16 SeitenOpening LatestRaghu GunasekaranNoch keine Bewertungen

- Vaikuntam Final LQDokument12 SeitenVaikuntam Final LQRaghu GunasekaranNoch keine Bewertungen

- Is 2marksDokument50 SeitenIs 2marksRaghu GunasekaranNoch keine Bewertungen

- Corba DcomDokument6 SeitenCorba DcomRaghu GunasekaranNoch keine Bewertungen

- Webtech Lab ManualDokument86 SeitenWebtech Lab ManualAbinaya JananiNoch keine Bewertungen

- Simulation of Data Transfer Using TCP Without Loss: ServerDokument4 SeitenSimulation of Data Transfer Using TCP Without Loss: ServerRaghu GunasekaranNoch keine Bewertungen

- Webtech Lab ManualDokument86 SeitenWebtech Lab ManualAbinaya JananiNoch keine Bewertungen

- Simulation of Data Transfer Using TCP With LossDokument5 SeitenSimulation of Data Transfer Using TCP With LossRaghu GunasekaranNoch keine Bewertungen

- Avl TreeDokument11 SeitenAvl TreeRaghu GunasekaranNoch keine Bewertungen

- Energy Conservation &management in Lightining SystemDokument5 SeitenEnergy Conservation &management in Lightining SystemRaghu GunasekaranNoch keine Bewertungen

- Summer Fello Prog 2011 AppadvDokument3 SeitenSummer Fello Prog 2011 AppadvJoe147Noch keine Bewertungen

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (895)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5794)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (399)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (266)

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (588)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2259)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (73)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (344)

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (121)

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

- Transportation and Assignment ProblemDokument14 SeitenTransportation and Assignment ProblemMuhammad RamzanNoch keine Bewertungen

- 2009 Laporte - The VRPDokument10 Seiten2009 Laporte - The VRPViqri AndriansyahNoch keine Bewertungen

- TSPDokument21 SeitenTSPSuvankar KhanNoch keine Bewertungen

- GTR2019 7Dokument28 SeitenGTR2019 7Bhat SaqibNoch keine Bewertungen

- LectureDokument35 SeitenLectureSuvin NambiarNoch keine Bewertungen

- Assignment No 1Dokument5 SeitenAssignment No 1JoshuaNoch keine Bewertungen

- Physics & EconomicsDokument8 SeitenPhysics & EconomicsKousik GuhathakurtaNoch keine Bewertungen

- Fusion 360 Simulation Extension Comparison Matrix ENDokument1 SeiteFusion 360 Simulation Extension Comparison Matrix ENdelcamdmiNoch keine Bewertungen

- Linear Programming NotesDokument122 SeitenLinear Programming NoteslauraNoch keine Bewertungen

- Aliaksei Maistrou - Finite Element Method DemystifiedDokument26 SeitenAliaksei Maistrou - Finite Element Method DemystifiedsuyogbhaveNoch keine Bewertungen

- MLearning Using C#Dokument148 SeitenMLearning Using C#Ricardo RodriguezNoch keine Bewertungen

- Genmath Quiz 1Dokument1 SeiteGenmath Quiz 1Genelle Mae MadrigalNoch keine Bewertungen

- Calculating Ultra-Strong and Extended Solutions For Nine Men's Morris, Morabaraba, and Lasker MorrisDokument12 SeitenCalculating Ultra-Strong and Extended Solutions For Nine Men's Morris, Morabaraba, and Lasker MorrisMayank JoshiNoch keine Bewertungen

- 1 s2.0 S0895981120306520 MainDokument12 Seiten1 s2.0 S0895981120306520 MainCarla Dospesos SalinasNoch keine Bewertungen

- Phase Stability Analysis of Liquid Liquid EquilibriumDokument15 SeitenPhase Stability Analysis of Liquid Liquid EquilibriumJosemarPereiradaSilvaNoch keine Bewertungen

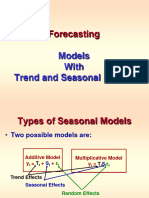

- Forecasting-Seasonal ModelsDokument34 SeitenForecasting-Seasonal Modelssuriani hamsahNoch keine Bewertungen

- 2018 Module 4 CS PDFDokument108 Seiten2018 Module 4 CS PDFPrasanth prasanthNoch keine Bewertungen

- AT2: Graphing The Hospital Floor Problem: Two Floor Plans of A Hospital Floor Are Shown. Draw A Graph That Represents The Floor PlanDokument4 SeitenAT2: Graphing The Hospital Floor Problem: Two Floor Plans of A Hospital Floor Are Shown. Draw A Graph That Represents The Floor PlanKimberly IgbalicNoch keine Bewertungen

- Signals and Systems Using Matlab Chapter 8 - Sampling TheoryDokument14 SeitenSignals and Systems Using Matlab Chapter 8 - Sampling TheoryDiluNoch keine Bewertungen

- Statistics For People Who Think They Hate Statistics 6th Edition Salkind Test Bank DownloadDokument19 SeitenStatistics For People Who Think They Hate Statistics 6th Edition Salkind Test Bank DownloadBarbara Hammett100% (27)

- Sampling Distribution Exam HelpDokument9 SeitenSampling Distribution Exam HelpStatistics Exam HelpNoch keine Bewertungen

- Gradient Descent TutorialDokument3 SeitenGradient Descent TutorialAntonioNoch keine Bewertungen

- Ahmed Hassan Ismail - BESE-9B - 237897 - NS Lab 5Dokument15 SeitenAhmed Hassan Ismail - BESE-9B - 237897 - NS Lab 5Hasan AhmedNoch keine Bewertungen

- Title: Business Intelligence Mini Project Problem DefinitionDokument10 SeitenTitle: Business Intelligence Mini Project Problem DefinitionRecaroNoch keine Bewertungen

- An Improved Image Steganography Method Based On LSB Technique With RandomDokument6 SeitenAn Improved Image Steganography Method Based On LSB Technique With RandomsusuelaNoch keine Bewertungen

- Multi-Head Self-Attention Transformer For Dogecoin Price PredictionDokument6 SeitenMulti-Head Self-Attention Transformer For Dogecoin Price PredictionThu GiangNoch keine Bewertungen

- DAA Lab ManualDokument49 SeitenDAA Lab ManualsammmNoch keine Bewertungen

- CSE101Lec 14 - 15 - 16 - 20201014 083027311Dokument66 SeitenCSE101Lec 14 - 15 - 16 - 20201014 083027311Sidharth SinghNoch keine Bewertungen

- Polynomials PPT Lesson 1Dokument26 SeitenPolynomials PPT Lesson 1Jesryl Talundas100% (1)

- Security in Communications and StorageDokument27 SeitenSecurity in Communications and StorageAfonso DelgadoNoch keine Bewertungen