TSINGHUA SCIENCE AND TECHNOLOGY ISSN 1007-0214 15/17 pp95-99 Volume 16, Number 1, February 2011

English Speech Recognition System on Chip*

LIU Hong, QIAN Yanmin, LIU Jia**

Tsinghua National Laboratory for Information Science and Technology, Department of Electronic Engineering, Tsinghua University, Beijing 100084, China

Abstract: An English speech recognition system was implemented on a chip, called a speech system-on-chip (SoC). The SoC included an application specific integrated circuit (ASIC) with a vector accelerator to improve performance. A sub-word recognition algorithm based on continuous density hidden Markov models ran on this low-cost speech chip. The algorithm augmented a two-stage, fixed-width beam-search baseline system with a variable beam-width pruning strategy and a frame-synchronous word-level pruning strategy, which significantly reduce the recognition time. Tests show that this method reduces the recognition time nearly sixfold and the memory size nearly twofold compared with the original system, with less than 1% accuracy degradation on a 600-word recognition task and a recognition accuracy of about 98%.

Key words: speaker-independent speech recognition; system-on-chip; mel-frequency cepstral coefficients (MFCC)

Received: 2009-12-15; revised: 2010-06-10

* Supported by the National Natural Science Foundation of China and Microsoft Research Asia (No. 60776800), the National Natural Science Foundation of China and Research Grants Council (No. 60931160443), and the National High-Tech Research and Development (863) Program of China (Nos. 2006AA010101, 2007AA04Z223, 2008AA02Z414, and 2008AA040201)

** To whom correspondence should be addressed.
E-mail: liuj@tsinghua.edu.cn; Tel: 86-10-62781847

Introduction

Embedded speech recognition systems[1] are becoming more important with the rapid development of handheld portable devices. However, only a few products are available yet because of high chip costs. This paper describes an inexpensive English speech recognition system based on a single chip. The chip includes a 16-bit microcontroller with a 16-bit coprocessor, 32 KB of RAM, and 16-bit A/D and D/A converters. The recognition models are sub-word continuous density hidden Markov models (CHMM)[2,3] with mel-frequency cepstral coefficient (MFCC)[4] features. The recognition engine uses two-pass beam search algorithms[5], which greatly improve implementation efficiency and lower the system cost. The system can be used in command-and-control devices such as consumer electronics, handheld devices, and household appliances[6], so it has many applications. The recognition accuracy of the speech recognition SoC is more than 97%.

The hardware architecture of the speech recognition SoC is designed for practical applications. All of the system hardware is integrated into a single chip, which gives the best combination of performance, size, power consumption, cost, and reliability[7]. The SoC is composed of a general purpose microcontroller, a vector accelerator, a 16-bit ADC/DAC, an analog filter circuit, audio input and output amplifiers, and a communication interface. In addition, the chip includes a power management module and a clock. Because of the vector accelerator, the computing power of this application specific integrated circuit (ASIC) is much greater than that of a conventional microcontroller unit (MCU). Unlike a DSP, this ASIC integrates the ADC, DAC, audio amplifier, and power management modules, and it omits unnecessary circuitry to reduce cost. Figure 1 shows a photograph of the unpackaged chip core. The block diagram is shown in Fig. 2, and the details of each block are described below.

Fig. 1 Photograph of the chip

Fig. 2 SoC block diagram

Chip Software Design

The speech recognition process is shown in Fig. 3. The speech signal from the microphone is pre-amplified, low-pass filtered, and then sampled by the ADC at 8 kHz. The signal is then segmented into frames, which form a continuous speech frame sequence. This sequence is sent to the endpoint detection and feature extraction unit. After two-stage matching[8], the system outputs the recognition result, which can be sent to other circuits or to the LCD display.

1.1 Software level division

Hierarchical design[9] is widely used in complex systems, such as operating systems and network architectures. Lower layers provide basic services and low-level management. Each layer is encapsulated: an upper layer does not need to know how a lower layer is implemented, and a lower layer does not need to know what its services are used for. Layering groups logically related modules together, which improves the system's applicability and flexibility and enhances its reliability, robustness, and maintainability. The system software is divided into the driver layer, the universal module layer, the function module layer, and the scheduling layer, as shown in Fig. 4. The driver layer, which isolates the software from the hardware, includes all of the interrupt service routines and peripheral drivers. The universal module layer includes a variety of basic modules that provide basic computing and operating services. The function module layer contains the functional modules that implement the core algorithms. The scheduling layer, which is the top layer, runs the main loop (super-loop) of the system and maintains its global data; its core function is task scheduling.

Fig. 3 Speech recognition system

Fig. 4 Software division
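To make the layering concrete, the following is a minimal Python sketch of the layer boundaries and of a super-loop scheduler of the kind the top layer runs. It is an illustration under stated assumptions, not the chip's firmware: the class and method names (`DriverLayer`, `ServiceLayer`, `Scheduler`, `frame_ready`, `recognize_step`) are hypothetical, the "ADC" is just a list of samples, and on the real chip these would be C routines and interrupt handlers.

```python
from collections import deque


class DriverLayer:
    """Hypothetical driver layer: stands in for the ADC interrupt service routine."""

    def __init__(self, samples):
        self._pending = deque(samples)  # samples already delivered by the "ADC"

    def available(self):
        return len(self._pending)

    def read(self, n):
        """Pop up to n samples from the buffer."""
        return [self._pending.popleft() for _ in range(min(n, len(self._pending)))]


class ServiceLayer:
    """Hypothetical service layer: basic computing services built on the driver."""

    def __init__(self, driver):
        self._driver = driver

    def frame_ready(self, size):
        return self._driver.available() >= size

    def next_frame(self, size):
        """Return a full frame, or None (an incomplete tail is dropped)."""
        frame = self._driver.read(size)
        return frame if len(frame) == size else None

    @staticmethod
    def energy(frame):
        return sum(s * s for s in frame)


class Scheduler:
    """Top layer: a super-loop that polls tasks in priority order."""

    def __init__(self):
        self._tasks = []  # (priority, name, ready, run); lower number = higher priority

    def register(self, priority, name, ready, run):
        self._tasks.append((priority, name, ready, run))
        self._tasks.sort(key=lambda t: t[0])

    def run(self, max_iterations):
        executed = []
        for _ in range(max_iterations):
            # Pick the highest-priority task whose ready() check passes.
            task = next((t for t in self._tasks if t[2]()), None)
            if task is None:
                break  # nothing ready: a real chip could enter a low-power state here
            task[3]()
            executed.append(task[1])
        return executed


# Application layer: a toy task that touches only the service-layer API.
driver = DriverLayer([0, 0, 3, 4, 0, 0, 5, 5])
service = ServiceLayer(driver)
loud_frames = []


def recognize_step():
    frame = service.next_frame(2)
    if frame is not None and service.energy(frame) > 10:
        loud_frames.append(frame)


scheduler = Scheduler()
scheduler.register(1, "recognize", lambda: service.frame_ready(2), recognize_step)
executed = scheduler.run(max_iterations=10)
print(executed)     # ['recognize', 'recognize', 'recognize', 'recognize']
print(loud_frames)  # [[3, 4], [5, 5]]
```

The application task never touches the driver's buffer directly, so replacing the ADC driver would leave `recognize_step` unchanged; that isolation is the benefit the layered design aims for.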

1.1.1 Driver layer

The driver layer program operates the hardware directly. Its program modules generally correspond to actual hardware modules, such as the memory, peripheral interfaces, and communication interfaces. These functions connect the hardware modules to the application program interface (API) used by the upper-level programs.

1.1.2 Service layer

The driver layer provides basic hardware support but no extended or enhanced functionality. The service layer builds on it to offer a more powerful interface to the application level, making better use of the hardware features and further improving system performance.

1.1.3 Scheduling layer

Different inputs select different sub-tasks, such as speech recognition, speech coding, and speech decoding, and each sub-task must respond to its asynchronous events within a different response time. The scheduling layer schedules these tasks. The whole system is designed for good real-time performance: the scheduling layer links the system procedures seamlessly, so applications run to completion without having to manage their own execution order. The scheduler also facilitates control of the DSP power consumption.

1.1.4 Application layer

Application-level programs use the driver, service, and scheduling procedures through the API functions, so that the developer can focus on the task itself, for example, the English command-word recognition engine. Each application therefore depends on the application-layer procedures: most, if not all, changes required by a particular application are made only in the application-layer program, while changes to the driver, service, and scheduling layers are relatively small. The driver, service, and scheduling layer programs serve as the system kernel, while the application-layer program serves as the user program.

1.2 Two-pass beam search algorithm

The sub-word model in this system is based on the continuous density hidden Markov model (CHMM), with the output probability distribution of each state described by a Gaussian mixture model (GMM). According to how much phonetic context they model, sub-word units are categorized as monophones, biphones, and triphones[9]. More complex models achieve higher recognition rates but take much longer to evaluate, so they are not practical here even though their recognition rates can reach nearly 100%. Conversely, faster systems using very simple models do not give satisfactory results. Therefore, a two-pass search strategy was adopted to balance accuracy and speed, as shown in Fig. 5. In the first stage, the search uses approximate models, namely monophone models with one Gaussian mixture component[9]. This fast match generates an n-best list of hypotheses for the second search. The second stage performs a detailed match among the n-best hypotheses using triphone models with three Gaussian mixture components. To reduce computation, the covariance matrices of the Gaussian mixture models are diagonal in both the fast-match and detailed-match stages. The output probability scores of all states are calculated, and the hypotheses are then matched one by one using the Viterbi algorithm[10].

Fig. 5 Two-stage search structure

1.3 Front end feature extraction

Robust features are essential for embedded speech recognition systems. Mel-frequency cepstral coefficients have been shown to be more robust to background noise than other feature parameters. Because the dynamic range of MFCC features is limited, they can be computed with fixed-point algorithms and are therefore well suited to embedded systems. The MFCC parameters chosen here offer the best trade-off between performance and computational requirements. In general, a higher feature dimension provides more information but increases the computational burden of the feature extraction and recognition stages[11,12]. The final recognition results for different features are shown in Fig. 6. With four Gaussian mixture components, the first stage needed twelve candidates to reach a 99% recognition rate. With 34-dimensional features the highest recognition rate was 99.22%, and with 22-dimensional features the recognition rate was 99.01%, which satisfies the system requirements. Therefore, a 22-dimensional feature vector was selected from the 12-dimensional MFCC, the 12-dimensional first-order difference MFCC, the 12-dimensional second-order difference MFCC, and the normalized energy with its first- and second-order differences.
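The front-end steps described in this paper (framing the 8 kHz signal, endpoint detection, and difference features) can be sketched in Python as follows. This is a minimal sketch under assumptions not given in the text: the 256-sample frame, 128-sample shift, and simple log-energy threshold are illustrative choices; the MFCC computation itself (FFT, mel filterbank, DCT) is omitted; and the delta formula is the common regression form, which may differ from the chip's. The paper does not say which 22 of the 39 candidate dimensions were kept, so no selection is attempted.

```python
import math

SAMPLE_RATE_HZ = 8000  # 8 kHz sampling, as stated in the text
FRAME_LEN = 256        # assumption: 32 ms frames at 8 kHz
FRAME_SHIFT = 128      # assumption: 16 ms frame shift (50% overlap)


def frame_signal(samples, frame_len=FRAME_LEN, shift=FRAME_SHIFT):
    """Split samples into overlapping frames; an incomplete tail is dropped."""
    return [samples[i:i + frame_len]
            for i in range(0, len(samples) - frame_len + 1, shift)]


def log_energy(frame):
    """Log of the frame energy; the small floor avoids log(0) on silent frames."""
    return math.log(sum(s * s for s in frame) + 1e-10)


def detect_endpoints(frames, threshold):
    """Simple energy-threshold endpoint detection.

    Returns (first, last) indices of frames whose log energy exceeds the
    threshold, or None if no frame does.
    """
    active = [i for i, f in enumerate(frames) if log_energy(f) > threshold]
    return (active[0], active[-1]) if active else None


def deltas(features, n=2):
    """Regression (delta) coefficients over +/- n frames, clamping at the edges.

    d_t = sum_{k=1..n} k * (c_{t+k} - c_{t-k}) / (2 * sum_{k=1..n} k^2)
    Applying this to its own output gives second-order differences.
    """
    T = len(features)
    denom = 2 * sum(k * k for k in range(1, n + 1))
    out = []
    for t in range(T):
        d = [0.0] * len(features[t])
        for k in range(1, n + 1):
            nxt = features[min(t + k, T - 1)]
            prv = features[max(t - k, 0)]
            for j in range(len(d)):
                d[j] += k * (nxt[j] - prv[j])
        out.append([v / denom for v in d])
    return out


# Usage: silence, a loud burst, silence -> 5 frames with speech in frames 1..3.
frames = frame_signal([0] * 256 + [1000] * 256 + [0] * 256)
print(len(frames))                              # 5
print(detect_endpoints(frames, threshold=10))   # (1, 3)
print(deltas([[0.0], [1.0], [2.0], [3.0], [4.0]])[2])  # [1.0]
```

On a linear ramp the interior delta equals the slope (1.0), which is a quick sanity check of the regression formula.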

Fig. 6 Different model recognition rates

Evaluation Results

The tests used 40 volunteer speakers (20 male, 20 female) and a test vocabulary[13,14] composed of names, places, and stock names. The vocabulary had a total of 600 phrases, each consisting of 2 to 4 English words, and each phrase was spoken once by each speaker. The recognition accuracy was tested by feeding speech sampled at 8 kHz to the system through the USB interface, so the test conditions were almost the same as real operating conditions and the statistical recognition accuracy could be properly assessed. The first recognition stage used a relatively simple acoustic model to produce a multi-candidate result with a high recognition rate. Figure 7 shows the recognition rates of the multi-candidate results for the 600-phrase vocabulary. With one candidate, the first-stage model obtains only a 93.7% recognition rate, but with six candidates the recognition rate reaches 98%. Beyond that, the recognition rate rises much more slowly as the number of candidates increases. In most cases, the correct result was among the top four candidates. Therefore, the top twelve first-stage candidates were passed to the second stage for matching with a more complex acoustic model, and the recognition rate then reached 99% in the second stage.

Fig. 7 Recognition rates for different candidates

The final system recognition rates for different vocabulary sizes are shown in Table 1. All evaluations were performed in an office environment with a moderate noise level. Although the recognition rate decreased as the vocabulary size increased, the recognition accuracy was still 98% with 600 phrases. The recognition time for the 600-phrase vocabulary in a real environment was approximately 0.7 RTF (real-time factor, the ratio of processing time to speech duration). Thus, the system can effectively handle a 600-phrase vocabulary.

Table 1 Speech recognition system performance (test speech: 20 males / 20 females)

Vocabulary size           Recognition accuracy (%)
150-phrase vocabulary     99.2
300-phrase vocabulary     98.8
600-phrase vocabulary     98.1
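The two-pass search evaluated above (a fast first pass that keeps an n-best list, here twelve candidates, followed by detailed rescoring) can be illustrated with the minimal Python sketch below. It scores diagonal-covariance GMM states, runs a frame-synchronous Viterbi search with beam pruning, and skips any phrase whose states have all fallen outside the beam (a simple form of word-level pruning). Assumptions, all loudly not from the paper: phrase HMMs are given directly as lists of state GMMs (the chip builds them from monophone and triphone units, which is not reproduced here), transition probabilities are ignored, the beam width is fixed rather than variable, and every function name and model parameter is hypothetical.

```python
import math

NEG_INF = float("-inf")


def log_gauss_diag(x, mean, var):
    """Log density of a Gaussian with diagonal covariance (variances in var)."""
    return -0.5 * sum(math.log(2 * math.pi * v) + (xi - m) ** 2 / v
                      for xi, m, v in zip(x, mean, var))


def log_gmm(x, mixture):
    """Log likelihood of x under a GMM given as [(weight, mean, var), ...]."""
    logs = [math.log(w) + log_gauss_diag(x, m, v) for w, m, v in mixture]
    top = max(logs)
    return top + math.log(sum(math.exp(l - top) for l in logs))  # log-sum-exp


def viterbi_search(frames, models, beam):
    """Frame-synchronous Viterbi over left-to-right phrase HMMs with beam pruning.

    models maps a phrase to its list of state GMMs; each state may loop or
    advance by one. Returns {phrase: log score of ending in its last state}.
    """
    if not models:
        return {}
    scores = {p: [NEG_INF] * len(states) for p, states in models.items()}
    for p, states in models.items():
        scores[p][0] = log_gmm(frames[0], states[0])
    for x in frames[1:]:
        new = {}
        for p, states in models.items():
            prev = scores[p]
            if max(prev) == NEG_INF:
                new[p] = prev  # word-level pruning: skip phrases with no live state
                continue
            cur = [NEG_INF] * len(states)
            for s, gmm in enumerate(states):
                best_prev = max(prev[s], prev[s - 1] if s > 0 else NEG_INF)
                if best_prev > NEG_INF:
                    cur[s] = best_prev + log_gmm(x, gmm)
            new[p] = cur
        best = max(max(v) for v in new.values())
        for p in new:  # beam pruning relative to the best state at this frame
            new[p] = [v if v >= best - beam else NEG_INF for v in new[p]]
        scores = new
    return {p: s[-1] for p, s in scores.items() if s[-1] > NEG_INF}


def two_pass(frames, fast_models, detailed_models, n_best, beam):
    """Fast match with cheap models, then rescore the n-best with detailed models."""
    first = viterbi_search(frames, fast_models, beam)
    shortlist = sorted(first, key=first.get, reverse=True)[:n_best]
    second = viterbi_search(frames, {p: detailed_models[p] for p in shortlist}, beam)
    return max(second, key=second.get) if second else None


def one_gauss(mu):
    """Toy single-Gaussian state (fast-match model)."""
    return [(1.0, [mu], [1.0])]


def three_gauss(mu):
    """Toy three-component state (detailed-match model)."""
    return [(1 / 3, [mu + off], [1.0]) for off in (-0.5, 0.0, 0.5)]


fast = {"yes": [one_gauss(0), one_gauss(5)], "no": [one_gauss(10), one_gauss(20)]}
detailed = {"yes": [three_gauss(0), three_gauss(5)], "no": [three_gauss(10), three_gauss(20)]}
frames = [[0.0], [0.0], [5.0], [5.0]]
print(two_pass(frames, fast, detailed, n_best=12, beam=50.0))  # yes
```

With `beam=50.0`, the toy phrase "no" falls outside the beam at the second frame and is skipped for the rest of the utterance; with a very wide beam it survives the first pass. This is the kind of computation that the pruning strategies in the abstract are meant to save.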

The main advantages of this medium-vocabulary recognition SoC are its low clock frequency (48 MHz) and small system resource requirement (48 KB). For the same performance, the IBM StrongARM system needs a 200 MHz clock and 2.2 MB of memory, while the Siemens ARM920T system needs a 100 MHz clock and 402 KB of memory.

Conclusions and Future Work

This paper describes an English speech recognition system implemented on an SoC platform. The system uses an ASIC with a vector accelerator, together with speech recognition software developed for the ASIC architecture. Tests show that the system attains high recognition accuracy (more than 98%) with a short response time (0.7 RTF). The ASIC design provides a flexible, fast speech recognition solution for embedded applications that needs only 48 KB of RAM. Future work will improve the algorithm, including its memory management and scheduling, to reduce the recognition time and increase robustness in noisy environments. The system can be used for on-chip recognition of either Chinese or English.

References

[1] Guo Bing, Shen Yan. SoC Technology and Its Application. Beijing: Tsinghua University Press, 2006. (in Chinese)
[2] Levy C, Linares G, Nocera P, et al. Reducing computation and memory cost for cellular phone embedded speech recognition system. In: Proceedings of the ICASSP. Montreal, Canada, 2004, 5: 309-312.
[3] Novak M, Hampl R, Krbec P, et al. Two-pass search strategy for large list recognition on embedded speech recognition platforms. In: Proceedings of the ICASSP. Hong Kong, China, 2003, 1: 200-203.
[4] Xu Haiyang, Fu Yan. Embedded Technology and Applications. Beijing: Machinery Industry Press, 2002. (in Chinese)
[5] Hoon C, Jeon P, Yun L, et al. Fast speech recognition to access a very large list of items on embedded devices. IEEE Trans. on Consumer Electronics, 2008, 54(2): 803-807.
[6] Yang Zhizuo, Liu Jia. An embedded system for speech recognition and compression. In: ISCIT2005. Beijing, China, 2005: 653-656.
[7] Westall F. Review of speech technologies for telecommunications. Electronics & Communication Engineering Journal, 1997, 9(5): 197-207.
[8] Shi Yuanyuan, Liu Jia, Liu Rensheng. Single-chip speech recognition system based on 8051 microcontroller core. IEEE Trans. on Consumer Electronics, 2001, 47(1): 149-154.
[9] Yang Haijie, Yao Jing, Liu Jia. A novel speech recognition system-on-chip. In: International Conference on Audio, Language and Image Processing 2008 (ICALIP 2008). Shanghai, China, 2008: 166-174.
[10] Lee T, Ching P C, Chan L W, et al. Tone recognition of isolated Cantonese syllables. IEEE Trans. on Speech and Audio Processing, 1995, 3(3): 204-209.
[11] Novak M, Hampl R, Krbec P, et al. Two-pass search strategy for large list recognition on embedded speech recognition platforms. In: Proceedings of the ICASSP. Montreal, Canada, 2004: 200-203.
[12] Zhu Xuan, Wang Rui, Chen Yining. Acoustic model comparison for an embedded phoneme-based Mandarin name dialing system. In: Proceedings of International Symposium on Chinese Spoken Language Processing. Taipei, 2002: 83-86.
[13] Zhu Xuan, Chen Yining, Liu Jia, et al. Multi-pass decoding algorithm based on a speech recognition chip. Acta Electronica Sinica, 2004, 32(1): 150-153.
[14] Demeechai T, Mäkeläinen K. Integration of tonal knowledge into phonetic HMMs for recognition of speech in tone languages. Signal Processing, 2000, 80: 2241-2247.

Das könnte Ihnen auch gefallen

- PDFDokument764 SeitenPDFDe JavuNoch keine Bewertungen

- Final Year Project Progress ReportDokument17 SeitenFinal Year Project Progress ReportMuhd AdhaNoch keine Bewertungen

- Seminar Report On DcsDokument27 SeitenSeminar Report On DcsChandni Gupta0% (1)

- Discrete Event SimulationDokument140 SeitenDiscrete Event Simulationssfofo100% (1)

- International Standard: Electric Vehicle Conductive Charging System - General RequirementsDokument7 SeitenInternational Standard: Electric Vehicle Conductive Charging System - General Requirementskrishna chaitanyaNoch keine Bewertungen

- Project On Honeywell TDC3000 DcsDokument77 SeitenProject On Honeywell TDC3000 DcsAbv Sai75% (4)

- Lec 5 (Welded Joint)Dokument38 SeitenLec 5 (Welded Joint)Ahmed HassanNoch keine Bewertungen

- IPCA Precast Concrete Frames GuideDokument58 SeitenIPCA Precast Concrete Frames GuideMaurício Ferreira100% (1)

- Dotnet Projects: 1. A Coupled Statistical Model For Face Shape Recovery From Brightness ImagesDokument12 SeitenDotnet Projects: 1. A Coupled Statistical Model For Face Shape Recovery From Brightness ImagesreaderjsNoch keine Bewertungen

- Mits Hammed DSPDokument4 SeitenMits Hammed DSPMohammedHameed ShaulNoch keine Bewertungen

- The Design of Speech-Control Power Point Presentation Tool Using Arm7Dokument6 SeitenThe Design of Speech-Control Power Point Presentation Tool Using Arm7surendiran123Noch keine Bewertungen

- Term Paper On Embedded SystemsDokument7 SeitenTerm Paper On Embedded Systemsaflsbbesq100% (1)

- CMP 408 FinalDokument17 SeitenCMP 408 FinalAliyu SaniNoch keine Bewertungen

- An Efficient Resource Block Allocation in LTE SystemDokument6 SeitenAn Efficient Resource Block Allocation in LTE SystemlikehemanthaNoch keine Bewertungen

- The Application of Embedded System and LabVIEW in Flexible Copper Clad Laminates Detecting SystemDokument5 SeitenThe Application of Embedded System and LabVIEW in Flexible Copper Clad Laminates Detecting SystemSEP-PublisherNoch keine Bewertungen

- A System Bus Power: Systematic To AlgorithmsDokument12 SeitenA System Bus Power: Systematic To AlgorithmsNandhini RamamurthyNoch keine Bewertungen

- Figure 1: Electronic Interlocking For Mainline RailDokument11 SeitenFigure 1: Electronic Interlocking For Mainline RailDhirendra InkhiyaNoch keine Bewertungen

- 3.home AutomationDokument6 Seiten3.home AutomationI.k. NeenaNoch keine Bewertungen

- Real-Time Implementation of Low-Cost University Satellite 3-Axis Attitude Determination and Control SystemDokument10 SeitenReal-Time Implementation of Low-Cost University Satellite 3-Axis Attitude Determination and Control SystemivosyNoch keine Bewertungen

- Assmnt2phase1 SoftwarearchitectureDokument26 SeitenAssmnt2phase1 Softwarearchitectureapi-283791392Noch keine Bewertungen

- Stanley AssignmentDokument6 SeitenStanley AssignmentTimsonNoch keine Bewertungen

- Sensors: Bearing Fault Diagnosis Method Based On Deep Convolutional Neural Network and Random Forest Ensemble LearningDokument21 SeitenSensors: Bearing Fault Diagnosis Method Based On Deep Convolutional Neural Network and Random Forest Ensemble LearningFelipe Andres Figueroa VidelaNoch keine Bewertungen

- New VLSI Architecture For Motion Estimation Algorithm: V. S. K. Reddy, S. Sengupta, and Y. M. LathaDokument4 SeitenNew VLSI Architecture For Motion Estimation Algorithm: V. S. K. Reddy, S. Sengupta, and Y. M. LathaAlex RosarioNoch keine Bewertungen

- Design of ATP Software Architecture Based On Hierarchical Component Model PDFDokument6 SeitenDesign of ATP Software Architecture Based On Hierarchical Component Model PDFpoketupiNoch keine Bewertungen

- SE Fuzzy MP 2012Dokument10 SeitenSE Fuzzy MP 2012Cristian GarciaNoch keine Bewertungen

- 10.Data-Driven Design of Fog Computing AidedDokument5 Seiten10.Data-Driven Design of Fog Computing AidedVenky Naidu BalineniNoch keine Bewertungen

- Hardware Performance Simulations of Round 2 Advanced Encryption Standard AlgorithmsDokument55 SeitenHardware Performance Simulations of Round 2 Advanced Encryption Standard AlgorithmsMihai Alexandru OlaruNoch keine Bewertungen

- FPGA Based Area Efficient Edge Detection Filter For Image Processing ApplicationsDokument4 SeitenFPGA Based Area Efficient Edge Detection Filter For Image Processing ApplicationsAnoop KumarNoch keine Bewertungen

- 6G en SystemVueDokument5 Seiten6G en SystemVueSteve AyalaNoch keine Bewertungen

- Virtual Instrumentation Interface For SRRC Control System: The Is AsDokument3 SeitenVirtual Instrumentation Interface For SRRC Control System: The Is AsJulio CésarNoch keine Bewertungen

- Job SequencingDokument38 SeitenJob SequencingSameer ɐuɥsıɹʞNoch keine Bewertungen

- 255 - For Ubicc - 255Dokument6 Seiten255 - For Ubicc - 255Ubiquitous Computing and Communication JournalNoch keine Bewertungen

- City Guide CompleteDokument77 SeitenCity Guide Completemaliha riaz100% (1)

- Process Fault-Tolerance: Semantics, Design and Applications For High Performance ComputingDokument11 SeitenProcess Fault-Tolerance: Semantics, Design and Applications For High Performance ComputingSalil MittalNoch keine Bewertungen

- Overcoming Computational Errors in Sensing Platforms Through Embedded Machine-Learning KernelsDokument12 SeitenOvercoming Computational Errors in Sensing Platforms Through Embedded Machine-Learning KernelsctorreshhNoch keine Bewertungen

- 8516cnc05 PDFDokument14 Seiten8516cnc05 PDFAIRCC - IJCNCNoch keine Bewertungen

- An Efficient Approach Towards Mitigating Soft Errors RisksDokument16 SeitenAn Efficient Approach Towards Mitigating Soft Errors RiskssipijNoch keine Bewertungen

- Cps in AutomationDokument6 SeitenCps in AutomationMichelle SamuelNoch keine Bewertungen

- Error Log Processing For Accurate Failure PredictionDokument8 SeitenError Log Processing For Accurate Failure PredictionTameta DadaNoch keine Bewertungen

- A Study of Routing Protocols For Ad-Hoc NetworkDokument6 SeitenA Study of Routing Protocols For Ad-Hoc NetworkeditorijaiemNoch keine Bewertungen

- Multitask Application Scheduling With Queries and Parallel ProcessingDokument4 SeitenMultitask Application Scheduling With Queries and Parallel Processingsurendiran123Noch keine Bewertungen

- MICROCONTROLLERS AND MICROPROCESSORS SYSTEMS DESIGN - ChapterDokument12 SeitenMICROCONTROLLERS AND MICROPROCESSORS SYSTEMS DESIGN - Chapteralice katenjeleNoch keine Bewertungen

- A) (I) A Distributed Computer System Consists of Multiple Software Components That Are OnDokument14 SeitenA) (I) A Distributed Computer System Consists of Multiple Software Components That Are OnRoebarNoch keine Bewertungen

- Implementation of DCM Module For AUTOSAR Version 4.0: Deepika C. K., Bjyu G., Vishnu V. SDokument8 SeitenImplementation of DCM Module For AUTOSAR Version 4.0: Deepika C. K., Bjyu G., Vishnu V. SsebasTR13Noch keine Bewertungen

- Zigbee Based Voice Control System For Smart Home: Figure 1: Ucontrol Home Security, Monitoring and Automation (Sma)Dokument6 SeitenZigbee Based Voice Control System For Smart Home: Figure 1: Ucontrol Home Security, Monitoring and Automation (Sma)pratheeshprm143Noch keine Bewertungen

- Chapter 8 Operation System Support For Continuous MediaDokument13 SeitenChapter 8 Operation System Support For Continuous Mediaபாவரசு. கு. நா. கவின்முருகு100% (2)

- A Top-Down Microsystems Design Methodology and Associated ChallengesDokument5 SeitenA Top-Down Microsystems Design Methodology and Associated ChallengesVamsi ReddyNoch keine Bewertungen

- 01 Mckelvin-ECBS2005 PDFDokument7 Seiten01 Mckelvin-ECBS2005 PDFBuzatu Razvan IonutNoch keine Bewertungen

- An Introduction To Embedded Systems: Abs TractDokument6 SeitenAn Introduction To Embedded Systems: Abs TractSailu KatragaddaNoch keine Bewertungen

- Multirate Filters and Wavelets: From Theory To ImplementationDokument22 SeitenMultirate Filters and Wavelets: From Theory To ImplementationAnsari RehanNoch keine Bewertungen

- Lyu 2019Dokument8 SeitenLyu 2019vchicavNoch keine Bewertungen

- Evaluacion InstrumentacionDokument5 SeitenEvaluacion InstrumentacionCamila SarabiaNoch keine Bewertungen

- Go Mac PapDokument5 SeitenGo Mac Papsyedsalman1984Noch keine Bewertungen

- Speech Recognition On Mobile DevicesDokument27 SeitenSpeech Recognition On Mobile DevicesAseem GoyalNoch keine Bewertungen

- Computation-Aware Scheme For Software-Based Block Motion EstimationDokument13 SeitenComputation-Aware Scheme For Software-Based Block Motion EstimationmanikandaprabumeNoch keine Bewertungen

- EHaCON - 2019 Paper 8Dokument20 SeitenEHaCON - 2019 Paper 8Himadri Sekhar DuttaNoch keine Bewertungen

- Sensors: Clustering and Flow Conservation Monitoring Tool For Software Defined NetworksDokument23 SeitenSensors: Clustering and Flow Conservation Monitoring Tool For Software Defined NetworksAngel Nicolas Arotoma PraviaNoch keine Bewertungen

- Performance Analysis of Uncoded & Coded Ofdm System For Wimax NetworksDokument5 SeitenPerformance Analysis of Uncoded & Coded Ofdm System For Wimax NetworksInternational Journal of Application or Innovation in Engineering & ManagementNoch keine Bewertungen

- tmpAA19 TMPDokument10 SeitentmpAA19 TMPFrontiersNoch keine Bewertungen

- Java Adhoc Network EnvironmentDokument14 SeitenJava Adhoc Network EnvironmentImran Ud DinNoch keine Bewertungen

- Operating SystemsDokument6 SeitenOperating SystemsJagannath SrivatsaNoch keine Bewertungen

- Albany Chapter 3Dokument7 SeitenAlbany Chapter 3Aliyu SaniNoch keine Bewertungen

- UMTSDokument91 SeitenUMTSantonio_teseNoch keine Bewertungen

- How To Install Windows 7Dokument2 SeitenHow To Install Windows 7Sudhakar SpartanNoch keine Bewertungen

- Internal Combustion Engine: Induction TuningDokument39 SeitenInternal Combustion Engine: Induction TuningSudhakar SpartanNoch keine Bewertungen

- A Novel Charge Recycling Design Scheme Based On Adiabatic Charge PumpDokument13 SeitenA Novel Charge Recycling Design Scheme Based On Adiabatic Charge PumpSudhakar SpartanNoch keine Bewertungen

- Microarchitecture Configurations and Floorplanning Co-OptimizationDokument12 SeitenMicroarchitecture Configurations and Floorplanning Co-OptimizationSudhakar SpartanNoch keine Bewertungen

- VP-Administrative VP-Conferences VP-Publications VP-Technical ActivitiesDokument1 SeiteVP-Administrative VP-Conferences VP-Publications VP-Technical ActivitiesSudhakar SpartanNoch keine Bewertungen

- Double Error Correcting Codes With Improved Code Rates: Martin Rak Us - Peter Farka SDokument6 SeitenDouble Error Correcting Codes With Improved Code Rates: Martin Rak Us - Peter Farka SSudhakar SpartanNoch keine Bewertungen

- February 2007 Number 2 Itcob4 (ISSN 1063-8210) : Regular PapersDokument1 SeiteFebruary 2007 Number 2 Itcob4 (ISSN 1063-8210) : Regular PapersSudhakar SpartanNoch keine Bewertungen

- VP-Administrative VP-Conferences VP-Publications VP-Technical ActivitiesDokument1 SeiteVP-Administrative VP-Conferences VP-Publications VP-Technical ActivitiesSudhakar SpartanNoch keine Bewertungen

- AUGUST 2007 Number 8 Itcob4 (ISSN 1063-8210) : Special Section PapersDokument1 SeiteAUGUST 2007 Number 8 Itcob4 (ISSN 1063-8210) : Special Section PapersSudhakar SpartanNoch keine Bewertungen

- Assignment Strategic ManagementDokument18 SeitenAssignment Strategic ManagementDarmmini MiniNoch keine Bewertungen

- Module 2.1 Cultural Relativism-1Dokument20 SeitenModule 2.1 Cultural Relativism-1Blad AnneNoch keine Bewertungen

- PRML 2022 EndsemDokument3 SeitenPRML 2022 EndsembhjkNoch keine Bewertungen

- PERDEV Week3Dokument26 SeitenPERDEV Week3Coulline DamoNoch keine Bewertungen

- Introduction To Educational Research Connecting Methods To Practice 1st Edition Lochmiller Test Bank DownloadDokument12 SeitenIntroduction To Educational Research Connecting Methods To Practice 1st Edition Lochmiller Test Bank DownloadDelores Cooper100% (20)

- Family Health Nursing ProcessDokument106 SeitenFamily Health Nursing ProcessBhie BhieNoch keine Bewertungen

- Safety PledgeDokument3 SeitenSafety Pledgeapi-268778235100% (1)

- Concurrent Manager ConceptDokument9 SeitenConcurrent Manager Conceptnikhil_cs_08Noch keine Bewertungen

- Abrasive Jet Machining Unit 2Dokument7 SeitenAbrasive Jet Machining Unit 2anithayesurajNoch keine Bewertungen

- Fronte 1Dokument45 SeitenFronte 1Patty HMNoch keine Bewertungen

- Evaluation of Chick Quality Which Method Do You Choose - 4Dokument4 SeitenEvaluation of Chick Quality Which Method Do You Choose - 4Dani GarnidaNoch keine Bewertungen

- Window Pane Reflection ProblemDokument8 SeitenWindow Pane Reflection ProblemLee GaoNoch keine Bewertungen

- 7.proceeding Snib-Eng Dept, Pnp-IndonesiaDokument11 Seiten7.proceeding Snib-Eng Dept, Pnp-IndonesiamissfifitNoch keine Bewertungen

- Fundamental Neuroscience For Basic and Clinical Applications 5Th Edition Duane E Haines Full ChapterDokument67 SeitenFundamental Neuroscience For Basic and Clinical Applications 5Th Edition Duane E Haines Full Chaptermaxine.ferrell318100% (8)

- Parts Catalogue YAMAHA ET-1Dokument18 SeitenParts Catalogue YAMAHA ET-1walterfrano6523Noch keine Bewertungen

- The Theory of Reasoned ActionDokument2 SeitenThe Theory of Reasoned ActionAisha Vidya TriyandaniNoch keine Bewertungen

- Experion MX MD Controls R700.1 Traditional Control: Configuration and System Build ManualDokument62 SeitenExperion MX MD Controls R700.1 Traditional Control: Configuration and System Build Manualdesaivilas60Noch keine Bewertungen

- KTU BTech EEE 2016scheme S3S4KTUSyllabusDokument41 SeitenKTU BTech EEE 2016scheme S3S4KTUSyllabusleksremeshNoch keine Bewertungen

- Music and IQDokument10 SeitenMusic and IQh.kaviani88Noch keine Bewertungen

- Receipt - 1698288Dokument1 SeiteReceipt - 1698288shaikhadeeb7777Noch keine Bewertungen

- Elastic CollisionDokument1 SeiteElastic CollisionTeo Hui pingNoch keine Bewertungen

- Brainstorming and OutliningDokument7 SeitenBrainstorming and OutliningWalter Evans LasulaNoch keine Bewertungen

- Image Filtering: Low and High FrequencyDokument5 SeitenImage Filtering: Low and High FrequencyvijayNoch keine Bewertungen

- 4 A Study of Encryption AlgorithmsDokument9 Seiten4 A Study of Encryption AlgorithmsVivekNoch keine Bewertungen

- Curl (Mathematics) - Wikipedia, The Free EncyclopediaDokument13 SeitenCurl (Mathematics) - Wikipedia, The Free EncyclopediasoumyanitcNoch keine Bewertungen

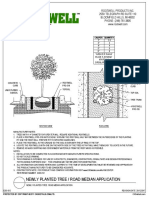

- Newly Planted Tree / Road Median ApplicationDokument1 SeiteNewly Planted Tree / Road Median ApplicationmooolkaNoch keine Bewertungen