Beruflich Dokumente

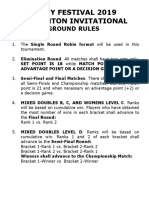

Kultur Dokumente

An Unsupervised Approach To

Hochgeladen von

Adam HansenCopyright

Verfügbare Formate

Dieses Dokument teilen

Dokument teilen oder einbetten

Stufen Sie dieses Dokument als nützlich ein?

Sind diese Inhalte unangemessen?

Dieses Dokument meldenCopyright:

Verfügbare Formate

An Unsupervised Approach To

Hochgeladen von

Adam HansenCopyright:

Verfügbare Formate

International Journal of Artificial Intelligence & Applications (IJAIA), Vol. 4, No. 5, September 2013

AN UNSUPERVISED APPROACH TO DEVELOP IR SYSTEM: THE CASE OF URDU

Mohd. Shahid Husain

Integral University, Lucknow

ABSTRACT

Web search engines are among the most valuable products of information and communication technologies. Without them, efficient access to the information available on the web today would be almost impossible. They play a vital role in the accessibility and usability of internet-based information systems. As the number of internet users grows day by day, so does the amount of information available on the web. However, access to this information is not uniform across language communities. English and the European languages account for about 60% of the information on the web, but a wide range of information is also available in other languages. In the past few years the amount of information available in Indian languages has also increased. Beyond English and a few European languages, few tools and techniques exist for efficient retrieval of this information, and for Indian languages in particular the research is still at a preliminary stage. Indian languages are also resource-poor in terms of IR test collections. The main focus of this work was therefore on developing a data set for Urdu IR, together with training and testing data for a stemmer. We have developed a language-independent system for efficient retrieval of information available in Urdu, which can be used for other languages as well. The system achieves a precision of 0.63 and a recall of 0.8. As a first step, an unsupervised stemmer for Urdu [1] was developed, since stemming is very important in information retrieval.

Keywords: Information Retrieval, Vector Space Model, Stemming, Urdu IR

1. INTRODUCTION

The rapid growth of electronic data has drawn the attention of the research and industry communities to efficient methods for indexing, analysing and retrieving information from the large number of data repositories that hold a wide range of data for a vast domain of applications. In this era of information technology, more and more data is being made available in online repositories, and almost any information one needs is now available on the internet. English and the European languages have dominated the web since its inception, but the web is now becoming multilingual. In the last few years in particular, a wide range of information in Indian regional languages such as Hindi, Urdu, Bengali, Oriya, Tamil and Telugu has been made available on the web as electronic data. Access to these repositories remains very low, however, because few efficient search engines or retrieval systems support these languages. Automatic information processing and retrieval has therefore become an urgent requirement. Moreover, since India has a wide range of regional languages, an IR approach for the Indian context should be able to handle multilingual document collections.

DOI : 10.5121/ijaia.2013.4506


A number of information retrieval systems are available for English and some other European languages. Work on developing IR systems for Indian languages is comparatively recent, and it is constrained by the lack of linguistic resources and tools for these languages. The work reported so far for Indian languages has focused on Hindi, Tamil, Bengali, Marathi and Oriya; no work has been reported for Urdu. The resources available for effectively retrieving information in Indian languages from the internet are insufficient, so there is a need for efficient tools and techniques to represent, express, store and retrieve information available in different languages. The present work focuses on the development of an efficient information retrieval system for Urdu.

2. INFORMATION RETRIEVAL

Information retrieval is a subdomain of text mining and natural language processing. It is the science of building software systems that retrieve relevant documents or information in response to a user's query. An IR system matches the given user query against the available data corpus, ranks the documents by their relevance to the user's need, and returns the top-ranked documents. IR systems may be monolingual, bilingual or multilingual. The main objective of this work is the development of a monolingual information retrieval system for Urdu.

Fig. 1: Typical IR system

To retrieve relevant information for a user query, the IR system converts the query statement and the data corpus into a standard format. The query is then matched against the documents in the corpus, which are ranked by their relevance to the query. The top-ranked documents are then retrieved.


There are various techniques for converting the query statement and the corpus data into a standard format, such as stemming, morphological analysis, stop word removal and indexing. Similarly, there are various methods for query matching, such as cosine similarity and Euclidean distance. The performance of an information retrieval system depends on the term weighting scheme, the strategy used for indexing the documents, and the retrieval model used to build the system.

2.1 Stemmer

Stemming is a core process of any IR system. Stemmers reduce inflected (or sometimes derived) words to their base or root form, i.e. their stems. Unlike the output of a morphological analyzer, where the root word has a lexical meaning, the stem produced by a stemmer need not be a meaningful word. A stemmer removes the inflectional part of a word to obtain its root form. Stemming reduces the overhead of indexing and improves the performance of an IR system. More specifically, stemming generally increases the recall of a search engine while decreasing its precision, although precision may sometimes increase depending on the information need of the user. Stemming matters for any query system because a user who needs information on "plays" may also be interested in documents that contain the word "play" (without the "s").
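The conflation effect described above can be illustrated with a deliberately naive suffix stripper. This is only a sketch for the English "plays"/"play" example: the suffix list `SUFFIXES` and the function `naive_stem` are hypothetical, and this rule-based approach is not the unsupervised Urdu stemmer of [1].

```python
# Hypothetical suffix list, chosen only to illustrate the "plays" -> "play" example.
SUFFIXES = ("ing", "es", "s")


def naive_stem(word, min_stem_len=3):
    """Strip the first matching suffix, keeping at least min_stem_len characters."""
    for suffix in SUFFIXES:
        if word.endswith(suffix) and len(word) - len(suffix) >= min_stem_len:
            return word[: -len(suffix)]
    return word


# After stemming, all three surface forms map to the same index term.
index_terms = {naive_stem(w) for w in ["play", "plays", "playing"]}
```

Because the three surface forms collapse to one index term, a query containing any of them matches documents containing the others, which is the recall gain the paragraph refers to.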

2.2 Term Frequency

This is a local parameter that gives the frequency (count) of a term within a document. It indicates the relevance of a document to a query term on the basis of how many times that term occurs in the document. Mathematically it can be given as:

$$tf_{ij} = n_{ij}$$

where $n_{ij}$ is the number of occurrences of term $t_i$ in document $d_j$.

2.3 Document Frequency

This is a global parameter that attempts to capture the distribution of a term across the documents, i.e. the importance of the term across the document corpus. The number of documents in the corpus that contain the considered term is called the document frequency. To normalize it, it is divided by the total number of documents in the corpus. Mathematically it can be given as:

$$df_i = \frac{n_i}{n}$$

where $n_i$ is the number of documents that contain term $t_i$ and $n$ is the total number of documents in the corpus. The inverse document frequency (idf) is the inverse of this document frequency.
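The two parameters above can be computed directly from a tokenized corpus. The following is a minimal sketch, assuming documents are already split into tokens; the function names are illustrative, and the log-scaled form $idf_i = \log(n / n_i)$ is a common convention assumed here, since the text only states that idf is the inverse of the document frequency.

```python
import math
from collections import Counter


def term_frequencies(doc_tokens):
    """tf_ij = n_ij: raw count of each term in one document."""
    return Counter(doc_tokens)


def document_frequencies(corpus):
    """df_i = n_i / n: fraction of documents that contain each term."""
    n = len(corpus)
    containing = Counter()
    for doc_tokens in corpus:
        containing.update(set(doc_tokens))  # count each term once per document
    return {term: count / n for term, count in containing.items()}


def inverse_document_frequencies(corpus):
    """idf_i = log(n / n_i) = log(1 / df_i); the log scaling is an assumption."""
    return {term: math.log(1.0 / df) for term, df in document_frequencies(corpus).items()}


# Toy corpus of three tokenized documents (illustrative data only).
corpus = [
    ["urdu", "stemmer", "urdu"],
    ["urdu", "retrieval"],
    ["vector", "space", "retrieval"],
]
tf = term_frequencies(corpus[0])
df = document_frequencies(corpus)
idf = inverse_document_frequencies(corpus)
```

In this toy corpus "urdu" occurs twice in the first document and appears in two of the three documents, so it receives a lower idf than "vector", which appears in only one.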

2.4 Document Length

The third factor that may affect the weighting function is the length of the document. Hence the term weighting function can be represented by a triplet ABC, where:

- A: tf component
- B: idf component
- C: length normalizing component

The term frequency factor within a document, i.e. A, may take the following options:


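Table 1: Different options for considering term frequency

| Option | Formula | Description |
|---|---|---|
| n | $tf = tf_{ij}$ | Raw term frequency |
| b | $tf = 0$ or $1$ | Binary weight |
| a | $tf = 0.5 + 0.5 \cdot \dfrac{tf_{ij}}{\max tf \text{ in } D_j}$ | Augmented term frequency |
| l | $tf = \ln(tf_{ij}) + 1.0$ | Logarithmic term frequency |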

The options for the inverse document frequency factor, i.e. B, are:

Table 2: Different options for considering inverse document frequency

| Option | Formula | Description |
|---|---|---|
| N | $W_t = tf$ | No conversion, i.e. idf is not taken into account |
| T | $W_t = tf \cdot idf$ | idf is taken into account |

The options for the document length factor, i.e. C, are:

Table 3: Different options for considering document length

| Option | Formula | Description |
|---|---|---|
| N | $W_{ij} = w_t$ | No conversion |
| C | $W_{ij} = \dfrac{w_t}{\sqrt{\sum w_t^2}}$ | Normalized weight |
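The triplet ABC can be read as a three-character scheme code that selects one option from each table. The sketch below is one possible way to apply it to the term counts of a single document; the function names are hypothetical, the codes are treated case-insensitively, and the letter `l` for the logarithmic option is an assumption borrowed from SMART notation.

```python
import math


def tf_component(count, max_count, option):
    """Component A. Assumes count >= 1, i.e. the term occurs in the document."""
    if option == "n":  # raw term frequency
        return float(count)
    if option == "b":  # binary weight
        return 1.0
    if option == "a":  # augmented term frequency
        return 0.5 + 0.5 * count / max_count
    if option == "l":  # logarithmic term frequency (symbol assumed)
        return math.log(count) + 1.0
    raise ValueError(f"unknown tf option: {option!r}")


def weight_document(counts, idf, scheme="ntc"):
    """Weight one document's terms according to a three-letter scheme ABC."""
    a, b, c = scheme.lower()
    if b not in "nt" or c not in "nc":
        raise ValueError(f"unknown scheme: {scheme!r}")
    max_count = max(counts.values())
    weights = {}
    for term, count in counts.items():
        w = tf_component(count, max_count, a)
        if b == "t":  # component B: multiply by idf
            w *= idf.get(term, 0.0)
        weights[term] = w
    if c == "c":  # component C: divide by the Euclidean length of the vector
        norm = math.sqrt(sum(w * w for w in weights.values()))
        if norm > 0:
            weights = {term: w / norm for term, w in weights.items()}
    return weights


# Illustrative counts and idf values (not taken from the paper's data).
counts = {"urdu": 2, "stemmer": 1}
idf = {"urdu": 1.0, "stemmer": 2.0}
ntc = weight_document(counts, idf, "ntc")
ann = weight_document(counts, idf, "ann")
```

With the scheme `ntc` (raw tf, idf applied, length normalized) the resulting vector has unit length, which makes the later cosine comparison a plain dot product.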

2.5 Indexing

Indexing is used to represent the documents in the corpus and the user's query statement. That is, indexing is the process of transforming the document text and the given query statement into some representation of them. Different index structures can be used; the data structure most commonly used by IR systems is the inverted index. Indexing techniques are concerned with selecting good document descriptors, such as keywords or terms, to describe the information content of the documents. A good descriptor is one that helps describe the content of a document and discriminate it from the other documents in the collection. The most widely used method is to represent the query and the document as a set of tokens, i.e. index terms or keywords. The different strategies for indexing a document are given below:


2.5.1 Character Indexing: In this scheme the tokens used to represent the documents are the characters present in the document.

2.5.2 Word Indexing: This approach uses the words in the document to represent it.

2.5.3 N-gram Indexing: This method breaks the words into n-grams, and these n-grams are used to index the documents.

2.5.4 Compound Word Indexing: In this method bi-words or tri-words are used for indexing.
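The four indexing strategies differ only in how text is split into tokens, so they can share one inverted-index builder. The following is a minimal sketch under that assumption; all function names are illustrative, tokens are split on whitespace, and the index maps each token to a dictionary of document identifiers and counts.

```python
from collections import defaultdict


def char_tokens(text):
    """Character indexing: every non-space character is a token."""
    return [ch for ch in text if not ch.isspace()]


def word_tokens(text):
    """Word indexing: whitespace-separated words are the tokens."""
    return text.split()


def ngram_tokens(text, n=3):
    """N-gram indexing: each word is broken into overlapping character n-grams."""
    grams = []
    for word in text.split():
        if len(word) <= n:
            grams.append(word)
        else:
            grams.extend(word[i:i + n] for i in range(len(word) - n + 1))
    return grams


def biword_tokens(text):
    """Compound word indexing with bi-words: pairs of adjacent words."""
    words = text.split()
    return [" ".join(pair) for pair in zip(words, words[1:])]


def build_inverted_index(docs, tokenizer=word_tokens):
    """Map each token to {doc_id: count} over all documents."""
    index = defaultdict(dict)
    for doc_id, text in docs.items():
        for token in tokenizer(text):
            index[token][doc_id] = index[token].get(doc_id, 0) + 1
    return dict(index)


# Toy documents (illustrative data only).
docs = {"d1": "road safety rules", "d2": "road health issues"}
word_index = build_inverted_index(docs)
biword_index = build_inverted_index(docs, biword_tokens)
```

Looking up `word_index["road"]` returns the postings for both documents, whereas the bi-word index only matches the exact pair "road safety".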

2.6 Information Retrieval Models

An IR model defines the following aspects of the retrieval procedure of a search engine:

a. How documents and user queries are represented
b. How the system retrieves relevant documents for user queries
c. How retrieved documents are ranked

A typical IR model comprises the following:

a. A model for documents
b. A model for queries
c. A matching function that compares queries to documents

IR models can be categorized as follows.

2.6.1 Classical models of IR: These are the simplest IR models. They are based on well-recognized and easily understood mathematics. Classical models are easy to implement and very efficient. The three classical information retrieval models are:

- Boolean
- Vector
- Probabilistic

2.6.2 Non-Classical models of IR: Non-classical information retrieval models are based on principles such as the information logic model, the situation theory model and the interaction model. They are not based on the concepts of similarity, probability or Boolean operations on which the classical retrieval models are based.

2.6.3 Alternative models of IR: Alternative models are enhanced classical IR models that make use of specific techniques from other fields. Examples are the cluster model, the fuzzy model and latent semantic indexing (LSI) models.

2.6.4 Boolean Retrieval model: This is the simplest retrieval model. It retrieves information on the basis of a query given as a Boolean expression. Boolean queries are queries that use the Boolean operations AND, OR and NOT to join the query terms.
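Boolean retrieval maps naturally onto set operations over postings. The sketch below assumes each term is associated with the set of documents that contain it; the helper names are hypothetical and only two-term queries are shown.

```python
from collections import defaultdict


def build_postings(docs):
    """Map each term to the set of document ids that contain it."""
    postings = defaultdict(set)
    for doc_id, text in docs.items():
        for term in text.split():
            postings[term].add(doc_id)
    return postings


def term_and(postings, a, b):
    """Documents containing both terms: a AND b."""
    return postings.get(a, set()) & postings.get(b, set())


def term_or(postings, a, b):
    """Documents containing at least one of the terms: a OR b."""
    return postings.get(a, set()) | postings.get(b, set())


def term_and_not(postings, a, b):
    """Documents containing a but not b: a AND NOT b."""
    return postings.get(a, set()) - postings.get(b, set())


# Toy documents (illustrative data only).
docs = {1: "urdu stemmer", 2: "urdu retrieval", 3: "hindi retrieval"}
postings = build_postings(docs)
both = term_and(postings, "urdu", "retrieval")
either = term_or(postings, "urdu", "retrieval")
only_urdu = term_and_not(postings, "urdu", "retrieval")
```

Each result is an unordered set of document ids: a document either satisfies the expression or it does not, and no score is attached to it.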


One drawback of the Boolean information retrieval model is that it requires a Boolean query instead of free text. A second drawback is that it cannot rank documents by their relevance to the user query: it simply returns a document if it matches the query, regardless of the term count in the document or the actual importance of the query word in the document.

2.6.5 Vector Space model: This model represents documents and queries as vectors of features representing terms. Each feature is assigned a numerical value that is usually some function of the term's frequency. In this model, each document d is viewed as a vector of tf*idf values, one component for each term. We thus have a vector space in which:

a. Terms are the axes
b. Documents live in this space

The ranking algorithm computes the similarity between the document and query vectors to yield a retrieval score for each document. The postulate is that documents related to the same information are close together in the vector space.

2.6.6 Probabilistic retrieval model: In this model, an initial set of documents is retrieved using the vector or Boolean model. The user inspects these documents, looks for the relevant ones and gives feedback. The IR system uses this feedback to refine the search criteria. This process is repeated until the user gets the desired information.

2.7 Similarity Measures

To retrieve the documents most relevant to the user's information need, the IR system matches the documents available in the corpus against the given user query. Different similarity measures can be used for this, for example Euclidean distance and cosine similarity.

2.7.1 Cosine Similarity

We regard the query as a short document. The documents in the corpus and the query are represented as vectors in a vector space with the features as axes. The IR system ranks the documents by the closeness of the document vectors to the query vector and then returns the top-ranked documents to the user.

Fig. 2: A VSM model representing 3 documents and a query


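The above diagram shows a vector space model in which the axes $t_i$ and $t_j$ are the terms used for indexing. The cosine similarity between the document vector $d_j$ and the query vector $q_k$ is given as:

$$\mathrm{sim}(d_j, q_k) = \frac{d_j \cdot q_k}{|d_j|\,|q_k|} = \frac{\sum_{i=1}^{m} w_{ij}\, w_{ik}}{\sqrt{\sum_{i=1}^{m} w_{ij}^{2}}\;\sqrt{\sum_{i=1}^{m} w_{ik}^{2}}}$$

where $w_{ij}$ and $w_{ik}$ are the weights of term $t_i$ in document $d_j$ and in query $q_k$ respectively, and $m$ is the number of index terms.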
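The cosine similarity can be computed over sparse vectors stored as dictionaries from term to weight. This is a minimal sketch under that representation; the function name and the convention of returning 0.0 for an all-zero vector are assumptions made for illustration.

```python
import math


def cosine_similarity(doc_vec, query_vec):
    """Cosine of the angle between two sparse term-weight vectors."""
    # Only terms present in both vectors contribute to the dot product.
    dot = sum(w * query_vec[t] for t, w in doc_vec.items() if t in query_vec)
    doc_norm = math.sqrt(sum(w * w for w in doc_vec.values()))
    query_norm = math.sqrt(sum(w * w for w in query_vec.values()))
    if doc_norm == 0 or query_norm == 0:
        return 0.0  # convention assumed here for empty vectors
    return dot / (doc_norm * query_norm)


# Illustrative weights: the document shares one of its two terms with the query.
doc = {"urdu": 1.0, "stemmer": 1.0}
query = {"urdu": 1.0}
score = cosine_similarity(doc, query)  # 1 / sqrt(2)
```

Because the measure depends only on the angle between the vectors, scaling every weight of a document by a constant leaves its score unchanged.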

2.8 Metrics for IR Evaluation

The aim of any information retrieval system is to find documents relevant to the user's information need in response to a query. The performance of an IR system is evaluated on the basis of how relevant the retrieved documents are. Relevance depends on a specific user's judgment and is subjective in nature: the true relevance of a retrieved document can be judged only by the user, on the basis of his or her information need, and for the same query statement the desired information may differ from user to user.

Traditionally, IR systems have been evaluated on a set of queries and a test document collection. For each test query, a ranked set of relevant documents is created manually, and the system's results are checked against it. There are many retrieval models, algorithms and systems, and different performance metrics are used to assess how effectively an IR system retrieves documents in response to a user's information need. The criteria for evaluating an IR system include:

a. Coverage of the collection
b. Time lag
c. Presentation format
d. User effort
e. Precision
f. Recall

Effectiveness is the performance measure that describes how well an IR system satisfies a user's information need by retrieving relevant documents. Aspects of effectiveness include:

a. Whether the retrieved documents are pertinent to the user's information need.
b. Whether the retrieved documents are ranked according to their relevance to the user query.
c. Whether the IR system returns a reasonable number of the relevant documents present in the corpus.

Fig. 3: Trade-off between precision and recall
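Precision and recall are not defined by formula in the text. Under their standard definitions, precision is the fraction of retrieved documents that are relevant and recall is the fraction of relevant documents that are retrieved. The sketch below computes both for one query and averages them over a query set; the function names and the toy judgments are illustrative assumptions.

```python
def precision_recall(retrieved, relevant):
    """Return (precision, recall) for a single query."""
    retrieved, relevant = set(retrieved), set(relevant)
    hits = len(retrieved & relevant)  # relevant documents that were retrieved
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


def average_precision_recall(runs):
    """Average precision and recall over (retrieved, relevant) pairs, one per query."""
    scores = [precision_recall(retrieved, relevant) for retrieved, relevant in runs]
    mean_p = sum(p for p, _ in scores) / len(scores)
    mean_r = sum(r for _, r in scores) / len(scores)
    return mean_p, mean_r


# Toy relevance judgments for two queries (illustrative data only).
runs = [
    (["d1", "d2", "d3", "d4"], ["d1", "d3", "d5"]),
    (["d7"], ["d7", "d8"]),
]
p, r = precision_recall(*runs[0])
mean_p, mean_r = average_precision_recall(runs)
```

Returning more documents for the first query could raise its recall by picking up "d5", but every additional non-relevant document lowers its precision, which is the trade-off the figure depicts.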


3. OUR APPROACH

In this work, to develop an Information Retrieval system for Urdu language, the following methods and evaluation parameters are used.

Fig. 4: Architecture of monolingual IR system

3.1 Stemmer:

To develop the stemmer we used an unsupervised approach [1], which gives an accuracy of 84.2%.

3.2 Term Weighting scheme:

For term weighting we have used the tf*idf scheme.

3.3 Indexing Scheme:

In this work the query statement and the documents are represented using the word indexing strategy.

3.4 Retrieval Model:

To implement our IR system we have used the vector space model.

3.5 Encoding Scheme:

As the system focuses on the Urdu language, the UTF-8 character encoding is used to access the data.

3.6 Similarity Measure:

To find the documents that are most closely related to the query, i.e. to measure the similarity between the documents in the corpus and the query statement, the cosine similarity measure is used.

3.7 Ranking of the document:

To rank the retrieved documents in order of their relevance to the query, the cosine similarity values are used. A document with a higher cosine value (i.e. a smaller angular distance) to the query is more similar to it, meaning that the query terms carry more weight in it, and it is therefore considered more relevant to the user query.
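The choices listed in this section (word indexing, tf*idf weighting, the vector space model and cosine ranking) can be combined into a small end-to-end sketch. It is not the authors' implementation: stemming is omitted, tokens are split on whitespace, $idf = \log(n / n_i)$ is an assumed variant, and all names are hypothetical.

```python
import math
from collections import Counter


def build_tfidf_vectors(docs):
    """Return ({doc_id: {term: tf*idf}}, {term: idf}) using word indexing."""
    n = len(docs)
    tokenized = {doc_id: text.split() for doc_id, text in docs.items()}
    containing = Counter()
    for tokens in tokenized.values():
        containing.update(set(tokens))
    idf = {term: math.log(n / count) for term, count in containing.items()}
    vectors = {
        doc_id: {term: tf * idf[term] for term, tf in Counter(tokens).items()}
        for doc_id, tokens in tokenized.items()
    }
    return vectors, idf


def cosine(u, v):
    """Cosine similarity of two sparse vectors; 0.0 if either has zero length."""
    dot = sum(w * v[t] for t, w in u.items() if t in v)
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def rank(query, vectors, idf, top_k=10):
    """Return up to top_k (doc_id, score) pairs, highest cosine score first."""
    query_vec = {t: tf * idf[t] for t, tf in Counter(query.split()).items() if t in idf}
    scored = [(cosine(vec, query_vec), doc_id) for doc_id, vec in vectors.items()]
    scored = [(score, doc_id) for score, doc_id in scored if score > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # ties broken by doc id
    return [(doc_id, score) for score, doc_id in scored[:top_k]]


# Toy collection (illustrative data only).
docs = {"d1": "road safety rules road", "d2": "health issues", "d3": "road health"}
vectors, idf = build_tfidf_vectors(docs)
results = rank("road safety", vectors, idf)
```

For the query "road safety", document `d1` ranks above `d3` because it matches both query terms, and `d2` is not returned because it shares no term with the query.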


4. EXPERIMENT

The data set used in this work for training and testing the developed Urdu IR system is taken from the EMILLE corpus, in which the documents are in XML format. The tagged data set taken from the EMILLE corpus consists of documents containing information related to health, road safety, education, legal, social and housing issues, among others. The testing data set consists of documents from various domains, as listed below:

Table 4: Dataset specification used for Urdu IR

| Domain | Number of documents | Number of words |
|---|---|---|
| Health | 33 | 223412 |
| Education | 8 | 115264 |
| Housing | 8 | 120327 |
| Legal | 8 | 108055 |
| Social issues | 12 | 146083 |
| Homeopathy | 32 | 527360 |
| Drama | 13 | 135680 |
| Myths | 10 | 202880 |
| Story and Novel | 21 | 300160 |
| Media | 15 | 224000 |
| Science | 47 | 704000 |
| History | 33 | 502400 |
| Politics | 21 | 728320 |
| Psychology | 27 | 555520 |
| Religion | 34 | 556800 |
| Sociology | 21 | 398080 |
| Miscellaneous | 48 | 985374 |

A query set consisting of 200 queries was prepared manually for training and testing the IR system.

Table 5: Sample of Query set


5. RESULTS AND DISCUSSIONS

To test the developed information retrieval system, a test collection of 350 documents was used. A set of 200 queries was constructed on these 350 documents, and this query set was used to evaluate the developed Urdu IR system.

Table 6: Results of the developed Urdu IR system testing

As shown in the above table, the minimum average precision of the system is 0.13 and the maximum average precision is 0.63. Similarly, the minimum average recall of the system is 0.5 and the maximum average recall was found to be 0.8.

6. CONCLUSION AND FUTURE WORK

In this paper we have discussed various indexing schemes and IR models. We used the tf*idf scheme for term weighting and the vector space model (VSM) to implement the IR system. The experimental results show that the average recall of the developed IR system is 0.8 with a precision of 0.63. IR is a very active research field, and much new research can be done to provide efficient IR systems that satisfy users' information needs. In particular, a lot of research is needed to develop language-independent approaches that support IR systems for multilingual data collections.

REFERENCES

[1] Mohd. Shahid Husain et al. A Language Independent Approach to Develop Urdu Stemmer. Proceedings of the Second International Conference on Advances in Computing and Information Technology, 2012.

[2] Rizvi, J. et al. Modeling Case Marking System of Urdu-Hindi Languages by Using Semantic Information. Proceedings of the IEEE International Conference on Natural Language Processing and Knowledge Engineering (IEEE NLP-KE '05), 2005.

[3] Butt, M. and King, T. Non-Nominative Subjects in Urdu: A Computational Analysis. Proceedings of the International Symposium on Non-nominative Subjects, Tokyo, December, pp. 525-548, 2001.

[4] Chen, A. and Gey, F. Building an Arabic Stemmer for Information Retrieval. Proceedings of the Text Retrieval Conference, 47, 2002.

[5] Wicentowski, R. Multilingual Noise-Robust Supervised Morphological Analysis using the Word Frame Model. Proceedings of the Seventh Meeting of the ACL Special Interest Group on Computational Phonology (SIGPHON), pp. 70-77, 2004.

[6] Rizvi, Hussain M. Analysis, Design and Implementation of Urdu Morphological Analyzer. SCONEST, 1-7, 2005.

[7] Krovetz, R. View Morphology as an Inference Process. Proceedings of the 5th International Conference on Research and Development in Information Retrieval, 1993.

[8] Thabet, N. Stemming the Quran. Proceedings of the Workshop on Computational Approaches to Arabic Script-based Languages, 2004.

[9] Paik, Pauri. A Simple Stemmer for Inflectional Languages. FIRE, 2008.

[10] Sharifloo, A. A. and Shamsfard, M. A Bottom up Approach to Persian Stemming. IJCNLP, 2008.

[11] Kumar, A. and Siddiqui, T. An Unsupervised Hindi Stemmer with Heuristics Improvements. Proceedings of the Second Workshop on Analytics for Noisy Unstructured Text Data, 2008.

[12] Kumar, M. S. and Murthy, K. N. Corpus Based Statistical Approach for Stemming Telugu. Creation of Lexical Resources for Indian Language Computing and Processing (LRIL), C-DAC, Mumbai, India, 2007.

[13] Qurat-ul-Ain Akram, Asma Naseer and Sarmad Hussain. Assas-Band, an Affix-Exception-List Based Urdu Stemmer. Proceedings of ACL-IJCNLP, 2009.

[14] http://en.wikipedia.org/wiki/Urdu

[15] http://www.bbc.co.uk/languages/other/guide/urdu/steps.shtml

[16] http://www.andaman.org/BOOK/reprints/weber/rep-weber.htm

[17] Tanveer Siddiqui and U. S. Tiwary. Natural Language Processing and Information Retrieval.

[18] William B. Frakes and Ricardo Baeza-Yates. Information Retrieval: Data Structures and Algorithms.

[19] http://www.crulp.org/software/ling_resources.htm

AUTHOR

Mohd. Shahid Husain received his M.Tech. from the Indian Institute of Information Technology (IIIT-A), Allahabad, with Intelligent Systems as his specialization. He is currently pursuing a Ph.D. and working as an assistant professor in the Department of Information Technology, Integral University, Lucknow.

87

Das könnte Ihnen auch gefallen

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (895)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (588)

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (345)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (121)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (400)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (74)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- BIOCHEM REPORT - OdtDokument16 SeitenBIOCHEM REPORT - OdtLingeshwarry JewarethnamNoch keine Bewertungen

- Iso 20816 8 2018 en PDFDokument11 SeitenIso 20816 8 2018 en PDFEdwin Bermejo75% (4)

- De La Salle Araneta University Grading SystemDokument2 SeitenDe La Salle Araneta University Grading Systemnicolaus copernicus100% (2)

- Uncertainty Estimation in Neural Networks Through Multi-Task LearningDokument13 SeitenUncertainty Estimation in Neural Networks Through Multi-Task LearningAdam HansenNoch keine Bewertungen

- International Journal of Artificial Intelligence & Applications (IJAIA)Dokument2 SeitenInternational Journal of Artificial Intelligence & Applications (IJAIA)Adam HansenNoch keine Bewertungen

- International Journal of Artificial Intelligence & Applications (IJAIA)Dokument2 SeitenInternational Journal of Artificial Intelligence & Applications (IJAIA)Adam HansenNoch keine Bewertungen

- International Journal of Artificial Intelligence & Applications (IJAIA)Dokument2 SeitenInternational Journal of Artificial Intelligence & Applications (IJAIA)Adam HansenNoch keine Bewertungen

- International Journal of Artificial Intelligence & Applications (IJAIA)Dokument2 SeitenInternational Journal of Artificial Intelligence & Applications (IJAIA)Adam HansenNoch keine Bewertungen

- International Journal of Artificial Intelligence & Applications (IJAIA)Dokument2 SeitenInternational Journal of Artificial Intelligence & Applications (IJAIA)Adam HansenNoch keine Bewertungen

- International Journal of Artificial Intelligence & Applications (IJAIA)Dokument2 SeitenInternational Journal of Artificial Intelligence & Applications (IJAIA)Adam HansenNoch keine Bewertungen

- Spot-The-Camel: Computer Vision For Safer RoadsDokument10 SeitenSpot-The-Camel: Computer Vision For Safer RoadsAdam HansenNoch keine Bewertungen

- International Journal of Artificial Intelligence & Applications (IJAIA)Dokument2 SeitenInternational Journal of Artificial Intelligence & Applications (IJAIA)Adam HansenNoch keine Bewertungen

- Book 2Dokument17 SeitenBook 2Adam HansenNoch keine Bewertungen

- International Journal of Artificial Intelligence & Applications (IJAIA)Dokument2 SeitenInternational Journal of Artificial Intelligence & Applications (IJAIA)Adam HansenNoch keine Bewertungen

- EDGE-Net: Efficient Deep-Learning Gradients Extraction NetworkDokument15 SeitenEDGE-Net: Efficient Deep-Learning Gradients Extraction NetworkAdam HansenNoch keine Bewertungen

- International Journal of Artificial Intelligence & Applications (IJAIA)Dokument2 SeitenInternational Journal of Artificial Intelligence & Applications (IJAIA)Adam HansenNoch keine Bewertungen

- Design That Uses Ai To Overturn Stereotypes: Make Witches Wicked AgainDokument12 SeitenDesign That Uses Ai To Overturn Stereotypes: Make Witches Wicked AgainAdam HansenNoch keine Bewertungen

- Development of An Intelligent Vital Sign Monitoring Robot SystemDokument21 SeitenDevelopment of An Intelligent Vital Sign Monitoring Robot SystemAdam HansenNoch keine Bewertungen
