Major Issues in Data Mining

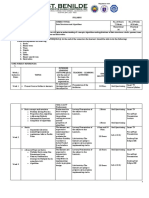

Unit-01: Data Mining and Data Warehousing

Major issues in Data Mining

The major issues in data mining research can be partitioned into five groups: mining methodology, user interaction, performance (efficiency and scalability), diversity of data types, and data mining and society.

Mining methodology and user interaction issues

These reflect the kinds of knowledge mined, the ability to mine knowledge at multiple granularities, the use of domain knowledge, ad hoc mining, and knowledge visualization.

- Mining different kinds of knowledge in databases
- Interactive mining of knowledge at multiple levels of abstraction
- Incorporation of background knowledge
- Data mining query languages and ad hoc data mining
- Presentation and visualization of data mining results
- Handling noisy or incomplete data
- Pattern evaluation: the interestingness problem

Performance (efficiency and scalability) issues

These include efficiency, scalability, and parallelization of data mining algorithms.

- Efficiency and scalability of data mining algorithms
- Parallel, distributed, and incremental mining algorithms

Diversity of database types

- Handling of relational and complex types of data
- Mining information from heterogeneous databases and global information systems

Data mining and society

How does data mining impact society? What steps can data mining take to preserve the privacy of individuals? Do we use data mining in our daily lives without knowing that we do? These questions raise the following issues:

- Social impacts of data mining
- Privacy-preserving data mining
- Invisible data mining

Data preprocessing

The data you wish to analyze with data mining techniques are often incomplete (lacking attribute values or certain attributes of interest, or containing only aggregate data), noisy (containing errors), and inconsistent. How can the data be preprocessed in order to help improve the quality of the data and, consequently, of the mining results? How can the data be preprocessed so as to improve the efficiency and ease of the mining process?

There are a number of data preprocessing techniques. Data cleaning can be applied to remove noise and correct inconsistencies in the data. Data integration merges data from multiple sources into a coherent data store, such as a data warehouse. Data transformations, such as normalization, may be applied. For example, normalization may improve the accuracy and efficiency of mining algorithms involving distance measurements. Data reduction can reduce the data size by aggregating, eliminating redundant features, or clustering, for instance.

Gaurav Jaiswal, Dept. of CS, AITM

Data cleaning

Real-world data tend to be incomplete, noisy, and inconsistent. Data cleaning routines attempt to fill in missing values, smooth out noise while identifying outliers, and correct inconsistencies in the data.

Missing values

How can you go about filling in the missing values for an attribute? Let's look at the following methods:

- Ignore the tuple.
- Fill in the missing value manually.
- Use a global constant to fill in the missing value.
- Use the attribute mean to fill in the missing value.
- Use the attribute mean for all samples belonging to the same class as the given tuple.
- Use the most probable value to fill in the missing value.
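Three of the methods listed above (global constant, attribute mean, and class-wise attribute mean) can be sketched in a few lines of Python. This is only an illustration: the function names, the use of `None` to mark a missing value, and the `income` and `risk` sample data are assumptions made for the example and do not come from the notes.

```python
from statistics import mean

def fill_with_constant(values, constant="Unknown"):
    """Replace every missing entry (None) with one global constant."""
    return [constant if v is None else v for v in values]

def fill_with_mean(values):
    """Replace missing entries with the mean of the known values."""
    known = [v for v in values if v is not None]
    attribute_mean = mean(known)
    return [attribute_mean if v is None else v for v in values]

def fill_with_class_mean(values, classes):
    """Replace missing entries with the mean of the known values
    that share the same class label as the incomplete tuple.
    Assumes every class has at least one known value."""
    known_by_class = {}
    for v, c in zip(values, classes):
        if v is not None:
            known_by_class.setdefault(c, []).append(v)
    class_means = {c: mean(vs) for c, vs in known_by_class.items()}
    return [class_means[c] if v is None else v for v, c in zip(values, classes)]

# Hypothetical sample data: income (in thousands) and a credit-risk class.
income = [30, None, 50, 70, None, 90]
risk = ["low", "low", "low", "high", "high", "high"]

filled_constant = fill_with_constant(income)
filled_mean = fill_with_mean(income)
filled_class_mean = fill_with_class_mean(income, risk)
```

The class-wise variant usually gives a closer estimate than the overall mean when the attribute differs noticeably between classes, as it does in this made-up sample.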

Noisy Data

Noise is a random error or variance in a measured variable. Data smoothing techniques include the following.

Binning: Binning methods smooth a sorted data value by consulting its neighborhood, that is, the values around it. The sorted values are distributed into a number of buckets, or bins. Because binning methods consult the neighborhood of values, they perform local smoothing. Common variants are:

- smoothing by bin means
- smoothing by bin medians
- smoothing by bin boundaries
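The three binning variants can be illustrated with a small sketch. It assumes equal-frequency bins of a fixed size, and the `prices` list is sample data chosen for the example. The function names are hypothetical, and the tie-breaking rule in boundary smoothing (ties go to the lower boundary) is an arbitrary choice.

```python
from statistics import mean, median

def equal_frequency_bins(values, bin_size):
    """Sort the values and cut them into consecutive bins of bin_size items."""
    ordered = sorted(values)
    return [ordered[i:i + bin_size] for i in range(0, len(ordered), bin_size)]

def smooth_by_means(bins):
    """Replace every value in a bin with the bin mean."""
    return [[mean(b)] * len(b) for b in bins]

def smooth_by_medians(bins):
    """Replace every value in a bin with the bin median."""
    return [[median(b)] * len(b) for b in bins]

def smooth_by_boundaries(bins):
    """Replace every value with the closer of the bin's min and max."""
    smoothed = []
    for b in bins:
        low, high = b[0], b[-1]  # bins are sorted, so these are the boundaries
        smoothed.append([low if v - low <= high - v else high for v in b])
    return smoothed

# Illustrative sample data (prices), not taken from the notes.
prices = [4, 8, 15, 21, 21, 24, 25, 28, 34]
bins = equal_frequency_bins(prices, 3)

by_means = smooth_by_means(bins)            # [[9, 9, 9], [22, 22, 22], [29, 29, 29]]
by_medians = smooth_by_medians(bins)        # [[8, 8, 8], [21, 21, 21], [28, 28, 28]]
by_boundaries = smooth_by_boundaries(bins)  # [[4, 4, 15], [21, 21, 24], [25, 25, 34]]
```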

Regression: Data can be smoothed by fitting the data to a function, such as with regression. Linear regression involves finding the best line to fit two attributes (or variables), so that one attribute can be used to predict the other. Multiple linear regression is an extension of linear regression, where more than two attributes are involved and the data are fit to a multidimensional surface.

Clustering: Outliers may be detected by clustering, where similar values are organized into groups, or clusters. Intuitively, values that fall outside of the set of clusters may be considered outliers.

Data Integration

Data integration combines data from multiple sources into a coherent data store, as in data warehousing. These sources may include multiple databases, data cubes, or flat files. Issues to consider during data integration include the entity identification problem and redundancy. An attribute may be redundant if it can be derived from another attribute or set of attributes. Inconsistencies in attribute or dimension naming can also cause redundancies in the resulting data set. Some redundancies can be detected by correlation analysis.
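As a rough illustration of correlation analysis for numeric attributes, the sketch below computes the Pearson correlation coefficient between two attributes and flags the pair as possibly redundant when the absolute correlation is high. The attribute names, the sample values, and the 0.9 threshold are assumptions made for the example.

```python
import math

def pearson_r(a, b):
    """Pearson correlation coefficient between two equal-length numeric lists."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    covariance = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    spread_a = sum((x - mean_a) ** 2 for x in a)
    spread_b = sum((y - mean_b) ** 2 for y in b)
    return covariance / math.sqrt(spread_a * spread_b)

def possibly_redundant(a, b, threshold=0.9):
    """Flag a pair of attributes whose absolute correlation exceeds the threshold.
    The threshold is an arbitrary illustrative choice."""
    return abs(pearson_r(a, b)) >= threshold

# Hypothetical attributes from two sources: one is derivable from the other.
monthly_salary = [2000, 2500, 3000, 4000]
annual_salary = [12 * m for m in monthly_salary]
age = [25, 52, 31, 40]

r_salary = pearson_r(monthly_salary, annual_salary)  # close to 1.0
redundant_salary = possibly_redundant(monthly_salary, annual_salary)
redundant_age = possibly_redundant(monthly_salary, age)
```

A high correlation only suggests redundancy. Whether one attribute can be dropped still depends on what each attribute means.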

Data Transformation

The data are transformed or consolidated into forms appropriate for mining. Data transformation can involve the following:

- Aggregation, where summary or aggregation operations are applied to the data.
- Smoothing, which works to remove noise from the data. Such techniques include binning, regression, and clustering.
- Generalization of the data, where low-level or primitive (raw) data are replaced by higher-level concepts through the use of concept hierarchies.
- Normalization, where the attribute data are scaled so as to fall within a small specified range, such as -1.0 to 1.0, or 0.0 to 1.0.

There are many methods for data normalization, including min-max normalization, z-score normalization, and normalization by decimal scaling.

Min-max normalization performs a linear transformation on the original data. Suppose that $min_A$ and $max_A$ are the minimum and maximum values of an attribute, A. Min-max normalization maps a value, $v$, of A to $v'$ in the range $[new\_min_A, new\_max_A]$ by computing

$$v' = \frac{v - min_A}{max_A - min_A}\,(new\_max_A - new\_min_A) + new\_min_A$$

In z-score normalization (or zero-mean normalization), the values for an attribute, A, are normalized based on the mean and standard deviation of A. A value, $v$, of A is normalized to $v'$ by computing

$$v' = \frac{v - \bar{A}}{\sigma_A}$$

where $\bar{A}$ and $\sigma_A$ are the mean and standard deviation, respectively, of attribute A.

Normalization by decimal scaling moves the decimal point of the values of A. A value, $v$, of A is normalized to $v'$ by computing

$$v' = \frac{v}{10^{j}}$$

where $j$ is the smallest integer such that $\max(|v'|) < 1$. Suppose that the recorded values of A range from -986 to 917. The maximum absolute value of A is 986. To normalize by decimal scaling, we therefore divide each value by 1,000 (i.e., j = 3), so that -986 normalizes to -0.986 and 917 normalizes to 0.917.
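The three normalization methods can be sketched as small Python functions. The function names and the income figures used for min-max and z-score are hypothetical. The decimal scaling example reuses the values -986 and 917 from the text above.

```python
def min_max(v, min_a, max_a, new_min=0.0, new_max=1.0):
    """Linearly map v from [min_a, max_a] to [new_min, new_max]."""
    return (v - min_a) / (max_a - min_a) * (new_max - new_min) + new_min

def z_score(v, mean_a, std_a):
    """Express v as a number of standard deviations from the mean."""
    return (v - mean_a) / std_a

def decimal_scaling(values):
    """Divide by the smallest power of ten that brings every |v| below 1.
    Returns the scaled values together with the exponent j."""
    max_abs = max(abs(v) for v in values)
    j = 0
    while max_abs / 10 ** j >= 1:
        j += 1
    return [v / 10 ** j for v in values], j

# Hypothetical income attribute: min 12,000, max 98,000, mean 54,000, std 16,000.
income_min_max = min_max(73600, 12000, 98000)  # about 0.716
income_z = z_score(73600, 54000, 16000)        # about 1.225

# Values from the decimal scaling example in the text.
scaled, j = decimal_scaling([-986, 917])       # ([-0.986, 0.917], 3)
```

Min-max normalization needs the attribute's true minimum and maximum, so a later value outside that range falls outside the target interval. The z-score is the usual alternative when those bounds are unknown or outliers dominate.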

Attribute construction (or feature construction) is a further form of data transformation, in which new attributes are constructed from the given set of attributes and added to help improve the accuracy and understanding of structure in high-dimensional data.

Data Reduction

Complex data analysis and mining on huge amounts of data can take a long time, making such analysis impractical or infeasible. Data reduction techniques can be applied to obtain a reduced representation of the data set that is much smaller in volume, yet closely maintains the integrity of the original data. That is, mining on the reduced data set should be more efficient yet produce the same (or almost the same) analytical results. Strategies for data reduction include the following:

- Data cube aggregation, where aggregation operations are applied to the data in the construction of a data cube.
- Attribute subset selection, where irrelevant, weakly relevant, or redundant attributes or dimensions may be detected and removed.
- Dimensionality reduction, where encoding mechanisms are used to reduce the data set size.
- Numerosity reduction, where the data are replaced or estimated by alternative, smaller data representations such as parametric models (which need store only the model parameters instead of the actual data) or nonparametric methods such as clustering, sampling, and the use of histograms.
- Discretization and concept hierarchy generation, where raw data values for attributes are replaced by ranges or higher conceptual levels.

Data cube aggregation

Data cubes store multidimensional aggregated information. For example, the accompanying figure shows a data cube for multidimensional analysis of sales data with respect to annual sales per item type for each branch of ABCD (an organization). Data cubes provide fast access to precomputed, summarized data, thereby benefiting on-line analytical processing as well as data mining. The cube created at the lowest level of abstraction is referred to as the base cuboid. The base cuboid should correspond to an individual entity of interest, such as sales or customer. In other words, the lowest level should be usable, or useful for the analysis. A cube at the highest level of abstraction is the apex cuboid. Data cubes created for varying levels of abstraction are often referred to as cuboids, so that a data cube may instead refer to a lattice of cuboids. Each higher level of abstraction further reduces the resulting data size.
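The idea behind data cube aggregation can be sketched by rolling up quarterly sales records to coarser levels. In the sketch below, the records, the branch and item names, and the `roll_up` helper are all hypothetical. A real data cube would normally be maintained by a warehouse or OLAP engine rather than by hand.

```python
from collections import defaultdict

# Hypothetical base data: (branch, item_type, year, quarter, sales)
quarterly_sales = [
    ("A", "TV", 2008, "Q1", 224), ("A", "TV", 2008, "Q2", 408),
    ("A", "TV", 2008, "Q3", 350), ("A", "TV", 2008, "Q4", 586),
    ("A", "phone", 2008, "Q1", 100), ("A", "phone", 2008, "Q2", 120),
    ("A", "phone", 2008, "Q3", 110), ("A", "phone", 2008, "Q4", 130),
    ("B", "TV", 2008, "Q1", 200), ("B", "TV", 2008, "Q2", 210),
    ("B", "TV", 2008, "Q3", 190), ("B", "TV", 2008, "Q4", 220),
]

def roll_up(rows, key):
    """Sum the sales (last field) of all rows that share the same key."""
    totals = defaultdict(int)
    for row in rows:
        totals[key(row)] += row[-1]
    return dict(totals)

# Annual sales per item type per branch: 12 quarterly rows shrink to 3 cells.
annual = roll_up(quarterly_sales, key=lambda r: (r[0], r[1], r[2]))

# A higher level of abstraction: total sales per branch.
per_branch = roll_up(quarterly_sales, key=lambda r: (r[0],))

# The apex cuboid: a single grand total.
apex = roll_up(quarterly_sales, key=lambda r: ())
```

Each roll-up keeps fewer cells than the level below it, which is the sense in which a higher level of abstraction reduces the data size.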

Attribute subset selection

Attribute subset selection reduces the data set size by removing irrelevant or redundant attributes (or dimensions). The goal of attribute subset selection is to find a minimum set of attributes such that the resulting probability distribution of the data classes is as close as possible to the original distribution obtained using all attributes. Mining on a reduced set of attributes has an additional benefit: it reduces the number of attributes appearing in the discovered patterns, helping to make the patterns easier to understand. The best (and worst) attributes are typically determined using tests of statistical significance, which assume that the attributes are independent of one another. Basic heuristic methods of attribute subset selection include the following:

1. Stepwise forward selection: The procedure starts with an empty set of attributes as the reduced set. The best of the original attributes is determined and added to the reduced set. At each subsequent iteration or step, the best of the remaining original attributes is added to the set.
2. Stepwise backward elimination: The procedure starts with the full set of attributes. At each step, it removes the worst attribute remaining in the set.

3. Combination of forward selection and backward elimination: The stepwise forward selection and backward elimination methods can be combined so that, at each step, the procedure selects the best attribute and removes the worst from among the remaining attributes.
4. Decision tree induction: Decision tree induction constructs a flowchart-like structure where each internal (nonleaf) node denotes a test on an attribute, each branch corresponds to an outcome of the test, and each external (leaf) node denotes a class prediction. At each node, the algorithm chooses the best attribute to partition the data into individual classes. When decision tree induction is used for attribute subset selection, a tree is constructed from the given data. All attributes that do not appear in the tree are assumed to be irrelevant. The set of attributes appearing in the tree forms the reduced subset of attributes.
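Stepwise forward selection can be sketched as a greedy loop. The notes leave open how the "best" attribute is judged (they mention tests of statistical significance). As a stand-in, this sketch scores a candidate subset by how accurately a majority-class lookup over the selected attribute values reproduces the training labels. That scoring function, the stopping rule (stop when no attribute improves the score), and the toy data are all assumptions made for illustration.

```python
from collections import Counter, defaultdict

def subset_accuracy(rows, labels, subset):
    """Training accuracy of predicting the majority class within each group
    of rows that agree on the attributes in `subset`."""
    groups = defaultdict(Counter)
    for row, label in zip(rows, labels):
        groups[tuple(row[a] for a in subset)][label] += 1
    correct = sum(max(counts.values()) for counts in groups.values())
    return correct / len(rows)

def forward_selection(rows, labels, attributes):
    """Greedy stepwise forward selection: start with the empty set and keep
    adding the attribute that raises the score most, until none helps."""
    selected = []
    best_score = subset_accuracy(rows, labels, selected)
    remaining = list(attributes)
    while remaining:
        scored = [(subset_accuracy(rows, labels, selected + [a]), a) for a in remaining]
        top_score, top_attr = max(scored, key=lambda pair: pair[0])
        if top_score <= best_score:
            break  # no remaining attribute improves the score
        selected.append(top_attr)
        remaining.remove(top_attr)
        best_score = top_score
    return selected, best_score

# Toy data: "a" determines the class, "b" is irrelevant, "c" duplicates "a".
rows = [
    {"a": "x", "b": 0, "c": "p"},
    {"a": "x", "b": 1, "c": "p"},
    {"a": "y", "b": 0, "c": "q"},
    {"a": "y", "b": 1, "c": "q"},
]
labels = ["yes", "yes", "no", "no"]

chosen, score = forward_selection(rows, labels, ["a", "b", "c"])  # (['a'], 1.0)
```

Here the irrelevant attribute `b` and the redundant attribute `c` are both left out. Backward elimination would run the same loop in reverse, starting from the full set and dropping the attribute whose removal hurts the score least.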

Dimensionality reduction

In dimensionality reduction, data encoding or transformations are applied so as to obtain a reduced or compressed representation of the original data. If the original data can be reconstructed from the compressed data without any loss of information, the data reduction is called lossless. If, instead, we can reconstruct only an approximation of the original data, then the data reduction is called lossy. Two popular and effective methods of lossy dimensionality reduction are:

1. Discrete wavelet transforms
2. Principal components analysis

1. Discrete wavelet transforms

The discrete wavelet transform (DWT) is a linear signal processing technique that, when applied to a data vector $X$, transforms it to a numerically different vector, $X'$, of wavelet coefficients. When applying this technique to data reduction, we consider each tuple as an n-dimensional data vector, that is, $X = (x_1, x_2, \ldots, x_n)$, depicting n measurements made on the tuple from n database attributes. The two vectors have the same length, so the reduction comes from truncation: a compressed approximation of the data can be retained by storing only a small fraction of the strongest wavelet coefficients. The technique also works to remove noise without smoothing out the main features of the data, making it effective for data cleaning as well.

The general procedure for applying a discrete wavelet transform uses a hierarchical pyramid algorithm that halves the data at each iteration, resulting in fast computational speed. The method is as follows:

1. The length, L, of the input data vector must be an integer power of 2. This condition can be met by padding the data vector with zeros as necessary ($L \geq n$).
2. Each transform involves applying two functions. The first applies some data smoothing, such as a sum or weighted average. The second performs a weighted difference, which acts to bring out the detailed features of the data.
3. The two functions are applied to pairs of data points in X, that is, to all pairs of measurements $(x_{2i}, x_{2i+1})$. This results in two sets of data of length L/2. In general, these represent a smoothed or low-frequency version of the input data and the high-frequency content of it, respectively.
4. The two functions are recursively applied to the sets of data obtained in the previous loop, until the resulting data sets obtained are of length 2.
5. Selected values from the data sets obtained in the above iterations are designated the wavelet coefficients of the transformed data.

Wavelet transforms can be applied to multidimensional data, such as a data cube. This is done by first applying the transform to the first dimension, then to the second, and so on. The computational complexity involved is linear with respect to the number of cells in the cube. Popular wavelet transforms include the Haar-2, Daubechies-4, and Daubechies-6 transforms. Wavelet transforms have many real-world applications, including the compression of fingerprint images, computer vision, analysis of time-series data, and data cleaning.
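The pyramid procedure can be sketched with the simplest wavelet, the Haar transform, using the pairwise average as the smoothing function and half the pairwise difference as the detail function. This is one possible instance, not the only one: the function names are hypothetical, the coefficients are left unnormalized, and the recursion here continues until a single overall average remains rather than stopping at length 2.

```python
def haar_dwt(values):
    """Unnormalized Haar wavelet transform via the pyramid algorithm.
    Returns [overall average, coarsest details, ..., finest details]."""
    data = [float(v) for v in values]
    length = 1
    while length < len(data):          # step 1: pad with zeros to a power of 2
        length *= 2
    data += [0.0] * (length - len(data))

    details_by_level = []
    smooth = data
    while len(smooth) > 1:             # steps 2-4: halve the data each iteration
        pairs = list(zip(smooth[0::2], smooth[1::2]))
        details_by_level.append([(a - b) / 2 for a, b in pairs])  # detail function
        smooth = [(a + b) / 2 for a, b in pairs]                  # smoothing function

    coefficients = smooth              # step 5: collect the coefficients
    for details in reversed(details_by_level):
        coefficients = coefficients + details
    return coefficients

def haar_inverse(coefficients):
    """Rebuild the (padded) data vector from the Haar coefficients."""
    smooth = coefficients[:1]
    position = 1
    while position < len(coefficients):
        details = coefficients[position:position + len(smooth)]
        rebuilt = []
        for s, d in zip(smooth, details):
            rebuilt += [s + d, s - d]  # undo the average / half-difference pair
        smooth = rebuilt
        position += len(details)
    return smooth

signal = [2, 2, 0, 2, 3, 5, 4, 4]
coeffs = haar_dwt(signal)       # [2.75, -1.25, 0.5, 0.0, 0.0, -1.0, -1.0, 0.0]
restored = haar_inverse(coeffs)

# Lossy reduction: zero out the weak coefficients (threshold chosen arbitrarily).
truncated = [c if abs(c) >= 1.0 else 0.0 for c in coeffs]
approximation = haar_inverse(truncated)
```

With every coefficient kept, the inverse reproduces the input exactly. Zeroing the small coefficients leaves a sparse vector that is cheaper to store and reconstructs only an approximation, which is what makes the reduction lossy.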

2. Principal components analysis

Principal components analysis, or PCA (also called the Karhunen-Loeve, or K-L, method), searches for k n-dimensional orthogonal vectors that can best be used to represent the data, where $k \leq n$. The original data are thus projected onto a much smaller space, resulting in dimensionality reduction. The basic procedure is as follows:

1. The input data are normalized, so that each attribute falls within the same range. This step helps ensure that attributes with large domains will not dominate attributes with smaller domains.
2. PCA computes k orthonormal vectors that provide a basis for the normalized input data. These are unit vectors that each point in a direction perpendicular to the others. These vectors are referred to as the principal components. The input data are a linear combination of the principal components.
3. The principal components are sorted in order of decreasing significance or strength. The principal components essentially serve as a new set of axes for the data, providing important information about variance. That is, the sorted axes are such that the first axis shows the most variance among the data, the second axis shows the next highest variance, and so on. This information helps identify groups or patterns within the data.
4. Because the components are sorted according to decreasing order of significance, the size of the data can be reduced by eliminating the weaker components, that is, those with low variance. Using the strongest principal components, it should be possible to reconstruct a good approximation of the original data.
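The procedure can be sketched without external libraries for the special case of keeping only the first (strongest) principal component. The sketch normalizes each attribute with z-scores, builds the covariance matrix, and finds the dominant direction by power iteration. Power iteration is a stand-in chosen for brevity; a full PCA would use an eigendecomposition or SVD routine from a numerical library to obtain all k components. All function names and the sample data are hypothetical.

```python
import math

def zscore_columns(rows):
    """Step 1: normalize every attribute to zero mean and unit variance.
    Assumes no attribute is constant (non-zero standard deviation)."""
    scaled_columns = []
    for column in zip(*rows):
        mu = sum(column) / len(column)
        sigma = math.sqrt(sum((v - mu) ** 2 for v in column) / len(column))
        scaled_columns.append([(v - mu) / sigma for v in column])
    return [list(row) for row in zip(*scaled_columns)]

def covariance_matrix(rows):
    """Covariance matrix of already-centered rows."""
    n, d = len(rows), len(rows[0])
    return [[sum(r[i] * r[j] for r in rows) / n for j in range(d)] for i in range(d)]

def first_principal_component(cov, iterations=200):
    """Steps 2-3 for k = 1: unit vector of greatest variance, by power iteration.
    The start vector is arbitrary; it must not be orthogonal to the answer."""
    d = len(cov)
    vec = [1.0 / (i + 1) for i in range(d)]
    for _ in range(iterations):
        nxt = [sum(cov[i][j] * vec[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in nxt))
        vec = [x / norm for x in nxt]
    return vec

def project(rows, component):
    """Step 4: replace each row by its coordinate along the kept component."""
    return [sum(v * c for v, c in zip(row, component)) for row in rows]

# Hypothetical data: the second attribute is exactly twice the first.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0), (4.0, 8.0), (5.0, 10.0)]
scaled = zscore_columns(data)
pc1 = first_principal_component(covariance_matrix(scaled))
reduced = project(scaled, pc1)   # five 2-D rows become five 1-D values
```

Because the two attributes here are perfectly correlated, the single component keeps all of the variance. With real data the discarded components carry some variance and the reconstruction is only approximate.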

Principal components may be used as inputs to multiple regression and cluster analysis. In comparison with wavelet transforms, PCA tends to be better at handling sparse data, whereas wavelet transforms are more suitable for data of high dimensionality.

Numerosity reduction

Techniques of numerosity reduction reduce the data volume by choosing alternative, smaller forms of data representation. These techniques may be parametric or nonparametric.

I. In parametric methods, a model is used to estimate the data, so that typically only the parameters of the model need to be stored instead of the actual data. Log-linear models are an example.
II. Nonparametric methods for storing reduced representations of the data include histograms, clustering, and sampling.
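Two of the nonparametric methods of numerosity reduction, histograms and sampling, can be sketched briefly. The sketch assumes integer-valued data, equal-width buckets, and simple random sampling without replacement. The function names, the `prices` list, the bucket width, and the seed are illustrative choices.

```python
import random
from collections import Counter

def equal_width_histogram(values, start, width):
    """Count integer values per bucket [start + i*width, start + (i+1)*width - 1]."""
    counts = Counter((v - start) // width for v in values)
    return {
        (start + i * width, start + (i + 1) * width - 1): counts[i]
        for i in sorted(counts)
    }

def simple_random_sample(values, size, seed=0):
    """Draw `size` items without replacement; the seed makes runs repeatable."""
    return random.Random(seed).sample(values, size)

# Hypothetical price list with 20 entries.
prices = [1, 1, 5, 5, 5, 8, 10, 10, 12, 14, 14, 15, 18, 20, 20, 21, 25, 25, 28, 30]

histogram = equal_width_histogram(prices, start=1, width=10)
# {(1, 10): 8, (11, 20): 7, (21, 30): 5}

sample = simple_random_sample(prices, 5)
```

The histogram stores three bucket counts in place of twenty values, and the sample stores five. Either can stand in for the full list when an approximate answer is acceptable.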

Data Discretization and Concept Hierarchy Generation

Data discretization techniques can be used to reduce the number of values for a given continuous attribute by dividing the range of the attribute into intervals. Interval labels can then be used to replace actual data values. Replacing numerous values of a continuous attribute by a small number of interval labels thereby reduces and simplifies the original data. This leads to a concise, easy-to-use, knowledge-level representation of mining results.

Discretization techniques can be categorized based on how the discretization is performed, such as whether it uses class information or which direction it proceeds (i.e., top-down vs. bottom-up). If the discretization process uses class information, then we say it is supervised discretization. Otherwise, it is unsupervised. If the process starts by first finding one or a few points (called split points or cut points) to split the entire attribute range, and then repeats this recursively on the resulting intervals, it is called top-down discretization or splitting. This contrasts with bottom-up discretization or merging, which starts by considering all of the continuous values as potential split points, removes some by merging neighborhood values to form intervals, and then recursively applies this process to the resulting intervals.

A concept hierarchy for a given numerical attribute defines a discretization of the attribute. Concept hierarchies can be used to reduce the data by collecting and replacing low-level concepts (such as numerical values for the attribute age) with higher-level concepts (such as youth, middle-aged, or senior). Although detail is lost by such data generalization, the generalized data may be more meaningful and easier to interpret. This contributes to a consistent representation of data mining results among multiple mining tasks, which is a common requirement. In addition, mining on a reduced data set requires fewer input/output operations and is more efficient than mining on a larger, ungeneralized data set. Because of these benefits, discretization techniques and concept hierarchies are typically applied before data mining as a preprocessing step, rather than during mining.
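A minimal sketch of both ideas follows: a one-level concept hierarchy that generalizes age to youth, middle-aged, or senior, and an unsupervised equal-width discretization that replaces values by interval indexes. The cut points 30 and 60 are hypothetical, since the notes do not say where the boundaries lie, and the function names and sample ages are likewise made up.

```python
from bisect import bisect_right

# Hypothetical cut points: below 30 is youth, 30 to 59 is middle-aged, 60+ is senior.
AGE_CUT_POINTS = [30, 60]
AGE_LABELS = ["youth", "middle-aged", "senior"]

def generalize_age(age):
    """Replace a raw age with its higher-level concept."""
    return AGE_LABELS[bisect_right(AGE_CUT_POINTS, age)]

def equal_width_indexes(values, k):
    """Unsupervised discretization: split [min, max] into k equal-width
    intervals and return the interval index of each value.
    Assumes the values are not all identical."""
    low, high = min(values), max(values)
    width = (high - low) / k
    # The maximum value would land in interval k, so clamp it into the last one.
    return [min(int((v - low) / width), k - 1) for v in values]

ages = [20, 25, 38, 44, 61, 80]
concepts = [generalize_age(a) for a in ages]
# ['youth', 'youth', 'middle-aged', 'middle-aged', 'senior', 'senior']

intervals = equal_width_indexes(ages, 3)   # [0, 0, 0, 1, 2, 2]
```

The two results differ for age 38 because the concept hierarchy uses fixed, meaning-based cut points while equal-width binning derives its boundaries from the observed range.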

An example of a concept hierarchy for the attribute price

1. Discretization and Concept Hierarchy Generation for Numerical Data

Concept hierarchies for numerical attributes can be constructed automatically based on data discretization. Methods include binning, histogram analysis, entropy-based discretization, χ²-merging, cluster analysis, and discretization by intuitive partitioning. In general, each method assumes that the values to be discretized are sorted in ascending order.

2. Concept Hierarchy Generation for Categorical Data

Categorical data are discrete data. Categorical attributes have a finite (but possibly large) number of distinct values, with no ordering among the values. Examples include geographic location, job category, and item type. There are several methods for the generation of concept hierarchies for categorical data:

I. Specification of a partial ordering of attributes explicitly at the schema level by users or experts
II. Specification of a portion of a hierarchy by explicit data grouping
III. Specification of a set of attributes, but not of their partial ordering
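For method III, the referenced textbook describes a heuristic that the notes do not spell out: when the user names the attributes but not their order, the system can place the attribute with the fewest distinct values at the top of the hierarchy and the one with the most at the bottom. The sketch below applies that heuristic to a hypothetical location table. The records and attribute names are invented, and the heuristic is not foolproof (for example, a data set may contain more distinct years than days of the week, although a weekday is not the higher-level concept).

```python
def order_by_distinct_values(records, attributes):
    """Order attributes from highest hierarchy level (fewest distinct values)
    to lowest (most distinct values)."""
    distinct_counts = {a: len({r[a] for r in records}) for a in attributes}
    ordered = sorted(attributes, key=lambda a: distinct_counts[a])
    return ordered, distinct_counts

# Hypothetical location records.
records = [
    {"street": "Main St", "city": "Vancouver", "province_or_state": "BC", "country": "Canada"},
    {"street": "Oak St", "city": "Vancouver", "province_or_state": "BC", "country": "Canada"},
    {"street": "Fort St", "city": "Victoria", "province_or_state": "BC", "country": "Canada"},
    {"street": "King St", "city": "Toronto", "province_or_state": "Ontario", "country": "Canada"},
    {"street": "State St", "city": "Chicago", "province_or_state": "Illinois", "country": "USA"},
    {"street": "Lake St", "city": "Chicago", "province_or_state": "Illinois", "country": "USA"},
]

# The attributes are supplied in no particular order.
hierarchy, counts = order_by_distinct_values(
    records, ["city", "street", "country", "province_or_state"]
)
# hierarchy: ['country', 'province_or_state', 'city', 'street']
```

A user or expert would normally review the generated ordering and swap levels where the heuristic gets it wrong.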

NOTE: For details, refer to the book Data Mining: Concepts and Techniques (Second Edition or Third Edition) by Jiawei Han and Micheline Kamber.
