28 Aug 2008

5/1/2008

Hands on Script

Search Engine - I

Amit K Khairnar

31 August 2008

By the end of this article, you will know how a search engine works.

How Does a Search Engine Work?

What are search engines? How do search engines work? Find your answers here.

What is a Search Engine?

By definition, an Internet search engine is an information retrieval system that helps us find information on the World Wide Web. The World Wide Web is a universe of information accessible over the network, and it facilitates the global sharing of information. But the WWW is effectively an unstructured database, and it is growing exponentially into an enormous store of information. Searching for information on the web is therefore a difficult task. A tool is needed to manage, filter and retrieve this ocean of information. A search engine serves this purpose.

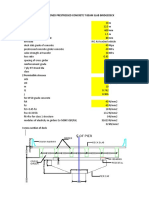

How does a Search Engine Work?

1. Internet search engines are web search engines that search and retrieve information on the web. Most of them use a crawler-indexer architecture and depend on their crawler modules. Crawlers, also referred to as spiders, are small programs that browse the web.

2. Crawlers are given an initial set of URLs whose pages they retrieve. They extract the URLs that appear on the crawled pages and pass this information to the crawler control module. The crawler control module decides which pages to visit next and gives their URLs back to the crawlers.

3. The topics covered by different search engines vary according to the algorithms they use. Some search engines are programmed to search sites on a particular topic, while the crawlers of others may visit as many sites as possible.

4. The crawler control module may use the link graph of a previous crawl, or usage patterns, to guide its crawling strategy.

5. The indexer module extracts the words from each page it visits and records the page's URL. The result is a large lookup table that, for each word, gives a list of URLs pointing to the pages where that word occurs. The table covers only those pages that were visited during the crawling process.

6. A collection analysis module is another important part of the search engine architecture. It creates a utility index. A utility index may, for example, provide access to pages of a given length or to pages containing a certain number of pictures.

7. During crawling and indexing, a search engine stores the pages it retrieves. They are temporarily stored in a page repository. Search engines maintain a cache of the pages they visit so that already visited pages can be retrieved more quickly.

8. The query module of a search engine receives search requests from users in the form of keywords. The ranking module sorts the results.

9. The crawler-indexer architecture has many variants. One of them is the distributed architecture, which consists of gatherers and brokers. Gatherers collect indexing information from web servers, while brokers provide the indexing mechanism and the query interface. Brokers update their indices on the basis of information received from gatherers and other brokers, and they can filter information. Many search engines of today use this type of architecture.
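The crawling loop described in the steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `FAKE_WEB`, `LinkExtractor`, `fetch` and `crawl` are hypothetical names, the "web" is an in-memory dictionary rather than real HTTP, and the crawler control strategy is a plain first-in, first-out queue with a page limit. A real search engine would use a far more elaborate strategy.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin

# Hypothetical in-memory "web": URL -> HTML. A real crawler would fetch over HTTP.
FAKE_WEB = {
    "http://a.example/": '<a href="/about">About</a> <a href="http://b.example/">B</a>',
    "http://a.example/about": '<a href="/">Home</a>',
    "http://b.example/": '<a href="http://a.example/about">A</a> <a href="http://c.example/">C</a>',
}


class LinkExtractor(HTMLParser):
    """Collects the absolute URLs of all <a href="..."> links on one page."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links against the page's own URL.
                    self.links.append(urljoin(self.base_url, value))


def fetch(url):
    """Stand-in for an HTTP request; returns None for unknown pages."""
    return FAKE_WEB.get(url)


def crawl(seed_urls, max_pages=10):
    """Visit pages breadth-first, starting from the seed URLs."""
    frontier = deque(seed_urls)   # URLs the control logic has scheduled
    seen = set(seed_urls)         # URLs already scheduled, to avoid repeats
    repository = {}               # page repository: URL -> stored HTML
    while frontier and len(repository) < max_pages:
        url = frontier.popleft()
        html = fetch(url)
        if html is None:
            continue              # page could not be retrieved
        repository[url] = html
        extractor = LinkExtractor(url)
        extractor.feed(html)
        for link in extractor.links:
            if link not in seen:  # schedule each newly discovered URL once
                seen.add(link)
                frontier.append(link)
    return repository


pages = crawl(["http://a.example/"])
print(list(pages))
```

Swapping the queue for a priority queue would be one way to model a control module that prefers some pages over others, for example pages that were well linked in a previous crawl.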
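The lookup table built by the indexer, and the way the query and ranking modules use it, can be illustrated with a small inverted index. This is a minimal sketch, not the method of any particular search engine: `PAGES`, `tokenize`, `build_index` and `search` are hypothetical names, a page matches only if it contains every keyword, and results are ranked by how often the keywords occur, which is an assumption made here for simplicity because the article does not say how ranking is done.

```python
import re
from collections import defaultdict

# Hypothetical crawled pages: URL -> page text.
PAGES = {
    "http://a.example/": "search engines crawl the web",
    "http://b.example/": "a crawler is a small program; crawlers browse the web",
    "http://c.example/": "the web is a store of information, the web grows",
}


def tokenize(text):
    """Split text into lowercase words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Indexer: map each word to the URLs it occurs on, with a count per URL."""
    index = defaultdict(dict)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word][url] = index[word].get(url, 0) + 1
    return index


def search(index, query):
    """Query module plus a simple ranking step based on keyword counts."""
    words = tokenize(query)
    if not words:
        return []
    postings = [index.get(word, {}) for word in words]
    # Keep only the pages that contain every keyword.
    matching = set(postings[0]).intersection(*postings[1:])

    def score(url):
        return sum(posting[url] for posting in postings)

    # Highest score first; ties are broken alphabetically by URL.
    return sorted(matching, key=lambda url: (-score(url), url))


index = build_index(PAGES)
print(search(index, "web"))
print(search(index, "crawler web"))
```

The first query returns all three pages with `http://c.example/` first, since "web" occurs twice there; the second returns only `http://b.example/`.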
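The gatherer and broker roles in the distributed variant can be sketched as two small classes. The names `Gatherer`, `Broker`, `update`, `update_from_broker` and `query`, as well as the optional `keep` filter, are hypothetical and chosen only for illustration; the article does not specify any interface.

```python
from collections import defaultdict


class Gatherer:
    """Collects indexing information (word -> URLs) from one hypothetical server."""

    def __init__(self, pages):
        self.pages = pages  # URL -> page text

    def gather(self):
        summary = defaultdict(set)
        for url, text in self.pages.items():
            for word in text.lower().split():
                summary[word].add(url)
        return summary


class Broker:
    """Holds an index, answers queries, and can filter what it accepts."""

    def __init__(self, keep=None):
        self.index = defaultdict(set)
        self.keep = keep  # optional predicate deciding which words to index

    def update(self, summary):
        """Merge indexing information received from a gatherer."""
        for word, urls in summary.items():
            if self.keep is None or self.keep(word):
                self.index[word] |= urls

    def update_from_broker(self, other):
        """Merge the index of another broker, applying this broker's filter."""
        self.update(other.index)

    def query(self, word):
        return sorted(self.index.get(word.lower(), set()))


general = Broker()
general.update(Gatherer({"http://a.example/": "web search"}).gather())
general.update(Gatherer({"http://b.example/": "web crawler"}).gather())

# A second broker that only keeps words starting with "c".
topical = Broker(keep=lambda word: word.startswith("c"))
topical.update_from_broker(general)

print(general.query("web"))
print(topical.query("crawler"))
```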

Best of luck!

For any questions or suggestions, write to the email addresses given below:

By Amit K Khairnar. Email: amitkhairnar_2004@yahoo.co.in, hands_on_scripts@yahoo.co.in

The figure on the cover is an Intel Pentium microprocessor chip, taken from www.hardwarezone.com.
