The InterBase and Firebird Developer Magazine, Issue 2, 2005

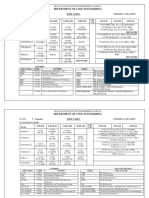

The InterBase and Firebird Developer Magazine 2005 ISSUE 2

Contents

Editor's note
Rock around the blog, by Alexey Kovyazin
Firebird conference, by Helen E. M. Borrie

Oldest Active
On Silly Questions and Idiotic Outcomes, by Helen E. M. Borrie

Cover story
Locking, Firebird, and the Lock Table, by Ann W. Harrison

Server internals
Inside BLOBs, by Dmitri Kouzmenko and Alexey Kovyazin

TestBed
Testing NO SAVEPOINT in InterBase 7.5.1, by Vlad Horsun, Alexey Kovyazin

Development area
Object-Oriented Development in RDBMS, Part 1, by Vladimir Kotlyarevsky

Replicating and synchronizing InterBase/Firebird databases using CopyCat, by Jonathan Neve
Using IBAnalyst, by Dmitri Kouzmenko

Readers feedback
Comments to “Temporary tables” article, by Volker Rehn

Miscellaneous

Credits

Alexey Kovyazin, Chief Editor
Helen Borrie, Editor
Dmitri Kouzmenko, Editor
Noel Cosgrave, Sub-editor
Lev Tashchilin, Designer
Natalya Polyanskaya, Blog editor

Subscribe now!

To receive notifications of future issues, send email to subscribe@ibdeveloper.com

©2005 www.ibdeveloper.com All rights reserved

The InterBase and Firebird Developer Magazine 2005 ISSUE 2 Editor’s note

Rock around the blog

By Alexey Kovyazin

Dear readers,

I am glad to introduce to you the second issue of “The InterBase and Firebird Developer Magazine”. We received a lot of emails with kind words and congratulations, which have helped us in creating this issue. Thank you very much!

In this issue

The cover story is the newest episode from Ann W. Harrison, “Locking, Firebird, and the Lock Table”. The article covers the locking issue from general principles to specific advice, so everyone will find a bit that's interesting or informative.

We continue the topic of savepoint internals with an article “Testing NO SAVEPOINT in InterBase 7.5.1”. Along with interesting practical test results you will find a description of UNDO log workings in InterBase 7.5.1.

We publish the small chapter “Inside BLOBs” from the forthcoming book “1000 InterBase&Firebird Tips&Tricks” by Dmitri Kouzmenko and Alexey Kovyazin.

Object oriented development has been a high-interest topic for many years, and it is still a hot topic. The article “Object-Oriented Development in RDBMS” explores the practical use of OOD principles in the design of InterBase or Firebird databases.

Replication is a problem which faces every database developer sooner or later. The article “Replicating and synchronizing InterBase/Firebird databases using CopyCat” introduces the approach used in the new CopyCat component set and the CopyTiger tool.

The last article, by Dmitri Kouzmenko, “Understanding Your Database with IBAnalyst”, provides a guide for better understanding of how databases work and how tuning their parameters can optimize performance and avoid bottlenecks.

“Oldest Active” column

I am very glad to introduce the new column “Oldest Active” by Helen Borrie. No need to say more about the author of “The Firebird Book”, just read it! In this issue Helen looks at the phenomenon of support lists and their value to the Firebird community. She takes a swing at some of the unproductive things that list posters do in this topic, titled “On Silly Questions and Idiotic Outcomes”.

Let’s blog again

Now is a good time to say a few words about our new web presentation. We've started a blog-style interface for our magazine: take a look at www.ibdeveloper.com if you haven't already discovered it. Now you can make comments on any article or message. In future we’ll publish all the materials related to the PDF issues, along with special bonus articles and materials. You can look out for previews of articles, drafts and behind-the-scenes community discussions.

Please feel welcome to blog with us!

Donations

The magazine is free for readers, but it is a considerable expense for its publisher. We must pay for articles, editing and proofreading, design, hosting, etc. In future we’ll try to recover costs solely from advertising but, for these initial issues, we need your support.

See details here: http://ibdeveloper.com/donations

On the Radar

I’d like to introduce several projects which will be launched in the near future. We really need your feedback, so please do not hesitate to place your comments in the blog or send email to us (readers@ibdeveloper.com).

Magazine CD

We will issue a CD with all 3 issues of “The InterBase and Firebird Developer Magazine” in December 2005 (yes, a Christmas CD!).

Along with all issues in PDF and searchable HTML format you will find exclusive articles and bonus materials. The CD will also include free versions of popular software related to InterBase and Firebird and offer very attractive discounts for dozens of the most popular products.

The price for the CD is USD$9.99 plus shipping.

For details see www.ibdeveloper.com/magazine-cd

Paper version of the magazine (coming soon)

In 2006 we intend to issue the first paper version of “The InterBase and Firebird Developer Magazine”. The paper version cannot be free because of high production costs.

We intend to publish 4 issues per year with a subscription price of around USD$49. The issue volume will be approximately 64 pages.

In the paper version we plan to include the best articles from the online issues and, of course, exclusive articles and materials.

To be or not to be: this is the question that only you can answer. If the idea of subscribing to the paper version appeals to you, please place a comment in our blog (http://ibdeveloper.com/paper-version) or send email to readers@ibdeveloper.com with your pros and cons. We look forward to your feedback!

Invitation

As a footnote, I’d like to invite all people who are in touch with Firebird and InterBase to participate in the life of the community. The importance of a large, active community to any public project -- and a DBMS with hundreds of thousands of users is certainly public! -- cannot be emphasised enough. It is the key to survival for such projects. Read our magazine, ask your questions on forums, and leave comments in the blog, just rock and roll with us!

Sincerely,

Alexey Kovyazin

Chief Editor

editor@ibdeveloper.com

Firebird conference

This issue of our magazine almost coincides with the third annual Firebird World Conference, starting November 13 in Prague, Czech Republic. This year's conference spans three nights, with a tight programme of back-to-back and parallel presentations over two days. With speakers in attendance from all across Europe and the Americas presenting topics that range from specialised application development techniques to first-hand accounts of Firebird internals from the core developers, this one promises to be the best ever.

Registration has been well under way for some weeks now, although the time is now past for early bird discounts on registration fees. At the time of publishing, bookings were still being taken for accommodation in the conference venue itself, Hotel Olsanka, in downtown Prague. The hotel offers a variety of room configurations, including bed and breakfast if wanted, at modest tariffs. Registration includes lunches on both conference days as well as mid-morning and mid-afternoon refreshments.

Presentations include one on Firebird's future development from the Firebird Project Coordinator, Dmitry Yemanov, and another from Alex Peshkov, the architect of the security changes coming in Firebird 2. Jim Starkey will be there, talking about Firebird Vulcan, and members of the SAS Institute team will talk about aspects of SAS's taking on Firebird Vulcan as the back-end to its illustrious statistical software. Most of the interface development tools are on the menu, including Oracle-mode Firebird, PHP, Delphi, Python and Java (Jaybird).

It is a "don't miss" for any Firebird developer who can make it to Prague. Link to details and programme either at http://firebird.sourceforge.net/index.php?op=konferenz or at the IBPhoenix website, http://www.ibphoenix.com.


The InterBase and Firebird Developer Magazine 2005 ISSUE 2 Oldest Active

On Silly Questions and Idiotic Outcomes

Firebird owes its existence to the community's support lists. Though it's all ancient history now, if it hadn't been for the esprit de corps among the "regulars" of the old InterBase support list hosted by mers.com in the 'nineties, Borland's shock-horror decision to kill off InterBase development at the end of 1999 would have been the end of it, for the English-speaking users at least.

Firebird's support lists on Yahoo and Sourceforge were born out of the same cataclysm of fatal fire and regeneration that caused the phoenix, drawn from the fire against extreme adversity, to take flight as Firebird in July 2000. Existing and new users, both of Firebird and of the rich selection of drivers and tools that spun off from and for it, depend heavily on the lists to get up to speed with the evolution of all of these software products.

Newbies often observe that there is just nothing like our lists for any other software, be it open source or not. One of the things that I think makes the Firebird lists stand out from the crowd is that, once gripped by the power of the software, our users never leave the lists. Instead, they stick around, learn hard and stay ready and willing to impart to others what they have learnt. After nearly six years of this, across a dozen lists, our concentrated mix of expertise and fidelity is hard to beat.

Now, doesn't all this make us all feel warm and fuzzy? For those of us in the hot core of this voluntary support infrastructure, the answer has to be, unfortunately, "Not all the time!" There are some bugbears that can make the most patient person see red. I'm using this column to draw attention to some of the worst.

First and worst is silly questions. You have all seen them. Perhaps you even posted them! "Subject: Help! Body: Every time I try to connect to Firebird I get the message 'Connection refused'. What am I doing wrong?" What follows from that is a frustrating and tedious game for responders. "What version of Firebird are you using? Which server model? Which platform? Which tool? What connection path? ('By connection path, we mean...')..."

Everyone's time is valuable. Nobody has the luxury of being able to sit around all day playing this game. If we weren't willing to help, we wouldn't be there. But you make it hard, or even impossible, when you post problems with no descriptions. You waste our time. You waste your time. We get frustrated because you don't provide the facts; you get frustrated because we can't read your mind. And the list gets filled with noise.

If there's something I've learnt in more than 12 years of on-line software support, it is that a problem well described is a problem solved. If a person applies time and thought to presenting a good description of the problem, chances are the solution will hop up and punch him on the nose. Even if it doesn't, good descriptions produce the fastest right answers from list contributors. Furthermore, those good descriptions and their solutions form a powerful resource in the archives, for others coming along behind.

Off-topic questions are another source of noise and irritation in the lists. At the Firebird website, we have bent over backwards to make it very clear which lists are appropriate for which areas of interest. An off-topic posting is easily forgiven if the poster politely complies with a request from a list regular to move the question to "x" list. When these events instead become flame threads, or when the same people persistently reoffend, they are an extreme burden on everyone.

In the "highly tedious" category we have the kind of question that goes like this: "Subject: Bug in Firebird SQL. Body: This statement works in MSSQL/Access/MySQL/insert any non-standard DBMS name here. It doesn't work in Firebird. Where should I report this bug?" To be fair, George Bernard Shaw wasn't totally right when he said "Ignorance is a sin." However, you do at least owe it to yourself to know what you are talking about, and not to present your problem as an attack on our software. Whether intended or not, it comes over as a troll and you come across as a fool. There's a strong chance that your attitude will discourage anyone from bothering with you at all. It's no-win, sure, but it's also a reflection of human nature. You reap what you sow.

In closing this little tirade, I just want to say that I hope I've struck a chord with those of our readership who struggle to get satisfaction from their list postings. It will be a rare question indeed that has no answer. Those rarities, well-presented, become excellent bug reports. If you're not getting an answer, there's a high chance that you asked a silly question.

Helen E. M. Borrie

helebor@tpg.com.au


The InterBase and Firebird Developer Magazine 2005 ISSUE 2 Cover story

News & Events

Firebird Worldwide Conference 2005

The Firebird conference will take place at the Hotel Olsanka in Prague, Czech Republic, from the evening of Sunday the 13th of November (opening session) until the evening of Tuesday the 15th of November (closing session).

Request for Sponsors
How to Register
Conference Timetable
Conference Papers and Speaker

Locking, Firebird, and the Lock Table

Author: Ann W. Harrison

aharrison@ibphoenix.com

One of the best-known facts about Firebird is that it uses multi-version concurrency control instead of record locking. For databases that manage concurrency through record locks, understanding the mechanisms of record locking is critical to designing a high performance, high concurrency application. In general, record locking is irrelevant to Firebird. However, Firebird uses locks to maintain internal consistency and for interprocess coordination. Understanding how Firebird does (and does not) use locks helps with system tuning and anticipating how Firebird will behave under load.

I’m going to start by describing locking abstractly, then Firebird locking more specifically, then the controls you have over the Firebird lock table and how they can affect your database performance. So, if you just want to learn about tuning, skip to the end of the article.

Concurrency control

Concurrency control in database systems has three basic functions: preventing concurrent transactions from overwriting each other’s changes, preventing readers from seeing uncommitted changes, and giving a consistent view of the database to running transactions. Those three functions can be implemented in various ways. The simplest is to serialize transactions, allowing each transaction exclusive access to the database until it finishes. That solution is neither interesting nor desirable.

A common solution is to allow each transaction to lock the data it uses, keeping other transactions from reading data it changes and from changing data it reads. Modern databases generally lock records. Firebird provides concurrency control without record locks by keeping multiple versions of records, each marked with the identifier of the transaction that created it. Concurrent transactions don’t overwrite each other’s changes, because the system will not allow a transaction to change a record if the most recent version was created by a concurrent transaction. Readers never see uncommitted data because the system will not return record versions created by concurrent transactions. Readers see a consistent version of the database because the system returns only the data committed when they start, allowing other transactions to create newer versions. Readers don’t block writers.

A lock sounds like a very solid object, but in database systems, a lock is anything but solid. “Locking” is a shorthand description of a protocol for reserving resources. Databases use locks to maintain consistency while allowing concurrent independent transactions to update distinct parts of the database. Each transaction reserves the resources (tables, records, data pages) that it needs to do its work. Typically, the reservation is made in memory to control a resource on disk, so the cost of reserving the resource is not significant compared with the cost of reading or changing the resource.

Locking example

“Resource” is a very abstract term. Let’s start by talking about locking tables. Firebird does lock tables, but normally it locks them only to prevent catastrophes like having one transaction drop a table that another transaction is using.

Before a Firebird transaction can access a table, it must get a lock on the table. The lock prevents other transactions from dropping the table while it is in use. When a transaction gets a lock on a table, Firebird makes an entry in its table of locks, indicating the identity of the transaction that has the lock, the identity of the table being locked, and a function to call if another transaction wants an incompatible lock on the table. The normal table lock is shared: other transactions can lock the same table in the same way.

Before a transaction can delete a table, it must get an exclusive lock on the table. An exclusive lock is incompatible with other locks. If any transaction has a lock on the table, the request for an exclusive lock is denied and the drop table statement fails. Returning an immediate error is one way of dealing with conflicting lock requests. The other is to put the conflicting request on a list of unfulfilled requests and let it wait for the resource to become available.

In its simplest form, that is how locking works. All transactions follow the formal protocol of requesting a lock on a resource before using it. Firebird maintains a list of locked resources, a list of requests for locks on resources (satisfied or waiting), and a list of the owners of lock requests. When a transaction requests a lock that is incompatible with existing locks on a resource,


Firebird either denies the new request, or puts it on a list to wait until the resource is available. Internal lock requests specify whether they wait or receive an immediate error on a case-by-case basis. When a transaction starts, it specifies whether it will wait for locks that it acquires on tables, etc.

Lock modes

For concurrency and read committed transactions, Firebird locks tables for shared read or shared write. Either mode says, “I’m using this table, but you are free to use it too.” Consistency mode transactions follow different rules. They lock tables for protected read or protected write. Those modes say “I’m using the table and no one else is allowed to change it until I’m done.” Protected read is compatible with shared read and other protected read transactions. Protected write is only compatible with shared read.

The important concept about lock modes is that locks are more subtle than mutexes: locks allow resource sharing, as well as protecting resources from incompatible use.

Two-phase locking vs. transient locking

The table locks that we’ve been describing follow a protocol known as two-phase locking, which is typical of locks taken by transactions in database systems. Databases that use record locking for consistency control always use two-phase record locks. In two-phase locking, a transaction acquires locks as it proceeds and holds the locks until it ends. Once it releases any lock, it can no longer acquire another. The two phases are lock acquisition and lock release. They cannot overlap.

When a Firebird transaction reads a table, it holds a lock on that table until it ends. When a concurrency transaction has acquired a shared write lock to update a table, no consistency mode transaction will be able to get a protected lock on that table until the transaction with the shared write lock ends and releases its locks. Table locking in Firebird is two-phase locking.

Locks can also be transient, taken and released as necessary during the running of a transaction. Firebird uses transient locking extensively to manage physical access to the database.

Firebird page locks

One major difference between Firebird and most other databases is Firebird’s Classic mode. In Classic mode, many separate processes share write access to a single database file. Most databases have a single server process like SuperServer that has exclusive access to the database and coordinates physical access to the file within itself. Firebird coordinates physical access to the database through locks on database pages.

In general database theory, a transaction is a set of steps that transform the database from one consistent state to another. During that transformation, the resources held by the transaction must be protected from incompatible changes by other transactions. Two-phase locks are that protection.

In Firebird, internally, each time a transaction changes a page, it changes that page (and the physical structure of the database as a whole) from one consistent state to another. Before a transaction reads or writes a database page, it locks the page. When it finishes reading or writing, it can release the lock without compromising the physical consistency of the database file. Firebird page level locking is transient. Transactions acquire and release page locks throughout their existence. However, to prevent deadlocks, a transaction must be able to release all the page locks it holds before acquiring a lock on a new page.

The Firebird lock table

When all access to a database is done in a single process, as is the case with most database systems, locks are held in the server’s memory and the lock table is largely invisible. The server process extends or remaps the lock information as required. Firebird, however, manages its locks in a shared memory section. In SuperServer, only the server uses that shared memory area. In Classic, every database connection maps the shared memory and every connection can read and change the contents of the memory.

The lock table is a separate piece of shared memory. In SuperServer, the lock table is mapped into the server process. In Classic, each process maps the lock table. All databases on a server computer share the same lock table, except those running with the embedded server.

Registering for the Conference
Call for papers
Sponsoring the Firebird Conference
http://firebird-conference.com/

The Firebird lock manager

We often talk about the Firebird Lock Manager as if it were a separate process, but it isn’t. The lock management code is part of the engine, just like the optimizer, parser, and expression evaluator. There is a formal interface to the lock management code, which is similar to the formal interface to the distributed lock manager that was part of VAX/VMS and one of the interfaces to the Distributed Lock Manager from IBM.

The lock manager is code in the engine. In Classic, each process has its own lock manager. When a Classic process requests or releases a lock, its lock management code acquires a mutex on the shared memory section and changes the state of the lock table to reflect its request.

Conflicting lock requests

When a request is made for a lock on a resource that is already locked in an incompatible mode, one of two things happens. Either the requesting transaction gets an immediate error, or the request is


put on a list of waiting requests and the transactions that hold conflicting locks on the resource are notified of the conflicting request. Part of every lock request is the address of a routine to call when the lock interferes with another request for a lock on the same object. Depending on the resource, the routine may cause the lock to be released or require the new request to wait.

Transient locks like the locks on database pages are released immediately. When a transaction requests a page lock and that page is already locked in an incompatible mode, the transaction or transactions that hold the lock are notified and must complete what they are doing and release their locks immediately. Two-phase locks like table locks are held until the transaction that owns the lock completes. When the conflicting lock is released and the new lock is granted, the transaction that had been waiting can proceed.

Locks as interprocess communication

Lock management requires a high speed, completely reliable communication mechanism between transactions, including transactions in different processes. The actual mechanism varies from platform to platform, but for the database to work the mechanism must be fast and reliable. A fast, reliable interprocess communication mechanism can be, and is, useful for a number of purposes outside the area that’s normally considered database locking.

For example, Firebird uses the lock table to notify running transactions of the existence of a new index on a table. That’s important, since as soon as an index becomes active, every transaction must help maintain it: making new entries when it stores or modifies data, removing entries when it modifies or deletes data.

When a transaction first references a table, it gets a lock on the existence of indexes for the table. When another transaction wants to create a new index on that table, it must get an exclusive lock on the existence of indexes for the table. Its request conflicts with existing locks, and the owners of those locks are notified of the conflict. When those transactions are in a state where they can accept a new index, they release their locks, and immediately request new shared locks on the existence of indexes for the table. The transaction that wants to create the index gets its exclusive lock, creates the index, and commits, releasing its exclusive lock on the existence of indexes. As other transactions get their new locks, they check the index definitions for the table, find the new index definition, and begin maintaining the index.

Firebird locking summary

Although Firebird does not lock records, it uses locks extensively to isolate the effects of concurrent transactions. Locking and the lock table are more visible in Firebird than in other databases because the lock table is a central communication channel between the separate processes that access the database in Classic mode. In addition to controlling access to database objects like tables and data pages, the Firebird lock manager allows different transactions and processes to notify each other of changes to the state of the database, new indexes, etc.

Lock table specifics

The Firebird lock table is an in-memory data area that consists of four primary types of blocks. The lock header block describes the lock table as a whole and contains pointers to lists of other blocks and free blocks. Owner blocks describe the owners of lock requests; generally lock owners are transactions, connections, or the SuperServer. Request blocks describe the relationship between an owner and a lockable resource: whether the request is granted or pending, the mode of the request, etc. Lock blocks describe the resources being locked.

To request a lock, the owner finds the lock block, follows the linked list of requests for that lock, and adds its request at the end. If other owners must be notified of a conflicting request, they are located through the request blocks already in the list. Each owner block also has a list of its own requests. The performance critical part of locking is finding lock blocks. For that purpose, the lock table includes a hash table for access to lock blocks based on the name of the resource being locked.

A quick refresher on hashing

A hash table is an array with linked lists of duplicates and collisions hanging from it. The names of lockable objects are transformed by a function called the hash function into the offset of one of the elements of the array. When two names transform to the same offset, the result is a collision. When two locks have the same name, they are duplicates and always collide.

In the Firebird lock table, the array of the hash table contains the address of a hash block. Hash blocks contain the original name, a collision pointer, a duplicate pointer, and the address of the lock block that corresponds to the name. The collision pointer contains the


address of a hash block whose name hashed to the same value. The duplicate pointer contains the address of a hash block that has exactly the same name.

A hash table is fast when there are relatively few collisions. With no collisions, finding a lock block involves hashing the name, indexing into the array, and reading the pointer from the first hash block. Each collision adds another pointer to follow and name to check. The ratio of the size of the array to the number of locks determines the number of collisions. Unfortunately, the width of the array cannot be adjusted dynamically because the size of the array is part of the hash function. Changing the width changes the result of the function and invalidates all existing entries in the hash table.

News & Events

PHP Server

One of the more interesting recent developments in information technology has been the rise of browser based applications, often referred to by the acronym "LAMP". One key hurdle for broad use of the LAMP technology for mid-market solutions was that it was never easy to configure and manage. PHPServer changes that: it can be installed with just four clicks of the mouse and support for Firebird is compiled in. PHPServer shares all the qualities of Firebird: it is a capable, compact, easy to install and easy to manage solution.

PHPServer is a free download.

Read more at: www.fyracle.org/phpserver.html

Adjusting the lock table to improve performance

The size of the hash table is set in the Firebird configuration file. You must shut down all activity on all databases that share the hash table – normally all databases on the machine – before changes take effect. The Classic architecture uses the lock table more heavily than SuperServer. If you choose the Classic architecture, you should check the load on the hash table periodically and increase the number of hash slots if the load is high. The symptom of an overloaded hash table is sluggish performance under load.

The tool for checking the lock table is fb_lock_print, which is a command line utility in the bin directory of the Firebird installation tree. The full lock print describes the entire state of the lock table and is of limited interest. When your system is under load and behaving badly, invoke the utility with no options or switches, directing the output to a file. Open the file with an editor. You'll see output that starts something like this:

LOCK_HEADER BLOCK
Version:114, Active owner:0, Length:262144, Used:85740
Semmask:0x0, Flags: 0x0001
Enqs: 18512, Converts: 490, Rejects:0, Blocks: 0
Deadlock scans:0, Deadlocks:0, Scan interval:10
Acquires: 21048, Acquire blocks:0, Spin count:0
Mutex wait:10.3%
Hash slots:101, Hash lengths (min/avg/max):3/ 15/ 30
…

The seventh and eighth lines suggest that the hash table is too small and that it is affecting system performance. In the example, these values indicate a problem:

Mutex wait: 10.3%
Hash slots: 101, Hash lengths (min/avg/max):3/ 15/ 30

In the Classic architecture, each process makes its own changes to the lock table. Only one process is allowed to update the lock table at any instant. When updating the lock table, a process holds the table’s mutex. A non-zero mutex wait indicates that processes are blocked by the mutex and forced to wait for access to the lock table. In turn, that indicates a performance problem inside the lock table, typically because looking up a lock is slow.

If the hash lengths are more than min 5, avg 10, or max 30, you need to increase the number of hash slots. The hash function used in Firebird is quick but not terribly efficient. It works best if the number of hash slots is prime.

Change this line in the configuration file:

#LockHashSlots = 101

Uncomment the line by removing the leading #. Choose a value that is a prime number less than 2048.

LockHashSlots = 499

The change will not take effect until all connections to all databases on the server machine shut down.

If you increase the number of hash slots, you should also increase the lock table size. The second line of the lock print

Version:114, Active owner 0, Length: 262144, Used: 85740

tells you how close you are to running out of space in the lock table. The Version and Active owner are uninteresting. The length is the maximum size of the lock table. Used is the amount of space currently allocated for the various block types and hash table. If the amount used is anywhere near the total length, uncomment this parameter in the configuration file by removing the leading #, and increase the value. Change

#LockMemSize = 262144

to this

LockMemSize = 1048576

The value is in bytes. The default lock table is about a quarter of a megabyte, which is insignificant on modern computers. Changing the lock table size will not take effect until all connections to all databases on the server machine shut down.
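The checks described for the lock print header can be applied mechanically. The following is only a minimal sketch in Python, not part of Firebird: it reads the header fields discussed in the article from the sample output and applies the thresholds given there (hash lengths above min 5, avg 10 or max 30). The function names and the `near_full` cut-off of 0.8 for "anywhere near the total length" are assumptions made for illustration.

```python
import re

# Header lines copied from the sample lock print in the article.
SAMPLE = """\
LOCK_HEADER BLOCK
Version:114, Active owner:0, Length:262144, Used:85740
Semmask:0x0, Flags: 0x0001
Enqs: 18512, Converts: 490, Rejects:0, Blocks: 0
Deadlock scans:0, Deadlocks:0, Scan interval:10
Acquires: 21048, Acquire blocks:0, Spin count:0
Mutex wait:10.3%
Hash slots:101, Hash lengths (min/avg/max):3/ 15/ 30
"""


def parse_lock_header(text):
    """Pull out only the header fields that the article discusses."""
    def grab(pattern, cast):
        match = re.search(pattern, text)
        return cast(match.group(1)) if match else None

    lengths = re.search(
        r"Hash lengths \(min/avg/max\):\s*(\d+)/\s*(\d+)/\s*(\d+)", text)
    return {
        "length": grab(r"Length:\s*(\d+)", int),
        "used": grab(r"Used:\s*(\d+)", int),
        "mutex_wait": grab(r"Mutex wait:\s*([\d.]+)%", float),
        "hash_slots": grab(r"Hash slots:\s*(\d+)", int),
        "hash_lengths": tuple(map(int, lengths.groups())) if lengths else None,
    }


def is_prime(n):
    """Trial division; adequate for slot counts below 2048."""
    if n < 2:
        return False
    divisor = 2
    while divisor * divisor <= n:
        if n % divisor == 0:
            return False
        divisor += 1
    return True


def advise(header, near_full=0.8):
    """Apply the article's thresholds; near_full is an assumed cut-off."""
    advice = []
    shortest, average, longest = header["hash_lengths"]
    if shortest > 5 or average > 10 or longest > 30:
        advice.append("raise LockHashSlots to a larger prime below 2048")
    if header["used"] / header["length"] >= near_full:
        advice.append("raise LockMemSize")
    return advice


header = parse_lock_header(SAMPLE)
print(header)
print(advise(header))
```

For the sample header only the hash slot advice is produced, because the average chain length of 15 exceeds 10 while the used space is well below the table length. `is_prime` can be used to confirm a candidate value such as 499 before it is written to the configuration file.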

The InterBase and Firebird Developer Magazine 2005 ISSUE 2 Server internals

Inside BLOBs

Author: Dmitri Kouzmenko
kdv@ib-aid.com

Author: Alexey Kovyazin
ak@ib-aid.com

This is an excerpt from the book “1000 InterBase & Firebird Tips & Tricks” by Alexey Kovyazin and Dmitri Kouzmenko, which will be published in 2006.

How the server works with BLOBs

The BLOB data type is intended for storing data of variable size. Fields of BLOB type allow for storage of data that cannot be placed in fields of other types, for example, pictures, audio files, video fragments, etc.

From the point of view of the database application developer, using BLOB fields is as transparent as it is for other field types (see chapter “Data types” for details). However, there is a significant difference between the internal implementation mechanism for BLOBs and that for other data.

Unlike the mechanism used for handling other types of fields, the database engine uses a special mechanism to work with BLOB fields. This mechanism is transparently integrated with other record handling at the application level and at the same time has its own means of page organization. Let's consider in detail how the BLOB-handling mechanism works.

Initially, the basic record data on the data page includes a reference to a “BLOB record” for each non-null BLOB field, i.e. to a record-like structure or quasi-record that actually contains the BLOB data. Depending on the size of the BLOB, this BLOB-record will be one of three types.

The first type is the simplest. If the size of BLOB-field data is less than the free space on the data page, it is placed on the data page as a separate record of "BLOB" type.

The second type is used when the size of BLOB is greater than the free space on the page. In this case, references to pages containing the actual BLOB data are stored in a quasi-record. Thus, a two-level structure of BLOB-field data is used.

If the size of BLOB-field contents is very large, a three-level structure is used – a quasi-record stores references to BLOB pointer pages which contain references to the actual BLOB data.

The whole structure of BLOB storage (except for the quasi-record, of course) is implemented by one page type – the BLOB page type. Different types of BLOB-pages differ from each other in the presence of a flag (value 0 or 1) defining how the server should interpret the given page.

BLOB Page

The blob page consists of the following parts:

The special header contains the following information:

• The number of the first blob page in this blob. It is used to check that pages belong to one blob.
• A sequence number. This is important in checking the integrity of a BLOB. For a BLOB pointer page it is equal to zero.
• The length of data on a page. As a page may or may not be filled to the full extent, the length of actual data is indicated in the header.

Maximum BLOB size

As the internal structure for storing BLOB data can have only 3 levels of organization, and the size of data page is also limited, it is possible to calculate the maximum size of a BLOB.

However, this is a theoretical limit (if you want, you can calculate it), but in practice the limit will be much lower. The reason for this lower limit is that the length of BLOB-field data is determined by a variable of ULONG type, i.e. its maximal size will be equal to 4 gigabytes.

Moreover, in reality this practical limit is reduced if a UDF is to be used for BLOB processing. An internal UDF implementation assumes that the maximum BLOB size will be 2 gigabytes. So, if you plan to have very large BLOB fields in your database, you should experiment with storing data of a large size beforehand.

The segment size mystery

Developers of database applications often ask what the Segment Size parameter in the definition of a BLOB is, why we need it and whether or not we should set it when creating Blob-fields.

In reality, there is no need to set this parameter. Actually, it is a bit of a relic, used by the GPRE utility when pre-processing Embedded SQL. When working with BLOBs, GPRE declares a buffer of specified size, based on the segment size. Setting the segment size has no influence over the allocation and the size of segments when storing the BLOB on disk. It also has no influence on performance. Therefore the segment size can be safely set to any value, but it is set to 80 bytes by default.

Information for those who want to know everything: the number 80 was chosen because 80 symbols could be allocated in alphanumeric terminals.
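Returning to the three storage types and the size limits discussed under "Maximum BLOB size", the reasoning can be tried out with a toy calculation. The Python sketch below is not derived from the engine source. The per-page overhead of 32 bytes, the 4-byte page reference, and the assumption that a quasi-record can hold as many references as one page are all placeholders chosen for illustration, and the 2 gigabyte UDF limit is read here as 2^31 - 1 bytes.

```python
ULONG_MAX = 2**32 - 1   # BLOB length is held in a 32-bit unsigned variable
UDF_MAX = 2**31 - 1     # assumed reading of the "2 gigabytes" UDF limit


def capacities(page_size, page_overhead=32, pointer_size=4):
    """Largest size each multi-page storage type could hold in this toy model."""
    payload = page_size - page_overhead     # data bytes per BLOB page (assumed)
    pointers = payload // pointer_size      # page references per page (assumed)
    return {
        2: pointers * payload,              # quasi-record -> data pages
        3: pointers * pointers * payload,   # quasi-record -> pointer pages -> data
    }


def storage_type(size, free_space, page_size, **model):
    """Pick storage type 1, 2 or 3 as described in the article."""
    if size < free_space:
        return 1                            # fits on the data page itself
    caps = capacities(page_size, **model)
    for kind in (2, 3):
        if size <= caps[kind]:
            return kind
    raise ValueError("larger than the three-level structure can address")


def practical_limit(page_size, udf=False, **model):
    """Theoretical three-level limit, capped by ULONG and optionally by UDF use."""
    limit = min(capacities(page_size, **model)[3], ULONG_MAX)
    return min(limit, UDF_MAX) if udf else limit


print(storage_type(100, 1000, 4096))
print(storage_type(5000, 1000, 4096))
print(practical_limit(8192), practical_limit(8192, udf=True))
```

With an 8192-byte page the three-level structure in this model could address far more than 4 gigabytes, so the ULONG length variable becomes the binding limit, which is the point the article makes. The exact figures depend entirely on the placeholder overheads and should not be quoted as engine limits.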

The InterBase and Firebird Developer Magazine 2005 ISSUE 2 TestBed

Testing the NO SAVEPOINT feature in InterBase 7.5.1

Author: Vlad Horsun
hvlad@users.sourceforge.net

Author: Alexey Kovyazin
ak@ib-aid.com

In issue 1 we published Dmitri Yemanov’s article about the internals of savepoints. While that article was still on the desk, Borland announced the release of InterBase 7.5.1, introducing, amongst other things, a NO SAVEPOINT option for transaction management. Is this an important improvement for InterBase? We decided to give this implementation a close look and test it some, to discover what it is all about.

Testing NO SAVEPOINT

In order to analyze the problem that the new transaction option was intended to address, and to assess its real value, we performed several very simple SQL tests. The tests are all 100% reproducible, so you will be able to verify our results easily.

Database for testing

The test database file was created in InterBase 7.5.1, page size = 4096, character encoding is NONE. It contains two tables, one stored procedure and three generators.

For the test we will use only one table, with the following structure:

CREATE TABLE TEST (
    ID NUMERIC(18,2),
    NAME VARCHAR(120),
    DESCRIPTION VARCHAR(250),
    CNT INTEGER,
    QRT DOUBLE PRECISION,
    TS_CHANGE TIMESTAMP,
    TS_CREATE TIMESTAMP,
    NOTES BLOB
);

This table contains 100,000 records, which will be updated during the test. The stored procedure and generators are used to fill the table with test data. You can increase the quantity of records in the test table by calling the stored procedure to insert them:

SELECT * FROM INSERTRECS(1000);

The second table, TEST2DROP, has the same structure as the first and is filled with the same records as TEST.

INSERT INTO TEST2DROP SELECT * FROM TEST;

As you will see, the second table will be dropped immediately after connect. We are just using it as a way to increase database size cheaply: the pages occupied by the TEST2DROP table will be released for reuse after we drop the table. With this trick we avoid the impact of database file growth on the test results.

Setting test environment

All that is needed to perform this test is the trial installation package of InterBase 7.5.1, the test database and an SQL script.

Download the InterBase 7.5.1 trial version from www.borland.com. The installation process is obvious and well-described in the InterBase documentation.

You can download a backup of the test database ready to use from http://www.ibdeveloper.com/issue2/testspbackup.zip (~4 Mb) or, alternatively, an SQL script for creating it from http://www.ibdeveloper.com/issue2/testspdatabasescript.zip (~1 Kb).

If you download the database backup, the test tables are already populated with records and you can proceed straight to the section “Preparing to test”, below.

If you choose instead to use the SQL script, you will create the database yourself. Make sure you insert 100,000 records into table TEST using the INSERTRECS stored procedure and then copy all of them to TEST2DROP three or four times.

After that, perform a backup of this database and you will be in the same position as if you had downloaded the “ready for use” backup.

Hardware is not a material issue for these tests, since we are only comparing performance with and without the NO SAVEPOINT option. Our test platform was a modest computer with a 2 GHz Pentium 4, 512 MB of RAM and an 80 GB Samsung HDD.

Preparing to test

A separate copy of the test database is used for each test case, in order to eliminate any interference between statements. We create four fresh copies of the database for this purpose. Supposing all files are in a directory called C:\TEST, simply create the four test databases from your test backup file:

gbak -c -user SYSDBA -pass masterkey C:\TEST\testspbackup.gbk C:\TEST\testsp1.ib
gbak -c -user SYSDBA -pass masterkey C:\TEST\testspbackup.gbk C:\TEST\testsp2.ib
gbak -c -user SYSDBA -pass masterkey C:\TEST\testspbackup.gbk C:\TEST\testsp3.ib
gbak -c -user SYSDBA -pass masterkey C:\TEST\testspbackup.gbk C:\TEST\testsp4.ib

SQL test scripts

The first script tests a regular, one-pass update without the NO SAVEPOINT option. For convenience, the important commands are clarified with comments:

connect "C:\TEST\testsp1.ib" USER "SYSDBA" Password "masterkey"; // Connect
drop table TEST2DROP; // Drop table TEST2DROP to free database pages
commit;
select count(*) from test; // Walk down all records in TEST to place them into cache
commit;
set time on; // Enable time statistics for performed statements
set stat on; // Enable writes/fetches/memory statistics
commit;
// Perform bulk update of all records in TEST table
update TEST set ID = ID+1, QRT = QRT+1, NAME=NAME||'1', ts_change = CURRENT_TIMESTAMP;
commit;
quit;

The second script tests performance for the same UPDATE with the NO SAVEPOINT option:

connect "C:\TEST\testsp2.ib" USER "SYSDBA" Password "masterkey";
drop table TEST2DROP;
commit;
select count(*) from test;
commit;
set time on;
set stat on;
commit;
SET TRANSACTION NO SAVEPOINT; // enable NO SAVEPOINT
update TEST set ID = ID+1, QRT = QRT+1, NAME=NAME||'1', ts_change = CURRENT_TIMESTAMP;
commit;
quit;

Except for the inclusion of the SET TRANSACTION NO SAVEPOINT statement in the second script, both scripts are the same, simply testing the behavior of the engine in the case of a single bulk UPDATE.

News & Events

Fyracle 0.8.9

Janus has released a new version of Oracle-mode Firebird, Fyracle. Fyracle is a specialized build of Firebird 1.5: it adds temporary tables, hierarchical queries and a PL/SQL engine. Version 0.8.9 adds support for stored procedures written in Java. Fyracle dramatically reduces the cost of porting Oracle-based applications to Firebird. Common usage includes the Compiere open source ERP package, mid-market deployments of Developer/2000 applications and demo CD's of applications without license trouble.

Read more at: www.janus-software.com

IBAnalyst 1.9

IBSurgeon has issued a new version of IBAnalyst. Now it can better analyze InterBase or Firebird database statistics by using metadata information (in this case a connection to the database is required). IBAnalyst is a tool that assists a user to analyze in detail Firebird or InterBase database statistics and identify possible problems with database performance, maintenance and how an application interacts with the database. It graphically displays database statistics and can then automatically make intelligent suggestions about improving database performance and database maintenance.

www.ibsurgeon.com/news.html

To test sequential UPDATEs, we added several UPDATE statements; we recommend using five. The script for testing without NO SAVEPOINT would be:

connect "C:\TEST\testsp3.ib" USER "SYSDBA" Password "masterkey";
drop table TEST2DROP;
commit;
select count(*) from test;
commit;
set time on;
set stat on;
commit;
update TEST set ID = ID+1, QRT = QRT+1, NAME=NAME||'1', ts_change = CURRENT_TIMESTAMP;
update TEST set ID = ID+1, QRT = QRT+1, NAME=NAME||'1', ts_change = CURRENT_TIMESTAMP;
update TEST set ID = ID+1, QRT = QRT+1, NAME=NAME||'1', ts_change = CURRENT_TIMESTAMP;
update TEST set ID = ID+1, QRT = QRT+1, NAME=NAME||'1', ts_change = CURRENT_TIMESTAMP;
update TEST set ID = ID+1, QRT = QRT+1, NAME=NAME||'1', ts_change = CURRENT_TIMESTAMP;
commit;
quit;

You can download all the scripts and the raw results of their execution from this location:

http://www.ibdeveloper.com/issue2/testresults.zip
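The four test scripts differ only in the database file, the number of UPDATE passes, and whether SET TRANSACTION NO SAVEPOINT is issued. A small Python helper, sketched below, can generate all four variants as text. The helper name `make_script` is hypothetical, and the fourth variant (five passes with NO SAVEPOINT) is built by analogy with the scripts shown in the article rather than copied from it.

```python
UPDATE = ("update TEST set ID = ID+1, QRT = QRT+1, NAME=NAME||'1', "
          "ts_change = CURRENT_TIMESTAMP;")


def make_script(db_path, passes=1, no_savepoint=False):
    """Return the text of one isql test script (hypothetical helper)."""
    lines = [
        f'connect "{db_path}" USER "SYSDBA" Password "masterkey";',
        "drop table TEST2DROP;",        # free pages so the file need not grow
        "commit;",
        "select count(*) from test;",   # pull the records into the cache
        "commit;",
        "set time on;",
        "set stat on;",
        "commit;",
    ]
    if no_savepoint:
        lines.append("SET TRANSACTION NO SAVEPOINT;")
    lines.extend([UPDATE] * passes)     # one or several bulk passes
    lines += ["commit;", "quit;"]
    return "\n".join(lines) + "\n"


# The four cases: single pass and five passes, each with and without the option.
scripts = {
    1: make_script(r"C:\TEST\testsp1.ib"),
    2: make_script(r"C:\TEST\testsp2.ib", no_savepoint=True),
    3: make_script(r"C:\TEST\testsp3.ib", passes=5),
    4: make_script(r"C:\TEST\testsp4.ib", passes=5, no_savepoint=True),
}
print(scripts[4])
```

Each generated text can be saved to a file and run with isql's INPUT command. Each script should run against its own freshly restored copy of the database, as the article describes.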


How to perform the test

The easiest way to perform the test is to use isql's INPUT command. Suppose you have the scripts located in c:\test\scripts:

>isql
Use CONNECT or CREATE DATABASE to specify a database
SQL>input c:\test\scripts\script01.sql;

News & Events

Fast Report 3.19

The new version of the famous Fast Report is now out. FastReport® 3 is an add-in component that gives your applications the ability to generate reports quickly and efficiently. FastReport® provides all the tools you need to develop reports. All variants of FastReport® 3 contain: a visual report designer with rulers, guides and zooming, a wizard for basic types of report, export filters for html, tiff, bmp, jpg, xls and pdf outputs, dot matrix reports support, and support for most popular DB-engines. Full WYSIWYG, text rotation 0..360 degrees, memo object supports simple html-tags (font color, b, i, u, sub, sup), improved stretching (StretchMode, ShiftMode properties), access to DB fields, styles, text flow, URLs, Anchors.

TECT Software presents

New versions of nice utilities from TECT Software, www.tectsoft.net.

SPGen, Stored Procedure Generator for Firebird and InterBase, creates a standard set of stored procs for any table. Full details can be found here: http://www.tectsoft.net/Products/Firebird/FIBSPGen.aspx

And FBMail: Firebird EMail (FBMail) is a cross platform PHP utility which easily allows the sending of emails directly from within a database. This utility is specifically aimed at ISPs that support Firebird, but can easily be used on any computer which is connected to the internet. Full details can be found here: http://www.tectsoft.net/Products/Firebird/FBMail.aspx

Test results

The single bulk UPDATE

First, let’s perform the test where the single-pass bulk UPDATE is performed. This is an excerpt from the one-pass script with default transaction settings.

SQL> update TEST set ID = ID+1, QRT = QRT+1, NAME=NAME||'1', ts_change = CURRENT_TIMESTAMP;
Current memory = 10509940
Delta memory = 214016
Max memory = 10509940
Elapsed time= 4.63 sec
Buffers = 2048
Reads = 2111
Writes 375
Fetches = 974980
SQL> commit;
Current memory = 10340752
Delta memory = -169188
Max memory = 10509940
Elapsed time= 0.03 sec
Buffers = 2048
Reads = 1
Writes 942
Fetches = 3

This is an excerpt from the one-pass script with NO SAVEPOINT enabled:

SQL> SET TRANSACTION NO SAVEPOINT;
SQL> update TEST set ID = ID+1, QRT = QRT+1, NAME=NAME||'1', ts_change = CURRENT_TIMESTAMP;
Current memory = 10352244
Delta memory = 55296
Max memory = 10352244
Elapsed time= 4.72 sec
Buffers = 2048
Reads = 2102
Writes 350
Fetches = 967154
SQL> commit;
Current memory = 10344052
Delta memory = -8192
Max memory = 10352244
Elapsed time= 0.15 sec
Buffers = 2048
Reads = 1
Writes 938
Fetches = 3

Performance appears to be almost the same, whether the NO SAVEPOINT option is enabled or not.

News & Events

CopyTiger 1.00

CopyTiger, a new product from Microtec, is an advanced database replication & synchronisation tool for InterBase/Firebird, powered by their CopyCat component set (see the article about CopyCat in this issue).

For more information about CopyTiger see: www.microtec.fr/copycat/ct

SQLHammer 1.5 Enterprise Edition

The interesting developer tool, SQLHammer, now has an Enterprise Edition. SQLHammer is a high-performance tool for rapid database development and administration. It includes a common integrated development environment, registered custom components based on the Borland Package Library mechanism and an Open Tools API written in Delphi/Object Pascal.

www.metadataforge.com

Sequential bulk UPDATE statements

With the multi-pass script (sequential UPDATEs) the raw test results are rather large.

Table 1
Test results for 5 sequential UPDATEs
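The statistics that isql prints after each statement, as in the single-UPDATE excerpts above, can be compared mechanically instead of by eye. The Python sketch below parses one statistics block into a dictionary. The function name `parse_stats` is hypothetical, and the pattern tolerates the "Writes" line appearing without an equals sign, as it does in the excerpts printed here.

```python
import re

# "<name> = <number>", with "=" optional so that "Writes 375" is accepted too.
LINE = re.compile(r"^\s*([A-Za-z][A-Za-z ]*?)\s*=?\s*(-?\d+(?:\.\d+)?)")


def parse_stats(block):
    """Turn one block of isql statistics into a dict keyed by lower-case name."""
    stats = {}
    for line in block.splitlines():
        match = LINE.match(line)
        if match:
            name, value = match.groups()
            stats[name.strip().lower()] = (
                float(value) if "." in value else int(value))
    return stats


DEFAULT = """\
Current memory = 10509940
Delta memory = 214016
Max memory = 10509940
Elapsed time= 4.63 sec
Buffers = 2048
Reads = 2111
Writes 375
Fetches = 974980
"""

NO_SAVEPOINT = """\
Current memory = 10352244
Delta memory = 55296
Max memory = 10352244
Elapsed time= 4.72 sec
Buffers = 2048
Reads = 2102
Writes 350
Fetches = 967154
"""

default = parse_stats(DEFAULT)
nosp = parse_stats(NO_SAVEPOINT)
for key in ("elapsed time", "delta memory", "writes"):
    print(key, default[key], nosp[key])
```

Lines that do not start with a statistic name, such as the echoed SQL statements, are skipped. The same function can be applied to each block of the multi-pass results to tabulate time, memory and writes per pass.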

For convenience, the results are tabulated above.

The first UPDATE statement has almost the same execution time with and without the NO SAVEPOINT option. However, memory consumption is reduced fivefold when we use NO SAVEPOINT.

In the second UPDATE we start to see the difference. With default transaction settings this UPDATE takes a very long time, 47 seconds, compared to only about 7.2 seconds (7210 ms) with NO SAVEPOINT enabled. With default transaction settings we can see that memory usage is significant, whereas with NO SAVEPOINT no additional memory is used.

The third and all following UPDATE statements with default settings show equal time and memory usage values, while the number of writes keeps growing.

With NO SAVEPOINT usage we observe that time/memory values and writes growth are all small and virtually equal for each pass. The corresponding graphs are below:

Figure 1
Time to perform UPDATEs with and without NO SAVEPOINT

Figure 2
Memory usage while performing UPDATEs with and without NO SAVEPOINT

Inside the UNDO log

So what happened during the execution of the test scripts? What is the secret behind this magic that NO SAVEPOINT does? Is there any cost attached to these gains?

A few words about versions

You probably know already that InterBase is a multi-record-version database engine, meaning that each time a record is changed, a new version of that record is produced. The old version does not disappear immediately but is retained as a backversion.

In fact, the first time a backversion is written to disk, it is as a delta version, which saves disk space and memory usage by writing out only the differences between the old and the new versions. The engine can rebuild the full old version from the new version and chains of delta versions. It is only if the same record is updated more than once within the same transaction that the full backversion is written to disk.

The UNDO log concept

You may recall from Dmitri’s article how each transaction is implicitly enclosed in a "frame" of savepoints, each having its own undo log. This log stores a record of each change in sequence, ready for the possibility that a rollback will be requested. A backversion materializes whenever an UPDATE or DELETE statement is performed. The engine has to maintain all these backversions in the undo log for the relevant savepoint.

So, the Undo Log is a mechanism to manage backversions for savepoints in order to enable the associated changes to be rolled back. The process of Undo logging is quite complex and maintaining it can consume a lot of resources.

The NO SAVEPOINT option

The NO SAVEPOINT option in InterBase 7.5.1 is a workaround for the problem of performance loss during bulk updates that do multiple passes of a table. The theory is: if using the implicit savepoint management causes problems then let's kill the savepoints. No savepoints – no problem :-)

Besides ISQL, it has been surfaced as a transaction parameter in both DSQL and ESQL. At the API level, a new transaction parameter block (TPB) option isc_tpb_no_savepoint can be passed in the isc_start_transaction() function call to disable savepoints management. Syntax details for the latter flavors and for the new TPB option can be found in the 7.5.1 release notes.

The effect of specifying the NO SAVEPOINT transaction parameter is that no undo log will be created. However, along with the performance gain for sequential bulk updates, it brings some costs for transaction management.

First and most obvious is that, with NO SAVEPOINT enabled, any error handling that relies on savepoints is unavailable. Any error during a NO SAVEPOINT transaction precludes all subsequent execution and leads to rollback (see “Release Notes” for InterBase 7.5. SP1, “New in InterBase 7.5.1”, page 2-2).

Secondly, when a NO SAVEPOINT transaction is rolled back, it is marked as rolled back in the transaction inventory page. Record version garbage thereby gets stuck in the "interesting" category and prevents the OIT from advancing. Sweep is needed to advance the OIT and back out dead record versions.

Fuller details of the NO SAVEPOINT option are provided in the InterBase 7.5.1 Release Notes.

Initial situation

Consider the implementation details of the undo-log. Figure 3 shows the initial situation:

Recall that we perform this test on a freshly-restored database, so it is guaranteed that only one version exists for any record.

Figure 3
Initial situation before any UPDATE - only the one record version exists, Undo log is empty

The first UPDATE

The first UPDATE statement creates delta backversions on disk (see figure 4). Since deltas store only the differences between the old and new versions, they are quite small. This operation is fast and it is easy work for the memory manager.

It is simple to visualize the undo log when we perform the first UPDATE/DELETE statement inside the transaction – the engine just records the numbers of all affected records into the bitmap structure. If it needs to roll back the changes associated with this savepoint, it can read the stored numbers of the affected records, then walk down to the version of each and restore it from the updated version and the backversion stored on disk.
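The idea that a delta holds only the differences, and that an older version can be rebuilt from the newest version plus a chain of deltas, can be illustrated with a toy model. The Python sketch below works on dictionaries of field values. This is an assumption made for readability: the engine compares stored record images, not Python dictionaries, and the function names are hypothetical.

```python
def make_delta(old, new):
    """Keep only the fields whose old value differs from the new one."""
    return {field: value for field, value in old.items() if new[field] != value}


def rebuild(newest, deltas):
    """Walk back from the newest version by applying deltas, newest first."""
    version = dict(newest)
    for delta in deltas:
        version.update(delta)
    return version


# Three successive versions of one record; two fields never change.
v0 = {"ID": 1, "NAME": "a", "DESCRIPTION": "long unchanged text", "CNT": 7}
v1 = dict(v0, ID=2, NAME="a1")
v2 = dict(v1, ID=3, NAME="a11")

d1 = make_delta(v0, v1)   # holds the v0 values of the changed fields
d2 = make_delta(v1, v2)   # holds the v1 values of the changed fields

print(d1, d2)
print(rebuild(v2, [d2]) == v1, rebuild(v2, [d2, d1]) == v0)
```

Each delta here contains two fields out of four, which is why deltas stay small when an update touches only part of a record. Rolling back the first UPDATE corresponds to applying the stored delta to the updated version.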

The InterBase and Firebird Developer Magazine 2005 ISSUE 2 TestBed

Testing the NO SAVEPOINT

This approach is very fast and economical on memory usage. The engine The engine could write all intermediate versions to disk but there is no reason to do so.

does not waste too many resources to handle this undo log – in fact it reuses These versions are visible only to the modifying transaction and would not be used unless

the existing multi-versioning mechanism. Resource consumption is merely a rollback was required.

the memory used to store the bitmap structure with the backversion num-

bers. We don't see any significant difference here between a transaction

with the default settings and one with the NO SAVEPOINT option enabled.

Figure 5

The second UPDATE creates a new delta backversion for transaction 1,

Figure 4 erases from disk the delta version created by the first UPDATE, and copies

UPDATE1 create small delta version on disk and put record number into UNDO log the version from UPDATE1 into the Undo log.

The second UPDATE

When the second UPDATE statement is performed on the same set of records, we have a This all makes hard work for the memory manager and the CPU, as you can see from the

different situation. growth of the “max mem”\”delta mem” parameters values in the test that uses the default

transaction parameters.

Here is a good place to note that the example we are considering is the most simple situa-

tion, where only the one global (transaction level) savepoint exists. We will also look at the When NO SAVEPOINT is enabled we avoid the expense of maintaining the Undo log. As

difference in the Undo log when an explicit (or enclosed BEGIN… END) savepoint is used. a result, we see execution time, reads/writes and memory usage as low for subsequent

updates as for the first.

To preserve on-disk integrity (remember the ‘careful write’ principle ?) the engine must

compute a new delta between the old version (by transaction1) and new version (by trans- The third UPDATE

action2, update2), store it somewhere on disk, fetch the current full version (by transac-

tion2, update1), put it into the in-memory undo-log, replace it with the new full version (with The third and all subsequent UPDATEs are similar to the second UPDATE, with one excep-

backpointers set to the newly created delta) and erase the old, now superseded delta. As tion – memory usage does not grow any further.

you can see – there is much more work to do, both on disk and in memory.

©2005 www.ibdeveloper.com All right reserved www.ibdeveloper.com 20

The InterBase and Firebird Developer Magazine 2005 ISSUE 2 TestBed

Testing the NO SAVEPOINT

Original design of IB implement second UPDATE another way but sometime after IB6 Bor- BEGIN... END savepoint framework, the engine has to store a backversion for each associ-

land changed original behavior and we see what we see now.But this theme is for another ated record version in the Undo log.

article;)

For example, if we used an explicit savepoint, e.g. SAVEPOINT Savepoint1, upon perform-

Why is the delta of memory usage zero? The reason is that, beyond the second UPDATE, ing UPDATE2, we would have the situation illustrated in figure 7:

no record version is created. From here on, the update just replaces record data on disk

with the newest one and shifts the superseded version into the Undo log.

A more interesting question is why we see an increase in disk reads and writes during the

test. We would have expected that the third and following UPDATEs would do essentially

equal numbers of read and writes to write the newest versions and move the previous ones

to the undo log. However, we are actually seeing a growing count of writes. We have no

answer for it, but we would be pleased to know.

The following figure (figure 6) helps to illustrate the situation in the Undo log during the

sequential updates. When NO SAVEPOINT is enabled, the only pieces we need to perform

are replacing the version on disk and updating the original backversion. It is fast as the first

UPDATE.

Figure 7

If we have an explicit SAVEPOINT, each new record version associated with it will have a

corresponding backversion in the Undo log of that savepoint

In this case the memory consumption would be expected to increase each time an UPDATE

occurs within the explicit savepoint's scope.

Figure 6 Summary

The third UPDATE overwrites the UPDATE1 version in the Undo log with the UPDATE2 The new transaction option NO SAVEPOINT can solve the problem of excessive resource

version and its own version is written to disk as the latest one. usage growth that can occur with sequential bulk updates. It should be beneficial when

Explicit SAVEPOINT applied appropriately. Because the option can create its own problems by inhibiting the

advance of the OIT, it should be used with caution, of course. The developer will need to take

When an UPDATE statement is going to be performed within its own explicit or implicit extra care about database housekeeping, particularly with respect to timely sweeping.


The InterBase and Firebird Developer Magazine 2005 ISSUE 2 Development area

OOD and RDBMS, Part 1

Object-Oriented Development in RDBMS, Part 1

Author: Vladimir Kotlyarevsky, vlad@contek.ru

Thanks and apologies

This article is mostly a compilation of methods that are already well known, though many times it turned out that I had, on my own, reinvented a well-known and quite good wheel. I have endeavored to provide readers with links to the publications I know of that discuss the problem. However, if I have missed someone's work in the bibliography, and thus violated copyright, please drop me a message at vlad@contek.ru. I apologize beforehand for any inconvenience, and promise to add any necessary information to the article.

The sources in the bibliography are listed in the order of their appearance in my mind.

The described database structures have been simplified in order to illustrate the problems under consideration as clearly as possible, leaving out less important elements. I have tested the viability of all the methods taken from the articles, and of course I have tested all my own methods.

Mixing object-oriented programming and RDBMS use is always a compromise. I have endeavored to recommend several approaches in which this compromise is minimised for both components. I have also tried to describe both the advantages and the disadvantages of such a compromise.

I should make it clear that the object approach to database design as described is not appropriate for every task. The same is true of OOP as a whole, no matter what OOP apologists may say! :) I would recommend using it for such tasks as document storage and processing, accounting, etc.

And last, but not least, I am very thankful to Dmitry Kuzmenko, Alexander Nevsky and the other people who helped me in writing this article.

The problem statement

What is the problem?

Present-day relational databases were developed in times when the sun shone brighter, computers were slower, mathematics was in favour, and OOP had yet to see the light of day. Because of that, most RDBMSs have the following characteristics in common:

1. Everyone got used to them and felt comfortable with them.

2. They are quite fast (if you use them according to certain known standards).

3. They use SQL, which is an easy, comprehensible and time-proven data manipulation method.

4. They are based upon a strong mathematical theory.

5. They are convenient for application development - if you develop your applications just as people did 20-30 years ago.

As you see, almost all these characteristics sound good, except, probably, the last one. Today you can hardly find a software product (in almost any area) consisting of more than a few thousand lines that is written without OOP technologies. OOP languages have been used for a long time for building visual forms, i.e. in UI development. It is also quite usual to apply OOP at the business logic level, whether you implement it on a middle tier or on a client. But things fall apart when it comes to data storage issues… During the last ten years there have been several attempts to develop an object-oriented database system and, as far as I know, all those attempts were rather far from being successful. The characteristics of an OODBMS are the antithesis of those of an RDBMS. They are unusual and slow; there are no standards for data access and no underlying mathematical theory. Perhaps the OOP developer feels more comfortable with them, although I am not sure…

As a result, everyone continues using RDBMSs, combining object-oriented business logic and domain objects with relational access to the database where these objects are stored.

What do we need?

The thing we need is simple: to develop a set of standard methods that will help us to simplify the process of fitting the OO layer of business logic and a relational storage together. In other words, our task is to find out how to store objects in a relational database, and how to implement links between the objects. At the same time we want to keep all the advantages provided by relational database design and access: speed, flexibility, and the power of relational processing.

RDBMS as an object storage

First let's develop a database structure that would be suitable for accomplishing the specified task.

The OID

All objects are unique, and they must be easily identifiable. That is why all the objects stored in the database should have unique ID keys from a single set (similar to object pointers at run time). These identifiers are used to link to an object, to load an object into a run-time environment, etc. In article [1] these identifiers are called OIDs (Object IDs); in [2], UINs (Unique Identification Numbers) or "hyperkeys". Let us call them OIDs, though "hyperkey" is also quite a beautiful word, isn't it? :)

First of all, I would like to make a couple of points concerning key uniqueness. Database developers who are used to the classical approach to database design would probably be quite surprised at the idea that it sometimes makes sense to make a table key unique not only within a single table (in terms of OOP, not only within a certain class), but also within the whole database (all classes). However, such strict uniqueness offers important advantages, which will become obvious quite soon. Moreover, it often makes sense to provide complete uniqueness in the Universe, which provides considerable benefits in distributed databases and replication development. At the same time, strict uniqueness of a key within the database does not have any disadvantages. Even in the pure relational model it does not matter whether the surrogate key is unique within a single table or within the whole database.

OIDs should never have any real-world meaning. In other words, the key should be completely surrogate. I will not list here all the pros and cons of surrogate keys in comparison with natural ones: those who are interested can refer to article [4]. The simplest explanation is that everything dealing with the real world may change (including the vehicle engine number, network card number, name, passport number, social security card number, and even sex :) ). Nobody can change their date of birth - at least not their de facto date of birth - but birth dates are not unique anyway.

Remember the maxim "everything that can go bad will go bad" (consequently, everything that cannot go bad… hmm! But let's not talk about such gloomy things :) ). Changes to some OIDs would immediately lead to changes in all identifiers and links, and thus, as Mr. Scott Ambler wrote [1], could result in a "huge maintenance nightmare." As for the surrogate key, there is no need to change it, at least in terms of dependency on the changing world.

And what is more, nobody requires run-time pointers to contain any additional information about an object except for a memory address. However, there are some people who vigorously reject the use of surrogates. The most brilliant argument against surrogates I've ever heard is that "they conflict with the relational theory". This statement is quite arguable, since surrogate keys, in some sense, are much closer to that theory than natural ones.

Those who are interested in stronger evidence supporting the use of OIDs with the characteristics described above (pure surrogate, unique at least within the database) should refer to articles [1], [2], and [4].

The simplest method of OID implementation in a relational database is a field of "integer" type and a function for generating unique values of this type. In larger or distributed databases, it probably makes sense to use "int64" or a combination of several integers.

ClassId

All objects stored in a database should have a persistent analogue of RTTI, which must be immediately available through the object identifier. Then, if we know the OID of an object, keeping in mind that it is unique within the database, we can immediately figure out what type the object is. This is the first advantage of OID uniqueness. Such an objective may be accomplished by a ClassId object attribute, which refers to the list of known types, which is basically a table (let us call it "CLASSES"). This table may include any kind of information, from just a simple type name to detailed type metadata necessary for the application domain.

Name, Description, creation_date, change_date, owner

[…] links, is often a very complicated task, to put it mildly :)

Thus each object has to have mandatory attributes ("OID" and "ClassId") and desirable attributes ("Name," "Description," "creation_date," "change_date," and "owner"). Of course, when developing an application system, you can always add extra attributes specific to your particular requirements. This issue will be considered a little later.

The OBJECTS Table

Reading this far leads us to the con- […]

Description varchar(128),

Deleted smallint,

Creation_date timestamp default CURRENT_TIMESTAMP,

Change_date timestamp default CURRENT_TIMESTAMP,

Owner TOID);

The ClassId attribute (the object type identifier) refers to the OBJECTS table; that is, the type description is also an object of a certain type, which has, for example, the well-known ClassId = -100. (You need to add a description of the known OID.) Then the list of all persistent object types that are known to the system is retrieved by a query: select OID, Name, Description from OBJECTS where ClassId = -100.

Each object stored in our database will have a single record in the OBJECTS table referring to it, and hence a corresponding set of attributes. Wherever we see a link to a certain unidentified object, this fact about all objects enables us to find out easily what that object is - using a standard method.
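To make the idea of a single OBJECTS table with database-wide OIDs and self-describing types more tangible, here is a minimal runnable sketch. It is only an illustration under several assumptions, not the author's implementation: Python's built-in sqlite3 module stands in for Firebird, a one-row table OID_SEQ stands in for a generator, the columns OID, ClassId and Name (and their sizes) are inferred from the text, Owner is declared as a plain integer instead of the TOID domain, and the root row with OID = -100 as well as the helper names next_oid, new_object, class_of and list_types are hypothetical.

```python
import sqlite3

# The article's example value marking "this row is a type description".
CLASS_OF_CLASSES = -100


def create_schema(conn):
    """Create the OBJECTS table and a one-row counter used as an OID source."""
    conn.execute(
        """
        CREATE TABLE OBJECTS (
            OID           INTEGER PRIMARY KEY,  -- pure surrogate, database-wide
            ClassId       INTEGER NOT NULL,     -- OID of the row describing the type
            Name          VARCHAR(32),          -- size is an assumption
            Description   VARCHAR(128),
            Deleted       SMALLINT DEFAULT 0,
            Creation_date TIMESTAMP DEFAULT CURRENT_TIMESTAMP,
            Change_date   TIMESTAMP DEFAULT CURRENT_TIMESTAMP,
            Owner         INTEGER               -- TOID in the article
        )
        """
    )
    # Stand-in for a generator: a single counter shared by all classes.
    conn.execute("CREATE TABLE OID_SEQ (last_value INTEGER NOT NULL)")
    conn.execute("INSERT INTO OID_SEQ (last_value) VALUES (0)")
    # Assumption: the well-known type of all types is itself stored as a row.
    conn.execute(
        "INSERT INTO OBJECTS (OID, ClassId, Name, Description) VALUES (?, ?, ?, ?)",
        (CLASS_OF_CLASSES, CLASS_OF_CLASSES, "Class", "Type of all type descriptions"),
    )


def next_oid(conn):
    """Return a new OID that is unique across the whole database."""
    conn.execute("UPDATE OID_SEQ SET last_value = last_value + 1")
    return conn.execute("SELECT last_value FROM OID_SEQ").fetchone()[0]


def new_object(conn, class_id, name, description=None):
    """Store an object of the given type and return its OID."""
    oid = next_oid(conn)
    conn.execute(
        "INSERT INTO OBJECTS (OID, ClassId, Name, Description) VALUES (?, ?, ?, ?)",
        (oid, class_id, name, description),
    )
    return oid


def class_of(conn, oid):
    """Given only an OID, find the (OID, Name) of the object's type."""
    return conn.execute(
        "SELECT c.OID, c.Name FROM OBJECTS o "
        "JOIN OBJECTS c ON c.OID = o.ClassId WHERE o.OID = ?",
        (oid,),
    ).fetchone()


def list_types(conn):
    """The article's query: every persistent type known to the system."""
    return conn.execute(
        "SELECT OID, Name, Description FROM OBJECTS WHERE ClassId = ? ORDER BY OID",
        (CLASS_OF_CLASSES,),
    ).fetchall()


conn = sqlite3.connect(":memory:")
create_schema(conn)
invoice_type = new_object(conn, CLASS_OF_CLASSES, "Invoice", "Sales invoice")
customer_type = new_object(conn, CLASS_OF_CLASSES, "Customer", "Buyer of goods")
invoice = new_object(conn, invoice_type, "Invoice 2005/17")

print(class_of(conn, invoice))
print(list_types(conn))
```

Because every OID comes from the same counter, a bare OID is enough to find both the object and its type with one standard lookup, whatever class the object belongs to. In Firebird itself the counter table would more naturally be replaced by a generator, and the integer columns by the article's TOID domain.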