
arXiv:1003.1409v1 [math.OC] 6 Mar 2010

Firefly Algorithm, Stochastic Test Functions and Design Optimisation

Xin-She Yang

Department of Engineering, University of Cambridge,

Trumpington Street, Cambridge CB2 1PZ, UK

Email: xy227@cam.ac.uk

March 9, 2010

Abstract

Modern optimisation algorithms are often metaheuristic, and they are very promising in solving NP-hard optimization problems. In this paper, we show how to use the recently developed Firefly Algorithm to solve nonlinear design problems. For the standard pressure vessel design optimisation, the optimal solution found by FA is far better than the best solution obtained previously in the literature. In addition, we also propose a few new test functions with either singularity or stochastic components but with known global optimality, and thus they can be used to validate new optimisation algorithms. Possible topics for further research are also discussed.

Cite this paper as: Yang, X. S. (2010) Firefly Algorithm, Stochastic Test Functions and Design Optimisation, Int. J. Bio-Inspired Computation, Vol. 2, No. 2, pp. 78-84.

1 Introduction

Most optimization problems in engineering are nonlinear with many constraints. Consequently, finding optimal solutions to such nonlinear problems requires efficient optimisation algorithms (Deb 1995, Baeck et al 1997, Yang 2005). In general, optimisation algorithms can be classified into two main categories: deterministic and stochastic. Deterministic algorithms such as hill-climbing will produce the same set of solutions if the iterations start with the same initial guess. On the other hand, stochastic algorithms often produce different solutions even with the same initial starting point. However, the final results, though slightly different, will usually converge to the same optimal solutions within a given accuracy.

Deterministic algorithms are almost all local search algorithms, and they are quite efficient in finding local optima. However, there is a risk that such algorithms become trapped at local optima, while the global optima remain out of reach. A common practice is to introduce some stochastic component to an algorithm so that it becomes possible to jump out of such locality. In this case, algorithms become stochastic.

Stochastic algorithms often have a deterministic component and a random component. The stochastic component can take many forms, such as simple randomization by randomly sampling the search space or by random walks. Most stochastic algorithms can be considered as metaheuristic, and good examples are genetic algorithms (GA) (Holland 1975, Goldberg 1989) and particle swarm optimisation (PSO) (Kennedy and Eberhart 1995, Kennedy et al 2001). Many modern metaheuristic algorithms were developed based on swarm intelligence in nature (Kennedy and Eberhart 1995, Dorigo and Stützle 2004). New metaheuristic algorithms are being developed and are beginning to show their power and efficiency. For example, the Firefly Algorithm developed by the author shows its superiority over some traditional algorithms (Yang 2008, Yang 2009, Lukasik and Zak 2009).

The paper is organized as follows: we first briefly outline the main idea of the Firefly Algorithm in Section 2, and we then describe a few new test functions with singularity and/or randomness in Section 3. In Section 4, we use FA to find the optimal solution of a pressure vessel design problem. Finally, we discuss topics for further studies.

2 Firefly Algorithm and its Implementation

2.1 Firefly Algorithm

The Firefly Algorithm was developed by the author (Yang 2008, Yang 2009), and it is based on the idealized behaviour of the flashing characteristics of fireflies. For simplicity, we can idealize these flashing characteristics as the following three rules:

- All fireflies are unisex, so that one firefly is attracted to other fireflies regardless of their sex;

- Attractiveness is proportional to brightness; thus, for any two flashing fireflies, the less bright one will move towards the brighter one. Attractiveness and brightness both decrease as the distance between the fireflies increases. If no firefly is brighter than a particular firefly, it moves randomly;

- The brightness or light intensity of a firefly is affected or determined by the landscape of the objective function to be optimised.

For a maximization problem, the brightness can simply be proportional to the objective function. Other forms of brightness can be defined in a similar way to the fitness function in genetic algorithms or the bacterial foraging algorithm (BFA) (Gazi and Passino 2004).

In the FA, there are two important issues: the variation of light intensity and the formulation of the attractiveness. For simplicity, we can always assume that the attractiveness of a firefly is determined by its brightness or light intensity, which in turn is associated with the encoded objective function. In the simplest case for maximum optimization problems, the brightness $I$ of a firefly at a particular location $x$ can be chosen as $I(x) \propto f(x)$. However, the attractiveness $\beta$ is relative: it should be seen in the eyes of the beholder or judged by the other fireflies. Thus, it should vary with the distance $r_{ij}$ between firefly $i$ and firefly $j$. As light intensity decreases with the distance from its source, and light is also absorbed in the media, we should allow the attractiveness to vary with the degree of absorption.

In the simplest form, the light intensity $I(r)$ varies with the distance $r$ monotonically and exponentially. That is

$$ I = I_0 e^{-\gamma r}, \tag{1} $$

where $I_0$ is the original light intensity and $\gamma$ is the light absorption coefficient. As a firefly's attractiveness is proportional to the light intensity seen by adjacent fireflies, we can now define the attractiveness $\beta$ of a firefly by

$$ \beta = \beta_0 e^{-\gamma r^2}, \tag{2} $$

where $\beta_0$ is the attractiveness at $r = 0$. It is worth pointing out that the exponent $\gamma r$ can be replaced by other functions such as $\gamma r^m$ when $m > 0$. Schematically, the Firefly Algorithm (FA) can be summarised as the following pseudo code.

Firefly Algorithm

    Objective function f(x), x = (x_1, ..., x_d)^T
    Initialize a population of fireflies x_i (i = 1, 2, ..., n)
    Define light absorption coefficient γ
    while (t < MaxGeneration)
        for i = 1 : n (all n fireflies)
            for j = 1 : i (all n fireflies)
                Light intensity I_i at x_i is determined by f(x_i)
                if (I_j > I_i)
                    Move firefly i towards j in all d dimensions
                end if
                Attractiveness varies with distance r via exp[-γ r]
                Evaluate new solutions and update light intensity
            end for j
        end for i
        Rank the fireflies and find the current best
    end while
    Postprocess results and visualization

The distance between any two fireflies $i$ and $j$ at $x_i$ and $x_j$ can be the Cartesian distance $r_{ij} = \|x_i - x_j\|_2$, or the $\ell_2$-norm. For other applications such as scheduling, the distance can be a time delay or any other suitable form, not necessarily the Cartesian distance.

The movement of a firefly $i$ that is attracted to another, more attractive (brighter) firefly $j$ is determined by

$$ x_i = x_i + \beta_0 e^{-\gamma r_{ij}^2} (x_j - x_i) + \alpha \epsilon_i, \tag{3} $$

where the second term is due to the attraction, while the third term is randomization, with the vector of random variables $\epsilon_i$ being drawn from a Gaussian distribution.
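As a minimal sketch of the update in Eq. (3), the following Python snippet moves one firefly towards a brighter one. The function names `attractiveness` and `move_firefly`, the use of NumPy, and the default parameter values are illustrative assumptions, not the author's Matlab implementation.

```python
import numpy as np

def attractiveness(r, beta0=1.0, gamma=1.0):
    """Attractiveness beta(r) = beta0 * exp(-gamma * r**2), as in Eq. (2)."""
    return beta0 * np.exp(-gamma * r**2)

def move_firefly(x_i, x_j, alpha=0.2, beta0=1.0, gamma=1.0, rng=None):
    """Move firefly i towards a brighter firefly j, following Eq. (3)."""
    rng = np.random.default_rng() if rng is None else rng
    r_ij = np.linalg.norm(x_j - x_i)        # Cartesian distance between i and j
    beta = attractiveness(r_ij, beta0, gamma)
    eps = rng.standard_normal(x_i.shape)    # Gaussian random vector
    return x_i + beta * (x_j - x_i) + alpha * eps

# With alpha = 0 the step is purely attractive, which makes it easy to check.
x_i = np.array([0.0, 0.0])
x_j = np.array([1.0, 0.0])
x_new = move_firefly(x_i, x_j, alpha=0.0)
print(x_new)
```

With $\alpha = 0$ and unit distance, the firefly covers a fraction $e^{-1}$ of the gap, so the first coordinate of `x_new` is about 0.368.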

For most cases in our implementation, we can take $\beta_0 = 1$, $\alpha \in [0, 1]$, and $\gamma = 1$. In addition, if the scales vary significantly in different dimensions, such as $-10^{5}$ to $10^{5}$ in one dimension while, say, $-10^{-3}$ to $10^{-3}$ along others, it is a good idea to replace $\alpha$ by $\alpha S_k$, where the scaling parameters $S_k$ $(k = 1, ..., d)$ in the $d$ dimensions should be determined by the actual scales of the problem of interest.
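The pseudo code and parameter choices above can be put together as the following hedged Python sketch. It is not the author's implementation and rests on several assumptions: the inner loop runs over all $j$ rather than $j = 1 : i$, the random move of the brightest firefly is omitted, positions are clipped to the search box, each moved firefly is re-evaluated immediately, and the per-dimension scales $S_k$ are passed in as a hypothetical `scale` argument (defaulting to 1). The objective `sphere` is a made-up example.

```python
import numpy as np

def firefly_maximize(f, lower, upper, n=25, max_gen=20, alpha=0.2,
                     beta0=1.0, gamma=1.0, scale=None, seed=0):
    """Sketch of the firefly loop for maximising f over the box [lower, upper]."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    d = lower.size
    # S_k: per-dimension scaling of the random term (assumed 1 if not given)
    scale = np.ones(d) if scale is None else np.asarray(scale, dtype=float)
    x = lower + (upper - lower) * rng.random((n, d))   # initial population
    intensity = np.array([f(xi) for xi in x])           # brightness I_i = f(x_i)
    k = int(np.argmax(intensity))
    best_x, best_f = x[k].copy(), float(intensity[k])
    history = [best_f]                                   # best value per generation
    for _ in range(max_gen):
        for i in range(n):
            for j in range(n):
                if intensity[j] > intensity[i]:          # j is brighter than i
                    r2 = np.sum((x[j] - x[i]) ** 2)
                    beta = beta0 * np.exp(-gamma * r2)   # Eq. (2)
                    eps = rng.standard_normal(d)
                    x[i] = x[i] + beta * (x[j] - x[i]) + alpha * scale * eps
                    x[i] = np.clip(x[i], lower, upper)   # assumption: stay in box
                    intensity[i] = f(x[i])
                    if intensity[i] > best_f:
                        best_x, best_f = x[i].copy(), float(intensity[i])
        history.append(best_f)
    return best_x, best_f, history

def sphere(x):
    """Hypothetical objective with its maximum 0 at the origin."""
    return -float(np.sum(x ** 2))

best_x, best_f, history = firefly_maximize(sphere, [-5.0, -5.0], [5.0, 5.0])
print(best_x, best_f)
```

Tracking the best-so-far solution separately means the reported value can never get worse from one generation to the next, even though individual fireflies keep moving randomly.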

In essence, the parameter $\gamma$ characterizes the variation of the attractiveness, and its value is crucially important in determining the speed of convergence and how the FA behaves. In theory, $\gamma \in [0, \infty)$, but in practice, $\gamma = O(1)$ is determined by the characteristic/mean length $S_k$ of the system to be optimized. In one extreme, when $\gamma \to 0$, the attractiveness is constant, $\beta = \beta_0$. This is equivalent to saying that the light intensity does not decrease in an idealized sky. Thus, a flashing firefly can be seen anywhere in the domain, and a single (usually global) optimum can easily be reached. This corresponds to a special case of particle swarm optimization (PSO). In fact, if the inner loop for $j$ is removed and $I_j$ is replaced by the current global best $g_*$, FA essentially becomes the standard PSO, and, subsequently, the efficiency of this special case is the same as that of PSO. On the other hand, if $\gamma \to \infty$, we have $\beta(r) \to \delta(r)$, which is a Dirac $\delta$-function. This means that the attractiveness is almost zero in the sight of other fireflies, or the fireflies are short-sighted. This is equivalent to the case where the fireflies fly randomly in a very foggy region. No other fireflies can be seen, and each firefly roams in a completely random way. Therefore, this corresponds to the completely random search method. So $\gamma$ partly controls how the algorithm behaves. It is also possible to adjust $\gamma$ so that multiple optima can be found at the same time during iterations.
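The two limiting cases can be illustrated numerically with a small sketch. The function name `attractiveness` and the sample values of $\gamma$ and $r$ are illustrative assumptions; the formula is the Gaussian form of Eq. (2).

```python
import math

def attractiveness(r, beta0=1.0, gamma=1.0):
    """beta(r) = beta0 * exp(-gamma * r**2), the form used in Eq. (2)."""
    return beta0 * math.exp(-gamma * r * r)

r = 2.0
for gamma in (0.0, 1.0, 100.0):
    # gamma = 0: constant attractiveness; large gamma: fireflies barely see each other
    print(gamma, attractiveness(r, gamma=gamma))

# For any gamma > 0 the attractiveness drops to beta0 / e at r = 1 / sqrt(gamma),
# which gives gamma the role of an inverse squared length scale.
gamma = 4.0
print(attractiveness(1.0 / math.sqrt(gamma), gamma=gamma))
```

For $\gamma = 0$ the value stays at $\beta_0$ regardless of distance, while for large $\gamma$ it is numerically indistinguishable from zero except at $r = 0$, matching the PSO-like and random-search limits described above.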

2.2 Numerical Examples

From the pseudo code, it is relatively straightforward to implement the Firefly Algorithm using a popular programming language such as Matlab. We have tested it against more than a dozen test functions such as the Ackley function

$$ f(x) = -20 \exp\Big[-\frac{1}{5}\sqrt{\frac{1}{d}\sum_{i=1}^{d} x_i^2}\,\Big] - \exp\Big[\frac{1}{d}\sum_{i=1}^{d} \cos(2\pi x_i)\Big] + 20 + e, \tag{4} $$

which has a unique global minimum $f_* = 0$ at $(0, 0, ..., 0)$. From a simple parametric study, we concluded that, in our simulations, we can use the following parameter values: $\alpha = 0.2$, $\gamma = 1$, and $\beta_0 = 1$. As an example, we now use the FA to find the global maxima of the following function

$$ f(x) = \Big(\sum_{i=1}^{d} |x_i|\Big) \exp\Big(-\sum_{i=1}^{d} x_i^2\Big), \tag{5} $$

with the domain $-10 \le x_i \le 10$ for all $i = 1, 2, ..., d$, where $d$ is the number of dimensions. This function has multiple global optima. In the case of $d = 2$, we have 4 equal maxima $f_* = 1/\sqrt{e} \approx 0.6065$ at $(1/2, 1/2)$, $(1/2, -1/2)$, $(-1/2, 1/2)$ and $(-1/2, -1/2)$, and a unique global minimum at $(0, 0)$.

Figure 1: Four global maxima at $(\pm 1/2, \pm 1/2)$.
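The two functions above are easy to write down and check at their stated optima. The sketch below assumes the standard form of the Ackley function for Eq. (4) and uses the hypothetical name `four_peaks` for Eq. (5).

```python
import numpy as np

def ackley(x):
    """Ackley function, Eq. (4); global minimum 0 at the origin."""
    x = np.asarray(x, dtype=float)
    d = x.size
    return (-20.0 * np.exp(-0.2 * np.sqrt(np.sum(x ** 2) / d))
            - np.exp(np.sum(np.cos(2.0 * np.pi * x)) / d) + 20.0 + np.e)

def four_peaks(x):
    """Eq. (5): (sum |x_i|) * exp(-sum x_i^2); name is illustrative."""
    x = np.asarray(x, dtype=float)
    return np.sum(np.abs(x)) * np.exp(-np.sum(x ** 2))

print(ackley([0.0, 0.0]))                 # zero up to rounding error
for sx in (0.5, -0.5):
    for sy in (0.5, -0.5):
        print((sx, sy), four_peaks([sx, sy]))   # each equals 1/sqrt(e)
```

In two dimensions, each of the four points $(\pm 1/2, \pm 1/2)$ gives $1 \cdot e^{-1/2} \approx 0.6065$, in agreement with the value quoted in the text.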

The four peaks are shown in Fig. 1, and these global maxima can be found using the implemented Firefly Algorithm after about 500 function evaluations. This corresponds to 25 fireflies evolving for 20 generations or iterations. The initial locations of the 25 fireflies are shown in Fig. 2 and their final locations after 20 iterations are shown in Fig. 3. We can see that the Firefly Algorithm is very efficient. Recent studies have also confirmed its promising power in solving nonlinear constrained optimization tasks (Yang 2009, Lukasik and Zak 2009).

Figure 2: Initial locations of 25 fireflies.

Figure 3: Final locations after 20 iterations.

3 New Test Functions

The literature about test functions is vast, often with different collections of test functions for validating new optimisation algorithms. Test functions such as Rosenbrock's banana function and Ackley's function mentioned earlier are well known in the optimisation literature. Almost all these test functions are deterministic and smooth. In the rest of this paper, we first propose a few new test functions which have some singularity and/or stochastic components. Some of the formulated functions have stochastic components but their global optima are deterministic. Then, we will use the Firefly Algorithm to find the optimal solutions of some of these new functions.

The first test function we have designed is a multimodal nonlinear function

$$ f(x) = \Big[ e^{-\sum_{i=1}^{d} (x_i/\beta)^{2m}} - 2 e^{-\sum_{i=1}^{d} (x_i - \pi)^2} \Big] \cdot \prod_{i=1}^{d} \cos^2 x_i, \quad m = 5, \tag{6} $$

which looks like a standing-wave function with a defect (see Fig. 4). It has many local minima and the unique global minimum $f_* = -1$ at $x_* = (\pi, \pi, ..., \pi)$ for $\beta = 15$ within the domain $-20 \le x_i \le 20$ for $i = 1, 2, ..., d$. By using the Firefly Algorithm with 20 fireflies, it is easy to find the global minimum in just about 15 iterations. The results are shown in Fig. 5 and Fig. 6.
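A short sketch of this standing-wave function makes the stated minimum easy to verify. It assumes Eq. (6) reads as a bracketed difference of two exponentials, with the second centred at $\pi$, multiplied by the product of $\cos^2 x_i$; the function name `standing_wave` is hypothetical.

```python
import numpy as np

def standing_wave(x, beta=15.0, m=5):
    """Eq. (6): standing-wave function with a defect near (pi, ..., pi)."""
    x = np.asarray(x, dtype=float)
    envelope = np.exp(-np.sum((x / beta) ** (2 * m)))      # flat-topped envelope
    defect = 2.0 * np.exp(-np.sum((x - np.pi) ** 2))        # narrow well at pi
    return (envelope - defect) * np.prod(np.cos(x) ** 2)

print(standing_wave([np.pi, np.pi]))   # very close to -1
print(standing_wave([0.0, 0.0]))       # very close to +1, far from the defect
```

At $x = (\pi, \pi)$ the envelope is almost exactly 1 and the defect term is 2, so the value is close to $-1$, while away from the defect the function oscillates between 0 and about 1.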

As most test functions are smooth, the next function we have formulated is also multimodal but it has a singularity

$$ f(x) = \Big(\sum_{i=1}^{d} |x_i|\Big) \exp\Big[-\sum_{i=1}^{d} \sin(x_i^2)\Big], \tag{7} $$

which has a unique global minimum $f_* = 0$ at $x_* = (0, 0, ..., 0)$ in the domain $-2\pi \le x_i \le 2\pi$ where $i = 1, 2, ..., d$. At first look, this function has some similarity with function (5) discussed earlier. However, this function is not smooth, and its derivatives are not well defined at the optimum $(0, 0, ..., 0)$. The landscape of this forest-like function is shown in Fig. 7 and its 2D contour is displayed in Fig. 8.
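The lack of a well-defined derivative at the optimum can be seen from one-sided finite differences. The sketch below assumes Eq. (7) has the form $(\sum_i |x_i|) \exp[-\sum_i \sin(x_i^2)]$; the name `forest` and the step size `h` are illustrative choices.

```python
import numpy as np

def forest(x):
    """Eq. (7): (sum |x_i|) * exp(-sum sin(x_i^2)); minimum 0 at the origin."""
    x = np.asarray(x, dtype=float)
    return np.sum(np.abs(x)) * np.exp(-np.sum(np.sin(x ** 2)))

h = 1e-4
f0 = forest([0.0, 0.0])
slope_right = (forest([h, 0.0]) - f0) / h       # approaches +1
slope_left = (forest([-h, 0.0]) - f0) / (-h)    # approaches -1
print(f0, slope_right, slope_left)
```

The two one-sided slopes along $x_1$ have opposite signs, so no gradient exists at the origin, which is why gradient-based methods cannot rely on a stationarity condition there.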

Almost all existing test functions are deterministic. Now let us design a test function with stochastic components

$$ f(x, y) = -5 e^{-\beta[(x - \pi)^2 + (y - \pi)^2]} - \sum_{j=1}^{K} \sum_{i=1}^{K} \epsilon_{ij} e^{-\alpha[(x - i)^2 + (y - j)^2]}, \tag{8} $$

where $\alpha, \beta > 0$ are scaling parameters, which can often be taken as $\alpha = \beta = 1$. Here the random variables $\epsilon_{ij}$ $(i, j = 1, ..., K)$ obey a uniform distribution $\epsilon_{ij} \sim \mathrm{Unif}[0, 1]$. The domain is $0 \le x, y \le K$ and $K = 10$. This function has $K^2$ local valleys at grid locations and the fixed global minimum at $x_* = (\pi, \pi)$. It is worth pointing out that the minimum $f_{\min}$ is random, rather than a fixed value; it may vary from $-(K^2 + 5)$ to $-5$, depending on $\alpha$ and $\beta$ as well as the random numbers drawn.

For stochastic test functions, most deterministic algorithms such as hill-climbing would simply fail because the landscape is constantly changing. However, metaheuristic algorithms could still be robust in dealing with such functions. The landscape of a realization of this stochastic function is shown in Fig. 9.

Using the Firefly Algorithm, we can find the global minimum in about 15 iterations for $n = 20$ fireflies. That is, the total number of function evaluations is just 300. This is indeed very efficient and robust. The initial locations of the fireflies are shown in Fig. 10 and the final results are shown in Fig. 11.

Furthermore, we can also design a relatively generic function which is both stochastic and non-smooth

$$ f(x) = \sum_{i=1}^{d} \epsilon_i |x_i|, \quad -5 \le x_i \le 5, \tag{9} $$

where $\epsilon_i$ $(i = 1, 2, ..., d)$ are random variables which are uniformly distributed in $[0, 1]$. That is, $\epsilon_i \sim \mathrm{Unif}[0, 1]$. This function has the unique minimum $f_* = 0$ at $x_* = (0, 0, ..., 0)$ which is also singular.
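A sketch of this last function is given below, assuming Eq. (9) is read as a randomly weighted sum of absolute values, $\sum_i \epsilon_i |x_i|$, which is the reading consistent with a singular minimum at the origin. The name `stochastic_abs` and the `rng` argument are hypothetical.

```python
import numpy as np

def stochastic_abs(x, rng=None):
    """Eq. (9) read as sum_i eps_i * |x_i| with eps_i ~ Unif[0, 1] per call."""
    rng = np.random.default_rng() if rng is None else rng
    x = np.asarray(x, dtype=float)
    eps = rng.random(x.size)            # fresh random weights on every call
    return np.sum(eps * np.abs(x))

rng = np.random.default_rng(0)
print(stochastic_abs([0.0, 0.0, 0.0], rng=rng))    # always exactly 0
print(stochastic_abs([1.0, -2.0, 3.0], rng=rng))   # random, between 0 and 6
```

Whatever weights are drawn, the value at the origin is exactly zero and no point can do better, so the location of the optimum is deterministic even though the landscape is not.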

We found that for most problems $n = 15$ to $50$ would be sufficient. For tougher problems, larger $n$ can be used, though excessively large $n$ should not be used unless there is no better alternative, as the computational cost grows with $n$.

Figure 4: The standing wave function for two independent variables with the global minimum $f_* = -1$ at $(\pi, \pi)$.

Figure 5: The initial locations of 20 fireflies.

Figure 6: Final locations after 15 iterations.

Figure 7: The landscape of function (7).

Figure 8: Contour of function (7).

Figure 9: The 2D stochastic function for $K = 10$ with a unique global minimum at $(\pi, \pi)$, though the value of this global minimum is somewhat stochastic.

Figure 10: The initial locations of 20 fireflies.

Figure 11: The final locations of 20 fireflies after 15 iterations, converging into $(\pi, \pi)$.

4 Engineering Applications

Now we can apply the Firefly Algorithm to carry out various design optimisation tasks. In principle, any optimization problem that can be solved by genetic algorithms and particle swarm optimisation can also be solved by the Firefly Algorithm. To demonstrate its effectiveness in real-world optimisation in a simple way, we use the FA to find the optimal solution of the standard but quite difficult pressure vessel design optimisation problem.

Pressure vessels are literally everywhere, from champagne bottles to gas tanks. For a given volume and working pressure, the basic aim of designing a cylindrical vessel is to minimize the total cost. Typically, the design variables are the thickness $d_1$ of the head, the thickness $d_2$ of the body, the inner radius $r$, and the length $L$ of the cylindrical section (Coello 2000, Cagnina et al 2008). This is a well-known test problem for optimization and it can be written as

$$ \text{minimize } f(x) = 0.6224 d_1 r L + 1.7781 d_2 r^2 + 3.1661 d_1^2 L + 19.84 d_1^2 r, \tag{10} $$

subject to the following constraints

$$ \begin{aligned} g_1(x) &= -d_1 + 0.0193 r \le 0, \\ g_2(x) &= -d_2 + 0.00954 r \le 0, \\ g_3(x) &= -\pi r^2 L - \frac{4\pi}{3} r^3 + 1296000 \le 0, \\ g_4(x) &= L - 240 \le 0. \end{aligned} \tag{11} $$

The simple bounds are

$$ 0.0625 \le d_1, d_2 \le 99 \times 0.0625, \tag{12} $$

and

$$ 10.0 \le r, L \le 200.0. \tag{13} $$

Recently, Cagnina et al (2008) used an efficient particle swarm optimiser to solve this problem and they found the best solution

$$ f_* \approx 6059.714, \tag{14} $$

at

$$ x_* \approx (0.8125, 0.4375, 42.0984, 176.6366). \tag{15} $$

This means the lowest price is about $6059.71.

Using the Firefly Algorithm, we have found an even better solution with 40 fireflies after 20 iterations, and we have obtained

$$ x_* \approx (0.7782, 0.3846, 40.3196, 200.0000)^T, \tag{16} $$

with

$$ f_{\min} \approx 5885.33, \tag{17} $$

which is significantly lower or cheaper than the solution $f_* \approx 6059.714$ obtained by Cagnina et al (2008).
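Both reported designs can be checked directly against the cost function (10) and the constraints (11). The sketch below is only an evaluation aid under the variable ordering $(d_1, d_2, r, L)$; the function names `cost` and `constraints` are hypothetical, and nothing here reflects how constraints were handled inside the optimisers.

```python
import math

def cost(d1, d2, r, L):
    """Objective of Eq. (10)."""
    return (0.6224 * d1 * r * L + 1.7781 * d2 * r ** 2
            + 3.1661 * d1 ** 2 * L + 19.84 * d1 ** 2 * r)

def constraints(d1, d2, r, L):
    """Left-hand sides g_1..g_4 of Eq. (11); feasible when all are <= 0."""
    return (
        -d1 + 0.0193 * r,
        -d2 + 0.00954 * r,
        -math.pi * r ** 2 * L - 4.0 / 3.0 * math.pi * r ** 3 + 1296000.0,
        L - 240.0,
    )

pso_design = (0.8125, 0.4375, 42.0984, 176.6366)   # Cagnina et al (2008)
fa_design = (0.7782, 0.3846, 40.3196, 200.0)       # Eq. (16)

for name, design in (("PSO", pso_design), ("FA", fa_design)):
    print(name, round(cost(*design), 2), constraints(*design))
```

Evaluating the rounded designs reproduces the two quoted costs to within rounding. Because the variables are given to four decimals, constraints that are active at the optimum may evaluate to values very slightly above zero.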

This clearly shows how efficient and effective the Firefly Algorithm can be. Obviously, further applications are needed to see how it behaves when solving various tough engineering optimisation problems.

5 Conclusions

We have successfully used the Firefly Algorithm to carry out nonlinear design optimisation. We first validated the algorithm using some standard test functions. After designing some new test functions with singularity and stochastic components, we then used the FA to solve these unconstrained stochastic functions. We also applied it to find a better global solution to the pressure vessel design optimisation. The optimisation results imply that the Firefly Algorithm is potentially more powerful than other existing algorithms such as particle swarm optimisation.

The convergence analysis of metaheuristic algorithms still requires some theoretical framework. At the moment, there is no general framework for such analysis. Fortunately, various studies have started to propose feasible measures for comparing algorithm performance. For example, Shilane et al (2008) suggested a framework for evaluating the statistical performance of evolutionary algorithms. Obviously, more comparison studies are needed to identify the strengths and weaknesses of current metaheuristic algorithms. Ultimately, even better optimisation algorithms may emerge.

References

[1] Baeck, T., Fogel, D. B., Michalewicz, Z. (1997) Handbook of Evolutionary Computation, Taylor & Francis.

[2] Cagnina, L. C., Esquivel, S. C., Coello, C. A. (2008) Solving engineering optimization problems with the simple constrained particle swarm optimizer, Informatica, 32, 319-326.

[3] Coello, C. A. (2000) Use of a self-adaptive penalty approach for engineering optimization problems, Computers in Industry, 41, 113-127.

[4] Deb, K. (1995) Optimisation for Engineering Design, Prentice-Hall, New Delhi.

[5] Dorigo, M. and Stützle, T. (2004) Ant Colony Optimization, MIT Press.

[6] Gazi, K., and Passino, K. M. (2004) Stability analysis of social foraging swarms, IEEE Trans. Sys. Man. Cyber. Part B - Cybernetics, 34, 539-557.

[7] Goldberg, D. E. (1989) Genetic Algorithms in Search, Optimisation and Machine Learning, Reading, Mass.: Addison Wesley.

[8] Holland, J. H. (1975) Adaptation in Natural and Artificial Systems, University of Michigan Press, Ann Arbor.

[9] Kennedy, J. and Eberhart, R. C. (1995) Particle swarm optimization, Proc. of IEEE International Conference on Neural Networks, Piscataway, NJ, pp. 1942-1948.

[10] Kennedy, J., Eberhart, R., Shi, Y. (2001) Swarm Intelligence, Academic Press.

[11] Lukasik, S. and Zak, S. (2009) Firefly algorithm for continuous constrained optimization tasks, ICCCI 2009, Lecture Notes in Artificial Intelligence (Eds. N. T. Nguyen, R. Kowalczyk, S. M. Chen), 5796, pp. 97-100.

[12] Shilane, D., Martikainen, J., Dudoit, S., Ovaska, S. J. (2008) A general framework for statistical performance comparison of evolutionary computation algorithms, Information Sciences: an Int. Journal, 178, 2870-2879.

[13] Yang, X. S. (2005) Biology-derived algorithms in engineering optimization, in Handbook of Bioinspired Algorithms and Applications (Eds. S. Olariu & A. Y. Zomaya), Chapman & Hall / CRC.

[14] Yang, X. S. (2008) Nature-Inspired Metaheuristic Algorithms, Luniver Press, UK.

[15] Yang, X. S. (2009) Firefly algorithms for multimodal optimization, in: Stochastic Algorithms: Foundations and Applications (Eds. O. Watanabe and T. Zeugmann), SAGA 2009, Lecture Notes in Computer Science, 5792, Springer-Verlag, Berlin, pp. 169-178.


- Presentation 13Dokument12 SeitenPresentation 13Parmeshwar KhendkeNoch keine Bewertungen

- Table of Window Function Details - VRUDokument29 SeitenTable of Window Function Details - VRUVivek HanchateNoch keine Bewertungen

- Quantitative Analysis Forl Decision MakingDokument4 SeitenQuantitative Analysis Forl Decision MakingYG DENoch keine Bewertungen

- Assignment ProblemDokument26 SeitenAssignment ProblemShalini Reddy BNoch keine Bewertungen

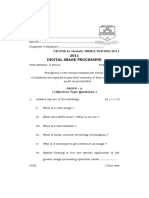
