Beruflich Dokumente

Kultur Dokumente

Neural Networks (Review) : Piero P. Bonissone GE Corporate Research & Development

Hochgeladen von

vani_V_prakashOriginaltitel

Copyright

Verfügbare Formate

Dieses Dokument teilen

Dokument teilen oder einbetten

Stufen Sie dieses Dokument als nützlich ein?

Sind diese Inhalte unangemessen?

Dieses Dokument meldenCopyright:

Verfügbare Formate

Neural Networks (Review) : Piero P. Bonissone GE Corporate Research & Development

Hochgeladen von

vani_V_prakashCopyright:

Verfügbare Formate

Soft Computing: Neural Networks

1

Neural Networks

(Review)

Piero P. Bonissone

GE Corporate Research & Development

Bonissone@crd.ge.com

(Adapted from Roger Jang)

Soft Computing: Neural Networks

2

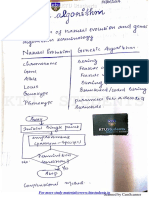

Outline

Introduction

Classifications

Amount of supervision

Architectures

Output Types

Node Types

Connection Weights

Reflections

Soft Computing: Neural Networks

3

Neural Nets: Classification

Supervised Learning

Multilayer perceptrons

Radial basis function networks

Modular neural networks

LVQ (learning vector quantization)

Unsupervised Learning

Competitive learning networks

Kohonen self-organizing networks

ART (adaptive resonant theory)

Others

Hopfield networks

Soft Computing: Neural Networks

4

Supervised Neural Networks

Requirement:

known input-output relations

input pattern output

Soft Computing: Neural Networks

5

Perceptrons

-Rosenblatt: 1950s

-Input patterns represented is binary

-single layer network can be trained easily

-output o is computed by

where

w

i

is a (modifiable) weight

x

i

is the input signal

is some threshold

f(

.

) is the activation function

o f w x

i i

i

n

_

,

1

f x x ( ) sgn( )

'

1

0

if x > 0

otherwise

Soft Computing: Neural Networks

6

Single-Layer Perceptrons

Network architecture

x1

x2

x3

w1

w2

w3

w0

y = signum(wi xi + w0)

Soft Computing: Neural Networks

7

Single-Layer Perceptron

Example: Gender classification

h

v

w1

w2

w0

Network Arch.

y = signum(hw1+vw2+w0)

-1 if female

1 if male

=

y

Training data

h (hair length)

v

(

v

o

i

c

e

f

r

e

q

.

)

Soft Computing: Neural Networks

8

Perceptron

Learning:

select an input vector

if the response is incorrect, modify all weights

where

t

i

is a target output

is the learning rate

If there exists a set of weights, then the method is

guaranteed to converge

w t x

i i i

Soft Computing: Neural Networks

9

XOR

Minsky and Papert reported a severe shortcoming

of single layer perceptrons, the XOR problem

not linearly separable

which (together with a lack or proper training

techniques for multi-layer perceptrons) all but killed

interest in neural nets in the 70s and early 80s.

x1 x2 output

0 0 0

0 1 1

1 0 1

1 1 0

x

x O

O 1

1 0

0

Soft Computing: Neural Networks

10

ADALINE

Single layer network (proposed by Widrow and Hoff)

Output is a weighted linear combination of weights

The error is described as

(for pattern p)

where

t

p

is the target output

o

p

is the actual output

o w x w

i i

i

n

0

1

( )

E t o

p p p

2

Soft Computing: Neural Networks

11

ADALINE

To decrease the error, the derivative with

respect to the weights is taken

The delta rule is:

( )

p i p p i

w t o x

( )

E

w

t o x

p

i

p p i

2

Soft Computing: Neural Networks

12

Multi-Layer Perceptrons

-Recall the output

-and the squared error measure

which is approximated by

-and the activation function

then the learning rule for each node can be derived

using the chain rule...

o f w x

i i

i

n

_

,

1

( )

E t o

p p p

2

( )

p

k

p

k

p

k

k

n

E t o

2

1

( )

f x

e

x

+

1

1

Soft Computing: Neural Networks

13

Multilayer Perceptrons (MLPs)

Learning rule:

Steepest descent (Backprop)

Conjugate gradient method

All optim. methods using first derivative

Derivative-free optim.

Network architecture

x1

x2

y1

y2

hyperbolic tangent

or logistic function

Soft Computing: Neural Networks

14

Backpropagation

to propagate the error back through a multi-

layer perceptron.

w

E

w

ki

p

ki

p

Soft Computing: Neural Networks

15

Improvements

Momentum term

smoothes weight updating

can speed learning up

w E w

w prev

+

Soft Computing: Neural Networks

16

MLP Decision Boundaries

A B

B A

A

B

XOR Interwined General

1-layer: Half planes

A B

B A

A

B

2-layer: Convex

A B

B A

A

B

3-layer: Arbitrary

Das könnte Ihnen auch gefallen

- The Beginner's Guide To SynthsDokument11 SeitenThe Beginner's Guide To SynthsAnda75% (4)

- Hard Disk Formatting and CapacityDokument3 SeitenHard Disk Formatting and CapacityVinayak Odanavar0% (1)

- Why Rife Was Right and Hoyland Was Wrong and What To Do About ItDokument4 SeitenWhy Rife Was Right and Hoyland Was Wrong and What To Do About ItHayley As Allegedly-Called Yendell100% (1)

- Artificial Neural NetworkDokument20 SeitenArtificial Neural NetworkSibabrata Choudhury100% (2)

- ITP For Pipeline (Sampel)Dokument5 SeitenITP For Pipeline (Sampel)Reza RkndNoch keine Bewertungen

- Charotar University of Science and Technology Faculty of Technology and EngineeringDokument10 SeitenCharotar University of Science and Technology Faculty of Technology and EngineeringSmruthi SuvarnaNoch keine Bewertungen

- Concentric-Valves-API-609-A (Inglés)Dokument12 SeitenConcentric-Valves-API-609-A (Inglés)whiskazoo100% (1)

- Caution!: Portable Digital Color Doppler Ultrasound SystemDokument177 SeitenCaution!: Portable Digital Color Doppler Ultrasound SystemDaniel Galindo100% (1)

- Advanced English Communication Skills LaDokument5 SeitenAdvanced English Communication Skills LaMadjid MouffokiNoch keine Bewertungen

- Pattern Classification SlideDokument45 SeitenPattern Classification SlideTiến QuảngNoch keine Bewertungen

- Networks of Artificial Neurons Single Layer PerceptronsDokument16 SeitenNetworks of Artificial Neurons Single Layer PerceptronsSurenderMalanNoch keine Bewertungen

- 3D - Quick Start - OptitexHelpEnDokument26 Seiten3D - Quick Start - OptitexHelpEnpanzon_villaNoch keine Bewertungen

- 6802988C45 ADokument26 Seiten6802988C45 AJose Luis Pardo FigueroaNoch keine Bewertungen

- Artificial Neural Networks: System That Can Acquire, Store, and Utilize Experiential KnowledgeDokument40 SeitenArtificial Neural Networks: System That Can Acquire, Store, and Utilize Experiential KnowledgeKiran Moy Mandal100% (1)

- PL54 PeugeotDokument3 SeitenPL54 Peugeotbump4uNoch keine Bewertungen

- Bio Inspired Computing: Fundamentals and Applications for Biological Inspiration in the Digital WorldVon EverandBio Inspired Computing: Fundamentals and Applications for Biological Inspiration in the Digital WorldNoch keine Bewertungen

- Chapter 9Dokument9 SeitenChapter 9Raja ManikamNoch keine Bewertungen

- Multi-Dimensional Neural Networks: Unified Theory: Garimella RamamurthyDokument22 SeitenMulti-Dimensional Neural Networks: Unified Theory: Garimella RamamurthyvivekolaNoch keine Bewertungen

- Classification by Back PropagationDokument20 SeitenClassification by Back PropagationShrunkhala Wankhede BadwaikNoch keine Bewertungen

- CNN and Gan: Introduction ToDokument58 SeitenCNN and Gan: Introduction ToGopiNath VelivelaNoch keine Bewertungen

- Unit 1Dokument61 SeitenUnit 1GowthamUcekNoch keine Bewertungen

- ECSE484 Intro v2Dokument67 SeitenECSE484 Intro v2Zaid SulaimanNoch keine Bewertungen

- Part7.2 Artificial Neural NetworksDokument51 SeitenPart7.2 Artificial Neural NetworksHarris Punki MwangiNoch keine Bewertungen

- Neural Networks Backpropagation Algorithm: COMP4302/COMP5322, Lecture 4, 5Dokument11 SeitenNeural Networks Backpropagation Algorithm: COMP4302/COMP5322, Lecture 4, 5Martin GarciaNoch keine Bewertungen

- Assign 1 Soft CompDokument12 SeitenAssign 1 Soft Compsharmasunishka30Noch keine Bewertungen

- 17 C - Artificial Neural Networks - 1Dokument40 Seiten17 C - Artificial Neural Networks - 1Pratik RajNoch keine Bewertungen

- Neural - NetworksDokument47 SeitenNeural - NetworkshowgibaaNoch keine Bewertungen

- Lec7 NN BackPropagation1Dokument55 SeitenLec7 NN BackPropagation1tejsharma815Noch keine Bewertungen

- Artificial Neural Network (ANN) : Duration: 8 Hrs OutlineDokument61 SeitenArtificial Neural Network (ANN) : Duration: 8 Hrs Outlineanhtu9_910280373Noch keine Bewertungen

- CC511 Week 5 - 6 - NN - BPDokument62 SeitenCC511 Week 5 - 6 - NN - BPmohamed sherifNoch keine Bewertungen

- Pattern Classification of Back-Propagation Algorithm Using Exclusive Connecting NetworkDokument5 SeitenPattern Classification of Back-Propagation Algorithm Using Exclusive Connecting NetworkEkin RafiaiNoch keine Bewertungen

- Machine LearningDokument83 SeitenMachine LearningChris D'SilvaNoch keine Bewertungen

- Artificial Neural NetworksDokument50 SeitenArtificial Neural NetworksRiyujamalNoch keine Bewertungen

- Artificial Neural NetworkDokument20 SeitenArtificial Neural NetworkYash PatelNoch keine Bewertungen

- Sesi#2 - WJ - Artificial Neural NetworkDokument69 SeitenSesi#2 - WJ - Artificial Neural NetworkDwiki KurniaNoch keine Bewertungen

- Neural NetworksDokument45 SeitenNeural NetworksDiễm Quỳnh TrầnNoch keine Bewertungen

- Unit 6 Application of AIDokument91 SeitenUnit 6 Application of AIKavi Raj AwasthiNoch keine Bewertungen

- Institute For Advanced Management Systems Research Department of Information Technologies Abo Akademi UniversityDokument41 SeitenInstitute For Advanced Management Systems Research Department of Information Technologies Abo Akademi UniversityKarthikeyanNoch keine Bewertungen

- Machine Learning: Lecture 4: Artificial Neural Networks (Based On Chapter 4 of Mitchell T.., Machine Learning, 1997)Dokument14 SeitenMachine Learning: Lecture 4: Artificial Neural Networks (Based On Chapter 4 of Mitchell T.., Machine Learning, 1997)harutyunNoch keine Bewertungen

- Ann4-3s.pdf 7oct PDFDokument21 SeitenAnn4-3s.pdf 7oct PDFveenaNoch keine Bewertungen

- Back PropagationDokument27 SeitenBack PropagationShahbaz Ali Khan100% (1)

- Lecture 25 - Artificial Neural NetworksDokument42 SeitenLecture 25 - Artificial Neural Networksbscs-20f-0009Noch keine Bewertungen

- ML NeuralNetwork DeepLearningDokument74 SeitenML NeuralNetwork DeepLearningadminNoch keine Bewertungen

- ANN PG Module1Dokument75 SeitenANN PG Module1Sreerag Kunnathu SugathanNoch keine Bewertungen

- Training Neural Networks With GA Hybrid AlgorithmsDokument12 SeitenTraining Neural Networks With GA Hybrid Algorithmsjkl316Noch keine Bewertungen

- Neural NetworksDokument37 SeitenNeural NetworksOmiarNoch keine Bewertungen

- A Presentation On: By: EdutechlearnersDokument33 SeitenA Presentation On: By: EdutechlearnersshardapatelNoch keine Bewertungen

- Introduction To ANNsDokument31 SeitenIntroduction To ANNsanjoomNoch keine Bewertungen

- Matlab FileDokument16 SeitenMatlab FileAvdesh Pratap Singh GautamNoch keine Bewertungen

- (w3) 484 12Dokument27 Seiten(w3) 484 12U2005336 STUDENTNoch keine Bewertungen

- TO Artificial Neural NetworksDokument22 SeitenTO Artificial Neural NetworksandresNoch keine Bewertungen

- 19 - Introduction To Neural NetworksDokument7 Seiten19 - Introduction To Neural NetworksRugalNoch keine Bewertungen

- Hardware Implementation of A MLP Network With On-Chip LearningDokument6 SeitenHardware Implementation of A MLP Network With On-Chip LearningYuukiaNoch keine Bewertungen

- Neural NetworksDokument22 SeitenNeural NetworksProgramming LifeNoch keine Bewertungen

- Seminar AnnDokument27 SeitenSeminar AnnShifa ThasneemNoch keine Bewertungen

- Lecture 10 Neural NetworkDokument34 SeitenLecture 10 Neural NetworkMohsin IqbalNoch keine Bewertungen

- Unit II Supervised IIDokument16 SeitenUnit II Supervised IIindiraNoch keine Bewertungen

- 4.2 AnnDokument26 Seiten4.2 AnnMatrix BotNoch keine Bewertungen

- A Systolic Array Exploiting The Parallelisms of Artificial Neural Inherent NetworksDokument15 SeitenA Systolic Array Exploiting The Parallelisms of Artificial Neural Inherent NetworksctorreshhNoch keine Bewertungen

- Artificial Neural NetworksDokument34 SeitenArtificial Neural NetworksAYESHA SHAZNoch keine Bewertungen

- Multi Layer PerceptronDokument62 SeitenMulti Layer PerceptronKishan Kumar GuptaNoch keine Bewertungen

- D.Y. Patil College of Engineering, Akurdi Department of Electronics & Telecommunication EngineeringDokument41 SeitenD.Y. Patil College of Engineering, Akurdi Department of Electronics & Telecommunication EngineeringP SNoch keine Bewertungen

- Unit 2 - Soft Computing - WWW - Rgpvnotes.inDokument20 SeitenUnit 2 - Soft Computing - WWW - Rgpvnotes.inRozy VadgamaNoch keine Bewertungen

- Non-Linear ClassifiersDokument19 SeitenNon-Linear ClassifiersPooja PatwariNoch keine Bewertungen

- Interconnection Networks: Crossbar Switch, Which Can Simultaneously Connect Any Set ofDokument11 SeitenInterconnection Networks: Crossbar Switch, Which Can Simultaneously Connect Any Set ofgk_gbuNoch keine Bewertungen

- UNIT II Basic On Neural NetworksDokument36 SeitenUNIT II Basic On Neural NetworksPoralla priyankaNoch keine Bewertungen

- ML W5 Pla NNDokument37 SeitenML W5 Pla NNBùi Trọng HiếuNoch keine Bewertungen

- What Is A Neural Network?Dokument26 SeitenWhat Is A Neural Network?Angel Pm100% (1)

- Feedforward Neural Networks: Fundamentals and Applications for The Architecture of Thinking Machines and Neural WebsVon EverandFeedforward Neural Networks: Fundamentals and Applications for The Architecture of Thinking Machines and Neural WebsNoch keine Bewertungen

- A Est200-Dec2020Dokument2 SeitenA Est200-Dec2020vani_V_prakashNoch keine Bewertungen

- Pre ProcessingDokument60 SeitenPre Processingvani_V_prakashNoch keine Bewertungen

- System Software - 5 - KQB KtuQbankDokument15 SeitenSystem Software - 5 - KQB KtuQbankvani_V_prakashNoch keine Bewertungen

- DMWH M1Dokument25 SeitenDMWH M1vani_V_prakashNoch keine Bewertungen

- Module 1Dokument34 SeitenModule 1vani_V_prakashNoch keine Bewertungen

- Module 5Dokument24 SeitenModule 5vani_V_prakashNoch keine Bewertungen

- Module 5 Remaining NotesDokument15 SeitenModule 5 Remaining Notesvani_V_prakashNoch keine Bewertungen

- Module 4Dokument20 SeitenModule 4vani_V_prakashNoch keine Bewertungen

- Design CommunicationDokument42 SeitenDesign Communicationvani_V_prakashNoch keine Bewertungen

- Ktu Studnts: For More Study Materials WWW - Ktustudents.inDokument165 SeitenKtu Studnts: For More Study Materials WWW - Ktustudents.invani_V_prakashNoch keine Bewertungen

- Soft Computing EbookDokument11 SeitenSoft Computing Ebookvani_V_prakashNoch keine Bewertungen

- Counterpropagation NetworksDokument6 SeitenCounterpropagation Networksvani_V_prakashNoch keine Bewertungen

- Object Design: Module 4Dokument18 SeitenObject Design: Module 4vani_V_prakashNoch keine Bewertungen

- Phase ShifterDokument7 SeitenPhase ShifterNumanAbdullahNoch keine Bewertungen

- Coomaraswamy, SarpabandhaDokument3 SeitenCoomaraswamy, SarpabandhakamakarmaNoch keine Bewertungen

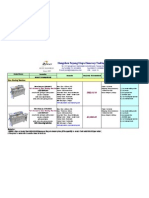

- Quotation For Blue Star Printek From Boway2010 (1) .09.04Dokument1 SeiteQuotation For Blue Star Printek From Boway2010 (1) .09.04Arvin Kumar GargNoch keine Bewertungen

- Stock Market Lesson PlanDokument4 SeitenStock Market Lesson PlanWilliam BaileyNoch keine Bewertungen

- Version 2 Production Area Ground Floor + 1st Floor Samil EgyptDokument1 SeiteVersion 2 Production Area Ground Floor + 1st Floor Samil EgyptAbdulazeez Omer AlmadehNoch keine Bewertungen

- DELTA - IA-HMI - Danfoss VLT 2800 - FC Protocol - CM - EN - 20111122Dokument4 SeitenDELTA - IA-HMI - Danfoss VLT 2800 - FC Protocol - CM - EN - 20111122Ronnie Ayala SandovalNoch keine Bewertungen

- Introduction To Ada Solo Project: Robert Rostkowski CS 460 Computer Security Fall 2008Dokument20 SeitenIntroduction To Ada Solo Project: Robert Rostkowski CS 460 Computer Security Fall 2008anilkumar18Noch keine Bewertungen

- Unist MQL Article - ShopCleansUpAct CTEDokument2 SeitenUnist MQL Article - ShopCleansUpAct CTEMann Sales & MarketingNoch keine Bewertungen

- DLS RSeriesManual PDFDokument9 SeitenDLS RSeriesManual PDFdanysan2525Noch keine Bewertungen

- Long+term+storage+procedure 1151enDokument2 SeitenLong+term+storage+procedure 1151enmohamadhakim.19789100% (1)

- BB TariffDokument21 SeitenBB TariffKarthikeyanNoch keine Bewertungen

- Seguridad en Reactores de Investigación PDFDokument152 SeitenSeguridad en Reactores de Investigación PDFJorge PáezNoch keine Bewertungen

- CM29, 03-16-17Dokument3 SeitenCM29, 03-16-17Louie PascuaNoch keine Bewertungen

- LIVING IN THE IT ERA (Introduction)Dokument9 SeitenLIVING IN THE IT ERA (Introduction)johnnyboy.galvanNoch keine Bewertungen

- XJ3 PDFDokument2 SeitenXJ3 PDFEvert Chavez TapiaNoch keine Bewertungen

- 8 Candidate Quiz Buzzer Using 8051Dokument33 Seiten8 Candidate Quiz Buzzer Using 8051prasadzeal0% (1)

- Assessment of Reinforcement CorrosionDokument5 SeitenAssessment of Reinforcement CorrosionClethHirenNoch keine Bewertungen

- 00 EET3196 Lecture - Tutorial Ouline Plan1Dokument6 Seiten00 EET3196 Lecture - Tutorial Ouline Plan1mikeNoch keine Bewertungen

- PROSIS Part Information: Date: Image Id: Catalogue: ModelDokument2 SeitenPROSIS Part Information: Date: Image Id: Catalogue: ModelAMIT SINGHNoch keine Bewertungen

- 7ML19981GC61 1Dokument59 Seiten7ML19981GC61 1Andres ColladoNoch keine Bewertungen