
Estimating Dynamic Games

Sanjog Misra

Anderson UCLA

Structural Workshop 2013

Misra Estimating Dynamic Games

Estimating Dynamic Discrete Games

In this workshop we have gone through a lot.

The main topics include...

1. Foundations: Structural Models (Reiss), Causality and Identification (Goldfarb), Instruments (Rossi), Data (Mela)

2. Methods: Static Demand Models (Sudhir), Single Agent Dynamics: Theory and Econometrics (Hitsch), Static Games (Ellickson)

What you should have gleaned from their talks is that the estimation of structural models requires

Data + Theory + Econometrics

The estimation of dynamic games combines elements from all of the above talks and requires considerable expertise in handling data, game theory, econometrics and computational methods.


Estimating Dynamic Discrete Games

We've already learned how to estimate single-agent (SA) dynamic discrete choice (DDC) models

Two main approaches

1. Full solution: NFXP (Rust, 1987)

2. Two-Step: CCP (Hotz and Miller, 1993)

In both cases, the underlying SA optimization problem involved agents solving a dynamic programming (DP) problem

With games, agents must solve an interrelated system of DP problems

Their actions must be optimal given their beliefs & their beliefs must be correct on average (or at least self-confirming)

As you can imagine, computing equilibria can be pretty complicated...


Estimating Dynamic Discrete Games

Ericson & Pakes (ReStud, 95) and Pakes & McGuire (Rand, 94) provide a framework for computing equilibria of dynamic games.

More recently Goettler and Gordon (JPE 2012) provide an alternative approach

However, using the original PM algorithm, solving a reasonably complex EP-style dynamic game even once is computationally demanding (if not impossible)

Estimating the model using NFXP is essentially intractable

There's also the issue of multiple equilibria

The incompleteness this introduces can make it difficult to construct a proper likelihood/objective function, further complicating an NFXP approach

Two-step CCP estimation provides a work-around that circumvents the iterative fixed point calculation, solving both problems


Estimating Dynamic Discrete Games

CCP estimators were first developed by Hotz & Miller (HM, 1993) & Hotz, Miller, Sanders & Smith (HMSS, 1994) for DDC models

Four sets of authors (contemporaneously) suggested adapting these methods to games:

1. Aguirregabiria and Mira (AM) (Ema, 2007),

2. Bajari, Benkard, and Levin (BBL) (Ema, 2007),

3. Pakes, Ostrovsky, and Berry (POB) (Rand, 2007), &

4. Pesendorfer and Schmidt-Dengler (PSD) (ReStud, 2008)

We'll talk about AM & BBL

AM extends HM to games

BBL extends HMSS to games

Both are based on the framework suggested in Rust (94)

More recent contributions are Blevins et al. (2012) and Arcidiacono and Miller (Ema, 2011)


Aguirregabiria and Mira (Ema, 2007)

Sequential Estimation of Dynamic Discrete Games

Model

A dynamic discrete game of incomplete information.

Motivated by a stylized model of retail chain competition.

Let $d_t$ be a vector of demand shifters in period $t$.

$N$ firms operate in the market, indexed by $i \in \{1, 2, \ldots, N\}$.

In each period $t$, firms decide simultaneously how many outlets to operate, choosing from the discrete set $A = \{0, 1, \ldots, J\}$

The decision of firm $i$ in period $t$ is $a_{it} \in A$

The vector of all firms' actions is $a_t = (a_{1t}, a_{2t}, \ldots, a_{Nt})$


Model

Firms are characterized by two vectors of state variables that affect profitability: $x_{it}$ and $\varepsilon_{it}$

$x_t = (d_t, x_{1t}, x_{2t}, \ldots, x_{Nt})$ is common knowledge, but in $\varepsilon_t = (\varepsilon_{1t}, \varepsilon_{2t}, \ldots, \varepsilon_{Nt})$ each $\varepsilon_{it}$ is privately observed by firm $i$

$\Pi_i(a_t, x_t, \varepsilon_{it})$ is firm $i$'s per-period profit function.

Assume $\{x_t, \varepsilon_t\}$ follows a controlled Markov process with transition probability $p(x_{t+1}, \varepsilon_{t+1} \mid a_t, x_t, \varepsilon_t)$, which is common knowledge


Model

Each firm chooses its number of outlets to maximize expected discounted intertemporal profits,

$$E\left\{ \sum_{s=t}^{\infty} \beta^{s-t}\, \Pi_i(a_s, x_s, \varepsilon_{is}) \,\middle|\, x_t, \varepsilon_{it} \right\}$$

where $\beta \in (0, 1)$ is the (known) discount factor.

The primitives of the model are the profit functions $\Pi_i(\cdot)$, the transition probability $p(\cdot \mid \cdot)$, and $\beta$.

AM make the following set of assumptions...


Assumptions


Strategies and Bellman Equations

AM also assume that firms play stationary Markov strategies

Let $\sigma = \{\sigma_i(x, \varepsilon_i)\}$ be a set of strategy functions (decision rules), one for each firm, with $\sigma_i : X \times \mathbb{R}^{J+1} \to A$

Associated with a set of strategy functions $\sigma$, define a set of conditional choice probabilities $P^\sigma = \{P_i^\sigma(a_i \mid x)\}$ such that

$$P_i^\sigma(a_i \mid x) = \Pr\big(\sigma_i(x, \varepsilon_i) = a_i \mid x\big) = \int I\{\sigma_i(x, \varepsilon_i) = a_i\}\, g_i(\varepsilon_i)\, d\varepsilon_i$$

which represent the expected behavior of firm $i$ from the point of view of the rest of the firms (& us!), when firm $i$ follows $\sigma$.


Expected prots

Let $\pi_i^\sigma(a_i, x)$ be firm $i$'s current expected profit from choosing alternative $a_i$ while the other firms follow $\sigma$.

By the independence of private information,

$$\pi_i^\sigma(a_i, x) = \sum_{a_{-i} \in A^{N-1}} \Bigg( \prod_{j \neq i} P_j^\sigma(a_{-i}[j] \mid x) \Bigg) \Pi_i(a_i, a_{-i}, x)$$

where $a_{-i}[j]$ is firm $j$'s element in the vector $a_{-i}$ of actions of players other than $i$

Let $\tilde V_i^\sigma(x, \varepsilon_i)$ be the value of firm $i$ if it behaves optimally now and in the future given that the other firms follow $\sigma$.
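The sum over rival profiles is easy to mechanize. The following is a minimal Python sketch. It assumes, hypothetically, that CCPs are stored as nested dictionaries `ccp[j][x][a]` holding P_j(a | x), and that `profit(i, a, x)` returns the deterministic profit Π_i for a full action profile `a`. The toy entry game in the usage lines is made up for illustration.

```python
from itertools import product


def expected_profit(i, a_i, x, ccp, profit, actions):
    """pi_i^P(a_i, x): firm i's expected current profit from a_i when rivals follow P.

    ccp[j][x][a]    -- hypothetical storage for P_j(a | x)
    profit(i, a, x) -- hypothetical callable for Pi_i(a, x), a = full action profile
    actions         -- the common action set A
    """
    n = len(ccp)
    rivals = [j for j in range(n) if j != i]
    total = 0.0
    # Enumerate every rival profile a_{-i} in A^{N-1}
    for a_rivals in product(actions, repeat=len(rivals)):
        prob = 1.0
        a_full = [None] * n
        a_full[i] = a_i
        for j, a_j in zip(rivals, a_rivals):
            prob *= ccp[j][x][a_j]  # independent private shocks => product of CCPs
            a_full[j] = a_j
        total += prob * profit(i, tuple(a_full), x)
    return total


# Toy entry game: an entrant earns x minus 4 per active rival.
def toy_profit(i, a, x):
    return a[i] * (x - 4 * (sum(a) - a[i]))


toy_ccp = [{10: {0: 0.5, 1: 0.5}}, {10: {0: 0.75, 1: 0.25}}]
print(expected_profit(0, 1, 10, toy_ccp, toy_profit, (0, 1)))  # 0.75*10 + 0.25*6 = 9.0
```

The loop enumerates all of A^{N-1}, so its cost grows exponentially in the number of firms. This is one practical reason the action and state spaces in these applications are kept small.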


Value Functions

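By Bellman's principle of optimality, we can write

$$\tilde V_i^\sigma(x, \varepsilon_i) = \max_{a_i \in A} \left\{ \pi_i^\sigma(a_i, x) + \varepsilon_i(a_i) + \beta \sum_{x' \in X} \left[ \int \tilde V_i^\sigma(x', \varepsilon_i')\, g_i(\varepsilon_i')\, d\varepsilon_i' \right] f_i^\sigma(x' \mid x, a_i) \right\} \tag{1}$$

where $f_i^\sigma(x' \mid x, a_i)$ is the transition probability of $x$ conditional on firm $i$ choosing $a_i$ and the other firms following $\sigma$:

$$f_i^\sigma(x' \mid x, a_i) = \sum_{a_{-i} \in A^{N-1}} \Bigg( \prod_{j \neq i} P_j^\sigma(a_{-i}[j] \mid x) \Bigg) f(x' \mid x, a_i, a_{-i})$$

AM prefer to work with the value functions integrated over the private information variables.

Let $V_i^\sigma(x)$ be the integrated value function $\int \tilde V_i^\sigma(x, \varepsilon_i)\, g_i(d\varepsilon_i)$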


Choice-Specific Value Functions

Based on this definition

$$V_i^\sigma(x) = \int \tilde V_i^\sigma(x, \varepsilon_i)\, g_i(d\varepsilon_i)$$

and the Bellman equation (1) from above, we can obtain the integrated Bellman equation

$$V_i^\sigma(x) = \int \max_{a_i \in A} \left\{ v_i^\sigma(a_i, x) + \varepsilon_i(a_i) \right\} g_i(d\varepsilon_i)$$

where

$$v_i^\sigma(a_i, x) = \pi_i^\sigma(a_i, x) + \beta \sum_{x' \in X} V_i^\sigma(x')\, f_i^\sigma(x' \mid x, a_i)$$

which are often referred to as choice-specific value functions.


Markov Perfect Equilibria

Now it's time to enforce the fact that $\sigma$ describes equilibrium behavior (i.e., it's a best response)

DEFINITION: A stationary Markov perfect equilibrium (MPE) in this game is a set of strategy functions $\sigma^*$ such that for any firm $i$ and any $(x, \varepsilon_i) \in X \times \mathbb{R}^{J+1}$

$$\sigma_i^*(x, \varepsilon_i) = \arg\max_{a_i \in A} \left\{ v_i^{\sigma^*}(a_i, x) + \varepsilon_i(a_i) \right\}$$

Ultimately, an equation (somewhat) like this will be the basis of estimation


Markov Perfect Equilibria

Note that $\pi_i^\sigma$, $V_i^\sigma$, & $f_i^\sigma$ depend on $\sigma$ only through $P$, so AM switch notation to $\pi_i^P$, $V_i^P$, & $f_i^P$ so they can represent the MPE in probability space.

Let $\sigma^*$ be a set of MPE strategies and let $P^*$ be the probabilities associated with these strategies

$$P_i^*(a_i \mid x) = \int I\{a_i = \sigma_i^*(x, \varepsilon_i)\}\, g_i(\varepsilon_i)\, d\varepsilon_i$$

Equilibrium probabilities are a fixed point ($P^* = \Lambda(P^*)$), where, for any vector of probabilities $P$, $\Lambda(P) = \{\Lambda_i(a_i \mid x; P_{-i})\}$ and

$$\Lambda_i(a_i \mid x; P_{-i}) = \int I\left\{ a_i = \arg\max_{a \in A} \left\{ v_i^{P}(a, x) + \varepsilon_i(a) \right\} \right\} g_i(\varepsilon_i)\, d\varepsilon_i$$

The functions $\Lambda_i$ are best response probability functions


Markov Perfect Equilibria

The equilibrium probabilities solve the coupled fixed-point problems defined by

$$V_i^P(x) = \int \max_{a_i \in A} \left\{ v_i^P(a_i, x) + \varepsilon_i(a_i) \right\} g_i(d\varepsilon_i) \tag{2}$$

and

$$\Lambda_i(a_i \mid x; P_{-i}) = \int I\left\{ a_i = \arg\max_{a \in A} \left\{ v_i^{P}(a, x) + \varepsilon_i(a) \right\} \right\} g_i(\varepsilon_i)\, d\varepsilon_i \tag{3}$$

Given a set of probabilities $P$:

The value functions $V_i^P$ are solutions of the $N$ Bellman equations in (2), and

Given these value functions, the best response probabilities are defined by the RHS of (3).


An Alternative Best Response Mapping

It's easier to work with an alternative best response mapping (in probability space) that avoids the solution of the $N$ dynamic programming problems in (2).

The evaluation of this mapping is computationally much simpler than the evaluation of the mapping $\Lambda(P)$, and it will prove more convenient for the estimation of the model.

Let $P^*$ be an equilibrium and let $V_1^{P^*}, V_2^{P^*}, \ldots, V_N^{P^*}$ be firms' value functions associated with this equilibrium.


Alternative Best Response Mapping

Because equilibrium probabilities are best responses, we can rewrite the Bellman equation (2) as

$$V_i^{P^*}(x) = \sum_{a_i \in A} P_i^*(a_i \mid x) \left[ \pi_i^{P^*}(a_i, x) + e_i^{P^*}(a_i, x) \right] + \beta \sum_{x' \in X} V_i^{P^*}(x')\, f^{P^*}(x' \mid x) \tag{4}$$

where

$f^{P^*}(x' \mid x)$ is the transition probability of $x$ induced by $P^*$

$e_i^{P^*}(a_i, x)$ is the expectation of $\varepsilon_i(a_i)$ conditional on $x$ and on alternative $a_i$ being optimal for player $i$


Alternative Best Response Mapping

Note that $e_i^{P^*}(a_i, x)$ is a function of $g_i$ and $P_i^*(x)$ only

The functional form depends on the probability distribution $g_i$

For example, if the $\varepsilon_i(a_i)$ are iid T1EV, then

$$e_i^{P^*}(a_i, x) = E\left(\varepsilon_i(a_i) \mid x, \sigma_i^*(x, \varepsilon_i) = a_i\right) = \gamma - \sigma \ln\left(P_i^*(a_i \mid x)\right)$$

where $\gamma$ is Euler's constant & $\sigma$ is the logit dispersion parameter.
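The closed form can be checked by simulation. The sketch below assumes standard Gumbel shocks, i.e. the dispersion is normalized to σ = 1, so the formula reads e = γ − ln P. It draws shocks, keeps the draws in which a given action is optimal, and compares the average shock with γ − ln P. The values `v` are arbitrary illustrative numbers.

```python
import math
import random

EULER_GAMMA = 0.5772156649015329


def gumbel(rng):
    """Standard T1EV (Gumbel) draw by inverting the CDF."""
    u = rng.random()
    while u <= 0.0:  # guard against log(0)
        u = rng.random()
    return -math.log(-math.log(u))


def simulated_correction(v, a, n_draws=100_000, seed=1):
    """Monte Carlo estimate of E[eps(a) | a maximizes v(k) + eps(k)] with iid standard Gumbel eps."""
    rng = random.Random(seed)
    hits, acc = 0, 0.0
    for _ in range(n_draws):
        eps = [gumbel(rng) for _ in v]
        best = max(range(len(v)), key=lambda k: v[k] + eps[k])
        if best == a:
            hits += 1
            acc += eps[a]
    return acc / hits


v = [0.0, 1.0]
p0 = math.exp(v[0]) / sum(math.exp(vk) for vk in v)  # logit CCP of action 0
# Simulated conditional mean vs. closed form gamma - ln P; they should agree to about two decimals.
print(simulated_correction(v, 0), EULER_GAMMA - math.log(p0))
```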

Taking equilibrium probabilities as given, expression (4) describes the vector of values $V_i^{P^*}$ as the solution of a system of linear equations, which can be written in vector form as

$$\left(I - \beta F^{P^*}\right) V_i^{P^*} = \sum_{a_i \in A} P_i^*(a_i) * \left[ \pi_i^{P^*}(a_i) + e_i^{P^*}(a_i) \right]$$

where $F^{P^*}$ is the $|X| \times |X|$ matrix with elements $f^{P^*}(x' \mid x)$ and $*$ denotes the element-by-element product.


Alternative Best Response Mapping

Let $\Gamma_i(P^*) = \{\Gamma_i(x; P^*) : x \in X\}$ be the solution to this system of linear equations, such that $V_i^{P^*}(x) = \Gamma_i(x; P^*)$.

For arbitrary probabilities $P$, the mapping

$$\Gamma_i(P) = \left(I - \beta F^{P}\right)^{-1} \left\{ \sum_{a_i \in A} P_i(a_i) * \left[ \pi_i^{P}(a_i) + e_i^{P}(a_i) \right] \right\} \tag{5}$$

can be interpreted as a valuation operator.
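Computing (5) requires only one linear solve per firm. The following is a dependency-free Python sketch, with plain Gaussian elimination standing in for a linear-algebra library. It assumes logit shocks with dispersion normalized to one, so that e_i^P(a, x) = γ − ln P_i(a | x). The argument layout (`P_i[x][a]`, `pi_i[x][a]`, `F[x][y]`) is our own convention.

```python
import math

EULER_GAMMA = 0.5772156649015329


def solve_linear(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting (small dense systems)."""
    n = len(b)
    M = [list(row) + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    z = [0.0] * n
    for r in range(n - 1, -1, -1):
        z[r] = (M[r][n] - sum(M[r][c] * z[c] for c in range(r + 1, n))) / M[r][r]
    return z


def valuation_operator(P_i, pi_i, F, beta):
    """Gamma_i(P) = (I - beta F^P)^{-1} sum_a P_i(a) * [pi_i^P(a) + e_i^P(a)].

    P_i[x][a]  -- firm i's CCPs (all strictly positive)
    pi_i[x][a] -- expected flow profits pi_i^P(a, x)
    F[x][y]    -- transition f^P(y | x) induced by P
    Logit shocks with unit dispersion: e_i^P(a, x) = gamma - ln P_i(a | x).
    """
    n = len(F)
    b = [sum(p * (pi_i[x][a] + EULER_GAMMA - math.log(p)) for a, p in enumerate(P_i[x]))
         for x in range(n)]
    A = [[(1.0 if x == y else 0.0) - beta * F[x][y] for y in range(n)] for x in range(n)]
    return solve_linear(A, b)


# One absorbing state, two equally likely actions: V = (0.5 + gamma + ln 2) / (1 - beta)
print(valuation_operator([[0.5, 0.5]], [[0.0, 1.0]], [[1.0]], 0.9))  # roughly [17.7036]
```

Each call costs one solve of an |X| × |X| system, whatever the candidate P. A full DP solution, by contrast, would iterate (2) to convergence.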

An MPE is then a fixed point $P = \Psi(P) \equiv \{\Psi_i(a_i \mid x; P)\}$, where

$$\Psi_i(a_i \mid x; P) = \int I\left\{ a_i = \arg\max_{a \in A} \left\{ \pi_i^P(a, x) + \varepsilon_i(a) + \beta \sum_{x' \in X} \Gamma_i(x'; P)\, f_i^P(x' \mid x, a) \right\} \right\} g_i(\varepsilon_i)\, d\varepsilon_i \tag{6}$$

By definition, an equilibrium vector $P^*$ is a fixed point of $\Psi$.

AM's Representation Lemma establishes that the reverse is also true.

Equation (6) will be the basis of estimation
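Under the same logit assumption (iid T1EV shocks, dispersion one), the integral in (6) has the familiar closed form. Ψ_i is then a softmax over the choice-specific values built from Γ_i(P). The sketch below assumes `gamma_i` comes from the valuation operator Γ_i(P) and that `f_i[a][x][y]` stores f_i^P(y | x, a); both layouts are our own assumptions.

```python
import math


def psi_logit(pi_i, f_i, gamma_i, beta):
    """Psi_i(a | x; P) under iid T1EV shocks with unit dispersion.

    pi_i[x][a]   -- expected flow profits pi_i^P(a, x)
    f_i[a][x][y] -- transitions f_i^P(y | x, a)
    gamma_i[y]   -- valuation Gamma_i(y; P)
    """
    n_states, n_actions = len(pi_i), len(pi_i[0])
    probs = []
    for x in range(n_states):
        v = [pi_i[x][a] + beta * sum(g * f for g, f in zip(gamma_i, f_i[a][x]))
             for a in range(n_actions)]
        vmax = max(v)  # subtract the max for numerical stability
        w = [math.exp(va - vmax) for va in v]
        s = sum(w)
        probs.append([wa / s for wa in w])
    return probs


# One state, two actions with identical continuation values: only flow profit differs.
print(psi_logit([[0.0, 1.0]], [[[1.0]], [[1.0]]], [5.0], 0.9))  # about [[0.269, 0.731]]
```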


Estimation

Assume the researcher observes $M$ geographically separate markets over $T$ periods, where $M$ is large and $T$ is small.

The primitives $\{\Pi_i, g_i, f, \beta : i \in I\}$ are known to the researcher up to a finite vector of structural parameters $\theta \in \Theta \subset \mathbb{R}^{|\theta|}$.

$\beta$ is assumed known (it's very difficult to estimate)

We now incorporate $\theta$ as an explicit argument in the equilibrium mapping $\Psi$.

Let $\theta^0$ be the true value of $\theta$ in the population

Under Assumption 2 (i.e., conditional independence), the transition probability function $f$ can be estimated from transition data using a standard maximum likelihood method and without solving the model.


Assumptions on DGP


Maximum Likelihood Estimation

Define the pseudo likelihood function

$$Q_M(\theta, P) = \frac{1}{M} \sum_{m=1}^{M} \sum_{t=1}^{T} \sum_{i=1}^{N} \ln \Psi_i(a_{imt} \mid x_{mt}; P, \theta)$$

where $P$ is an arbitrary vector of players' choice probabilities.

Consider first the hypothetical case of a model with a unique equilibrium for each possible value of $\theta$.

Then the maximum likelihood estimator (MLE) of $\theta^0$ can be defined from the constrained multinomial likelihood

$$\hat\theta_{MLE} = \arg\max_{\theta} Q_M(\theta, P) \quad \text{subject to} \quad P = \Psi(\theta, P)$$


MLE

However, with multiple equilibria the restriction $P = \Psi(\theta, P)$ does not define a unique vector $P$, but a set of vectors.

In this case, the MLE can be defined as

$$\hat\theta_{MLE} = \arg\max_{\theta} \left\{ \sup_{P \in (0,1)^{N|X|}} Q_M(\theta, P) \quad \text{subject to} \quad P = \Psi(\theta, P) \right\}$$

Thus, for each candidate $\theta$, we need to compute all the vectors $P$ that constitute equilibria (given $\theta$) and select the one with the highest value of $Q_M(\theta, P)$.

At present, there are no known methods that can do so (robustly).

Therefore, AM introduce a class of pseudo maximum likelihood estimators.


Pseudo Maximum Likelihood (PML) Estimation

The PML estimators try to minimize the number of evaluations of $\Psi$ for different vectors of players' probabilities $P$.

Suppose that we know the population probabilities $P^0$ and consider the (infeasible) PML estimator

$$\tilde\theta = \arg\max_{\theta} Q_M(\theta, P^0)$$

Under standard regularity conditions, this estimator is $\sqrt{M}$-CAN (consistent and asymptotically normal), but it is infeasible since $P^0$ is unknown.

However, if we can obtain a $\sqrt{M}$-consistent nonparametric estimator $\hat P^0$ of $P^0$, then we can define the feasible two-step PML estimator

$$\hat\theta_{2S} = \arg\max_{\theta} Q_M(\theta, \hat P^0)$$
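The simplest nonparametric first step is a cell-frequency estimator of $\hat P^0$. The sketch below assumes observations arrive as `(i, x, a)` triples pooled over markets and periods, with discrete states. It also shows why this step suffers when cells are thin. An unvisited state gets no estimate, and an action never observed in a visited state gets probability zero, which breaks the ln P terms in the pseudo likelihood.

```python
from collections import Counter, defaultdict


def frequency_ccps(observations, actions):
    """Frequency estimator P_hat_i(a | x) = n(i, x, a) / n(i, x).

    observations -- iterable of (i, x, a) triples pooled over markets m and periods t
    actions      -- the action set A
    States that never appear for firm i get no entry at all.
    """
    counts = defaultdict(Counter)
    for i, x, a in observations:
        counts[(i, x)][a] += 1
    p_hat = {}
    for (i, x), c in counts.items():
        n = sum(c.values())
        p_hat[(i, x)] = {a: c[a] / n for a in actions}  # Counter returns 0 for unseen a
    return p_hat


obs = [(0, "small", 1), (0, "small", 0), (0, "small", 1), (0, "large", 1)]
print(frequency_ccps(obs, (0, 1))[(0, "small")])  # {0: 1/3, 1: 2/3} as floats
```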


PML

A $\sqrt{M}$-CAN first-step estimator $\hat P^0$ (+ regularity conditions) is sufficient to guarantee that the PML estimator is $\sqrt{M}$-CAN.

There are two good reasons to care about this estimator

1. It deals with the indeterminacy problem associated with multiple equilibria (it's robust)

2. Furthermore, repeated solutions of the dynamic game are avoided, which can result in significant computational gains (it's simple)


PML: Drawbacks

However, the two-step PML has some drawbacks

1. Its asymptotic variance depends on the variance of the nonparametric estimator $\hat P^0$. Therefore, it can be very inefficient when the state space is large.

2. For the sample sizes available in actual applications, the nonparametric estimator of $P^0$ can be very imprecise (small sample bias). There's a curse of dimensionality here...

3. For some models, it's impossible to obtain consistent nonparametric estimates of $P^0$, e.g., models with unobserved market characteristics.

To address these issues, AM introduce the Nested Pseudo Likelihood estimator.


Nested Pseudo Likelihood (NPL) Method

NPL is a recursive extension of the two-step PML estimator.

Let $\tilde P_0$ be a (possibly inconsistent) initial guess of the vector of players' choice probabilities.

Given $\tilde P_0$, the NPL algorithm generates a sequence of estimators $\{\tilde\theta_K : K \geq 1\}$, where the $K$-stage estimator is defined as

$$\tilde\theta_K = \arg\max_{\theta} Q_M(\theta, \tilde P_{K-1})$$

and the probabilities $\{\tilde P_K : K \geq 1\}$ are obtained recursively as

$$\tilde P_K = \Psi(\tilde\theta_K, \tilde P_{K-1})$$
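The NPL recursion alternates a pseudo-likelihood maximization with one policy-iteration step. The sketch below handles a scalar θ and assumes user-supplied callables `Q(theta, P)` and `Psi(theta, P)`. Golden-section search serves as the inner maximizer, which is our choice; any optimizer would do. The toy Ψ is a static logit chosen only because its NPL fixed point is known in closed form ($\check P$ equals the sample frequency 0.3). It is not AM's model.

```python
import math


def golden_max(f, lo, hi, tol=1e-8):
    """Maximize a unimodal scalar function f on [lo, hi] by golden-section search."""
    g = (math.sqrt(5) - 1) / 2
    a, b = lo, hi
    c, d = b - g * (b - a), a + g * (b - a)
    while b - a > tol:
        if f(c) > f(d):
            b, d = d, c          # maximum lies in [a, d]
            c = b - g * (b - a)
        else:
            a, c = c, d          # maximum lies in [c, b]
            d = a + g * (b - a)
    return (a + b) / 2


def npl(Q, Psi, P0, lo, hi, max_iter=100, tol=1e-8):
    """Nested pseudo likelihood for a scalar theta; returns (theta, P, iterations)."""
    P = list(P0)
    theta = None
    for k in range(1, max_iter + 1):
        theta = golden_max(lambda th: Q(th, P), lo, hi)  # theta_K = argmax Q_M(theta, P_{K-1})
        P_new = Psi(theta, P)                           # P_K = Psi(theta_K, P_{K-1})
        if max(abs(p - q) for p, q in zip(P_new, P)) < tol:
            return theta, P_new, k
        P = P_new
    return theta, P, max_iter


# Toy model (not AM's): entry probability logistic(theta - 2 * P), 30 entries in 100 obs.
def toy_psi(theta, P):
    return [1.0 / (1.0 + math.exp(-(theta - 2.0 * P[0])))]


def toy_Q(theta, P):
    p = toy_psi(theta, P)[0]
    return 30 * math.log(p) + 70 * math.log(1 - p)


theta_hat, P_hat, iters = npl(toy_Q, toy_psi, [0.5], -5.0, 5.0)
print(theta_hat, P_hat, iters)
```

The stopping rule checks only whether the probabilities stop moving, which is what defines an NPL fixed point. As the next slide notes, it says nothing about whether other fixed points exist.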


NPL

If the initial guess $\tilde P_0$ is a consistent estimator, all elements of the sequence of estimators $\{\tilde\theta_K : K \geq 1\}$ are consistent

However, AM are interested in the properties of the estimator in the limit (if it converges)

If the sequence $\{\tilde\theta_K, \tilde P_K\}$ converges, its limit $(\check\theta, \check P)$ is such that $\check\theta$ maximizes $Q_M(\theta, \check P)$ and $\check P = \Psi(\check\theta, \check P)$, and any pair that satisfies these two conditions is an NPL fixed point.

AM show that an NPL fixed point always exists and, if there is more than one, the one with the highest value of the pseudo likelihood is a consistent estimator

Of course, it may be very difficult to find multiple roots


NPL

NPL preserves the two main advantages of PML

1. It's feasible in models with multiple equilibria, and

2. It minimizes the number of evaluations of the mapping $\Psi$ for different values of $P$

Furthermore

1. It's more efficient than either infeasible or two-step PML (because it imposes the MPE condition in sample)

2. It reduces the finite sample bias generated by imprecise estimates of $P^0$

3. It doesn't require initially consistent $\tilde P_0$'s (so it can accommodate unobserved heterogeneity)

AM show how to extend NPL to settings with permanent unobserved heterogeneity, but let's skip that & look at some Monte Carlos


Monte Carlos

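Consider a simple entry/exit example where 5 firms can operate at most 1 store, so $a_{it} \in \{0, 1\}$

Variable profit is given by

$$\theta_{RS} \ln(S_{mt}) - \theta_{RN} \ln\Big(1 + \sum_{j \neq i} a_{jmt}\Big)$$

where $S_{mt}$ is the size of market $m$ in period $t$, and $\theta_{RS}$ & $\theta_{RN}$ are parameters to be estimated

The profit function of an active firm is

$$\Pi_{imt}(1) = \theta_{RS} \ln(S_{mt}) - \theta_{RN} \ln\Big(1 + \sum_{j \neq i} a_{jmt}\Big) - \theta_{FC,i} - \theta_{EC}(1 - a_{im,t-1}) + \varepsilon_{imt}(1)$$

where $\theta_{FC,i}$ is fixed cost, $\theta_{EC}$ is entry cost, and $\varepsilon_{imt} \sim$ T1EV

The profit function of an inactive firm is simply

$$\Pi_{imt}(0) = \varepsilon_{imt}(0)$$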
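The deterministic part of $\Pi_{imt}(1)$ is straightforward to code. In the sketch below the argument names are ours. The T1EV shocks are omitted because they enter additively and are handled by the logit formulas. The θ_RN and θ_EC values in the usage line are illustrative, not AM's.

```python
import math

THETA_FC = [1.9, 1.8, 1.7, 1.6, 1.5]  # fixed costs of firms 1..5 in AM's Monte Carlo


def active_profit(ln_size, n_active_rivals, was_active, theta_rs, theta_rn, theta_fc_i, theta_ec):
    """Deterministic part of Pi_imt(1); the inactive payoff Pi_imt(0) has none."""
    return (theta_rs * ln_size
            - theta_rn * math.log(1 + n_active_rivals)
            - theta_fc_i
            - theta_ec * (1 - was_active))  # entry cost is paid only by new entrants


# Firm 5 (index 4), ln S = 2, one active rival, entering this period.
print(active_profit(2.0, 1, 0, theta_rs=1.0, theta_rn=1.0, theta_fc_i=THETA_FC[4], theta_ec=1.0))
# 2 - ln 2 - 1.5 - 1, about -1.193
```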


Monte Carlos

$\ln(S_{mt})$ follows a discrete first-order Markov process, with known transition matrix and finite support $\{1, 2, 3, 4, 5\}$

Fixed operating costs are $\theta_{FC,1} = 1.9$, $\theta_{FC,2} = 1.8$, $\theta_{FC,3} = 1.7$, $\theta_{FC,4} = 1.6$, $\theta_{FC,5} = 1.5$, so that firm 5 is most efficient and firm 1 is least efficient.

$\theta_{RS} = 1$ and $\beta = .95$ throughout, but AM vary $\theta_{RN}$ and $\theta_{EC}$ across experiments


Monte Carlos

The space of common knowledge state variables $(S_{mt}, a_{t-1})$ has $2^5 \times 5 = 160$ cells.

There's a different vector of CCPs for each firm, so the dimension of the CCP vector for all firms is $5 \times 160 = 800$.

For each experiment, they compute an MPE.

The equilibrium is obtained by iterating the best response probability mapping starting with an $800 \times 1$ vector of choice probabilities (guesses), e.g., $P_i(a_i = 1 \mid x) = .5 \;\; \forall x, i$
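The cell count is easy to verify by enumerating the state space. The sketch below assumes each state is stored as a tuple `(ln S, incumbency vector)`, which is our own encoding. It also builds the 0.5 starting guess for all 800 CCPs, kept as one row per firm rather than a single stacked vector.

```python
from itertools import product

N_FIRMS = 5
LN_SIZES = (1, 2, 3, 4, 5)  # support of ln(S_mt)

# Common-knowledge state: (ln S_mt, incumbency vector a_{t-1})
states = [(s, inc) for s in LN_SIZES for inc in product((0, 1), repeat=N_FIRMS)]
index = {x: k for k, x in enumerate(states)}  # map each state to a row index

# One CCP P_i(a_i = 1 | x) per firm and state; every entry starts at 0.5
P0 = [[0.5] * len(states) for _ in range(N_FIRMS)]

print(len(states), sum(len(row) for row in P0))  # 160 800
```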

They can then calculate the steady state distribution and generate fake data.

Table II presents some descriptive statistics associated with the MPE of each experiment.


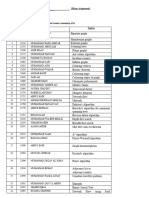

Markov Perfect Equilibria for each experiment


Monte Carlos

They then calculate the two-step PML and NPL estimators using the following choices for the initial vector of probabilities

1. The true vector of equilibrium probabilities $P^0$

2. Nonparametric frequency estimates

3. Logits (for each firm) with the log of market size and indicators of incumbency status for all firms as explanatory variables, and

4. Independent random draws from a $U(0, 1)$ r.v.

Tables IV and V summarize the results


Monte Carlo Results


Monte Carlo Results


Discussion of Results

The NPL algorithm always converged to the same estimates (regardless of the value of $\tilde P_0$)

The algorithm converged faster when initialized with logit estimates

The 2S-Freq estimator is highly biased in all experiments, although its variance is sometimes smaller than that of the NPL and 2S-True estimators.

Its main drawback is small sample bias...

The NPL estimator performs very well relative to the 2S-True estimator, both in terms of variance and bias.

In all the experiments, the most important gains associated with the NPL estimator occur for the entry cost parameter.


Motivation for BBL

A big drawback of the AM approach (and PSD & POB as well) is that it's designed for discrete controls (and discrete states)

A big selling point of BBL is that it can handle continuous controls and states

However,

1. It's not completely clear that AM can't be extended to continuous controls and states (see, e.g., Arcidiacono and Miller (2011))

2. The way BBL handles continuous controls is probably infeasible (unless there are no structural shocks to investment)

3. BBL's objective function can be difficult to optimize, so you might want to mix & match a bit.

Let's look now at how BBL works...


Background for BBL (HMSS)

The original idea for the methods proposed in BBL came from Hotz, Miller, Sanders and Smith (1994)

They proposed a two-step (really 3) approach

1. Estimate the transition kernels $f(s' \mid s, a)$ and CCPs $P(a \mid s)$ from the data

2. Approximate $V(s \mid \hat P)$ using Monte Carlo approaches

3. Construct and maximize an objective function to obtain parameters

Let's look at how this might work...


Forward Simulation (HMSS)

For each state $s \in S$ draw a sequence of $R$ future paths as follows

1. Draw iid shocks $\varepsilon_0(a)$

2. Compute optimal policies $\hat P(a \mid s_0)$ and pick action $a_0(\varepsilon_0, s_0)$

3. Compute payoffs $U_0^r = u(s_0, a_0; \theta) + \varepsilon_0(a_0)$

4. Draw next period state $s_1 \sim \hat f(s' \mid s_0, a_0)$ and repeat for $T$ iterations.

We can use these to construct value functions...


Value Function (HMSS)

At some $T$ we stop the forward simulation and use the fact that

$$V^r(s_0) = \sum_{t=0}^{T} \beta^t U_t^r$$

to construct

$$\hat V(s_0 \mid \hat P) = \frac{1}{R} \sum_{r=1}^{R} V^r(s_0)$$
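The following is a minimal Python sketch of steps 1-4 and the averaging, for a single agent with discrete states and actions. It assumes logit shocks with unit dispersion, which allows one shortcut. Picking a = argmax_a {ln P̂(a | s) + ε(a)} reproduces the CCPs P̂ exactly and gives the chosen ε(a) its correct conditional distribution. This is a logit property, not a general fact. The names `ccp`, `trans`, and `u` are hypothetical.

```python
import math
import random


def gumbel(rng):
    """Standard T1EV draw by inverting the CDF."""
    u = rng.random()
    while u <= 0.0:  # guard against log(0)
        u = rng.random()
    return -math.log(-math.log(u))


def simulate_value(s0, ccp, trans, u, beta, T, R, seed=0):
    """Average over R simulated paths of sum_{t=0}^{T} beta^t U_t^r.

    ccp[s][a]      -- first-stage CCPs P_hat(a | s), all strictly positive
    trans[s][a][y] -- first-stage transitions f_hat(y | s, a)
    u(s, a)        -- flow utility u(s, a; theta) at the candidate theta
    """
    rng = random.Random(seed)
    total = 0.0
    for _ in range(R):
        s, disc, v_r = s0, 1.0, 0.0
        for _t in range(T + 1):
            eps = [gumbel(rng) for _ in ccp[s]]                                    # step 1
            a = max(range(len(eps)), key=lambda k: math.log(ccp[s][k]) + eps[k])  # step 2
            v_r += disc * (u(s, a) + eps[a])                                       # step 3
            disc *= beta
            s = rng.choices(range(len(trans[s][a])), weights=trans[s][a])[0]       # step 4
        total += v_r
    return total / R


# One state, two actions, zero flow utility, T = 0: V equals gamma plus the entropy of P_hat.
print(simulate_value(0, [[0.3, 0.7]], [[[1.0], [1.0]]], lambda s, a: 0.0, 0.9, 0, 20000))
# close to 0.5772 - 0.3 ln 0.3 - 0.7 ln 0.7, about 1.19
```

Only `u` depends on θ. Holding the seed fixed across candidate θ values keeps the simulation draws common, which makes the objective smoother in θ.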

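We can then obtain an estimator using a pseudo likelihood, GMM, or least squares:

$$\max_\theta \sum_t \sum_i \ln \Lambda\big(a_{it} \mid s_{it}, \hat V\big)$$

$$\min_\theta \Big[ \sum_i \sum_t \big(a_{it} - \Lambda(a_{it} \mid s_{it}, \hat V)\big) Z_{it} \Big]' \, \Omega^{-1} \Big[ \sum_i \sum_t \big(a_{it} - \Lambda(a_{it} \mid s_{it}, \hat V)\big) Z_{it} \Big]$$

$$\min_\theta \Big( \hat P(a \mid s) - \Lambda(a \mid s, \hat V) \Big)' \, W^{-1} \Big( \hat P(a \mid s) - \Lambda(a \mid s, \hat V) \Big)$$

where $\Lambda(\cdot \mid s, \hat V)$ denotes the choice probabilities implied by the simulated values, $Z_{it}$ are instruments, and $\Omega$ and $W$ are weighting matrices.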


Bajari, Benkard, and Levin (Ema, 2007)

Estimating Dynamic Models of Imperfect Competition

So what does BBL do?

Extends HMSS to games

Generalizes the idea to continuous actions

Proposes inequality conditions for estimation (a bounds estimator)

The key element of BBL is that it (like AM 2007) allows the researcher to be agnostic about equilibrium selection and side-step the multiple equilibria problem.


Notation

The game is in discrete time with an infinite horizon

There are $N$ firms, denoted $i = 1, \ldots, N$, making decisions at times $t = 1, 2, \ldots, \infty$

Conditions at time $t$ are summarized by discrete states $s_t \in S \subset \mathbb{R}^L$

Given $s_t$, firms choose actions simultaneously

Let $a_{it} \in A_i$ denote firm $i$'s action at time $t$, and $a_t = (a_{1t}, \ldots, a_{Nt})$ the vector of time $t$ actions


Notation

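Before choosing its action, each firm $i$ receives a private shock $\varepsilon_{it}$ drawn iid from $G_i(\cdot \mid s_t)$ with support $\mathcal{E}_i \subseteq \mathbb{R}^M$

Denote the vector of private shocks $\varepsilon_t = (\varepsilon_{1t}, \ldots, \varepsilon_{Nt})$

Firm $i$'s profits are given by $\pi_i(a_t, s_t, \varepsilon_{it})$ and firms share a common (& known) discount factor $\beta < 1$

Given $s_t$, firm $i$'s expected profit (prior to seeing $\varepsilon_{it}$) is

$$E\left[ \sum_{\tau=t}^{\infty} \beta^{\tau - t}\, \pi_i(a_\tau, s_\tau, \varepsilon_{i\tau}) \,\middle|\, s_t \right]$$

where the expectation is over current shocks and actions, as well as future states, actions, and shocks.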


State Transitions & Equilibrium

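$s_{t+1}$ is drawn from a probability distribution $P(s_{t+1} \mid a_t, s_t)$

They focus on pure strategy MPE

A Markov strategy is a function $\sigma_i : S \times \mathcal{E}_i \to A_i$

A profile of Markov strategies is a vector, $\sigma = (\sigma_1, \ldots, \sigma_N)$, where $\sigma : S \times \mathcal{E}_1 \times \cdots \times \mathcal{E}_N \to A$

Given $\sigma$, firm $i$'s expected profit can then be written recursively

$$V_i(s; \sigma) = E\left[ \pi_i\big(\sigma(s, \varepsilon), s, \varepsilon_i\big) + \beta \int V_i(s'; \sigma)\, dP\big(s' \mid \sigma(s, \varepsilon), s\big) \,\middle|\, s \right]$$

The profile $\sigma$ is an MPE if, given opponent profile $\sigma_{-i}$, each firm $i$ prefers strategy $\sigma_i$ to all other alternatives $\sigma_i'$:

$$V_i(s; \sigma) \geq V_i(s; \sigma_i', \sigma_{-i})$$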


Structural Parameters

The structural parameters of the model are the discount factor $\beta$, the profit functions $\pi_1, \ldots, \pi_N$, the transition probabilities $P$, and the distributions of private shocks $G_1, \ldots, G_N$.

Like AM, they treat $\beta$ as known and estimate $P$ directly from the observed state transitions.

They assume the profits and shock distributions are known functions of a parameter vector $\theta$: $\pi_i(a, s, \varepsilon_i; \theta)$ and $G_i(\varepsilon_i \mid s; \theta)$.

The goal is to recover the true $\theta$ under the assumption that the data are generated by an MPE.


Example: Dynamic Oligopoly

Their main (novel) example is based on the EP framework.

Incumbent firms are heterogeneous, each described by its state $z_{it} \in \{1, 2, \ldots, \bar z\}$; potential entrants have $z_{it} = 0$

Incumbents can make an investment $I_{it} \geq 0$ to improve their state

An incumbent firm $i$ in period $t$ earns

$$q_{it}\big(p_{it} - mc(q_{it}, s_t; \theta)\big) - C(I_{it}, \nu_{it}; \theta)$$

where $p_{it}$ is firm $i$'s price, $q_{it} = q_i(s_t, p_t; \theta)$ is quantity, $mc(\cdot)$ is marginal cost, and $\nu_{it}$ is a shock to the cost of investment.

$C(I_{it}, \nu_{it}; \theta)$ is the cost of investment.


Example: Dynamic Oligopoly

Competition is assumed to be static Nash in prices.

Firms can also enter and exit.

Exitors receive a scrap value $\phi$ and entrants pay an entry cost $\kappa_{et}$, an iid draw from $G_e$

In equilibrium, incumbents make investment and exit decisions to maximize expected profits.

Each incumbent $i$ uses an investment strategy $I_i(s, \nu_i)$ and exit strategy $\chi_i(s, \nu_i)$ chosen to maximize expected profits.

Entrants follow a strategy $\chi_e(s, \kappa_e)$ that calls for them to enter if the expected profit from doing so exceeds their entry cost.


First Stage Estimation

The goal of the first stage is to estimate the state transition probabilities $P(s' \mid a, s)$ and equilibrium policy functions $\sigma(s, \varepsilon)$

The second stage will use the equilibrium conditions from above to estimate the structural parameters

In order to obtain consistent first stage estimates, they must assume that the data are generated by a single MPE profile $\sigma$

This assumption has a lot of bite if the data come from multiple markets

It's quite weak if the data come from only a single market


First Stage Estimation

Stage 0:

The static payoff function will typically be estimated offline in a 0th stage (e.g., BLP, Olley-Pakes)

Stage 1:

It's usually fairly straightforward to run the first stage: just regress actions on states in a flexible manner.

Since these are not structural objects, you should be as flexible as possible. Why?

Of course, if $s$ is big, you may have to be very parametric here (e.g., OLS regressions and probits).

In this case, your second stage estimates will be inconsistent if the parametric first stage is misspecified...

Continuous actions are especially tricky (hard to be nonparametric here)


Estimating the Value Functions

After estimating policy functions, firms' value functions are estimated by forward simulation.

Let $V_i(s, \sigma; \theta)$ denote the value function of firm $i$ at state $s$ assuming firm $i$ follows the Markov strategy $\sigma_i$ and rival firms follow $\sigma_{-i}$

Then

$$V_i(s, \sigma; \theta) = E\left[ \sum_{t=0}^{\infty} \beta^t\, \pi_i\big(\sigma(s_t, \varepsilon_t), s_t, \varepsilon_{it}; \theta\big) \,\middle|\, s_0 = s; \theta \right]$$

where the expectation is over current and future values of $s_t$ and $\varepsilon_t$

Given a first-stage estimate $\hat P$ of the transition probabilities, we can simulate the value function $V_i(s, \sigma; \theta)$ for any strategy profile $\sigma$ and parameter vector $\theta$.


Estimating the Value Functions

A single simulated path of play can be obtained as follows:

1. Starting at state $s_0 = s$, draw private shocks $\varepsilon_{i0}$ from $G_i(\cdot \mid s_0; \theta)$ for each firm $i$.

2. Calculate the specified action $a_{i0} = \sigma_i(s_0, \varepsilon_{i0})$ for each firm $i$, and the resulting profits $\pi_i(a_0, s_0, \varepsilon_{i0}; \theta)$

3. Draw a new state $s_1$ using the estimated transition probabilities $\hat P(\cdot \mid a_0, s_0)$

4. Repeat steps 1-3 for $T$ periods or until each firm reaches a terminal state with known payoff (e.g., exits from the market)

Averaging firm $i$'s discounted sum of profits over many paths yields an estimate $\hat V_i(s, \sigma; \theta)$, which can be obtained for any $(\sigma, \theta)$ pair, including both the true profile (which you estimated in the first stage) and any alternative you care to construct.


Special Case of Linearity

Forward simulation yields a low cost estimate of the $V$s for different $s$ given $\theta$, but the procedure must be repeated for each candidate $\theta$.

One case is simpler.

If the profit function is linear in the parameters so that

$$\pi_i(a, s, \varepsilon_i; \theta) = \Phi_i(a, s, \varepsilon_i) \cdot \theta$$

we can then write the value function as

$$V_i(s, \sigma; \theta) = E\left[ \sum_{t=0}^{\infty} \beta^t\, \Phi_i\big(\sigma(s_t, \varepsilon_t), s_t, \varepsilon_{it}\big) \,\middle|\, s_0 = s \right] \cdot \theta = W_i(s; \sigma) \cdot \theta$$

In this case, for any strategy profile $\sigma$, the forward simulation procedure only needs to be used once to construct each $W_i$.

You can then obtain $V_i$ easily for any value of $\theta$.
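The sketch below covers the linear case. The simulation accumulates the discounted basis vector once, after which $V_i(s, \sigma; \theta) = W_i(s; \sigma) \cdot \theta$ is a dot product for any θ. The callables `policy`, `basis`, and `transition` are hypothetical stand-ins for the first-stage policy σ, the known basis functions, and P̂.

```python
import random


def simulate_W(s0, policy, basis, transition, beta, T, R, seed=0):
    """Forward-simulate W_i(s0; sigma) = E[sum_{t=0}^{T} beta^t Phi_i(a_t, s_t, eps_it) | s_0 = s0].

    policy(s, rng)        -- draws shocks and returns (action profile a, shocks)
    basis(a, s, shocks)   -- returns the basis vector Phi_i(a, s, eps_i)
    transition(a, s, rng) -- draws s' from the first-stage estimate P_hat(. | a, s)
    """
    rng = random.Random(seed)
    W = None
    for _ in range(R):
        s, disc = s0, 1.0
        for _t in range(T + 1):
            a, shocks = policy(s, rng)
            phi = basis(a, s, shocks)
            if W is None:
                W = [0.0] * len(phi)
            for k, phi_k in enumerate(phi):
                W[k] += disc * phi_k / R  # running average over the R paths
            disc *= beta
            s = transition(a, s, rng)
    return W


def value(W, theta):
    """V_i(s; sigma, theta) = W_i(s; sigma) . theta, with no further simulation."""
    return sum(w * t for w, t in zip(W, theta))


# Deterministic toy: constant basis (1, 2), beta = 0.5, truncated at T = 50.
W_demo = simulate_W(0, policy=lambda s, rng: ((1,), None),
                    basis=lambda a, s, shocks: [1.0, 2.0],
                    transition=lambda a, s, rng: s, beta=0.5, T=50, R=3)
for theta in ([1.0, 0.0], [1.0, -1.0]):
    print(theta, value(W_demo, theta))  # about 2.0 and -2.0
```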


Second Stage Estimation

The first stage yields estimates of the policy functions, state transitions, and value functions.

The second stage uses the model's equilibrium conditions

$$V_i(s; \sigma_i, \sigma_{-i}; \theta) \geq V_i(s; \sigma_i', \sigma_{-i}; \theta)$$

to recover the parameters that rationalize the strategy profile observed in the data.

They show how to do so for both set and point identified models

We will focus on the point identified case here.


Second Stage Estimation

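To see how the second stage works, define

$$g(x; \theta, \alpha) = V_i(s; \sigma_i, \sigma_{-i}; \theta, \alpha) - V_i(s; \sigma_i', \sigma_{-i}; \theta, \alpha)$$

where $x \in \mathcal{X}$ indexes the equilibrium conditions (a firm $i$, a state $s$, and an alternative policy $\sigma_i'$) and $\alpha$ represents the first-stage parameter vector.

The inequality defined by $x$ is satisfied at $(\theta, \alpha)$ if $g(x; \theta, \alpha) \geq 0$

Define the function

$$Q(\theta, \alpha) = \int \big( \min\{ g(x; \theta, \alpha), 0 \} \big)^2 \, dH(x)$$

where $H$ is a distribution over the set $\mathcal{X}$ of inequalities.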


Second Stage Estimation

The true parameter vector $\theta_0$ satisfies

$$Q(\theta_0, \alpha_0) = 0 = \min_\theta Q(\theta, \alpha_0)$$

so we can estimate $\theta$ by minimizing the sample analog of $Q(\theta, \alpha_0)$

The most straightforward way to do this is to draw firms and states at random and consider alternative policies $\sigma_i'$ that are slight perturbations of the estimated policies.


Second Stage Estimation

We can then use the above forward simulation procedure to construct analogues of each of the $V_i$ terms and construct

$$Q_n(\theta, \alpha) = \frac{1}{n_I} \sum_{k=1}^{n_I} \big( \min\{ \hat g(X_k; \theta, \alpha), 0 \} \big)^2$$

How? By drawing $n_I$ different alternative policies, computing their values, finding the difference versus the optimal policy payoff, and using an MD procedure to estimate the parameters that minimize these profitable deviations.

Their estimator minimizes the objective function at $\alpha = \hat\alpha_n$:

$$\hat\theta_n = \arg\min_\theta Q_n(\theta, \hat\alpha_n)$$

See the paper for the technical details.
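With linear value functions, each sampled inequality k reduces to a difference vector of simulated W's. The sample objective is then cheap to evaluate for many θ. The sketch below assumes that the first component of each difference multiplies a coefficient normalized to one, as a variable-profit component with a fixed coefficient would. This is our assumption, needed because otherwise θ = 0 would trivially satisfy every inequality. A plain grid search stands in for the minimizer.

```python
def bbl_objective(theta, diffs):
    """Q_n(theta) = (1/n_I) * sum_k min(g_k(theta), 0)^2 with linear value functions.

    diffs[k] = W_i(s; sigma_i, sigma_-i) - W_i(s; sigma_i', sigma_-i) for the k-th
    sampled (firm, state, perturbed policy); its first entry multiplies a coefficient
    normalized to 1 and the remaining entries multiply theta.
    """
    total = 0.0
    for d in diffs:
        g = d[0] + sum(dk * tk for dk, tk in zip(d[1:], theta))
        total += min(g, 0.0) ** 2  # only profitable deviations (g < 0) are penalized
    return total / len(diffs)


def grid_argmin(f, grid):
    """Crude minimizer: the grid point with the smallest objective value."""
    return min(grid, key=f)


# Two made-up inequalities, -1 + theta >= 0 and 1 - theta >= 0, pin theta down at 1.
diffs = [[-1.0, 1.0], [1.0, -1.0]]
grid = [k / 100 for k in range(201)]
print(grid_argmin(lambda t: bbl_objective([t], diffs), grid))  # 1.0
```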


Example: Dynamic Oligopoly

Let's see how they estimate the EP model.

First, they have to choose some parameterizations.

They assume a logit demand system for the product market.

There are $M$ consumers with consumer $r$ deriving utility $U_{ri}$ from good $i$

$$U_{ri} = \gamma_0 \ln(z_i) + \gamma_1 \ln(y_r - p_i) + \epsilon_{ri}$$

where $z_i$ is the quality of firm $i$, $p_i$ is firm $i$'s price, $y_r$ is income, and $\epsilon_{ri}$ is an iid logit error

All firms have identical constant marginal costs of production

$$mc(q_i; \theta) = \mu$$
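Aggregating the logit choice probabilities over the M consumers gives expected quantities. The sketch below rests on two assumptions the slide does not state. First, all consumers share one income level y, since the parameter list treats y as a single known number. Second, the outside good has a mean utility `u_outside`, set to 0 by default. The names `gamma0` and `gamma1` are our labels for the two utility weights on log quality and log remaining income.

```python
import math


def logit_demand(z, p, y, gamma0, gamma1, n_consumers, u_outside=0.0):
    """Expected quantities q_i = M * s_i, where s_i is the logit share of good i.

    Mean utility of good i: gamma0 * ln(z_i) + gamma1 * ln(y - p_i); requires y > p_i.
    Common income y and the outside-good utility u_outside are assumptions.
    """
    u = [gamma0 * math.log(zi) + gamma1 * math.log(y - pr) for zi, pr in zip(z, p)]
    denom = math.exp(u_outside) + sum(math.exp(ui) for ui in u)
    return [n_consumers * math.exp(ui) / denom for ui in u]


# Two identical firms: each mean utility is ln 2 + ln 9 = ln 18, so q = 100 * 18 / 37.
print(logit_demand([2.0, 2.0], [1.0, 1.0], 10.0, 1.0, 1.0, 100))  # two values of about 48.65
```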


Example: Dynamic Oligopoly

Each period, rms choose investment levels I

it

+

to

increase their quality in the next period.

Firm $i$'s investment is successful with probability

$$\frac{\alpha I_{it}}{1 + \alpha I_{it}},$$

in which case quality increases by one; otherwise it doesn't change.

There is also an outside good, whose quality moves up by one with probability $\delta$ each period.

Firm $i$'s cost of investment is $C(I_i) = \eta I_i$, so there is no shock to investment costs (they are deterministic).
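The transition and cost rules on this slide translate directly into a few functions. In this sketch $\alpha$ denotes the investment-efficiency parameter in the success probability, $\delta$ the outside good's per-period probability of improving, and $\eta$ the investment cost slope; the function names are hypothetical:

```python
import random


def success_probability(investment: float, alpha: float) -> float:
    """Pr(own quality rises by one) = alpha*I / (1 + alpha*I); zero when I = 0."""
    return alpha * investment / (1.0 + alpha * investment)


def next_own_quality(z: int, investment: float, alpha: float, rng: random.Random) -> int:
    """z + 1 if the investment succeeds, otherwise z."""
    return z + 1 if rng.random() < success_probability(investment, alpha) else z


def next_outside_quality(z_out: int, delta: float, rng: random.Random) -> int:
    """The outside good's quality moves up by one with probability delta."""
    return z_out + 1 if rng.random() < delta else z_out


def investment_cost(investment: float, eta: float) -> float:
    """Deterministic, linear investment cost C(I) = eta * I (no cost shock)."""
    return eta * investment


# Five periods of a single firm investing 0.8 per period (hypothetical values).
rng = random.Random(1)
z, z_out = 3, 1
for _ in range(5):
    z = next_own_quality(z, 0.8, 1.5, rng)
    z_out = next_outside_quality(z_out, 0.3, rng)
print(z, z_out)
```

The success probability is increasing and concave in $I$, so additional investment has diminishing returns.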


Example: Dynamic Oligopoly

The scrap value $\Phi$ is constant and equal for all firms.

Each period, the potential entrant draws a private entry cost $\kappa_{et}$ from a uniform distribution on $[\kappa_L, \kappa_H]$.

The state variable $s_t = (N_t, z_{1t}, \ldots, z_{Nt}, z_{out,t})$ includes the number of incumbent firms and the current product qualities (including that of the outside good).

The model parameters are $\gamma_0$, $\gamma_1$, $\mu$, $\eta$, $\Phi$, $\kappa_L$, $\kappa_H$, $\alpha$, $\delta$, $\beta$, and $y$.

They assume that $\beta$ and $y$ are known, $\alpha$ and $\delta$ are transition parameters estimated in a first stage, and $\gamma_0$, $\gamma_1$, and $\mu$ are demand and cost parameters (also estimated in a first stage), so the main (dynamic) parameters are simply $\theta = (\eta, \Phi, \kappa_L, \kappa_H)$.

Due to the computational burden of the PM algorithm, they consider a setting in which at most three firms can be active.

They generated datasets of 100 to 400 periods using the PM algorithm.
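The uniform entry cost makes the entry decision easy to write down. This sketch assumes (an assumption about the entry rule, not spelled out on the slide) that the potential entrant enters whenever its cost draw is below the expected value of entering, written $V^e$ here, with $\kappa_L, \kappa_H$ the bounds of the entry cost distribution. Inverting the uniform CDF then recovers $V^e$ from an estimated entry probability; the names are hypothetical:

```python
def entry_probability(value_of_entry: float, kappa_low: float, kappa_high: float) -> float:
    """Pr(kappa_et <= V^e) for kappa_et ~ Uniform[kappa_low, kappa_high]."""
    if kappa_high <= kappa_low:
        raise ValueError("need kappa_low < kappa_high")
    share = (value_of_entry - kappa_low) / (kappa_high - kappa_low)
    return min(1.0, max(0.0, share))


def implied_entry_value(prob: float, kappa_low: float, kappa_high: float) -> float:
    """Invert the uniform CDF: the V^e consistent with an interior entry probability."""
    if not 0.0 < prob < 1.0:
        raise ValueError("inversion needs an interior probability")
    return kappa_low + prob * (kappa_high - kappa_low)


# With the entry cost bounds used in the simulation study (7 and 11):
print(entry_probability(9.0, 7.0, 11.0))
print(implied_entry_value(0.25, 7.0, 11.0))
```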


Example: Dynamic Oligopoly

Here are the parameters they use.


Example: Dynamic Oligopoly

The first stage requires estimating the state transitions and policy functions (as well as the demand and mc parameters).

For the state transitions, they used the observed investment levels and qualities to estimate $\alpha$ and $\delta$ by MLE.

They estimated the demand parameters by MLE as well, using quantity, price, and quality data.

They recover $\mu$ from the static mark-up formula.

They used local linear regressions with a normal kernel to estimate the investment, entry, and exit policies.
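Two of these first-stage steps can be sketched compactly. For the mark-up step, the sketch assumes single-product firms setting prices simultaneously (Bertrand) and a common income $y$, writing $\mu$ for marginal cost and $\gamma_1$ for the income coefficient. Logit shares then give $\partial s_i/\partial p_i = -\gamma_1 s_i(1-s_i)/(y-p_i)$, so $p_i - \mu = (y-p_i)/(\gamma_1(1-s_i))$. For the policy step, it shows a one-dimensional local linear regression with a normal kernel; the paper's exact specification may differ, and the names and data are hypothetical:

```python
import math
from typing import Sequence


def implied_marginal_cost(price: float, share: float, income: float, gamma1: float) -> float:
    """Back out mu from the single-product Bertrand first-order condition.

    The markup implied by the logit share derivative is (y - p) / (gamma1 * (1 - s)).
    """
    return price - (income - price) / (gamma1 * (1.0 - share))


def local_linear(x0: float, xs: Sequence[float], ys: Sequence[float], bandwidth: float) -> float:
    """Local linear regression estimate of E[y | x = x0] with a normal kernel."""
    s0 = s1 = s2 = t0 = t1 = 0.0
    for x, y in zip(xs, ys):
        d = x - x0
        w = math.exp(-0.5 * (d / bandwidth) ** 2)  # Gaussian kernel weight
        s0 += w
        s1 += w * d
        s2 += w * d * d
        t0 += w * y
        t1 += w * d * y
    # Intercept of the weighted least-squares fit of y on (1, x - x0).
    return (s2 * t0 - s1 * t1) / (s0 * s2 - s1 * s1)


# Hypothetical data: observed investment at each own-quality level.
qualities = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
investment = [0.9, 0.8, 0.6, 0.5, 0.3, 0.2]
print(local_linear(3.5, qualities, investment, bandwidth=1.0))
print(implied_marginal_cost(price=4.0, share=0.25, income=10.0, gamma1=4.0))
```

Unlike a local constant (Nadaraya-Watson) fit, the local linear fit reproduces linear policies exactly and has less bias near the edges of the state space.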


Example: Dynamic Oligopoly

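Given strategy profile $\sigma = (I, \chi, \chi^e)$, the incumbent value function is

$$\begin{aligned} V_i(s; \sigma) &= W_1(s; \sigma) - \eta\, W_2(s; \sigma) + \Phi\, W_3(s; \sigma) \\ &= E\Big[\sum_{t=0}^{\infty} \beta^t \pi_i(s_t) \,\Big|\, s_0 = s\Big] - \eta\, E\Big[\sum_{t=0}^{\infty} \beta^t I_i(s_t) \,\Big|\, s_0 = s\Big] + \Phi\, E\Big[\sum_{t=0}^{\infty} \beta^t \chi_i(s_t) \,\Big|\, s_0 = s\Big] \end{aligned}$$

In the first term, $\pi_i(s_t)$ is the static profit of incumbent $i$ given state $s_t$.

The second term is the expected PV of investment.

The third term is the expected PV of the scrap value earned upon exit.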
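Because the investment cost slope $\eta$ and the scrap value $\Phi$ enter linearly (with discount factor $\beta$), the $W$ terms can be computed once per simulated path and reused for every candidate parameter value. A minimal sketch for one realized path, assuming its per-period profits, investments, and exit indicators are already simulated (the helper names are hypothetical):

```python
from typing import Sequence, Tuple


def discounted_sum(values: Sequence[float], beta: float) -> float:
    """sum_t beta^t * values[t]."""
    return sum(beta ** t * v for t, v in enumerate(values))


def w_terms(profits: Sequence[float], investments: Sequence[float],
            exits: Sequence[int], beta: float) -> Tuple[float, float, float]:
    """(W1, W2, W3) along one path: PV of profits, of investment, and of exit indicators."""
    return (discounted_sum(profits, beta),
            discounted_sum(investments, beta),
            discounted_sum(exits, beta))


def incumbent_value(w: Tuple[float, float, float], eta: float, phi: float) -> float:
    """V = W1 - eta * W2 + phi * W3: linear in the dynamic parameters (eta, phi)."""
    w1, w2, w3 = w
    return w1 - eta * w2 + phi * w3


# A three-period path: the firm earns and invests twice, then exits in period 2.
w = w_terms([4.0, 4.0, 0.0], [1.0, 1.0, 0.0], [0, 0, 1], beta=0.5)
print(w, incumbent_value(w, eta=1.0, phi=6.0))
```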


Example: Dynamic Oligopoly

To apply the MD estimator, they constructed alternative investment and exit policies by drawing a mean-zero normal error and adding it to the estimated first-stage investment and exit policies.

They used $n_s = 2000$ simulation paths, each of length at most 80, to compute the PV terms $W_1$, $W_2$, $W_3$ for these alternative policies.

They can then estimate $\eta$ and $\Phi$ using their MD procedure.

It is also straightforward to estimate the entry cost distribution (parametrically or non-parametrically); see the paper for details.
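The forward simulation step can be sketched for a toy single firm whose rivals are folded into a hypothetical profit function of its own quality. The timing (exit decided at the start of each period, then profit, investment, and the quality draw with success probability $\alpha I/(1+\alpha I)$) is an assumption of this sketch, as are all names; the defaults of 2000 paths of at most 80 periods mirror the numbers above:

```python
import random
from typing import Callable, Tuple


def simulate_w(z0: int, invest: Callable[[int], float], exit_prob: Callable[[int], float],
               profit: Callable[[int], float], alpha: float, beta: float,
               n_paths: int = 2000, max_len: int = 80, seed: int = 0) -> Tuple[float, ...]:
    """Average (W1, W2, W3) over n_paths forward-simulated paths of at most max_len periods."""
    rng = random.Random(seed)
    totals = [0.0, 0.0, 0.0]
    for _ in range(n_paths):
        z = z0
        for t in range(max_len):
            disc = beta ** t
            if rng.random() < exit_prob(z):
                totals[2] += disc  # exit: the scrap value is collected once, path ends
                break
            totals[0] += disc * profit(z)
            inv = invest(z)
            totals[1] += disc * inv
            if rng.random() < alpha * inv / (1.0 + alpha * inv):
                z += 1
    return tuple(total / n_paths for total in totals)


def profit(z: int) -> float:
    """Hypothetical static profit as a function of own quality."""
    return float(z)


# Estimated vs. perturbed investment policy, using the same seed (common random numbers).
base = simulate_w(2, lambda z: 0.5, lambda z: 0.05, profit, alpha=1.0, beta=0.9)
alt = simulate_w(2, lambda z: 0.7, lambda z: 0.05, profit, alpha=1.0, beta=0.9)
delta_w = [b - a for b, a in zip(base, alt)]
print(base, alt, delta_w)
```

The differences `delta_w` give the inequality $g = \Delta W_1 - \eta\,\Delta W_2 + \Phi\,\Delta W_3 \ge 0$ for this alternative; reusing the seed across policies keeps simulation noise from swamping these differences.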


Example: Dynamic Oligopoly

Truth: $\eta = 1$ and $\Phi = 6$

For small sample sizes, there is a slight bias in the estimates

of the exit value.

Investment cost parameters are spot on.


Example: Dynamic Oligopoly

Truth: $\eta = 1$, $\Phi = 6$, $\kappa_L = 7$, and $\kappa_H = 11$

The subsampled standard errors are on average slightly

smaller than the true SEs.

This is likely due to small sample sizes.


Example: Dynamic Oligopoly

The entry cost distribution is recovered quite well, despite

small sample size (and few entry events).


Conclusions

Both AM & BBL are based on the same underlying idea (CCP

estimation)

As such, it's quite possible to mix and match from the two approaches,

e.g., forward-simulate the CV terms and use an MNL likelihood.

We have found AM-style approaches easier to implement, but that might be idiosyncratic.

Applications in marketing are growing: Goettler and Gordon (2012), Ellickson, Misra, Nair (2012), Misra and Nair (2011), Chung et al. (2012) ...

If you are interested in applying this stuff, you should read everything you can get your hands on!
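One way to read the mix-and-match suggestion: simulate the choice-specific values BBL-style, then plug them into a multinomial logit likelihood as in AM-style estimation. A minimal sketch of the likelihood step, assuming iid type-I extreme value shocks (which give the logit form) and that the simulated values already reflect the candidate parameters; the function name is hypothetical:

```python
import math
from typing import Sequence


def mnl_log_likelihood(values: Sequence[Sequence[float]], choices: Sequence[int]) -> float:
    """Sum over observations of log P(chosen action) with logit choice probabilities.

    values[n][a] is the (forward-simulated) choice-specific value of action a
    for observation n; choices[n] is the index of the action actually taken.
    """
    total = 0.0
    for v, chosen in zip(values, choices):
        top = max(v)
        log_denominator = top + math.log(sum(math.exp(x - top) for x in v))  # log-sum-exp
        total += v[chosen] - log_denominator
    return total


print(mnl_log_likelihood([[1.0, 0.0, -0.5], [0.2, 0.4, 0.0]], [0, 1]))
```

Maximizing this over the parameters that enter the simulated values replaces the MD step with a likelihood-based one.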
