A comparison between NNF and BJF - Al-shawadfi

A comparison between Neural Network

and Box-Jenkins Forecasting Techniques

With Application to Real Data

Dr. Gamal A. Al-Shawadfi

Statistics Department

Faculty of Commerce Al-Azhar University

Cairo Egypt

E-mail: Dr_Gamal1@yahoo.com

ABSTRACT

This paper has two objectives. First, we present an artificial neural network method for forecasting linear and nonlinear time series. Second, we compare the proposed method with the well-known Box-Jenkins method through a simulation study. To achieve these objectives, 16000 samples, generated from different ARMA models, were used for network training. The system was then tested on generated data. The accuracy of the neural network forecasts (NNF) is compared with that of the corresponding Box-Jenkins forecasts (BJF) using three measures: the mean square error (MSE), the mean absolute deviation of the error (MAD) and the ratio of closeness to the true values (MPE). Two macro computer programs were written with the MATLAB package: the first for NN training, testing and comparison with the Box-Jenkins method, and the second for automatically calculating the proposed neural network forecasts. Using the measures mentioned above, the artificial neural networks were found to deliver better forecasts than the Box-Jenkins technique.

Key Words

Neural network forecasts (NNF), Network architecture, Neuro-computing, Matlab package toolboxes, Artificial Intelligence, Network training and Learning, Box-Jenkins Forecasts (BJF).

[1] INTRODUCTION and Literature Review

Neural network technology is a useful forecasting tool for market researchers, financial analysts and business consultants. Neural networks can be used to answer questions such as:

What will sales be next year?

Which way will the stock market turn tomorrow?

Which of my customers are most likely to respond to a direct mailing?

How can I pinpoint the fastest growing cities to select the most profitable locations for my new stores?

These types of questions are difficult to answer with traditional analytical tools.

The major difficulty with the analysis of time series data generated from ARMA models is that the likelihood function is analytically intractable, because there is no closed form for the precision matrix or for the determinant of the variance-covariance matrix directly in terms of the parameters. Furthermore, with any prior distribution the posterior distributions are not standard, which means that inferences about the parameters must be made numerically. Thus, as the sample size increases, computation of the likelihood function becomes increasingly laborious. To solve these problems the statistician uses approximation and/or numerical techniques. Choi (1992, p. 31) stated that the Box-Jenkins method is not very useful for identifying mixed ARMA models because, even if the ACF and PACF are error free, simple inspection of their graphs would not, in general, yield unique values for the number of parameters. The difficulty is compounded when estimates are substituted for the true functions.

Neural networks are flexible tools in a dynamic environment. They have the capacity to learn rapidly and change quickly. The goal of the neural network approach is to improve the timeliness of the forecasts without losing accuracy. Neural network models can be easily modified and retrained for different phenomena, whereas classical models require a great deal of expert knowledge in their construction and are therefore very problem specific.

Because neural networks are non-parametric, data-driven models rather than parametric ones, they can give good results even when no good underlying theory or model of the observed phenomena is available.

There are many books and articles covering theoretical aspects, methodological advances, practical applications and computer software in domains relating to statistical and computational time series analysis. Box and Jenkins (1976) present the most popular classical procedure for analyzing ARMA models. The procedure consists of four phases: identification, estimation, diagnostic checking and forecasting. The identification phase determines the initial number of autoregressive (AR) and moving average (MA) parameters using the autocorrelation and partial autocorrelation functions. The estimation phase is based on maximum likelihood or nonlinear least squares estimates. The diagnostic checking phase examines the residuals to assess the adequacy of the identified model. The last phase is the forecasting of future observations using their conditional expectations. The Box-Jenkins procedure has also been explained by Pankratz (1983), Harvey (1990), and others.

The time series analysis of some related ARMA models has been discussed in Dijk et al. (1999) and Lutkepohl (1993). The properties of ARIMA models are also discussed by Dunsmuir and Spencer (1991), Souza and Neto (1996), Makridakis, S. and H. Michele (1997) and McLeod (1999).

Although there have been many papers on classical time series analysis in the past three decades, there are few papers on time series analysis using neural networks. Swanson (1995) takes a model selection approach to the question of whether a class of adaptive prediction models, which is a branch of artificial neural networks, is useful for predicting future values of 9 macroeconomic variables. He used a variety of out-of-sample forecast-based model selection criteria, including forecast error measures and forecast direction accuracy, to compare his predictions with professionally available survey predictions. For some details about using neural networks in statistical analysis, see Arminger and Enache (1995), Allende et al. (1999), Cheng et al. (1997), Refenes et al. (1994) and Zhang (2001). Comprehensive discussions and details of artificial neural networks are found in some books and articles; see, for instance, Elman (1993), Hertz et al. (1991) and Tsoi and Tan (1997).

Several well-known computer packages are widely available and can be utilized to relieve the user of the computational problem, all of which can be used to solve both linear and polynomial equations: SPSS (Statistical Package for the Social Sciences), developed by the University of Chicago; SAS (Statistical Analysis System); and the BMDP packages (Biomedical Computer Programs). Another available package is IMSL, the International Mathematical and Statistical Libraries, which contains a great variety of standard mathematical and statistical calculations. There are also many packages which include time series analysis, among them MINITAB, CSS, SYSTAT, SIGMASTAT and VIEWSTAT. All of these software packages use matrix algebra to solve simultaneous equations. A program called Webstat is now available on the World Wide Web at the www.webstat.com site. This program may be used for doing statistical analysis through the internet. For more details about time series and neural network software, see Aghadazeh and Romal (1992), Morgan (1998), Oster (1998), Rahlf (1994), Rycroft (1993), Sahay (1996), Small (1997), Sparks (1997), Tashman and Leach (1991) and West et al. (1998).

The first objective of this research is to use the Artificial Neural Network (ANN) to forecast data from an appropriate ARMA model given a sample of data. The proposed approach will be applied to a very wide class of data samples representing different ARMA models. To achieve this objective, a set of data generated from suitable autoregressive moving average (ARMA) models will be used to train the network; the system will then be tested on both generated and real-world data. The accuracy of the forecasts is measured by their closeness to the actual values through suitable distance functions. The second objective is to compare the forecasts of the proposed approach with Box-Jenkins forecasts, to show whether or not the proposed approach performs well in forecasting ARMA models.

Matlab software packages will be used to analyze the data, and a macro computer program will be designed using Matlab to produce time series forecasts automatically. Also, the proposed approach will be demonstrated alongside the Box-Jenkins forecasting methodology through some applied examples. The paper also demonstrates the applicability of the proposed ANN forecasting approach to real data.

This paper is organized as follows. A brief overview of the fundamentals of ANN and Box-Jenkins techniques is presented in section [2]. Next, the time series NN model and the proposed network architecture are introduced in section [3]. The proposed time series network is trained in section [4]. In section [5], we carry out a simulation study to test and compare the accuracy of the forecasts of the proposed network and the Box-Jenkins technique; some examples of forecasting real time series data are also given. Finally, a summary and conclusions are given in section [6].

[2] NEURAL NETWORKS and Box-Jenkins

[2-1] NEURAL NETWORKS

Neural networks are a type of artificial intelligence technology that mimics the human brain's powerful ability to recognize patterns. A neural network consists of processing units, or neurons, and connections that are organized in layers: the input, hidden and output layers. Each neuron receives a number of inputs and produces an output, which is either the input to another neuron or returned to the user as network output. The connections between the different neurons are associated with weights that reflect the strength of the relationships between the connected neurons. Each node in the hidden layer is fully connected to the inputs, which means that what is learned in a hidden node is based on all the inputs taken together. The hidden layer is where the network learns interdependencies in the model.

There are four main transfer functions: sigmoidal, linear, radial basis and cosine. The most widely used is the sigmoid function. Sigmoidal transfer functions are easy to differentiate, which eases the computation of the gradient. Linear transfer functions are mostly used in the output neurons of networks in some time series applications. In most cases the transfer function is applied to a linear combination of the inputs; units of this kind are called perceptrons. Feedforward networks consist only of nodes that feed forward to the output. The structures of such networks differ only in the number of inputs, their temporal order, the type of synaptic connections between nodes and the number of hidden layers.

Because nonlinear transfer functions can be used in the hidden layer and in the output layer, very complex nonlinear approximation functions can be built from simple components. The complexity of the network then depends on the number of layers as well as on the number of units in the hidden layer (hidden units). The nonlinear transformation and the hidden units create a flexible approximation function with a complex parameterization.

Feedforward networks are much easier to construct and train than their recurrent counterparts. However, recurrent networks are much better temporal processors than feedforward networks, and they are structurally an order of magnitude smaller. Some details of artificial neural networks are found in Elman (1993), Hertz et al. (1991) and Tsoi and Tan (1997).

[2-2] Box-Jenkins Forecasting Method

Box-Jenkins forecasting models are based on statistical concepts and principles and are able to model a wide spectrum of time series behavior. The methodology offers a large class of models to choose from and a systematic approach for identifying the correct model form. There are both statistical tests for verifying model validity and statistical measures of forecast uncertainty. In contrast, traditional forecasting models offer a limited number of models relative to the complex behavior of many time series, with little in the way of guidelines and statistical tests for verifying the validity of the selected model.

The univariate version of this methodology is a self-projecting time series forecasting method. The underlying goal is to find an appropriate formula so that the residuals are as small as possible and exhibit no pattern. The model-building process involves four steps, repeated as necessary, to end up with a specific formula that replicates the patterns in the series as closely as possible and also produces accurate forecasts. The models estimated by this procedure are:

$$y_t = \phi_1 y_{t-1} + \dots + \phi_p y_{t-p} + \theta_1 \varepsilon_{t-1} + \dots + \theta_q \varepsilon_{t-q} + \varepsilon_t \tag{2-1}$$

where $y_t$ is the observation at time t, $\varepsilon_t$ is a random shock, and the $\phi_i$'s and $\theta_j$'s are parameters. More compactly:

$$\phi(L) \nabla^d y_t = \theta(L) \varepsilon_t \tag{2-2}$$

where $\phi(L)$ and $\theta(L)$ are polynomials in the lag operator, $\nabla^d y_t = (1 - L)^d y_t$, d = 0, 1, 2, ..., and L is the lag operator, $L y_t = y_{t-1}$. We may also specify no differencing (d = 0), which will be the case most of the time. If we leave out $\phi(L)$ as well (p = 0), a pure moving average process results. Note that this estimator requires that q, the order of the moving average part, be greater than 0. If q = 0, we have a pure autoregressive process and can simply estimate the equation by the least squares method. As Box and Jenkins (1976, p. 11) mentioned, in most practical time series the orders p, d and q do not exceed 2.
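To make the model in equation (2-1) concrete, the following is a minimal sketch of how a sample could be simulated from it with d = 0. It is not the author's MATLAB program; the function name `simulate_arma`, the Gaussian shocks, the zero start-up values and the burn-in length are all assumptions made for illustration.

```python
import random


def simulate_arma(phi, theta, n, sigma=1.0, burn_in=200, seed=None):
    """Simulate n observations of
    y_t = sum_i phi[i-1]*y_{t-i} + sum_j theta[j-1]*e_{t-j} + e_t,
    using the plus-sign convention for the MA terms of equation (2-1).
    Gaussian shocks and the burn-in length are illustrative assumptions.
    """
    rng = random.Random(seed)
    p, q = len(phi), len(theta)
    y = [0.0] * p  # zero start-up values for the AR part
    e = [0.0] * q  # zero start-up shocks for the MA part
    for _ in range(n + burn_in):
        shock = rng.gauss(0.0, sigma)
        ar_part = sum(phi[i] * y[-1 - i] for i in range(p))
        ma_part = sum(theta[j] * e[-1 - j] for j in range(q))
        y.append(ar_part + ma_part + shock)
        e.append(shock)
    return y[-n:]  # discard start-up values and burn-in


# One ARMA(1,1) sample of 50 observations with hypothetical parameters.
series = simulate_arma(phi=[0.5], theta=[0.3], n=50, seed=1)
```

The burn-in is there so that the arbitrary zero start-up values have little influence on the returned observations; the parameters should lie in the stationarity and invertibility region for this to be meaningful.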

It should be noted that the misuse, misunderstanding and inaccuracy of forecasts are often a result of not appreciating the nature of the data in hand. The consistency of the data must be ensured, and it must be clear what the data represent and how they were gathered or calculated. As a rule of thumb, Box-Jenkins requires at least 40 or 50 equally spaced periods of data; see Pankratz (1983, p. 297). The data must also be edited to deal with extreme or missing values or other distortions, through the use of functions such as the logarithm or differencing to achieve stabilization.

[3] The neural network model and the time

series network architecture

A class of artificial neural networks (ANN) may be used in forecasting time series data. It may be used to approximate an unknown expectation function of the future observation $y_{n+1}$ given the past values $y_n, y_{n-1}, \dots$. Thus the weights of these ANN can be viewed as parameters, which can be estimated through network training. The model is then used for forecasting. The accuracy of the forecasts is evaluated by suitable functions. Depending on the chosen evaluation functions, the suitable forecasting model will be discussed and some significance tests are described.

Artificial neural networks (ANN) for prediction can be written as a nonlinear regression model whose input variables are past values of the series, the y's, or a transformation of them, the x's, where

$$X = (x_t, x_{t-1}, \dots, x_{t-p})$$

and whose output is

$$Z = (z_{t+1}, z_{t+2}, \dots, z_{t+h})$$

These models may be used to approximate unknown deterministic relations

$$Z = v(X) \tag{3-1}$$

or stochastic relations

$$Z = v(X) + \varepsilon \tag{3-2}$$

with $E(\varepsilon \mid X) = 0$. The function $v(X) = E(Z \mid X)$ is usually unknown and is to be approximated as closely as possible by a function g(x,w), where w is a set of free parameters, called weights, which is an element of a parameter space and is to be estimated from a training set (sample). The parameter space and the parameter array both depend on the choice of the approximation function g(x,w). Since no a priori assumptions are made regarding the functional form of v(x), the neural model g(x,w) is a non-parametric estimator of the conditional expectation $E(Z \mid X)$, as opposed to a parametric estimator, where the functional form is assumed a priori, as for example in the ARMA model.

An observation $y_{t+1}$ generated by the ARMA model in equation (2-2) may be expressed as an infinite weighted sum of previous observations plus an added random shock, i.e.:

$$y_{t+1} = \sum_{j=1}^{\infty} \pi_j y_{t+1-j} + \varepsilon_{t+1} \tag{3-4}$$

where

$$\sum_{j=1}^{\infty} \pi_j = 1 \tag{3-5}$$

and the weights may be obtained from:

$$\phi(B) = (1 - \pi_1 B - \pi_2 B^2 - \dots)\, \theta(B) \tag{3-6}$$

Here B is the lag operator, written L in equation (2-2). The ARMA model in equation (2-2) may therefore be seen as a special case of the proposed neural network (3-2):

$$v(x) = \sum_{j=1}^{\infty} \pi_j y_{t+1-j} \tag{3-7}$$
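As a worked illustration of equation (3-6), the sketch below computes the first few weights by matching coefficients of equal powers of B. It assumes $\phi(B) = 1 - \phi_1 B - \dots - \phi_p B^p$ and, following the plus signs of equation (2-1), $\theta(B) = 1 + \theta_1 B + \dots + \theta_q B^q$; under those assumptions the recursion is $\pi_j = \phi_j + \theta_j - \sum_{k=1}^{j-1} \pi_k \theta_{j-k}$. The function name `pi_weights` and the example parameters are hypothetical.

```python
def pi_weights(phi, theta, n_weights):
    """First n_weights coefficients pi_1, pi_2, ... defined by
    phi(B) = (1 - pi_1*B - pi_2*B^2 - ...) * theta(B),
    assuming phi(B) = 1 - phi_1*B - ... and theta(B) = 1 + theta_1*B + ...
    (the sign convention of equation (2-1)).
    """
    pi = []
    for j in range(1, n_weights + 1):
        phi_j = phi[j - 1] if j <= len(phi) else 0.0
        theta_j = theta[j - 1] if j <= len(theta) else 0.0
        # Cross terms pi_k * theta_{j-k} for k = 1 .. j-1 (theta_m = 0 for m > q).
        cross = sum(pi[k - 1] * theta[j - k - 1]
                    for k in range(1, j) if j - k <= len(theta))
        pi.append(phi_j + theta_j - cross)
    return pi


# ARMA(1,1) with hypothetical parameters phi_1 = 0.5 and theta_1 = 0.3.
weights = pi_weights([0.5], [0.3], 3)
```

For a pure autoregressive model the recursion simply returns the AR coefficients followed by zeros, which is a quick sanity check on the sign convention.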

In general the proposed approximating NN model may have the form:

$$Z_{t+1} = g(x,w) + \varepsilon_{t+1} \tag{3-8}$$

In predicting Z with the approximation function (3-8), two types of errors can occur. The first, $\varepsilon$, is the stochastic error; the second, $\{v(x) - g(x,w)\}$, is the approximation error. The second error is equivalent to the identification error in misspecified nonlinear time series models. The error distribution need not be specified, in contrast to many statistical models. The parametric identification of the approximation function g(x,w) is called the network architecture and is a composition of linear or nonlinear functions. It can usually be visualized by graphs in which sub-functions are represented by circles and the transfer of results from one sub-function to another by arrows.

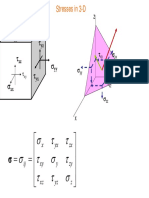

Figure [3-1], from Lutkepohl (1993), shows the graphical representation of a linear or nonlinear autoregressive time series model as a network model. Its architecture consists of one hidden layer which combines, linearly or nonlinearly, the input series $x_t, x_{t-1}, \dots, x_{t-p}$ with the parameter vector (or weights) w in the transfer function and returns the result as input to another transfer function, which yields the future forecasts, the z's.

FIGURE [3 - 1]

THE Neural Network structure

In figure [3-1], the bottom layer represents the input layer (the time series or a transformation of it). In this case, there are 5 inputs labeled $X_1$ through $X_5$. In the middle is the hidden layer, with a variable number of nodes (m). It is the hidden layer that performs much of the work of the network. The output layer in this case has two nodes, $Z_1$ and $Z_2$, representing the output values we are trying to determine from the inputs. For example, we may be trying to predict future sales (output) based on past sales (input).

[4] Network Training

To train the network, a backpropagation algorithm with momentum was used, which is an enhancement of the basic backpropagation algorithm. The backpropagation network (BPN) is a supervised learning network and its output value is continuous. The network learns by comparing the actual network output with the target; it then updates the weights by computing the first derivatives of the objective function, and uses momentum to help escape from local minima. For the training process, 16000 samples were generated from ARMA(p,q) models, each of a suitable size (50 observations) for comparison purposes. 2000 samples were generated from each of the following models: ARMA(1,0), ARMA(2,0), ARMA(0,1), ARMA(0,2), ARMA(1,1), ARMA(1,2), ARMA(2,1) and ARMA(2,2), with 32 different sets of parameters. For comparison with the Box-Jenkins method, the selected parameters are distributed through the region of stationarity and invertibility of each model. For example, the invertibility interval for the MA(1) model is $-1 < \theta < 1$, and the behavior of the absolute ACF and PACF is symmetric for positive and negative $\theta$. The four selected values of the model parameter are 0.3, 0.5, 0.7 and 0.9, and so on.

The results in this research were computed by separating each data set into two subsets. The first n-3 observations, corresponding to times 1 to n-3 and called the training set, were used to fit the model; the last 3 observations, corresponding to times n-2 to n and called the test set, were used to evaluate the forecasts. The data used to fit the model are also used for training the neural network. These data were rescaled to the interval [0, 1]. The NN used for modeling the data and for forecasting is a feedforward network with one hidden layer and a bias in the hidden and output layers. A sigmoidal transfer function is used to transfer the data from the input to the hidden layer, and from the hidden to the output layer. The sigmoidal function is:

$$y = \frac{1}{1 + \exp\left[-\left(\sum_{j=1}^{n} w_{ij} x_k + b_j\right)\right]} \tag{4-1}$$
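The forward pass of such a network can be sketched as follows. This is an illustration only, not the author's MATLAB code: it assumes the 47-40-3 layout reported for the networks in this section, a logistic sigmoid at both layers, and small random initial weights with zero biases, which are hypothetical choices. The helper names `layer`, `forward` and `random_matrix` are likewise made up for the example.

```python
import math
import random


def sigmoid(a):
    """Logistic sigmoid 1 / (1 + exp(-a))."""
    return 1.0 / (1.0 + math.exp(-a))


def layer(inputs, weights, biases):
    """One fully connected layer: sigmoid(weighted sum of inputs + bias) per unit."""
    return [sigmoid(sum(w * x for w, x in zip(row, inputs)) + b)
            for row, b in zip(weights, biases)]


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Input -> hidden -> output, with a sigmoid at both layers."""
    hidden = layer(x, w_hidden, b_hidden)
    return layer(hidden, w_out, b_out)


def random_matrix(rows, cols, rng):
    """Small random starting weights (an assumption, not the paper's values)."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
n_in, n_hidden, n_out = 47, 40, 3
w_hidden = random_matrix(n_hidden, n_in, rng)
b_hidden = [0.0] * n_hidden
w_out = random_matrix(n_out, n_hidden, rng)
b_out = [0.0] * n_out

x = [0.5] * n_in  # placeholder for 47 normalized observations
forecast = forward(x, w_hidden, b_hidden, w_out, b_out)  # 3 values in (0, 1)
```

Because the output layer is also sigmoidal, every forecast lies strictly between 0 and 1 and has to be mapped back to the original scale of the series afterwards.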

Sigmoid transfer functions are easy to differentiate, which eases the computation of the gradient. Because of the use of sigmoid functions in the ANN model, the time series data must be normalized onto the range [0,1] before applying the ANN methodology. In this research, the following equation is used to normalize the NN inputs:

$$x_i = 0.8 \left( \frac{y_{max} - y_i}{y_{max} - y_{min}} \right) + 0.1 \tag{4-2}$$

where $y_{max}$ and $y_{min}$ are the respective maximum and minimum values of all observations. In the case of non-stationary data one may make some modifications to the series. The data are then filtered through equation (4-2) and used as input neurons, so that the inputs lie in the range (0.1, 0.9). All the backpropagation networks used had 47 input neurons representing the data values, 40 hidden neurons and 3 output neurons. The output can only refer to predicted observations from the ARMA(p,q) model with p, q = 0, 1, 2.
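The rescaling of equation (4-2) can be sketched in a few lines. The sketch takes the formula as $x_i = 0.8\,(y_{max} - y_i)/(y_{max} - y_{min}) + 0.1$, which sends the largest observation to 0.1 and the smallest to 0.9. The inverse mapping `denormalize` is not given in the paper and is added here only as an assumption, since forecasts produced on the normalized scale have to be converted back somehow.

```python
def normalize(series):
    """Rescale a series into [0.1, 0.9] using
    x_i = 0.8 * (y_max - y_i) / (y_max - y_min) + 0.1  (equation (4-2))."""
    y_max, y_min = max(series), min(series)
    span = y_max - y_min
    if span == 0:
        raise ValueError("a constant series cannot be rescaled")
    return [0.8 * (y_max - y) / span + 0.1 for y in series]


def denormalize(x, y_max, y_min):
    """Inverse of the mapping above (an assumed helper, not from the paper)."""
    return y_max - (x - 0.1) * (y_max - y_min) / 0.8


scaled = normalize([2.0, 4.0, 6.0])       # largest value maps to 0.1, smallest to 0.9
restored = denormalize(scaled[1], 6.0, 2.0)  # recovers the middle observation
```

Keeping the inputs away from 0 and 1 avoids the flat tails of the sigmoid, where gradients become very small.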

The weights (parameters) to be used in the NN model are estimated from the data by minimizing the mean squared error. As is well known, all forecasting methods have either an implicit or explicit error structure, where error is defined as the difference between the model prediction and the "true" value. Additionally, many data snooping methodologies within the field of statistics need to be applied to data supplied to a forecasting model. Also, diagnostic checking, as defined within the field of statistics, is required for any model which uses data. With any forecasting method one must use a performance measure to assess the quality of the method. With respect to the proposed NN forecasting technique, our aim is to minimize the following function:

$$MSE(t) = \frac{1}{n} \sum_{t} \left[ y_t - y_t^{(NNF)} \right]^2 \tag{4-3}$$

where MSE(t) is the mean of the squared differences between the observed ($y_t$) and NN-predicted ($y_t^{(NNF)}$) observations. To identify an ANN forecasting model, values for the network weights ($w_1, w_2, \dots$) must be estimated so that the prediction error is minimized. The convergence condition in this research is that the sum of squares of the errors is less than 0.01 or the number of epochs reaches 2000. Figure (4-1) shows curves of the mean square error and the learning rate, respectively.
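The interplay of the momentum update and the stopping rule (sum of squared errors below 0.01, or 2000 epochs) can be illustrated on a deliberately tiny problem. The sketch below trains a single sigmoid unit rather than the full network, uses a fixed learning rate instead of the varying rate mentioned for the training run, and picks the learning rate, momentum coefficient and data arbitrarily; all of these are assumptions for illustration, and `train_unit` is a hypothetical name.

```python
import math


def sigmoid(a):
    return 1.0 / (1.0 + math.exp(-a))


def train_unit(samples, lr=1.0, momentum=0.5, sse_goal=0.01, max_epochs=2000):
    """Fit out = sigmoid(w*x + b) by batch gradient descent with momentum.
    Stops when the sum of squared errors drops below sse_goal or after
    max_epochs epochs. Returns (w, b, last computed SSE, epochs used).
    """
    w, b = 0.0, 0.0
    dw_prev, db_prev = 0.0, 0.0
    sse = float("inf")
    for epoch in range(1, max_epochs + 1):
        grad_w = grad_b = sse = 0.0
        for x, target in samples:
            out = sigmoid(w * x + b)
            err = out - target
            sse += err * err
            delta = 2.0 * err * out * (1.0 - out)  # derivative of err^2 w.r.t. net input
            grad_w += delta * x
            grad_b += delta
        if sse < sse_goal:
            return w, b, sse, epoch
        # Momentum: each step adds a fraction of the previous step.
        dw = -lr * grad_w + momentum * dw_prev
        db = -lr * grad_b + momentum * db_prev
        w, b = w + dw, b + db
        dw_prev, db_prev = dw, db
    return w, b, sse, max_epochs


# Two hypothetical (input, target) pairs on the normalized scale.
w, b, final_sse, epochs_used = train_unit([(0.0, 0.1), (1.0, 0.9)])
```

In a full network the same update would be applied to every weight and bias, with the gradients obtained by backpropagating the output errors through the hidden layer.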

FIGURE(4-1)

The MSE and the learning rate of the network training for the

proposed forecasting approach

[Figure (4-1): two panels plotted against Epoch (0 to 2000). First panel, titled "Training for 2000 Epochs": Sum-Squared Error on a logarithmic axis from 10^-4 to 10^2. Second panel: Learning Rate on an axis from 0 to 15.]

Training the network took about 15 hours using the MATLAB package on a PC.

[5] Network test and comparing with Box-Jenkins

method

[5-1] Generated data

To compare the proposed neural network with the Box-Jenkins forecasting technique, 500 samples of data are generated from each of 32 different ARMA(p,q) models with p, q = 0, 1, 2 and used for the comparison. The accuracy of the forecasts is evaluated using three residual statistics: the mean square error (MSE), the mean absolute deviation (MAD) and the MPE, where the MPE is defined as:

$$MPE = \frac{n_1}{n_2} \cdot 100 \tag{5-1}$$

where $n_1$ is the number of cases for which $|y_{NNF} - y|$ is less than $|y_{BJF} - y|$, $n_2$ is the number of cases for which $|y_{BJF} - y|$ is less than $|y_{NNF} - y|$, $y_{BJF}$ is the Box-Jenkins forecast and $y_{NNF}$ is the neural network forecast.
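The three measures can be written down directly. The following is a minimal sketch with made-up numbers; the function names and the example values are hypothetical, and ties (cases where both forecasts are equally close) are simply left out of both counts, which is an assumption since the definition above does not mention them.

```python
def mse(actual, forecast):
    """Mean square error."""
    return sum((a - f) ** 2 for a, f in zip(actual, forecast)) / len(actual)


def mad(actual, forecast):
    """Mean absolute deviation of the forecast errors."""
    return sum(abs(a - f) for a, f in zip(actual, forecast)) / len(actual)


def mpe(actual, nn_forecast, bj_forecast):
    """MPE = 100 * n1 / n2 as in equation (5-1): n1 counts cases where the NN
    forecast is strictly closer to the true value, n2 cases where the
    Box-Jenkins forecast is strictly closer. Ties count toward neither."""
    n1 = n2 = 0
    for a, nn, bj in zip(actual, nn_forecast, bj_forecast):
        if abs(nn - a) < abs(bj - a):
            n1 += 1
        elif abs(bj - a) < abs(nn - a):
            n2 += 1
    if n2 == 0:
        raise ValueError("MPE is undefined when Box-Jenkins is never closer")
    return 100.0 * n1 / n2


# Made-up values for three held-out observations.
actual = [1.0, 2.0, 3.0]
nn = [1.5, 2.0, 2.0]
bj = [2.0, 3.0, 3.5]
ratio = mpe(actual, nn, bj)  # NN closer twice, BJ closer once
```

A value above 100 means the NN forecast was the closer one more often than the Box-Jenkins forecast; a value below 100 means the opposite.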

Computer-generated data comprising 16000 samples are used in the comparison. The data are generated from different ARMA(p,q) models, p, q = 0, 1, 2, with different parameters. Every model is used to generate 500 samples of data, which are then used to forecast future values with both the NN and BJ methods. The MSE, MAD and MPE measures are calculated and used in the comparison. The results are summarized in tables (5-1) and (5-2). Rows 2 and 3 of table (5-1) clearly show that the ANN model has the better forecasts as measured by the MSE statistic. Visual comparison of the results presented in rows 4 and 5 of table (5-1) shows that the ANN approach provides, on average, a smaller MAD than BJ for all models. The MPE statistic is shown in row 6 of table (5-1). The overall performance of the NN forecasting approach in comparison with the BJF approach is favorable, in the sense that the NN forecasts are closer to the true values more often than the Box-Jenkins forecasts, with an overall ratio of 118.8%.

TABLE(5-1)

The simulation results for the MSE , MAD and

MPE functions of NNF and BJF for different

ARMA(p,q) models

| Method \ (p,q) | 1,0 | 2,0 | 0,1 | 0,2 | 1,1 | 1,2 | 2,1 | 2,2 | Main average |
|---|---|---|---|---|---|---|---|---|---|
| NNF MSE | 3.770 | 3.102 | 2.930 | 3.176 | 1.886 | 2.788 | 4.604 | 3.829 | 3.261 |
| BJ MSE | 5.000 | 3.676 | 2.972 | 3.234 | 2.254 | 3.153 | 5.234 | 4.299 | 3.7280 |
| NNF MAD | 2.103 | 1.957 | 1.909 | 1.987 | 1.523 | 1.844 | 2.305 | 2.143 | 1.971 |
| BJ MAD | 2.363 | 2.134 | 1.937 | 2.013 | 1.682 | 1.967 | 2.465 | 2.273 | 2.104 |
| MPE ratio | 119.3 | 124.0 | 109.8 | 107.9 | 130.8 | 118.5 | 121.4 | 118.5 | 118.8 |

Table (5-2) shows the MPE for the one-, two- and three-step-ahead forecasts; in each case the ratio exceeds 100, so the NN forecasts are better than the corresponding BJ forecasts.

TABLE(5-2) The simulation results

The moderate behavior of the ratio between the NNF and BJF for 3

step ahead forecasts

| Observation | Zn+1 | Zn+2 | Zn+3 | Average |
|---|---|---|---|---|
| MPE | 124.717 | 107.786 | 123.839 | 118.781 |

Figure (5-1) plots the MSE values from rows 2 and 3 of table (5-1). In figure (5-1), the BJF tends to have the largest deviations from the actual data, while the NNF shows smaller errors than the BJF for all models. As suggested before, this may indicate that the ANN approach is implicitly doing a better forecasting job than the BJF. However, the two approaches remain clearly similar in the case of ARMA(2,1).

FIGURE(5-1)

The simulation results for the MSE function of NN

and BJ forecasts for different ARMA(p,q) models

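[Figure (5-1): chart of MSE (vertical axis from 0 to 6) for NNF and BJ by ARMA(p,q) model; horizontal axis labels as extracted: 1,0; 2,0; 2,1; 2,2; 1,1; 1,2; 0,1; 0,2; Main average.]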

[5-2] Application to real data

Both NNF and BJF are used to forecast five real data series found in Pankratz (1983). The five cases represent different economic and business data. Series I represents the personal saving rate as a percent of disposable personal income, series II represents coal production and series III represents the index of new private housing units authorized by local building permits. Series IV is the freight volume carried by class I railroads, measured in billions of ton-miles. Series V represents the closing price of American Telephone and Telegraph (AT&T) common shares. The proposed Box-Jenkins models for these data are, respectively, ARMA(1,0), ARMA(2,0), ARMA(1,1), ARMA(2,1) and ARMA(2,2). For every series we use the first n-3 observations to predict the last three observations and hence calculate the MSE and MAD measures.

The results are summarized in table (5-3). It is noted from this table that, for all models, the MSE and MAD for NNF are smaller than the corresponding BJF values except for the ARMA(1,0) model.

Table (5-3)

MSE and MAD for both NNF and BJF for 5 real time series

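| Series | Model | Method | MSE | MAD |
|---|---|---|---|---|
| I - Saving rate | ARMA(1,0) | NNF | 1.2724 | 1.9647 |
| I - Saving rate | ARMA(1,0) | BJF | 1.2643 | 1.1963 |
| II - Coal production | ARMA(2,0) | NNF | 0.4292 | 2.0342 |
| II - Coal production | ARMA(2,0) | BJF | 0.7607 | 3.2422 |
| III - Housing permits | ARMA(1,1) | NNF | 8.5052 | 1.0126 |
| III - Housing permits | ARMA(1,1) | BJF | 26.2699 | 0.2580 |
| IV - Rail freight | ARMA(2,1) | NNF | 1.5729 | 1.3337 |
| IV - Rail freight | ARMA(2,1) | BJF | 0.5725 | 2.6181 |
| V - AT&T stock price | ARMA(2,2) | NNF | 8.9305 | 1.1961 |
| V - AT&T stock price | ARMA(2,2) | BJF | 51.4446 | 2.9579 |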

[6] SUMMARY AND CONCLUSIONS

The results presented in this paper are based on the use of an effective and efficient network training algorithm. The purposes of this research were, first, to design a neural network forecasting approach and, second, to compare the accuracy of the proposed approach against other classical approaches. The results of implementing the time series forecasting approach were compared with the results obtained using the Box-Jenkins forecasting method. Table [5-1] shows the forecasting results for different time series generated from different ARMA(p,q) models.

The potential of Artificial Neural Network models for forecasting time series, linear or nonlinear, including ARMA models, has been presented in this paper. A new procedure for forecasting time series was described and compared with the classical Box-Jenkins forecasting method. The forecasts of the proposed NN approach, as shown by three measures, seem to provide better results than the classical Box-Jenkins forecasting approach. In particular, the results suggest that the ANN approach may provide a superior alternative to the Box-Jenkins forecasting approach for developing forecasting models in situations that do not require modeling of the internal structure of the series.

Numerical results show that the proposed approach performs well in forecasting ARMA(p,q) models. The performance of the proposed NN forecasting approach is summarized and discussed below:

1. The mean square error (MSE) statistic measures the residual variance; the smaller, the better. The proposed ANN forecasting approach tends to have a smaller MSE than the Box-Jenkins forecasts; see rows 2 and 3 of table (5-1). On average, the MSE for the ANN forecasts is 3.26106, which is less than the MSE for BJF (3.72809), and thus the NN approach performs better than the Box-Jenkins approach as measured by this statistic. Figure (5-1) presents the MSE statistic for each model. Notice that, for all eight models, the MSE performance of the NNF is better than that of the BJF. This may suggest that the NN approach is able to forecast the future behavior of the series as well as or better than the BJ method.

2. The MAD statistic measures the mean absolute deviation and is smaller for the NN forecasts of the different ARMA(p,q) models than for the corresponding Box-Jenkins forecasts; see rows 4 and 5 of Table (5-1). On average, the ANN performance (1.97185) is better than the Box-Jenkins performance (2.1048).

3. The MPE statistic measures the percentage of NN forecasts that are nearer to the true value than the corresponding Box-Jenkins forecasts; see row 6 of Table (5-1). Both methods perform well, but the ANN performance stays consistently better (118.8%) than that of Box-Jenkins as measured by this statistic.

4. Unlike conventional techniques for time series analysis, an artificial neural network needs little information about the time series data and can be applied to linear and nonlinear time series models. However, the problem of network "tuning" remains: the parameters of the back-propagation algorithm, as well as the network topology, need to be adjusted for optimal performance. For our application, we conducted experiments to find appropriate parameters for the forecasting network. The artificial neural networks that were found delivered better forecasting performance than the results obtained by the well-known Box-Jenkins technique.

5. When arriving at an ARMA forecasting model in the Box-Jenkins method, the analyst makes decisions based on autocorrelation and partial autocorrelation plots. Because this process relies on human judgment to interpret the data, it can be slow and sometimes inaccurate. Analysts may turn to neural network technology as a quicker, automatic and more accurate alternative. Neural networks are well suited to finding patterns in data, and here a neural network was used to find the patterns leading to the ARMA model forecasts.

6. The NN and BJ methods are used for short- and intermediate-term forecasting, and the forecasts are updated as new data become available in order to minimize the number of periods ahead required for the forecast. The ANN forecasting approach matches or outperforms the Box-Jenkins approach.
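The three comparison measures discussed in items 1 to 3 can be sketched as follows. This is a minimal illustration in Python (not the MATLAB of the appendices), and the function name `forecast_accuracy` and the sample numbers are hypothetical. Ties are counted for Box-Jenkins, mirroring the `if s01 < b01` test in Appendix [A]. How the closeness counts are turned into the reported 118.8% is not restated in this section, so the sketch returns the raw counts only.

```python
def forecast_accuracy(actual, nn_forecast, bj_forecast):
    """Return MSE, MAD and closeness counts for two sets of forecasts."""
    n = len(actual)
    # Absolute forecast errors of each method at every horizon
    nn_err = [abs(a - f) for a, f in zip(actual, nn_forecast)]
    bj_err = [abs(a - f) for a, f in zip(actual, bj_forecast)]
    # Number of times the NN forecast is strictly nearer to the true value
    nn_closer = sum(1 for e_nn, e_bj in zip(nn_err, bj_err) if e_nn < e_bj)
    return {
        "mse_nn": sum(e * e for e in nn_err) / n,
        "mse_bj": sum(e * e for e in bj_err) / n,
        "mad_nn": sum(nn_err) / n,
        "mad_bj": sum(bj_err) / n,
        "nn_closer": nn_closer,
        "bj_closer": n - nn_closer,  # ties are credited to Box-Jenkins
    }


# Hypothetical three-step-ahead example (h = 3)
result = forecast_accuracy(
    actual=[1.0, 2.0, 3.0],
    nn_forecast=[1.5, 2.0, 2.0],
    bj_forecast=[2.0, 2.5, 3.5],
)
print(result)
```

In the simulation study these quantities are accumulated over all replications and models before being averaged; the sketch covers a single series only.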


APPENDIX [A]

MATLAB MACRO COMPUTER PROGRAM FOR TIME SERIES FORECASTING TRAINING & TESTING USING NEURAL NETWORK TECHNIQUE

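% ... Matlab Program for comparison between NNF and BJF ...
% ... file name : train135 ... output file out135.mat, outf.mat ...
diary('outf135')
clc;
clear all;
tic
load 'train131'          % expected to supply the trained weights w1, b1, w2, b2
mu = 0; sigma = 1;       % mean and standard deviation of the noise
mm = 60; m = mm - 10;    % generated length; length kept after dropping the first 10 values
n = 1; m0 = 500;         % m0 = number of replications
n1 = 32; n2 = 8; n3 = 4; % n1 models = n2 ARMA orders x n3 parameter sets
h = 3;                   % forecast horizon
% Accumulators for the error sums and closeness counts
ss01(2*n2,h) = 0; ss02(2*n2,h) = 0; ss(n2,h) = 0; sb(n2,h) = 0;
ss03(1,h) = 0; ss04(2*n2,1) = 0; ss05(1,h) = 0; ss06(2*n2,1) = 0;
ss3(1,h) = 0; ss4(n2,1) = 0; sb3(1,h) = 0; sb4(n2,1) = 0;
s1 = 0; s2 = 0; s3 = 0; s4 = 0;
% ARMA orders [p q], one row per model
p = [1 0;1 0;1 0;1 0; 2 0;2 0;2 0;2 0; 0 1;0 1;0 1;0 1; 0 2;0 2;0 2;0 2; ...
     1 1;1 1;1 1;1 1; 1 2;1 2;1 2;1 2; 2 1;2 1;2 1;2 1; 2 2;2 2;2 2;2 2];
% Model coefficients, one column per model; rows multiply z(t-1), z(t-2), E(t-1), E(t-2).
% NOTE: the last entries of rows 2 and 4 are printed as -5 and 5 in the source;
% -.5 and .5 may have been intended. They are kept here as printed.
a = [.3 .5 .7 .9  .3 .3 .5 .7  0 0 0 0  0 0 0 0  .3 .5 .7 .9  .3 .5 .7 .9  .3 .3 .5 .7  .3 .5 .5 .7; ...
     0 0 0 0  -.5 .5 -.7 -.5  0 0 0 0  0 0 0 0  0 0 0 0  0 0 0 0  -.5 .5 -.7 -.5  -.5 -.7 .3 -5; ...
     0 0 0 0  0 0 0 0  .3 .5 .7 .9  .3 .3 .5 .7  .5 .3 .5 .7  .3 .3 .5 .5  0 0 0 0  .3 .3 .5 .5; ...
     0 0 0 0  0 0 0 0  0 0 0 0  -.5 .5 -.7 -.5  0 0 0 0  -.5 .5 -.7 .3  .3 .5 .7 .5  .3 .3 .5 5];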

%1... ... ... ... generating DATA ... ... ... ...
for i = 1:m0
    z(mm,n1) = 0;
    E(mm,1) = 0.0;
    E0(m,1) = 0.0;
    E = normrnd(mu,sigma,mm,n);        % one noise series shared by all n1 models
    z(1,:) = E(1)*ones(1,n1);
    z(2,:) = E(2)*ones(1,n1) + a(1,:).*z(1,:) - a(3,:)*E(1);
    for i1 = 3:mm
        z(i1,:) = E(i1)*ones(1,n1) + a(1,:).*z(i1-1,:) + a(2,:).*z(i1-2,:) ...
                  - a(3,:)*E(i1-1) - a(4,:)*E(i1-2);
    end
    z00 = z(11:mm,:);                  % drop the first 10 values
    y0 = z00(1:(m-h),:); y1 = z00((m-h+1):m,:);
    % scale every series to the range [0.1, 0.9]
    z001 = 0.8*(z00 - ones(m,1)*min(z00))./(ones(m,1)*(max(z00) - min(z00))) + 0.1;
    z0 = z001(1:(m-h),:);
    z1 = z001((m-h+1):m,:);
    if i == 1
        z01 = z0; z02 = z1;
        z2 = z00;
        y01 = y0; y02 = y1;
    else
        z01 = [z01 z0];
        z02 = [z02 z1];
        z2 = [z2 z00];
        y01 = [y01 y0];
        y02 = [y02 y1];
    end
end

%2... ... ... ...comparison and testing phase ... ... ... ... ... ...
% simuff is a legacy Neural Network Toolbox function; armax and predict
% come from the System Identification Toolbox.
j00 = 0;
for j = 1:m0
    for j0 = 1:n1
        j00 = j00 + 1;
        j1 = fix((j0-1)/n3) + 1;       % index of the ARMA order group (1..n2)
        s = simuff(z01(:,j00),w1,b1,'logsig',w2,b2,'logsig');
        TH = armax(y01(:,j00),[p(j0,:)]);
        YP = predict([y01(:,j00);y02(:,j00)],TH,m-h);
        % map the network output back to the original scale
        y = (s - 0.1)*((max(z2(:,j00)) - min(z2(:,j00)))/0.8) + min(z2(:,j00));
        for j3 = 1:h
            s01 = abs(y02(j3,j00) - y(j3));        % NN absolute error
            s02 = (s01)^2;
            b01 = abs(y02(j3,j00) - YP(m-h+j3));   % Box-Jenkins absolute error
            b02 = (b01)^2;
            ss01((2*j1-1),j3) = ss01((2*j1-1),j3) + s01;
            ss01((2*j1),j3) = ss01((2*j1),j3) + b01;
            ss02((2*j1-1),j3) = ss02((2*j1-1),j3) + s02;
            ss02((2*j1),j3) = ss02((2*j1),j3) + b02;
            if s01 < b01
                ss(j1,j3) = ss(j1,j3) + 1;
            else
                sb(j1,j3) = sb(j1,j3) + 1;
            end
        end
    end
end
ss03 = (ones(1,2*n2)*ss01)/(n2*n3*m0);
ss04 = (ss01*ones(h,1))/(h*n3*m0);
ss05 = (ones(1,2*n2)*ss02)/(n2*n3*m0);
ss06 = (ss02*ones(h,1))/(h*n3*m0);
ss3 = (ones(1,n2)*ss)/(n2*n3*m0);
ss4 = (ss*ones(h,1))/(h*n3*m0);
sb3 = (ones(1,n2)*sb)/(n2*n3*m0);
sb4 = (sb*ones(h,1))/(h*n3*m0);
s1 = sum(ss03)/h;
s2 = sum(ss05)/h;
s3 = sum(ss3)/h;
s4 = sum(sb3)/h;

%3...Results ... ...
disp ' MAE RESULTS'
Mabs = [ss01,ss04;[ss03,s1]]
disp ' MSE RESULTS'
Mmse = [ss02,ss06;[ss05,s2]]
disp ' NNF RATIOS RESULTS'
Mnnf = [ss/(n3*m0),ss4;[ss3,s3]]
disp ' BOX-JENKINS RATIOS RESULTS '
Mbox = [sb/(n3*m0),sb4;[sb3,s4]]
save out135
diary off;
toc
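The data-generation and scaling steps of the program above can be illustrated for a single series. The following is a sketch in Python using only the standard library, not a translation of the full MATLAB program. The function names (`simulate_arma22`, `scale_to_band`, `unscale_from_band`) and the coefficient values in the usage lines are hypothetical. The sign convention follows the recursion in the program, and the burn-in of 10 values matches `z(11:mm,:)`.

```python
import random


def simulate_arma22(phi1, phi2, theta1, theta2, n, burn_in=10, seed=None):
    """Simulate z_t = e_t + phi1*z_{t-1} + phi2*z_{t-2}
                      - theta1*e_{t-1} - theta2*e_{t-2} with N(0, 1) noise."""
    rng = random.Random(seed)
    total = n + burn_in
    e = [rng.gauss(0.0, 1.0) for _ in range(total)]
    z = [0.0] * total
    for t in range(total):
        z[t] = e[t]
        if t >= 1:
            z[t] += phi1 * z[t - 1] - theta1 * e[t - 1]
        if t >= 2:
            z[t] += phi2 * z[t - 2] - theta2 * e[t - 2]
    return z[burn_in:]  # discard the start-up values


def scale_to_band(series, low=0.1, width=0.8):
    """Min-max scale a non-constant series to [low, low + width]."""
    lo, hi = min(series), max(series)
    scaled = [width * (x - lo) / (hi - lo) + low for x in series]
    return scaled, lo, hi


def unscale_from_band(scaled, lo, hi, low=0.1, width=0.8):
    """Invert scale_to_band, mapping values back to the original units."""
    return [(s - low) * (hi - lo) / width + lo for s in scaled]


# Hypothetical coefficients for one ARMA(2,2) series of length 50
series = simulate_arma22(0.5, -0.3, 0.3, 0.2, n=50, seed=1)
scaled, lo, hi = scale_to_band(series)
restored = unscale_from_band(scaled, lo, hi)
print(min(scaled), max(scaled))
```

Scaling to [0.1, 0.9] keeps the targets inside the open output range of a log-sigmoid unit, and the inverse map is what returns the network output to the units of the series before the errors are computed.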

APPENDIX [B]

MATLAB MACRO COMPUTER PROGRAM FOR TIME SERIES FORECASTING USING NEURAL NETWORK TECHNIQUE

%... ...file name : train131 ... output file out13.mat...
clc
clear all
load 'train131'          % expected to supply w1, b1, w2, b2 and data1 ... data5
diary('outf13')
tic
m0 = 1; n1 = 1; n2 = 1; n3 = 1; h = 3; j00 = 1; j1 = 1;
j0 = 5;                  % row of p used for the Box-Jenkins fit: p(5,:) = [1 1]
% Accumulators for the error sums and closeness counts
ss01(2*n2,h) = 0; ss02(2*n2,h) = 0; ss(n2,h) = 0; sb(n2,h) = 0;
ss03(1,h) = 0; ss04(2*n2,1) = 0; ss05(1,h) = 0; ss06(2*n2,1) = 0;
ss3(1,h) = 0; ss4(n2,1) = 0; sb3(1,h) = 0; sb4(n2,1) = 0;
s1 = 0; s2 = 0; s3 = 0; s4 = 0;
p = [1 0;2 0;0 1;0 2;1 1;1 2;2 1;2 2];
%1... ... ... ... PREPARING THE DATA ... ... ... ...
% DATA1 SAVING RATE
% DATA2 COAL PRODUCTION
% DATA3 HOUSING PERMITS
% DATA4 RAIL FREIGHT
% DATA5 AT & T STOCK PRICE
z00 = data1;
m1 = length(z00)
if m1 > 50
    % use only the last 50 observations; the final h are held out
    m = 50; y0 = z00(m1-m+1:(m1-h),:); y1 = z00((m1-h+1):m1,:);
else
    m = m1;
    y0 = z00(1:(m-h),:); y1 = z00((m-h+1):m,:);
end
z0 = 0.8*(y0 - min(y0))/(max(y0) - min(y0)) + 0.1;   % scale to [0.1, 0.9]

%2... FORECASTING WITH NEURAL & BJ METHODS ...
s = simuff(z0,w1,b1,'logsig',w2,b2,'logsig');
TH = armax(y0,[p(j0,:)]);
YP = predict([y0;y1],TH,m-h)
y = (s - 0.1)*((max(y0) - min(y0))/0.8) + min(y0);   % back to the original scale
plot(1:m1,z00)
% xcorr returns lags -(N-1)..(N-1), so lag 0 sits at index N
acf = xcorr(z00,'coeff')
acf1 = acf(m1+1:m1+25)             % lags 1..25 of the full series
figure                             % new window so the series plot is kept
plot(1:25,acf1)
ac = xcorr(y0,'coeff')
ac1 = ac((m-h)+1:(m-h)+25)         % lags 1..25 of the fitting sample
hold on
plot(1:25,ac1)
for j3 = 1:h
    s01 = abs(y1(j3) - y(j3));         % NN absolute error
    s02 = (s01)^2;
    b01 = abs(y1(j3) - YP(m-h+j3));    % Box-Jenkins absolute error
    b02 = (b01)^2;
    ss01(1,j3) = ss01(1,j3) + s01;
    ss01(2,j3) = ss01(2,j3) + b01;
    ss02(1,j3) = ss02(1,j3) + s02;
    ss02(2,j3) = ss02(2,j3) + b02;
    if s01 < b01
        ss(1,j3) = ss(1,j3) + 1;
    else
        sb(1,j3) = sb(1,j3) + 1;
    end
end
ss04 = (ss01*ones(h,1))/(h*n3*m0);
ss06 = (ss02*ones(h,1))/(h*n3*m0);
ss4 = (ss*ones(h,1))/(h*n3*m0);
sb4 = (sb*ones(h,1))/(h*n3*m0);

%3...Results ... ...
disp ' MAE RESULTS'
Mabs = [ss01,ss04]
disp ' MSE RESULTS'
Mmse = [ss02,ss06]
disp ' NNF RATIOS RESULTS'
Mnnf = [ss/(n3*m0),ss4]
disp ' BOX-JENKINS RATIOS RESULTS '
Mbox = [sb/(n3*m0),sb4]
save out136
toc;
diary off
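Both appendix programs obtain the network forecast from a single call to `simuff` with two `'logsig'` layers. Assuming that this call evaluates an ordinary two-layer feed-forward network, the computation can be sketched in Python as below. The function names and the tiny weight values are hypothetical, and the trained weights `w1`, `b1`, `w2`, `b2` of the paper are not reproduced here.

```python
import math


def logsig(x):
    """Log-sigmoid 1 / (1 + exp(-x)), written to avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    ex = math.exp(x)
    return ex / (1.0 + ex)


def two_layer_forward(x, w1, b1, w2, b2):
    """Evaluate logsig(w2 * logsig(w1 * x + b1) + b2) for one input vector.

    w1 and w2 are lists of rows (one row per unit); b1 and b2 are bias lists.
    """
    hidden = [logsig(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w1, b1)]
    return [logsig(sum(w * hj for w, hj in zip(row, hidden)) + b)
            for row, b in zip(w2, b2)]


# Hypothetical network: 2 inputs, 1 hidden unit, 1 output, all weights zero
out = two_layer_forward([1.0, 2.0], [[0.0, 0.0]], [0.0], [[0.0]], [0.0])
print(out)
```

Because every output lies strictly between 0 and 1, the scaled targets in [0.1, 0.9] are reachable by the output layer, and the result has to be mapped back to the original units before it is compared with the held-out observations.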
