Wireless Gesture Driven Robotic Arm

Uploaded by yrikki

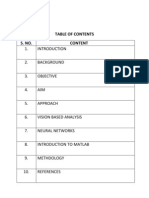

WIRELESS GESTURE DRIVEN ROBOTIC ARM

(WGDRA)

INTRODUCTION

Human gestures have long been an important means of communication, adding emphasis to spoken messages or even forming a complete message by themselves. Such gestures could be used to improve the human-machine interface and to control a wide variety of devices remotely. A vision-based framework can be developed to allow users to interact with computers through gestures. This study focuses on understanding such gesture recognition, typically of hand gestures.

Gesture recognition is an important area for novel human-computer interaction (HCI) systems, and a lot of research has focused on it. These systems differ in their basic approach depending on the area in which they are used. Broadly, gestures can be separated into dynamic gestures (e.g. writing letters or numbers) and static postures (e.g. sign language). The goal of gesture analysis and interpretation is to advance human-machine communication so that the performance of human-machine interaction comes closer to that of human-human interaction.

Gesture recognition is one approach in this direction. It is the process by which the gestures made by the user are recognized by the receiver. Gestures are expressive, meaningful body motions involving physical movements of the fingers, hands, arms, head, face, or body with the intent of:

conveying meaningful information, or

interacting with the environment.

Gestures constitute a small but interesting subspace of possible human motion. A gesture may also be viewed as a compression technique: the information is encoded in the gesture, transmitted elsewhere, and subsequently reconstructed by the receiver.

Classification:-

A) Hand and arm gestures: recognition of hand poses, sign languages, and entertainment applications.

B) Head and face gestures: nodding or shaking of the head, direction of eye gaze, etc.

C) Body gestures: involvement of full-body motion, as in tracking the movements of two people interacting outdoors, or analyzing the movements of a dancer to generate matching music and graphics.

Benefits:

A human computer interface can be provided using gestures:

Pointing gestures

Interact with the 3D world

Replace mouse and keyboard

Pick up and manipulate virtual objects

Navigate in a virtual environment

DESCRIPTION :-

A. Architecture of recognition system

A basic gesture input device is the word processing tablet. In this system, two-dimensional hand gestures are sent via an input device to the computer's memory and appear on the computer monitor. These symbolic gestures are identified as editing commands through geometric modelling techniques. The commands are then executed, modifying the document stored in computer memory. Gestures are represented by a view-based approach, and stored patterns are matched to perceived gestures using dynamic time warping. View-based vision approaches also permit a wider range of gestural inputs than mouse and stylus input devices.
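The matching step mentioned above can be illustrated with a minimal dynamic time warping sketch. This is not the system's actual recognizer: the function names `dtw_distance` and `classify`, the use of 2-D point sequences, and the Euclidean point cost are all assumptions made for illustration.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance between two sequences of (x, y) points.

    Illustrative only: the point format and Euclidean cost are assumptions.
    """
    inf = float("inf")
    n, m = len(a), len(b)
    # cost[i][j] = cheapest cumulative cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            dx = a[i - 1][0] - b[j - 1][0]
            dy = a[i - 1][1] - b[j - 1][1]
            d = (dx * dx + dy * dy) ** 0.5
            # Extend the cheapest of: insertion, deletion, or match
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def classify(gesture, templates):
    """Return the name of the stored template closest to the perceived gesture."""
    return min(templates, key=lambda name: dtw_distance(gesture, templates[name]))
```

Because the warping path may stretch or compress either sequence, a gesture drawn slowly (more samples) can still match a template recorded quickly (fewer samples).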

Hand and arm gesture

Hand gestures are the most expressive and the most frequently used gestures. They involve:

1) A posture: static finger configuration without hand movement, and

2) A gesture: dynamic hand movement with or without finger motion.

Gestures may be categorized as:

Gesticulation: spontaneous movement of the hands and arms accompanying speech. These spontaneous movements constitute around 90% of human gestures. People gesticulate when they are on the telephone, and even blind people regularly gesture when speaking to one another.

Language-like gestures: gesticulation integrated into a spoken utterance, replacing a particular spoken word or phrase.

Pantomimes: gestures depicting objects or actions, with or without accompanying speech.

Emblems: familiar signs such as "V for victory", or culture-specific rude gestures.

Sign languages: well-defined linguistic systems. These carry the most semantic meaning and are more systematic, and are therefore easier to model in a virtual environment.

Representation of hand gesture

Representation of hand motion includes:

Global configuration: the six degrees of freedom (DOF) of a frame attached to the wrist, representing the pose of the hand.

Local configuration: the angular DOF of the fingers.
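As a rough sketch, the two parts of this representation could be held in a single record. The class name `HandConfiguration`, the field names, and the choice of four joint angles for each of five fingers are assumptions for illustration; the source only states six global DOF plus the angular DOF of the fingers.

```python
from dataclasses import dataclass, field
from typing import List


def _default_fingers() -> List[List[float]]:
    # Assumption: 5 fingers with 4 joint angles each (radians).
    return [[0.0] * 4 for _ in range(5)]


@dataclass
class HandConfiguration:
    # Global configuration: position and orientation of the wrist frame (6 DOF)
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    roll: float = 0.0
    pitch: float = 0.0
    yaw: float = 0.0
    # Local configuration: joint angles of each finger
    finger_angles: List[List[float]] = field(default_factory=_default_fingers)

    def global_dof(self) -> int:
        return 6

    def local_dof(self) -> int:
        return sum(len(joints) for joints in self.finger_angles)
```

Under these assumptions a hand pose has 6 global and 20 local degrees of freedom; a different finger model would change only the local count.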

Block Diagram:-

Transmitter Section-

Receiver Section:-

Design Methodology:-

New intelligent algorithms could help robots to quickly recognize and respond to human gestures. Researchers have previously created a computer program that recognises human gestures quickly and accurately and requires very little training.

Interface improvements, more than anything else, have triggered explosive growth in robotics.

It was quite common in light-pen based systems to include some gesture recognition

SOFTWARE DESIGN -

1) CAD Design.

2) Simulation on SIMULINK.

HARDWARE-

COMPONENT/ MODULES USED-

1.) Accelerometer:- An accelerometer is a device that measures proper acceleration, that is, acceleration relative to free fall. For example, an accelerometer on a rocket accelerating through space will measure the rate of change of the velocity of the rocket relative to any inertial frame of reference. However, the proper acceleration measured by an accelerometer is not necessarily the coordinate acceleration (rate of change of velocity): an accelerometer at rest on the Earth's surface reads about 1 g upward, while one in free fall reads zero.

An accelerometer is an electromechanical device that measures acceleration forces. These forces may be static, like the constant force of gravity pulling at your feet, or dynamic, caused by moving or vibrating the accelerometer.
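The static gravity reading is what makes tilt-based gesture sensing possible: when the hand is held still, the direction of the 1 g vector gives the tilt of the sensor. The sketch below shows one common way to turn a three-axis reading (in units of g) into pitch and roll and then into a command. The function names, the axis and sign conventions, the 30 degree threshold, and the command labels are all assumptions, not details taken from this project.

```python
import math


def tilt_angles(ax, ay, az):
    """Return (pitch, roll) in degrees from a static reading in g.

    Assumed convention: z points up when the hand is flat.
    """
    pitch = math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))
    roll = math.degrees(math.atan2(ay, az))
    return pitch, roll


def gesture_command(ax, ay, az, threshold_deg=30.0):
    """Map a tilt to a hypothetical command label; pitch is checked first."""
    pitch, roll = tilt_angles(ax, ay, az)
    if pitch > threshold_deg:
        return "FORWARD"
    if pitch < -threshold_deg:
        return "BACKWARD"
    if roll > threshold_deg:
        return "RIGHT"
    if roll < -threshold_deg:
        return "LEFT"
    return "STOP"
```

A flat hand, `gesture_command(0.0, 0.0, 1.0)`, stays below the threshold on both axes and maps to `"STOP"`. The threshold acts as a dead band so that small hand tremors do not move the arm.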

2.) Microcontroller-

3.) HT12D Decoder

18 PIN DIP, Operating Voltage : 2.4V ~ 12.0V

Low Power and High Noise Immunity, CMOS Technology

Low Standby Current, Binary address setting

Capable of Decoding 12 bits of Information

8 ~ 12 Address Pins and 0 ~ 4 Data Pins

Received Data are checked 3 times, Built-in Oscillator needs only 5% resistor

VT goes high during a valid transmission

Easy Interface with an RF or IR transmission medium

Minimal External Components

Transmitter:

To control the robot we use an RF transmitting remote. For RF transmission we use the HT12E encoder IC. The 2^12 encoders are a series of CMOS LSIs for remote control system applications. They are capable of encoding information consisting of N address bits and 12 - N data bits. Each address/data input can be set to one of two logic states. The programmed addresses/data are transmitted together with the header bits via an RF or an infrared transmission medium upon receipt of a trigger signal. The capability to select a TE trigger on the HT12E or a DATA trigger on the HT12A further enhances the application flexibility of the 2^12 series of encoders. The HT12A additionally provides a 38 kHz carrier for infrared systems.
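The split into N address bits and 12 - N data bits can be made concrete with a small software model. This only illustrates the word layout and the address match performed on the receiving side; it does not model the header bits, timing, or serial bit order of the real chips. The function names and the choice to place the address in the upper bits are assumptions.

```python
def encode_word(address, data, n_address_bits=8):
    """Pack N address bits and 12 - N data bits into one 12-bit word."""
    n_data_bits = 12 - n_address_bits
    if not 0 <= address < (1 << n_address_bits):
        raise ValueError("address does not fit in the address bits")
    if not 0 <= data < (1 << n_data_bits):
        raise ValueError("data does not fit in the data bits")
    # Assumed layout: address in the upper bits, data in the lower bits
    return (address << n_data_bits) | data


def decode_word(word, local_address, n_address_bits=8):
    """Return the data bits if the address matches, otherwise None."""
    n_data_bits = 12 - n_address_bits
    address = word >> n_data_bits
    data = word & ((1 << n_data_bits) - 1)
    return data if address == local_address else None
```

With 8 address bits and 4 data bits, `encode_word(0xA5, 0b0110)` gives `0xA56`, and a receiver set to address `0xA5` recovers the four data bits, while a receiver with any other address ignores the word. The four data bits are enough to carry a handful of gesture commands.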

For this local control section to work properly, a continuous 5 V supply is needed. This is provided by a 230 V to 12 V transformer, a bridge rectifier, a capacitor filter and a 7805 voltage regulator IC, which gives a regulated 5 V output. This 5 V source is connected to all ICs and relays.
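A quick back-of-the-envelope check of this supply chain is sketched below. It assumes the 12 V transformer rating is an RMS value, roughly 0.7 V dropped across each of the two conducting bridge diodes, and negligible ripple; the load current passed in is a made-up figure, since the source does not state one. The function names are hypothetical.

```python
import math


def rectified_peak(v_rms, diode_drop=0.7):
    """Approximate peak DC voltage after a bridge rectifier and filter capacitor.

    Two diodes conduct at a time in a bridge, hence two drops (assumed 0.7 V each).
    """
    return v_rms * math.sqrt(2) - 2 * diode_drop


def regulator_dissipation(v_in, load_current, v_out=5.0):
    """Upper estimate of heat dissipated in a linear regulator, in watts."""
    return (v_in - v_out) * load_current
```

Under these assumptions `rectified_peak(12.0)` is about 15.6 V, so a linear regulator dropping this to 5 V turns roughly two thirds of the input power into heat. At an assumed 0.5 A load that is over 5 W, which suggests a heat sink would be needed at such currents.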

RF Receiver: For RF transmission, the signal generated at the computer's parallel port with the help of Visual Basic code needs to be encoded. For signal encoding we use the HT12E encoder. The HT12E belongs to the 2^12 encoders, a series of CMOS LSIs for remote control system applications. They are capable of encoding information consisting of N address bits and 12 - N data bits. Each address/data input can be set to one of two logic states. The programmed addresses/data are transmitted together with the header bits via an RF transmission medium upon receipt of a trigger signal. The capability to select a TE trigger on the HT12E enhances the application flexibility of the 2^12 series of encoders.

Encoder

18 PIN DIP

Operating Voltage : 2.4V ~ 12V

Low Power and High Noise Immunity CMOS Technology

Low Standby Current and Minimum Transmission Word

Built-in Oscillator needs only 5% Resistor

Easy Interface with an RF or an Infrared transmission medium

Minimal External Components

DC motor driver:

An H-bridge is used as the motor driver. The H-bridge is widely used in robotics for driving a DC motor in both the clockwise and anticlockwise directions.

Fig:- L293D Driver

Motor Driver Mechanism:-

It is an electronic circuit which enables a voltage to be applied across a load in either direction.

It allows a circuit full control over a standard electric DC motor. That is, with an H-bridge, a microcontroller, logic chip, or remote control can electronically command the motor to go forward, reverse, brake, and coast.

H-bridges are available as integrated circuits, or can be built from discrete components.

A double pole double throw relay can generally achieve the same electrical functionality as an H-bridge, but an H-bridge is preferable where a smaller physical size, high-speed switching or a low driving voltage is needed, or where the wearing out of mechanical parts is undesirable.

The term H-bridge is derived from the typical graphical representation of such a circuit, which is built with four switches, either solid-state (e.g. L293/L298) or mechanical (e.g. relays). The current provided by the MCU is of the order of 5 mA, while that required by a motor is about 500 mA. Hence, the motor cannot be controlled directly by the MCU, and an interface is needed between the MCU and the motor. A motor driver does not amplify the current; it only acts as a switch.
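The four motor states named above (forward, reverse, brake, coast) follow from the logic levels on one channel of a driver such as the L293D, which has two direction inputs and an enable input per motor. The sketch below models that behaviour as a plain function; the name `h_bridge_state` is hypothetical, and which input combination counts as "forward" is an assumption that depends on how the motor is wired.

```python
def h_bridge_state(in1, in2, enable=True):
    """Motor state for one H-bridge channel given its logic inputs.

    Assumption: in1 high with in2 low is called "forward"; swapping the
    motor leads would swap forward and reverse.
    """
    if not enable:
        # Outputs disabled: motor terminals float and the motor spins down freely
        return "coast"
    if in1 and not in2:
        return "forward"
    if in2 and not in1:
        return "reverse"
    # Both outputs at the same level: the motor is shorted and stops quickly
    return "brake"
```

The microcontroller therefore only needs to drive low-current logic pins, while the bridge switches the motor current from the separate motor supply.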

RF Module :-

Application:-

1.) Vehicle Navigation

2.) Marine Navigation

3.) Base Service

4.) Auto Pilot

5.) Personal Navigation

6.) Travel Equipment

7.) Track equipment

8.) System & Mapping Application

9.) Medical Applications.

Future Scope:-

Wireless modules consume very little power and are best suited to wireless, battery-driven devices.

Advanced robotic arms that are designed like the human hand itself can easily be controlled using hand gestures only.

Proposed utility in the fields of construction, hazardous waste disposal and medical science.

A combination of head-up displays, wired gloves, haptic and tactile sensors, and omnidirectional treadmills may produce the feel of physical places in simulated environments.

VR simulation may prove to be crucial for the military, law enforcement and medical surgery.

