Vincy Joseph et al. / International Journal on Computer Science and Engineering, Vol. 1(3), 2009, 116-121

Neural Network Based 3D Surface Reconstruction

Vincy Joseph
Computer Department, Thadomal Shahani Engineering College, Bandra, Mumbai, India.
vincyej@gmail.com

Shalini Bhatia
Computer Department, Thadomal Shahani Engineering College, Bandra, Mumbai, India.
shalini.tsec@gmail.com

Abstract: This paper proposes a novel neural-network-based adaptive hybrid-reflectance three-dimensional (3-D) surface reconstruction model. The neural network combines the diffuse and specular components into a hybrid model. The proposed model considers the characteristics of each point and the variant albedo to prevent the reconstructed surface from being distorted. The neural network inputs are the pixel values of the two-dimensional images to be reconstructed. The normal vectors of the surface can then be obtained from the output of the neural network after supervised learning, where the illuminant direction does not have to be known in advance. Finally, the obtained normal vectors can be applied to an integration method when reconstructing 3-D objects. Facial images were used for training in the proposed approach.

Keywords: Lambertian model; neural network; reflectance model; shape from shading; surface normal; integration

I. INTRODUCTION

Shape recovery is a classical computer vision problem. The objective of shape recovery is to obtain a three-dimensional (3-D) scene description from one or more two-dimensional (2-D) images. Shape recovery from shading (SFS) is a computer vision approach which reconstructs the 3-D shape of an object from its shading variation in 2-D images. When a point light source illuminates an object, different parts of its surface appear with different brightness, since the normal vectors corresponding to those parts are different. The spatial variation of brightness, referred to as shading, is used to estimate the orientation of the surface and then to calculate the depth map of the object.

II. DIFFERENT APPROACHES FOR RECONSTRUCTION

A. Lambertian Model

A successful reflectance model for surface reconstruction of objects should combine both diffuse and specular components [1]. The Lambertian model describes the relationship between the surface normal and the light source direction by assuming that the surface reflection is due to diffuse reflection only. This model ignores the specular component.

B. Hybrid Reflectance Model

A novel hybrid approach generalizes the reflectance model by considering both the diffuse component and the specular component. This model does not require the viewing direction and the light source direction, and it yields better shape recovery than previous approaches.

A hybrid approach uses two self-learning neural networks to generalize the reflectance model by modeling the pure Lambertian surface and the specular component of the non-Lambertian surface, respectively. However, the hybrid approach still has two drawbacks:

1) The albedo of the surface is disregarded or regarded as constant, distorting the recovered shape.

2) The combination ratio between diffuse and specular components is regarded as constant, which is determined by trial and error.

C. Neural Network Based Hybrid Reflectance Model

This model intelligently integrates both reflection components. The pure diffuse and specular reflection components are both formed by similar feed-forward neural network structures. A supervised learning algorithm is applied to produce the normal vectors of the surface for reconstruction. After training, the proposed approach estimates the illuminant direction, viewing direction, and normal vectors of object surfaces for reconstruction. The 3-D surface can also be reconstructed using integration methods.

III. DESCRIPTION

Fig. 1 shows the schematic block diagram of the proposed adaptive hybrid-reflectance model, which consists of the diffuse and specular components. The diagram describes how the diffuse and specular components of the adaptive hybrid-reflectance model are represented by two neural networks with similar structures. The composite intensity $R_{\mathrm{hybrid}}$ is obtained by combining the diffuse intensity $R_d$ and the specular intensity $R_s$ based on the adaptive weights $\lambda_d(x,y)$ and $\lambda_s(x,y)$. The system inputs are the 2-D image intensities of each point, and the outputs are the learned reflectance map.

Fig. 2 shows the framework of the proposed symmetric neural network which simulates the diffuse reflection model. The input/output pairs of the network are arranged like a mirror about the center layer, where the number of input nodes equals the number of output nodes, making it a symmetric neural network.

116 ISSN : 0975-3397


Figure 1 Block diagram of the proposed adaptive hybrid-reflectance model

Figure 2 Framework of the proposed symmetric neural network for diffuse reflection model
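The composite intensity described for Fig. 1 can be illustrated with a short sketch. It assumes that the combination is a per-pixel weighted sum of the diffuse and specular intensities; the function name `hybrid_intensity` and the array layout (one value per pixel) are hypothetical and are used only for illustration.

```python
import numpy as np


def hybrid_intensity(r_d, r_s, lam_d, lam_s):
    """Combine diffuse and specular intensities pixel by pixel.

    Assumption: R_hybrid = lam_d * R_d + lam_s * R_s, with one pair of
    weights per pixel. All inputs are array-likes of the same shape.
    """
    r_d = np.asarray(r_d, dtype=float)
    r_s = np.asarray(r_s, dtype=float)
    lam_d = np.asarray(lam_d, dtype=float)
    lam_s = np.asarray(lam_s, dtype=float)
    return lam_d * r_d + lam_s * r_s


# Two pixels: the first is weighted equally, the second is mostly diffuse.
r_hybrid = hybrid_intensity([0.2, 0.8], [0.6, 0.4], [0.5, 0.75], [0.5, 0.25])
```

Keeping the weights as arrays rather than scalars is what lets the ratio differ from point to point on the surface.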

The light source direction and the normal vector are separated from the input 2-D images in the left side of the symmetric neural network and then combined inversely to generate the reflectance map for diffuse reflection in the right side of the network. The function of each layer is discussed in detail below.

A. Function of Layers

Layer 1: This layer normalizes the intensity values of the input images. Node $I_i$ denotes the $i$th pixel of the 2-D image and $m$ denotes the total number of pixels of the image. That is,

$$f_i = I_i, \qquad a_i^{(1)} = f_i, \qquad i = 1, \ldots, m \tag{1}$$

Layer 2: This layer adjusts the intensity of the input 2-D image with the corresponding albedo value $\alpha_i$.

$$f_i = \frac{I_i}{\alpha_i}, \qquad \hat{I}_i = a_i^{(2)} = f_i, \qquad i = 1, \ldots, m \tag{2}$$

Layer 3: The purpose of Layer 3 is to separate the light source direction from the 2-D image. The light source directions of this layer are not normalized.

$$f_j = \sum_{i=1}^{m} \hat{I}_i \, \omega_{d\,i,j}, \qquad j = 1, 2, 3 \tag{3}$$

$$s'_j = a_j^{(3)} = f_j, \qquad j = 1, 2, 3$$

Layer 4: The nodes of this layer represent the unit light source. Equation (4) is used to normalize the non-normalized light source direction obtained in Layer 3.
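As a rough illustration of the first four layers, the sketch below divides each pixel by its albedo, projects the adjusted intensities onto three nodes, and scales the result to unit length. The function name `estimate_light_direction`, the `(m, 3)` layout chosen for the Layer 2 to Layer 3 weights, and the zero-length check are assumptions made for this example, not details taken from the paper.

```python
import numpy as np


def estimate_light_direction(intensity, albedo, w_d):
    """Forward pass through Layers 1 to 4 of the diffuse subnetwork.

    intensity : (m,) pixel intensities I_i
    albedo    : (m,) per-pixel albedo values (assumed non-zero)
    w_d       : (m, 3) weights between Layers 2 and 3 (assumed layout)
    """
    i_in = np.asarray(intensity, dtype=float)          # Layer 1: pass-through
    i_hat = i_in / np.asarray(albedo, dtype=float)     # Layer 2: albedo adjustment
    s_raw = i_hat @ np.asarray(w_d, dtype=float)       # Layer 3: unnormalized s'
    norm = np.linalg.norm(s_raw)
    if norm == 0.0:
        raise ValueError("light direction has zero length")
    return s_raw / norm                                # Layer 4: unit light source


# Two pixels with albedo 2 and a hand-picked weight matrix.
s = estimate_light_direction([2.0, 4.0], [2.0, 2.0], [[1, 0, 0], [0, 1, 0]])
```

Here the adjusted intensities are `[1, 2]`, so the unnormalized direction is `(1, 2, 0)` before it is scaled to unit length.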


$$f_j = \frac{1}{\sqrt{s_1'^2 + s_2'^2 + s_3'^2}}, \qquad j = 1, 2, 3 \tag{4}$$

$$s_j = a_j^{(4)} = f_j \cdot s'_j = \frac{s'_j}{\sqrt{s_1'^2 + s_2'^2 + s_3'^2}} \tag{5}$$

Layer 5: Layer 5 combines the light source direction $s$ and the normal vectors of the surface to generate the reflectance map for diffuse reflection.

$$f_k = \sum_{j=1}^{3} s_j \, \upsilon_{d\,j,k}, \qquad \hat{R}_{dk} = a_k^{(5)} = f_k, \qquad k = 1, \ldots, m \tag{6}$$

Layer 6: This layer transfers the non-normalized reflectance map of diffuse reflection obtained in Layer 5 into the interval [0,1].

$$f_k = \hat{R}_{dk}, \qquad k = 1, \ldots, m$$

$$R_{dk} = a_k^{(6)} = \frac{255\,\bigl(f_k - \min(\hat{R}_d)\bigr)}{\max(\hat{R}_d) - \min(\hat{R}_d)} = \frac{255\,\bigl(\hat{R}_{dk} - \min(\hat{R}_d)\bigr)}{\max(\hat{R}_d) - \min(\hat{R}_d)}, \qquad k = 1, \ldots, m \tag{7}$$

where $\hat{R}_d = (\hat{R}_{d1}, \hat{R}_{d2}, \ldots, \hat{R}_{dm})^T$, and the link weights between Layers 5 and 6 are unity.

Similar to the diffuse reflection model, a symmetric neural network is used to simulate the specular component in the hybrid-reflectance model. The major differences between these two networks are the node representation in Layers 3 and 4 and the activation function of Layer 5. Through the supervised learning algorithm derived in the following section, the surface normal vectors can be obtained automatically [3]. Then, integration methods can be used to obtain, from the normal vectors, the depth information needed to reconstruct the 3-D surface of an object [4].

B. Training Algorithm

Back-propagation learning is employed for supervised training of the proposed model to minimize the error function defined as

$$E_T = \sum_{i=1}^{m} \bigl(R_{\mathrm{hybrid},i} - D_i\bigr)^2 \tag{8}$$

where $m$ denotes the total number of pixels of the 2-D image, $R_{\mathrm{hybrid},i}$ denotes the $i$th output of the neural network, and $D_i$ denotes the $i$th desired output, equal to the $i$th intensity of the original 2-D image. For each 2-D image, starting at the input nodes, a forward pass is used to calculate the activity levels of all the nodes in the network to obtain the output. Then, starting at the output nodes, a backward pass is used to calculate $\partial E_T / \partial \omega$, where $\omega$ denotes the adjustable parameters in the network. The general parameter update rule is given by

$$\omega(t+1) = \omega(t) + \Delta\omega(t) = \omega(t) + \eta \left( -\frac{\partial E_T}{\partial \omega(t)} \right) \tag{9}$$

The details of the learning rules corresponding to each adjustable parameter are given below.

C. The Output Layer

The combination ratios for each point, $\lambda_{dk}(t)$ and $\lambda_{sk}(t)$, are calculated iteratively by

$$\lambda_{dk}(t+1) = \lambda_{dk}(t) + \Delta\lambda_{dk}(t) = \lambda_{dk}(t) + 2\eta \bigl(D_k(t) - R_{\mathrm{hybrid},k}(t)\bigr) R_{dk}(t), \qquad k = 1, \ldots, m \tag{10}$$

$$\lambda_{sk}(t+1) = \lambda_{sk}(t) + \Delta\lambda_{sk}(t) = \lambda_{sk}(t) + 2\eta \bigl(D_k(t) - R_{\mathrm{hybrid},k}(t)\bigr) R_{sk}(t), \qquad k = 1, \ldots, m \tag{11}$$

where $D_k(t)$ denotes the $k$th desired output; $R_{\mathrm{hybrid},k}(t)$ denotes the $k$th system output; $R_{dk}(t)$ denotes the $k$th diffuse intensity obtained from the diffuse subnetwork; $R_{sk}(t)$ denotes the $k$th specular intensity obtained from the specular subnetwork; $m$ denotes the total number of pixels in a 2-D image; and $\eta$ denotes the learning rate of the neural network. For a gray image, the intensity value of a pixel is in the interval [0, 1]. To prevent the intensity $R_{\mathrm{hybrid},k}$ from leaving the interval [0, 1], the rule $\lambda_d + \lambda_s = 1$, where $\lambda_d > 0$ and $\lambda_s > 0$, must be enforced. Therefore, the combination ratios $\lambda_{dk}$ and $\lambda_{sk}$ are normalized by


$$\lambda_{dk}(t+1) = \frac{\lambda_{dk}(t+1)}{\lambda_{dk}(t+1) + \lambda_{sk}(t+1)}, \qquad k = 1, \ldots, m$$

$$\lambda_{sk}(t+1) = \frac{\lambda_{sk}(t+1)}{\lambda_{dk}(t+1) + \lambda_{sk}(t+1)}, \qquad k = 1, \ldots, m \tag{12}$$

D. Subnetworks

The normal vector calculated from the subnetwork corresponding to the diffuse component is denoted as $n_{dk} = (\upsilon_{d1k}, \upsilon_{d2k}, \upsilon_{d3k})$ for the $k$th point on the surface, and the normal vector calculated from the subnetwork corresponding to the specular component is denoted as $n_{sk} = (\upsilon_{s1k}, \upsilon_{s2k}, \upsilon_{s3k})$ for the $k$th point. The normal vectors $n_{dk}$ and $n_{sk}$ are updated iteratively using the gradient method as

$$\upsilon_{djk}(t+1) = \upsilon_{djk}(t) + \Delta\upsilon_{djk}(t) = \upsilon_{djk}(t) + 2\eta\, s_j(t) \bigl(D_k(t) - R_{\mathrm{hybrid},k}(t)\bigr), \qquad j = 1, 2, 3 \tag{13}$$

$$\upsilon_{sjk}(t+1) = \upsilon_{sjk}(t) + \Delta\upsilon_{sjk}(t) = \upsilon_{sjk}(t) + 2\eta\, r\, h_j(t) \bigl(D_k(t) - R_{\mathrm{hybrid},k}(t)\bigr), \qquad j = 1, 2, 3 \tag{14}$$

where $s_j(t)$ denotes the $j$th element of the illuminant direction $s$; $h_j(t)$ denotes the $j$th element of the halfway vector; and $r$ denotes the degree of the specular equation. The updated $\upsilon_{djk}$ and $\upsilon_{sjk}$ should be normalized as follows:

$$\upsilon_{djk}(t+1) = \frac{\upsilon_{djk}(t+1)}{\lVert n_{dk}(t+1) \rVert}, \qquad \upsilon_{sjk}(t+1) = \frac{\upsilon_{sjk}(t+1)}{\lVert n_{sk}(t+1) \rVert}, \qquad j = 1, 2, 3 \tag{15}$$

To obtain reasonable normal vectors of the surface from the adaptive hybrid-reflectance model, $n_{dk}$ and $n_{sk}$ are composed into the hybrid normal vector $n_k$ of the surface at the $k$th point by

$$n_k(t+1) = n_{dk}(t+1)\,\lambda_{dk}(t+1) + n_{sk}(t+1)\,\lambda_{sk}(t+1) \tag{16}$$

where $\lambda_{dk}(t+1)$ and $\lambda_{sk}(t+1)$ denote the combination ratios for the diffuse and specular components.

Since the structure of the proposed neural networks is like a mirror about the center layer, the update rule for the weights between Layers 2 and 3 of the two subnetworks, denoted as $W_d$ and $W_s$, can be calculated by the least-squares method. Hence, $W_d$ and $W_s$ at time $t+1$ can be calculated by

$$W_d(t+1) = \bigl(V_d(t+1)^T V_d(t+1)\bigr)^{-1} V_d(t+1)^T$$

$$W_s(t+1) = \bigl(V_s(t+1)^T V_s(t+1)\bigr)^{-1} V_s(t+1)^T \tag{17}$$

where $V_d(t+1)$ and $V_s(t+1)$ denote the weights between the output and central layers of the two subnetworks for the diffuse and specular components, respectively.

Additionally, for fast convergence, the learning rate $\eta$ of the neural network is adaptive in the updating process. If the current error is smaller than the errors of the previous two iterations, then the current direction of adjustment is correct. Thus, the current direction should be maintained, and the step size should be increased to speed up convergence. By contrast, if the current error is larger than the errors of the previous two iterations, then the step size must be decreased because the current adjustment is wrong. Otherwise, the learning rate does not change. Thus, the cost function $E_T$ can reach the minimum quickly and avoid oscillation around the local minimum. The adjustment rule of the learning rate is given as follows:

$$\eta(t+1) = \begin{cases} \eta(t) + \xi, & \text{if } Err(t-1) > Err(t) \text{ and } Err(t-2) > Err(t) \\ \eta(t) - \xi, & \text{if } Err(t-1) < Err(t) \text{ and } Err(t-2) < Err(t) \\ \eta(t), & \text{otherwise} \end{cases}$$

where $\xi$ is a given scalar.

IV. EXPERIMENT RESULTS AND DISCUSSION

The Yale database was used in this project, and two datasets drawn from it were considered for training [5]. In the first dataset, covering one person in a fixed pose, 5 images were selected with 5 different illuminant directions. In the second dataset, covering five people in a fixed pose, 3 images were taken with 3 different illuminant directions. The illuminant directions chosen for the two datasets are different.

A. Preprocessing

In the preprocessing stage, the images were cropped to 64X64 pixels. Each image was then reshaped into a single vector of size 4096. Therefore the first database is of size 3X4096 and the second database is of size 9X4096.
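The preprocessing step can be sketched as follows. The paper does not say which region of each image was cropped, so the centre crop used here is an assumption, and the helper names `preprocess` and `build_dataset` are hypothetical.

```python
import numpy as np


def preprocess(image, size=64):
    """Crop a grayscale image to size x size and flatten it.

    Assumption: the crop is taken from the centre of the image.
    Returns a vector of length size * size (4096 for size=64).
    """
    image = np.asarray(image, dtype=float)
    height, width = image.shape
    if height < size or width < size:
        raise ValueError("image is smaller than the crop size")
    top = (height - size) // 2
    left = (width - size) // 2
    crop = image[top:top + size, left:left + size]
    return crop.reshape(-1)


def build_dataset(images, size=64):
    """Stack the flattened images into one row per image."""
    return np.stack([preprocess(img, size) for img in images])


# Three synthetic 100 x 80 images stand in for the face images.
demo_images = [np.arange(100 * 80).reshape(100, 80) for _ in range(3)]
dataset = build_dataset(demo_images)
```

With three input images the resulting array has one 4096-element row per image, which matches the 3X4096 layout quoted for the first database.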


B. Training Results

The neural network was implemented, and training was started with the first dataset. The results of the training done so far are given below.

Training was first run with a fixed learning constant. This worked well while the error was high, but the error later converged very slowly. Training was then repeated with momentum, which reduced the error to a lower value, after which the error again decreased very slowly. Finally, the adaptive learning method was employed, which showed that convergence can happen faster. Thus the adaptive learning method is the fastest of the three methods tried with the error back-propagation algorithm.

Figure 3 Efficiency plot with constant eta

Figure 4 Efficiency plot with momentum (plot of error against number of epochs)

Figure 5 Efficiency plot with adaptive eta (plot of error against number of epochs)

Figure 6 Reconstruction with Diffuse Component (surface plot titled "Diffuse Intensity Reconstruction"; axes: x-axis, y-axis, diffuse component)

Figure 7 Reconstruction with Specular Component
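The adaptive learning-rate rule of Section III, which the training comparison relies on, can be written as a small function. This is only a sketch of the stated three-way rule; the function name `adapt_learning_rate` and the argument order are hypothetical, and the step `xi` is whatever scalar the user chooses.

```python
def adapt_learning_rate(eta, err_t, err_t1, err_t2, xi):
    """Return eta(t+1) from eta(t) and the last three errors.

    err_t  : current error Err(t)
    err_t1 : error one iteration earlier, Err(t-1)
    err_t2 : error two iterations earlier, Err(t-2)
    xi     : fixed step by which the learning rate is raised or lowered
    """
    if err_t1 > err_t and err_t2 > err_t:
        # Error fell below both earlier values: take larger steps.
        return eta + xi
    if err_t1 < err_t and err_t2 < err_t:
        # Error rose above both earlier values: take smaller steps.
        return eta - xi
    # Mixed signal: leave the learning rate unchanged.
    return eta


eta_up = adapt_learning_rate(0.10, err_t=1.0, err_t1=2.0, err_t2=3.0, xi=0.01)
eta_down = adapt_learning_rate(0.10, err_t=3.0, err_t1=2.0, err_t2=1.0, xi=0.01)
eta_same = adapt_learning_rate(0.10, err_t=2.0, err_t1=1.0, err_t2=3.0, xi=0.01)
```

The rule as stated places no lower bound on the learning rate, so a caller may want to choose `xi` small relative to the starting value.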


Figure 8. Reconstruction with Hybrid Component

V. CONCLUSION

In this paper, a novel 3-D image reconstruction approach which considers both the diffuse and specular components of the reflectance model simultaneously has been proposed. Two neural networks with symmetric structures were used to estimate these two components separately and to combine them with an adaptive ratio for each point on the object surface. This paper also attempted to reduce the distortion caused by albedo variation by dividing each pixel's intensity by the corresponding albedo value. These intensity values were then fed into the network to learn the normal vectors of the surface by the back-propagation learning algorithm. Parameters such as the light source and viewing direction can be obtained from the neural network. The surface normal vectors thus obtained can then be applied to 3-D surface reconstruction by an integration method.

REFERENCES

[1] Yuefang Gao, Jianzhong Cao, and Fei Luo, "A Hybrid-reflectance-modeled and Neural-network based Shape from Shading Algorithm," IEEE International Conference on Control and Automation, Guangzhou, China, May 30 to June 1, 2007.

[2] Wen-Chang Cheng, "Neural-Network-Based Photometric Stereo for 3D Surface Reconstruction," 2006 International Joint Conference on Neural Networks, Sheraton Vancouver Wall Centre Hotel, Vancouver, BC, Canada, July 16-21, 2006.

[3] Chin-Teng Lin, Wen-Chang Cheng, and Sheng-Fu Liang, "Neural-Network-Based Adaptive Hybrid-Reflectance Model for 3-D Surface Reconstruction," IEEE Transactions on Neural Networks, Vol. 16, No. 6, November 2005.

[4] Zhongquan Wu and Lingxiao Li, "A line-integration based method for depth recovery from surface normals," IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 43, No. 1, July 1988.

[5] S. Georghiades, P. N. Belhumeur, and D. J. Kriegman, "From few to many: illumination cone models for face recognition under variable lighting and pose," IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol. 23, No. 6, June 2001.

Shalini Bhatia was born on August 08, 1971. She received the B.E. degree in Computer Engineering from Sri Sant Gajanan Maharaj College of Engineering, Amravati University, Shegaon, Maharashtra, India in 1993, and the M.E. degree in Computer Engineering from Thadomal Shahani Engineering College, Mumbai, Maharashtra, India in 2003. She has been associated with Thadomal Shahani Engineering College since 1995, where she has worked as Lecturer in the Computer Engineering Department from Jan 1995 to Dec 2004 and as Assistant Professor from Dec 2004 to Dec 2005. She has published a number of technical papers in National and International Conferences. She is a member of CSI and of SIGAI, which is a part of CSI.

Vincy Elizabeth Joseph was born on February 5, 1982. She received the B.E. degree in Electronics and Communication Engineering from College of Engineering, Kidangoor, Cochin University of Science and Technology, Cochin, Kerala. She is pursuing the M.E. degree in Computer Engineering at Thadomal Shahani Engineering College, Mumbai, Maharashtra, India. She has been working at St. Francis Institute of Technology, Borivli (W), Mumbai, as Lecturer in the Electronics and Telecommunication Department from 2004 to 2005 and as Lecturer in the Computer Engineering Department since 2005. Her research interests include Image Processing, Neural Networks, Data Encryption and Data Compression.

Das könnte Ihnen auch gefallen

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5795)

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (588)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (74)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (895)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (400)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (345)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2259)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (266)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (121)

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

- MyLLP Customer Portal User Guide - RegistrationDokument10 SeitenMyLLP Customer Portal User Guide - RegistrationEverboleh ChowNoch keine Bewertungen

- LanDokument5 SeitenLanannamyemNoch keine Bewertungen

- ContainerDokument12 SeitenContainerlakkekepsuNoch keine Bewertungen

- Brochure Otis Gen360 enDokument32 SeitenBrochure Otis Gen360 enshimanshkNoch keine Bewertungen

- t410 600w 4 Amp PDFDokument8 Seitent410 600w 4 Amp PDFJose M PeresNoch keine Bewertungen

- H-D 2015 Touring Models Parts CatalogDokument539 SeitenH-D 2015 Touring Models Parts CatalogGiulio Belmondo100% (1)

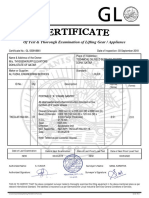

- Of Test & Thorough Examination of Lifting Gear / Appliance: - QatarDokument12 SeitenOf Test & Thorough Examination of Lifting Gear / Appliance: - QatarChaimaNoch keine Bewertungen

- Resetting of Computers - A320: Systems That Can Be Reset in The Air or On The GroundDokument8 SeitenResetting of Computers - A320: Systems That Can Be Reset in The Air or On The Groundanarko arsipelNoch keine Bewertungen

- TECDCT-2145 Cisco FabricPathDokument156 SeitenTECDCT-2145 Cisco FabricPathWeiboraoNoch keine Bewertungen

- March Aprilwnissue2016Dokument100 SeitenMarch Aprilwnissue2016Upender BhatiNoch keine Bewertungen

- Siva QADokument6 SeitenSiva QAsivakanth mNoch keine Bewertungen

- API Valves: A. API Gate Valves B. Mud Gate Valves C. API Plug ValvesDokument15 SeitenAPI Valves: A. API Gate Valves B. Mud Gate Valves C. API Plug Valveskaveh-bahiraeeNoch keine Bewertungen

- Exedy 2015 Sports Clutch Catalog WebDokument80 SeitenExedy 2015 Sports Clutch Catalog WebfjhfjNoch keine Bewertungen

- Prospects of Bulk Power EHV and UHV Transmission (PDFDrive)Dokument20 SeitenProspects of Bulk Power EHV and UHV Transmission (PDFDrive)Prashant TrivediNoch keine Bewertungen

- Delhi Subordinate Services Selection Board: Government of NCT of DelhiDokument60 SeitenDelhi Subordinate Services Selection Board: Government of NCT of Delhiakkshita_upadhyay2003Noch keine Bewertungen

- Differential Pressure Switch RH3Dokument2 SeitenDifferential Pressure Switch RH3Jairo ColeccionistaNoch keine Bewertungen

- Oracle IRecruitment - By: Hamdy MohamedDokument49 SeitenOracle IRecruitment - By: Hamdy Mohamedhamdy2001100% (1)

- Temperature Measuring Instrument (1-Channel) : Testo 925 - For Fast and Reliable Measurements in The HVAC FieldDokument8 SeitenTemperature Measuring Instrument (1-Channel) : Testo 925 - For Fast and Reliable Measurements in The HVAC FieldMirwansyah TanjungNoch keine Bewertungen

- BCS 031 Solved Assignments 2016Dokument15 SeitenBCS 031 Solved Assignments 2016Samyak JainNoch keine Bewertungen

- It Implementation-Issues, Opportunities, Challenges, ProblemsDokument9 SeitenIt Implementation-Issues, Opportunities, Challenges, ProblemsAnnonymous963258Noch keine Bewertungen

- Cs - cp56 64 74 Specalog (Qehq1241)Dokument20 SeitenCs - cp56 64 74 Specalog (Qehq1241)firman manaluNoch keine Bewertungen

- VM 6083 - 60B1 Data SheetDokument3 SeitenVM 6083 - 60B1 Data SheetMinh HoàngNoch keine Bewertungen

- Ansi z245 2 1997Dokument31 SeitenAnsi z245 2 1997camohunter71Noch keine Bewertungen

- Lit MotorsDokument11 SeitenLit MotorsJohnson7893Noch keine Bewertungen

- Dri-Su-1824-Q-Induction MotorDokument8 SeitenDri-Su-1824-Q-Induction MotorTaufiq Hidayat0% (1)

- Architects DONEDokument10 SeitenArchitects DONEAyu Amrish Gupta0% (1)

- Vaas Head Office DetailsDokument8 SeitenVaas Head Office DetailsDanielle JohnsonNoch keine Bewertungen

- Report On The Heritage City ProjectDokument23 SeitenReport On The Heritage City ProjectL'express Maurice100% (2)