
Ann 4

Uploaded by Anirut M Tktt


Associative Learning

Simple Associative Network

$$a = \mathrm{hardlim}(wp + b) = \mathrm{hardlim}(wp - 0.5)$$

$$p = \begin{cases} 1, & \text{stimulus} \\ 0, & \text{no stimulus} \end{cases} \qquad a = \begin{cases} 1, & \text{response} \\ 0, & \text{no response} \end{cases}$$

Binary Association

Real Value Association

Bipolar Association

Hamming Distance

Enhanced Hamming Distance

Enhanced Binary Association

Interference

X-OR Interference

Banana Associator

Unconditioned stimulus:

$$p^0 = \begin{cases} 1, & \text{shape detected} \\ 0, & \text{shape not detected} \end{cases}$$

Conditioned stimulus:

$$p = \begin{cases} 1, & \text{smell detected} \\ 0, & \text{smell not detected} \end{cases}$$

Unsupervised Hebb Rule

$$w_{ij}(q) = w_{ij}(q-1) + \alpha\, a_i(q)\, p_j(q) \qquad \text{(from (7.5))}$$

The learning rate $\alpha$ defines the rate of association.

Vector Form:

$$\mathbf{W}(q) = \mathbf{W}(q-1) + \alpha\, \mathbf{a}(q)\, \mathbf{p}^T(q)$$

Training Sequence:

$$\mathbf{p}(1),\ \mathbf{p}(2),\ \ldots,\ \mathbf{p}(Q)$$

Banana Recognition Example

Initial Weights:

$$w^0 = 1, \qquad w(0) = 0$$

Training Sequence:

$$\{p^0(1) = 0,\ p(1) = 1\},\ \{p^0(2) = 1,\ p(2) = 1\},\ \ldots$$

With $\alpha = 1$:

$$w(q) = w(q-1) + a(q)\, p(q)$$

First Iteration (sight fails):

$$a(1) = \mathrm{hardlim}\bigl(w^0 p^0(1) + w(0)\, p(1) - 0.5\bigr) = \mathrm{hardlim}(1 \cdot 0 + 0 \cdot 1 - 0.5) = 0 \quad \text{(no response)}$$

$$w(1) = w(0) + a(1)\, p(1) = 0 + 0 \cdot 1 = 0$$

Example

Second Iteration (sight works):

$$a(2) = \mathrm{hardlim}\bigl(w^0 p^0(2) + w(1)\, p(2) - 0.5\bigr) = \mathrm{hardlim}(1 \cdot 1 + 0 \cdot 1 - 0.5) = 1 \quad \text{(banana)}$$

$$w(2) = w(1) + a(2)\, p(2) = 0 + 1 \cdot 1 = 1$$

Third Iteration (sight fails):

$$a(3) = \mathrm{hardlim}\bigl(w^0 p^0(3) + w(2)\, p(3) - 0.5\bigr) = \mathrm{hardlim}(1 \cdot 0 + 1 \cdot 1 - 0.5) = 1 \quad \text{(banana)}$$

$$w(3) = w(2) + a(3)\, p(3) = 1 + 1 \cdot 1 = 2$$

Banana will now be detected if either sensor works.
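The three iterations of the banana example can be replayed in a few lines. This is a minimal sketch, not part of the original slides: the function names `hardlim` and `hebb_train` are illustrative, and the third training pair (sight fails, smell present) is taken from the third iteration of the example.

```python
def hardlim(n):
    """Hard limit transfer function: 1 if n >= 0, otherwise 0."""
    return 1 if n >= 0 else 0


def hebb_train(sequence, w0=1.0, w=0.0, alpha=1.0, bias=-0.5):
    """Apply the unsupervised Hebb rule w(q) = w(q-1) + alpha*a(q)*p(q).

    sequence holds (p0, p) pairs: p0 is the unconditioned stimulus (shape),
    p is the conditioned stimulus (smell). Returns a list of (a, w) per step.
    """
    history = []
    for p0, p in sequence:
        a = hardlim(w0 * p0 + w * p + bias)
        w = w + alpha * a * p
        history.append((a, w))
    return history


# Sight fails, sight works, sight fails; smell is present every time.
sequence = [(0, 1), (1, 1), (0, 1)]
history = hebb_train(sequence)
for q, (a, w) in enumerate(history, start=1):
    print(f"q={q}: a={a}, w={w}")
```

Continuing the loop with more pairs shows the weight increasing by 1 at every presentation, which is the unbounded growth discussed on the next slide.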

Problems with Hebb Rule

Weights can become arbitrarily large (they keep being updated every time an input is presented).

There is no mechanism for weights to decrease (this becomes a problem as soon as the inputs contain any noise).

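Hebb Rule with Decay

$$\mathbf{W}(q) = \mathbf{W}(q-1) + \alpha\, \mathbf{a}(q)\, \mathbf{p}^T(q) - \gamma\, \mathbf{W}(q-1) \qquad \text{(from (7.45))}$$

$$\mathbf{W}(q) = (1-\gamma)\, \mathbf{W}(q-1) + \alpha\, \mathbf{a}(q)\, \mathbf{p}^T(q)$$

Here $\gamma$ is the decay rate, $0 \le \gamma \le 1$.

This keeps the weight matrix from growing without bound, which can be demonstrated by setting both $a_i$ and $p_j$ to 1:

$$w_{ij}^{max} = (1-\gamma)\, w_{ij}^{max} + \alpha\, a_i\, p_j$$

$$w_{ij}^{max} = (1-\gamma)\, w_{ij}^{max} + \alpha$$

$$w_{ij}^{max} = \frac{\alpha}{\gamma}$$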

Example: Banana Associator

$$\gamma = 0.1, \qquad \alpha = 1$$

First Iteration (sight fails):

$$a(1) = \mathrm{hardlim}\bigl(w^0 p^0(1) + w(0)\, p(1) - 0.5\bigr) = \mathrm{hardlim}(1 \cdot 0 + 0 \cdot 1 - 0.5) = 0 \quad \text{(no response)}$$

$$w(1) = w(0) + a(1)\, p(1) - 0.1\, w(0) = 0 + 0 \cdot 1 - 0.1(0) = 0$$

Second Iteration (sight works):

$$a(2) = \mathrm{hardlim}\bigl(w^0 p^0(2) + w(1)\, p(2) - 0.5\bigr) = \mathrm{hardlim}(1 \cdot 1 + 0 \cdot 1 - 0.5) = 1 \quad \text{(banana)}$$

$$w(2) = w(1) + a(2)\, p(2) - 0.1\, w(1) = 0 + 1 \cdot 1 - 0.1(0) = 1$$

Example

Third Iteration (sight fails):

$$a(3) = \mathrm{hardlim}\bigl(w^0 p^0(3) + w(2)\, p(3) - 0.5\bigr) = \mathrm{hardlim}(1 \cdot 0 + 1 \cdot 1 - 0.5) = 1 \quad \text{(banana)}$$

$$w(3) = w(2) + a(3)\, p(3) - 0.1\, w(2) = 1 + 1 \cdot 1 - 0.1(1) = 1.9$$

[Figure: weight versus iteration (0 to 30) in two panels, "Hebb Rule" (weight axis 0 to 30) and "Hebb with Decay" (weight axis 0 to 10)]

$$w_{ij}^{max} = \frac{\alpha}{\gamma} = \frac{1}{0.1} = 10$$
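The bound $\alpha/\gamma$ can be checked numerically. The sketch below is illustrative only: `hebb_decay_train` is a hypothetical helper name, and the long training sequence (the same two stimulus pairs repeated 100 times) is an assumption made to show the saturation, not data from the slides.

```python
def hardlim(n):
    """Hard limit transfer function: 1 if n >= 0, otherwise 0."""
    return 1 if n >= 0 else 0


def hebb_decay_train(sequence, w0=1.0, w=0.0, alpha=1.0, gamma=0.1, bias=-0.5):
    """Hebb rule with decay: w(q) = (1 - gamma)*w(q-1) + alpha*a(q)*p(q)."""
    weights = []
    for p0, p in sequence:
        a = hardlim(w0 * p0 + w * p + bias)
        w = (1.0 - gamma) * w + alpha * a * p
        weights.append(w)
    return weights


# The three iterations worked through in the example.
first = hebb_decay_train([(0, 1), (1, 1), (0, 1)])

# Assumed longer sequence: the weight approaches alpha / gamma = 10.
long_run = hebb_decay_train([(0, 1), (1, 1)] * 100)

print(first)
print(long_run[-1])
```

Unlike the plain Hebb rule, the weight here levels off instead of growing by a fixed amount at every presentation.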

Problem of Hebb with Decay

Associations will decay away if stimuli are not occasionally presented.

If $a_i = 0$, then

$$w_{ij}(q) = (1-\gamma)\, w_{ij}(q-1)$$

If $\gamma = 0.1$, this becomes

$$w_{ij}(q) = 0.9\, w_{ij}(q-1)$$

Therefore the weight decays by 10% at each iteration where there is no stimulus.

[Figure: weight versus iteration, horizontal axis 0 to 30, vertical axis 0 to 3]
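The 10% decay per iteration compounds geometrically. As a small sketch (the starting weight of 1.0 and the helper name `decay_without_stimulus` are assumptions chosen for illustration):

```python
def decay_without_stimulus(w_start, gamma, steps):
    """Iterate w(q) = (1 - gamma) * w(q-1), the update when a_i = 0."""
    weights = [w_start]
    for _ in range(steps):
        weights.append((1.0 - gamma) * weights[-1])
    return weights


trace = decay_without_stimulus(w_start=1.0, gamma=0.1, steps=30)
print(trace[1], trace[10], trace[30])
```

After 30 iterations without a stimulus less than 5% of the original association is left, which is the behaviour the instar rule is designed to avoid.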

Instar (Recognition Network)

(A solution to the decay rate problem)

A vector input and a scalar output, performing recognition tasks.

Instar Operation

$$a = \mathrm{hardlim}(\mathbf{W}\mathbf{p} + b) = \mathrm{hardlim}({}_1\mathbf{w}^T \mathbf{p} + b)$$

The instar will be active when

$${}_1\mathbf{w}^T \mathbf{p} \ge -b$$

or

$${}_1\mathbf{w}^T \mathbf{p} = \|{}_1\mathbf{w}\|\, \|\mathbf{p}\| \cos\theta \ge -b$$

For normalized vectors, the largest inner product occurs when the angle between the weight vector and the input vector is zero, that is, when the input vector is equal to the weight vector.

The rows of a weight matrix represent patterns to be recognized.

Vector Recognition

If we set

$$b = -\|{}_1\mathbf{w}\|\, \|\mathbf{p}\|$$

the instar will only be active when $\theta = 0$ (it recognizes only the pattern ${}_1\mathbf{w}$).

If we set

$$b > -\|{}_1\mathbf{w}\|\, \|\mathbf{p}\|$$

the instar will be active for a range of angles.

As $b$ is increased, more patterns (over a wider range of $\theta$) will activate the instar.

Instar Rule

Hebb rule:

$$w_{ij}(q) = w_{ij}(q-1) + \alpha\, a_i(q)\, p_j(q)$$

Hebb with decay, modified so that learning and forgetting will only occur when the neuron is active (Instar Rule):

$$w_{ij}(q) = w_{ij}(q-1) + \alpha\, a_i(q)\, p_j(q) - \gamma\, a_i(q)\, w_{ij}(q-1)$$

or, with $\gamma = \alpha$,

$$w_{ij}(q) = w_{ij}(q-1) + \alpha\, a_i(q)\, \bigl(p_j(q) - w_{ij}(q-1)\bigr)$$

Vector Form:

$${}_i\mathbf{w}(q) = {}_i\mathbf{w}(q-1) + \alpha\, a_i(q)\, \bigl(\mathbf{p}(q) - {}_i\mathbf{w}(q-1)\bigr)$$

Graphical Representation

For the case where the instar is active ($a_i = 1$):

$${}_i\mathbf{w}(q) = {}_i\mathbf{w}(q-1) + \alpha\, \bigl(\mathbf{p}(q) - {}_i\mathbf{w}(q-1)\bigr)$$

or

$${}_i\mathbf{w}(q) = (1-\alpha)\, {}_i\mathbf{w}(q-1) + \alpha\, \mathbf{p}(q)$$

For the case where the instar is inactive ($a_i = 0$):

$${}_i\mathbf{w}(q) = {}_i\mathbf{w}(q-1)$$

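Example

$$p^0 = \begin{cases} 1, & \text{orange detected visually} \\ 0, & \text{orange not detected} \end{cases} \qquad \mathbf{p} = \begin{bmatrix} \text{shape} \\ \text{texture} \\ \text{weight} \end{bmatrix}$$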

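Training

$$\mathbf{W}(0) = {}_1\mathbf{w}^T(0) = \begin{bmatrix} 0 & 0 & 0 \end{bmatrix}$$

$$\left\{ p^0(1) = 0,\ \mathbf{p}(1) = \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix} \right\},\ \left\{ p^0(2) = 1,\ \mathbf{p}(2) = \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix} \right\},\ \ldots$$

First Iteration ($\alpha = 1$):

$$a(1) = \mathrm{hardlim}\bigl(w^0 p^0(1) + \mathbf{W}\mathbf{p}(1) - 2\bigr) = \mathrm{hardlim}\left( 3 \cdot 0 + \begin{bmatrix} 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix} - 2 \right) = 0 \quad \text{(no response)}$$

$${}_1\mathbf{w}(1) = {}_1\mathbf{w}(0) + a(1)\bigl(\mathbf{p}(1) - {}_1\mathbf{w}(0)\bigr) = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} + 0 \left( \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix} - \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} \right) = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}$$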

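Further Training

$$a(2) = \mathrm{hardlim}\bigl(w^0 p^0(2) + \mathbf{W}\mathbf{p}(2) - 2\bigr) = \mathrm{hardlim}\left( 3 \cdot 1 + \begin{bmatrix} 0 & 0 & 0 \end{bmatrix} \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix} - 2 \right) = 1 \quad \text{(orange)}$$

$${}_1\mathbf{w}(2) = {}_1\mathbf{w}(1) + a(2)\bigl(\mathbf{p}(2) - {}_1\mathbf{w}(1)\bigr) = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} + 1 \left( \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix} - \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} \right) = \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix}$$

$$a(3) = \mathrm{hardlim}\bigl(w^0 p^0(3) + \mathbf{W}\mathbf{p}(3) - 2\bigr) = \mathrm{hardlim}\left( 3 \cdot 0 + \begin{bmatrix} 1 & -1 & -1 \end{bmatrix} \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix} - 2 \right) = 1 \quad \text{(orange)}$$

$${}_1\mathbf{w}(3) = {}_1\mathbf{w}(2) + a(3)\bigl(\mathbf{p}(3) - {}_1\mathbf{w}(2)\bigr) = \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix} + 1 \left( \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix} - \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix} \right) = \begin{bmatrix} 1 \\ -1 \\ -1 \end{bmatrix}$$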

Orange will now be detected if either set of sensors works.
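The orange example can be replayed with a short script. This is a sketch under stated assumptions: the measurement vector is taken as [1, -1, -1] (shape, texture, weight), the visual weight is 3 and the bias is -2 as in the worked iterations, and the helper name `instar_train` is illustrative.

```python
def hardlim(n):
    """Hard limit transfer function: 1 if n >= 0, otherwise 0."""
    return 1 if n >= 0 else 0


def instar_train(sequence, w, w0=3.0, bias=-2.0, alpha=1.0):
    """Instar rule: w(q) = w(q-1) + alpha * a(q) * (p(q) - w(q-1)).

    sequence holds (p0, p) pairs: p0 is the visual detection flag and
    p is the measurement vector. Returns the outputs and the final weights.
    """
    outputs = []
    for p0, p in sequence:
        n = w0 * p0 + sum(wi * pi for wi, pi in zip(w, p)) + bias
        a = hardlim(n)
        # The weights only move (learn and forget) when the neuron is active.
        w = [wi + alpha * a * (pi - wi) for wi, pi in zip(w, p)]
        outputs.append(a)
    return outputs, w


orange = [1, -1, -1]  # assumed measurement vector
sequence = [(0, orange), (1, orange), (0, orange)]
outputs, weights = instar_train(sequence, w=[0.0, 0.0, 0.0])
print(outputs, weights)
```

Because the update is gated by `a`, presenting inputs while the neuron is inactive leaves the learned weights untouched, in contrast to the Hebb rule with decay.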

Kohonen Rule

$${}_i\mathbf{w}(q) = {}_i\mathbf{w}(q-1) + \alpha\, \bigl(\mathbf{p}(q) - {}_i\mathbf{w}(q-1)\bigr), \qquad \text{for } i \in X(q)$$

Learning occurs when the neuron's index $i$ is a member of the set $X(q)$. We will see in the next chapter that this can be used to train all neurons in a given neighborhood.

Outstar (Recall Network)

A scalar input and a vector output, performing recall tasks.

Outstar Operation

Suppose we want the outstar to recall a certain pattern $\mathbf{a}^*$ whenever the input $p = 1$ is presented to the network. Let

$$\mathbf{W} = \mathbf{a}^*$$

Then, when $p = 1$,

$$\mathbf{a} = \mathrm{satlins}(\mathbf{W} p) = \mathrm{satlins}(\mathbf{a}^* \cdot 1) = \mathbf{a}^*$$

and the pattern is correctly recalled.

The columns of a weight matrix represent patterns to be recalled.

Outstar Rule

$$w_{ij}(q) = w_{ij}(q-1) + \alpha\, a_i(q)\, p_j(q) - \gamma\, p_j(q)\, w_{ij}(q-1)$$

For the instar rule we made the weight decay term of the Hebb rule proportional to the output of the network. For the outstar rule we make the weight decay term proportional to the input of the network.

If we make the decay rate $\gamma$ equal to the learning rate $\alpha$,

$$w_{ij}(q) = w_{ij}(q-1) + \alpha\, \bigl(a_i(q) - w_{ij}(q-1)\bigr)\, p_j(q)$$

Vector Form:

$$\mathbf{w}_j(q) = \mathbf{w}_j(q-1) + \alpha\, \bigl(\mathbf{a}(q) - \mathbf{w}_j(q-1)\bigr)\, p_j(q)$$

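Example - Pineapple Recall

Definitions

$$\mathbf{a} = \mathrm{satlins}(\mathbf{W}^0 \mathbf{p}^0 + \mathbf{W} p)$$

$$\mathbf{W}^0 = \begin{bmatrix} 1 & 0 & 0 \\ 0 & 1 & 0 \\ 0 & 0 & 1 \end{bmatrix} \qquad \mathbf{p}^0 = \begin{bmatrix} \text{shape} \\ \text{texture} \\ \text{weight} \end{bmatrix}$$

$$p = \begin{cases} 1, & \text{if a pineapple can be seen} \\ 0, & \text{otherwise} \end{cases} \qquad \mathbf{p}^{pineapple} = \begin{bmatrix} -1 \\ -1 \\ 1 \end{bmatrix}$$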

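Iteration 1

$$\left\{ \mathbf{p}^0(1) = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix},\ p(1) = 1 \right\},\ \left\{ \mathbf{p}^0(2) = \begin{bmatrix} -1 \\ -1 \\ 1 \end{bmatrix},\ p(2) = 1 \right\},\ \ldots$$

With $\alpha = 1$:

$$\mathbf{a}(1) = \mathrm{satlins}\left( \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} + \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} 1 \right) = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} \quad \text{(no response)}$$

$$\mathbf{w}_1(1) = \mathbf{w}_1(0) + \bigl(\mathbf{a}(1) - \mathbf{w}_1(0)\bigr)\, p(1) = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} + \left( \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} - \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} \right) 1 = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix}$$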

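Convergence

$$\mathbf{a}(2) = \mathrm{satlins}\left( \begin{bmatrix} -1 \\ -1 \\ 1 \end{bmatrix} + \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} 1 \right) = \begin{bmatrix} -1 \\ -1 \\ 1 \end{bmatrix} \quad \text{(measurements given)}$$

$$\mathbf{w}_1(2) = \mathbf{w}_1(1) + \bigl(\mathbf{a}(2) - \mathbf{w}_1(1)\bigr)\, p(2) = \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} + \left( \begin{bmatrix} -1 \\ -1 \\ 1 \end{bmatrix} - \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} \right) 1 = \begin{bmatrix} -1 \\ -1 \\ 1 \end{bmatrix}$$

$$\mathbf{a}(3) = \mathrm{satlins}\left( \begin{bmatrix} 0 \\ 0 \\ 0 \end{bmatrix} + \begin{bmatrix} -1 \\ -1 \\ 1 \end{bmatrix} 1 \right) = \begin{bmatrix} -1 \\ -1 \\ 1 \end{bmatrix} \quad \text{(measurements recalled)}$$

$$\mathbf{w}_1(3) = \mathbf{w}_1(2) + \bigl(\mathbf{a}(3) - \mathbf{w}_1(2)\bigr)\, p(3) = \begin{bmatrix} -1 \\ -1 \\ 1 \end{bmatrix} + \left( \begin{bmatrix} -1 \\ -1 \\ 1 \end{bmatrix} - \begin{bmatrix} -1 \\ -1 \\ 1 \end{bmatrix} \right) 1 = \begin{bmatrix} -1 \\ -1 \\ 1 \end{bmatrix}$$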
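The pineapple recall example can be sketched in the same way. Assumptions, labeled here and in the comments: the pineapple measurements are taken as [-1, -1, 1], the matrix $\mathbf{W}^0$ is the identity (so $\mathbf{W}^0\mathbf{p}^0 = \mathbf{p}^0$), and the third presentation has no measurements but a visible pineapple. The helper name `outstar_train` is illustrative.

```python
def satlins(n):
    """Symmetric saturating linear transfer function, clipped to [-1, 1]."""
    return max(-1.0, min(1.0, n))


def outstar_train(sequence, w, alpha=1.0):
    """Outstar rule: w(q) = w(q-1) + alpha * (a(q) - w(q-1)) * p(q).

    sequence holds (p0, p) pairs: p0 is the measurement vector and p is the
    scalar "pineapple can be seen" flag. W0 is assumed to be the identity.
    """
    recalled = []
    for p0, p in sequence:
        a = [satlins(p0_i + w_i * p) for p0_i, w_i in zip(p0, w)]
        w = [w_i + alpha * (a_i - w_i) * p for a_i, w_i in zip(a, w)]
        recalled.append(a)
    return recalled, w


pineapple = [-1.0, -1.0, 1.0]  # assumed measurement vector
sequence = [([0, 0, 0], 1), (pineapple, 1), ([0, 0, 0], 1)]
recalled, weights = outstar_train(sequence, w=[0.0, 0.0, 0.0])
print(recalled)
print(weights)
```

On the third presentation the measurements are missing, yet the output equals the stored pattern because the weight column now holds it.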

Competitive Networks

Hamming Network (from Figure 3.5)

Instar layer

Competitive layer

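Layer 1 (Correlation): recognize multiple patterns

We want the network to recognize the following prototype vectors:

$$\{\mathbf{p}_1, \mathbf{p}_2, \ldots, \mathbf{p}_Q\}$$

The first layer weight matrix and bias vector are given by:

$$\mathbf{W}^1 = \begin{bmatrix} {}_1\mathbf{w}^T \\ {}_2\mathbf{w}^T \\ \vdots \\ {}_S\mathbf{w}^T \end{bmatrix} = \begin{bmatrix} \mathbf{p}_1^T \\ \mathbf{p}_2^T \\ \vdots \\ \mathbf{p}_Q^T \end{bmatrix} \qquad \mathbf{b}^1 = \begin{bmatrix} R \\ R \\ \vdots \\ R \end{bmatrix}$$

The response of the first layer is:

$$\mathbf{a}^1 = \mathbf{W}^1 \mathbf{p} + \mathbf{b}^1 = \begin{bmatrix} \mathbf{p}_1^T \mathbf{p} + R \\ \mathbf{p}_2^T \mathbf{p} + R \\ \vdots \\ \mathbf{p}_Q^T \mathbf{p} + R \end{bmatrix}$$

The prototype closest to the input vector produces the largest response.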

Layer 2 (Competition)

The second layer is initialized with the output of the first layer:

$$\mathbf{a}^2(0) = \mathbf{a}^1$$

$$\mathbf{a}^2(t+1) = \mathrm{poslin}\bigl(\mathbf{W}^2 \mathbf{a}^2(t)\bigr)$$

Lateral inhibition:

$$w_{ij}^2 = \begin{cases} 1, & \text{if } i = j \\ -\varepsilon, & \text{otherwise} \end{cases} \qquad 0 < \varepsilon < \frac{1}{S-1}$$

For each neuron $i$:

$$a_i^2(t+1) = \mathrm{poslin}\left( a_i^2(t) - \varepsilon \sum_{j \ne i} a_j^2(t) \right)$$

The neuron with the largest initial condition will win the competition.
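The recurrent competition can be simulated directly from the per-neuron update. The sketch below is illustrative: the first-layer output `a1` and the value of `epsilon` are made-up numbers (with $S = 3$, `epsilon` must lie below $1/(S-1) = 0.5$), and `compete` is a hypothetical helper name.

```python
def poslin(n):
    """Positive linear transfer function: max(0, n)."""
    return max(0.0, n)


def compete(a1, epsilon, max_steps=1000):
    """Iterate a_i(t+1) = poslin(a_i(t) - epsilon * sum_{j != i} a_j(t))
    until the layer output stops changing."""
    a = list(a1)
    for _ in range(max_steps):
        total = sum(a)
        nxt = [poslin(ai - epsilon * (total - ai)) for ai in a]
        if nxt == a:
            break
        a = nxt
    return a


a1 = [4.0, 2.0, 3.0]   # hypothetical first-layer response
result = compete(a1, epsilon=0.4)
print(result)
```

Only the neuron that started with the largest value keeps a nonzero output; every other neuron is driven to zero by the lateral inhibition.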

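Competitive Layer

$$\mathbf{a} = \mathrm{compet}(\mathbf{n})$$

$$a_i = \begin{cases} 1, & i = i^* \\ 0, & i \ne i^* \end{cases} \qquad n_{i^*} \ge n_i\ \ \forall i, \quad \text{and} \quad i^* \le i\ \ \forall\, n_i = n_{i^*}$$

$$\mathbf{n} = \mathbf{W}\mathbf{p} = \begin{bmatrix} {}_1\mathbf{w}^T \\ {}_2\mathbf{w}^T \\ \vdots \\ {}_S\mathbf{w}^T \end{bmatrix} \mathbf{p} = \begin{bmatrix} {}_1\mathbf{w}^T \mathbf{p} \\ {}_2\mathbf{w}^T \mathbf{p} \\ \vdots \\ {}_S\mathbf{w}^T \mathbf{p} \end{bmatrix} = \begin{bmatrix} L^2 \cos\theta_1 \\ L^2 \cos\theta_2 \\ \vdots \\ L^2 \cos\theta_S \end{bmatrix}$$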

Competitive Learning

Instar Rule:

$${}_i\mathbf{w}(q) = {}_i\mathbf{w}(q-1) + \alpha\, a_i(q)\, \bigl(\mathbf{p}(q) - {}_i\mathbf{w}(q-1)\bigr)$$

For the competitive network, the winning neuron has an output of 1, and the other neurons have an output of 0.

Kohonen Rule:

$${}_{i^*}\mathbf{w}(q) = {}_{i^*}\mathbf{w}(q-1) + \alpha\, \bigl(\mathbf{p}(q) - {}_{i^*}\mathbf{w}(q-1)\bigr)$$

$${}_{i^*}\mathbf{w}(q) = (1-\alpha)\, {}_{i^*}\mathbf{w}(q-1) + \alpha\, \mathbf{p}(q)$$

$${}_i\mathbf{w}(q) = {}_i\mathbf{w}(q-1), \qquad i \ne i^*$$
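One step of competitive learning, winner selection followed by the Kohonen update of the winning row, might look as follows. The weight rows and the input vector are hypothetical unit-length vectors chosen for illustration, and `competitive_step` is not a name from the slides.

```python
def compet(n):
    """Competitive transfer function: 1 for the largest net input
    (lowest index on ties), 0 elsewhere."""
    winner = n.index(max(n))
    return [1 if i == winner else 0 for i in range(len(n))]


def competitive_step(W, p, alpha):
    """Return (winner index, new weight matrix) after one Kohonen update."""
    n = [sum(w_j * p_j for w_j, p_j in zip(row, p)) for row in W]
    a = compet(n)
    i_star = a.index(1)
    new_W = [list(row) for row in W]
    # Only the winning row moves: (1 - alpha) * w + alpha * p.
    new_W[i_star] = [(1.0 - alpha) * w_j + alpha * p_j
                     for w_j, p_j in zip(W[i_star], p)]
    return i_star, new_W


W = [[1.0, 0.0], [0.0, 1.0]]   # hypothetical normalized weight rows
p = [0.6, 0.8]                 # hypothetical normalized input
i_star, W_new = competitive_step(W, p, alpha=0.5)
print(i_star, W_new)
```

The winning row ends up halfway between its old position and the input, while the losing row is unchanged.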

Graphical Representation

$${}_{i^*}\mathbf{w}(q) = {}_{i^*}\mathbf{w}(q-1) + \alpha\, \bigl(\mathbf{p}(q) - {}_{i^*}\mathbf{w}(q-1)\bigr)$$

$${}_{i^*}\mathbf{w}(q) = (1-\alpha)\, {}_{i^*}\mathbf{w}(q-1) + \alpha\, \mathbf{p}(q)$$

Example

Four Iterations

Typical Convergence (Clustering)

[Figure: weights and input vectors, before training and after training]

Dead Units (p14-9)

One problem with competitive learning is that neurons with initial weights far

from any input vector may never win.

Solution: Add a negative bias to each neuron, and increase the magnitude of the

bias as the neuron wins. This will make it harder to win if a neuron has won often.

This is called a conscience.

[Figure: a dead unit]

Stability (p14-10)

[Figure: weight vectors ${}_1\mathbf{w}$ and ${}_2\mathbf{w}$ at iterations 0 and 8, shown with the input vectors $\mathbf{p}_1$ to $\mathbf{p}_8$]

If the input vectors don't fall into nice clusters, then for large learning rates the presentation of each input vector may modify the configuration so that the system will undergo continual evolution.

Competitive Layers in Biology

On-Center/Off-Surround Connections for Competition

Weights in the competitive layer of the Hamming network:

$$w_{i,j} = \begin{cases} 1, & \text{if } i = j \\ -\varepsilon, & \text{if } i \ne j \end{cases}$$

Weights assigned based on distance:

$$w_{i,j} = \begin{cases} 1, & \text{if } d_{i,j} = 0 \\ -\varepsilon, & \text{if } d_{i,j} > 0 \end{cases}$$

Mexican-Hat Function

Feature Maps

Update weight vectors in a neighborhood of the winning neuron:

$${}_i\mathbf{w}(q) = {}_i\mathbf{w}(q-1) + \alpha\, \bigl(\mathbf{p}(q) - {}_i\mathbf{w}(q-1)\bigr)$$

$${}_i\mathbf{w}(q) = (1-\alpha)\, {}_i\mathbf{w}(q-1) + \alpha\, \mathbf{p}(q), \qquad i \in N_{i^*}(d)$$

$$N_i(d) = \{ j,\ d_{i,j} \le d \}$$

$$N_{13}(1) = \{8, 12, 13, 14, 18\}$$

$$N_{13}(2) = \{3, 7, 8, 9, 11, 12, 13, 14, 15, 17, 18, 19, 23\}$$
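The two neighborhood sets listed above are reproduced if the neurons are laid out on a 5x5 grid, numbered 1 to 25 row by row, with $d_{i,j}$ measured as city-block distance. Both the grid layout and the distance measure are assumptions inferred from the listed sets; `neighborhood` is a hypothetical helper.

```python
def neighborhood(i, d, rows=5, cols=5):
    """Return N_i(d) = {j : d_ij <= d} on a rows x cols grid.

    Neurons are numbered from 1, row by row (assumed layout), and d_ij is
    the city-block distance between grid positions (assumed metric).
    """
    r_i, c_i = divmod(i - 1, cols)
    members = set()
    for j in range(1, rows * cols + 1):
        r_j, c_j = divmod(j - 1, cols)
        if abs(r_i - r_j) + abs(c_i - c_j) <= d:
            members.add(j)
    return members


print(sorted(neighborhood(13, 1)))
print(sorted(neighborhood(13, 2)))
```

A neuron near the edge of the grid simply has a smaller neighborhood, for example `neighborhood(1, 1)` contains only neurons 1, 2 and 6.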

Self Organizing Maps: Clustering

With unsupervised learning there is no instruction and the network is left to

cluster patterns. All of the patterns within a cluster will be judged as

being similar.

Cluster algorithms form groups referred to as clusters and the arrangement

of clusters should reflect two properties:

Patterns within a cluster should be similar in some way.

Clusters that are similar in some way should be close together.

The Self-organizing Feature Map

The input units correspond in number to the dimension of the training

vectors.

The output units act as prototypes.

This network has three inputs and five cluster units. Each unit in the input layer is connected to every

unit in the cluster layer.

How does a cluster unit act as a prototype?

[Figure: a network with inputs $x_1$ and $x_2$ connected to a cluster unit by weights $w_{1,1}$ and $w_{2,1}$; in the input space the unit $p_1$ sits at $x_1$ coordinate $w_{1,1}$ and $x_2$ coordinate $w_{2,1}$]

The input layer takes coordinates from the input space. The weights adapt during training, and when learning is complete each cluster unit will have a position in the input space determined by its weights.

Weight updates within a radius

[Figure: grid of cluster units marking the winning unit (the only one to update if radius = 0), the units to be updated when radius = 1, and the units to be updated when radius = 2]

The topology determines which units fall within a radius of the winning unit.

The winning unit will adapt its weights in a way that moves that cluster unit even closer to the training vector.

Algorithm

initialize weights to random values
while not HALT
    for each input vector
        for each cluster unit, calculate the distance from the training vector:
            $d_j = \sum_i (w_{ij} - x_i)^2$
        find unit j with the minimum distance
        update all weight vectors for units within the radius according to:
            $w_{ij}(n+1) = w_{ij}(n) + \eta(n)\,[x_i - w_{ij}(n)]$
    check to see if the learning rate or radius needs updating
    check HALT
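The algorithm above can be turned into a compact training loop. The following is a sketch under several assumptions that the slide leaves open: the cluster units sit on a one-dimensional line (so "within the radius" means index distance), HALT is a fixed number of epochs, the learning rate is multiplied by 0.9 per epoch, and the radius shrinks by one every five epochs. The data set and the name `train_som` are made up for illustration.

```python
import random


def train_som(data, n_units, epochs=20, eta=0.5, radius=1, seed=0):
    """Train a 1-D self-organizing map and return its weight vectors."""
    rng = random.Random(seed)
    dim = len(data[0])
    # Initialize weights to random values in [0, 1).
    weights = [[rng.random() for _ in range(dim)] for _ in range(n_units)]
    for epoch in range(1, epochs + 1):          # HALT: fixed epoch count
        for x in data:
            # Squared distance from every cluster unit to the input.
            dists = [sum((wi - xi) ** 2 for wi, xi in zip(w, x))
                     for w in weights]
            j = dists.index(min(dists))          # winning unit
            for k in range(n_units):
                if abs(k - j) <= radius:         # units within the radius
                    weights[k] = [wi + eta * (xi - wi)
                                  for wi, xi in zip(weights[k], x)]
        # Assumed schedules for the learning rate and the radius.
        eta *= 0.9
        if radius > 0 and epoch % 5 == 0:
            radius -= 1
    return weights


data = [[0.1, 0.2], [0.9, 0.8], [0.2, 0.1], [0.8, 0.9]]  # hypothetical data
som_weights = train_som(data, n_units=3)
print(som_weights)
```

Each update is a convex combination of the old weight and the input, so with inputs in the unit square the weights also stay inside it.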

Self-Organizing Maps

On each training input, all output nodes that are within a topological distance, $d_T$, of $D$ from the winner node will have their incoming weights modified.

$d_T(y_i, y_j)$ = number of nodes that must be traversed in the output layer in moving between output nodes $y_i$ and $y_j$.

$D$ is typically decreased as training proceeds.

[Figure: input layer fully interconnected with the output layer; output layer partially intraconnected]

Example

An SOFM has a 10x10 two-dimensional grid layout and the radius is initially set to 6. Find how many units will be updated after 1000 epochs if the winning unit is located in the extreme bottom right-hand corner of the grid and the radius is updated according to

r = r-1 if current_epoch mod 200 = 0

Assume that epoch numbering starts at 1.
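One way to work this exercise is to replay the radius schedule and then count grid units around the corner. The count depends on how the neighborhood is shaped; the sketch below assumes a square neighborhood (a unit is inside the radius if both its row and column offsets are at most r), which is an assumption and not stated in the exercise. The helper names are illustrative.

```python
def radius_after(epochs, r0=6, period=200):
    """Apply r = r - 1 whenever current_epoch mod period == 0,
    with epochs numbered from 1."""
    r = r0
    for epoch in range(1, epochs + 1):
        if epoch % period == 0:
            r -= 1
    return r


def units_within(row, col, r, rows=10, cols=10):
    """Count grid units inside a square neighborhood of radius r
    (assumed shape), clipped at the grid edges."""
    count = 0
    for i in range(rows):
        for j in range(cols):
            if max(abs(i - row), abs(j - col)) <= r:
                count += 1
    return count


r = radius_after(1000)
updated = units_within(row=9, col=9, r=r)   # bottom right-hand corner
print(r, updated)
```

Under this assumption the radius has dropped to 1 (decrements at epochs 200, 400, 600, 800 and 1000), and a corner winner updates 4 units: itself and its three neighbors. A diamond-shaped (city-block) neighborhood would give 3 instead.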

Example

Example 2

Example 3

Figure 3.16 The weight vector positions converted to (x,y) coordinates after 5000 cycles through all the training data.

Figure 3.17 The weight vector positions converted to (x,y) coordinates after 10000 cycles through all the training data.

Figure 3.18 The weight vector positions converted to (x,y) coordinates after 20000 cycles through all the training data.

Convergence

[Figure: four panels, each with both axes running from -1 to 1]

There Goes The Neighborhood

[Figure: neighborhoods for D = 1, D = 2 and D = 3]

As the training period progresses, gradually decrease D.

Over time, islands form in which the center represents the centroid C of a set of input vectors, S, while nearby neighbors represent slight variations on C and more distant neighbors are major variations.

These neighbors may only win on a few (or no) input vectors, while the island center will win on many of the elements of S.

Self Organization

In the beginning, the Euclidean distance $d_E(y_l, y_k)$ and the topological distance $d_T(y_l, y_k)$ between output nodes $y_l$ and $y_k$ will not be related.

But during the course of training, they will become positively correlated: neighbor nodes in the topology will have similar weight vectors, and topologically distant nodes will have very different weight vectors.

$$d_E(y_l, y_k) = \sqrt{\sum_{i=1}^{n} (w_{li} - w_{ki})^2}$$

[Figure: emergent structure of the output layer, before and after training, marking the Euclidean neighbor and the topological neighbor]

Example 4

An SOFM network with three inputs and two cluster units is to be trained using the four training vectors:

[0.8 0.7 0.4], [0.6 0.9 0.9], [0.3 0.4 0.1], [0.1 0.1 0.2]

and initial weights

$$\begin{bmatrix} 0.5 & 0.4 \\ 0.6 & 0.2 \\ 0.8 & 0.5 \end{bmatrix}$$

where the first column holds the weights to the first cluster unit.

The initial radius is 0 and the learning rate $\eta$ is 0.5. Calculate the weight changes during the first cycle through the data, taking the training vectors in the given order.

Solution

The (squared) Euclidean distance of input vector 1 to cluster unit 1 is:

$$d_1 = (0.5 - 0.8)^2 + (0.6 - 0.7)^2 + (0.8 - 0.4)^2 = 0.26$$

The (squared) Euclidean distance of input vector 1 to cluster unit 2 is:

$$d_2 = (0.4 - 0.8)^2 + (0.2 - 0.7)^2 + (0.5 - 0.4)^2 = 0.42$$

Input vector 1 is closest to cluster unit 1, so update the weights to cluster unit 1:

$$w_{ij}(n+1) = w_{ij}(n) + 0.5\,[x_i - w_{ij}(n)]$$

$$0.65 = 0.5 + 0.5\,(0.8 - 0.5)$$

$$0.65 = 0.6 + 0.5\,(0.7 - 0.6)$$

$$0.60 = 0.8 + 0.5\,(0.4 - 0.8)$$

The weights are now:

$$\begin{bmatrix} 0.65 & 0.4 \\ 0.65 & 0.2 \\ 0.60 & 0.5 \end{bmatrix}$$

Solution

The (squared) Euclidean distance of input vector 2 to cluster unit 1 is:

$$d_1 = (0.65 - 0.6)^2 + (0.65 - 0.9)^2 + (0.60 - 0.9)^2 = 0.155$$

The (squared) Euclidean distance of input vector 2 to cluster unit 2 is:

$$d_2 = (0.4 - 0.6)^2 + (0.2 - 0.9)^2 + (0.5 - 0.9)^2 = 0.69$$

Input vector 2 is closest to cluster unit 1, so update the weights to cluster unit 1 again:

$$w_{ij}(n+1) = w_{ij}(n) + 0.5\,[x_i - w_{ij}(n)]$$

$$0.625 = 0.65 + 0.5\,(0.6 - 0.65)$$

$$0.775 = 0.65 + 0.5\,(0.9 - 0.65)$$

$$0.750 = 0.60 + 0.5\,(0.9 - 0.60)$$

The weights are now:

$$\begin{bmatrix} 0.625 & 0.4 \\ 0.775 & 0.2 \\ 0.750 & 0.5 \end{bmatrix}$$

Repeat the same update procedure for input vectors 3 and 4.
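The whole first cycle, including the two remaining input vectors, can be checked with a short script. This is a sketch, not the original solution: the last component of the fourth training vector is read as 0.2, which is an assumption about a garbled value in the source, and `som_cycle` is an illustrative name. Distances are the squared Euclidean distances used in the solution.

```python
def som_cycle(vectors, units, eta=0.5):
    """One pass through the data with radius 0: only the winner is updated.

    units holds one weight vector per cluster unit. Returns the winning
    unit number (1-based) for each input and the final weight vectors.
    """
    units = [list(u) for u in units]
    winners = []
    for x in vectors:
        d = [sum((wi - xi) ** 2 for wi, xi in zip(u, x)) for u in units]
        j = d.index(min(d))
        units[j] = [wi + eta * (xi - wi) for wi, xi in zip(units[j], x)]
        winners.append(j + 1)
    return winners, units


vectors = [[0.8, 0.7, 0.4], [0.6, 0.9, 0.9],
           [0.3, 0.4, 0.1], [0.1, 0.1, 0.2]]   # last value assumed to be 0.2
units = [[0.5, 0.6, 0.8],                      # weights to cluster unit 1
         [0.4, 0.2, 0.5]]                      # weights to cluster unit 2
winners, final_units = som_cycle(vectors, units)
print(winners)
print(final_units)
```

Under these assumptions vectors 1 and 2 are won by unit 1 and vectors 3 and 4 by unit 2, leaving unit 1 at [0.625, 0.775, 0.75] and unit 2 at approximately [0.225, 0.2, 0.25].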

The angle between vectors as the measure of similarity

$$\mathbf{v} = [v_1\ v_2\ \ldots\ v_n]$$

$$\mathbf{w} = [w_1\ w_2\ \ldots\ w_n]$$

The dot product, which is sometimes called the inner product or scalar product, of two vectors $\mathbf{v}$ and $\mathbf{w}$ is given as follows:

$$\mathbf{v} \cdot \mathbf{w} = v_1 w_1 + v_2 w_2 + \ldots + v_n w_n$$

The angle between non-zero vectors $\mathbf{v}$ and $\mathbf{w}$ is:

$$\theta = \cos^{-1}\left( \frac{\mathbf{v} \cdot \mathbf{w}}{\|\mathbf{v}\|\, \|\mathbf{w}\|} \right)$$

where the norm or magnitude of vector $\mathbf{v}$ is

$$\|\mathbf{v}\| = \sqrt{v_1^2 + v_2^2 + \ldots + v_n^2}$$
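These three formulas translate directly into code. A minimal sketch with illustrative function names; the clamp before `acos` is an added safeguard against floating-point rounding and is not part of the formula.

```python
import math


def dot(v, w):
    """Dot (inner, scalar) product of two equal-length vectors."""
    return sum(vi * wi for vi, wi in zip(v, w))


def norm(v):
    """Euclidean norm (magnitude) of a vector."""
    return math.sqrt(dot(v, v))


def angle(v, w):
    """Angle in radians between two non-zero vectors."""
    cosine = dot(v, w) / (norm(v) * norm(w))
    # Guard against values such as 1.0000000000000002 caused by rounding.
    cosine = max(-1.0, min(1.0, cosine))
    return math.acos(cosine)


print(angle([1.0, 0.0], [0.0, 1.0]))   # perpendicular vectors
print(angle([1.0, 1.0], [2.0, 2.0]))   # parallel vectors
```

A small angle means the two vectors point in nearly the same direction, regardless of their lengths, which is why the dot product of normalized vectors can serve as a similarity measure.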

Weight update for dot product as measure of similarity

The winning prototype index for an input vector $\mathbf{x}$:

$$\mathrm{index}(\mathbf{x}) = \arg\max_j\ \mathbf{p}_j \cdot \mathbf{x} \quad \text{over all } j$$

The weight updates are given by:

$$\mathbf{w}_j(n+1) = \frac{\mathbf{w}_j(n) + \eta\, \mathbf{x}}{\|\mathbf{w}_j(n) + \eta\, \mathbf{x}\|}$$
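A sketch of the dot-product variant: the winner is the prototype with the largest dot product, and its updated weight vector is rescaled to unit length. The prototype and input values are hypothetical, as are the function names.

```python
import math


def winner_index(prototypes, x):
    """Index of the prototype with the largest dot product with x."""
    scores = [sum(pi * xi for pi, xi in zip(p, x)) for p in prototypes]
    return scores.index(max(scores))


def normalized_update(w, x, eta):
    """Return (w + eta * x) / ||w + eta * x||."""
    moved = [wi + eta * xi for wi, xi in zip(w, x)]
    length = math.sqrt(sum(m * m for m in moved))
    if length == 0.0:
        raise ValueError("w + eta * x has zero length and cannot be normalized")
    return [m / length for m in moved]


prototypes = [[1.0, 0.0], [0.0, 1.0]]   # hypothetical unit-length prototypes
x = [0.6, 0.8]                          # hypothetical unit-length input
j = winner_index(prototypes, x)
prototypes[j] = normalized_update(prototypes[j], x, eta=0.5)
print(j, prototypes[j])
```

Renormalizing after each update keeps the comparison fair: without it, a prototype with a long weight vector could win simply because of its length rather than its direction.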

Self-Organized Maps for Robot Navigation

Owen & Nehmzow (1998)

Task: Autonomous robot navigation in a laboratory

Goals:

1. Find a useful internal representation (i.e. map) that supports an intelligent choice of actions for the given sensory inputs

2. Let the robot build/learn the map itself

- Saves the user from specifying it.

- Allows the robot to handle new environments.

- By learning the map in a noisy, real-world situation, the robot will be more apt to handle other noisy environments.

Approach:

Use an SOM to organize situation-action vectors.

The emerging structure of the SOM then constitutes the robot's functional internal representation of both the outside world and the appropriate actions to take in different regions of that world.

The Training Phase

1. Record Sensory Info

2. Get correct actions (e.g. Turn Right & Slow Down)

3. Input Vector = Sensory Inputs & Actions

4. Run SOM on Input Vector

5. Update Winner & Neighbors

The Testing Phase

1. Record Sensory Info

2. Input Vector = Sensory Inputs & No Actions

3. Run SOM on Input Vector

4. Read Recommended Actions from the Winner's Weight Vector

Clustering of Perceptual Signatures

[Figure: the sequence of winner nodes during the testing phase of a typical navigation task]

The general closeness of successive winners shows a correlation between points & distances in the objective world and the robot's functional view of that world.

Note: A trace of the robot's path on a map of the real world (i.e. lab floor) would have ONLY short moves.

SOM for Navigation Summary

SOM Regions = Perceptual Landmarks = Sets of similar perceptual patterns

Navigation = Association of actions with perceptual landmarks

Behavior is controlled by the robot's subjective functional interpretation of the world, which may abstract the world into a few key perceptual categories.

No extensive objective map of the entire environment is required.

Useful maps are user & task centered.

Robustness (Fault Tolerance): The robot also navigates successfully when a few of its sensors are damaged => The SOM has generalized from the specific training instances.

Similar neuronal organizations, with correlations between points in the visual field and neurons in a brain region, are found in many animals.

Learning Vector Quantization

The net input is not computed by taking an inner product of the prototype vectors

with the input. Instead, the net input is the negative of the distance between the

prototype vectors and the input.

Subclass

For the LVQ network, the winning neuron in the first layer indicates the subclass which the input vector belongs to. There may be several different neurons (subclasses) which make up each class.

The second layer of the LVQ network combines subclasses into a single class. The columns of $\mathbf{W}^2$ represent subclasses, and the rows represent classes. $\mathbf{W}^2$ has a single 1 in each column, with the other elements set to zero. The row in which the 1 occurs indicates which class the appropriate subclass belongs to.

$$(w_{k,i}^2 = 1) \ \Rightarrow\ \text{subclass } i \text{ is a part of class } k$$

Example

$$\mathbf{W}^2 = \begin{bmatrix} 1 & 0 & 1 & 1 & 0 & 0 \\ 0 & 1 & 0 & 0 & 0 & 0 \\ 0 & 0 & 0 & 0 & 1 & 1 \end{bmatrix}$$

Subclasses 1, 3 and 4 belong to class 1.

Subclass 2 belongs to class 2.

Subclasses 5 and 6 belong to class 3.

A single-layer competitive network can create convex classification regions. The second

layer of the LVQ network can combine the convex regions to create more complex

categories.

LVQ Learning

LVQ learning combines competitive learning with supervision. It requires a training set of examples of proper network behavior.

$$\{\mathbf{p}_1, \mathbf{t}_1\},\ \{\mathbf{p}_2, \mathbf{t}_2\},\ \ldots,\ \{\mathbf{p}_Q, \mathbf{t}_Q\}$$

If the input pattern is classified correctly, then move the winning weight toward the input vector according to the Kohonen rule.

$${}_{i^*}\mathbf{w}^1(q) = {}_{i^*}\mathbf{w}^1(q-1) + \alpha\, \bigl(\mathbf{p}(q) - {}_{i^*}\mathbf{w}^1(q-1)\bigr), \qquad a_{k^*}^2 = t_{k^*} = 1$$

If the input pattern is classified incorrectly, then move the winning weight away from the input vector.

$${}_{i^*}\mathbf{w}^1(q) = {}_{i^*}\mathbf{w}^1(q-1) - \alpha\, \bigl(\mathbf{p}(q) - {}_{i^*}\mathbf{w}^1(q-1)\bigr), \qquad a_{k^*}^2 = 1 \ne t_{k^*} = 0$$

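Example

$$\left\{ \mathbf{p}_1 = \begin{bmatrix} 0 \\ 1 \end{bmatrix},\ \mathbf{t}_1 = \begin{bmatrix} 1 \\ 0 \end{bmatrix} \right\} \qquad \left\{ \mathbf{p}_2 = \begin{bmatrix} 1 \\ 0 \end{bmatrix},\ \mathbf{t}_2 = \begin{bmatrix} 0 \\ 1 \end{bmatrix} \right\}$$

$$\left\{ \mathbf{p}_3 = \begin{bmatrix} 1 \\ 1 \end{bmatrix},\ \mathbf{t}_3 = \begin{bmatrix} 1 \\ 0 \end{bmatrix} \right\} \qquad \left\{ \mathbf{p}_4 = \begin{bmatrix} 0 \\ 0 \end{bmatrix},\ \mathbf{t}_4 = \begin{bmatrix} 0 \\ 1 \end{bmatrix} \right\}$$

$$\mathbf{W}^2 = \begin{bmatrix} 1 & 1 & 0 & 0 \\ 0 & 0 & 1 & 1 \end{bmatrix}$$

$$\mathbf{W}^1(0) = \begin{bmatrix} ({}_1\mathbf{w}^1)^T \\ ({}_2\mathbf{w}^1)^T \\ ({}_3\mathbf{w}^1)^T \\ ({}_4\mathbf{w}^1)^T \end{bmatrix} = \begin{bmatrix} 0.25 & 0.75 \\ 0.75 & 0.75 \\ 1 & 0.25 \\ 0.5 & 0.25 \end{bmatrix} \quad \text{(random setting)}$$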

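First Iteration

$$\mathbf{a}^1 = \mathrm{compet}(\mathbf{n}^1) = \mathrm{compet}\left( \begin{bmatrix} -\|{}_1\mathbf{w}^1 - \mathbf{p}_1\| \\ -\|{}_2\mathbf{w}^1 - \mathbf{p}_1\| \\ -\|{}_3\mathbf{w}^1 - \mathbf{p}_1\| \\ -\|{}_4\mathbf{w}^1 - \mathbf{p}_1\| \end{bmatrix} \right)$$

$$\mathbf{a}^1 = \mathrm{compet}\left( \begin{bmatrix} -\|[0.25\ \ 0.75]^T - [0\ \ 1]^T\| \\ -\|[0.75\ \ 0.75]^T - [0\ \ 1]^T\| \\ -\|[1.00\ \ 0.25]^T - [0\ \ 1]^T\| \\ -\|[0.50\ \ 0.25]^T - [0\ \ 1]^T\| \end{bmatrix} \right) = \mathrm{compet}\left( \begin{bmatrix} -0.354 \\ -0.791 \\ -1.25 \\ -0.901 \end{bmatrix} \right) = \begin{bmatrix} 1 \\ 0 \\ 0 \\ 0 \end{bmatrix}$$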

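Second Layer

$$\mathbf{a}^2 = \mathbf{W}^2 \mathbf{a}^1 = \begin{bmatrix} 1 & 1 & 0 & 0 \\ 0 & 0 & 1 & 1 \end{bmatrix} \begin{bmatrix} 1 \\ 0 \\ 0 \\ 0 \end{bmatrix} = \begin{bmatrix} 1 \\ 0 \end{bmatrix}$$

$${}_1\mathbf{w}^1(1) = {}_1\mathbf{w}^1(0) + \alpha\, \bigl(\mathbf{p}_1 - {}_1\mathbf{w}^1(0)\bigr)$$

$${}_1\mathbf{w}^1(1) = \begin{bmatrix} 0.25 \\ 0.75 \end{bmatrix} + 0.5 \left( \begin{bmatrix} 0 \\ 1 \end{bmatrix} - \begin{bmatrix} 0.25 \\ 0.75 \end{bmatrix} \right) = \begin{bmatrix} 0.125 \\ 0.875 \end{bmatrix}$$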

This is the correct class, therefore the weight vector is moved

toward the input vector.
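The first LVQ iteration can be reproduced with a short script. This is a sketch using the initial weights, the input $\mathbf{p}_1 = [0, 1]^T$ with target $[1, 0]^T$, and the learning rate 0.5 from the example; the function name `lvq_step` is illustrative.

```python
import math


def lvq_step(W1, W2, p, t, alpha):
    """One LVQ update. Returns (n1, a2, new W1).

    n1 is the negative distance from each prototype to p, the winner is the
    largest n1, and the winner moves toward p if the class is correct and
    away from p otherwise.
    """
    n1 = [-math.sqrt(sum((wi - pi) ** 2 for wi, pi in zip(w, p))) for w in W1]
    i_star = n1.index(max(n1))
    a1 = [1 if i == i_star else 0 for i in range(len(W1))]
    a2 = [sum(row[i] * a1[i] for i in range(len(a1))) for row in W2]
    sign = 1.0 if a2 == list(t) else -1.0
    new_W1 = [list(w) for w in W1]
    new_W1[i_star] = [wi + sign * alpha * (pi - wi)
                      for wi, pi in zip(W1[i_star], p)]
    return n1, a2, new_W1


W1 = [[0.25, 0.75], [0.75, 0.75], [1.0, 0.25], [0.5, 0.25]]
W2 = [[1, 1, 0, 0], [0, 0, 1, 1]]
n1, a2, W1_new = lvq_step(W1, W2, p=[0, 1], t=[1, 0], alpha=0.5)
print([round(n, 3) for n in n1])
print(a2, W1_new[0])
```

Calling `lvq_step` with a target of `[0, 1]` instead would flip the sign of the update and push the same prototype away from the input.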

Figure

Final Decision Regions

LVQ2

If the winning neuron in the hidden layer incorrectly classifies the current input, we

move its weight vector away from the input vector, as before. However, we also adjust

the weights of the closest neuron to the input vector that does classify it properly. The

weights for this second neuron should be moved toward the input vector.

When the network correctly classifies an input vector, the weights of only one neuron

are moved toward the input vector. However, if the input vector is incorrectly

classified, the weights of two neurons are updated, one weight vector is moved away

from the input vector, and the other one is moved toward the input vector. The

resulting algorithm is called LVQ2.

LVQ2 Example

Radial-basis function networks

RBF = radial-basis function: a function which depends only on the radial distance from a point.

So RBFs are functions taking the form

$$\phi(\|\mathbf{x} - \mathbf{x}_i\|)$$

where $\phi$ is a nonlinear scalar activation function of the distance between vector $\mathbf{x}$ and $\mathbf{x}_i$.

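% Evaluate three radial basis functions on a grid (radbas(n) = exp(-n^2))
p = -3:.1:3;
a = radbas(p);
a2 = radbas(p-1.5);
a3 = radbas(p+2);
% Weighted sum of the three basis functions
a4 = a + a2*1 + a3*0.5;
plot(p,a,'b-',p,a2,'b--',p,a3,'b--',p,a4,'m-')
title('Weighted Sum of Radial Basis Transfer Functions');
xlabel('Input p');
ylabel('Output a');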

Radial-basis function networks

Three-layer networks: inputs $x_1, x_2, \ldots, x_M$, a hidden layer of basis functions $\phi(\|\mathbf{x} - \mathbf{x}_i\|)$, and an output $d$.

$$\text{output} = \sum_i w_i\, \phi(\|\mathbf{x} - \mathbf{x}_i\|)$$

The adjustable parameters are the weights $w_i$.

Number of hidden units = number of data points.

The form of the basis functions is decided in advance.
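The output of such a network is a weighted sum of basis functions centred on the data points. The sketch below assumes a Gaussian basis function $\phi(r) = e^{-r^2}$, which is one common choice and not prescribed by the slide; the centres, weights, and function names are hypothetical.

```python
import math


def gaussian(r):
    """Assumed radial basis function: phi(r) = exp(-r^2)."""
    return math.exp(-r * r)


def rbf_output(x, centres, weights, phi=gaussian):
    """Compute sum_i w_i * phi(||x - x_i||) for one input vector x."""
    total = 0.0
    for centre, w in zip(centres, weights):
        r = math.sqrt(sum((xi - ci) ** 2 for xi, ci in zip(x, centre)))
        total += w * phi(r)
    return total


centres = [[0.0, 0.0], [3.0, 4.0]]   # hypothetical data points (one hidden unit each)
weights = [2.0, 1.0]                 # hypothetical output weights
value = rbf_output([0.0, 0.0], centres, weights)
print(value)
```

At an input that coincides with a centre, that unit contributes its full weight, while distant centres contribute almost nothing; here the second centre lies at distance 5 and adds only about `exp(-25)`.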

Das könnte Ihnen auch gefallen

- Hebbian LearningDokument53 SeitenHebbian LearningPiyush BansalNoch keine Bewertungen

- Inverse Trigonometric Functions (Trigonometry) Mathematics Question BankVon EverandInverse Trigonometric Functions (Trigonometry) Mathematics Question BankNoch keine Bewertungen

- I N F P F P F: Branching ProcessDokument7 SeitenI N F P F P F: Branching ProcessFelix ChanNoch keine Bewertungen

- Ten-Decimal Tables of the Logarithms of Complex Numbers and for the Transformation from Cartesian to Polar Coordinates: Volume 33 in Mathematical Tables SeriesVon EverandTen-Decimal Tables of the Logarithms of Complex Numbers and for the Transformation from Cartesian to Polar Coordinates: Volume 33 in Mathematical Tables SeriesNoch keine Bewertungen

- HW 1 SolDokument6 SeitenHW 1 SolSekharNaikNoch keine Bewertungen

- Haykin, Xue-Neural Networks and Learning Machines 3ed SolnDokument103 SeitenHaykin, Xue-Neural Networks and Learning Machines 3ed Solnsticker59253% (17)

- (A) Source: 2.2 Binomial DistributionDokument6 Seiten(A) Source: 2.2 Binomial DistributionjuntujuntuNoch keine Bewertungen

- Chapter 02Dokument13 SeitenChapter 02Sudipta GhoshNoch keine Bewertungen

- Fourier SeriesDokument26 SeitenFourier SeriesPurushothamanNoch keine Bewertungen

- Quantum Computing Problem Set 1 SolutionsDokument4 SeitenQuantum Computing Problem Set 1 SolutionsLidia ArtiomNoch keine Bewertungen

- MJC JC 2 H2 Maths 2011 Mid Year Exam Solutions Paper 2Dokument11 SeitenMJC JC 2 H2 Maths 2011 Mid Year Exam Solutions Paper 2jimmytanlimlongNoch keine Bewertungen

- Power Flow Analysis: Newton-Raphson IterationDokument27 SeitenPower Flow Analysis: Newton-Raphson IterationBayram YeterNoch keine Bewertungen

- (A) Modeling: 2.3 Models For Binary ResponsesDokument6 Seiten(A) Modeling: 2.3 Models For Binary ResponsesjuntujuntuNoch keine Bewertungen

- MgtutDokument119 SeitenMgtutpouyarostamNoch keine Bewertungen

- Relationship of Z - Transform and Fourier TransformDokument9 SeitenRelationship of Z - Transform and Fourier TransformarunathangamNoch keine Bewertungen

- Math 13: Differential EquationsDokument11 SeitenMath 13: Differential EquationsReyzhel Mae MatienzoNoch keine Bewertungen

- Homework 8 Solutions: 6.2 - Gram-Schmidt Orthogonalization ProcessDokument5 SeitenHomework 8 Solutions: 6.2 - Gram-Schmidt Orthogonalization ProcessCJ JacobsNoch keine Bewertungen

- Solution To Problem Set 5Dokument7 SeitenSolution To Problem Set 588alexiaNoch keine Bewertungen

- Part3 1Dokument8 SeitenPart3 1Siu Lung HongNoch keine Bewertungen

- Handout 13Dokument7 SeitenHandout 13djoseph_1Noch keine Bewertungen

- Newton Iteration and Polynomial Computation:: Algorithms Professor John ReifDokument12 SeitenNewton Iteration and Polynomial Computation:: Algorithms Professor John Reifraw.junkNoch keine Bewertungen

- Calculating The Inverse Z-TransformDokument10 SeitenCalculating The Inverse Z-TransformJPR EEENoch keine Bewertungen

- DSP ProblemsDokument10 SeitenDSP ProblemsMohammed YounisNoch keine Bewertungen

- Numerical Methods For Solutions of Equations in PythonDokument9 SeitenNumerical Methods For Solutions of Equations in Pythontheodor_munteanuNoch keine Bewertungen

- Answers 2009-10Dokument19 SeitenAnswers 2009-10Yoga RasiahNoch keine Bewertungen

- Selected Solutions, Griffiths QM, Chapter 1Dokument4 SeitenSelected Solutions, Griffiths QM, Chapter 1Kenny StephensNoch keine Bewertungen

- Numerical Solution of Single Odes: Euler Methods Runge-Kutta Methods Multistep Methods Matlab ExampleDokument16 SeitenNumerical Solution of Single Odes: Euler Methods Runge-Kutta Methods Multistep Methods Matlab ExampleZlatko AlomerovicNoch keine Bewertungen

- EE - 210 - Exam 3 - Spring - 2008Dokument26 SeitenEE - 210 - Exam 3 - Spring - 2008doomachaleyNoch keine Bewertungen

- Week 1 NoteDokument2 SeitenWeek 1 NoteGwuqh He6hahNoch keine Bewertungen

- Olympiad KVPY Solutions - KVPY SX PDFDokument122 SeitenOlympiad KVPY Solutions - KVPY SX PDFATHIF.K.PNoch keine Bewertungen

- Tutorial 6Dokument12 SeitenTutorial 6Yomna SalemNoch keine Bewertungen

- OSC Sample ExamDokument5 SeitenOSC Sample ExamRemy KabelNoch keine Bewertungen

- 24 AssociativeLearning1Dokument35 Seiten24 AssociativeLearning1Mahyar MohammadyNoch keine Bewertungen

- Hw1 Sol Amcs202Dokument4 SeitenHw1 Sol Amcs202Fadi Awni EleiwiNoch keine Bewertungen

- Math 1Dokument86 SeitenMath 1Naji ZaidNoch keine Bewertungen

- HWDokument84 SeitenHWTg DgNoch keine Bewertungen

- Discrete-Time Signals and SystemsDokument111 SeitenDiscrete-Time Signals and SystemsharivarahiNoch keine Bewertungen

- Eli Maor, e The Story of A Number, Among ReferencesDokument10 SeitenEli Maor, e The Story of A Number, Among ReferencesbdfbdfbfgbfNoch keine Bewertungen

- Problem Set 2 - Solution: X, Y 0.5 0 X, YDokument4 SeitenProblem Set 2 - Solution: X, Y 0.5 0 X, YdamnedchildNoch keine Bewertungen

- Discrete-Time Signals and SystemsDokument111 SeitenDiscrete-Time Signals and Systemsduraivel_anNoch keine Bewertungen

- Power FlowDokument25 SeitenPower FlowsayedmhNoch keine Bewertungen

- Inverse Trignometric FunctionsDokument24 SeitenInverse Trignometric FunctionsKoyal GuptaNoch keine Bewertungen

- Probleme Rezolvate Matematic PDS 2010-2011Dokument8 SeitenProbleme Rezolvate Matematic PDS 2010-2011Alina Maria BeneaNoch keine Bewertungen

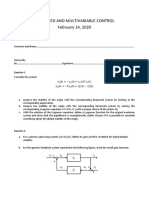

- Advanced and Multivariable Control February 14, 2020: Exercise 1Dokument3 SeitenAdvanced and Multivariable Control February 14, 2020: Exercise 1Gianluca CastrinesiNoch keine Bewertungen

- R x f ξ n+1 ! x−x: Taylorov red (Mac Laurinov red)Dokument13 SeitenR x f ξ n+1 ! x−x: Taylorov red (Mac Laurinov red)Hinko FušNoch keine Bewertungen

- DC AnalysisDokument27 SeitenDC AnalysisJr CallangaNoch keine Bewertungen

- CH 4Dokument36 SeitenCH 4probability2Noch keine Bewertungen

- Newton Raphson MethodDokument24 SeitenNewton Raphson MethodaminmominNoch keine Bewertungen

- Maulina Putri Lestari - M0220052 - Tugas 1Dokument5 SeitenMaulina Putri Lestari - M0220052 - Tugas 1Maulina Putri LestariNoch keine Bewertungen

- MJC JC 2 H2 Maths 2011 Mid Year Exam Solutions Paper 1Dokument22 SeitenMJC JC 2 H2 Maths 2011 Mid Year Exam Solutions Paper 1jimmytanlimlongNoch keine Bewertungen

- X, 0 0.02 1 Y, 0 0.02 1 L Xy) X L Xy) Y L Xy) 1 X Y N Xy), , + X Y 1 Nan Xy) L Xy) Xy) L Xy) Xy) Z (Xy) If (,, + X Y 1 Nan 0)Dokument6 SeitenX, 0 0.02 1 Y, 0 0.02 1 L Xy) X L Xy) Y L Xy) 1 X Y N Xy), , + X Y 1 Nan Xy) L Xy) Xy) L Xy) Xy) Z (Xy) If (,, + X Y 1 Nan 0)Yvette Anna OrbanNoch keine Bewertungen

- 1 Iterative Methods For Linear Systems 2 Eigenvalues and EigenvectorsDokument2 Seiten1 Iterative Methods For Linear Systems 2 Eigenvalues and Eigenvectorsbohboh1212Noch keine Bewertungen

- Neural Network and Fuzzy System MathDokument12 SeitenNeural Network and Fuzzy System MathFahad hossienNoch keine Bewertungen

- AP Calc AB/BC Review SheetDokument2 SeitenAP Calc AB/BC Review Sheetmhayolo69100% (1)

- Useful Inequalities: V0.27a November 29, 2014Dokument3 SeitenUseful Inequalities: V0.27a November 29, 2014peterNoch keine Bewertungen

- 1 - Single Layer Perceptron ANN SDokument40 Seiten1 - Single Layer Perceptron ANN SDumidu GhanasekaraNoch keine Bewertungen

- Spectral Estimation ModernDokument43 SeitenSpectral Estimation ModernHayder MazinNoch keine Bewertungen

- Linearna Algebra - Rjesenja, ETFDokument44 SeitenLinearna Algebra - Rjesenja, ETFmarkovukNoch keine Bewertungen

- Discrete Time Systems - PropertiesDokument55 SeitenDiscrete Time Systems - PropertiesKingNoch keine Bewertungen
