Validation of the Calibration Procedure in Atomic Absorption Spectrometric Methods

Journal of Analytical Atomic Spectrometry

W. PENNINCKX, C. HARTMANN, D. L. MASSART AND J. SMEYERS-VERBEKE

ChemoAC, Pharmaceutical Institute, Vrije Universiteit Brussel, Laarbeeklaan 103, 1090 Brussels, Belgium

A general strategy for the validation of the calibration

procedure in AAS was developed. In order to accomplish this,

the suitability of different experimental designs and statistical

tests, to trace outliers, to examine the behaviour of the

variance and to detect a lack-of-fit, was evaluated. Parametric

as well as randomization tests were considered. For these

investigations, simulated data were used, which are based on

real measurements. The results obtained indicate that to

validate a straight-line model, the measurement points should

preferably be distributed over three or four concentration

levels. In order to check the goodness-of-fit, the significance of

the quadratic term should be investigated. A lack-of-fit to a

second degree model is better detected when the measurement

points are distributed over more than four concentration levels.

For an unweighted second degree model, an analysis of

variance (ANOVA) lack-of-fit can be used, while a

randomization test is proposed for a weighted model. A one-tailed

F-test or an alternative randomization test should be

used to trace a non-constant variance.

Keywords: Method validation; calibration; randomization test

Method validation is the process of demonstrating the ability

of a newly developed method to produce reliable results.1

Generally, one starts this process by validating the applied

calibration procedure. The calibration model is used to describe

the relationship between the analytical signal (y) and the

concentration (x). One can assume, for example, that the

Lambert-Beer law is valid within the applied concentration

range, so that a straight-line model ($y = b_0 + b_1 x$) can be used.

However, if the Lambert-Beer law is not valid and the straight-

line model is fitted to the data, the calibration procedure

introduces a systematic error in the analysis results. The

calibration method has to be evaluated prior to its routine

use, since the limited number of data points used routinely

does not permit such an evaluation.

Different approaches can be followed to validate the

calibration procedure. A number of guidelines to validate

the calibration function are, for example, published by the

International Organisation for Standardisation (ISO).2,3

However, some important problems, such as the investigation

of the lack-of-fit of a weighted and a second degree calibration

line, are not discussed by ISO. Therefore, in this paper a more

general strategy for the validation of AAS calibration pro-

cedures is given.

This work investigates the validation of straight-line models

($y = b_0 + b_1 x$) and second degree models ($y = b_0 + b_1 x + b_2 x^2$),

which are the most widely applied in practice and are included in the

ISO guidelines.2,3 Other calibration models are reported in the

literature. Some workers4 use, for example, a cubic model

($y = b_0 + b_1 x + b_2 x^2 + b_3 x^3$). However, such a model has little physical

meaning and requires a large number of calibration standards

during routine analysis. Barnett5 has described the

calibration model that is included in the Perkin-Elmer atomic

absorption spectrometers. Other models have been reported

by Phillips and Eyring6 and Kościelniak.7 Since these models

are not generally applied, their validation is not discussed

further here.

The validation of the calibration procedure involves an

examination of the behaviour of the variance and of the

goodness-of-fit of the selected model. In order to accomplish

this, aqueous standards are measured at different concentration

levels, covering the complete calibration range. ISO,2,3 for

example, recommends distributing ten standards uniformly

over the calibration range and performing ten replicate analyses

of each of the lowest and highest concentrations. The exper-

imental design is important since it influences the probability

that a problem, such as a lack-of-fit or a non-constant variance,

is detected. Therefore, this work evaluates the applicability of

different experimental designs.

After the performance of the experiments, one should first

evaluate the data graphically. Often this permits an easy

detection of important problems. A good way to do this is by

examining the residuals.8 Since this is not included in the

ISO2,3 recommendations, it is discussed briefly in this paper.

For a statistical evaluation of the results, one should check

whether the data are free of outliers. Outlying points may

disturb the normality of the data, which is required by most

of the tests used to examine the behaviour of the variance and

the goodness-of-fit. Moreover, the occurrence of multiple out-

liers would indicate a fundamental problem with the method.

In this paper, two tests to trace single outliers, namely the

Dixon9 and the Grubbs10 tests, and a test to trace paired

outliers,11 are studied. Next, the behaviour of the variance is

investigated. This work evaluates the suitability of tests which

compare variances at different concentration levels, such as

the F,2,3 Cochran,12 Hartley12 and Bartlett13 tests, as well as

alternatives for these tests, where the standard deviations at

the different levels are estimated by the range.14 Finally, in

order to trace a lack-of-fit, the applicability of an analysis of

variance (ANOVA) procedure15 and the significance of the

quadratic term16 are evaluated. An alternative test is considered

for weighted models.17 This work also investigates the suitability

of a number of randomization tests.18 In that case, the

computed test statistic is not compared with a critical value,

but with a distribution which is obtained by random assign-

ment of the experimental data. By deriving the distribution

from the data themselves, these tests should be less sensitive

to deviations from normality.18

The different tests and experimental designs are evaluated

in a systematic way by means of simulations which are based

on a number of real data sets. The results of this evaluation

are used to construct a general validation strategy for the

calibration procedure in AAS. This could form the basis for a

more general strategy applicable to other measurement

techniques.

EXPERIMENTAL

The symbols that are used throughout this paper are summar-

ized in Table 1.

Journal of Analytical Atomic Spectrometry, April 1996, Vol. 11 (237-246) 237

Published on 01 January 1996. Downloaded by Universidade Nova de Lisboa on 30/06/2014 13:38:48.

Table 1 Symbols that are used

Total number of measurements: $N$
Concentration levels: $1; \ldots; i; \ldots; n$
Number of replicates: $m_1; \ldots; m_i; \ldots; m_n$
$x_i$ = concentration at level $i$ ($i = 1, \ldots, n$)
$y_{ij}$ = $j$th absorbance measured at level $i$ ($j = 1, \ldots, m_i$)
$\bar{y}_i$ = mean absorbance at level $i$
$s_i$ = the standard deviation of the absorbances of level $i$
$w_i$ = the range of the absorbances of level $i$
$b_0; b_1; b_2$ = the estimated calibration parameters
$\hat{y}_i$ = estimated absorbance at level $i$
    $= b_0 + b_1 x_i$ for a straight-line model
    $= b_0 + b_1 x_i + b_2 x_i^2$ for a second degree model
$e_{ij} = y_{ij} - \hat{y}_i$ = $j$th residual at level $i$
$\bar{e}_i$ = mean of the residuals at level $i$
$\bar{e}$ = mean of all residuals
$e_{w,ij} = \sqrt{q_i}\,[y_{ij} - \hat{y}_i]$ = $j$th weighted residual at level $i$
$q_i = 1/s_i^2$ = the weight at level $i$

Instrumental

All programming was performed on a Compaq ProLinea 4/25s

personal computer. Visual Basic 3.0 (Microsoft) was used as

the programming environment. A Perkin-Elmer (Norwalk, CT,

USA) Zeeman 3030 atomic absorption spectrometer equipped

with an HGA-600 graphite furnace, an AS-60 autosampler and

a PR-100 printer were used for ETAAS determinations. For

flame AAS determinations, a Perkin-Elmer 373 spectrometer

with a PRS-10 printer sequencer was used.

Planning of Simulations

Description of experimental data

In order to simulate the data as realistically as possible, some

real experimental results were obtained first. The investigated

data sets contain absorbances measured in aqueous solutions,

at different levels and over a large concentration range. Zn, Fe

and Cu measurements were obtained with flame AAS, while

ETAAS was used for Pb, Cd and Mn measurements.

For the different data sets, the standard deviation is constant

at low concentration levels, but from a certain level it increases

with the absorbance. This is illustrated in Fig. 1 for Fe measure-

ments obtained with flame AAS. For the given example it is

clear that, from a certain concentration level, the standard

deviation increases linearly with the absorbance, but this could

Fig. 1 Standard deviation of the absorbance (n = 6) as a function of the Fe concentration (data obtained with flame AAS). [Axes: standard deviation of the absorbance versus Fe concentration/mg l⁻¹]

Fig. 2 Absorbances as a function of the Zn concentration (data obtained with flame AAS). The line represents the weighted second degree function that is computed from these data. [Axes: absorbance versus Zn concentration/mg l⁻¹]

not be shown for all examined cases. The concentration level

where the behaviour of the variance changes can be situated

above, below or within the selected calibration range. This

must be investigated during the validation, because the first

situation (constant variance) permits the use of an unweighted

model, while for the other two (non-constant variance) the

most precise results are obtained with a weighted model.

Generally, up to a certain concentration, a straight-line

model can be used to describe the relationship between concen-

tration and absorbance. For higher concentrations a second

degree calibration model is needed. This is also the case for

the inspected data sets. Moreover, it is difficult to build a good

calibration model in a concentration range where the cali-

bration line is partially straight (lower part of the range) and

partially curved (upper part of the range). Fig. 2 shows, for

example, the absorbance values measured with flame AAS as

a function of the Zn concentration. It can be seen that the

weighted second degree model that is computed from the data

does not describe the measurement results accurately. In such

a situation the calibration range should be split into two parts.

For the given example, an unweighted straight-line model can

be used for concentrations up to 2 mg l⁻¹, while a weighted

second degree model has to be used in the range between 2

and 15 mg l⁻¹ (results not shown). Consequently, in the selected

calibration range, three situations can occur. The calibration

line can be straight or curved over the complete range, or it

can be partially straight and partially curved. Moreover, it is

found that in the region where a straight-line model can be

used, the variance remains constant, while it increases when

the calibration line starts to bend.

Simulations

In order to evaluate the different statistical tests and experimen-

tal designs for each situation considered, 100 data sets were

simulated. Normally distributed random numbers were generated

in Visual Basic, using the method proposed by Box and

Muller.19 The suitability of the random generator was confirmed

by simulating a large number of data of which the

normality of the distribution was checked graphically (with a

histogram) as well as statistically (with a χ² test). Moreover,

no correlation was observed between data that were successively

simulated.
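The generation step can be illustrated with a short sketch. It is written in Python rather than the Visual Basic 3.0 used in this work, and the function names, the seed and the sample size are assumptions chosen only for illustration. It applies the Box and Muller transform to pairs of uniform deviates and computes two simple checks of the kind described above: the sample mean and standard deviation, and the correlation between successively generated values.

```python
import math
import random


def box_muller(rng, mean=0.0, sd=1.0):
    """Turn two uniform deviates into two independent normal deviates."""
    u1 = 1.0 - rng.random()          # lies in (0, 1], so log(u1) is defined
    u2 = rng.random()
    radius = math.sqrt(-2.0 * math.log(u1))
    z0 = radius * math.cos(2.0 * math.pi * u2)
    z1 = radius * math.sin(2.0 * math.pi * u2)
    return mean + sd * z0, mean + sd * z1


def lag1_correlation(values):
    """Correlation between successive values (crude independence check)."""
    n = len(values)
    mean = sum(values) / n
    num = sum((values[k] - mean) * (values[k + 1] - mean) for k in range(n - 1))
    den = sum((v - mean) ** 2 for v in values)
    return num / den


rng = random.Random(1996)            # fixed seed, illustrative only
sample = []
for _ in range(5000):
    sample.extend(box_muller(rng))   # 10 000 standard normal deviates

sample_mean = sum(sample) / len(sample)
sample_sd = math.sqrt(
    sum((v - sample_mean) ** 2 for v in sample) / (len(sample) - 1)
)
sample_lag1 = lag1_correlation(sample)
```

A histogram or a χ² goodness-of-fit test on `sample` would complete the check; both are omitted here to keep the sketch short.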

The simulations are based on the Pb data that were obtained

by ETAAS. The applied parameters are given in Table 2. The

calibration range (0-100 µg l⁻¹) is divided into two equal parts

in which different conditions can be valid. For example, a data

set can be simulated with a constant standard deviation in the

lower part, but an increasing standard deviation in the upper

part of the calibration range. Similarly, a first degree model

can be valid in the lower part but not in the upper part. For


Table 2 Parameters applied for the simulation of the data

Experimental conditions-
Concentration range: 0-100 µg l⁻¹ (divided into two equal parts)
Equation calibration line: $y_i = \beta_0 + \beta_1 x_i + \beta_2 x_i^2$
    with $\beta_0 = 0.02$
         $\beta_1 = 0.003$
         $\beta_2 = -4.0 \times$, $-3.8 \times$, ..., $-0.2 \times$, $0.0 \times$ [power of ten not legible in the source]
Standard deviation:
    homoscedastic: $\sigma_i = 0.002$
    heteroscedastic: $\sigma_i = 0.002 + 0.02\,y_i$
Introduction outlier: $y_{ij}' = y_{ij} + k\sigma$ with $k$ = 4, 6, 8, 10 or 12
In case of a problem at the lowest concentration level (see Section 4): $y_{1j}' = y_{1j} + c$

Experimental design-
Design (d)    Number of concentration levels (n)    Number of replicates at each level (m_i)
[1]           3                                     12
[2]           4                                     9
[3]           6                                     6
[4]           9                                     4
[5]           12                                    3

each investigation (evaluation of outlier tests, tests to trace

heteroscedasticity and goodness-of-fit tests) different simu-

lations were performed, which are specified further.

Five different ways to distribute 36 measurement points

symmetrically over the calibration range are considered (see

Table 2). Design 1, for example, positions 12 measurements at

three concentration levels, namely 0, 50 and 100 µg l⁻¹. The

choice of 36 points is arbitrary. The main reason why this

number was selected is that it permits the residual variance to

be estimated with a large number of degrees of freedom (>30)

and the measurement points can be distributed evenly over

three or four concentration levels. These are the minimum

number of levels to investigate a lack-of-fit to a straight line

and second degree model, respectively (see below).
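The five designs and the simulation of one data set can be sketched as follows. This is a simplified illustration, not the program used in this work: it is written in Python, it assumes that the levels of every design are equally spaced over 0-100 µg l⁻¹ (the text only states this for design 1), it applies one variance condition over the whole range instead of per half-range, and `beta2` is left as a free argument because its values are not legible in Table 2. The names `DESIGNS`, `design_levels` and `simulate` are hypothetical.

```python
import random

# design number: (number of concentration levels, replicates per level)
DESIGNS = {1: (3, 12), 2: (4, 9), 3: (6, 6), 4: (9, 4), 5: (12, 3)}


def design_levels(design, low=0.0, high=100.0):
    """Equally spaced concentration levels (assumption) for a design."""
    n_levels, _ = DESIGNS[design]
    return [low + (high - low) * k / (n_levels - 1) for k in range(n_levels)]


def simulate(design, beta2=0.0, heteroscedastic=False, rng=None):
    """Simulate absorbances; returns {concentration: [replicates]}."""
    rng = rng or random.Random()
    _, replicates = DESIGNS[design]
    data = {}
    for x in design_levels(design):
        y_true = 0.02 + 0.003 * x + beta2 * x * x      # beta0, beta1 from Table 2
        if heteroscedastic:
            sd = 0.002 + 0.02 * y_true                 # increases with absorbance
        else:
            sd = 0.002                                 # constant
        data[x] = [rng.gauss(y_true, sd) for _ in range(replicates)]
    return data


example = simulate(2, heteroscedastic=True, rng=random.Random(0))
```

Every design places 36 points in total, so differences in test performance between designs reflect only how the points are spread over the levels.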


The design that is proposed by ISO,2,3 namely ten replicates

at both extremes and a single measurement point at the eight

other concentration levels, is not considered, mainly for practi-

cal reasons. In the first place, this design cannot be applied for

the validation of a weighted model. In order to determine the

weight factors the variance must be estimated at the different

concentration levels which requires the performance of repli-

cate measurements. The lack of replicates also hampers the

application of outlier tests at the different levels, as well as the

evaluation of tests which compare variance estimates at differ-

ent levels, such as the Cochran test. Moreover, if one does not

take into account the replicates at the extremes of the cali-

bration range for the investigation of the goodness-of-fit, as is

proposed by ISO, several tests that are evaluated here (e.g.,

ANOVA lack-of-fit) cannot be performed.

RESULTS AND DISCUSSION

1. Examination of the Residuals

The residuals ($e_{ij}$) as given in Table 1 are the differences

between the responses actually measured ($y_{ij}$) and those predicted

by the calibration model ($\hat{y}_i$). In order to examine the

residuals they are plotted against the predicted value. When a

weighted model is used the weighted residuals (see Table 1)

are plotted against the predicted value. Fig. 3 illustrates four

situations that can occur. In the first situation [Fig. 3(a)] the

residuals form a horizontal band which indicates no abnor-

mality. In the second situation [Fig. 3(b)] the spread of the

residuals increases with the size of the predicted value (and

thus also with the concentration), which indicates that the

variance is not constant. In such a situation the use of a

weighted calibration model should be considered. Fig. 3(c)

shows a trend in the residuals, which indicates that the model

is inadequate. Fig. 3(d) illustrates the residual plot in a situation

where the calibration set contains an outlier.

Draper and Smith8 have shown that the estimated residuals

are correlated but they indicate that this correlation does not

invalidate the residual plot when N is large compared with

the number of regression parameters estimated.
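As an illustration of the residual examination, the following Python sketch fits an unweighted straight line by ordinary least squares and returns the (predicted value, residual) pairs that would be plotted. The six absorbances are invented for the example and deliberately curved, so the residuals show the trend of Fig. 3(c): negative at both extremes and positive in the middle. The function names are hypothetical.

```python
def fit_straight_line(x, y):
    """Ordinary least-squares estimates b0 and b1 for y = b0 + b1*x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    b0 = mean_y - b1 * mean_x
    return b0, b1


def residual_points(x, y):
    """Pairs (predicted absorbance, residual) for a residual plot."""
    b0, b1 = fit_straight_line(x, y)
    return [(b0 + b1 * xi, yi - (b0 + b1 * xi)) for xi, yi in zip(x, y)]


# Invented example: two replicates at each of three levels, slightly curved
x = [0, 0, 50, 50, 100, 100]
y = [0.021, 0.019, 0.168, 0.172, 0.305, 0.299]
points = residual_points(x, y)
```

For a weighted model the residuals would first be multiplied by the square root of the weight at each level before plotting.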

Fig. 3 The residuals (i.e., the difference between the measured and estimated absorbances) are plotted against the estimated absorbance to detect a calibration problem. The following plots can be obtained, which indicate: (a), no abnormality; (b), a variance that increases with the estimated absorbance (i.e., heteroscedasticity); (c), a lack-of-fit; and (d), an outlier. [Axes: residual versus predicted value]


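Table 3 Test criteria to trace an outlier at concentration level i. The applied symbols are explained in Table 1; $y_{i1} \le \ldots \le y_{im}$ denote the $m$ absorbances at level $i$ sorted in ascending order

Test criteria for Dixon's test, as presented by ISO:
When $2 < m < 8$:
    $Q = \frac{y_{i2} - y_{i1}}{y_{im} - y_{i1}}$ and $Q = \frac{y_{im} - y_{i(m-1)}}{y_{im} - y_{i1}}$
When $7 < m < 13$:
    $Q = \frac{y_{i2} - y_{i1}}{y_{i(m-1)} - y_{i1}}$ and $Q = \frac{y_{im} - y_{i(m-1)}}{y_{im} - y_{i2}}$
When $12 < m < 41$:
    $Q = \frac{y_{i3} - y_{i1}}{y_{i(m-2)} - y_{i1}}$ and $Q = \frac{y_{im} - y_{i(m-2)}}{y_{im} - y_{i3}}$

Test criteria for Grubbs single outlier test:
    $z = \frac{y_{im} - \bar{y}_i}{s_i}$ and $z = -\frac{y_{i1} - \bar{y}_i}{s_i}$

Test criteria for Grubbs paired outlier test:
    $z_2 = 1 - \frac{s_i'}{s_i}$
    with $s_i'$ the standard deviation after leaving out a pair of values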

2. Detection of Outliers

2.1. Description of tests

After the performance of the experiments, one inspects each of

the concentration levels for outliers. This work considers the

Dixon and the Grubbs tests which are the most generally

applied tests for the detection of a single outlier. The Dixon

test is applied as originally presented in the ISO guidelines.

The test criteria are given in Table 3. In contrast to the Dixon

test, which is based on a range, the Grubbs or maximum

normalized deviation (MND) test makes use of the standard

deviation to detect outliers. The Grubbs test criterion is also

given in Table 3. The use of the Grubbs test is recommended

by the Association of Official Analytical Chemists (AOAC)

and is also preferred in the latest draft ISO document.21

Multiple outliers can be detected by repeated application of a

test for single outliers. However, paired outliers may mask

each other so that neither of them is detected with a single

outlier test. The Grubbs pair statistic for the detection of two

outliers, given in Table 3, investigates the decrease in the

standard deviation when removing a pair of values.
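The outlier statistics described above can be sketched in Python as follows. The sketch assumes the commonly tabulated forms of the Dixon ratios for the three sample-size ranges and takes the pair statistic as the relative decrease in standard deviation after removing the two highest values; whether these match every detail of the criteria applied in this work is an assumption. Critical values are not included, and the replicate absorbances are invented.

```python
import statistics


def dixon_q(values):
    """Dixon ratios (low end, high end) for one concentration level."""
    y = sorted(values)
    m = len(y)
    if 3 <= m <= 7:
        low = (y[1] - y[0]) / (y[-1] - y[0])
        high = (y[-1] - y[-2]) / (y[-1] - y[0])
    elif 8 <= m <= 12:
        low = (y[1] - y[0]) / (y[-2] - y[0])
        high = (y[-1] - y[-2]) / (y[-1] - y[1])
    elif 13 <= m <= 40:
        low = (y[2] - y[0]) / (y[-3] - y[0])
        high = (y[-1] - y[-3]) / (y[-1] - y[2])
    else:
        raise ValueError("criteria are defined here for 3 <= m <= 40")
    return low, high


def grubbs_single(values):
    """Maximum normalized deviation for the lowest and the highest value."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return (mean - min(values)) / sd, (max(values) - mean) / sd


def grubbs_pair_high(values):
    """Relative decrease in s when the two highest values are removed."""
    y = sorted(values)
    return 1.0 - statistics.stdev(y[:-2]) / statistics.stdev(y)


# Invented replicate absorbances at one level; the last value is suspect
level = [0.170, 0.171, 0.169, 0.172, 0.170, 0.168, 0.171, 0.169, 0.190]
q_low, q_high = dixon_q(level)
z_low, z_high = grubbs_single(level)
pair_decrease = grubbs_pair_high(level)
```

Each statistic would then be compared with its tabulated critical value for the chosen significance level and sample size.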

2.2. Evaluation

In order to evaluate the performance of the described tests,

samples containing 3-18 normally distributed measurement

points were simulated. Single outliers were introduced at 4, 6,

8, 10 or 12 standard deviations (σ) from the population mean. In

order to introduce two paired outliers, the first was positioned

at 4, 6, 8, 10 or 12 standard deviations, while the second was

put at two times the position of the first. For each situation

100 samples were simulated and the tests were performed at

the 5% significance level.

For all investigated situations with sample sizes larger than

three, similar results are obtained with both single outlier tests.

Fig. 4 shows, for example, the percentage of positive test results,

when an outlier is positioned at 6σ or when no outlier is

present. The probability of correctly detecting an outlier

decreases with a decreasing sample size. One can see that for

sample sizes below six, the probability of not detecting an

outlier when it is present (β error) becomes very high. The

probability of falsely detecting an outlier (α error) varies around

5%, which is in agreement with the specified significance level.

However, for a sample size equal to three the number of false

positive results (α error) obtained with the Grubbs test is

Fig. 4 Percentage of positive test results obtained with the Grubbs (squares) and the Dixon (triangles) test when an outlier is positioned at 6σ (solid line) and when no outlier is present (broken line). [Horizontal axis: sample size]

unexpectedly high. There is clearly a problem with the Grubbs

test for very small sample sizes.

For a data set that contains 2 outliers, the Grubbs test

performs better than the Dixon test. The best results are

obtained with the test for the detection of paired outliers. For

example, with nine measurements, of which one is at 4σ and

another at 8σ, a problem is detected in 21, 53 and 81% of the

cases, with the Dixon test, Grubbs test and the Grubbs test

for paired outliers, respectively. The probability of falsely

detecting a problem (α error) with the paired outlier test varies

around 5% (significance level) for samples containing 18, 12

and 9 measurements. However, for samples that contain only

six measurements the α error is about 11%.

These results indicate that, to avoid difficulties with false

outliers, the number of replicate measurements at each concen-

tration level should be sufficiently high (at least >4). When

no significant outlier is detected with the single outlier test,

one should check the data set for two paired outliers that may

mask each other. This test, however, requires even larger

sample sizes (>6).

3. The Behaviour of the Variance

3.1. Description of the evaluated tests

Parametric tests. ISO proposes to use a one-tailed F-test to

check (a) whether the variance at the highest concentration

level is significantly larger than at the lowest concentration

level and (b) whether the variance at the lowest concentration

level is significantly larger than at the highest concentration

level.2,3 For AAS applications, only the first test is

meaningful, because one knows that the variance, when not

constant, increases with the concentration. Other tests, which

can be applied to trace a non-constant variance, use estimated

variances at different levels. In this work the suitability of the

Cochran,12 Hartley12 and Bartlett13 tests is investigated. Table 4

shows how the test statistics are computed. Cochran compares

the ratio between the highest variance and the sum of the

variances with a critical value. Hartley, on the other hand,

uses the ratio between the highest and the lowest variance.

Theoretically, both these tests, for which specific tables exist,

require an equal number of measurement points at each

concentration level. However, ISO indicates that for the

Cochran test small differences in the number of points can be

ignored, and applies the Cochran criterion for the number of

measurements that occur at most concentration levels. The

more complex Bartlett test does not assume an equal number

of points at the different levels. A quantity M/C is computed

(see Table 4), which is distributed as χ² when the variances are

not significantly different. All these tests (Cochran, Hartley,

Bartlett and F-test) are based on the comparison of estimated

variances (s²). Some workers14 propose to test the sample

range (w). Correction terms are published14 by which the range


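Table 4 Evaluated test parameters to detect a non-constant variance. The symbols are explained in Table 1

Cochran     $s_{\max}^2 \big/ \sum_{i=1}^{n} s_i^2$
Hartley     $r = s_{\max}^2 / s_{\min}^2$
F-test      $F = s_n^2 / s_1^2$
Bartlett    $M/C$, with:
            $M = \left(\sum_i \nu_i\right) \ln\left(\frac{\sum_i \nu_i s_i^2}{\sum_i \nu_i}\right) - \sum_i \nu_i \ln s_i^2$
            $C = 1 + \frac{1}{3(n-1)}\left(\sum_i \frac{1}{\nu_i} - \frac{1}{\sum_i \nu_i}\right)$
            where $\nu_i = m_i - 1$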

must be divided to obtain an estimation of s. These estimations

can then be applied in parametric statistical tests, such as the

F-test. Alternatives for the Cochran and Hartley tests, based

on the ranges, have also been described.14 The test statistics

for these tests are also given in Table 4.
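The four variance-based statistics can be computed together, as in the following Python sketch. It follows the verbal descriptions given above (highest variance over the sum of the variances for Cochran, highest over lowest variance for Hartley, highest over lowest concentration level for the one-tailed F-test) and the usual textbook form of the Bartlett quantity M/C; the function name and the example absorbances are invented. The range-based alternatives and all critical values are left out.

```python
import math
import statistics


def variance_statistics(levels):
    """levels: replicate lists ordered from lowest to highest concentration."""
    variances = [statistics.variance(group) for group in levels]
    dof = [len(group) - 1 for group in levels]           # nu_i = m_i - 1
    total_dof = sum(dof)
    pooled = sum(v * s2 for v, s2 in zip(dof, variances)) / total_dof
    m_stat = total_dof * math.log(pooled) - sum(
        v * math.log(s2) for v, s2 in zip(dof, variances)
    )
    c_corr = 1.0 + (sum(1.0 / v for v in dof) - 1.0 / total_dof) / (
        3.0 * (len(levels) - 1)
    )
    return {
        "cochran": max(variances) / sum(variances),
        "hartley": max(variances) / min(variances),
        "f": variances[-1] / variances[0],               # highest vs lowest level
        "bartlett": m_stat / c_corr,                     # compare with chi-squared
    }


# Invented example: constant spread at two levels, four times larger at the top
levels = [
    [0.018, 0.020, 0.022],
    [0.168, 0.170, 0.172],
    [0.292, 0.300, 0.308],
]
stats = variance_statistics(levels)
```

The Bartlett quantity is compared with a χ² distribution with n - 1 degrees of freedom, while the other three statistics need their own tables.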

Randomization test. Apart from the parametric tests, which

are generally applied, this work also investigates the applicability

of a randomization test18 to trace heteroscedasticity. The

randomization F-test that we propose is illustrated in Table 5.

First, at each concentration level, the squared differences

between the individual and the mean measurement results are

computed ($d_{ij}^2$). Since the size of the $d^2$ values depends on the

variance at that level, the test statistic for the experimental

data ($R_e$) is computed as the sum of the $d^2$ values at the upper

concentration level divided by the sum of the values at the

lowest level. In a next step, the $d^2$ values are randomly

permuted between the concentration levels, and for each

permutation an $R_p$ value is computed. If the $d^2$ values (and thus

the variances) at the upper and the lower concentration levels

are similar, $R_p$ values will be found that are distributed around

$R_e$. However, if the $d^2$ values at the upper concentration level

are significantly larger, most of the $R_p$ values will be smaller

than $R_e$. Consequently, the significance level can be computed

as the ratio of the number of permutations with $R_p > R_e$

to the total number of permutations. In this work the test

results are based on 1000 permutations obtained by means of

a random data permutation program.18
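The randomization F-test described above can be sketched in Python as follows. This is an illustration under stated assumptions rather than the program used in this work: the squared deviations of all levels are pooled and shuffled, the first and last groups of the shuffled list stand for the lowest and the upper level, and permutations that tie with the experimental statistic are counted as at least as extreme. The function names, the seed and the example absorbances are invented.

```python
import random


def squared_deviations(group):
    """Squared differences between each replicate and the level mean."""
    mean = sum(group) / len(group)
    return [(value - mean) ** 2 for value in group]


def randomization_f_test(levels, n_permutations=1000, rng=None):
    """levels: replicate lists ordered from lowest to highest concentration.

    Returns the experimental statistic and the estimated significance level.
    """
    rng = rng or random.Random()
    d2 = [squared_deviations(group) for group in levels]
    n_low, n_high = len(d2[0]), len(d2[-1])
    r_experimental = sum(d2[-1]) / sum(d2[0])
    pooled = [value for group in d2 for value in group]
    count = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)                       # random reassignment to levels
        low = pooled[:n_low]
        high = pooled[len(pooled) - n_high:]
        if sum(high) / sum(low) >= r_experimental:    # ties counted (assumption)
            count += 1
    return r_experimental, count / n_permutations


# Invented example: the spread at the upper level is ten times larger
levels = [
    [0.017, 0.018, 0.019, 0.021, 0.022, 0.023],
    [0.167, 0.168, 0.169, 0.171, 0.172, 0.173],
    [0.270, 0.280, 0.290, 0.310, 0.320, 0.330],
]
r_e, p_value = randomization_f_test(levels, rng=random.Random(18))
```

A small `p_value` means that few random reassignments give a ratio as large as the experimental one, which points to a variance that increases with concentration.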

3.2. Performance under normal conditions

As already mentioned, five different ways to distribute the 36

measurement points symmetrically over the calibration range

were considered. Fig. 5 shows the number of positive test

results for these designs, in a situation where the variance is

Fig. 5 Percentage of positive test results obtained with the Cochran, Hartley, Bartlett and F tests in a heteroscedastic situation. [Horizontal axis: Design 1 to Design 5]

constant in the lower part of the calibration range, but increases

in the upper part. It can be seen that for all tests the best

results are obtained with the design that positions all meas-

urement points at three concentration levels (design 1).

Distributing the measurements over more levels, and thus

reducing the number of replicates at each level, decreases the

probability of detecting the heteroscedasticity. When the

different tests are compared, one can conclude that the best

results are obtained with the Bartlett and the F-tests. The F-

test has its simplicity as an additional advantage. Moreover,

this test is recommended by ISO.2,3 For homoscedastic

measurements the evaluated tests produce between 2 and 10%

of false positive results which is in agreement with the specified

significance level of 5%.

For the tests that estimate the standard deviation by the

range, a similar performance is observed. The test that uses

the ratio of the smallest to largest absorbance range, for

example, performs similarly to the classical Hartley test.

Moreover, determining the significance level of the F-test by a

randomization procedure gives results similar to those obtained with a

critical value.

3.3. Performance in the presence of outliers

Since there is always a probability that an outlier is not

detected it is important to examine the effect of such a

measurement point on the applied tests. Here, a number of

situations are considered, where an outlier at one of the

concentration levels results in an overestimation of the variance

at that level.

With homoscedasticity, this overestimated variance can lead

to an increased number of false positive conclusions. Fig. 6

illustrates this, in a situation where the lower or the upper

concentration level contains an outlier at 6σ. With the Bartlett

test, for example, between 50 and 80% of false positive results

are obtained, depending on the applied design. Similar results

are obtained with the Cochran and Hartley tests (not shown).

The F-test is only affected by the outliers at the extreme levels,

because it does not use the data of the other levels. Moreover,

since this test is one-sided, an outlier at the lowest concen-

tration level does not increase the number of false positive

results (see Fig. 6). When the upper concentration level con-

tains an outlier, the F-test gives a comparable number of false

positive results as the other tests. However, for the most

suitable designs (designs 1, 2 and 3), this number largely

decreases when the significance level of the test is determined

by a randomization procedure (see Fig. 6). A similar conclusion

is obtained for data sets where one of the concentration levels

is contaminated with two outliers. The number of false positive

results obtained with the F-test is only increased by outliers

Fig. 6 Percentage of positive test results obtained with the Bartlett test (squares), the F-test (triangles) and the randomization F-test (diamonds) in a homoscedastic situation, but with an outlier (6σ) at the lower (solid line) or upper (broken line) concentration level. [Horizontal axis: Design 1 to Design 5]

Journal of Analytical Atomic Spectrometry, April 1996, Vol. 11 241

P

u

b

l

i

s

h

e

d

o

n

0

1

J

a

n

u

a

r

y

1

9

9

6

.

D

o

w

n

l

o

a

d

e

d

b

y

U

n

i

v

e

r

s

i

d

a

d

e

N

o

v

a

d

e

L

i

s

b

o

a

o

n

3

0

/

0

6

/

2

0

1

4

1

3

:

3

8

:

4

8

.

View Article Online

at the upper concentration levels, while the other tests are also

affected by an overestimation of the variance at the other levels.

When, in a heteroscedastic situation, the variance at the

lowest concentration level is overestimated owing to an outlier,

an increased number of false negative conclusions can be

obtained. In that case, the real difference between the variance

at the highest and lowest concentration levels is underesti-

mated, so that an existing heteroscedasticity is masked. As an

example, a situation is considered where design 1 is applied

and the real standard deviations at the lowest, the middle and

the upper level of the concentration range equal 0.002, 0.002

and 0.008, respectively. The lowest level is contaminated with

an outlier, which leads to an overestimation of the variance at

this level. With an outlier positioned at 4σ or at 6σ, the
probability of detecting the heteroscedasticity with the F-test
decreases from about 100 to 65 and 30%, respectively.
Determining the significance levels of the F-test by a
randomization procedure does not improve the results. With the Bartlett

test, this decrease is also observed, but the number of positive

test results stabilizes and even slightly increases for important

outliers. This is because, in this situation, the variance at the

lowest level is overestimated in such a way, that it becomes

significantly larger than the variance at the other levels. This

effect is not experienced with the one-tailed F-test which checks

whether the variance at the upper level is significantly higher

than at the lower level.
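The one-tailed comparison of the variances at the lowest and highest concentration levels, with its significance level obtained by permutation, can be sketched as follows. This is only an illustration under stated assumptions: the test parameter is taken here as the ratio of the mean squared deviation at the upper level to that at the lower level, which is an assumption and not necessarily the exact parameter of Table 5. The function names and the absorbance values are hypothetical.

```python
import random


def squared_deviations(values):
    """Squared deviations of replicate measurements from their level mean."""
    mean = sum(values) / len(values)
    return [(v - mean) ** 2 for v in values]


def randomization_f_test(lower, upper, n_perm=1000, seed=0):
    """One-sided randomization test: is the variance larger at the upper level?

    Assumption: the test parameter is the ratio of the mean squared
    deviation at the upper level to that at the lower level.
    Returns (experimental ratio, estimated significance level).
    """
    rng = random.Random(seed)
    d2_lower = squared_deviations(lower)
    d2_upper = squared_deviations(upper)

    def ratio(low, up):
        return (sum(up) / len(up)) / (sum(low) / len(low))

    r_exp = ratio(d2_lower, d2_upper)
    pooled = d2_lower + d2_upper
    n_low = len(d2_lower)
    exceed = 0
    for _ in range(n_perm):
        # Re-assign the squared deviations to the two levels at random.
        rng.shuffle(pooled)
        if ratio(pooled[:n_low], pooled[n_low:]) > r_exp:
            exceed += 1
    return r_exp, exceed / n_perm


# Hypothetical absorbances: nine replicates at the lowest and highest level.
lower = [0.100, 0.102, 0.098, 0.101, 0.099, 0.103, 0.097, 0.100, 0.100]
upper = [0.800, 0.816, 0.784, 0.808, 0.792, 0.824, 0.776, 0.812, 0.788]
r_exp, p_value = randomization_f_test(lower, upper)
print(r_exp, p_value)
```

A small significance level suggests that the variance at the upper level is larger than at the lower level. Because the statistic is one-sided, a variance that is inflated at the lower level (for instance by an outlier) cannot produce a positive result, in line with the behaviour described above.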

4. Goodness-of-fit for Homoscedastic Data

4.1. Description of the tests

Parametric tests. ISO2,3 recommends, as a goodness-of-fit test,
to check whether the data are better fitted by a second degree
model ($y = b_0 + b_1 x + b_2 x^2$) than by a straight-line model
($y = b_0 + b_1 x$) using an F-test. In this work we preferred to check
whether $b_2$ is significantly different from zero, using a t-test

(see Table 6). When it is shown that the data are better fitted

by a second degree than by a straight-line model, one concludes

that the straight-line model does not fit the data accurately.

This does, however, not prove that the second degree model

fits the data correctly, as is illustrated in Fig. 2. ANOVA lack-

of-fit is a test which can be applied to check the goodness-

of-fit of a model such as a straight-line or second degree model.

The test, which is described in Table 6, compares the error due

to lack-of-fit with the pure experimental error.
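As an illustration of the ANOVA lack-of-fit comparison described above, the following minimal sketch fits an unweighted straight line and returns the ratio of the lack-of-fit mean square to the pure-error mean square. The function names and the data set (four levels, three replicates) are hypothetical; the sums of squares follow the usual textbook definitions, which are assumed to match Table 6.

```python
def fit_straight_line(x, y):
    """Ordinary least-squares estimates (b0, b1) for y = b0 + b1 * x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    b1 = sxy / sxx
    return mean_y - b1 * mean_x, b1


def anova_lack_of_fit(levels, k=2):
    """Return (F, df_lof, df_pe) for an unweighted straight-line model.

    levels maps each concentration to its list of replicate responses;
    k is the number of model parameters (2 for a straight line).
    """
    x = [conc for conc, reps in levels.items() for _ in reps]
    y = [value for reps in levels.values() for value in reps]
    b0, b1 = fit_straight_line(x, y)
    ss_pe = 0.0   # pure experimental error: replicates around level means
    ss_lof = 0.0  # lack of fit: level means around the fitted line
    for conc, reps in levels.items():
        level_mean = sum(reps) / len(reps)
        ss_pe += sum((value - level_mean) ** 2 for value in reps)
        ss_lof += len(reps) * (level_mean - (b0 + b1 * conc)) ** 2
    df_lof = len(levels) - k
    df_pe = len(y) - len(levels)
    return (ss_lof / df_lof) / (ss_pe / df_pe), df_lof, df_pe


# Hypothetical curved calibration data.
curved = {
    0.0: [-0.002, 0.000, 0.002],
    10.0: [0.108, 0.110, 0.112],
    20.0: [0.198, 0.200, 0.202],
    30.0: [0.268, 0.270, 0.272],
}
f_value, df_lof, df_pe = anova_lack_of_fit(curved)
print(f_value, df_lof, df_pe)
```

The returned F value is compared with the tabulated critical value for `df_lof` and `df_pe` degrees of freedom; for 2 and 8 degrees of freedom at the 5% level that tabulated value is 4.46.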

Table 5 Procedure of the randomization F-test

1. Computation of d² values:
2. Test parameter for experimental data: $R_e$
3. Permutations:
   The following steps are repeatedly performed:
   3.1. The d² values are randomly permuted between the concentration levels
   3.2. The test parameter is computed for each permutation: $R_i$
4. Determination of significance level:
   significance level = (number of permutations with $R_i > R_e$) / (number of permutations)

Table 6 Parameters applied to detect a lack-of-fit of an unweighted
calibration model. The symbols are explained in Table 1

ANOVA lack-of-fit test:

Source         Sum of squares (SS)                                                  Degrees of freedom (df)   Mean squares (MS)
Pure error     $SS_{pe} = \sum_{i=1}^{n} \sum_{j=1}^{m_i} (y_{ij} - \bar{y}_i)^2$   $N - n$                   $MS_{pe} = SS_{pe}/df_{pe}$
Lack of fit    $SS_{lof} = \sum_{i=1}^{n} m_i (\bar{y}_i - \hat{y}_i)^2$            $n - k$                   $MS_{lof} = SS_{lof}/df_{lof}$

with k = 2 for a straight-line model
     k = 3 for a second degree model

Test: $F = MS_{lof}/MS_{pe}$, compared with the critical F value for $(n - k)$ and $(N - n)$ degrees of freedom at significance level $\alpha$

Significance of second degree term: t-test on $b_2$

Randomization tests. Van der Voet22 has proposed a randomization
test to compare the predictive accuracy of two calibration
models. In this work we applied this test to check whether
better predictions are made by a second degree model than by
a straight-line model. Consequently, it can be considered as a
randomization alternative of the parametric test proposed by
ISO.2,3 The test compares the squared residuals for the
straight-line model ($e_{ij1}^2$) and the squared residuals for the second
degree model ($e_{ij2}^2$). If both models have the same predictive
ability, $e_{ij1}^2$ and $e_{ij2}^2$ have equal distributions ($H_0$ = null
hypothesis). However, if better predictions are obtained with
the second degree model, the $e_{ij1}^2$ values are generally larger
than the $e_{ij2}^2$ values. In order to test this, the difference between
the squared residuals, $ds_{ij} = e_{ij1}^2 - e_{ij2}^2$, is computed for each
measurement point. The mean of these values, which is called
T, is then used as the test parameter. This value is first
computed for the experimental data ($T_e$). If both models have
the same predictive ability, the $ds_{ij}$ values are small and
distributed around zero so that $T_e$ will be almost zero. However,
if the second degree model provides better estimations, the $ds_{ij}$
values will generally be positive so that a $T_e$ value larger than
zero is obtained. In the randomization test, at each iteration
random signs are attached to the $ds_{ij}$ values, and the T
value is computed ($T_i$). If the original $ds_{ij}$ values are distributed
around zero ($H_0$ true), this operation has little effect on T so
that $T_i$ values are obtained which are sometimes larger and
sometimes smaller than $T_e$. However, if random signs are
attached to $ds_{ij}$ values that are predominantly positive ($H_0$ not
true), most $T_i$ values will be smaller than $T_e$. Therefore, the
significance level is computed as the ratio between the
number of iterations with $T_i > T_e$ and the total number of
iterations.
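The sign-randomization procedure described above can be sketched in a few lines. The sketch assumes that the residuals of both fitted models are already available as two lists in the same order; the function name and the residual values are hypothetical.

```python
import random


def sign_randomization_test(res_line, res_quad, n_iter=1000, seed=0):
    """Compare squared residuals of two models by random sign changes.

    res_line and res_quad hold the residuals of the straight-line and the
    second degree model for the same measurement points.
    Returns (T for the experimental data, estimated significance level).
    """
    rng = random.Random(seed)
    # Difference between the squared residuals for each measurement point.
    ds = [e1 ** 2 - e2 ** 2 for e1, e2 in zip(res_line, res_quad)]
    t_exp = sum(ds) / len(ds)
    exceed = 0
    for _ in range(n_iter):
        # Attach a random sign to every difference and recompute the mean.
        t_iter = sum(d * rng.choice((-1, 1)) for d in ds) / len(ds)
        if t_iter > t_exp:
            exceed += 1
    return t_exp, exceed / n_iter


# Hypothetical residuals: the straight line fits clearly worse.
res_line = [0.010, -0.012, -0.009, 0.011, 0.010, -0.011, -0.010, 0.009]
res_quad = [0.002, -0.001, 0.001, -0.002, 0.001, 0.002, -0.001, -0.002]
t_exp, p_value = sign_randomization_test(res_line, res_quad)
print(t_exp, p_value)
```

When all differences are positive, no random assignment of signs can give a mean larger than the experimental one, so the estimated significance level is zero.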

In this paper we propose another randomization test to
trace a lack-of-fit. This test investigates whether the prediction
error, estimated by the residuals, is independent of the
concentration level. As shown in Fig. 3(c), a lack-of-fit can be detected
by demonstrating that the size of the residuals depends on the
level where they are measured. Table 7 explains how this can
be used in a randomization test. The numerator of the applied
test parameter is an estimate of the variation of the mean
residuals between the different concentration levels, while the
denominator estimates the variation within the levels. First,
the parameter value is computed for the experimental data
($L_e$). Then, data permutations are performed. At each step the
residuals are re-assigned to the different concentration levels,
and the test parameter is re-calculated ($L_i$). Re-assigning the
data has little effect on the test parameter when the residuals
are randomly distributed over the concentration levels [see
Fig. 3(a)]. Consequently, for the different permutations one
will find test values which are sometimes larger and sometimes
smaller than that obtained for the experimental data. However,
when the size of the residuals depends on the concentration
level [see Fig. 3(c)], re-assigning the data will affect the test
parameter. In fact, for most permutations a test value will be
found which is lower than that obtained for the experimental
data. The significance level is then calculated as the ratio
between the number of permutations with a test parameter
higher than that computed with the experimental data ($L_i > L_e$)
and the total number of permutations. In this work the test
results are again based on 1000 random permutations.

Table 7 Procedure of the randomization test to investigate the
goodness-of-fit

1. The test parameter: L, the ratio of the variation of the mean residuals between the concentration levels, built from terms $m_i(\bar{e}_i - \bar{e})^2$, to the variation of the residuals within the levels
2. Computation of the test parameter for the experimental data:
   The residuals $[e_{11}; \ldots; e_{ij}; \ldots; e_{nm}]$ are used to compute $L_e$
3. Permutations:
   The following steps are repeatedly performed:
   3.1. The residuals are randomly assigned to the n concentration levels
   3.2. The test parameter is computed for the ith permutation: $L_i$
4. Determination of significance level:
   significance level = (number of permutations with $L_i > L_e$) / (total number of permutations)

Strictly speaking, the proposed test, as with most statistical

tests, is applicable only when the data are independent. For

the estimated residuals this is not the case owing to the

correlation that exists between them. However, when the

number of measurement points is large compared with the

number of regression parameters estimated, the effect is small,

so that it can be ignored.
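The permutation of residuals over the concentration levels can be sketched as below. The exact expression of the test parameter is not fully legible in Table 7, so the sketch assumes an ANOVA-like ratio in which the between-level and within-level sums of squares are divided by their degrees of freedom; that normalization, the function names and the residual values are assumptions made for illustration only.

```python
import random


def level_statistic(groups):
    """Between-level variation of the mean residuals over within-level variation."""
    n_levels = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    means = [sum(g) / len(g) for g in groups]
    between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    within = sum((e - m) ** 2 for g, m in zip(groups, means) for e in g)
    return (between / (n_levels - 1)) / (within / (n_total - n_levels))


def residual_randomization_test(groups, n_perm=1000, seed=0):
    """Return (L for the experimental data, estimated significance level).

    groups is a list with one list of residuals per concentration level.
    """
    rng = random.Random(seed)
    l_exp = level_statistic(groups)
    pooled = [e for g in groups for e in g]
    sizes = [len(g) for g in groups]
    exceed = 0
    for _ in range(n_perm):
        # Randomly re-assign the residuals to the concentration levels.
        rng.shuffle(pooled)
        start, permuted = 0, []
        for size in sizes:
            permuted.append(pooled[start:start + size])
            start += size
        if level_statistic(permuted) > l_exp:
            exceed += 1
    return l_exp, exceed / n_perm


# Hypothetical residuals of a straight line fitted to curved data.
groups = [
    [-0.012, -0.010, -0.008, -0.011, -0.009],
    [0.018, 0.020, 0.022, 0.019, 0.021],
    [-0.009, -0.011, -0.010, -0.012, -0.008],
]
l_exp, p_value = residual_randomization_test(groups)
print(l_exp, p_value)
```

With residuals that clearly depend on the level, almost every permutation gives a smaller parameter than the experimental one, so the estimated significance level is close to zero.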

4.2. Performance under normal conditions

First, the validation of a straight-line model is investigated.

For the test that is based on the significance of the quadratic

term the best result is theoretically found with design 1. It

follows from the D-optimality principle23 that the volume of

the confidence region for the calibration parameters of a

second degree polynomial is minimized when the measure-

ments are equally distributed over the two extremes and the

middle of the concentration range. It is clear that the more

precisely the calibration parameters are estimated, the easier

it is to demonstrate that the quadratic term is significantly

different from zero. For the ANOVA lack-of-fit it is more

difficult to explain theoretically which is the optimum design.

The simulations show, however, that the design that distributes

all measurement points over three concentration levels

(design 1) is the best to trace a lack-of-fit of the straight-line
model with both tests. The sensitivity of the tests decreases
when the number of concentration levels over which the
measurements are distributed increases and the number of
replicates at each level decreases. This is illustrated in Fig. 7(a)
for the ANOVA lack-of-fit. One can see, for example, that for
a calibration line with a quadratic term equal to $-12 \times 10^{-7}$,
the lack-of-fit to the straight-line model is almost certainly
detected when design 1 is used. With design 5, on the other
hand, the probability of correctly detecting the lack-of-fit is
about 30%. The test based on the significance of the quadratic
term is less affected by the applied design [see Fig. 7(b)].
Compared with the ANOVA lack-of-fit, this test gives similar
results when the most suitable design is applied, but better
results when the other designs are applied. The randomization
tests perform less well. For example, ANOVA lack-of-fit
detects a problem in 97, 88 and 34% of the cases when the
calibration lines contain a quadratic term equal to $-12 \times 10^{-7}$,
$-8 \times 10^{-7}$ and $-4 \times 10^{-7}$, respectively (design 1 applied).
With the randomization test described in Table 7, this is in 93,
72 and 18% of the cases. The randomization test proposed by
van der Voet22 detects a lack-of-fit in 82, 56 and 13% of the
cases. The number of false positive conclusions obtained with
the randomization tests is situated between 2 and 6%, which
is in agreement with the specified significance level of 5%.

[Fig. 7 plots omitted: percentage of cases (0 to 100) against the quadratic term ($\times 10^{-7}$, from -20 to 0), panels (a) and (b)]

Fig. 7 Percentage of cases for which (a) a lack-of-fit is detected with
an ANOVA, and (b) a significance of the quadratic term is detected,
for a curved calibration line and for different designs. The lines
correspond to: H, design 1; 0, design 2; +, design 3; 0, design 4;
and A, design 5
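The D-optimality argument used in this section can be checked numerically. The volume of the confidence region for the parameters of a second degree polynomial is inversely proportional to the square root of the determinant of $X^T X$, so a larger determinant means more precise estimates. The sketch below compares three hypothetical designs of 36 points on a concentration range scaled to 0 to 1; only the three-level and four-level layouts correspond to designs named in the text, and the six-level layout is an assumption added for comparison.

```python
def information_determinant(levels, replicates):
    """det(X'X) for y = b0 + b1*x + b2*x^2 with equal replicates per level."""
    # s[p] is the sum of x**p over all measurement points.
    s = [replicates * sum(x ** p for x in levels) for p in range(5)]
    return (s[0] * (s[2] * s[4] - s[3] ** 2)
            - s[1] * (s[1] * s[4] - s[3] * s[2])
            + s[2] * (s[1] * s[3] - s[2] ** 2))


def equally_spaced(n_levels):
    """Concentration levels equally spread over the scaled range 0 to 1."""
    return [i / (n_levels - 1) for i in range(n_levels)]


# 36 measurement points spread over 3, 4 or 6 levels.
for n_levels in (3, 4, 6):
    det = information_determinant(equally_spaced(n_levels), 36 // n_levels)
    print(n_levels, "levels:", det)
```

The determinant is largest when all points sit at the two extremes and the middle of the range, which agrees with the statement that design 1 is theoretically the best for detecting a significant quadratic term.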

Similar conclusions are obtained for situations where the

lack-of-fit to the straight-line model is the result of problems

other than a curvature to the x-axis. For example, a situation

is considered where data following a straight-line model are

simulated, and a constant value cb is added to all measurement

points of the lowest concentration level. Also, in that situation,

the test based on the significance of the quadratic term and

the ANOVA lack-of-fit give the best results, and they should

preferably be combined with design 1.

In order to validate a second degree model, an ANOVA

lack-of-fit is applied. Design 1, which uses only three concen-

tration levels, cannot be applied here. Table 6 illustrates that

with three parameters to estimate ($k = 3$) and three concentration
levels ($n = 3$), the degrees of freedom of the error due

to lack-of-fit would be zero. In order to simulate a lack-of-fit

to a second degree calibration model, curved calibration lines

were simulated and a constant value (cb) was added to the

measurement results of the lowest concentration level. Fig. 8

gives the number of positive test results, for the different cb

values added, and for different designs. For the given situations,

the most suitable designs to detect a lack-of-fit are designs 3

and 4. For example, when cb=0.005, with design 2 a lack-of-

fit is detected in 30% of the cases, while designs 3 and 4 detect

it in about 50% of the cases. Thus, in contrast to the straight-

line model, the distribution of the measurement points over

the minimum number of concentration levels (four in this

situation) does not guarantee the best results. The reason for

this is explained further for the weighted second degree model,

where this problem is even more important. The randomization

test performs less well than the ANOVA lack-of-fit. For
example, for cb values of 0.002, 0.004 and 0.006, a lack-of-fit is
detected in 16, 33 and 62% of the cases, respectively, when
ANOVA lack-of-fit and design 3 are applied. With the same
design, the randomization test detects a lack-of-fit in 4, 16 and
42% of the cases, respectively.

[Fig. 8 plot omitted; x-axis: designs 2 to 5]

Fig. 8 Percentage of positive test results obtained with an ANOVA
lack-of-fit for a second degree model, in a situation where the lowest
concentration level is contaminated. To simulate this problem, constant
values (cb) of 0.00 (H), 0.02 (0), 0.03 (+), 0.04 (0), 0.05 (A) and 0.06
(A) were added to the absorbances of the lowest concentration level

4.3. Performance in the presence of outliers

The effect of outliers is only evaluated for the validation of a

straight-line model. Two outlier problems are examined,

namely a curvature that is masked by one or two too high

measurement results at the upper concentration level and a

curvature that is falsely detected owing to one or two too high

measurements at the middle concentration level. For the most

suitable design (design 1) the effect of an outlier at the upper

level is the same for the ANOVA lack-of-fit and the test based

on the significance of the quadratic term. When the curvature

is strong and the outlier is not very large, the probability of

detecting a lack-of-fit to the straight-line model is little affected.

However, when the curvature is weak and the outlier is

important, the probability of detecting the curvature decreases.

For example, when the quadratic term equals $-12 \times 10^{-7}$,
$-8 \times 10^{-7}$ and $-4 \times 10^{-7}$, a lack-of-fit is detected in 97, 95
and 34% of the cases, respectively. When an outlier (6σ) is
introduced at the upper concentration level, the probabilities
decrease to 89, 28 and 3%. With two outliers at the upper
concentration level (4σ and 8σ), the probabilities of detecting the
lack-of-fit are 25, 3 and 1%. In a similar way to what was

described above, also in the presence of an outlier the signifi-

cance of the quadratic term is less affected by the applied design.

The probability of falsely detecting a curvature increases if

the middle concentration level contains one or two outliers.

With design 1, for example, and a single outlier (6σ) at the

middle concentration level, one falsely detects a lack-of-fit to

the straight-line model in 10% of the cases, with the ANOVA

lack-of-fit as well as with the test based on the significance of

the quadratic term. When the middle concentration level is

contaminated with two outliers (4σ and 8σ), a lack-of-fit is

detected in 22% of the cases. The tests are performed at a

significance level of 5%, so that a number of false positive

results around this level is expected.

In a similar way to what was described above, also in the

presence of outliers the randomization tests are less sensitive

than the parametric tests. This means that the number of false

positive results, owing to an outlier in the middle of the

concentration range, is lower with the randomization tests.

However, the probability of correctly detecting a curvature

when the upper concentration level contains an outlier is also

lower with the randomization than with the parametric test.

Therefore, one cannot say that the randomization tests are

more robust to outliers.

5. Goodness-of-fit for Heteroscedastic Data

5.1. Description of the tests

In order to examine the goodness-of-fit of a weighted calibration
model, two parametric and two randomization tests
are evaluated. The first test17 computes the sum of squares
$S = \sum_i w_i (\bar{y}_i - \hat{y}_i)^2$, where the weight factor $w_i$ is the inverse of
the variance of $\bar{y}_i$. If the calibration model describes the data
accurately, the value of S has a χ² distribution, with $n - 2$ or
$n - 3$ degrees of freedom for a straight-line and a second degree

model, respectively. As a second test, to validate a straight-

line model, one can also check the significance of the quadratic

term for a weighted model.
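A minimal sketch of the first test for a weighted straight-line model is given below. It assumes that the weights are estimated from the replicates as the inverse of the variance of each level mean, in which case S only approximately follows a χ² distribution. The function name and the data are hypothetical; the critical values are the standard tabulated 5% points.

```python
# Tabulated 5% critical values of the chi-square distribution, by df.
CHI2_CRITICAL_5PCT = {1: 3.841, 2: 5.991, 3: 7.815, 4: 9.488, 5: 11.070}


def weighted_line_chi2(levels):
    """Return (S, df) for a weighted straight-line fit to the level means.

    levels maps each concentration to its replicate responses. The weight
    of a level is the inverse of the estimated variance of its mean.
    """
    xs, means, weights = [], [], []
    for conc, reps in levels.items():
        m = len(reps)
        mean = sum(reps) / m
        variance = sum((v - mean) ** 2 for v in reps) / (m - 1)
        xs.append(conc)
        means.append(mean)
        weights.append(m / variance)  # 1 / var(mean) = m / s^2
    sw = sum(weights)
    swx = sum(w * x for w, x in zip(weights, xs))
    swy = sum(w * y for w, y in zip(weights, means))
    swxx = sum(w * x * x for w, x in zip(weights, xs))
    swxy = sum(w * x * y for w, x, y in zip(weights, xs, means))
    # Weighted least-squares estimates of slope and intercept.
    b1 = (sw * swxy - swx * swy) / (sw * swxx - swx ** 2)
    b0 = (swy - b1 * swx) / sw
    s = sum(w * (y - (b0 + b1 * x)) ** 2
            for w, x, y in zip(weights, xs, means))
    return s, len(xs) - 2


# Hypothetical data with a relative standard deviation of about 2%
# and a response that bends at the highest level.
curved = {c: [0.98 * y, y, 1.02 * y]
          for c, y in [(10.0, 0.10), (20.0, 0.20), (30.0, 0.30), (40.0, 0.37)]}
s_value, df = weighted_line_chi2(curved)
print(s_value, df, s_value > CHI2_CRITICAL_5PCT[df])
```

A value of S above the tabulated critical value for the given degrees of freedom points to a lack-of-fit of the weighted straight-line model.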

The evaluated randomization tests are similar to those

described in Section 4.1. In order to apply the test proposed

by van der Voet22 for weighted models, the weighted residuals

$\sqrt{w_i}\,(y_{ij} - \hat{y}_i)$ are used. Thus, one computes the mean difference

between the squared weighted residual for the straight-line

model and the squared weighted residual for the second degree

model and applies the test on these values. For the test that is

proposed by us (see Table 7), one states that the weighted

residuals must be randomly distributed over the concentration

levels. Thus, the randomization test which is explained in

Table 7 can also be applied on the weighted residuals. Here it

is also assumed that the correlation that exists between the

weighted residuals does not affect the test results.

5.2. Performance

Fig. 9 compares the performance of the evaluated tests for the

validation of a weighted straight-line model. A situation is

considered where all measurement points are distributed over

three concentration levels (design 1) and where the relative

standard deviation equals 2%. It can be seen that the best

results are obtained with the test that determines the significance
of the quadratic term. Poorer results are obtained
with the randomization test that is proposed by us. The other

tests seem less suitable. Regarding the selection of the design,

the same conclusions can be made as for the unweighted

straight-line model, namely that the best results are obtained

with design 1. It should also be remarked that when the

heteroscedasticity is not detected, and the goodness-of-fit tests

for an unweighted model are performed, satisfactory results

are still obtained when the data set is free of outliers. However,

when the middle concentration level is contaminated with two
outliers (4σ and 8σ), the probability of obtaining a false positive
result with the unweighted test is very high (between 20 and
30%). The weighted tests, on the other hand, are little affected
by these outliers. This is probably because the outliers increase
the variance at the middle concentration level so that a smaller
weight is given to this level.

[Fig. 9 plot omitted: percentage of cases (0 to 80) against the quadratic term (from -30 to 0)]

Fig. 9 Percentage of cases where a lack-of-fit to a weighted straight-
line model is detected with the χ² test (O), the randomization test
proposed in Table 7 (A), the randomization test proposed by van der
Voet (+) and by determining the significance of the quadratic term (H)

[Fig. 10 plots omitted: weighted residuals against concentration (10 to 100), panels (a) and (b)]

Fig. 10 Weighted residuals for a second degree calibration model in
a situation where a contamination of the lowest concentration level
occurs. Design 2 (a) and design 5 (b) are applied.

In order to evaluate the goodness-of-fit of a weighted second

degree model, the randomization test described in Table 7,

performed on the weighted residuals, is found to be the most

suitable test. The probability to detect a lack-of-fit increases

when the measurement points are spread over an increasing

number of concentration levels, so that the best results are

obtained with design 5. This is because, for a small number of

concentration levels, an alternative model can be found which

fits the actual data accurately, but does not give a correct

description of the relation between concentration and

absorbance. This is illustrated in Fig. 10. The weighted

residuals for a calibration line obtained with design 2 and

design 5 are given in a situation where a curved line is

simulated, but a value cb is added to all data points of the

lowest level. Design 5 clearly indicates a problem, because the

weighted residuals are not randomly distributed over the

concentration levels. With design 2, on the other hand, no

problem is detected. However, the calibration model that is

found with this latter design cannot be used to make correct

estimations. The χ² test performs less well than the randomization
test. With design 4, for example, and cb = 0.002, 0.004
and 0.006, the randomization test detects a lack-of-fit in 24, 58
and 90% of the cases, respectively. With the χ² test, the
lack-of-fit is only detected in 1, 16 and 38% of the cases, respectively.

In contrast to what is concluded for the straight-line model,

the goodness-of-fit tests that assume homoscedasticity fail

when they are applied on a second degree model in a heterosc-

edastic situation.

Recommended Strategy

The described results were used to build a strategy for the

validation of atomic absorption calibration models. The pro-

posed strategy is based on the assumption that the analyst has

an idea of the linear range of the calibration line before he

starts the validation. Consequently, he will preferably try to

demonstrate the suitability of a straight-line model within this

range. However, sometimes the linear range is so small that

one is obliged to work in the curved concentration range. The

analyst will then try to demonstrate the suitability of a second

degree model within the specified calibration range.

A number of experienced analysts, whose opinion was asked,

stated that to ensure the general acceptance of the validation

strategy it should be combined with information on how to

continue when a problem, such as a lack-of-fit, is detected.

Although this is not part of the validation, the results of the

validation experiments can be used to give these recommen-

dations. In order to avoid confusion, in this section a distinction

is made between the real validation of the calibration line and

a number of additional tests that are performed to advise the

analyst on how to continue.

Validation strategy

In order to validate a straight-line model, the analyst is advised

to apply experimental design 2 (i.e., nine replicates at four

concentration levels). Design 1 (three concentration levels) is

more sensitive but does not permit a further investigation

when a lack-of-fit is detected. Designs 4 and 5, on the other

hand, do not permit an accurate outlier detection at the

different concentration levels and are less suitable for the

validation of a straight-line model. Moreover, owing to the

small number of replicates at each concentration level, these

designs are not really suitable to evaluate a possible het-

eroscedasticity.

For the validation of a second degree model, an alternative

design is proposed because none of the evaluated designs seems

optimum. The designs that position all measurement points at

a small number of concentration levels (designs 1, 2 and 3) are

the most appropriate to detect a heteroscedasticity but are the

least suitable to detect a lack-of-fit, especially for a weighted

second degree model. The designs that spread the measurement

points over a large number of levels (designs 4 and 5), on the

other hand, are the most suited to detect a lack-of-fit, but the

small number of replicates makes them scarcely appropriate

to investigate the behaviour of the variance. Therefore, it is

proposed to position nine replicates at both extremes and six

replicates at five other concentration levels, equally spread

over the concentration range. This design requires more
measurement points than that proposed for the validation
of a straight-line model (48 instead of 36), but additional effort
can be required for the validation of a more complex model.
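The point count of the proposed design can be verified with a short sketch. It assumes that the two extremes and the five other levels together form seven equally spaced levels; the function name and the concentration range of 0 to 60 are hypothetical.

```python
def second_degree_design(low, high, n_levels=7, extreme_reps=9, inner_reps=6):
    """Return a list of (concentration, number of replicates) pairs.

    Assumption: the levels are equally spaced between low and high, with
    more replicates at the two extremes than at the inner levels.
    """
    step = (high - low) / (n_levels - 1)
    design = []
    for i in range(n_levels):
        reps = extreme_reps if i in (0, n_levels - 1) else inner_reps
        design.append((low + i * step, reps))
    return design


design = second_degree_design(0.0, 60.0)
total_points = sum(reps for _, reps in design)
print(design)
print(total_points)  # 2 * 9 + 5 * 6 = 48
```

The total of 48 points matches the figure quoted above, against 4 × 9 = 36 points for design 2.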

After the performance of the experiments, the results should

first be evaluated visually. The most appropriate way to do

this is by plotting the residuals versus the predicted value. The

statistical evaluation of the experimental results for a straight-

line model is summarized in Fig. 11. First, single outliers are

traced at the different concentration levels, applying a Grubbs

test. When no single outliers are found, the presence of possible

paired outliers is investigated. When, in the complete data set,

no more than two outliers are detected, the analyst can remove

them and continue the evaluation of the data. More outliers

indicate a fundamental problem with the analysis method,

which must be investigated. When the problem that is respon-

sible for the outliers is solved, a new data set should be

prepared. The homoscedasticity of the data is then investigated.

One investigates whether the variance at the highest concen-

tration level is significantly larger than at the lowest concen-

tration level. In order to accomplish this one can use a one-

tailed F-test or the alternative randomization test which is

even more suitable. Depending on the result, an unweighted

or a weighted model must be used.

In order to investigate the goodness-of-fit of the straight-

line model, one checks whether the data are better fitted by a

second degree model. If this is not the case, one confirms the

suitability of a straight-line model with an ANOVA lack-of-fit

(for an unweighted model) or with a randomization test (for

Journal of Analytical Atomic Spectrometry, April 1996, Vol. 1 I 245

P

u

b

l

i

s

h

e

d

o

n

0

1

J

a

n

u

a

r

y

1

9

9

6

.

D

o

w

n

l

o

a

d

e

d

b

y

U

n

i

v

e

r

s

i

d

a

d

e

N

o

v

a

d

e

L

i

s

b

o

a

o

n

3

0

/

0

6

/

2

0

1

4

1

3

:

3

8

:

4

8

.

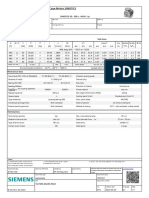

View Article Online

method does

not perfow

as expected

Straight

line model

is not

suitable

outliers

from data

F test

n l y

171 Use unweighted 'rl U s e weighted

n l

Unueighted

straight

line model

Weighted

straight

line model

Fig. 11 Strategy for the evaluation of the experimental results

a weighted model). These tests are also those used to demon-

strate the suitability of a second degree model.
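The evaluation sequence for a straight-line model described in this section can be summarized as a small decision function. This is only a sketch of the logic as read from the text: the function name, its arguments and the returned strings are hypothetical, and the outcomes of the individual statistical tests are assumed to be available as booleans.

```python
def evaluate_straight_line(n_outliers, variance_increases,
                           quadratic_significant, lack_of_fit):
    """Return the recommended conclusion for a straight-line validation.

    n_outliers            -- outliers found in the complete data set
    variance_increases    -- variance at the highest level significantly
                             larger than at the lowest level
    quadratic_significant -- data better fitted by a second degree model
    lack_of_fit           -- lack-of-fit found by the confirming test
                             (ANOVA if unweighted, randomization if weighted)
    """
    if n_outliers > 2:
        # More than two outliers point to a problem with the method itself.
        return "investigate the analysis method and prepare a new data set"
    model = "weighted" if variance_increases else "unweighted"
    if quadratic_significant or lack_of_fit:
        return "straight-line model is not suitable"
    return model + " straight-line model"


print(evaluate_straight_line(1, False, False, False))
print(evaluate_straight_line(0, True, False, False))
print(evaluate_straight_line(0, False, True, False))
```

Up to two outliers are removed before the remaining checks, as stated in the text; the function only encodes the order of the decisions, not the tests themselves.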

Recommendations after the detection of a lack-of-fit

When the validation shows that calibration data cannot be

described by a straight-line model, two actions can be taken.

First, one can check whether a straight-line model can be valid

over a smaller concentration range. Therefore, the upper

concentration level of the design is eliminated and the tests for

the validation of a straight-line model are applied on the

reduced calibration set. Possibly, a decrease of the calibration

range also allows the use of an unweighted instead of a

weighted model. When no suitable straight-line model can be

built, one can investigate the suitability of a second degree

model. It must be clear that those tests on a reduced calibration

set are only applied to give an indication of how the analysis

method can be adapted. They cannot be used as validation

results. If these tests indicate, for example, that a straight-line

calibration model seems suitable over a smaller concentration

range than specified at the start of the validation, the analyst

can adapt his method and start the validation of this new

method.

REFERENCES

1 Taylor, J. K., Anal. Chem., 1983, 55, 600A.
2 ISO International Standard 8466-1, Water Quality-Calibration and Evaluation of Analytical Methods and Estimation of Performance Characteristics-Part 1: Statistical Evaluation of the Linear Calibration Function, International Organisation for Standardisation, Geneva, 1990.
3 ISO International Standard 8466-2, Water Quality-Calibration and Evaluation of Analytical Methods and Estimation of Performance Characteristics-Part 2: Calibration Strategy for Non-linear Second Order Functions, International Organisation for Standardisation, Geneva, 1990.
4 Wendt, R. H., At. Absorpt. Newsl., 1968, 7, 28.
5 Barnett, W. B., Spectrochim. Acta, Part B, 1984, 39, 829.
6 Phillips, G. R., and Eyring, E. M., Anal. Chem., 1983, 55, 1134.
7 Koscielniak, P., Anal. Chim. Acta, 1993, 278, 177.
8 Draper, N., and Smith, H., Applied Regression Analysis, Wiley, New York, 2nd edn., 1981.
9 ISO International Standards 5725, International Organisation for Standardisation, Geneva, 1986.
10 Grubbs, F. E., and Beck, G., Technometrics, 1972, 14, 847.
11 Kelly, P. C., J. Assoc. Off. Anal. Chem., 1990, 73, 58.
12 CETEMA, Statistique Appliquée à l'Exploitation des Mesures, Masson, Paris, 2nd edn., 1986.
13 Snedecor, G. W., and Cochran, W. G., Statistical Methods, The Iowa State University Press, Ames, 7th edn., 1982.
14 Lang-Michaut, C., Pratique des Tests Statistiques, Dunod, Paris, 1990.
15 Massart, D. L., Vandeginste, B. G. M., Deming, S. N., Michotte, Y., and Kaufman, L., Chemometrics: a Textbook, Elsevier, Amsterdam, 1988.
16 Garden, J. S., Mitchel, D. G., and Mills, W. N., Anal. Chem., 1980, 52, 2310.
17 Cooper, B. E., Statistics for Experimentalists, Pergamon Press, Oxford, 1969.
18 Edgington, E. S., Randomization Tests, Marcel Dekker, New York, 1987.
19 Box, G., and Muller, M., Ann. Math. Stat., 1958, 29, 610.
20 AOAC Referee, 1994, October, p. 6.
21 ISO DIS 5725-1 to 5725-3 (Draft versions), Accuracy (Trueness and Precision) of Measurement Methods and Results, International Organisation for Standardisation, Geneva, 1990/1991.
22 Van der Voet, H., Chemometr. Intell. Lab., 1994, 25, 313.
23 Atkinson, A. C., Chemometr. Intell. Lab., 1995, 28, 35.

Paper 5/07400B

Received November 10, 1995

Accepted December 13, 1995

246 Journal of Analytical Atomic Spectrometry, April 1996, VGl. 11

Published on 01 January 1996. Downloaded by Universidade Nova de Lisboa on 30/06/2014 13:38:48.
