
6 - 7 - Multimedia - Part 2 (18-45)

Uploaded by Saumith Dahagam

Description: The next class is the MediaPlayer, which controls the playback of audio and video streams. The VideoView class can load video content from different sources. The release method releases the resources used by the current media player.

Copyright: © All Rights Reserved


Hi, I'm Adam Porter, and this is Programming Mobile Applications for Android Handheld Systems.

The next class is the MediaPlayer. MediaPlayer controls the playback of audio and video streams and files. It allows you to incorporate audio and video into your applications, and to let the applications and users control that playback. This class operates according to a complex state machine, which I won't go over in this lesson, so please take a look at the following website for more information.

Some of the methods you're likely to use with the MediaPlayer include setDataSource, which tells the media player which streams to play, and prepare, which initializes the media player and loads the necessary streams. The prepare method is synchronous, and you'll normally use it when the media content is stored in a file on the device. There's also an asynchronous version of this method, prepareAsync, which can be used, for example, when the media is streamed from the internet.

There's also a start method, to start or resume playback; a pause method, to stop playing temporarily; a seekTo method, to move to a particular position in the stream; a stop method, to stop playing the media; and the release method, which releases the resources used by the current media player.
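The methods above must be called in a particular order. The following plain-Java class is a simplified, hypothetical model of that order; it is not `android.media.MediaPlayer`, it covers only a subset of the real state machine (for example, it omits the playback-completed and error states), and the path in `main` is made up. Like the real class, it rejects a call made in the wrong state with an `IllegalStateException`.

```java
import java.util.EnumSet;
import java.util.Set;

// Hypothetical, simplified model of the MediaPlayer call order.
// Not android.media.MediaPlayer: it only mimics part of the documented state machine.
class MediaPlayerModel {
    enum State { IDLE, INITIALIZED, PREPARED, STARTED, PAUSED, STOPPED, END }

    private State state = State.IDLE;
    private int positionMs = 0;

    State state() { return state; }
    int position() { return positionMs; }

    // Rejects a call made in a state where it is not allowed.
    private void require(Set<State> allowed, String call) {
        if (!allowed.contains(state)) {
            throw new IllegalStateException(call + " called in state " + state);
        }
    }

    void setDataSource(String source) {
        require(EnumSet.of(State.IDLE), "setDataSource");
        state = State.INITIALIZED;
    }

    void prepare() {
        // After stop(), the player has to be prepared again before start().
        require(EnumSet.of(State.INITIALIZED, State.STOPPED), "prepare");
        state = State.PREPARED;
    }

    void start() {
        require(EnumSet.of(State.PREPARED, State.STARTED, State.PAUSED), "start");
        state = State.STARTED;
    }

    void pause() {
        require(EnumSet.of(State.STARTED, State.PAUSED), "pause");
        state = State.PAUSED;
    }

    void seekTo(int ms) {
        require(EnumSet.of(State.PREPARED, State.STARTED, State.PAUSED), "seekTo");
        positionMs = ms;
    }

    void stop() {
        require(EnumSet.of(State.PREPARED, State.STARTED, State.PAUSED, State.STOPPED), "stop");
        state = State.STOPPED;
    }

    // Allowed from any state; the object should not be used afterwards.
    void release() {
        state = State.END;
    }

    public static void main(String[] args) {
        MediaPlayerModel player = new MediaPlayerModel();
        player.setDataSource("file:///sdcard/clip.mp4"); // hypothetical path
        player.prepare();
        player.start();
        player.seekTo(5000);
        player.pause();
        player.start();
        player.stop();
        player.release();
        System.out.println(player.state()); // END
    }
}
```

The key design point the model makes visible is that `stop()` is not the same as `pause()`: after stopping, the player has to go through `prepare()` again before `start()` will succeed.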

Another class that can be used to view video content is the VideoView class. This class is a subclass of SurfaceView and internally makes use of the media player we just talked about. It can load video content from different sources, and it includes a number of methods and controls that make it easier to view video content.

Our next example application is called AudioVideoVideoPlay. This application displays a simple view with video playback controls and allows the user to play a video file. In this case, the film is a clip from the 1902 film A Trip to the Moon, by Georges Méliès.

Let's take a look. So here's my device, and now I'll start up the AudioVideoVideoPlay application. If I now touch the display, you can see that a set of playback controls appears. Now I'll hit the single triangle, the play button, and the video will begin playing. Here we go.

Let's take a look at the source code for this application. Here's the AudioVideoVideoPlay application open in the IDE, and now I'll open the main activity.

In onCreate, the code first gets a reference to a VideoView that's in this activity's layout. Next, it creates a MediaController, which is a view that contains controls for controlling the media player. The code continues by disabling the media controls and then attaching this media controller to the video view with a call to the video view's setMediaController method.

Next, the code identifies the media file to play, passing in a URI that points to a file stored in the res/raw directory. After that, the code sets an OnPreparedListener on the video view. This code will be called once the media is loaded and ready to play, and when that happens, the code enables the media controller so the user can start the film playing.

Finally, down in the onPause method, the code shuts down the video view.
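The "disable the controls until the media is prepared" pattern can be sketched in plain Java. In the Android app the real calls are `setMediaController`, `setVideoURI`, and `setOnPreparedListener` on the VideoView; here `FakeVideoView`, `Controls`, and `PreparedListener` are hypothetical stand-ins so the sequence can run without Android. The `android.resource://<package>/<resource id>` string is the usual way to address a file in `res/raw`; the package name in `main` is made up.

```java
// Hypothetical stand-ins for VideoView, MediaController and
// MediaPlayer.OnPreparedListener; these are not the Android classes.
class DeferredControlsDemo {
    interface PreparedListener { void onPrepared(); }

    static class Controls {
        private boolean enabled = true;
        void setEnabled(boolean e) { enabled = e; }
        boolean isEnabled() { return enabled; }
    }

    static class FakeVideoView {
        private PreparedListener listener;
        private String uri;
        void setVideoURI(String uri) { this.uri = uri; }
        String uri() { return uri; }
        void setOnPreparedListener(PreparedListener l) { listener = l; }
        // Simulates the moment the media has finished loading.
        void finishLoading() { if (listener != null) listener.onPrepared(); }
    }

    // Builds a URI for a file in res/raw using the android.resource:// scheme.
    static String rawResourceUri(String packageName, int resId) {
        return "android.resource://" + packageName + "/" + resId;
    }

    public static void main(String[] args) {
        FakeVideoView view = new FakeVideoView();
        Controls controls = new Controls();
        controls.setEnabled(false);                       // disabled until prepared
        view.setVideoURI(rawResourceUri("com.example.videoplay", 42)); // hypothetical id
        view.setOnPreparedListener(() -> controls.setEnabled(true));
        System.out.println(controls.isEnabled());         // false
        view.finishLoading();
        System.out.println(controls.isEnabled());         // true
    }
}
```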

The next class we'll discuss is the MediaRecorder. This class can be used to record both audio and video. It operates in accordance with a state machine, which you can read more about at this URL.

Some of the MediaRecorder methods that you'll likely use include setAudioSource and setVideoSource, which set the source of the input, such as the microphone for audio or a camera for video; setOutputFormat, which sets the output format for the recording, for instance MP4; prepare, which readies the recorder to begin capturing and encoding data; start, which starts the actual recording process; stop, which stops the recording process; and release, which releases the resources held by this MediaRecorder.

Our next example application is AudioVideoAudioRecording. This application records audio from the user and can play the recorded audio back to the user. Let's use this application to capture my voice.

So here's my device, and now I'll start up the AudioVideoAudioRecording application. This application displays two toggle buttons, one labeled Start Recording and one labeled Start Playback. When I press the Start Recording button, the application will begin recording. The button's label will change to Stop Recording, and the playback button will be disabled. When I press the recording button again, the recording will stop. The button's label will change back, and the playback button will be enabled again.

Let's try it out. Now I'll press the Start Recording button.

Testing, testing, one, two, three, testing.

Now that I've pressed the button again, the recording is finished and saved, and the Start Playback button is now enabled. Let me press that one now.

Testing, testing, one, two, three, testing.

And now I'll press that button again, and we're back to where we started.

Let's look at the source code for this application. Here's the AudioVideoAudioRecording application open in the IDE. Now I'll open the main activity.

In onCreate, the code first gets references to the two toggle buttons. Next, it sets an OnCheckedChangeListener on each of the toggle buttons. This code is called when the checked state of a toggle button changes.

Let's look at the first toggle button, which is the recording button. When this button's checked state changes, say from off to on, this code will first disable the play button and then call the onRecordPressed method.

The playback button does something similar. It first changes the enabled state of the recording button, disabling it if the user wants to start playback, or enabling it if the user wants to stop playback. After that, it calls the onPlayPressed method.

Let's look at the onRecordPressed method first. As you can see, this method takes a boolean parameter called shouldStartRecording. If shouldStartRecording is true, the code calls the startRecording method. Otherwise, it calls the stopRecording method.
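The enable/disable rules for the two toggle buttons, and the dispatch from onRecordPressed and onPlayPressed, can be modeled without Android. In the real app the buttons are ToggleButtons with a `CompoundButton.OnCheckedChangeListener`; in this hypothetical sketch they are plain objects, and the start/stop methods only append to a log instead of touching a recorder or player.

```java
// Hypothetical model of the two toggle buttons in the recording example.
// Button stands in for ToggleButton; the start/stop methods just log.
class RecordPlayToggles {
    static class Button {
        boolean checked;
        boolean enabled = true;
    }

    final Button record = new Button();
    final Button play = new Button();
    final StringBuilder log = new StringBuilder();

    // Recording button listener: playback is disabled while recording.
    void onRecordCheckedChanged(boolean isChecked) {
        record.checked = isChecked;
        play.enabled = !isChecked;
        onRecordPressed(isChecked);
    }

    // Playback button listener: recording is disabled while playing.
    void onPlayCheckedChanged(boolean isChecked) {
        play.checked = isChecked;
        record.enabled = !isChecked;
        onPlayPressed(isChecked);
    }

    void onRecordPressed(boolean shouldStartRecording) {
        log.append(shouldStartRecording ? "startRecording;" : "stopRecording;");
    }

    void onPlayPressed(boolean shouldStartPlaying) {
        log.append(shouldStartPlaying ? "startPlaying;" : "stopPlaying;");
    }

    public static void main(String[] args) {
        RecordPlayToggles ui = new RecordPlayToggles();
        ui.onRecordCheckedChanged(true);
        ui.onRecordCheckedChanged(false);
        ui.onPlayCheckedChanged(true);
        ui.onPlayCheckedChanged(false);
        System.out.println(ui.log); // startRecording;stopRecording;startPlaying;stopPlaying;
    }
}
```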

The startRecording method first creates a new MediaRecorder and then sets its audio source to the microphone. Then it sets the output format, then the output file where the recording will be saved, and then the encoder for the audio file. Continuing on, the code calls prepare to get the recorder ready, and then finally it calls the start method to begin recording.

The stopRecording method, in contrast, stops the media recorder and then releases its resources.
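The setup order used by startRecording matters: the output file and encoder can only be set after the output format, and all of them before prepare. This plain-Java class is a hypothetical model of that ordering, not `android.media.MediaRecorder`; the strings `"MIC"`, `"MPEG_4"`, and `"AAC"` only echo the names of the real Android constants, and the file path is made up.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of the MediaRecorder setup order; not the Android class.
class RecorderModel {
    enum State { INITIAL, SOURCE_SET, CONFIGURED, PREPARED, RECORDING, STOPPED, RELEASED }

    private State state = State.INITIAL;
    private String outputFile;
    private String encoder;
    final List<String> calls = new ArrayList<>();

    // Records the call only if the recorder is in the required state.
    private void expect(State required, String call) {
        if (state != required) {
            throw new IllegalStateException(call + " called in state " + state);
        }
        calls.add(call);
    }

    void setAudioSource(String source) {
        expect(State.INITIAL, "setAudioSource");
        state = State.SOURCE_SET;
    }

    void setOutputFormat(String format) {
        expect(State.SOURCE_SET, "setOutputFormat");
        state = State.CONFIGURED;
    }

    // Output file and encoder can only be set after the output format.
    void setOutputFile(String path) {
        expect(State.CONFIGURED, "setOutputFile");
        outputFile = path;
    }

    void setAudioEncoder(String audioEncoder) {
        expect(State.CONFIGURED, "setAudioEncoder");
        encoder = audioEncoder;
    }

    void prepare() {
        if (outputFile == null || encoder == null) {
            throw new IllegalStateException("output file and encoder must be set before prepare");
        }
        expect(State.CONFIGURED, "prepare");
        state = State.PREPARED;
    }

    void start() { expect(State.PREPARED, "start"); state = State.RECORDING; }

    void stop() { expect(State.RECORDING, "stop"); state = State.STOPPED; }

    // release() is accepted in any state in this model.
    void release() { calls.add("release"); state = State.RELEASED; }

    State state() { return state; }

    public static void main(String[] args) {
        RecorderModel r = new RecorderModel();
        r.setAudioSource("MIC");
        r.setOutputFormat("MPEG_4");
        r.setOutputFile("/tmp/recording.m4a"); // hypothetical path
        r.setAudioEncoder("AAC");
        r.prepare();
        r.start();
        r.stop();
        r.release();
        System.out.println(r.calls);
    }
}
```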

If the user had instead pressed the playback button, then onPlayPressed would have been called. If the button was checked, the parameter shouldStartPlaying would be true. If so, the startPlaying method is called; otherwise, the stopPlaying method is called.

The startPlaying method starts by creating a MediaPlayer. It follows up by setting its data source, then calling prepare on the media player, and then calling the start method. The stopPlaying method stops the media player and then releases the media player's resources.

The last class we'll talk about in this lesson is the Camera class. This class allows applications to access the camera service, the low-level code that manages the actual camera hardware on your device. Through this class, your application can manage settings for capturing images, and start and stop a preview function, which allows you to use the device's display as a kind of camera viewfinder. And most importantly, it allows you to take pictures and video.

To use the camera features, you'll need to set some permissions and features. You'll need at least the CAMERA permission, and you'll probably want to include a uses-feature tag in your AndroidManifest.xml file that specifies the need for a camera. You may also want to specify that your application requires other sub-features, such as autofocus or a flash.

Although you can easily use the built-in camera application to take pictures, you might want to add some features to a traditional camera application, or you might want to use the camera for other purposes. In that case, you can follow these steps.

First, you get a camera instance. Next, you can set any camera parameters that you might need. After that, you'll want to set up the preview display, so the user can see what the camera sees. Next, you'll start the preview, and you'll keep it running until the user takes a picture. Once the user takes a picture, your application will receive and process the picture image. And then eventually, your application will release the camera so that other applications can have access to it.
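The six steps above can be modeled as a small state check. This is a hypothetical plain-Java sketch, not `android.hardware.Camera` (a class that was later deprecated in favor of `android.hardware.camera2`); the method names mirror the real ones, but the parameter and surface arguments are placeholder strings. One detail it encodes from the real API: taking a picture stops the preview, so `startPreview()` has to be called again before the next shot.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of the camera usage steps; not android.hardware.Camera.
class CameraSessionModel {
    private boolean opened;
    private boolean hasSurface;
    private boolean previewing;
    final List<String> events = new ArrayList<>();

    // Step 1: get a camera instance (stands in for Camera.open()).
    static CameraSessionModel open() {
        CameraSessionModel c = new CameraSessionModel();
        c.opened = true;
        c.events.add("open");
        return c;
    }

    private void require(boolean ok, String message) {
        if (!ok) throw new IllegalStateException(message);
    }

    // Step 2: set camera parameters.
    void setParameters(String params) {
        require(opened, "camera is not open");
        events.add("setParameters " + params);
    }

    // Step 3: attach the surface that shows the preview.
    void setPreviewDisplay(String surface) {
        require(opened, "camera is not open");
        hasSurface = true;
        events.add("setPreviewDisplay");
    }

    // Step 4: start the preview; a preview surface is needed first.
    void startPreview() {
        require(opened && hasSurface, "no preview surface");
        previewing = true;
        events.add("startPreview");
    }

    // Step 5: take a picture; the preview stops and must be restarted.
    void takePicture() {
        require(previewing, "preview is not running");
        previewing = false;
        events.add("takePicture");
    }

    void stopPreview() {
        previewing = false;
        events.add("stopPreview");
    }

    // Step 6: release the camera so other applications can use it.
    void release() {
        opened = false;
        previewing = false;
        events.add("release");
    }

    boolean isPreviewing() { return previewing; }

    public static void main(String[] args) {
        CameraSessionModel camera = CameraSessionModel.open();
        camera.setParameters("previewSize=1280x720"); // hypothetical parameter string
        camera.setPreviewDisplay("surfaceHolder");
        camera.startPreview();
        camera.takePicture();
        camera.startPreview(); // restart after the picture
        camera.stopPreview();
        camera.release();
        System.out.println(camera.events);
    }
}
```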

The last example application for this lesson is called AudioVideoCamera. This application takes still photos and uses the device's display as the camera's viewfinder. Let's give it a try.

So here's my device, and now I'll start up the AudioVideoCamera application. As you can see, the application displays the image currently visible through the camera's lens, and if you move the camera, the image changes. If the user is satisfied with the image, then he or she can simply touch the screen to take a picture. When he or she does so, the camera will take the picture and then freeze the preview window for about two seconds, so the user can see the picture they just snapped.

Let me do that. I'll touch the display to snap the picture, and now the preview freezes for about two seconds. And now the camera is ready to take another photo.

Let's look at the source code for this application. Here's the AudioVideoCamera application open in the IDE. Now I'll open the main activity, and let's scroll down to the onCreate method.

One of the things we see here is that the code calls the getCamera method to get a reference to the camera object. Let's scroll down to that method.

This method calls the Camera class's open method, which returns a reference to the first back-facing camera on this device. If your device has several cameras, you can use other versions of the open method to get particular cameras.
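To open a particular camera, apps typically loop over the camera ids (using `Camera.getNumberOfCameras()` and `Camera.getCameraInfo(int, CameraInfo)`) and pass the chosen id to `Camera.open(int)`. The plain-Java sketch below shows only the selection loop; the `facings` array is a hypothetical stand-in for the `CameraInfo.facing` values a device would report, and the two constants copy the values of `CAMERA_FACING_BACK` and `CAMERA_FACING_FRONT`.

```java
// Hypothetical sketch of choosing a camera id by facing direction.
// The facings array stands in for the CameraInfo.facing value of each camera id.
class CameraPicker {
    // Same values as Camera.CameraInfo.CAMERA_FACING_BACK / CAMERA_FACING_FRONT.
    static final int FACING_BACK = 0;
    static final int FACING_FRONT = 1;

    // Returns the id of the first camera with the wanted facing, or -1 if none.
    static int findFirstCamera(int[] facings, int wanted) {
        for (int id = 0; id < facings.length; id++) {
            if (facings[id] == wanted) return id;
        }
        return -1;
    }

    public static void main(String[] args) {
        int[] facings = {FACING_BACK, FACING_FRONT}; // hypothetical two-camera device
        System.out.println(findFirstCamera(facings, FACING_FRONT)); // 1
    }
}
```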

Now, scrolling back up to onCreate, the code sets up a touch listener on the main view. When the user touches the screen, this listener's onTouch method will be called, and this method will call the camera's takePicture method to take a picture. We'll come back to this method in a few seconds.

Next, the code sets up a SurfaceView that is used to display the preview, which shows the user what the camera is currently seeing. These steps are just what we talked about in our previous lesson on graphics. First, the code gets the SurfaceHolder for the surface view, and then it adds a callback object to the surface holder. That callback object is defined below.

Let's scroll down to it.

As you remember, the SurfaceHolder.Callback interface defines three methods: surfaceCreated, surfaceChanged, and surfaceDestroyed.

The surfaceCreated method starts by setting the surface holder on which the camera will show its preview. After that, the code starts the camera's preview.

When the surface changes its structure or format, the surfaceChanged method is called. This method disables touches on the layout and then stops the camera preview. Next, the code changes the camera parameters. In this case, the code finds an appropriate size for the camera preview, sets the preview size, and then passes the updated parameters object back to the camera. Now that the parameters are set, the code restarts the preview by calling the startPreview method. Then, finally, the code re-enables touches on the layout.
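The lecture doesn't show how the "appropriate size" is found. One common heuristic, sketched here as an assumption rather than the example app's code, is to pick the supported preview size whose aspect ratio is closest to the surface's, breaking ties by how close its pixel count is. `Size` is a hypothetical stand-in for `Camera.Size`, and the sketch assumes a landscape surface, since the old Camera API reports preview sizes in landscape orientation.

```java
import java.util.List;

// Hypothetical preview-size heuristic; Size stands in for Camera.Size.
class PreviewSizePicker {
    static final class Size {
        final int width;
        final int height;
        Size(int width, int height) { this.width = width; this.height = height; }
        @Override public String toString() { return width + "x" + height; }
    }

    // Picks the size with the closest aspect ratio to the surface's,
    // breaking ties by the smallest difference in pixel count.
    static Size choose(List<Size> supported, int surfaceWidth, int surfaceHeight) {
        final double eps = 1e-9;
        double target = (double) surfaceWidth / surfaceHeight;
        long targetArea = (long) surfaceWidth * surfaceHeight;
        Size best = null;
        double bestRatioDiff = Double.MAX_VALUE;
        long bestAreaDiff = Long.MAX_VALUE;
        for (Size s : supported) {
            double ratioDiff = Math.abs((double) s.width / s.height - target);
            long areaDiff = Math.abs((long) s.width * s.height - targetArea);
            boolean closerRatio = ratioDiff < bestRatioDiff - eps;
            boolean sameRatioCloserArea =
                    Math.abs(ratioDiff - bestRatioDiff) <= eps && areaDiff < bestAreaDiff;
            if (closerRatio || sameRatioCloserArea) {
                best = s;
                bestRatioDiff = ratioDiff;
                bestAreaDiff = areaDiff;
            }
        }
        return best; // null if the list is empty
    }

    public static void main(String[] args) {
        List<Size> supported = List.of(new Size(640, 480), new Size(1280, 720), new Size(1920, 1080));
        System.out.println(choose(supported, 1200, 675)); // 1280x720
    }
}
```

Matching the aspect ratio first avoids a stretched preview; matching the pixel count second avoids choosing a much larger size than the surface can show.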

So now that we've gone over setting up and managing the preview display, let's go back and look at taking an actual picture. Scrolling back up to the OnTouchListener: when the user touches the display, the takePicture method gets called. In that method, the code passes in two callback objects. One is a ShutterCallback, and the other is a PictureCallback.

The ShutterCallback is called around the moment the picture is taken, basically to let the user know that the camera is taking a picture. The PictureCallback used here is called after the picture has been taken and the compressed image is available. When this happens, the PictureCallback's onPictureTaken method is called. In this example, the code simply sleeps for two seconds and then restarts the preview.

You might notice that this particular application doesn't actually save the image. But of course, you'd normally want to do that, and if so, you'd typically do it right here in this method.
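As a sketch of the saving step the example skips, the helper below writes the JPEG bytes that onPictureTaken receives to a file. It is a hypothetical plain-Java helper: the directory is a parameter (on Android it might come from something like `Context.getExternalFilesDir(Environment.DIRECTORY_PICTURES)`), and the `IMG_<timestamp>.jpg` naming scheme is an assumption. Because file I/O can be slow, real apps often do this work off the main thread.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;

// Hypothetical helper for persisting the bytes passed to onPictureTaken(byte[], Camera).
class PictureSaver {
    static Path save(byte[] jpegData, Path dir, long timestampMillis) throws IOException {
        Files.createDirectories(dir);                       // no-op if it already exists
        Path file = dir.resolve("IMG_" + timestampMillis + ".jpg");
        Files.write(file, jpegData);                        // creates or overwrites the file
        return file;
    }

    public static void main(String[] args) throws IOException {
        Path dir = Files.createTempDirectory("pictures");
        // Placeholder bytes (JPEG start and end markers only), not a real image.
        byte[] fake = {(byte) 0xFF, (byte) 0xD8, (byte) 0xFF, (byte) 0xD9};
        Path saved = save(fake, dir, System.currentTimeMillis());
        System.out.println(saved.getFileName() + " " + Files.size(saved) + " bytes");
    }
}
```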

The last method I'll talk about is onPause. Here the code disables touches on the display, shuts down the preview, and then releases the camera so that other applications can use it.

So that's all for our lesson on multimedia. Please join me next time, when we'll talk about sensors. Thanks.

Das könnte Ihnen auch gefallen

- Internet Trading - 01-07-202030-06-2021 - CMDokument11 SeitenInternet Trading - 01-07-202030-06-2021 - CMSaumith DahagamNoch keine Bewertungen

- NSCCL CM Ann MFDokument123 SeitenNSCCL CM Ann MFSaumith DahagamNoch keine Bewertungen

- Sr. No. Symbol Isin Security NameDokument54 SeitenSr. No. Symbol Isin Security NameSaumith DahagamNoch keine Bewertungen

- Doc2 of Stock Betas, For AnalysisDokument50 SeitenDoc2 of Stock Betas, For AnalysisSaumith DahagamNoch keine Bewertungen

- Doc1 of The Stock Correlation and RegressionDokument40 SeitenDoc1 of The Stock Correlation and RegressionSaumith DahagamNoch keine Bewertungen

- Doc4 of The Patterns of CandlestoicksDokument47 SeitenDoc4 of The Patterns of CandlestoicksSaumith DahagamNoch keine Bewertungen

- PHA F344Midsem Grading - DocxaaaaaDokument1 SeitePHA F344Midsem Grading - DocxaaaaaSaumith DahagamNoch keine Bewertungen

- LyricsaaaaaaaaaaaaaaaaaaaaaaaaaDokument2 SeitenLyricsaaaaaaaaaaaaaaaaaaaaaaaaaSaumith DahagamNoch keine Bewertungen

- 8 - 4 - The ContentProvider Class - Part 2 (11-55)Dokument5 Seiten8 - 4 - The ContentProvider Class - Part 2 (11-55)Saumith DahagamNoch keine Bewertungen

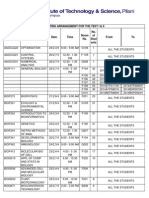

- Seating Arrangement For The Test I & Ii: Instruction DivisionDokument34 SeitenSeating Arrangement For The Test I & Ii: Instruction DivisionPranshu SharmaNoch keine Bewertungen

- IntroductionDokument15 SeitenIntroductionSaumith DahagamNoch keine Bewertungen

- Name: - ID: - : A) B) C) D)Dokument1 SeiteName: - ID: - : A) B) C) D)Saumith DahagamNoch keine Bewertungen

- CAT 2015 Study SchedulaaaaaaaaaaaaaaaaaaaaDokument5 SeitenCAT 2015 Study SchedulaaaaaaaaaaaaaaaaaaaaSaumith DahagamNoch keine Bewertungen

- Seating Arrangement II Sem 14-AaaaaaaaaaaaaaaaaaaaDokument17 SeitenSeating Arrangement II Sem 14-AaaaaaaaaaaaaaaaaaaaSaumith DahagamNoch keine Bewertungen

- Name: - ID: - : A) B) C) D)Dokument1 SeiteName: - ID: - : A) B) C) D)Saumith DahagamNoch keine Bewertungen

- ERP InstructionsDokument1 SeiteERP InstructionsSaumith DahagamNoch keine Bewertungen

- Calendar Holidays 2013-14Dokument1 SeiteCalendar Holidays 2013-14Saumith DahagamNoch keine Bewertungen

- 8 - 5 - The Service Class - Part 1 (10-19)Dokument5 Seiten8 - 5 - The Service Class - Part 1 (10-19)Saumith DahagamNoch keine Bewertungen

- User NotificationsaaaaaaaDokument20 SeitenUser NotificationsaaaaaaaSaumith DahagamNoch keine Bewertungen

- 8 - 2 - Data Management - Partaaaaaaaaaaa 2 (13-43)Dokument6 Seiten8 - 2 - Data Management - Partaaaaaaaaaaa 2 (13-43)Saumith DahagamNoch keine Bewertungen

- 8 - 5 - The Service Class - Part 1 (10-19)Dokument5 Seiten8 - 5 - The Service Class - Part 1 (10-19)Saumith DahagamNoch keine Bewertungen

- 1 - 1 - Introduction To The Android Platform (18-19)Dokument9 Seiten1 - 1 - Introduction To The Android Platform (18-19)Saumith DahagamNoch keine Bewertungen

- 8 - 3 - The ContentProvider Class - Part 1 (15-24)Dokument7 Seiten8 - 3 - The ContentProvider Class - Part 1 (15-24)Saumith DahagamNoch keine Bewertungen

- 6 - 4 - Touch and Gestures - Part 1 (15-48)Dokument7 Seiten6 - 4 - Touch and Gestures - Part 1 (15-48)Saumith DahagamNoch keine Bewertungen

- 6 - 2 - Graphics and Animation - Part 2 (12-26)Dokument5 Seiten6 - 2 - Graphics and Animation - Part 2 (12-26)Saumith DahagamNoch keine Bewertungen

- 8 - 1 - Data Management - Part 1 (15-57)Dokument7 Seiten8 - 1 - Data Management - Part 1 (15-57)Saumith DahagamNoch keine Bewertungen

- 7 - 2 - Sensors - Part 2 (11-40)Dokument5 Seiten7 - 2 - Sensors - Part 2 (11-40)Saumith DahagamNoch keine Bewertungen

- 7 - 1 - Sensors - Part 1 (11-06)Dokument5 Seiten7 - 1 - Sensors - Part 1 (11-06)Saumith DahagamNoch keine Bewertungen

- 6 - 3 - Graphics and Animation - Part 3 (20-42)Dokument8 Seiten6 - 3 - Graphics and Animation - Part 3 (20-42)Saumith DahagamNoch keine Bewertungen

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5794)

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (895)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (400)

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (588)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (74)

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (266)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (344)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2259)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (121)

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

- SimulatorTiketi 19.3.2022Dokument6 SeitenSimulatorTiketi 19.3.2022Djordje StankovicNoch keine Bewertungen

- The Game of The GeneralsDokument11 SeitenThe Game of The GeneralsBeverly Galutan AgulayNoch keine Bewertungen

- An Overview of Transceiver SystemsDokument11 SeitenAn Overview of Transceiver SystemssurvivalofthepolyNoch keine Bewertungen

- Score Study Analysis-MarkingDokument2 SeitenScore Study Analysis-Markingapi-330821764Noch keine Bewertungen

- Rizal's Grand Tour of Europe With Maximo ViolaDokument16 SeitenRizal's Grand Tour of Europe With Maximo ViolaRuzzell Rye BartolomeNoch keine Bewertungen

- CX500 ProductsheetDokument2 SeitenCX500 Productsheetgtmx 14Noch keine Bewertungen

- Dynamics of Mass Communication Media in Transition 12th Edition Dominick Test Bank 1Dokument16 SeitenDynamics of Mass Communication Media in Transition 12th Edition Dominick Test Bank 1lois100% (41)

- eBasisInfo A3 050312Dokument17 SeiteneBasisInfo A3 050312rcpuram01Noch keine Bewertungen

- 3.17) Refer To Exercise 3.7. Find The Mean and Standard Deviation For Y The Number of EmptyDokument2 Seiten3.17) Refer To Exercise 3.7. Find The Mean and Standard Deviation For Y The Number of EmptyVirlaNoch keine Bewertungen

- Utility - Drivers - Mini Button Camera S918 (Manual)Dokument4 SeitenUtility - Drivers - Mini Button Camera S918 (Manual)ssudheerdachapalliNoch keine Bewertungen

- Auld Lang SyneDokument3 SeitenAuld Lang Synesylvester_handojoNoch keine Bewertungen

- Q1 2019 Price Pages - Excavators PDFDokument252 SeitenQ1 2019 Price Pages - Excavators PDFvitaliyNoch keine Bewertungen

- Rural Marketing Rural Marketing: - A Paradigm Shift in Indian MarketingDokument19 SeitenRural Marketing Rural Marketing: - A Paradigm Shift in Indian MarketingGowtham Reloaded DNoch keine Bewertungen

- VOLLEYBALLDokument69 SeitenVOLLEYBALLElen Grace ReusoraNoch keine Bewertungen

- Strategic Management: AssignmentDokument3 SeitenStrategic Management: AssignmentGarimaNoch keine Bewertungen

- Luigi Nono S Late Period States in DecayDokument37 SeitenLuigi Nono S Late Period States in DecayAlen IlijicNoch keine Bewertungen

- 101 Onomatopoeic SoundsDokument4 Seiten101 Onomatopoeic SoundsErick EspirituNoch keine Bewertungen

- CPAR 2nd ExamDokument2 SeitenCPAR 2nd ExamAlexander ArranguezNoch keine Bewertungen

- Datapage Top-Players2 MinnisotaDokument5 SeitenDatapage Top-Players2 Minnisotadarkrain777Noch keine Bewertungen

- How To Install Subversion-1.3.2-2Dokument3 SeitenHow To Install Subversion-1.3.2-2Sharjeel SayedNoch keine Bewertungen

- Sharp Ar 235 275 Sim CodesDokument98 SeitenSharp Ar 235 275 Sim CodesBansi Khetwani0% (1)

- Hasselblad 500CMDokument29 SeitenHasselblad 500CMcleansweeper100% (1)

- LongaDokument3 SeitenLongaMosses Emmanuel A. MirandaNoch keine Bewertungen

- Korean Cinema 2019Dokument138 SeitenKorean Cinema 2019UDNoch keine Bewertungen

- Curse (Aka Luisa's Curse)Dokument9 SeitenCurse (Aka Luisa's Curse)Michael MerlinNoch keine Bewertungen

- Colossus March 2019 PDFDokument76 SeitenColossus March 2019 PDFMarmik ShahNoch keine Bewertungen

- Service Manual ILO 32Dokument28 SeitenService Manual ILO 32ca_otiNoch keine Bewertungen

- Pre School Holidays HomeworkDokument17 SeitenPre School Holidays HomeworkSalonee ChadhaNoch keine Bewertungen

- Emergency DescentDokument5 SeitenEmergency Descentpraveenpillai83Noch keine Bewertungen

- 20 Century Music To Other Art Forms and Media: MAPEH (Music) First Quarter - Week 2Dokument18 Seiten20 Century Music To Other Art Forms and Media: MAPEH (Music) First Quarter - Week 2ro geNoch keine Bewertungen