
DataAn-Nordan

Textura Realităţii

Uploaded by Sorin Vanatoru


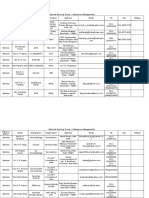

Data Analytics Technical Details with references to R packages

I. What we have and know how to use:

1. Clustering: cluster (shipped with base R), kmeans, dbscan, hierarchical clustering.
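To make the clustering entry concrete, here is a minimal sketch of Lloyd's k-means algorithm, one of the algorithms R's kmeans() offers (its default is Hartigan-Wong). It is written in plain Python so it runs without R. The function names and the deterministic farthest-point initialisation are illustrative assumptions; kmeans() itself starts from randomly chosen rows of the data.

```python
import math


def _nearest(p, centers):
    """Index of the centre closest to point p (Euclidean distance)."""
    return min(range(len(centers)), key=lambda j: math.dist(p, centers[j]))


def kmeans(points, k, n_iter=100):
    """Lloyd's k-means with a deterministic farthest-point initialisation."""
    # Initialisation (an assumption for reproducibility): start from the first
    # point, then repeatedly add the point farthest from all chosen centres.
    centers = [tuple(points[0])]
    while len(centers) < k:
        far = max(points, key=lambda p: min(math.dist(p, c) for c in centers))
        centers.append(tuple(far))
    for _ in range(n_iter):
        # Assignment step: attach every point to its nearest centre.
        labels = [_nearest(p, centers) for p in points]
        # Update step: move each centre to the mean of its member points.
        new_centers = []
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:
                new_centers.append(tuple(sum(xs) / len(members) for xs in zip(*members)))
            else:
                new_centers.append(centers[j])  # an empty cluster keeps its centre
        if new_centers == centers:
            break  # converged: another pass would change nothing
        centers = new_centers
    # Final assignment so the labels always match the returned centres.
    labels = [_nearest(p, centers) for p in points]
    return centers, labels
```

With two well-separated groups of points, the algorithm places one centre in each group after a single update.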

2. Association Rules: Package arules provides both data structures for efficient handling of sparse binary data and interfaces to implementations of Apriori and Eclat for mining frequent itemsets, maximal frequent itemsets, closed frequent itemsets, and association rules.
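The level-wise search behind Apriori works like this. Frequent k-itemsets are joined into (k+1)-candidates. Any candidate with an infrequent subset is pruned, and the survivors are counted against the data. The Python sketch below illustrates only that idea. The function names and toy baskets are hypothetical, and arules itself exposes this through its interfaces to dedicated implementations.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Level-wise Apriori search; returns {itemset: support} for frequent itemsets."""
    n = len(transactions)
    baskets = [frozenset(t) for t in transactions]
    # Level 1: the candidates are the single items.
    candidates = {frozenset([item]) for basket in baskets for item in basket}
    frequent = {}
    k = 1
    while candidates:
        # Support = fraction of transactions that contain the candidate itemset.
        support = {c: sum(c <= basket for basket in baskets) / n for c in candidates}
        level = {c: s for c, s in support.items() if s >= min_support}
        frequent.update(level)
        k += 1
        # Join step: unite frequent (k-1)-itemsets into k-itemsets, then prune any
        # candidate that has an infrequent (k-1)-subset (the Apriori property).
        prev = list(level)
        candidates = {a | b for a in prev for b in prev if len(a | b) == k}
        candidates = {c for c in candidates
                      if all(frozenset(s) in level for s in combinations(c, k - 1))}
    return frequent


def confidence(frequent, lhs, rhs):
    """conf(lhs => rhs) = supp(lhs | rhs) / supp(lhs)."""
    lhs, rhs = frozenset(lhs), frozenset(rhs)
    return frequent[lhs | rhs] / frequent[lhs]
```

For example, with the baskets {bread, milk}, {bread, butter}, {bread, milk, butter} and {milk}, and min_support = 0.5, the rule milk => bread has confidence 0.5 / 0.75 = 2/3.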

II. What we can do (tested with hypothetical data):

3. Recursive Partitioning: Tree-structured models for regression, classification and survival analysis, following the ideas in the CART book, are implemented in rpart (shipped with base R) and tree. Package rpart is recommended for computing CART-like trees. A rich toolbox of partitioning algorithms is available in Weka; package RWeka provides an interface to this implementation, including the J4.8 variant of C4.5 and M5. The Cubist package fits rule-based models (similar to trees) with linear regression models in the terminal leaves, instance-based corrections and boosting. The C50 package can fit C5.0 classification trees, rule-based models, and boosted versions of these. Two recursive partitioning algorithms with unbiased variable selection and a statistical stopping criterion are implemented in package party. Function ctree() is based on non-parametric conditional inference procedures for testing independence between the response and each input variable, whereas mob() can be used to partition parametric models. Extensible tools for visualizing binary trees and node distributions of the response are available in package party as well. An adaptation of rpart for multivariate responses is available in package mvpart. For problems with binary input variables, the package LogicReg implements logic regression. Graphical tools for the visualization of trees are available in package maptree. An approach to deal with the instability problem via extra splits is available in package TWIX. Trees for modelling longitudinal data by means of random effects are offered by packages REEMtree and longRPart. Partitioning of mixture models is performed by RPMM. Computational infrastructure for representing trees and unified methods for prediction and visualization is implemented in partykit. This infrastructure is used by package evtree to implement evolutionary learning of globally optimal trees. Oblique trees are available in package oblique.tree.
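The core step of CART-style recursive partitioning is choosing the split that most reduces node impurity. For classification, rpart uses the Gini index by default. Below is a minimal Python sketch of that single step for one numeric feature; the names are illustrative. A real tree repeats this over all features, recurses into the child nodes and then prunes.

```python
from collections import Counter


def gini(labels):
    """Gini impurity 1 - sum_k p_k^2 of a list of class labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def best_split(x, y):
    """Best threshold on one numeric feature by weighted Gini impurity.

    Returns (threshold, weighted_impurity); points with x <= threshold go left.
    The threshold is None if no split improves on the parent node.
    """
    pairs = sorted(zip(x, y))
    n = len(pairs)
    best = (None, gini(y))  # baseline: impurity of the unsplit parent node
    for i in range(1, n):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # identical feature values cannot be separated
        left = [lab for _, lab in pairs[:i]]
        right = [lab for _, lab in pairs[i:]]
        impurity = (len(left) * gini(left) + len(right) * gini(right)) / n
        if impurity < best[1]:
            threshold = (pairs[i - 1][0] + pairs[i][0]) / 2  # midpoint between neighbours
            best = (threshold, impurity)
    return best
```

For x = 1..6 with labels a, a, a, b, b, b, the best split is x <= 3.5, which leaves two pure nodes (impurity 0).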

4. Neural Networks: Single-hidden-layer neural networks are implemented in package nnet (shipped with base R). Package RSNNS offers an interface to the Stuttgart Neural Network Simulator (SNNS).
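A single-hidden-layer network, like those fitted by nnet, computes logistic hidden units from weighted inputs and combines them in an output unit. The Python sketch below shows only the forward pass. Its weights are hand-picked so that the network computes XOR; they are hypothetical and not trained. nnet instead estimates the weights from data by numerical optimisation.

```python
import math


def sigmoid(z):
    """Logistic activation function."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, b_hidden, w_out, b_out):
    """Single-hidden-layer network: logistic hidden units and a logistic output unit."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(w_hidden, b_hidden)]
    return sigmoid(sum(w * h for w, h in zip(w_out, hidden)) + b_out)


# Hand-picked (not trained) weights: hidden unit 1 acts like OR, hidden unit 2
# like NAND, and the output unit like AND of the two, which together give XOR.
W_HIDDEN = [[20.0, 20.0], [-20.0, -20.0]]
B_HIDDEN = [-10.0, 30.0]
W_OUT = [20.0, 20.0]
B_OUT = -30.0
```

XOR is the classic example of a function that no single-layer network can represent, which is why the hidden layer matters here.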

5. Optimization using Genetic Algorithms: Packages rgp and rgenoud offer optimization routines based on genetic algorithms.
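To illustrate the genetic-algorithm idea (not the rgp or rgenoud interfaces), here is a small real-valued GA in Python. It uses tournament selection, blend crossover, Gaussian mutation and elitism. All names and parameter values are assumptions chosen for the example.

```python
import random


def genetic_maximize(f, lower, upper, pop_size=40, generations=100,
                     mutation_sd=0.3, seed=1):
    """Maximise f on [lower, upper] with a simple real-valued genetic algorithm."""
    rng = random.Random(seed)
    pop = [rng.uniform(lower, upper) for _ in range(pop_size)]

    def tournament():
        # Tournament selection: the fitter of two random individuals wins.
        a, b = rng.choice(pop), rng.choice(pop)
        return a if f(a) >= f(b) else b

    for _ in range(generations):
        elite = max(pop, key=f)  # elitism: the best individual always survives
        children = [elite]
        while len(children) < pop_size:
            p1, p2 = tournament(), tournament()
            w = rng.random()
            child = w * p1 + (1 - w) * p2          # arithmetic (blend) crossover
            child += rng.gauss(0.0, mutation_sd)   # Gaussian mutation
            children.append(min(max(child, lower), upper))  # clip to the bounds
        pop = children
    return max(pop, key=f)
```

Maximising -(x - 3)^2 on [-10, 10] returns a value close to 3. Elitism makes the best fitness non-decreasing from one generation to the next.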

III. What we can do in the future (learning and testing in progress):

6. Regularized and Shrinkage Methods: Regression models with some constraint on the parameter estimates can be fitted with the lasso2 and lars packages. Lasso with simultaneous updates for groups of parameters (groupwise lasso) is available in package grplasso; the grpreg package implements a number of other group penalization models, such as group MCP and group SCAD. The L1 regularization path for generalized linear models and Cox models can be obtained from functions available in package glmpath; the entire lasso or elastic-net regularization path (also in elasticnet) for linear regression, logistic and multinomial regression models can be obtained from package glmnet. The penalized package provides an alternative implementation of lasso (L1) and ridge (L2) penalized regression models (both GLM and Cox models). Semiparametric additive hazards models under lasso penalties are offered by package ahaz. A generalisation of the Lasso shrinkage technique for linear regression is called relaxed lasso and is available in package relaxo. The shrunken centroids classifier and utilities for gene expression analyses are implemented in package pamr. An implementation of multivariate adaptive regression splines is available in package earth. Variable selection through clone selection in SVMs in penalized models (SCAD or L1 penalties) is implemented in package penalizedSVM. Various forms of penalized discriminant analysis are implemented in packages hda, rda, sda, and SDDA. Package LiblineaR offers an interface to the LIBLINEAR library. The ncvreg package fits linear and logistic regression models under the SCAD and MCP regression penalties using a coordinate descent algorithm.
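Coordinate descent, named above for ncvreg, is also the approach glmnet uses. It cycles through the coefficients and updates each one with a soft-thresholding step. Here is a minimal Python sketch for the plain lasso. It assumes centred data, no intercept, and the objective (1/(2n))·RSS + λ·Σ|b_j|, which is glmnet's Gaussian lasso objective. The names and defaults are illustrative.

```python
def soft_threshold(z, gamma):
    """S(z, gamma) = sign(z) * max(|z| - gamma, 0)."""
    if z > gamma:
        return z - gamma
    if z < -gamma:
        return z + gamma
    return 0.0


def lasso_cd(X, y, lam, n_iter=200):
    """Lasso by cyclic coordinate descent (no intercept; assumes centred data).

    Minimises (1 / (2n)) * ||y - X b||^2 + lam * ||b||_1, where X is a list of
    rows and y a list of responses.
    """
    n, p = len(X), len(X[0])
    b = [0.0] * p
    for _ in range(n_iter):
        for j in range(p):
            # Partial residual: remove every predictor's contribution except x_j.
            r = [y[i] - sum(X[i][k] * b[k] for k in range(p) if k != j) for i in range(n)]
            rho = sum(X[i][j] * r[i] for i in range(n)) / n
            z = sum(X[i][j] ** 2 for i in range(n)) / n
            b[j] = soft_threshold(rho, lam) / z  # exact minimiser along coordinate j
    return b
```

With an orthogonal design, λ = 0 reproduces least squares. Each coefficient is shrunk toward zero as λ grows, and it is set exactly to zero once λ is large enough.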

7. Boosting: Various forms of gradient boosting are implemented in package gbm (tree-based functional gradient descent boosting). The Hinge-loss is optimized by the boosting implementation in package bst. Package GAMBoost can be used to fit generalized additive models by a boosting algorithm. An extensible boosting framework for generalized linear, additive and nonparametric models is available in package mboost. Likelihood-based boosting for Cox models is implemented in CoxBoost and for mixed models in GMMBoost. GAMLSS models can be fitted using boosting by gamboostLSS.
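For squared-error loss, the negative gradient is just the residual. Gradient boosting therefore reduces to repeatedly fitting a small tree to the current residuals and adding a shrunken copy of it to the model. This Python sketch uses one-split stumps on a single feature. gbm and mboost support larger trees, other base learners and many more options; the names here are hypothetical.

```python
def fit_stump(x, r):
    """Least-squares regression stump on one numeric feature.

    Returns (threshold, left_mean, right_mean); needs at least two distinct x values.
    """
    pairs = sorted(zip(x, r))
    best = None
    for i in range(1, len(pairs)):
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # identical feature values cannot be separated
        left = [v for _, v in pairs[:i]]
        right = [v for _, v in pairs[i:]]
        lm, rm = sum(left) / len(left), sum(right) / len(right)
        sse = sum((v - lm) ** 2 for v in left) + sum((v - rm) ** 2 for v in right)
        if best is None or sse < best[0]:
            best = (sse, (pairs[i - 1][0] + pairs[i][0]) / 2, lm, rm)
    _, threshold, lm, rm = best
    return threshold, lm, rm


def boost(x, y, n_rounds=100, learning_rate=0.1):
    """L2 gradient boosting: each stump is fit to the current residuals,
    which are the negative gradient of the squared-error loss."""
    f0 = sum(y) / len(y)  # start from the constant (mean) model
    pred = [f0] * len(y)
    stumps = []
    for _ in range(n_rounds):
        residuals = [yi - pi for yi, pi in zip(y, pred)]
        t, lm, rm = fit_stump(x, residuals)
        stumps.append((t, lm, rm))
        # Shrunken update: take a small step in the direction of the new stump.
        pred = [pi + learning_rate * (lm if xi <= t else rm) for xi, pi in zip(x, pred)]
    return f0, learning_rate, stumps


def predict_boosted(model, xi):
    """Sum of the initial constant and all shrunken stump contributions."""
    f0, learning_rate, stumps = model
    return f0 + sum(learning_rate * (lm if xi <= t else rm) for t, lm, rm in stumps)
```

The learning rate plays the role of gbm's shrinkage: smaller steps usually need more rounds but tend to generalise better.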

8. Support Vector Machines and Kernel Methods: The function svm() from e1071 offers an interface to the LIBSVM library, and package kernlab implements a flexible framework for kernel learning (including SVMs, RVMs and other kernel learning algorithms). An interface to the SVMlight implementation (only for one-against-all classification) is provided in package klaR. The relevant dimension in kernel feature spaces can be estimated using rdetools, which also offers procedures for model selection and prediction.
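A soft-margin linear SVM, the model behind the svm() interface, can be written as minimising λ/2·‖w‖² plus the average hinge loss. This has the same minimiser as LIBSVM's C-formulation when C = 1/(nλ). The Python sketch below uses plain full-batch subgradient descent rather than LIBSVM's SMO-type solver. The step size, number of epochs and function names are assumptions for the example.

```python
def train_linear_svm(X, y, lam=0.01, lr=0.01, epochs=1000):
    """Soft-margin linear SVM by full-batch subgradient descent.

    Minimises lam/2 * ||w||^2 + (1/n) * sum_i max(0, 1 - y_i * (w.x_i + b)),
    with labels y_i in {-1, +1}; the bias b is not penalised.
    """
    n, p = len(X), len(X[0])
    w, b = [0.0] * p, 0.0
    for _ in range(epochs):
        grad_w = [lam * wj for wj in w]  # gradient of the L2 penalty
        grad_b = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # hinge loss active: inside the margin or misclassified
                for j in range(p):
                    grad_w[j] -= yi * xi[j] / n
                grad_b -= yi / n
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b


def svm_predict(w, b, x):
    """Class label from the sign of the decision function w.x + b."""
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1
```

A kernel SVM, as in kernlab or LIBSVM with a radial kernel, replaces the inner product w·x with kernel evaluations and is normally solved in the dual rather than by this primal sketch.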

9. Bayesian Methods: Bayesian Additive Regression Trees (BART), where the final model is defined in terms of the sum over many weak learners (not unlike ensemble methods), are implemented in package BayesTree. Bayesian nonstationary, semiparametric nonlinear regression and design by treed Gaussian processes, including Bayesian CART and treed linear models, are made available by package tgp.

10. Model selection and validation: Package e1071 has function tune() for hyperparameter tuning, and function errorest() (ipred) can be used for error rate estimation. The cost parameter C for support vector machines can be chosen utilizing the functionality of package svmpath. Functions for ROC analysis and other visualisation techniques for comparing candidate classifiers are available from package ROCR. Package caret provides miscellaneous functions for building predictive models, including parameter tuning and variable importance measures. The package can be used with various parallel implementations (e.g. MPI, NWS, etc.).
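The idea behind cross-validated error estimates, such as those from errorest() or the resampling in tune() and caret, fits in a few lines of Python. Each fold is held out once, the model is fitted on the remaining folds, and the misclassification rate over all held-out predictions is reported. The round-robin fold assignment, the function names and the 1-nearest-neighbour example model are assumptions for illustration.

```python
import math


def k_fold_cv_error(X, y, fit, predict, k=5):
    """Misclassification error estimated by k-fold cross-validation.

    fit(X_train, y_train) -> model and predict(model, x) -> label are supplied
    by the caller. Folds are assigned round-robin: observation i goes to fold i % k.
    """
    n = len(X)
    errors = 0
    for fold in range(k):
        train = [i for i in range(n) if i % k != fold]
        test = [i for i in range(n) if i % k == fold]
        model = fit([X[i] for i in train], [y[i] for i in train])
        errors += sum(predict(model, X[i]) != y[i] for i in test)
    return errors / n  # every observation is predicted exactly once


# Hypothetical example model: 1-nearest-neighbour classification.
def fit_1nn(X, y):
    return list(zip(X, y))  # 1-NN simply memorises the training set


def predict_1nn(model, x):
    _, label = min(model, key=lambda pair: math.dist(pair[0], x))
    return label
```

Setting k equal to the number of observations gives leave-one-out cross-validation. In practice the folds are usually assigned at random rather than round-robin.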

11. Elements of Statistical Learning: Data sets, functions and examples from the book The Elements of Statistical Learning: Data Mining, Inference, and Prediction by Trevor Hastie, Robert Tibshirani and Jerome Friedman have been packaged and are available as ElemStatLearn.
