
Theorist's Toolkit Lecture 11: LP-relaxations of NP-hard Problems


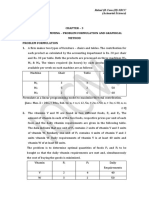

Princeton University F02, COS 597D: A Theorist's Toolkit

Lecture 11: Relaxations for NP-hard Optimization Problems

Lecturer: Sanjeev Arora    Scribe: Manoj M P

1 Introduction

In this lecture (and the next one) we will look at methods of finding approximate solutions to NP-hard optimization problems. We concentrate on algorithms derived as follows: the problem is formulated as an Integer Linear Programming (or integer semidefinite programming, in the next lecture) problem; then it is relaxed to an ordinary Linear Programming problem (or semidefinite programming problem, respectively) and solved. This solution may be fractional and thus not correspond to a solution to the original problem. After somehow modifying it (rounding) we obtain a solution. We then show that the solution thus obtained is approximately optimum.

The first main example is the Leighton-Rao approximation for Sparsest Cut in a graph. Then we switch gears to look at Lift and Project methods for designing LP relaxations. These yield a hierarchy of relaxations, with potentially a trade-off between running time and approximation ratio. Understanding this trade-off remains an important open problem.

2 Vertex Cover

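We start off by introducing the LP relaxation methodology using a simple example. The Vertex Cover problem involves a graph $G = (V, E)$, and the aim is to find the smallest $S \subseteq V$ such that $\forall \{i, j\} \in E$, $i \in S$ or $j \in S$.

An Integer Linear Programming (ILP) formulation for this problem is as follows:

$$
\begin{aligned}
x_i &\in \{0, 1\} && \text{for each } i \in V \\
x_i + x_j &\ge 1 && \text{for each } \{i, j\} \in E \\
\text{Minimize } & \sum_{i \in V} x_i
\end{aligned}
$$

The LP relaxation replaces the integrality constraints with

$$0 \le x_i \le 1 \quad \text{for each } i \in V.$$

If $\mathrm{opt}$ is the optimum value of the ILP (i.e., the exact solution to the vertex cover problem), and $\mathrm{opt}_f$ the optimum value of the LP relaxation ($f$ indicates that this solution involves fractional values for $x_i$), then clearly $\mathrm{opt} \ge \mathrm{opt}_f$. Now we show that $\mathrm{opt} \le 2 \cdot \mathrm{opt}_f$. Consider the following rounding algorithm, which converts a feasible solution to the LP to a feasible solution to the ILP and increases the objective function by at most a factor of 2: if $x_i < \frac{1}{2}$, set $x'_i = 0$, else set $x'_i = 1$, where $\{x_i\}$ is the solution to the LP and $\{x'_i\}$ a solution to the ILP. The rounded solution is feasible because each edge constraint $x_i + x_j \ge 1$ forces at least one endpoint to have value at least $\frac{1}{2}$, and $x'_i \le 2 x_i$ for every $i$, so the objective at most doubles.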
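The rounding step can be sketched in a few lines of Python. This is only an illustration: the path graph, the hand-written fractional point `x`, and the helper names (`round_vertex_cover`, `is_lp_feasible`, `is_cover`) are assumptions made for the example, and no LP solver is involved.

```python
from fractions import Fraction

def round_vertex_cover(x):
    """Threshold rounding: x maps each vertex to its LP value in [0, 1]."""
    return {v: 1 if val >= Fraction(1, 2) else 0 for v, val in x.items()}

def is_lp_feasible(edges, x):
    """Check the relaxed constraints 0 <= x_i <= 1 and x_i + x_j >= 1."""
    in_box = all(0 <= val <= 1 for val in x.values())
    return in_box and all(x[i] + x[j] >= 1 for i, j in edges)

def is_cover(edges, x_int):
    """Check that the 0/1 vector x_int covers every edge."""
    return all(x_int[i] + x_int[j] >= 1 for i, j in edges)

# Hypothetical instance: the path 0-1-2-3 with a feasible fractional point.
edges = [(0, 1), (1, 2), (2, 3)]
x = {0: Fraction(1, 4), 1: Fraction(3, 4), 2: Fraction(1, 2), 3: Fraction(1, 2)}

rounded = round_vertex_cover(x)
lp_value = sum(x.values())         # objective of the fractional point
ilp_value = sum(rounded.values())  # objective after rounding
```

Here the fractional point has value 2 and the rounded cover has size 3, within the promised factor of 2.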

We observe that the factor of 2 above is fairly tight. This is illustrated by the problem on a clique $K_n$, where $\mathrm{opt} = n - 1$ and $\mathrm{opt}_f = n/2$ (by taking $x_i = \frac{1}{2}$ for all $i$).

What we have shown is that the integrality gap satisfies $\mathrm{opt}/\mathrm{opt}_f \le 2$, and that this is tight. Note that if the integrality gap of the relaxation is $\alpha$ then solving the LP gives an $\alpha$-approximation to the optimum value (in this case, the size of the smallest vertex cover). Usually we want more from our algorithms, namely, an actual solution (in this case, a vertex cover). However, known techniques for upper-bounding the integrality gap tend to be algorithmic in nature and yield the solution as well (though conceivably this may not always hold).

To sum up, the smaller the integrality gap (i.e., the tighter the relaxation), the better.
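The clique example can be checked by brute force for a small $n$. The sketch below assumes $n = 6$ and uses hypothetical helper names; it only verifies that the all-halves point is LP-feasible (so $\mathrm{opt}_f \le n/2$) rather than solving the LP.

```python
from itertools import combinations

def min_vertex_cover_size(n, edges):
    """Exhaustive search for the smallest vertex cover (small n only)."""
    for k in range(n + 1):
        for subset in combinations(range(n), k):
            chosen = set(subset)
            if all(i in chosen or j in chosen for i, j in edges):
                return k
    return n

n = 6
edges = list(combinations(range(n), 2))   # the clique K_n
opt = min_vertex_cover_size(n, edges)     # integral optimum

half = [0.5] * n                          # candidate fractional solution
half_is_feasible = all(half[i] + half[j] >= 1 for i, j in edges)
opt_f_upper = sum(half)                   # upper bound n/2 on opt_f
gap_lower_bound = opt / opt_f_upper       # lower bound on opt / opt_f
```

For $n = 6$ the ratio is already $5/3$, and in general $(n-1)/(n/2) = 2 - 2/n$ approaches 2 as $n$ grows.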

3 Sparsest Cut

Our next example is the Graph Expansion, or Sparsest-Cut, problem. Given a graph $G = (V, E)$ define

$$\beta_G = \min_{S \subseteq V} \frac{E(S, \bar{S})}{\min\{|S|, |\bar{S}|\}} = \min_{S \subseteq V : |S| \le \frac{|V|}{2}} \frac{E(S, \bar{S})}{|S|}$$

where $E(S, \bar{S})$ is the number of edges between $S$ and $\bar{S}$.¹ The Expansion problem is to find a cut $S$ which realizes $\beta_G$.

LP formulation

Leighton and Rao (1988) showed how to approximate $\beta_G$ within a factor of $O(\log n)$ using an LP relaxation method. The ILP formulation is in terms of the cut-metric induced by a cut.

Definition 1 The cut-metric on $V$, induced by a cut $(S, \bar{S})$, is defined as follows: $\delta_S(i, j) = 0$ if $i, j \in S$ or $i, j \in \bar{S}$, and $\delta_S(i, j) = 1$ otherwise.

Note that this metric satisfies the triangle inequality (for three points $i, j, k$, all three pairwise distances are 0, or two of them are 1 and the other 0; in either case the triangle inequality is satisfied).
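As a small sanity check, the cut-metric and its triangle inequality can be verified exhaustively on a toy vertex set. The vertex set, the cut $\{0, 2\}$, and the function name `cut_metric` are assumptions chosen for illustration.

```python
from itertools import permutations

def cut_metric(S):
    """Return delta_S: 0 if i and j are on the same side of the cut, else 1."""
    S = frozenset(S)
    def delta(i, j):
        return 0 if (i in S) == (j in S) else 1
    return delta

V = range(5)
delta = cut_metric({0, 2})   # hypothetical cut S = {0, 2}

# Triangle inequality over all ordered triples of distinct vertices.
triangle_ok = all(
    delta(i, j) + delta(j, k) >= delta(i, k)
    for i, j, k in permutations(V, 3)
)

# Summing over unordered pairs counts exactly the separated pairs.
pair_sum = sum(delta(i, j) for i in V for j in V if i < j)
```

The pair sum equals $|S||\bar{S}| = 2 \cdot 3 = 6$, since each pair with one endpoint on either side contributes 1.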

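ILP formulation and Relaxation: It will be convenient to work with

$$\beta'_G = \min_{S \subseteq V : |S| \le \frac{|V|}{2}} \frac{E(S, \bar{S})}{|S||\bar{S}|}$$

Since $\frac{n}{2} \le |\bar{S}| \le n$, we have $\frac{n}{2} \beta'_G \le \beta_G \le n \beta'_G$, and the factor 2 here will end up being absorbed in the $O(\log n)$.

Below, the variables $x_{ij}$ for $i, j \in V$ are intended to represent the distances $\delta_S(i, j)$ induced by the cut-metric. Then we have $\sum_{i<j} x_{ij} = |S||\bar{S}|$. Consider the following ILP, which fixes $|S||\bar{S}|$ to a value $t$:

$$
\begin{aligned}
\sum_{i<j} x_{ij} &= 1 && \text{(scaling by } 1/t\text{)} \\
\forall i, j \quad x_{ij} &= x_{ji} \\
\forall i, j, k \quad x_{ij} + x_{jk} &\ge x_{ik} \\
\forall i, j \quad x_{ij} &\in \{0, 1/t\} && \text{(integrality constraint)} \\
\text{Minimize } & \sum_{\{i,j\} \in E} x_{ij}
\end{aligned}
$$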

¹ Compare this with the Edge Expansion problem we encountered in an earlier lecture: the Cheeger constant was defined as

$$h_G = \min_{S \subset V} \frac{E(S, \bar{S})}{\min\{\mathrm{Vol}(S), \mathrm{Vol}(\bar{S})\}}$$

where $\mathrm{Vol}(S) = \sum_{v \in S} \deg(v)$. Note that for a $d$-regular graph, we have $\mathrm{Vol}(S) = d|S|$ and hence $h_G = \beta_G / d$.

Note that this exactly solves the sparsest cut problem if we could take $t$ corresponding to the optimum cut. This is because (a) the cut-metric induced by the optimum cut gives a solution to the above ILP with value equal to $\beta'_G$, and (b) a solution to the above ILP gives a cut $S$ defined by an equivalence class of points with pairwise distance (values of $x_{ij}$) equal to 0, so that $\frac{E(S, \bar{S})}{t} \le \sum_{\{i,j\} \in E} x_{ij}$.

In going to the LP relaxation we substitute the integrality constraint by $0 \le x_{ij} \le 1$. This in fact is a relaxation for all possible values of $t$, and therefore lets us solve a single LP without knowing the correct value of $t$.

Rounding the LP

Once we formulate the LP as above it can be solved to obtain $\{x_{ij}\}$, which define a metric on the nodes of the graph. But to get a valid cut from this, the metric needs to be rounded off to a cut-metric, i.e., an integral solution of the form $x_{ij} \in \{0, \alpha\}$ has to be obtained (which, as we noted above, can be converted to a cut of cost no more than the cost it gives for the LP). The rounding proceeds in two steps. First the graph is embedded into the $\ell_1$-norm metric space $\mathbb{R}^{O(\log^2 n)}$. This entails some distortion in the metric, and can increase the cost. But then we can go from this metric to a cut-metric without any increase in the cost. Below we elaborate on the two steps.

LP solution → $\mathbb{R}^{O(\log^2 n)}$ metric: The $n$ vertices of the graph are embedded into the real space using Bourgain's Lemma.

Lemma 1 (Bourgain's Lemma, following LLR94)
There is a constant $c$ such that $\forall n$, for every metric $\Delta(\cdot, \cdot)$ on $n$ points, $\exists$ points $z_i \in \mathbb{R}^{O(\log^2 n)}$ such that for all $i, j \in [n]$,

$$\Delta(i, j) \le \|z_i - z_j\|_1 \le c \log n \, \Delta(i, j) \qquad (1)$$

Further, such $z_i$ can be found using a randomized polynomial time algorithm.

$\mathbb{R}^{O(\log^2 n)}$ metric → cut-metric: We show that $\ell_1$-distances can be expressed as a positive combination of cut metrics.

Lemma 2
For any $n$ points $z_1, \ldots, z_n \in \mathbb{R}^m$, there exist cuts $S_1, \ldots, S_N \subseteq [n]$ and $\alpha_1, \ldots, \alpha_N \ge 0$, where $N = m(n-1)$, such that for all $i, j \in [n]$,

$$\|z_i - z_j\|_1 = \sum_k \alpha_k \delta_{S_k}(i, j) \qquad (2)$$

Proof: We consider each of the $m$ coordinates separately, and for each coordinate give $n-1$ cuts $S_k$ and corresponding $\alpha_k$. Consider the first coordinate, for which we produce cuts $\{S_k\}_{k=1}^{n-1}$ as follows: let $\zeta_i^{(1)} \in \mathbb{R}$ be the first coordinate of $z_i$. W.l.o.g. assume that the $n$ points are sorted by their first coordinate: $\zeta_1^{(1)} \le \zeta_2^{(1)} \le \ldots \le \zeta_n^{(1)}$. Let $S_k = \{z_1, \ldots, z_k\}$ and $\alpha_k = \zeta_{k+1}^{(1)} - \zeta_k^{(1)}$. Then $\sum_{k=1}^{n-1} \alpha_k \delta_{S_k}(i, j) = |\zeta_i^{(1)} - \zeta_j^{(1)}|$.

Similarly defining $S_k$, $\alpha_k$ for each coordinate we get

$$\sum_{k=1}^{m(n-1)} \alpha_k \delta_{S_k}(i, j) = \sum_{\ell=1}^{m} |\zeta_i^{(\ell)} - \zeta_j^{(\ell)}| = \|z_i - z_j\|_1$$
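The construction in this proof translates directly into code. The sketch below is a minimal illustration with hypothetical names (`l1_cut_decomposition`, `delta`) and a made-up set of four integer points in the plane; it builds the $m(n-1)$ weighted cuts and checks Equation (2) on every pair.

```python
def l1_cut_decomposition(points):
    """Return (alpha, S) pairs with ||z_i - z_j||_1 = sum of alpha * delta_S(i, j)."""
    n, m = len(points), len(points[0])
    cuts = []
    for coord in range(m):
        # Sort point indices by this coordinate, then sweep prefixes.
        order = sorted(range(n), key=lambda i: points[i][coord])
        for k in range(1, n):
            alpha = points[order[k]][coord] - points[order[k - 1]][coord]
            cuts.append((alpha, frozenset(order[:k])))
    return cuts

def delta(S, i, j):
    """Cut-metric of S: 1 if i and j are separated by the cut, else 0."""
    return 0 if (i in S) == (j in S) else 1

points = [(0, 3), (2, 1), (5, 1), (1, 4)]   # hypothetical points in R^2
cuts = l1_cut_decomposition(points)

mismatches = []
for i in range(len(points)):
    for j in range(i + 1, len(points)):
        l1 = sum(abs(a - b) for a, b in zip(points[i], points[j]))
        combo = sum(alpha * delta(S, i, j) for alpha, S in cuts)
        if l1 != combo:
            mismatches.append((i, j))
```

Cuts with $\alpha_k = 0$ (ties in a coordinate) are kept so that the count stays exactly $m(n-1)$; they contribute nothing to the sum.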


The cut-metric we choose is given by $S^* = \arg\min_{S_k} \frac{E(S_k, \bar{S}_k)}{|S_k||\bar{S}_k|}$.

Now we shall argue that $S^*$ provides an $O(\log n)$-approximate solution to the sparsest cut problem. Let $\mathrm{opt}_f$ be the optimum value of the LP relaxation.

Theorem 3

$$\mathrm{opt}_f \le \beta'_G \le c \log n \cdot \mathrm{opt}_f.$$

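Proof: As outlined above, the proof proceeds by constructing a cut from the metric obtained as the LP solution, by first embedding it in $\mathbb{R}^{O(\log^2 n)}$ and then expressing it as a positive combination of cut-metrics. Let $x_{ij}$ denote the LP solution. Then $\mathrm{opt}_f = \frac{\sum_{\{i,j\} \in E} x_{ij}}{\sum_{i<j} x_{ij}}$.

Applying the two inequalities in Equation (1) to the metric $\Delta(i, j) = x_{ij}$,

$$c \log n \cdot \frac{\sum_{\{i,j\} \in E} x_{ij}}{\sum_{i<j} x_{ij}} \ge \frac{\sum_{\{i,j\} \in E} \|z_i - z_j\|_1}{\sum_{i<j} \|z_i - z_j\|_1}$$

Now by Equation (2),

$$
\begin{aligned}
\frac{\sum_{\{i,j\} \in E} \|z_i - z_j\|_1}{\sum_{i<j} \|z_i - z_j\|_1}
&= \frac{\sum_{\{i,j\} \in E} \sum_k \alpha_k \delta_{S_k}(i, j)}{\sum_{i<j} \sum_k \alpha_k \delta_{S_k}(i, j)} \\
&= \frac{\sum_k \alpha_k \sum_{\{i,j\} \in E} \delta_{S_k}(i, j)}{\sum_k \alpha_k \sum_{i<j} \delta_{S_k}(i, j)} \\
&= \frac{\sum_k \alpha_k E(S_k, \bar{S}_k)}{\sum_k \alpha_k |S_k||\bar{S}_k|} \\
&\ge \min_k \frac{E(S_k, \bar{S}_k)}{|S_k||\bar{S}_k|} = \frac{E(S^*, \bar{S}^*)}{|S^*||\bar{S}^*|}
\end{aligned}
$$

where we used the simple observation that for $a_k, b_k \ge 0$, $\frac{\sum_k a_k}{\sum_k b_k} \ge \min_k \frac{a_k}{b_k}$.

Thus, $c \log n \cdot \mathrm{opt}_f \ge \frac{E(S^*, \bar{S}^*)}{|S^*||\bar{S}^*|} \ge \beta'_G$. On the other hand, we have already seen that the LP is a relaxation of an ILP which solves $\beta'_G$ exactly, and therefore $\mathrm{opt}_f \le \beta'_G$.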
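The last step of the rounding, choosing the best of the sweep cuts, can be sketched as follows. The graph (two triangles joined by one edge), the one-dimensional integer embedding, and all function names are assumptions for illustration; in the actual algorithm the points would come from the embedding of Lemma 1 applied to the LP solution.

```python
from fractions import Fraction

def sweep_cuts(points):
    """Weighted prefix cuts (alpha_k, S_k), one family per coordinate."""
    n, m = len(points), len(points[0])
    cuts = []
    for coord in range(m):
        order = sorted(range(n), key=lambda i: points[i][coord])
        for k in range(1, n):
            alpha = points[order[k]][coord] - points[order[k - 1]][coord]
            cuts.append((alpha, frozenset(order[:k])))
    return cuts

def cut_edges(edges, S):
    """Number of edges with exactly one endpoint in S."""
    return sum(1 for i, j in edges if (i in S) != (j in S))

def best_sweep_cut(edges, points):
    """Return S*, its ratio E/(|S||S-bar|), and the alpha-weighted ratio."""
    n = len(points)
    cuts = sweep_cuts(points)
    def ratio(S):
        return Fraction(cut_edges(edges, S), len(S) * (n - len(S)))
    best = min((S for _, S in cuts), key=ratio)
    num = sum(alpha * cut_edges(edges, S) for alpha, S in cuts)
    den = sum(alpha * len(S) * (n - len(S)) for alpha, S in cuts)
    return best, ratio(best), Fraction(num, den)

# Hypothetical instance: triangles {0,1,2} and {3,4,5} joined by edge (2, 3),
# with integer coordinates so that all arithmetic is exact.
edges = [(0, 1), (0, 2), (1, 2), (3, 4), (3, 5), (4, 5), (2, 3)]
points = [(0,), (0,), (1,), (4,), (5,), (5,)]
best, best_ratio, weighted_ratio = best_sweep_cut(edges, points)
```

On this instance the weighted ratio is $7/43$ and the best sweep cut separates the two triangles with ratio $1/9$, consistent with the observation $\frac{\sum_k a_k}{\sum_k b_k} \ge \min_k \frac{a_k}{b_k}$.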

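Corollary 4

$$\frac{n}{2} \mathrm{opt}_f \le \beta_G \le c \log n \cdot \frac{n}{2} \mathrm{opt}_f$$

(with the constant $c$ adjusted to absorb a factor of 2, since $\beta_G \le n \beta'_G$).

The $O(\log n)$ integrality gap is tight, as it occurs when the graph is a constant-degree (say 3-regular) expander. (An exercise; needs a bit of work.)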

4 Lift and Project Methods

In both examples we saw thus far, the integrality gap proved was tight. Can we design tighter relaxations for these problems? Researchers have looked at this question in great detail. Next, we consider a more abstract view of the process of writing better relaxations.

The feasible region for the ILP problem is a polytope, namely, the convex hull of the integer solutions.² We will call this the integer polytope. The set of feasible solutions of the relaxation is also a polytope, which contains the integer polytope. We call this the relaxed polytope; in the above examples it was of polynomial size, but note that it would suffice (thanks to the Ellipsoid algorithm) to just have an implicit description of it using a polynomial-time separation oracle. On the other hand, if P ≠ NP the integer polytope has no such description. The name of the game here is to design a relaxed polytope that is as close to the integer polytope as possible. Lift-and-project methods give, starting from any relaxation of our choice, a hierarchy of relaxations where the final relaxation gives the integer polytope. Of course, solving this final relaxation takes exponential time. In-between relaxations may take somewhere between polynomial and exponential time to solve, and it is an open question in most cases to determine their integrality gap.

² By integer solutions, we refer to solutions with coordinates 0 or 1. We assume that the integrality constraints in the ILP correspond to restricting the solution to such points.

Basic idea

The main idea in the Lift and Project methods is to try to simulate non-linear programming using linear programming. Recall that nonlinear constraints are very powerful: to restrict a variable $x$ to be in $\{0, 1\}$ we simply add the quadratic constraint $x(1 - x) = 0$. Of course, this means nonlinear programming is NP-hard in general. In lift-and-project methods we introduce auxiliary variables for the nonlinear terms.

Example 1 Here is a quadratic program for the vertex cover problem.

$$
\begin{aligned}
0 \le x_i &\le 1 && \text{for each } i \in V \\
(1 - x_i)(1 - x_j) &= 0 && \text{for each } \{i, j\} \in E
\end{aligned}
$$

To simulate this using an LP, we introduce extra variables $y_{ij} \ge 0$, with the intention that $y_{ij}$ represents the product $x_i x_j$. This is the lift step, in which we lift the problem to a higher dimensional space. To get a solution for the original problem from a solution for the new problem, we simply project it onto the variables $x_i$. Note that this is a relaxation of the original integer linear program, in the sense that any solution of that program will still be retained as a solution after the lift and project steps. Since we have no way of ensuring $y_{ij} = x_i x_j$ in every solution of the lifted problem, we still may end up with a relaxed polytope. But note that this relaxation can be no worse than the original LP relaxation (in which we simply dropped the integrality constraints), because $1 - x_i - x_j + y_{ij} = 0$, $y_{ij} \ge 0 \Rightarrow x_i + x_j \ge 1$, and any point in the new relaxation is present in the original one.
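A short check makes both halves of this remark concrete. The triangle graph and the helper names are assumptions for illustration. The sketch confirms that every integral vertex cover lifts with $y_{ij} = x_i x_j$, while the fractional all-halves point is still feasible in the lifted LP with $y_{ij} = 0 \ne x_i x_j$.

```python
from fractions import Fraction
from itertools import product

def lifted_y(x_i, x_j):
    """The y_ij forced by the linearized constraint 1 - x_i - x_j + y_ij = 0."""
    return x_i + x_j - 1

def lifted_feasible(edges, x):
    """Feasibility in the lifted LP: 0 <= x_i <= 1 and y_ij >= 0 on every edge."""
    in_box = all(0 <= val <= 1 for val in x.values())
    return in_box and all(lifted_y(x[i], x[j]) >= 0 for i, j in edges)

edges = [(0, 1), (1, 2), (0, 2)]   # hypothetical graph: a triangle

# Every integral vertex cover survives the lift, with y_ij equal to x_i * x_j.
integral_ok = True
for bits in product([0, 1], repeat=3):
    x = dict(enumerate(bits))
    if all(x[i] + x[j] >= 1 for i, j in edges):
        integral_ok = integral_ok and all(
            lifted_y(x[i], x[j]) == x[i] * x[j] for i, j in edges
        )

# The all-halves point is still feasible, but y_ij differs from x_i * x_j.
half = {v: Fraction(1, 2) for v in range(3)}
half_feasible = lifted_feasible(edges, half)
half_y = lifted_y(half[0], half[1])
half_product = half[0] * half[1]
```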

(If we insisted that the matrix $(y_{ij})$ formed a positive semidefinite matrix, it would still be a (tighter) relaxation, and we get a Semidefinite Programming problem. We shall see this in the next lecture.)

5 Sherali-Adams Lift and Project Method

Now we describe the method formally. Suppose we are given a polytope $P \subseteq \mathbb{R}^n$ (via a separation oracle) and we are trying to get a representation for $P_0 \subseteq P$ defined as the convex hull of $P \cap \{0, 1\}^n$. We proceed as follows:

The first step is homogenization. We change the polytope $P$ into a cone $K$ in $\mathbb{R}^{n+1}$. (A cone is a set of points that is closed under scaling: if $x$ is in the set, so is $c \cdot x$ for all $c \ge 0$.) If a point $(\alpha_1, \ldots, \alpha_n) \in P$ then $(1, \alpha_1, \ldots, \alpha_n) \in K$. In terms of the linear constraints defining $P$ this amounts to multiplying the constant term in the constraints by a new variable $x_0$ and thus making it homogeneous: i.e., $\sum_{i=1}^{n} a_i x_i \ge b$ is replaced by $\sum_{i=1}^{n} a_i x_i \ge b x_0$. Let $K_0 \subseteq K$ be the cone generated by the points $K \cap \{0, 1\}^{n+1}$ ($x_0 = 0$ gives the origin; otherwise $x_0 = 1$ and we have the points which define the polytope $P_0$).

For $r = 1, 2, \ldots, n$ we shall define $SA^r(K)$ to be a cone in $\mathbb{R}^{V_{n+1}(r)}$, where $V_{n+1}(r) = \sum_{i=0}^{r} \binom{n+1}{i}$. Each coordinate corresponds to a variable $y_s$ for $s \subseteq [n+1]$, $|s| \le r$. The intention is that the variable $y_s$ stands for the homogeneous term $\left(\prod_{i \in s} x_i\right) \cdot x_0^{r - |s|}$. Let $y^{(r)}$ denote the vector of all the $V_{n+1}(r)$ variables.


Definition 2 Cone $SA^r(K)$ is defined as follows:

• $SA^1(K) = K$, with $y_{\{i\}} = x_i$ and $y_\emptyset = x_0$.

• $SA^r(K)$: The constraints defining $SA^r(K)$ are obtained from the constraints defining $SA^{r-1}(K)$: for each constraint $a y^{(r-1)} \ge 0$, for each $i \in [n]$, form the following two constraints:

$$
\begin{aligned}
(1 - x_i) * a y^{(r-1)} &\ge 0 \\
x_i * a y^{(r-1)} &\ge 0
\end{aligned}
$$

where the operator "$*$" distributes over the sum $a y^{(r-1)} = \sum_{s \subseteq [n] : |s| \le r} a_s y_s$ and $x_i * y_s$ is a shorthand for $y_{s \cup \{i\}}$.
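The "$*$" operator is purely mechanical, so it can be sketched as an operation on coefficient tables. In the sketch below a constraint $a y \ge 0$ is represented as a dictionary from index sets $s$ (as `frozenset`s) to coefficients $a_s$; this representation and the function names are assumptions for illustration. The two sample constraints are the vertex cover constraints $y_{\{1\}} + y_{\{2\}} - y_\emptyset \ge 0$ and $y_\emptyset - y_{\{2\}} \ge 0$ for a hypothetical edge $\{1, 2\}$.

```python
from collections import defaultdict

def times_x(i, a):
    """Coefficients of x_i * (a . y): each y_s becomes y_{s union {i}}."""
    out = defaultdict(int)
    for s, coef in a.items():
        out[s | {i}] += coef
    return {s: c for s, c in out.items() if c != 0}

def times_one_minus_x(i, a):
    """Coefficients of (1 - x_i) * (a . y): each y_s becomes y_s - y_{s union {i}}."""
    out = defaultdict(int)
    for s, coef in a.items():
        out[s] += coef
        out[s | {i}] -= coef
    return {s: c for s, c in out.items() if c != 0}

EMPTY = frozenset()
edge_constraint = {frozenset({1}): 1, frozenset({2}): 1, EMPTY: -1}
upper_bound = {EMPTY: 1, frozenset({2}): -1}

lifted_edge = times_one_minus_x(1, edge_constraint)
lifted_bound = times_one_minus_x(1, upper_bound)
```

Note that terms with $i \in s$ cancel under $(1 - x_i)$, which is how the rule $x_i * y_s = y_s$ for $i \in s$ shows up in the table.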

Suppose $(1, x_1, \ldots, x_n) \in K \cap \{0, 1\}^{n+1}$. Then we note that the cone $SA^r(K)$ contains the points defined by $y_s = \prod_{i \in s} x_i$. This is true for $r = 1$ and is maintained inductively by the constraints we form for each $r$. Note that if $i \in s$ then $x_i * y_s = y_s$, but we also have $x_i^2 = x_i$ for $x_i \in \{0, 1\}$.

To get a relaxation of $K$ we need to come back to $n + 1$ dimensions. Next we do this:

Definition 3 $S^r(K)$ is the cone obtained by projecting the cone $SA^r(K)$ to $n + 1$ dimensions as follows: a point $u \in SA^r(K)$ will be projected to $u|_{s : |s| \le 1}$; the variable $u_\emptyset$ is mapped to $x_0$ and, for each $i \in [n]$, $u_{\{i\}}$ to $x_i$.

Example 2 This example illustrates the above procedure for Vertex Cover, and shows how it can be thought of as a simulation of non-linear programming. The constraints for $S^1(K)$ come from the linear programming constraints:

$$
\begin{aligned}
\forall j \in V, \quad & 0 \le y_{\{j\}} \le y_\emptyset \\
\forall \{i, j\} \in E, \quad & y_{\{i\}} + y_{\{j\}} - y_\emptyset \ge 0
\end{aligned}
$$

Among the various constraints for $SA^2(K)$ formed from the above constraints for $SA^1(K)$, we have $(1 - x_i) * (y_\emptyset - y_{\{j\}}) \ge 0$ and $(1 - x_i) * (y_{\{i\}} + y_{\{j\}} - y_\emptyset) \ge 0$. The first one expands to $y_\emptyset - y_{\{i\}} - y_{\{j\}} + y_{\{i,j\}} \ge 0$ and the second one becomes $y_{\{j\}} - y_{\{i,j\}} - y_\emptyset + y_{\{i\}} \ge 0$, together enforcing $y_\emptyset - y_{\{i\}} - y_{\{j\}} + y_{\{i,j\}} = 0$. This is simulating the quadratic constraint $1 - x_i - x_j + x_i x_j = 0$, or the more familiar $(1 - x_i)(1 - x_j) = 0$, for each $\{i, j\} \in E$. It can be shown that the defining constraints for the cone $S^2(K)$ are the odd-cycle constraints: for an odd cycle $C$, $\sum_{i \in C} x_i \ge (|C| + 1)/2$. An exact characterization of $S^r(K)$ for $r > 2$ is open.

Intuitively, as we increase $r$ we get tighter relaxations of $K_0$, as we are adding more and more valid constraints. Let us prove this formally. First, we note the following characterization of $SA^r(K)$.

Lemma 5
$u \in SA^r(K)$ iff $\forall i \in [n]$ we have $v^i, w^i \in SA^{r-1}(K)$, where $\forall s \subseteq [n]$, $|s| \le r - 1$, $v^i_s = u_{s \cup \{i\}}$ and $w^i_s = u_s - u_{s \cup \{i\}}$.

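Proof: To establish the lemma, we make the notation used in defining $SA^r(K)$ from $SA^{r-1}(K)$ explicit. Suppose $SA^{r-1}(K)$ has a constraint $a y^{(r-1)} \ge 0$. Recall that from this, for each $i \in [n]$ we form two constraints for $SA^r(K)$, say $a' y^{(r)} \ge 0$ and $a'' y^{(r)} \ge 0$, where $a' y^{(r)} \equiv x_i * a y^{(r-1)}$ is given by

$$
a'_s = \begin{cases} 0 & \text{if } i \notin s \\ a_s + a_{s \setminus \{i\}} & \text{if } i \in s \end{cases} \qquad (3)
$$

and $a'' y^{(r)} \equiv (1 - x_i) * a y^{(r-1)}$ by

$$
a''_s = \begin{cases} a_s & \text{if } i \notin s \\ -a_{s \setminus \{i\}} & \text{if } i \in s \end{cases} \qquad (4)
$$

Then we see that

$$
a' u = \sum_{s \ni i} \left( a_s + a_{s \setminus \{i\}} \right) u_s = \sum_{s \ni i} a_s v^i_s + \sum_{s \not\ni i} a_s v^i_s = a v^i
$$

where we used the fact that for $s \ni i$, $u_s = v^i_s = v^i_{s \setminus \{i\}}$. Similarly, noting that for $s \ni i$, $w^i_s = 0$ and for $s \not\ni i$, $w^i_s = u_s - u_{s \cup \{i\}}$, we have

$$
a'' u = \sum_{s \ni i} -a_{s \setminus \{i\}} u_s + \sum_{s \not\ni i} a_s u_s = \sum_{s \not\ni i} \left( -a_s u_{s \cup \{i\}} + a_s u_s \right) = a w^i
$$

Therefore, $u$ satisfies the constraints of $SA^r(K)$ iff for each $i \in [n]$, $v^i$ and $w^i$ satisfy the constraints of $SA^{r-1}(K)$.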
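The two identities $a' u = a v^i$ and $a'' u = a w^i$ are linear in $u$ and $a$, so they can be spot-checked numerically. The sketch below is only a sanity check on random integer data, not a proof; the representation (dictionaries keyed by `frozenset`s) and the function names are assumptions for illustration.

```python
import random
from itertools import combinations

def subsets(n, max_size):
    """All subsets of {1, ..., n} of size at most max_size, as frozensets."""
    return [
        frozenset(c)
        for k in range(max_size + 1)
        for c in combinations(range(1, n + 1), k)
    ]

def dot(a, u):
    """Inner product of a sparse coefficient table a with the vector u."""
    return sum(coef * u[s] for s, coef in a.items())

def check_lemma5_identities(n, r, i, seed=0):
    rng = random.Random(seed)
    u = {s: rng.randint(-5, 5) for s in subsets(n, r)}       # point in level r
    a = {s: rng.randint(-5, 5) for s in subsets(n, r - 1)}   # level r-1 constraint
    v = {s: u[s | {i}] for s in a}                           # v^i_s = u_{s + i}
    w = {s: u[s] - u[s | {i}] for s in a}                    # w^i_s = u_s - u_{s + i}
    a1, a2 = {}, {}                                          # x_i * a and (1 - x_i) * a
    for s, coef in a.items():
        t = s | {i}
        a1[t] = a1.get(t, 0) + coef
        a2[s] = a2.get(s, 0) + coef
        a2[t] = a2.get(t, 0) - coef
    return dot(a1, u) == dot(a, v) and dot(a2, u) == dot(a, w)

all_ok = all(check_lemma5_identities(4, 3, i, seed=i) for i in range(1, 5))
```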

Now we are ready to show that $S^r(K)$, $1 \le r \le n$, form a hierarchy of relaxations of $K_0$.

Theorem 6
$K_0 \subseteq S^r(K) \subseteq S^{r-1}(K) \subseteq K$ for every $r$.

Proof: We have already seen that each integer solution $x$ in $K_0$ gives rise to a corresponding point in $SA^r(K)$: we just take $y^{(r)}_s = \prod_{i \in s} x_i$. Projecting to a point in $S^r(K)$, we just get $x$ back. Thus $K_0 \subseteq S^r(K)$ for each $r$.

So it is left only to show that $S^r(K) \subseteq S^{r-1}(K)$, because $S^1(K) = K$.

Suppose $x \in S^r(K)$ is obtained as $u|_{s : |s| \le 1}$, $u \in SA^r(K)$. Let $v^i, w^i \in SA^{r-1}(K)$ be two vectors (for some $i \in [n]$) as given by Lemma 5. Since $SA^{r-1}(K)$ is a convex cone, $v^i + w^i \in SA^{r-1}(K)$. Note that for each $s \subseteq [n]$, $|s| \le r - 1$, $w^i_s + v^i_s = u_s$. In particular this holds for $s$ with $|s| \le 1$. So $x = (v^i + w^i)|_{s : |s| \le 1} \in S^{r-1}(K)$.

Theorem 7
$S^n(K) = K_0$

Proof: Recall from the proof of Theorem 6 that if $x \in S^r(K)$, then there are two points $v^i, w^i \in SA^{r-1}(K)$ such that $x = v^i|_{s : |s| \le 1} + w^i|_{s : |s| \le 1}$. It follows from the definition of $v^i$ and $w^i$ that $v^i_\emptyset = v^i_{\{i\}}$ and $w^i_{\{i\}} = 0$. So $v^i|_{s : |s| \le 1} \in S^{r-1}(K)|_{y_{\{i\}} = y_\emptyset}$ and $w^i|_{s : |s| \le 1} \in S^{r-1}(K)|_{y_{\{i\}} = 0}$. Hence, $S^r(K) \subseteq S^{r-1}(K)|_{y_{\{i\}} = y_\emptyset} + S^{r-1}(K)|_{y_{\{i\}} = 0}$. Further, this holds for all $i \in [n]$. Thus,

$$S^r(K) \subseteq \bigcap_{i \in [n]} \left( S^{r-1}(K)|_{y_{\{i\}} = y_\emptyset} + S^{r-1}(K)|_{y_{\{i\}} = 0} \right)$$

Repeating this for $r - 1$ and so on, we get

$$S^r(K) \subseteq \bigcap_{\{i_1, \ldots, i_r\} \subseteq [n]} \left( \sum_{T \in \{0, y_\emptyset\}^r} K|_{(y_{\{i_1\}}, \ldots, y_{\{i_r\}}) = T} \right)$$

For $r = n$ this becomes simply

$$S^n(K) \subseteq \sum_{T \in \{0, y_\emptyset\}^n} K|_{(y_{\{1\}}, \ldots, y_{\{n\}}) = T} = K_0$$

Along with $K_0 \subseteq S^n(K)$ this gives us that $S^n(K) = K_0$.

Finally we note that for small $r$ we can solve an optimization problem over $S^r(K)$ relatively efficiently.

Theorem 8
If there is a polynomial (in $n$) time separation oracle for $K$, then there is an $n^{O(r)}$ algorithm for optimizing a linear function over $S^r(K)$.

Proof: Note that using Lemma 5, a separation oracle for $S^r(K)$ can be formed by calling the separation oracle for $S^{r-1}(K)$ $2n$ times (for $v^i$ and $w^i$, $i \in [n]$), and therefore, calling the separation oracle for $K$ $n^{O(r)}$ times. Given access to the separation oracle, the optimization can be carried out using the ellipsoid method in polynomial time.

Thus, on the one hand a higher value of $r$ gives a tighter relaxation (a smaller integrality gap) and possibly a better approximation (with $r = n$ giving the exact solution), but on the other hand the time taken is exponential in $r$. So to use this method, it is crucial to understand this trade-off. It has been shown by Arora, Bollobás and Lovász that in applying a related method by Lovász and Schrijver to the Vertex Cover problem, even for $r = \Omega(\sqrt{\log n})$ the integrality gap is as bad as $2 - o(1)$. Sanjeev conjectures that for somewhat higher $r$ the integrality gap may go down significantly. But this problem is open.
