BIDM Assignment No. 1

Uploaded by ee052022

Predictive Modelling Using Decision Trees

A supermarket offers a new line of organic products. The supermarket's management wants to determine which customers are likely to purchase these products.

The supermarket has a customer loyalty program. As an initial buyer incentive plan, the supermarket provided coupons for the organic products to all of the loyalty program participants and collected data that includes whether these customers purchased any of the organic products.

The ORGANICS data set contains over 22,000 observations.

The Data Mining Objective is to determine whether a customer would purchase organic products or not. The target variable (ORGYN) is a binary variable that indicates whether an individual purchased organic products or not. Dataset: ORGANICS (uploaded on claroline). You need to build a Decision Tree Model using SAS Enterprise Miner.

Steps to be followed:

1) Create a new folder and upload all the SAS datasets, especially the ORGANICS dataset, into the folder. Create a new library and link it to the folder. The steps to be followed are listed below.

When you open SAS 9.2, several libraries are automatically assigned and can be seen in the Explorer window.

1. Double-click on the Libraries icon in the Explorer window.

To define a new library:

2. Right-click in the Explorer window and select New.

3. In the New Library window, type a name for the new library. For example, type CRSSAMP.

4. Type in the path name or select Browse to choose the folder to be connected with the new library name. For example, the chosen folder might be located at C:\workshop\sas\dmem.

5. If you want this library name to be connected with this folder every time you open SAS, select Enable at startup.

6. Select OK. The new library is now assigned and can be seen in the Explorer window.

7. To view the data sets that are included in the new library, double-click on the icon for Crssamp.

2) Open SAS Enterprise Miner. To start Enterprise Miner, type miner in the command box or select Solutions > Analysis > Enterprise Miner.

3) Create a new project (File > New > Project) and a diagram.

4) Drag the Input Data Source to the Diagram Workspace. Open the Input Data Source Node and select ORGANICS as the source data. Select Change in Metadata sample and select use complete data as sample. Change the role of variable ORGYN to target.

5) Connect Multiplot and Insight nodes to the Input Data Source node. Run the Multiplot Node and explore the results.

6) Set the roles for the analysis variables. (Check that the model role for custid is set to id, while the model role for DOB, EDATE and LCDATE is set to rejected.) Also, set the model role for variables AGEGRP1, AGEGRP2 and NEIGHBOURHOOD to rejected.

Set the model role for ORGYN to target (check that the measurement role is binary), while the model role of ORGANICS should be set to rejected.

As noted above, only ORGYN will be used for this analysis and should have a role of Target. (Try using ORGANICS as an input variable, report the results of the decision tree, and answer the following question.)

Can ORGANICS be used as an input for a model that is used to predict ORGYN?

Why or why not?

Attach a screenshot (shot 1) of the input data source showing the model role of all the variables.
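The role assignments in step 6 can be pictured as a small lookup table. The following is a minimal Python sketch, not Enterprise Miner code: the variable names come from the assignment, while the dictionary layout, the helper name `columns_with_role`, and the placeholder columns `X1` and `X2` are hypothetical and used only for illustration.

```python
# Model roles from step 6; variable names are taken from the assignment text.
# The dictionary layout and helper name are illustrative assumptions.
MODEL_ROLES = {
    "CUSTID": "id",
    "DOB": "rejected",
    "EDATE": "rejected",
    "LCDATE": "rejected",
    "AGEGRP1": "rejected",
    "AGEGRP2": "rejected",
    "NEIGHBOURHOOD": "rejected",
    "ORGYN": "target",
    "ORGANICS": "rejected",
}


def columns_with_role(columns, role, roles=MODEL_ROLES):
    """Return the columns whose model role equals `role`.

    Columns that are not listed in `roles` are assumed to be inputs.
    """
    return [c for c in columns if roles.get(c.upper(), "input") == role]


# X1 and X2 are placeholder names standing in for the remaining variables.
columns = ["CUSTID", "X1", "X2", "DOB", "ORGANICS", "ORGYN"]
print(columns_with_role(columns, "input"))   # ['X1', 'X2']
print(columns_with_role(columns, "target"))  # ['ORGYN']
```

Keeping the roles in one table makes it easy to check, before modelling, that exactly one variable carries the target role.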

7) Connect a Data Partition node and partition the dataset into training (60%) and validation (40%).
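The 60/40 partition in step 7 can be illustrated with a short Python sketch. It assumes simple random sampling with a fixed seed; the function name `partition`, the seed value, and the 22,000-row stand-in list are assumptions for illustration, and the Data Partition node may be configured to sample differently (for example, stratified on the target).

```python
import random


def partition(rows, train_fraction=0.6, seed=12345):
    """Shuffle a copy of `rows` and split it into (training, validation)."""
    rng = random.Random(seed)          # fixed seed keeps the split reproducible
    shuffled = list(rows)              # copy so the caller's data is untouched
    rng.shuffle(shuffled)
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Stand-in for the data set: 22,000 row identifiers (the real set has "over 22,000").
rows = list(range(22000))
train, valid = partition(rows)
print(len(train), len(valid))  # 13200 8800
```

The validation part is held back from tree growing and is used later to assess and prune the tree.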

8) Connect a Decision Tree Node. Open the Node and, in the basic settings, select gini reduction as the splitting criterion. Keep the default stopping rules (no. of observations in leaf node, observations required for split search, max branches from node, max no. of levels). You may try changing the splitting criterion and stopping rules and see the impact on the results.
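To make the Gini reduction criterion from step 8 concrete, here is a minimal Python sketch for a binary target. It uses the textbook definition (parent impurity minus the size-weighted impurity of the branches); the function names and the eight-row toy labels are assumptions for illustration, and the exact computation inside Enterprise Miner may differ in detail.

```python
def gini(labels):
    """Gini impurity of a list of 0/1 labels: 1 - p^2 - (1 - p)^2."""
    n = len(labels)
    if n == 0:
        return 0.0
    p = sum(labels) / n
    return 1.0 - p * p - (1.0 - p) * (1.0 - p)


def gini_reduction(parent, branches):
    """Parent impurity minus the size-weighted impurity of the branches."""
    n = len(parent)
    weighted = sum(len(b) / n * gini(b) for b in branches)
    return gini(parent) - weighted


# Toy labels (1 = purchased, 0 = did not purchase), made up for illustration.
parent = [1, 1, 1, 1, 0, 0, 0, 0]
left, right = [1, 1, 1, 0], [1, 0, 0, 0]
print(gini(parent))                          # 0.5
print(gini_reduction(parent, [left, right])) # 0.125
```

A candidate split with a larger reduction separates purchasers from non-purchasers more cleanly, so it is preferred when the tree is grown.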

9) In the advanced settings, select proportion misclassified as the assessment criterion. Click on Score, select process or score training, validation and test, and click on show details of validation.

10) Run the decision tree node and explore the results. Go to View > Tree to view the tree. Go to View > Tree options to change the number of levels of the tree that you want to view. Go to Plot and Table to explore the plot of misclassification error vs. the no. of leaves. What is the no. of leaves and the corresponding misclassification error in the final selected/pruned subtree? Go to Score and variable selection to see the variables ranked in order of importance.

Attach screenshots of the tree results (tree, plot and final selected variables): shots 2, 3, 4.
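The idea behind the misclassification error vs. number of leaves plot can be sketched in Python. This is an illustration under stated assumptions, not the tool's implementation: the function names are hypothetical, the error values are made-up toy numbers, and the tie-breaking rule (prefer the smaller subtree) is an assumption about how a final subtree might be chosen.

```python
def misclassification_rate(actual, predicted):
    """Proportion of cases whose predicted class differs from the actual class."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    wrong = sum(1 for a, p in zip(actual, predicted) if a != p)
    return wrong / len(actual)


def select_subtree(validation_error_by_leaves):
    """Pick (leaves, error) with the lowest error; ties go to fewer leaves."""
    return min(validation_error_by_leaves.items(), key=lambda kv: (kv[1], kv[0]))


# Toy validation errors per subtree size, invented purely for illustration.
toy_errors = {1: 0.25, 3: 0.20, 5: 0.18, 7: 0.18, 9: 0.19}
print(select_subtree(toy_errors))                              # (5, 0.18)
print(misclassification_rate([1, 0, 0, 1], [1, 0, 1, 1]))      # 0.25
```

In the toy numbers the error stops improving after 5 leaves, which is the pattern to look for in the plot when reporting the final subtree.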

11) Connect an Insight Node to the Decision Tree Nodes. Open the Insight node, select entire dataset and validation dataset. Run the Insight node and explore the results.

Attach a screenshot of the Insight node results: shot 5.

12) Connect an Assessment Node to both the Decision Tree Nodes. Run and explore the results (lift charts: cumulative and non-cumulative % response chart, % captured response and lift value). What is the cumulative % response, % captured response and lift value for the decision tree at the 10th percentile and 20th percentile? Also, what is the non-cumulative % response, % captured response and lift value at the 20th percentile?

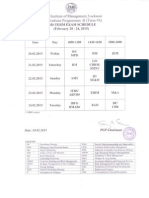

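| Measure | 10th percentile | 20th percentile |
|---|---|---|
| Cumulative % response | 79 | 65 |
| Cumulative % captured response | 32 | 52 |
| Cumulative lift value | 3.2 | 2.6 |
| Non-cumulative % response | | 51 |
| Non-cumulative % captured response | | 20 |
| Non-cumulative lift value | | 2 |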

Attach screenshots of the cumulative and non-cumulative % response and % captured response charts: shots 6, 7, 8, 9.
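The lift-chart quantities in step 12 are linked by simple arithmetic, which the following Python sketch works through. It takes the values recorded for this tree as cumulative % response 79 and 65 and cumulative % captured response 32 and 52 at the 10th and 20th percentiles; the function names are hypothetical, and the formulas are the standard definitions for equal-sized deciles rather than anything specific to the Assessment node.

```python
def cumulative_lift(captured_pct, depth_pct):
    """Lift = share of all responders captured / share of customers contacted."""
    return captured_pct / depth_pct


def noncumulative_response(cum_resp_prev, depth_prev, cum_resp_curr, depth_curr):
    """% response within the band between two depths (e.g. the second decile)."""
    band = depth_curr - depth_prev
    return (depth_curr * cum_resp_curr - depth_prev * cum_resp_prev) / band


cum_response = {10: 79, 20: 65}   # cumulative % response
cum_captured = {10: 32, 20: 52}   # cumulative % captured response

print(cumulative_lift(cum_captured[10], 10))   # 3.2
print(cumulative_lift(cum_captured[20], 20))   # 2.6

# Second decile only: responders captured between the 10th and 20th percentile.
noncum_captured_20 = cum_captured[20] - cum_captured[10]
print(noncum_captured_20)                                             # 20
print(noncumulative_response(cum_response[10], 10, cum_response[20], 20))  # 51.0
print(cumulative_lift(noncum_captured_20, 10))                        # 2.0
```

The computed values agree with the recorded lift of 3.2 and 2.6 and with the non-cumulative figures of 51, 20 and 2, which is a quick consistency check on numbers read off the charts.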

13) Connect a 3-way decision tree (a decision tree with max no. of branches = 3), run it and view the results. Also, connect the assessment node to the 3-way tree. Based on misclassification error and the lift charts, which model would you select (and why?) if:

a. If you have to target the top 50% responders

b. If you have to target the top 20% responders
