System Programming Unit-2 by Arun Pratap Singh


UNIT : II

SYSTEM

PROGRAMMING

II SEMESTER (MCSE 201)

PREPARED BY ARUN PRATAP SINGH


ASPECT OF COMPILATION :


A compiler bridges the semantic gap between a PL (programming language) domain and an execution domain.

There are two aspects of compilation:

1. Generate code to implement the meaning of a source program in the execution domain.

2. Provide diagnostics for violations of PL semantics in a source program.


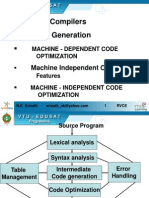

OVERVIEW OF THE VARIOUS PHASES OF THE COMPILER :

A compiler is a computer program (or set of programs) that transforms source code written in

a programming language (the source language) into another computer language (the target

language, often having a binary form known as object code). The most common reason for

wanting to transform source code is to create an executable program.

The name "compiler" is primarily used for programs that translate source code from a high-level

programming language to a lower level language (e.g., assembly language or machine code). If

the compiled program can run on a computer whose CPU or operating system is different from

the one on which the compiler runs, the compiler is known as a cross-compiler. A program that

translates from a low level language to a higher level one is a decompiler. A program that

translates between high-level languages is usually called a language translator, source to source


translator, or language converter. A language rewriter is usually a program that translates the

form of expressions without a change of language.

A compiler is likely to perform many or all of the following operations: lexical

analysis, preprocessing, parsing, semantic analysis (Syntax-directed translation), code

generation, and code optimization.

Structure of a compiler :

Compilers bridge source programs in high-level languages with the underlying hardware. A

compiler verifies code syntax, generates efficient object code, performs run-time organization,

and formats the output according to assembler and linker conventions. A compiler consists of:

1. The front end: Verifies syntax and semantics, and generates an intermediate representation (IR) of the source code for processing by the middle end. Performs type checking by collecting type information. Generates errors and warnings, if any, in a useful way.

2. The middle end: Performs optimizations, including removal of useless or unreachable code, discovery and propagation of constant values, relocation of computation to a less frequently executed place (e.g., out of a loop), or specialization of computation based on the context. Generates another IR for the back end.

3. The back end: Generates the assembly code, performing register allocation in the process (assigning processor registers to program variables where possible). Optimizes target code utilization of the hardware by figuring out how to keep parallel execution units busy and fill delay slots. Although many of the underlying optimization problems are NP-hard, heuristic techniques are well developed.


LEXICAL ANALYSER :

In computer science, lexical analysis is the process of converting a sequence of characters into

a sequence of tokens, i.e. meaningful character strings. A program or function that performs

lexical analysis is called a lexical analyzer, lexer, tokenizer, or scanner, though "scanner" is

also used for the first stage of a lexer. A lexer is generally combined with a parser, which together

analyze the syntax of computer languages, such as in compilers for programming languages, but

also HTML parsers in web browsers, among other examples.

Strictly speaking, a lexer is itself a kind of parser: the syntax of a programming language is

divided into two pieces: the lexical syntax (token structure), which is processed by the lexer; and

the phrase syntax, which is processed by the parser. The lexical syntax is usually a regular

language, whose alphabet consists of the individual characters of the source code text. The

phrase syntax is usually a context-free language, whose alphabet consists of the tokens produced

by the lexer. While this is a common separation, alternatively, a lexer can be combined with the

parser in scannerless parsing.


A token is a string of one or more characters that is significant as a group. The process of forming tokens from an input stream of characters is called tokenization. When a token class can represent more than one possible lexeme, the lexer saves the string representation of the token so that it can be used in semantic analysis. The parser typically retrieves this information from the lexer and stores it in the abstract syntax tree. This is necessary to avoid information loss in the case of numbers and identifiers.

Tokens are identified based on the specific rules of the lexer. Some methods used to identify tokens include regular expressions, specific sequences of characters known as a flag, specific separating characters called delimiters, and explicit definition by a dictionary. Special characters, including punctuation characters, are commonly used by lexers to identify tokens because of their natural use in written and programming languages.

Tokens are often categorized by character content or by context within the data stream.

Categories are defined by the rules of the lexer. Categories often involve grammar elements of

the language used in the data stream. Programming languages often categorize tokens as

identifiers, operators, grouping symbols, or by data type. Written languages commonly categorize

tokens as nouns, verbs, adjectives, or punctuation. Categories are used for post-processing of

the tokens either by the parser or by other functions in the program.

A lexical analyzer generally does nothing with combinations of tokens, a task left for a parser. For

example, a typical lexical analyzer recognizes parentheses as tokens, but does nothing to ensure

that each "(" is matched with a ")".

Consider this expression in the C programming language:

sum = 3 + 2;

Tokenized and represented by the following table:


Lexeme    Token
sum       "Identifier"
=         "Assignment operator"
3         "Integer literal"
+         "Addition operator"
2         "Integer literal"
;         "End of statement"

When a lexer feeds tokens to the parser, the representation used is a number. For example

"Identifier" is represented with 0, "Assignment operator" with 1, "Addition operator" with 2, etc.

Tokens are frequently defined by regular expressions, which are understood by a lexical

analyzer generator such as lex. The lexical analyzer (either generated automatically by a tool

like lex, or hand-crafted) reads in a stream of characters, identifies the lexemes in the stream,

and categorizes them into tokens. This is called "tokenizing". If the lexer finds an invalid token,

it will report an error.
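The tokenizing step described above can be sketched in a few lines of Python. This is only an illustration: the token class names (IDENTIFIER, ASSIGN, and so on) and the helper tokenize are assumptions made for this example, and the regular expressions cover just enough of the language to handle the statement sum = 3 + 2;.

```python
import re

# Token classes and their patterns. The names are illustrative assumptions,
# not taken from lex or from any particular compiler.
TOKEN_SPEC = [
    ("INTEGER_LITERAL", r"\d+"),
    ("IDENTIFIER", r"[A-Za-z_]\w*"),
    ("ASSIGN", r"="),
    ("PLUS", r"\+"),
    ("END_OF_STATEMENT", r";"),
    ("SKIP", r"\s+"),
]
MASTER = re.compile("|".join(f"(?P<{name}>{pattern})" for name, pattern in TOKEN_SPEC))


def tokenize(source):
    """Return a list of (token_class, lexeme) pairs, or raise on an invalid character."""
    tokens = []
    pos = 0
    while pos < len(source):
        match = MASTER.match(source, pos)
        if match is None:
            # No pattern matches here: report the invalid input.
            raise SyntaxError(f"invalid character {source[pos]!r} at offset {pos}")
        if match.lastgroup != "SKIP":  # whitespace separates tokens but is not one
            tokens.append((match.lastgroup, match.group()))
        pos = match.end()
    return tokens


for token_class, lexeme in tokenize("sum = 3 + 2;"):
    print(lexeme, token_class)
```

Each pair keeps the lexeme next to its token class, which is how the string value of an identifier or number survives for later phases.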

SYNTAX ANALYZER:

During the scanning phase (the lexical analysis phase), the compiler creates a symbol table that records the lexemes and tokens found in the source program. The syntax analyzer then works on the resulting token stream:

1. It is the second phase of the compiler, after the lexical analyzer.

2. It is also called hierarchical analysis or parsing.

3. It groups the tokens of the source program into grammatical productions.

4. In short, syntax analysis generates a parse tree.

Parse Tree Generation :

sum = num1 + num2

Consider the C statement above. The syntax analyzer creates a parse tree for it from the tokens.

The syntax analyzer checks only the syntax of the statement, not its meaning. The addition operator (+) operates on two operands, so the syntax analyzer just checks whether the plus operator has two operands. It does not check the types of the operands.

Suppose one of the operands is a string and the other is an integer. The syntax analyzer does not report an error, because it only checks whether two operands are associated with + or not.

This phase is also called hierarchical analysis because it generates a parse tree representation of the tokens produced by the lexical analyzer.
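As a rough sketch of this phase, the function below builds a tree for sum = num1 + num2 from a list of tokens. The grammar (an identifier, an assignment sign, then identifiers joined by +), the token names, and the nested-tuple tree format are all assumptions chosen for this example. Note that it rejects a + with a missing operand but never looks at operand types.

```python
def parse_assignment(tokens):
    """Parse  IDENT '=' IDENT ('+' IDENT)*  and return a nested-tuple parse tree."""
    pos = 0

    def expect(kind):
        # Consume the next token if it has the expected class, else report a syntax error.
        nonlocal pos
        if pos >= len(tokens) or tokens[pos][0] != kind:
            found = tokens[pos][1] if pos < len(tokens) else "end of input"
            raise SyntaxError(f"expected {kind}, found {found}")
        lexeme = tokens[pos][1]
        pos += 1
        return lexeme

    target = ("id", expect("IDENT"))
    expect("ASSIGN")
    tree = ("id", expect("IDENT"))
    while pos < len(tokens):
        expect("PLUS")
        # Every + must be followed by a second operand.
        tree = ("+", tree, ("id", expect("IDENT")))
    return ("=", target, tree)


tokens = [("IDENT", "sum"), ("ASSIGN", "="), ("IDENT", "num1"),
          ("PLUS", "+"), ("IDENT", "num2")]
tree = parse_assignment(tokens)
print(tree)
```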


SEMANTIC ANALYSIS :

The syntax analyzer only creates the parse tree. The semantic analyzer checks the actual meaning of the statement represented by the parse tree. Semantic analysis can compare information in one part of a parse tree with that in another part (e.g., check that a reference to a variable agrees with its declaration, or that the parameters of a function call match the function definition).

Semantic analysis is used for the following:

1. Maintaining the symbol table for each block.

2. Checking the source program for semantic errors.

3. Collecting type information for code generation.

4. Reporting compile-time errors in the code (except syntactic errors, which are caught by syntactic analysis).

5. Generating the object code (e.g., assembler or intermediate code).

For the statement sum = num1 + num2, the semantic analyzer checks:

1. The data type of the first operand.

2. The data type of the second operand.

3. Whether + is binary or unary.

4. The number of operands supplied to the operator, depending on the type of operator (unary | binary | ternary).
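The type checks listed above can be illustrated with a small sketch. The tree format, the symbol table (a plain dictionary from names to type names), and the rule that both operands must have exactly the same type are simplifying assumptions for this example; real languages usually also apply implicit conversions.

```python
def check_types(node, symbols):
    """Return the type of a tree node, raising an error on a semantic violation."""
    kind = node[0]
    if kind == "id":
        name = node[1]
        if name not in symbols:
            raise NameError(f"{name} is used but not declared")
        return symbols[name]
    if kind == "+":
        left = check_types(node[1], symbols)    # data type of the first operand
        right = check_types(node[2], symbols)   # data type of the second operand
        if left != right:
            raise TypeError(f"operands of + have types {left} and {right}")
        return left
    if kind == "=":
        target = check_types(node[1], symbols)
        value = check_types(node[2], symbols)
        if target != value:
            raise TypeError(f"cannot assign {value} to {target}")
        return target
    raise ValueError(f"unknown node kind {kind}")


tree = ("=", ("id", "sum"), ("+", ("id", "num1"), ("id", "num2")))
print(check_types(tree, {"sum": "int", "num1": "int", "num2": "int"}))
```

With num2 declared as a string instead, the same tree is still syntactically valid but the check raises a TypeError.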


INTERMEDIATE CODE GENERATOR :

In computing, code generation is the process by which a compiler's code generator converts

some intermediate representation of source code into a form (e.g., machine code) that can be

readily executed by a machine.


Sophisticated compilers typically perform multiple passes over various intermediate forms. This

multi-stage process is used because many algorithms for code optimization are easier to apply

one at a time, or because the input to one optimization relies on the completed processing

performed by another optimization. This organization also facilitates the creation of a single

compiler that can target multiple architectures, as only the last of the code generation stages

(the backend) needs to change from target to target.

The input to the code generator typically consists of a parse tree or an abstract syntax tree. The

tree is converted into a linear sequence of instructions, usually in an intermediate language such

as three address code. Further stages of compilation may or may not be referred to as "code

generation", depending on whether they involve a significant change in the representation of the

program. (For example, a peephole optimization pass would not likely be called "code

generation", although a code generator might incorporate a peephole optimization pass.)


AST: abstract syntax tree

SDT: syntax-directed translation

IR: intermediate representation

THREE ADDRESS CODE :

In computer science, three-address code (often abbreviated to TAC or 3AC) is an intermediate

code used by optimizing compilers to aid in the implementation of code-improving

transformations. Each TAC instruction has at most three operands and is typically a combination

of assignment and a binary operator. For example, t1 := t2 + t3. The name derives from the

use of three operands in these statements even though instructions with fewer operands may

occur.

Since three-address code is used as an intermediate language within compilers, the operands

will most likely not be concrete memory addresses or processor registers, but rather symbolic

addresses that will be translated into actual addresses during register allocation. It is also not


uncommon that operand names are numbered sequentially since three-address code is typically

generated by the compiler.

A refinement of three-address code is static single assignment form (SSA).
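To make the form concrete, the sketch below turns an expression tree into three-address instructions with sequentially numbered temporaries. The nested-tuple tree format and the function name to_tac are assumptions for this example, not part of any particular compiler.

```python
import itertools


def to_tac(expr):
    """Translate a nested-tuple expression into a list of three-address instructions."""
    code = []
    counter = itertools.count(1)   # temporaries are numbered sequentially: t1, t2, ...

    def visit(node):
        if isinstance(node, str):  # a leaf is a variable name, used as a symbolic address
            return node
        op, left, right = node
        left_name = visit(left)
        right_name = visit(right)
        temp = f"t{next(counter)}"
        code.append(f"{temp} := {left_name} {op} {right_name}")
        return temp

    result = visit(expr)
    return code, result


code, result = to_tac(("+", "a", ("*", "b", "c")))   # a + b * c
for line in code:
    print(line)
```

Each emitted instruction has one result and at most two source operands, which is where the name three-address code comes from.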


CODE OPTIMIZATION:

In computing, an optimizing compiler is a compiler that tries to minimize or maximize some

attributes of an executable computer program. The most common requirement is to minimize the

time taken to execute a program; a less common one is to minimize the amount

of memory occupied. The growth of portable computers has created a market for minimizing

the power consumed by a program. Compiler optimization is generally implemented using a

sequence of optimizing transformations, algorithms which take a program and transform it to

produce a semantically equivalent output program that uses fewer resources.

It has been shown that some code optimization problems are NP-complete, or even undecidable.

In practice, factors such as the programmer's willingness to wait for the compiler to complete its

task place upper limits on the optimizations that a compiler implementer might provide.

(Optimization is generally a very CPU- and memory-intensive process.) In the past, computer

memory limitations were also a major factor in limiting which optimizations could be performed.

Because of all these factors, optimization rarely produces "optimal" output in any sense, and in


fact an "optimization" may impede performance in some cases; rather, they are heuristic methods

for improving resource usage in typical programs.


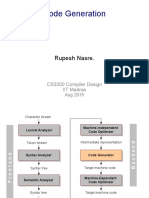

CODE GENERATION:



In addition to the basic conversion from an intermediate representation into a linear sequence of

machine instructions, a typical code generator tries to optimize the generated code in some way.

Tasks which are typically part of a sophisticated compiler's "code generation" phase include:

Instruction selection: which instructions to use.

Instruction scheduling: in which order to put those instructions. Scheduling is a speed optimization that can have a critical effect on pipelined machines.

Register allocation: the allocation of variables to processor registers. [1]

Debug data generation, if required, so the code can be debugged.

Instruction selection is typically carried out by doing a recursive post order traversal on the

abstract syntax tree, matching particular tree configurations against templates; for example, the

tree W := ADD(X,MUL(Y,Z)) might be transformed into a linear sequence of instructions by

recursively generating the sequences for t1 := X and t2 := MUL(Y,Z), and then emitting the

instruction ADD W, t1, t2.

In a compiler that uses an intermediate language, there may be two instruction selection stages: one to convert the parse tree into intermediate code, and a second phase much later to convert the intermediate code into instructions from the instruction set of the target machine. This second phase does not require a tree traversal; it can be done linearly, and typically involves a simple replacement of intermediate-language operations with their corresponding opcodes. However, if the compiler is actually a language translator (for example, one that converts Eiffel to C), then the second code-generation phase may involve building a tree from the linear intermediate code.
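The linear second phase can be sketched as a table lookup per intermediate instruction. Everything machine-specific here is hypothetical: the opcode names (LOAD, ADD, STORE, and so on), the single register R1, and the fixed three-instruction pattern are assumptions for an imaginary target, chosen only to show that no tree traversal is needed.

```python
# Hypothetical opcode table for an imaginary load/store machine.
OPCODES = {"+": "ADD", "-": "SUB", "*": "MUL", "/": "DIV"}


def select_instructions(tac):
    """Replace each 'dst := a op b' instruction by a fixed opcode sequence."""
    target = []
    for instruction in tac:            # one linear pass over the intermediate code
        dst, rhs = instruction.split(" := ")
        left, op, right = rhs.split()
        target.append(f"LOAD R1, {left}")
        target.append(f"{OPCODES[op]} R1, {right}")
        target.append(f"STORE {dst}, R1")
    return target


for line in select_instructions(["t1 := b * c", "t2 := a + t1"]):
    print(line)
```

A real code generator would avoid the redundant store and reload of t1; that kind of cleanup is what a peephole pass is for.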


SYMBOL TABLE CONCEPTUAL DESIGN :


SYNTAX ERROR HANDLING :


SCANNING AND PARSING :



PARSING :

Parsing or syntactic analysis is the process of analyzing a string of symbols, either in natural

language or in computer languages, according to the rules of a formal grammar. The

term parsing comes from Latin pars (orationis), meaning part (of speech). [1][2]

The term has slightly different meanings in different branches of linguistics and computer science.

Traditional sentence parsing is often performed as a method of understanding the exact meaning

of a sentence, sometimes with the aid of devices such as sentence diagrams. It usually

emphasizes the importance of grammatical divisions such as subject and predicate.

Within computational linguistics the term is used to refer to the formal analysis by a computer of

a sentence or other string of words into its constituents, resulting in a parse tree showing their

syntactic relation to each other, which may also contain semantic and other information.

The term is also used in psycholinguistics when describing language comprehension. In this

context, parsing refers to the way that human beings analyze a sentence or phrase (in spoken

language or text) "in terms of grammatical constituents, identifying the parts of speech, syntactic

relations, etc." [2] This term is especially common when discussing what linguistic cues help

speakers to interpret garden-path sentences.

Within computer science, the term is used in the analysis of computer languages, referring to the

syntactic analysis of the input code into its component parts in order to facilitate the writing

of compilers and interpreters.


Types of parser :

The task of the parser is essentially to determine if and how the input can be derived from the

start symbol of the grammar. This can be done in essentially two ways:

Top-down parsing - Top-down parsing can be viewed as an attempt to find left-most derivations of an input stream by searching for parse trees using a top-down expansion of the given formal grammar rules. Tokens are consumed from left to right. Inclusive choice is used to accommodate ambiguity by expanding all alternative right-hand sides of grammar rules. [4]

Bottom-up parsing - A parser can start with the input and attempt to rewrite it to the start symbol. Intuitively, the parser attempts to locate the most basic elements, then the elements containing these, and so on. LR parsers are examples of bottom-up parsers. Another term used for this type of parser is shift-reduce parsing.

LL parsers and recursive-descent parsers are examples of top-down parsers which cannot

accommodate left recursive production rules. Although it has been believed that simple

implementations of top-down parsing cannot accommodate direct and indirect left-recursion and

may require exponential time and space complexity while parsing ambiguous context-free

grammars, more sophisticated algorithms for top-down parsing have been created by Frost, Hafiz,

and Callaghan which accommodate ambiguity and left recursion in polynomial time and which

generate polynomial-size representations of the potentially exponential number of parse trees.

Their algorithm is able to produce both left-most and right-most derivations of an input with regard

to a given CFG (context-free grammar).

An important distinction with regard to parsers is whether a parser generates a leftmost

derivation or a rightmost derivation (see context-free grammar). LL parsers will generate a

leftmost derivation and LR parsers will generate a rightmost derivation (although usually in

reverse).
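The bottom-up strategy, and the remark that it yields a rightmost derivation in reverse, can be seen in a hand-coded shift-reduce sketch. The grammar E -> E + T | T, T -> id and the hard-wired reduction rules are assumptions for this example; this is not a table-driven LR parser, and the greedy choice of reductions only works because the grammar is so small.

```python
def shift_reduce(tokens):
    """Parse tokens with  E -> E + T | T,  T -> id  and return the reductions applied."""
    stack, reductions = [], []
    remaining = list(tokens)
    while True:
        if stack[-3:] == ["E", "+", "T"]:
            stack[-3:] = ["E"]                   # reduce by E -> E + T
            reductions.append("E -> E + T")
        elif stack == ["T"]:
            stack[-1:] = ["E"]                   # reduce by E -> T (first operand only)
            reductions.append("E -> T")
        elif stack[-1:] == ["id"]:
            stack[-1:] = ["T"]                   # reduce by T -> id
            reductions.append("T -> id")
        elif remaining:
            stack.append(remaining.pop(0))       # shift the next input token
        else:
            break
    if stack != ["E"]:
        raise SyntaxError(f"cannot reduce {stack} to the start symbol")
    return reductions


for step in shift_reduce(["id", "+", "id", "+", "id"]):
    print(step)
```

Reading the printed reductions from last to first gives a rightmost derivation of the input from the start symbol E.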


DYNAMIC STORAGE ALLOCATION TECHNIQUES :


Example :

Storage Allocation-

Static allocation vs. dynamic allocation

o Static allocation

Temporary variables, including the one used to save the return address, are assigned fixed addresses within the program. This type of storage assignment is called static allocation.

o Dynamic allocation

If a procedure may be called recursively, it is necessary to preserve the previous values of any variables used by the subroutine, including parameters, temporaries, return addresses, register save areas, etc. This can be accomplished with a dynamic storage allocation technique.

Recursive invocation of a procedure using static storage allocation

The dynamic storage allocation technique:

o Each procedure call creates an activation record that contains storage for all the variables used by the procedure.

o Activation records are typically allocated on a stack.
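The idea of one activation record per call can be modeled with an explicit stack. In this sketch the record layout (a dictionary holding the parameter n and a link to the caller's record) is an assumption for illustration; a real compiler lays records out in memory and manages them with generated entry and exit code.

```python
def factorial(n, stack, trace):
    """Compute n! while keeping one activation record per call on an explicit stack."""
    # On entry: create a record for this call and link it to the caller's record
    # (index -1 means there is no caller).
    record = {"n": n, "caller": len(stack) - 1}
    stack.append(record)
    trace.append(len(stack))          # stack depth at entry, recorded for inspection
    result = 1 if n <= 1 else n * factorial(n - 1, stack, trace)
    # On exit: delete the record so that the caller's record is on top again.
    stack.pop()
    return result


stack, trace = [], []
value = factorial(4, stack, trace)
print(value, trace, stack)
```

Because every invocation gets its own record, the value of n in an outer call is not overwritten by the inner calls, which is exactly what static allocation cannot guarantee.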


Recursive invocation of a procedure using automatic storage allocation


The compiler must generate additional code to manage the activation records themselves.

Prologue

o At the beginning of each procedure there must be code to create a new activation record, linking it to the previous one and setting the appropriate pointers.

Epilogue

o At the end of the procedure, there must be code to delete the current activation record, resetting pointers as needed.

The techniques needed to implement dynamic storage allocation depend on how the space is deallocated, i.e., implicitly or explicitly.


Explicit allocation of fixed-size blocks

Explicit allocation of variable-size blocks

Implicit deallocation

Explicit allocation of fixed-size blocks

This is the simplest form of dynamic storage allocation.

The blocks are linked together in a list, and allocation and deallocation can be done quickly with little or no storage overhead.

A pointer, available, points to the first block in the list of available blocks.
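A minimal sketch of such a pool follows. Blocks are identified by index, and the class name FixedBlockPool and the next_free table are assumptions for this example; in a real allocator the link to the next free block is usually stored inside the free block itself, which is why the scheme needs almost no extra storage.

```python
class FixedBlockPool:
    """Pool of equal-sized blocks; free blocks are chained into a linked list."""

    def __init__(self, block_count):
        # Assumes block_count >= 1. next_free[i] is the free block after block i,
        # or None at the end of the list.
        self.next_free = list(range(1, block_count)) + [None]
        self.available = 0            # points to the first block in the free list

    def allocate(self):
        if self.available is None:
            raise MemoryError("no free block")
        block = self.available        # take the first available block
        self.available = self.next_free[block]
        return block

    def free(self, block):
        self.next_free[block] = self.available   # put the block at the head of the list
        self.available = block


pool = FixedBlockPool(3)
first = pool.allocate()
second = pool.allocate()
pool.free(first)
print(first, second, pool.allocate())
```

Both operations touch only the head of the list, so each takes constant time.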

Explicit allocation of variable-size blocks

When blocks are allocated and deallocated, storage can become fragmented, i.e., the heap may consist of alternating blocks that are free and in use.

With variable-size allocation this is a problem, because a block larger than any single free block cannot be allocated even though enough total space is available.

The first-fit method can be used to allocate variable-size blocks.

When a block of size s is requested, the allocator searches for the first free block of size f >= s. This block is then subdivided into a used block of size s and a free block of size f - s. The search is time consuming.

When a block is deallocated, the allocator checks whether it is next to a free block. If possible, the deallocated block is combined with the free block next to it to create a larger free block. This helps to avoid fragmentation.
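The first-fit search, the split into s and f - s, and the merging of neighbouring free blocks can be sketched as below. Representing the heap as a sorted list of (start, size) pairs and passing the size to free are assumptions that keep the example short; a real allocator stores this bookkeeping in the heap itself.

```python
class FirstFitHeap:
    """Heap modeled as a list of free (start, size) blocks kept in address order."""

    def __init__(self, size):
        self.free_blocks = [(0, size)]

    def allocate(self, s):
        for index, (start, f) in enumerate(self.free_blocks):
            if f >= s:                          # first free block that is large enough
                if f == s:
                    del self.free_blocks[index]
                else:                           # split: used block of size s, free block of size f - s
                    self.free_blocks[index] = (start + s, f - s)
                return start
        raise MemoryError(f"no free block of size {s}")

    def free(self, start, s):
        self.free_blocks.append((start, s))
        self.free_blocks.sort()
        merged = [self.free_blocks[0]]
        for block_start, block_size in self.free_blocks[1:]:
            last_start, last_size = merged[-1]
            if last_start + last_size == block_start:    # adjacent: combine into one block
                merged[-1] = (last_start, last_size + block_size)
            else:
                merged.append((block_start, block_size))
        self.free_blocks = merged


heap = FirstFitHeap(100)
a = heap.allocate(30)
b = heap.allocate(30)
c = heap.allocate(40)
heap.free(a, 30)
heap.free(c, 40)
print(heap.free_blocks)   # two separate free blocks: the heap is fragmented
heap.free(b, 30)
print(heap.free_blocks)   # the neighbours are merged back into one block
```

After a and c are freed there are 70 free units in total, yet a request for 50 would fail until b is also freed and the three blocks are combined.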


Implicit deallocation -

Implicit deallocation requires cooperation between the user program and the run-time package. This is implemented by fixing the format of storage blocks.

The first problem is to recognize the block boundaries; for fixed-size blocks this is easy. For variable-size blocks, the size of the block is kept in inaccessible storage attached to the block.

The second problem is to recognize whether a block is in use. A used block can be referred to by the user program through pointers. The pointers are kept in a fixed position in the block so that references are easy to check.

Two approaches can be used for implicit deallocation:

Reference counts

Marking techniques


Reference counts -

We keep track of the number of references to each block. If the count ever drops to 0, the block is deallocated.

Maintaining reference counts can be costly in time (the pointer assignment p := q leads to changes in the reference counts of the blocks pointed to by both p and q).

Reference counts work best when no cyclic references occur.
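The bookkeeping behind a pointer assignment can be sketched as follows. The classes Block and Heap and the method assign are hypothetical names for this example; the point is only that one assignment updates two counts and may free a block.

```python
class Block:
    def __init__(self, name):
        self.name = name
        self.ref_count = 0            # number of pointers currently referring to this block


class Heap:
    def __init__(self):
        self.pointers = {}            # pointer name -> Block (or None)
        self.freed = []               # names of blocks that have been deallocated

    def assign(self, pointer, block):
        """Model the assignment  pointer := block  (block may be None)."""
        old = self.pointers.get(pointer)
        if block is not None:
            block.ref_count += 1      # increment first so that p := p stays safe
        if old is not None:
            old.ref_count -= 1
            if old.ref_count == 0:    # the last reference is gone: deallocate
                self.freed.append(old.name)
        self.pointers[pointer] = block


heap = Heap()
a, b = Block("A"), Block("B")
heap.assign("p", a)
heap.assign("q", b)
heap.assign("p", heap.pointers["q"])   # p := q
print(a.ref_count, b.ref_count, heap.freed)
```

Two blocks that point to each other keep both counts above zero even when no program pointer reaches them, which is the cyclic case this scheme cannot reclaim.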

Marking techniques

Here the user program is suspended temporarily and the frozen pointers are used to determine which blocks are in use. This approach requires all the pointers into the heap to be known. (Conceptually, it is like pouring paint into the heap through the pointers.)

First we go through the heap and mark all the blocks unused. Then we follow the pointers and mark all the reachable blocks as used. Finally, a sequential scan of the heap collects all the blocks still marked unused.
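The three steps above translate almost directly into code. In this sketch the heap is assumed to be a dictionary mapping each block name to the list of blocks it points to, and roots stands for the pointers held by the suspended user program; both representations are assumptions for the example.

```python
def mark_and_sweep(heap, roots):
    """Return the names of the blocks that are not reachable from the roots."""
    marked = {name: False for name in heap}      # step 1: mark every block unused
    pending = list(roots)
    while pending:                               # step 2: follow the pointers
        name = pending.pop()
        if not marked[name]:
            marked[name] = True                  # reachable, so it is in use
            pending.extend(heap[name])
    # Step 3: a sequential scan collects the blocks still marked unused.
    return sorted(name for name in heap if not marked[name])


# A points to B; C and D point to each other but nothing in the program points to them.
heap = {"A": ["B"], "B": [], "C": ["D"], "D": ["C"]}
print(mark_and_sweep(heap, roots=["A"]))
```

The cycle between C and D is collected here, whereas reference counts alone would keep both blocks alive.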

DYNAMIC PROGRAMMING CODE-GENERATION :

The dynamic programming algorithm can be used to generate code for any machine with r interchangeable registers and load, store, and add instructions.

The dynamic programming algorithm partitions the problem of generating optimal code for an expression into the sub-problems of generating optimal code for the subexpressions of the given expression.

In the following, T1 and T2 are the subtrees of the root of the expression tree.

Contiguous evaluation:

Complete the evaluation of T1, then of T2, then evaluate the root.

Noncontiguous evaluation:

First evaluate part of T1, leaving the value in a register; next evaluate T2; then return to evaluate the rest of T1.

The dynamic programming algorithm uses contiguous evaluation.

The dynamic programming algorithm proceeds in three phases (suppose the target machine has r registers):

1. Compute bottom-up, for each node n of the expression tree T, an array C of costs, in which the ith component C[i] is the optimal cost of computing the subtree S rooted at n into a register, assuming i registers are available for the computation, for 1 <= i <= r.

2. Traverse T, using the cost vectors to determine which subtrees of T must be computed into memory.

3. Traverse each tree using the cost vectors and associated instructions to generate the final target code. The code for the subtrees computed into memory locations is generated first.
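Phase 1 can be sketched under an explicit machine model. The model below is an assumption, not something stated in these notes: every instruction costs 1, an operator takes its left operand from a register and its right operand from either a register or memory, leaves start out in memory, and C[0] is added to hold the cost of computing a subtree into memory. Phases 2 and 3 are not shown.

```python
def cost_vector(node, r):
    """Return [C[0], C[1], ..., C[r]] for an expression tree node.

    C[0] is the cost of computing the subtree into memory; C[i] is the cost of
    computing it into a register when i registers are available.
    """
    if isinstance(node, str):                 # leaf: the operand is already in memory
        return [0] + [1] * r                  # one load brings it into a register
    _, left, right = node
    c1, c2 = cost_vector(left, r), cost_vector(right, r)
    costs = [0] * (r + 1)
    for i in range(1, r + 1):
        options = [c1[i] + c2[0] + 1]         # right operand taken from memory
        if i >= 2:
            options.append(c1[i] + c2[i - 1] + 1)   # left first, then right with i - 1 registers
            options.append(c2[i] + c1[i - 1] + 1)   # right first, then left with i - 1 registers
        costs[i] = min(options)
    costs[0] = costs[r] + 1                   # compute into a register, then store
    return costs


# (a - b) + c * (d / e) on a machine with r = 2 registers
tree = ("+", ("-", "a", "b"), ("*", "c", ("/", "d", "e")))
print(cost_vector(tree, 2))
```

The vector is built bottom-up because each node's entries are computed only from the finished vectors of its two children, which is the contiguous-evaluation assumption in action.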


PRINCIPAL SOURCES OF OPTIMIZATION :

Elimination of unnecessary instructions

Replacement of one sequence of instructions by a faster sequence of instructions

Local optimization

Global optimizations, based on data flow analyses

There are a number of ways in which a compiler can improve a program without changing the function it computes:

Common-subexpression elimination

Copy propagation

Dead-code elimination

Constant folding

Common-subexpression elimination :-

An occurrence of an expression E is a common subexpression if E was previously computed and the values of the variables in E have not changed since.
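A local version of this rule, applied within one straight-line block of instructions, can be sketched as follows. The instruction format (dst, left, op, right), with op set to None for a plain copy, is an assumption for this example.

```python
def eliminate_common_subexpressions(block):
    """Rewrite repeated computations in a straight-line block as copies."""
    available = {}     # (left, op, right) -> variable that currently holds that value
    result = []
    for dst, left, op, right in block:
        key = (left, op, right)
        if op is not None and key in available:
            result.append((dst, available[key], None, None))   # reuse the earlier value
        else:
            result.append((dst, left, op, right))
        # Assigning dst changes its value, so forget every expression that reads dst
        # and every expression whose value was held in dst.
        available = {k: v for k, v in available.items()
                     if dst not in (k[0], k[2]) and v != dst}
        if op is not None and dst not in (left, right):
            available.setdefault(key, dst)
    return result


block = [
    ("t1", "a", "+", "b"),
    ("t2", "a", "+", "b"),    # same expression, a and b unchanged: becomes t2 := t1
    ("a", "t2", None, None),  # a changes here
    ("t3", "a", "+", "b"),    # must be recomputed, because a has changed since
]
for instruction in eliminate_common_subexpressions(block):
    print(instruction)
```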

Copy propagation :-


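Dead-code elimination :-

Remove unreachable code. For example, in

If (debug) print

the variable debug is often set by debug := false, so the print statement can never be reached and can be removed.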

Constant folding :-
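Constant folding means evaluating at compile time those subexpressions whose operands are all known constants. The sketch below folds a nested-tuple expression and, as a companion, drops the body of an if whose condition is known to be false (the debug case from dead-code elimination). The tree format and both function names are assumptions for this example.

```python
import operator

FOLDABLE = {"+": operator.add, "-": operator.sub, "*": operator.mul}


def fold(node):
    """Fold the constant subexpressions of a nested-tuple expression."""
    if not isinstance(node, tuple):
        return node                              # a constant or a variable name
    op, left, right = node
    left, right = fold(left), fold(right)
    if isinstance(left, int) and isinstance(right, int):
        return FOLDABLE[op](left, right)         # both operands known: compute now
    return (op, left, right)


def remove_dead_branch(condition, body):
    """Drop the body of 'if condition: body' when the condition is the constant False."""
    return [] if condition is False else body


print(fold(("+", "x", ("*", 2, ("+", 3, 4)))))   # x + 2 * (3 + 4)
print(remove_dead_branch(False, ["print"]))      # debug := false
```

The variable x is left alone because its value is not known until run time; only the constant part of the expression is replaced.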


APPROACHES TO COMPILER DEVELOPMENT :

There are several approaches to compiler development. Here we will look at some of them.

1.3.1 Assembly Language Coding

Early compilers were mostly coded in assembly language. The main consideration was to increase efficiency. This approach worked very well for small high-level languages (HLLs). As languages and their compilers became larger, many bugs started surfacing which were difficult to remove. The major difficulty with assembly language implementation was poor software maintainability.

Around this time, it was realized that coding compilers in a high-level language would overcome this disadvantage. Many compilers were therefore coded in FORTRAN, the only widely available HLL at that time. For example, the FORTRAN H compiler for the IBM/360 was coded in FORTRAN. Later, many system programming languages were developed to ensure the efficiency of compilers written in an HLL.

Assembly language is still being used, but the trend is towards compiler implementation in an HLL.

1.3.2 Cross-Compiler

A cross-compiler is a compiler which runs on one machine and generates code for another

machine. The only difference between a cross-compiler and a normal compiler is in terms of code

generated by it. For example, consider the problem of implementing a Pascal compiler on a new

piece of hardware (a computer called X) on which assembly language is the only programming

language already available. Under these circumstances, the obvious approach is to write the

Pascal compiler in assembler. Hence, the compiler in this case is a program that takes Pascal

source as input, produces machine code for the target machine as output and is written in the

assembly language of the target machine. The languages characterizing this compiler can be

represented as:

showing that Pascal source is translated by a program written in X assembly language (the

compiler) running on machine X into X's object code. This code can then be run on the target

machine. This notation is essentially equivalent to the T-diagram. The T-diagram for this compiler

is shown in figure 2.

Fig. 2 T-diagram

The language accepted as input by the compiler is stated on the left, the language output by the

compiler is shown on the right, and the language in which the compiler is written is shown at the

bottom. The advantage of this particular notation is that several T-diagrams can be meshed

together to represent more complex compiler implementation methods. This compiler

implementation involves a great deal of work since a large assembly language program has to be

written for X. It is to be noticed in this case that the compiler is very machine specific; that is, not

only does it run on X but it also produces machine code suitable for running on X.

Furthermore, only one computer is involved in the entire implementation process.

The use of a high-level language for coding the compiler can offer great savings in implementation

effort. If the language in which the compiler is being written is already available on the computer

in use, then the process is simple. For example, Pascal might already be available on machine

X, thus permitting the coding of, say, a Modula-2 compiler in Pascal.

Such a compiler can be represented as:

If the language in which the compiler is being written is not available on the machine, then all is

not lost, since it may be possible to make use of an implementation of that language on another

machine. For example, a Modula-2 compiler could be implemented in Pascal on machine Y,

producing object code for machine X:

The object code for X generated on machine Y would, of course, have to be transferred to X for

its execution. This process of generating code on one machine for execution on another is called

cross-compilation.
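
The way T-diagrams mesh together can be modelled with a few lines of C. This is a minimal sketch under assumptions: the type `tdiagram`, the functions `compile_compiler` and `is_cross_compiler`, and the strings "X code" and "Y code" are names invented here, not notation from the text.

```c
#include <assert.h>
#include <string.h>

/* A T-diagram: translates `source` into `target`, and the translator
   itself is expressed in `written_in`. All names are illustrative. */
struct tdiagram {
    const char *source;
    const char *target;
    const char *written_in;
};

/* Feed compiler `c` (as a program) through compiler `host`.
   This only makes sense when `host` accepts the language `c` is
   written in. The result translates the same languages as `c`, but is
   now expressed in the output language of `host`. */
struct tdiagram compile_compiler(struct tdiagram c, struct tdiagram host)
{
    assert(strcmp(c.written_in, host.source) == 0);
    struct tdiagram result = { c.source, c.target, host.target };
    return result;
}

/* For a compiler already in executable form: it is a cross-compiler
   when the code it emits differs from the code it runs as. */
int is_cross_compiler(struct tdiagram c)
{
    return strcmp(c.target, c.written_in) != 0;
}
```

Taking the Modula-2 compiler `{ "Modula-2", "X code", "Pascal" }` and a Pascal compiler on machine Y, `{ "Pascal", "Y code", "Y code" }`, `compile_compiler` yields `{ "Modula-2", "X code", "Y code" }`: a compiler that runs on Y but emits code for X, which is the cross-compiler described above.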

At first sight, the introduction of a second computer to the compiler implementation plan seems to

offer a somewhat inconvenient solution. Each time a compilation is required, it has to be done on

machine Y and the object code transferred, perhaps via a slow or laborious mechanism, to

machine X for execution. Furthermore, both computers have to be running and inter-linked

somehow, for this approach to work. But the significance of the cross-compilation approach can

be seen in the next section.

REGISTER ALLOCATION TECHNIQUES :

In compiler optimization, register allocation is the process of assigning a large number of target

program variables onto a small number of CPU registers. Register allocation can happen over a

basic block (local register allocation), over a whole function/procedure (global register allocation),

or across function boundaries traversed via call-graph (interprocedural register allocation). When

done per function/procedure the calling convention may require insertion of save/restore around

each call-site.

In many programming languages, the programmer has the illusion of allocating arbitrarily many

variables. However, during compilation, the compiler must decide how to allocate these variables

to a small, finite set of registers. Not all variables are in use (or "live") at the same time, so some

registers may be assigned to more than one variable. However, two variables in use at the same

time cannot be assigned to the same register without corrupting its value. Variables which cannot

be assigned to some register must be kept in RAM and loaded in/out for every read/write, a

process called spilling. Accessing RAM is significantly slower than accessing registers and slows

down the execution speed of the compiled program, so an optimizing compiler aims to assign as

many variables to registers as possible. Register pressure is the term used when there are fewer

hardware registers available than would have been optimal; higher pressure usually means that

more spills and reloads are needed.

In addition, programs can be further optimized by assigning the same register to a source and

destination of a move instruction whenever possible. This is especially important if the compiler

is using other techniques such as static single assignment (SSA) form, which artificially generates

additional move instructions in the intermediate code.

Instruction scheduling and register allocation are each hard to solve individually, let alone

globally as a combined optimization.

So each optimization is solved on its own, and the two stages are then patched up heuristically.

Storing and accessing variables from registers is much faster than accessing data

from memory.

This is also the way operations are performed in load/store (RISC) processors: arithmetic

instructions work on registers, and memory is reached only through loads and stores.

Therefore, in the interests of performance, if not by necessity, variables ought to

be stored in registers.

For performance reasons, it is useful to keep variables in registers as long as possible, once

they are loaded.

Registers are bounded in number (say, 32).

Therefore, registers must be shared among variables over time.

Register allocation is the process of determining which values should be placed into which

registers and at what times during the execution of the program. Note that register allocation is

not concerned specifically with variables, only values: distinct uses of the same variable can be

assigned to different registers without affecting the logic of the program.

There have been a number of techniques developed to perform register allocation at a variety of

different levels: local register allocation refers to allocation within a very small piece of code,

typically a basic block; global register allocation assigns registers within an entire function; and

interprocedural register allocation works across function calls and module boundaries. The first

two techniques are commonplace, with global register allocation implemented in virtually every

production compiler, while the last, interprocedural register allocation, is rarely performed by

today's mainstream compilers.

Interference Graphs -

Register allocation shows close correspondence to the mathematical problem of graph coloring.

The recognition of this correspondence is originally due to John Cocke, who first proposed this

approach as far back as 1971. Almost a decade later, it was first implemented by G. J. Chaitin

and his colleagues in the PL.8 compiler for IBM's 801 RISC prototype. The fundamental approach

is described in their famous 1981 research paper [ChaitinEtc1981]. Today, almost every

production compiler uses a graph coloring global register allocator.

When formulated as a coloring problem, each node in the graph represents the live range of a

particular value. A live range is defined as a write to a register followed by all the uses of that

register until the next write. An edge between two nodes indicates that those two live ranges

interfere with each other because their lifetimes overlap. In other words, they are both

simultaneously active at some point in time, so they must be assigned to different registers. The

resulting graph is thus called an interference graph.
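
Building an interference graph can be sketched in C as below. This is a simplified illustration, not the allocator of any particular compiler: it assumes each live range is one contiguous, half-open interval of instruction positions (real live ranges may have holes), and the names `live_range`, `interferes`, `build_interference` and `MAX_RANGES` are invented for the sketch.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_RANGES 16

/* Simplifying assumption: each live range is one contiguous, half-open
   interval [start, end) of instruction positions. */
struct live_range {
    int start;
    int end;
};

/* Two ranges interfere when they overlap at some position. */
bool interferes(struct live_range a, struct live_range b)
{
    return a.start < b.end && b.start < a.end;
}

/* Fill the symmetric adjacency matrix of the interference graph:
   adj[i][j] is true when ranges i and j need different registers. */
void build_interference(const struct live_range *r, int n,
                        bool adj[MAX_RANGES][MAX_RANGES])
{
    assert(n >= 0 && n <= MAX_RANGES);
    for (int i = 0; i < n; i++) {
        adj[i][i] = false;
        for (int j = i + 1; j < n; j++)
            adj[i][j] = adj[j][i] = interferes(r[i], r[j]);
    }
}
```

For the ranges [0,4), [2,6) and [4,8), the first two and the last two interfere, while the first and the third do not, so they could share a register.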

Figure 1 Register allocation by graph coloring.

Graph Coloring-

This section describes the most widely used variant of these coloring heuristics: the

optimistic coloring method first proposed by Preston Briggs and his associates at Rice University,

which is based on the general structure of the original IBM "Yorktown" allocator described in

Chaitin's original research papers. The optimistic "Chaitin/Briggs" graph coloring algorithm is

clearly the technique most widely used by production compilers, and arguably the most effective.
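
The simplify and select phases of such an allocator can be sketched in C as follows. This is a teaching sketch in the spirit of optimistic coloring, not the Chaitin or Briggs allocator itself: it has no coalescing and no rebuild loop, and choosing the highest-degree node as the spill candidate is an assumption made here (real allocators use spill-cost estimates). The names `color_graph` and `MAX_NODES` are invented.

```c
#include <assert.h>
#include <stdbool.h>

#define MAX_NODES 16

/* Colors the interference graph `adj` (n nodes) with at most k registers.
   color[v] receives 0..k-1, or -1 when v would have to be spilled.
   Returns the number of spilled nodes. */
int color_graph(int n, bool adj[MAX_NODES][MAX_NODES], int k, int color[])
{
    assert(n >= 0 && n <= MAX_NODES && k >= 1 && k <= MAX_NODES);
    bool removed[MAX_NODES] = { false };
    int stack[MAX_NODES];
    int top = 0;

    /* Simplify: peel nodes off the graph one at a time. A node with
       fewer than k remaining neighbours is always colorable later.
       If no such node exists, push the highest-degree node anyway and
       hope a color is left for it (the optimistic step). */
    while (top < n) {
        int pick = -1, pick_degree = -1;
        bool found_easy = false;
        for (int v = 0; v < n && !found_easy; v++) {
            if (removed[v])
                continue;
            int degree = 0;
            for (int u = 0; u < n; u++)
                if (u != v && !removed[u] && adj[v][u])
                    degree++;
            if (degree < k) {
                pick = v;
                found_easy = true;
            } else if (degree > pick_degree) {
                pick = v;
                pick_degree = degree;
            }
        }
        removed[pick] = true;
        stack[top++] = pick;
    }

    /* Select: pop nodes in reverse order and give each the lowest
       color not used by an already-colored neighbour. */
    int spills = 0;
    for (int v = 0; v < n; v++)
        color[v] = -1;
    while (top > 0) {
        int v = stack[--top];
        bool used[MAX_NODES] = { false };
        for (int u = 0; u < n; u++)
            if (u != v && adj[v][u] && color[u] >= 0)
                used[color[u]] = true;
        for (int c = 0; c < k; c++) {
            if (!used[c]) {
                color[v] = c;
                break;
            }
        }
        if (color[v] < 0)
            spills++;
    }
    return spills;
}
```

A four-node cycle with k = 2 shows why optimism helps: every node has two neighbours, so no node is trivially removable, yet the graph is 2-colorable and the sketch finds a coloring without spilling. A triangle with k = 2 genuinely needs one spill.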

Figure 2 The graph coloring process.

Figure 3 A non-contiguous live range.

Figure 4 An example of the graph coloring process.

Spilling

If spill code is necessary, the spilling can be done in many different ways. One approach is to

simply spill the value everywhere and insert loads and stores around every use. There are some

advantages to spilling a value for its entire lifetime: it is straightforward to implement and tends

to reduce the number of coloring iterations required before a solution is found. Unfortunately,

there are also major disadvantages to this simple approach. Spilling a node everywhere does not

quite correspond to completely removing it from the graph, but rather to splitting it into several

small live ranges around its uses. Not all of these small live ranges may be causing the problem;

it might only have been necessary to spill the value for part of its lifetime.

Figure 5 Tiles used in hierarchical graph coloring.

Clique Separators -

Since graph coloring is a relatively slow optimization, anything that can be done to make it faster

is worth investigation. The chief determining factor is, of course, the size of the interference graph.

The asymptotic efficiency of graph coloring is somewhat worse than linear in the size of the

interference graph (in practice, graph coloring register allocation is something like O(n log n)),

so coloring two smaller graphs is faster than coloring a single large graph. The bulk of the running

time is actually the build-coalesce loop, not the coloring phases; however, this too is dependent

on the size of the graph (and non-linear).

Figure 6 Faster register allocation using clique separators.

Linear-Scan Allocation -

As a special case, local register allocation within basic blocks can be considerably accelerated

by taking advantage of the structure of the particular interference graphs involved. For straight-

line code sequences, such as basic blocks or software-pipelined loops, the interference graphs

are always interval graphs, the type of graph formed by the interference of segments along a

line. It is known that such graphs can be easily colored, optimally, in linear time. In practice, an

interference graph need not even be constructed; all that is required is a simple scan through

the code. This approach can even be extended to the global case, by using approximate live

ranges which are the easy-to-identify linear supersets of the actual segmented live ranges.
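
The scan described above can be sketched in C for the simple case where every live range is a single interval. The sketch assumes the intervals are already sorted by start position and that there are enough registers, so it shows only the coloring, not spilling; the names `interval`, `linear_scan` and `MAX_INTERVALS` are invented here. The inner search over registers keeps the code short; it is not the strictly linear-time formulation.

```c
#include <assert.h>

#define MAX_INTERVALS 16

/* Half-open live interval [start, end) over instruction positions. */
struct interval {
    int start;
    int end;
};

/* Assigns a register number reg[i] to each interval without ever
   building an interference graph. Intervals must be sorted by start.
   Returns the number of distinct registers used, which equals the
   largest number of intervals live at the same position. */
int linear_scan(const struct interval *iv, int n, int reg[])
{
    assert(n >= 0 && n <= MAX_INTERVALS);
    int busy_until[MAX_INTERVALS]; /* end of the interval held by each register */
    int used = 0;
    for (int i = 0; i < n; i++) {
        assert(i == 0 || iv[i - 1].start <= iv[i].start);
        int r = 0;
        /* Reuse the lowest-numbered register whose interval has expired. */
        while (r < used && busy_until[r] > iv[i].start)
            r++;
        if (r == used)
            used++; /* nothing free: take a fresh register */
        busy_until[r] = iv[i].end;
        reg[i] = r;
    }
    return used;
}
```

For the intervals [0,4), [2,6), [4,8) and [5,9), the scan assigns registers 0, 1, 0 and 2: the third interval reuses register 0 because the first has ended, and three registers are needed because three intervals are live at position 5.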

VECTORISATION OF PROGRAM :

Automatic vectorisation, in parallel computing, is a special case of automatic parallelization,

where a computer program is converted from a scalar implementation, which processes a single

pair of operands at a time, to a vector implementation which processes one operation on multiple

pairs of operands at once. As an example, modern conventional computers (as well as

specialized supercomputers) typically have vector operations that perform, e.g., four additions

all at once. However, in most programming languages, one typically writes loops that perform

additions on many numbers, e.g. (example in C):

for (i=0; i<n; i++)
    c[i] = a[i] + b[i];

The goal of a vectorizing compiler is to transform such a loop into a sequence of vector

operations that perform additions on length-four (in our example) blocks of elements from the

arrays a, b and c. Automatic vectorization is a major research topic in computer science.
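
The transformation can be pictured in plain C, without any vector instructions, as a loop that handles four elements per iteration. This is only a sketch of the loop shape that a vectorizing compiler maps onto one 4-wide vector add; the function names `add_scalar` and `add_blocks_of_four` are invented for the illustration.

```c
#include <assert.h>
#include <stddef.h>

/* Scalar form: one addition per iteration, as in the loop above. */
void add_scalar(const int *a, const int *b, int *c, size_t n)
{
    assert(n == 0 || (a && b && c));
    for (size_t i = 0; i < n; i++)
        c[i] = a[i] + b[i];
}

/* Blocked form: each outer iteration handles a block of four elements.
   The four independent additions stand in for one vector add. A scalar
   tail loop handles the elements left over when n is not a multiple
   of four. */
void add_blocks_of_four(const int *a, const int *b, int *c, size_t n)
{
    assert(n == 0 || (a && b && c));
    size_t i = 0;
    for (; i + 4 <= n; i += 4) {
        c[i]     = a[i]     + b[i];
        c[i + 1] = a[i + 1] + b[i + 1];
        c[i + 2] = a[i + 2] + b[i + 2];
        c[i + 3] = a[i + 3] + b[i + 3];
    }
    for (; i < n; i++)
        c[i] = a[i] + b[i];
}
```

Both functions produce the same array `c` for any `n`; the second simply exposes the four-at-a-time structure.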

Early computers generally had one logic unit that sequentially executed one instruction on one

operand pair at a time. Computer programs and programming languages were accordingly

designed to execute sequentially. Modern computers can do many things at once. Many

optimizing compilers feature auto-vectorization, a compiler feature where particular parts of

sequential programs are transformed into equivalent parallel ones, to produce code which will

make good use of a vector processor. For a compiler to produce such efficient code for a programming

language intended for use on a vector-processor would be much simpler, but, as much real-world

code is sequential, the optimization is of great utility.

Loop vectorization transforms procedural loops that iterate over multiple pairs of data items,

assigning a separate processing unit to each pair. Most programs spend most of their execution

times within such loops. Vectorizing loops can lead to significant performance gains without

programmer intervention, especially on large data sets. Vectorization can sometimes instead slow

execution because of pipeline synchronization, data movement timing and other issues.

Intel's MMX, SSE and AVX, Power Architecture's AltiVec, and ARM's NEON instruction sets

support such vectorized loops.

Many constraints prevent or hinder vectorization. Loop dependence analysis identifies loops that

can be vectorized, relying on the data dependence of the instructions inside loops.

Automatic vectorization, like any loop optimization or other compile-time optimization, must exactly

preserve program behavior.

Data dependencies-

All dependencies must be respected during execution to prevent incorrect outcomes.

In general, loop invariant dependencies and lexically forward dependencies can be easily

vectorized, and lexically backward dependencies can be transformed into lexically forward. But

these transformations must be done safely, in order to ensure that the dependences between all

statements remain true to the original.

Cyclic dependencies must be processed independently of the vectorized instructions.
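
A short C sketch shows why a cyclic (loop-carried) dependence blocks naive vectorization. The running-sum loop below is an example chosen here, not one from the notes, and `prefix_sum_naive_blocks` is a deliberately incorrect model of a 4-wide vector step that loads all its operands before storing any result.

```c
#include <assert.h>
#include <stddef.h>

/* Loop-carried dependence: iteration i reads a[i - 1], which was
   written by iteration i - 1. */
void prefix_sum(int *a, size_t n)
{
    assert(n == 0 || a);
    for (size_t i = 1; i < n; i++)
        a[i] = a[i - 1] + a[i];
}

/* Deliberately WRONG model of a naive 4-wide vectorization: all four
   operand pairs are loaded before any result is stored, so elements
   2..4 of each block see stale values of their left neighbours. */
void prefix_sum_naive_blocks(int *a, size_t n)
{
    assert(n == 0 || a);
    size_t i = 1;
    for (; i + 4 <= n; i += 4) {
        int left[4], right[4];
        for (size_t k = 0; k < 4; k++) {   /* "vector loads" */
            left[k] = a[i - 1 + k];
            right[k] = a[i + k];
        }
        for (size_t k = 0; k < 4; k++)     /* "vector add and store" */
            a[i + k] = left[k] + right[k];
    }
    for (; i < n; i++)
        a[i] = a[i - 1] + a[i];
}
```

Starting from five ones, `prefix_sum` gives 1, 2, 3, 4, 5, while the naive blocked version gives 1, 2, 2, 2, 2. Dependence analysis exists to detect exactly this situation and keep such loops out of the vectorized code.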

Data precision-

Integer precision (bit-size) must be kept during vector instruction execution. The correct vector

instruction must be chosen based on the size and behavior of the internal integers. Also, with

mixed integer types, extra care must be taken to promote/demote them correctly without losing

precision. Special care must be taken with sign extension (because multiple integers are packed

inside the same register) and during shift operations, or operations with carry bits that would

otherwise be taken into account.

Floating-point precision must be kept as well, unless IEEE-754 compliance is turned off, in which

case operations will be faster but the results may vary slightly. Big variations, even ignoring

IEEE-754, usually mean programmer error. The programmer can also force constants and loop

variables to single precision (default is normally double) to execute twice as many operations per

instruction.
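
One reason results may vary is that floating-point addition is not associative, and a vectorized sum adds the elements in a different order. The C sketch below illustrates this with an input chosen to exaggerate the effect; the function names are invented, and it assumes IEEE-754 single-precision arithmetic in which `float` expressions are evaluated in `float` (as on typical x86-64 and ARM64 targets without fast-math options).

```c
#include <assert.h>
#include <stddef.h>

/* Sum left to right, in the order the source loop is written. */
float sum_in_order(const float *x, size_t n)
{
    assert(n == 0 || x);
    float s = 0.0f;
    for (size_t i = 0; i < n; i++)
        s += x[i];
    return s;
}

/* Sum with four partial accumulators, the usual shape of a vectorized
   reduction: lane k adds elements k, k + 4, k + 8, ... and the lanes
   are combined at the end. Leftover elements are added last. */
float sum_four_lanes(const float *x, size_t n)
{
    assert(n == 0 || x);
    float lane[4] = { 0.0f, 0.0f, 0.0f, 0.0f };
    size_t i = 0;
    for (; i + 4 <= n; i += 4)
        for (size_t k = 0; k < 4; k++)
            lane[k] += x[i + k];
    float s = (lane[0] + lane[1]) + (lane[2] + lane[3]);
    for (; i < n; i++)
        s += x[i];
    return s;
}
```

For the input 1e8, 1, -1e8, 1 the in-order sum is 1 and the four-lane sum is 0 under the stated assumptions, because adding 1 to 1e8 is lost to rounding in single precision. Differences in ordinary data are usually far smaller, which is why a compiler reorders such sums only when strict IEEE-754 behaviour has been relaxed.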
