Advantages of Data Warehouse

Uploaded by ramu546

www.fullinterview.com

Advantages of Data Warehouse:

A data warehouse provides a common data model for all data of interest, regardless of the data's source. This makes it easier to report on and analyze information than it would be if multiple data models were used to retrieve information such as sales invoices, order receipts, general ledger charges, etc.

Inconsistencies are identified and resolved before data is loaded into the data warehouse. This greatly simplifies reporting and analysis.

Information in the data warehouse is under the control of data warehouse users so that, even if the source system data is purged over time, the information in the warehouse can be stored safely for extended periods of time.

Because they are separate from operational systems, data warehouses provide retrieval of data without slowing down operational systems.

Data warehouses enhance the value of operational business applications, notably customer relationship management (CRM) systems.

Data warehouses facilitate decision support system applications such as trend reports (e.g., the items with the most sales in a particular area within the last two years), exception reports, and reports that show actual performance versus goals.

Disadvantages of Data Warehouse:

Data warehouses are not the optimal environment for unstructured data.

Because data must be extracted, transformed and loaded into the warehouse, there is an element of latency in data warehouse data.

Over their life, data warehouses can have high costs. Maintenance costs are high.

Data warehouses can get outdated relatively quickly. There is a cost of delivering suboptimal information to the organization.

There is often a fine line between data warehouses and operational systems. Duplicate, expensive functionality may be developed. Or, functionality may be developed in the data warehouse that, in retrospect, should have been developed in the operational systems, and vice versa.


Data warehousing and its Concepts:

What is a Data warehouse?

A data warehouse is a centrally managed and integrated database containing data from the operational sources in an organization (such as SAP, CRM and ERP systems). It may also gather manual inputs from users that determine criteria and parameters for grouping or classifying records.

The data warehouse database contains structured data for query analysis and can be accessed by users. The data warehouse can be created or updated at any time, with minimum disruption to operational systems. This is ensured by a strategy implemented in the ETL process.

A source for the data warehouse is a data extract from operational databases. The data is validated, cleansed, transformed and finally aggregated, at which point it is ready to be loaded into the data warehouse.

A data warehouse is a dedicated database that contains detailed, stable, non-volatile and consistent data that can be analyzed over time (it is time-variant).

Sometimes, where only a portion of the detailed data is required, it may be worth considering a data mart.

A data mart is generated from the data warehouse and contains data focused on a given subject and data that is frequently accessed or summarized.


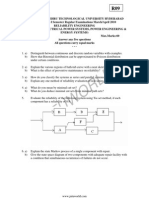

Data warehouse Architecture:


ETL Concept:

ETL is the automated and auditable data acquisition process from source systems. It involves one or more sub-processes: data extraction, data transportation, data transformation, data consolidation, data integration, data loading and data cleaning.

E - Extracting data from source operational or archive systems, which are the primary source of data for the data warehouse.

T - Transforming the data, which may involve cleaning, filtering, validating and applying business rules.

L - Loading the data into the data warehouse or any other database or application that houses the data.
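
The three steps can be sketched end to end in a few lines of Python. This is only an illustration under stated assumptions: the source rows, the `sales` table and the business rule (skip rows without an amount, store amounts as integer cents) are hypothetical and do not come from any particular ETL tool.

```python
import sqlite3

# Hypothetical source rows, standing in for an extract from an operational system.
SOURCE_ROWS = [
    {"order_id": "1", "region": " north ", "amount": "10.50"},
    {"order_id": "2", "region": "South", "amount": ""},  # missing amount
    {"order_id": "3", "region": "south", "amount": "4.25"},
]


def extract():
    """E: read rows from the source (here, an in-memory list)."""
    return list(SOURCE_ROWS)


def transform(rows):
    """T: clean, filter and apply a simple (assumed) business rule."""
    out = []
    for row in rows:
        if not row["amount"]:  # filtering: skip rows with no amount
            continue
        out.append((
            int(row["order_id"]),
            row["region"].strip().lower(),      # cleaning
            round(float(row["amount"]) * 100),  # rule: store integer cents
        ))
    return out


def load(conn, rows):
    """L: write the transformed rows into the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales "
        "(order_id INTEGER PRIMARY KEY, region TEXT, amount_cents INTEGER)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(conn, transform(extract()))
loaded = conn.execute(
    "SELECT order_id, region, amount_cents FROM sales ORDER BY order_id"
).fetchall()
```

Keeping each step as its own function makes it possible to test extraction, transformation and loading separately, which matches the testing goals discussed later.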


ETL Process:

The ETL process involves the Extraction, Transformation and Loading processes.

Extraction:

The first part of an ETL process involves extracting the data from the source systems. Most data warehousing projects consolidate data from different source systems. Each separate system may also use a different data format. Common data source formats are relational databases and flat files, but may include non-relational database structures such as Information Management System (IMS) or other data structures such as Virtual Storage Access Method (VSAM) or Indexed Sequential Access Method (ISAM), or even fetching from outside sources such as through web spidering or screen scraping. Extraction converts the data into a format for transformation processing.

An intrinsic part of the extraction involves parsing the extracted data and checking whether it meets an expected pattern or structure. If not, the data may be rejected entirely or in part.
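
A minimal sketch of this parse-and-check step, assuming a flat-file extract: the column names and the per-field patterns below are made up for the example, and a real project would take them from its own source specifications.

```python
import csv
import io
import re

# Hypothetical flat-file extract; the column layout is an assumption.
RAW = """order_id,order_date,amount
1001,2012-02-29,19.99
1002,29/02/2012,5.00
abc,2012-03-01,7.50
1004,2012-03-02,
"""

# Expected pattern per field (assumed for this example).
PATTERNS = {
    "order_id": re.compile(r"\d+"),
    "order_date": re.compile(r"\d{4}-\d{2}-\d{2}"),
    "amount": re.compile(r"\d+\.\d{2}"),
}


def parse_extract(text):
    """Split extracted rows into accepted rows and rejects with the failing fields."""
    accepted, rejected = [], []
    for row in csv.DictReader(io.StringIO(text)):
        bad = [name for name, pattern in PATTERNS.items()
               if not pattern.fullmatch(row.get(name) or "")]
        if bad:
            rejected.append((row, bad))
        else:
            accepted.append(row)
    return accepted, rejected


accepted, rejected = parse_extract(RAW)
```

Recording which fields failed, rather than only dropping the row, gives the reject log enough detail to be useful during testing.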

Transformation:

Transformation is the series of tasks that prepares the data for loading into the warehouse. Once the data is secured, you have to worry about its format or structure, because it will not be in the format needed for the target. For example, the grain level or the data type might be different. The data cannot be used as it is. Some rules and functions need to be applied to transform the data.

One of the purposes of ETL is to consolidate the data in a central repository, or to bring it to one logical or physical place. Data can be consolidated from similar systems, different subject areas, etc.

ETL must support data integration for data coming from multiple sources and data arriving at different times. This has to be a seamless operation. It avoids overwriting existing data, creating duplicate data or, even worse, being unable to load the data into the target at all.
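
As an illustration of a grain and data type change, the sketch below converts text fields to proper types and rolls daily rows up to monthly grain. The row layout and the choice of monthly grain are assumptions made for the example only.

```python
from collections import defaultdict
from datetime import date
from decimal import Decimal

# Hypothetical source rows at daily grain, with every field stored as text.
daily_rows = [
    {"sale_date": "2012-01-30", "store": "S1", "amount": "10.00"},
    {"sale_date": "2012-01-31", "store": "S1", "amount": "2.50"},
    {"sale_date": "2012-02-01", "store": "S1", "amount": "4.00"},
]


def to_monthly(rows):
    """Convert text fields to typed values and aggregate to (year, month, store)."""
    totals = defaultdict(Decimal)  # Decimal() starts each total at 0
    for row in rows:
        day = date.fromisoformat(row["sale_date"])  # text -> date
        amount = Decimal(row["amount"])             # text -> exact decimal
        totals[(day.year, day.month, row["store"])] += amount
    return dict(totals)


monthly = to_monthly(daily_rows)
```

`Decimal` is used here instead of `float` so that monetary totals are not affected by binary rounding.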

Loading:

The loading process is critical to integration and consolidation. It decides how the data is added to the warehouse, or whether it is simply rejected. Methods like adding, updating or deleting are executed at this step. What happens to the existing data? Should the old data be deleted because of new information? Or should the data be archived? Should the data be treated as additional data to the existing data?

So data has to be loaded into the data warehouse with utmost care, and only a data auditing process can establish the confidence level. This auditing process normally happens after the loading of data.
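
One possible load policy is sketched below with Python's built-in `sqlite3` module: existing keys are updated, new keys are inserted, invalid rows are rejected, and a row count is taken afterwards as a very small audit. The `customer` table and the validity rule are hypothetical; whether old data should instead be archived or kept as history is a design decision this sketch does not cover.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")
conn.execute("INSERT INTO customer VALUES (1, 'Old Name')")

# Hypothetical incoming batch: one update, one new row, one invalid row.
batch = [(1, "New Name"), (2, "Second Customer"), (3, None)]


def load_batch(conn, rows):
    """Update existing keys, insert new ones, and reject rows that fail the check."""
    stats = {"inserted": 0, "updated": 0, "rejected": 0}
    for cust_id, name in rows:
        if not name:  # simple validity check (assumed rule)
            stats["rejected"] += 1
            continue
        cur = conn.execute(
            "UPDATE customer SET name = ? WHERE id = ?", (name, cust_id)
        )
        if cur.rowcount == 0:  # no existing row was updated, so insert
            conn.execute("INSERT INTO customer VALUES (?, ?)", (cust_id, name))
            stats["inserted"] += 1
        else:
            stats["updated"] += 1
    conn.commit()
    return stats


stats = load_batch(conn, batch)
# Post-load audit: count the rows now present in the target.
row_count = conn.execute("SELECT COUNT(*) FROM customer").fetchone()[0]
```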

List of ETL tools:

Below is the list of ETL tools available in the market:

| List of ETL Tools | ETL Vendors |
| --- | --- |
| Oracle Warehouse Builder (OWB) | Oracle |
| Data Integrator & Data Services | SAP Business Objects |
| IBM Information Server (Datastage) | IBM |
| SAS Data Integration Studio | SAS Institute |
| PowerCenter | Informatica |
| Elixir Repertoire | Elixir |
| Data Migrator | Information Builders |
| SQL Server Integration Services | Microsoft |
| Talend Open Studio | Talend |
| DataFlow Manager | Pitney Bowes Business Insight |
| Data Integrator | Pervasive |
| Open Text Integration Center | Open Text |
| Transformation Manager | ETL Solutions Ltd. |
| Data Manager/Decision Stream | IBM (Cognos) |
| Clover ETL | Javlin |
| ETL4ALL | IKAN |
| DB2 Warehouse Edition | IBM |
| Pentaho Data Integration | Pentaho |
| Adeptia Integration Server | Adeptia |

ETL Testing:

Following are some common goals for testing an ETL application:

Data completeness - To ensure that all expected data is loaded.

Data quality - To ensure that the ETL application correctly rejects, substitutes default values for, corrects and reports invalid data.

Data transformation - To ensure that all data is correctly transformed according to business rules and design specifications.

Performance and scalability - To ensure that the data loads and queries perform within expected time frames and that the technical architecture is scalable.

Integration testing - To ensure that the ETL process functions well with other upstream and downstream applications.

User-acceptance testing - To ensure that the solution fulfills the users' current expectations and also anticipates their future expectations.

Regression testing - To keep the existing functionality intact each time a new release of code is completed.

Basically, data warehouse testing is divided into two categories: 'back-end testing' and 'front-end testing'. The former compares the source systems' data to the end-result data in the loaded area; this is the ETL testing. The latter is where the user checks the data by comparing their MIS with the data displayed by the end-user tools.

Data Validation:

Data completeness is one of the basic forms of data validation. It verifies that all expected data loads into the data warehouse. This includes validating that all records and all fields are loaded, and that the full contents of each field are loaded.
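
A completeness check of this kind could look like the sketch below. The dictionaries stand in for keyed rows queried from the source and the target; in practice both sides would come from database queries, and the names used here are hypothetical.

```python
def completeness_report(source, target):
    """Compare record counts, keys and field contents between source and target."""
    return {
        "source_count": len(source),
        "target_count": len(target),
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        # A field is flagged when the loaded value differs from the source value,
        # which also catches truncated contents.
        "field_mismatches": sorted(
            key for key in source.keys() & target.keys()
            if source[key] != target[key]
        ),
    }


# Hypothetical data: record 4 was not loaded and record 3 was truncated.
source = {1: "Alexander", 2: "Bob", 3: "Christina", 4: "Dave"}
target = {1: "Alexander", 2: "Bob", 3: "Christ"}
report = completeness_report(source, target)
```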

Data Transformation:

Validating that the data is transformed correctly based on business rules can be one of the most complex parts of testing an ETL application with significant transformation logic. One way of testing is to pick some sample records and compare them manually to validate the data transformation, but this method requires manual testing steps and testers who have a good amount of experience with, and understanding of, the ETL logic.
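
Part of the sample comparison can be scripted. The sketch below restates a business rule independently of the ETL code and checks a sample of source records against what was loaded. The rule (trimmed, upper-cased full name) and all row contents are hypothetical.

```python
import random


def expected_transform(src):
    """Independent restatement of the (hypothetical) business rule under test."""
    full_name = f'{src["first"].strip()} {src["last"].strip()}'.upper()
    return {"id": src["id"], "full_name": full_name}


source_rows = [
    {"id": 1, "first": "ada ", "last": "lovelace"},
    {"id": 2, "first": "alan", "last": " turing"},
    {"id": 3, "first": "grace", "last": "hopper"},
]
# Rows as found in the warehouse; id 2 kept a stray space and is wrong.
target_rows = {
    1: {"id": 1, "full_name": "ADA LOVELACE"},
    2: {"id": 2, "full_name": "ALAN  TURING"},
    3: {"id": 3, "full_name": "GRACE HOPPER"},
}


def check_sample(source_rows, target_rows, sample_size, seed=0):
    """Return the ids in a random sample whose loaded row differs from the rule."""
    rng = random.Random(seed)  # fixed seed so the same sample can be re-checked
    sample = rng.sample(source_rows, sample_size)
    return sorted(
        row["id"] for row in sample
        if target_rows.get(row["id"]) != expected_transform(row)
    )


mismatched_ids = check_sample(source_rows, target_rows, sample_size=3)
```

The tester still needs to understand the ETL logic to write `expected_transform`, but the comparison itself no longer has to be done by hand.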


Data Warehouse Testing Life Cycle:

Like any other piece of software, a DW implementation undergoes the natural cycle of unit testing, system testing, regression testing, integration testing and acceptance testing.

Unit testing: Traditionally this has been the task of the developer. This is white-box testing to ensure the module or component is coded as per the agreed-upon design specifications. The developer should focus on the following:

a) That all inbound and outbound directory structures are created properly with appropriate permissions and sufficient disk space. All tables used during the ETL are present with the necessary privileges.

b) The ETL routines give expected results:

i. All transformation logic works as designed from source to target

ii. Boundary conditions are satisfied; e.g. check for date fields with leap year dates

iii. Surrogate keys have been generated properly

iv. NULL values have been populated where expected

v. Rejects have occurred where expected and a log for rejects is created with sufficient details

vi. Error recovery methods

vii. Auditing is done properly

c) That the data loaded into the target is complete:

i. All source data that is expected to get loaded into the target actually gets loaded; compare counts between source and target and use data profiling tools

ii. All fields are loaded with full contents; i.e. no data field is truncated while transforming

iii. No duplicates are loaded

iv. Aggregations take place in the target properly

v. Data integrity constraints are properly taken care of
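
A few of these unit checks can be written as small functions. The sketch below covers surrogate keys, duplicates and the leap-year boundary condition; the row layout and field names are assumptions for the example, not part of any specific ETL tool.

```python
from datetime import date

# Hypothetical rows produced by an ETL routine under test.
loaded = [
    {"sk": 1, "natural_key": "A-100", "birth_date": "2012-02-29"},
    {"sk": 2, "natural_key": "A-101", "birth_date": "1999-12-31"},
]


def check_surrogate_keys(rows):
    """Surrogate keys must be present and unique."""
    keys = [row["sk"] for row in rows]
    return None not in keys and len(keys) == len(set(keys))


def check_no_duplicates(rows):
    """No natural key may be loaded twice."""
    naturals = [row["natural_key"] for row in rows]
    return len(naturals) == len(set(naturals))


def check_dates_parse(rows):
    """Boundary check: every date, including 29 February, must be a real date."""
    try:
        for row in rows:
            date.fromisoformat(row["birth_date"])
    except ValueError:
        return False
    return True


results = {
    "surrogate_keys": check_surrogate_keys(loaded),
    "no_duplicates": check_no_duplicates(loaded),
    "dates_parse": check_dates_parse(loaded),
}
```

For instance, `check_dates_parse` accepts 2012-02-29 but would fail for 2011-02-29, since 2011 is not a leap year.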

System testing: Generally the QA team owns this responsibility. For them the design document is the bible and the entire set of test cases is directly based upon it. Here we test the functionality of the application, and mostly it is black-box testing. The major challenge here is the preparation of test data. An intelligently designed input dataset can bring out the flaws in the application more quickly. Wherever possible use production-like data. You may also use data generation tools or customized tools of your own to create test data. We must test for all possible combinations of input and specifically check out the errors and exceptions. An unbiased approach is required to ensure maximum efficiency. Knowledge of the business process is an added advantage since we must be able to interpret the results functionally and not just code-wise.

The QA team must test for:

i. Data completeness; match source to target counts.

ii. Data aggregations; match aggregated data against staging tables.

iii. Granularity of data is as per specifications.

iv. Error logs and audit tables are generated and populated properly.

v. Notifications to IT and/or business are generated in the proper format.
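
The aggregation check in item ii can be expressed as a single query. The sketch below uses Python's built-in `sqlite3` module with two hypothetical tables, `stg_sales` (staging detail) and `agg_sales` (warehouse aggregate), and returns the groups whose totals disagree.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE stg_sales (region TEXT, amount INTEGER);
    CREATE TABLE agg_sales (region TEXT PRIMARY KEY, total INTEGER);
    INSERT INTO stg_sales VALUES ('north', 10), ('north', 5), ('south', 7);
    -- The 'south' aggregate is deliberately wrong for the example.
    INSERT INTO agg_sales VALUES ('north', 15), ('south', 8);
""")

# Regions where the warehouse aggregate is missing or differs from staging.
mismatches = conn.execute("""
    SELECT s.region, s.total AS staging_total, a.total AS warehouse_total
    FROM (SELECT region, SUM(amount) AS total
          FROM stg_sales GROUP BY region) AS s
    LEFT JOIN agg_sales AS a ON a.region = s.region
    WHERE a.total IS NULL OR a.total <> s.total
    ORDER BY s.region
""").fetchall()
```

An empty result means every staged group has a matching aggregate in the warehouse.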

Regression testing: A DW application is not a one-time solution. Possibly it is the best example of an incremental design where requirements are enhanced and refined quite often based on business needs and feedback. In such a situation it is critical to test that the existing functionality of a DW application is not broken whenever an enhancement is made to it. Generally this is done by running all functional tests for existing code whenever a new piece of code is introduced. However, a better strategy could be to preserve earlier test input data and result sets and run the same tests again. The new results can then be compared against the older ones to ensure proper functionality.
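
The preserve-and-compare strategy might be sketched as follows. `run_etl` is a stand-in for the real code under test, and the tax rule, field names and values are hypothetical.

```python
def run_etl(rows, tax_percent=10):
    """Stand-in for the ETL code under test (hypothetical logic, integer cents)."""
    return [
        {"id": row["id"],
         "gross_cents": row["net_cents"] * (100 + tax_percent) // 100}
        for row in rows
    ]


def diff_results(baseline, current):
    """Return the ids whose output differs between the preserved and the new run."""
    base = {row["id"]: row for row in baseline}
    curr = {row["id"]: row for row in current}
    return sorted(
        key for key in base.keys() | curr.keys()
        if base.get(key) != curr.get(key)
    )


# Preserved test input and the result set recorded from the earlier release.
test_input = [{"id": 1, "net_cents": 10000}, {"id": 2, "net_cents": 5000}]
baseline = [{"id": 1, "gross_cents": 11000}, {"id": 2, "gross_cents": 5500}]

unchanged = diff_results(baseline, run_etl(test_input))
# Simulate a release that accidentally changes existing behaviour.
regressed = diff_results(baseline, run_etl(test_input, tax_percent=20))
```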

Integration testing: This is done to ensure that the application developed works from an end-to-end perspective. Here we must consider the compatibility of the DW application with upstream and downstream flows. We need to ensure data integrity across the flow. Our test strategy should include testing for:

i. Sequence of jobs to be executed with job dependencies and scheduling

ii. Re-startability of jobs in case of failures

iii. Generation of error logs

iv. Cleanup scripts for the environment including database

This activity is a combined responsibility, and participation of experts from all related applications is a must in order to avoid misinterpretation of results.
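
Job sequencing and re-startability can be exercised with a small harness. The sketch below uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later) to order jobs by their dependencies; the job names and the simulated failure are hypothetical, and a real scheduler would run actual jobs instead of only recording names.

```python
from graphlib import TopologicalSorter

# Hypothetical job names; each job maps to the set of jobs it depends on.
DEPENDENCIES = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform_sales": {"extract_orders", "extract_customers"},
    "load_warehouse": {"transform_sales"},
    "cleanup": {"load_warehouse"},
}


def run_jobs(dependencies, completed=(), fail_on=None):
    """Run jobs in dependency order, skip completed ones, stop at the first failure."""
    done = list(completed)
    for job in TopologicalSorter(dependencies).static_order():
        if job in done:
            continue          # restart: do not redo finished work
        if job == fail_on:
            return done, job  # simulated failure of this job
        done.append(job)
    return done, None


# First run fails at the load step; the second run restarts from there.
first_run, failed = run_jobs(DEPENDENCIES, fail_on="load_warehouse")
second_run, failed_again = run_jobs(DEPENDENCIES, completed=first_run)
```

Passing the list of completed jobs back in is what makes the restart skip work that already succeeded.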

Acceptance testing: This is the most critical part because here the actual users validate your output datasets. They are the best judges of whether the application works as they expect. However, business users may not have proper ETL knowledge. Hence, the development and test teams should be ready to answer questions about the ETL process that relate to data population. The test team must have sufficient business knowledge to translate the results into business terms. Also, the load windows, the refresh period for the DW and the views created should be signed off by users.

Performance testing: In addition to the above tests, a DW must necessarily go through another phase called performance testing. Any DW application is designed to be scalable and robust. Therefore, when it goes into the production environment, it should not cause performance problems. Here, we must test the system with a huge volume of data. We must ensure that the load window is met even under such volumes. This phase should involve the DBA team, an ETL expert and others who can review and validate your code for optimization.

Summary:

Testing a DW application should be done with a sense of utmost responsibility. A bug in a DW traced at a later stage results in unpredictable losses. And the task is even more difficult in the absence of any single end-to-end testing tool. So the strategies for testing should be methodically developed, refined and streamlined. This is also true since the requirements of a DW are often dynamically changing. Under such circumstances repeated discussions with the development team and users are of utmost importance to the test team. Another area of concern is test coverage. This has to be reviewed multiple times to ensure completeness of testing. Always remember, a DW tester must go the extra mile to ensure near defect-free solutions.
