
Ann. Inst. Statist. Math.

Vol. 45, No. 1, 69-88 (1993)

ON THE ESTIMATION OF ENTROPY

PETER HALL¹ AND SALLY C. MORTON²

¹Centre for Mathematics and its Applications, Australian National University, G.P.O. Box 4, Canberra A.C.T. 2601, Australia, and CSIRO Division of Mathematics and Statistics

²Statistical Research and Consulting Group, The RAND Corporation, 1700 Main Street, P.O. Box 2138, Santa Monica, CA 90407-2138, U.S.A.

(Received May 7, 1991; revised April 14, 1992)

Abstract. Motivated by recent work of Joe (1989, Ann. Inst. Statist. Math., 41, 683-697), we introduce estimators of entropy and describe their properties. We study the effects of tail behaviour, distribution smoothness and dimensionality on convergence properties. In particular, we argue that root-n consistency of entropy estimation requires appropriate assumptions about each of these three features. Our estimators are different from Joe's, and may be computed without numerical integration, but it can be shown that the same interaction of tail behaviour, smoothness and dimensionality also determines the convergence rate of Joe's estimator. We study both histogram and kernel estimators of entropy, and in each case suggest empirical methods for choosing the smoothing parameter.

Key words and phrases: Convergence rates, density estimation, entropy, histogram estimator, kernel estimator, projection pursuit, root-n consistency.

1. Introduction

This paper was motivated by work of Joe (1989) on estimation of entropy. Our work has three main aims: elucidating the role played by Joe's key regularity condition (A); developing theory for a class of estimators whose construction does not involve numerical integration; and providing a concise account of the influence of dimensionality on convergence rate properties of entropy estimators. Our main results do not require Joe's (1989) condition (A), which asks that tail properties of the underlying distribution be ignorable. We show concisely how tail properties influence estimator behaviour, including convergence rates, for estimators based on both kernels and histograms. We point out that histogram estimators may be used to construct root-n consistent entropy estimators in p = 1 dimension, and that kernel estimators give root-n consistent entropy estimators in p = 1, 2 and 3 dimensions, but that neither type generally provides root-n consistent estimation beyond this range, unless (for example) the underlying distribution is compactly supported, or is particularly smooth and bias-reduction techniques are employed. Joe (1989) develops theory for a root-n consistent estimator in the case p = 4, but he makes crucial use of his condition (A). Even for p = 1, root-n consistency of our estimators, or of the estimator suggested by Joe (1989), requires certain properties of the tails of the underlying distribution. Goldstein and Messer (1991) briefly mention the problem of entropy estimation, but like Joe they work under assumption (A).

To further elucidate our results it is necessary to introduce a little notation. Let $X_1, X_2, \ldots, X_n$ denote a random sample drawn from a p-variate distribution with density $f$, and put $I = \int f\log f$, where the integral is assumed to converge absolutely. Then $-I$ denotes the entropy of the distribution determined by $f$. We consider estimation of $I$. Our estimators are motivated by the observation that $\tilde I = n^{-1}\sum_{i=1}^{n}\log f(X_i)$ is unbiased for $I$, and is root-n consistent if $\int f(\log f)^2 < \infty$. Of course, since $f$ is not known, $\tilde I$ is not a practical estimator. However, if $f$ may be estimated nonparametrically by $\hat f$, say, then

$$\hat I_1 = n^{-1}\sum_{i=1}^{n}\log \hat f(X_i) \tag{1.1}$$

might be an appropriate alternative to $\tilde I$.
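As a minimal numerical check of the unbiasedness remark, the sketch below evaluates the oracle average $\tilde I$ for a standard normal sample, where $f$ is known, and compares it with $I = -\tfrac12\log(2\pi e) \approx -1.419$. The sample size, seed and helper names are illustrative assumptions, and NumPy is assumed to be available.

```python
import numpy as np

def oracle_I(x, log_f):
    """Oracle estimator I~ = n^{-1} sum_i log f(X_i); requires the true log-density."""
    return float(np.mean(log_f(x)))

def log_std_normal(x):
    """Log of the N(0, 1) density."""
    return -0.5 * x**2 - 0.5 * np.log(2.0 * np.pi)

rng = np.random.default_rng(0)
x = rng.standard_normal(20_000)
I_true = -0.5 * np.log(2.0 * np.pi * np.e)   # I = int f log f for N(0, 1)
I_tilde = oracle_I(x, log_std_normal)
print(f"I = {I_true:.4f}, I~ = {I_tilde:.4f}")
```

Since $\operatorname{Var}\{\log f(X)\} = 1/2$ for the standard normal, the standard error of $\tilde I$ here is about $(0.5/20000)^{1/2} \approx 0.005$.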

Since $\hat f$ would typically depend on each $X_i$, the expected value of $\hat I_1$ might differ significantly from $\int f\,E(\log \hat f)$. This observation motivates an alternative estimator,

$$\hat I_2 = n^{-1}\sum_{i=1}^{n}\log \hat f_i(X_i), \tag{1.2}$$

where $\hat f_i$ has the same form as $\hat f$ except that it is computed for the $(n-1)$-sample which excludes $X_i$. We develop a version of $\hat I_1$ when $\hat f$ is a histogram estimator, and a version of $\hat I_2$ when $\hat f$ is a kernel estimator. A version of $\hat I_1$ for kernel estimators is also discussed. In both cases we prove that, under appropriate regularity conditions, $\hat I = \tilde I + o_p(n^{-1/2})$. Then, a central limit theorem and other properties of $\hat I$ follow immediately from their counterparts for $\tilde I$.
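A minimal sketch of the leave-one-out estimator (1.2) for p = 1, assuming a Gaussian kernel and a hand-picked bandwidth; the kernel constructions and bandwidth rules actually studied are those of Section 3, and are not reproduced here. Working on the log scale with SciPy's `logsumexp` avoids underflow when $\hat f_i(X_i)$ is tiny.

```python
import numpy as np
from scipy.special import logsumexp

def loo_kernel_entropy(x, h):
    """I^_2 = n^{-1} sum_i log f^_i(X_i), Gaussian kernel, leave-one-out, p = 1."""
    x = np.asarray(x, dtype=float)
    n = x.size
    u = (x[:, None] - x[None, :]) / h                 # pairwise scaled differences
    log_k = -0.5 * u**2 - 0.5 * np.log(2.0 * np.pi)   # log Gaussian kernel values
    np.fill_diagonal(log_k, -np.inf)                  # drop j == i (leave one out)
    log_f_i = logsumexp(log_k, axis=1) - np.log((n - 1) * h)
    return float(np.mean(log_f_i))

rng = np.random.default_rng(1)
x = rng.standard_normal(1_500)
I_hat_2 = loo_kernel_entropy(x, h=0.3)
print(f"I^_2 = {I_hat_2:.4f}  (true I = {-0.5 * np.log(2 * np.pi * np.e):.4f})")
```

The $n \times n$ matrix keeps the sketch short; for large samples one would loop over blocks of rows instead.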

The estimator $\hat I_2$ is sensitive to outliers, since the density estimator can be very close to zero when evaluated at outlying data values. This is one way of viewing the effects noted by Hall (1987), where it is shown that the adverse effects of tail behaviour, or equivalently of outliers, may be alleviated by using a kernel with heavier tails. Depending on their extent, outliers can be problematic when using entropy estimators in exploratory projection pursuit.
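The sketch below illustrates the outlier effect on a single leave-one-out term $\log \hat f_i(X_i)$. It compares a Gaussian kernel with a Cauchy kernel, which is used here only as a convenient heavy-tailed example (an assumption, not necessarily the kernel considered by Hall (1987)); the sample, the outlier at 15 and the bandwidth 0.4 are also illustrative.

```python
import numpy as np
from scipy.special import logsumexp

def loo_log_density_at(i, x, h, log_kernel):
    """log f^_i(X_i): leave-one-out kernel density estimate evaluated at X_i."""
    others = np.delete(x, i)
    log_k = log_kernel((x[i] - others) / h)
    return float(logsumexp(log_k) - np.log(others.size * h))

def log_gauss(u):
    return -0.5 * u**2 - 0.5 * np.log(2.0 * np.pi)

def log_cauchy(u):
    # polynomially decaying tails: K(u) = 1 / {pi (1 + u^2)}
    return -np.log(np.pi * (1.0 + u**2))

rng = np.random.default_rng(2)
x = np.append(rng.standard_normal(200), 15.0)   # one gross outlier at the last index
i_out = x.size - 1
lg = loo_log_density_at(i_out, x, 0.4, log_gauss)
lc = loo_log_density_at(i_out, x, 0.4, log_cauchy)
print(f"log f^_i at the outlier: Gaussian {lg:.1f}, Cauchy {lc:.1f}")
```

With the Gaussian kernel the single outlier contributes a term of order $-450/n$ to $\hat I_2$, whereas with the heavy-tailed kernel its contribution stays moderate.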

For both histogram and kernel methods, the choice of smoothing parameter determines the performance of the entropy estimator. We suggest practical methods for smoothing parameter choice. For the histogram estimator of type (1.1) we propose that a penalty term be subtracted from $\hat I_1$, that the penalized version be maximized with respect to histogram binwidth, and that the resulting binwidth be used to compute $\hat I_1$. A version of this technique may also be developed for the kernel estimator of type (1.1). For the kernel estimator of type (1.2) we suggest that $\hat I_2$ be maximized with respect to bandwidth, without regard for any penalty. The performance of each approach is assessed by both theoretical and numerical analyses. Section 2 treats the case of histogram estimators, and kernel estimators are studied in Section 3.

Our theoretical account is based on arguments in Hall (1987, 1989), which analyse empirical properties of Kullback-Leibler loss. We develop substantial generalizations of that work which include, in the case of histogram estimators, a study of $\hat I_1$ for a wide class of densities $f$ having unbounded support.

Entropy estimators may be employed to effect a test for normality (see e.g. Vasicek (1986)) and to construct measures of "interestingness" in the context of projection pursuit (see e.g. Huber (1985), Friedman (1987), Jones and Sibson (1987), Hall (1989) and Morton (1989)). If one-dimensional projections are used then the first step of exploratory projection pursuit is often to choose that orientation $\theta_0$ which maximizes $I(\theta) = \int f_\theta\log f_\theta$, where $\theta$ is a unit p-vector, $f_\theta$ denotes the density of the projected scalar random variable $\theta\cdot X$, and $u\cdot v$ denotes the dot (i.e. scalar) product of vectors $u$ and $v$. The results in Sections 2 and 3 show that, under appropriate regularity conditions,

$$\hat I(\theta) = \tilde I(\theta) + o_p(n^{-1/2}) \tag{1.3}$$

for each $\theta$, where $\hat I(\theta)$ denotes the version of $\hat I$ computed from the univariate sample $\theta\cdot X_1, \ldots, \theta\cdot X_n$, and $\tilde I(\theta) = n^{-1}\sum_{i=1}^{n}\log f_\theta(\theta\cdot X_i)$. Result (1.3) may readily be proved to hold uniformly in unit p-vectors $\theta$, by using Bernstein's inequality and noting that the class of all unit p-vectors is compact. Arguing thus we may show that if $\hat\theta$, $\tilde\theta$ are chosen to maximize $\hat I(\theta)$, $\tilde I(\theta)$ respectively, then $\hat\theta - \tilde\theta = o_p(n^{-1/2})$. A limit theorem for $\tilde\theta$, of the form $n^{1/2}(\tilde\theta - \theta_0) \to Z$ in distribution (where $Z$ is a normal random variable with mean zero), is readily established. It then follows immediately that $n^{1/2}(\hat\theta - \theta_0) \to Z$.
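To make the projection-pursuit step concrete, the sketch below scans unit vectors $\theta = (\cos t, \sin t)$ in $p = 2$ dimensions and maximizes a plug-in estimate of $I(\theta)$. Several choices are illustrative assumptions rather than the paper's procedure: the data have independent unit-variance coordinates (one uniform, one normal), so every projection has variance one; $\hat I(\theta)$ is a p = 1 histogram estimator with fixed binwidth; and the angle grid is arbitrary. Because the normal distribution maximizes entropy at fixed variance, the least-normal direction, here the uniform axis, should maximize $I(\theta)$.

```python
import numpy as np

def hist_I(y, h):
    """Histogram estimate n^{-1} sum_i N_i log N_i - log(n h) for p = 1."""
    idx = np.floor(y / h).astype(np.int64)   # bins [k h, (k+1) h), i.e. v = h/2
    _, counts = np.unique(idx, return_counts=True)
    n = y.size
    return float(np.sum(counts * np.log(counts)) / n - np.log(n * h))

rng = np.random.default_rng(3)
n = 5_000
X = np.column_stack([
    rng.uniform(-np.sqrt(3.0), np.sqrt(3.0), n),   # variance 1, far from normal
    rng.standard_normal(n),                        # variance 1, normal
])
angles = np.linspace(0.0, np.pi, 180, endpoint=False)
scores = [hist_I(X @ np.array([np.cos(t), np.sin(t)]), h=0.25) for t in angles]
best = angles[int(np.argmax(scores))]
print(f"estimated best angle: {best:.3f} rad (the uniform axis is 0 or pi)")
```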

2. Histogram estimators

2.1 Summary

Subsection 2.2 proposes a histogram estimator, $\hat I$, of negative entropy, $I = \int f\log f$. Properties of the estimator in the p-dimensional case are outlined, and it is shown that the estimator can only be root-n consistent when p = 1 or 2. Furthermore, only in the case p = 1 can the binwidth be chosen so that $\hat I$ is identical to the unbiased estimator $\tilde I = n^{-1}\sum_{i=1}^{n}\log f(X_i)$ up to terms of smaller order than $n^{-1/2}$; when p = 2, any root-n consistent histogram estimator of $I$ has significant bias, of size $n^{-1/2}$. (For reasons of economy, detailed proofs of some of these results are omitted; they are very similar to counterparts in the case of kernel estimators, treated in Section 3.)

Thus, only for p = 1 dimension is the histogram estimator particularly attractive. Subsections 2.3 et seq. confine attention to that context. In particular, Subsection 2.3 suggests an empirical rule for binwidth selection when p = 1, and describes its performance in the case of densities with regularly varying tails. The rule is based on maximizing a penalized version of $\hat I$, and is related to techniques derived from Akaike's information criterion. Subsection 2.4 describes properties of $\hat I$, and of the empirical rule, when the underlying density has exponentially decreasing rather than regularly varying tails. A numerical study of the rule is presented in Subsection 2.5, and proofs are outlined in Subsection 2.6.

2.2 Outline of general properties

We first introduce notation, then we define a histogram estimator of the entropy of a p-variate density, and subsequently we describe its properties.

Let $\mathbb{Z}^p$ denote the set of all integer p-vectors $i = (i_1, \ldots, i_p)^T$, let $v = (v_1, \ldots, v_p)^T$ denote any fixed p-vector, and let

$$B_i = \{x = (x_1, \ldots, x_p)^T : |x_j - (v_j + i_jh)| < \tfrac12 h,\ 1 \le j \le p\}$$

represent the histogram bin centred at $v + ih$. Here $h$, a number which decreases to zero as sample size increases, represents the binwidth of the histogram. Write $N_i$ for the number of data values which fall into bin $B_i$. Then for $x \in B_i$, $N_i/(nh^p)$ is an estimator of $f(x)$, and

$$\hat I = n^{-1}\sum_i N_i\log\{N_i/(nh^p)\} = n^{-1}\sum_i N_i\log N_i - \log(nh^p)$$

is an estimator of $I = \int f\log f$. (The second form follows because $\sum_i N_i = n$.)
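The estimator needs only the bin counts, so no numerical integration is involved. Below is a minimal NumPy sketch for general $p$, assuming bins centred at $v + ih$ as defined above (with $v$ defaulting to the origin) and assigning boundary points to one side, which does not matter for continuous data; the function name is hypothetical.

```python
import numpy as np

def histogram_entropy(X, h, v=None):
    """I^ = n^{-1} sum_i N_i log N_i - log(n h^p) for an (n, p) sample X."""
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:
        X = X[:, None]                       # treat a 1-D array as p = 1
    n, p = X.shape
    v = np.zeros(p) if v is None else np.asarray(v, dtype=float)
    # bin index i with -h/2 <= x_j - (v_j + i_j h) < h/2, i.e. bin centred at v + i h
    idx = np.floor((X - v) / h + 0.5).astype(np.int64)
    _, counts = np.unique(idx, axis=0, return_counts=True)   # N_i of nonempty bins
    return float(np.sum(counts * np.log(counts)) / n - np.log(n * h**p))

rng = np.random.default_rng(4)
x = rng.standard_normal(20_000)
I_hat = histogram_entropy(x, h=0.2)
print(f"I^ = {I_hat:.4f}  (true I = {-0.5 * np.log(2 * np.pi * np.e):.4f})")
```

Empty bins contribute nothing, because $N\log N \to 0$ as $N \to 0$, so only the nonempty bins returned by `np.unique` are needed.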

Let $\|\cdot\|$ denote the usual Euclidean metric in p-dimensional Euclidean space. It may be shown that if $f(x)$ has tails which decrease like a constant multiple of $\|x\|^{-\alpha}$ as $\|x\| \to \infty$, for example if $f(x) = c_1(c_2 + \|x\|)^{-\alpha}$ for positive constants $c_1$ and $c_2$, then $\hat I$ admits an expansion of the form

$$\hat I = \tilde I + a_1(nh^p)^{-1+(p/\alpha)} - a_2h^2 + o_p\{(nh^p)^{-1+(p/\alpha)} + h^2\}, \tag{2.1}$$

where $a_1, a_2$ are positive constants and $\tilde I = n^{-1}\sum_i\log f(X_i)$. The term of size $(nh^p)^{-1+(p/\alpha)}$ in (2.1) comes from a "variance component" of $\hat I$, and the term of size $h^2$ comes from a "bias component". The constraint that $f$ be integrable dictates that $\alpha > p$.

In the multivariate case, our assumption that the density's tails decrease like $\|x\|^{-\alpha}$ serves only to illustrate, in a general way, the manner in which tail weight affects convergence rate. We do not claim that this "symmetric" distribution is particularly useful as a practical model for real data. However, when p > 1 it is not possible to give a simple description of the impact of tail weight under realistic models, because of the very large variety of ways in which tail weight may vary in different directions. Variants of result (2.1) are available for a wide range of multivariate densities that decrease like $\|x\|^{-\alpha}$ in one or more directions but decrease more rapidly in other directions. The nature of the result does not change, but the power $p/\alpha$ does alter. There also exists an analogue of (2.1) in the case of a multivariate normal density, where the quantity $(nh^p)^{-1+(p/\alpha)}$ is replaced by $(nh^p)^{-1}$ multiplied by a logarithmic term.

However, in the case of p = 1 dimension, our model is amply justified by practical considerations; see for example Hill (1975) and Zipf (1965). We employ the model for general p in order to show that, at least for simple distributions and for the histogram estimator, optimal convergence rates may only be obtained when p = 1. Then we focus on the latter case, where the model may be strongly motivated.

If $\sigma^2 = \int f(\log f)^2 - I^2 < \infty$ then $\tilde I$ is root-n consistent for $I$, and in fact $n^{1/2}(\tilde I - I)$ is asymptotically normal $N(0, \sigma^2)$. The extent to which $\hat I$ achieves the same rate of convergence, and the same central limit theorem, is determined largely by the size of the difference $d(h) = a_1(nh^p)^{-1+(p/\alpha)} - a_2h^2$ in (2.1). In principle, $h$ can be chosen so that $d(h) = 0$, i.e.

$$h = (a_1/a_2)^{\alpha/\{\alpha(p+2)-p^2\}}\,n^{-(\alpha-p)/\{\alpha(p+2)-p^2\}},$$

in which case the "remainder term" in (2.1) must be investigated. However, this is a somewhat impractical suggestion. First, it requires careful estimation of $\alpha$, $a_1$ and $a_2$, which is far from straightforward, particularly when p > 2. Second, it does not indicate how we might deal with circumstances where the model $f(x) \sim \mathrm{const.}\,\|x\|^{-\alpha}$ is violated.
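The closed form for the root of $d(h)$ is easy to check numerically. The sketch below plugs arbitrary illustrative values of $a_1$, $a_2$, $\alpha$, $p$ and $n$ (assumptions, not estimates from any data) into the formula and confirms that $d(h)$ vanishes there, relative to the size of either term.

```python
def d(h, n, p, alpha, a1, a2):
    """d(h) = a1 (n h^p)^{-1 + p/alpha} - a2 h^2, the leading terms of (2.1)."""
    return a1 * (n * h**p) ** (-1.0 + p / alpha) - a2 * h**2

def h_root(n, p, alpha, a1, a2):
    """Binwidth solving d(h) = 0, from the closed form in the text."""
    denom = alpha * (p + 2) - p**2            # positive whenever alpha > p
    return (a1 / a2) ** (alpha / denom) * n ** (-(alpha - p) / denom)

# (n, p, alpha, a1, a2): illustrative values only, each with alpha > p
cases = [(1_000, 1, 4.0, 0.7, 0.05), (10_000, 2, 5.0, 1.3, 0.2), (500, 3, 6.0, 0.4, 1.1)]
for n, p, alpha, a1, a2 in cases:
    h = h_root(n, p, alpha, a1, a2)
    rel = abs(d(h, n, p, alpha, a1, a2)) / (a2 * h**2)
    print(f"n={n}, p={p}, alpha={alpha}: h={h:.4f}, relative |d(h)|={rel:.1e}")
```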

The best we can realistically hope to achieve is that $h$ is chosen so that "variance" and "bias" contributions are of the same order of magnitude; that is, $(nh^p)^{-1+(p/\alpha)}/h^2$ is bounded away from zero and infinity as $h \to 0$ and $n \to \infty$. Subsection 2.3 will describe an empirical rule for achieving this end when p = 1. Achieving this balance requires taking $h$ to be of size $n^{-a}$, where

$$a = (\alpha - p)/\{\alpha(p+2) - p^2\}.$$

In this case, $d(h)$ is of size $n^{-2a}$. If $\hat I$ is to be asymptotically equivalent to $\tilde I$, up to terms of smaller order than $n^{-1/2}$, then we require $2a > 1/2$, or equivalently $\alpha(p-2) < p(p-4)$. If p = 1 then this condition reduces to $\alpha > 3$, but if $p \ge 2$ then the condition does not admit any solutions $\alpha$ which satisfy the essential constraint $\alpha > p$. Thus, when $p \ge 2$, the mean squared error of $\hat I$ is greater than that of $\tilde I$.
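The algebra behind the last two sentences can be checked by brute force. This sketch, using an arbitrary grid of $\alpha$ values chosen for illustration, confirms that $2a > 1/2$ agrees with the rearranged form $\alpha(p-2) < p(p-4)$, and that only $p = 1$ has admissible solutions with $\alpha > p$, namely those with $\alpha > 3$.

```python
import numpy as np

def balance_exponent(p, alpha):
    """a = (alpha - p) / {alpha (p + 2) - p^2}; balanced binwidth h ~ n^{-a}."""
    return (alpha - p) / (alpha * (p + 2) - p**2)

admissible = {}
for p in range(1, 7):
    alphas = np.linspace(p + 0.01, p + 40.0, 4000)            # essential constraint alpha > p
    ok = 2 * balance_exponent(p, alphas) > 0.5                # d(h) = o(n^{-1/2})
    assert np.array_equal(ok, alphas * (p - 2) < p * (p - 4))  # same condition, rearranged
    admissible[p] = alphas[ok]

print({p: (round(float(a.min()), 2) if a.size else None) for p, a in admissible.items()})
```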

We conclude that the histogram method for estimating entropy is most effective in the case of p = 1 dimension. In other cases binwidth choice is a critical problem, and for $p \ge 2$ it is virtually impossible to achieve root-n consistency. We shall show in Section 3 that these difficulties may be largely circumvented by employing kernel-type estimators.

2.3 The case p = 1: an empirical rule

In the case p = 1 it may be deduced from Theorem 4.1 of Hall (1990) that (2.1) holds with

$$a_1 = 2b^{1/\alpha}D(\alpha), \qquad a_2 = (1/24)\int (f')^2f^{-1}, \tag{2.2}$$

where $b$ is the constant in the regularity condition (2.3) below, and

$$D(\alpha) = \alpha^{-1}\int_0^\infty x^{-1/\alpha}\,E\bigl(\log[x^{-1}\{M(x)+1\}]\bigr)\,dx, \qquad \alpha > 1,$$

with $M(x)$ denoting a Poisson-distributed random variable with mean $x$. The following regularity condition is sufficient for (2.1) to hold uniformly in any collection $\mathcal{H}_n$ such that, for some $\delta, C > 0$, $n^{-1+\delta} \le h \le n^{-\delta}$ for each $h \in \mathcal{H}_n$ and $\#\mathcal{H}_n = O(n^C)$; see Section 4 of Hall (1990):

(2.3) $f > 0$ on $(-\infty, \infty)$, $f'$ exists and is continuous on $(-\infty, \infty)$, and for constants $b > 0$ and $\alpha > 1$, $f'(x) \sim -b\alpha x^{-\alpha-1}$ and $(d/dx)f(-x) \sim -b\alpha x^{-\alpha-1}$ as $x \to \infty$.

In order to determine an appropriate binwidth for the estimator $\hat I$ we suggest subtracting a penalty $Q$ from $\hat I$, such that for a large class of densities,

$$\bar I \equiv \hat I - Q = \tilde I - S(h) + o_p\{(nh)^{-1+(1/\alpha)} + h^2\}, \tag{2.4}$$

where

$$S(h) = a_3(nh)^{-1+(1/\alpha)} + a_2h^2 \tag{2.5}$$

and $a_2, a_3$ are positive constants. In view of this positivity, maximizing $\bar I$ is asymptotically equivalent to minimizing $S(h)$, and so to minimizing the distance between $\bar I$ and $\tilde I$. This operation produces a binwidth of size $n^{-(\alpha-1)/(3\alpha-1)}$, which we showed in Subsection 2.2 to be the optimal size.
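For completeness, the size of the minimizing binwidth follows from setting $S'(h) = 0$ in (2.5), treating $a_2$ and $a_3$ as fixed positive constants; $h_{\mathrm{opt}}$ is a label introduced here for the minimizer:

```latex
\begin{aligned}
S'(h) &= -\Bigl(1-\tfrac{1}{\alpha}\Bigr) a_3\, n^{-1+1/\alpha}\, h^{-2+1/\alpha} + 2a_2 h = 0 \\
\Longrightarrow\quad h^{3-1/\alpha} &= \frac{(\alpha-1)\,a_3}{2\alpha\, a_2}\; n^{-(\alpha-1)/\alpha} \\
\Longrightarrow\quad h_{\mathrm{opt}} &= \Bigl\{\frac{(\alpha-1)\,a_3}{2\alpha\, a_2}\Bigr\}^{\alpha/(3\alpha-1)} n^{-(\alpha-1)/(3\alpha-1)},
\end{aligned}
```

since $3 - 1/\alpha = (3\alpha-1)/\alpha$. This is the exponent $a$ of Subsection 2.2 with $p = 1$, and $S(h_{\mathrm{opt}})$ is then of size $n^{-2(\alpha-1)/(3\alpha-1)}$.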

We suggest taking

$$Q = n^{-1}\,(\text{number of nonempty bins}). \tag{2.6}$$

For this penalty function it is demonstrated in Hall ((1987), Section 4) that under (2.3), formula (2.4) holds with $a_2$ given by (2.2) and with

$$a_3 = 2b^{1/\alpha}\{\Gamma(1-\alpha^{-1}) - D(\alpha)\}.$$

It may be shown numerically that $a_3 > 0$ for $\alpha > \alpha_0 \approx 2.49$, which corresponds to a density with a finite absolute moment of order greater than 1.49. (For example, finite variance is sufficient.)
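A minimal sketch of the penalty method for p = 1: compute $\bar I(h) = \hat I(h) - Q(h)$ over a grid of binwidths, take the maximizer $\hat h$, and report the unpenalized $\hat I(\hat h)$, the choice argued for later in this subsection. The grid, the bin origin (bins centred at integer multiples of $h$) and the function names are illustrative assumptions.

```python
import numpy as np

def bin_counts(x, h):
    """Counts N_i of the nonempty bins centred at i*h (i integer), p = 1."""
    idx = np.floor(x / h + 0.5).astype(np.int64)
    return np.unique(idx, return_counts=True)[1]

def I_hat(x, h):
    """Unpenalized histogram estimator n^{-1} sum N_i log N_i - log(n h)."""
    counts = bin_counts(x, h)
    return float(np.sum(counts * np.log(counts)) / x.size - np.log(x.size * h))

def I_bar(x, h):
    """Penalized criterion I^ - Q, with Q = (number of nonempty bins) / n as in (2.6)."""
    return I_hat(x, h) - bin_counts(x, h).size / x.size

def penalty_rule(x, grid):
    """Maximize I-bar over the grid; report the unpenalized I^ at the maximizer."""
    scores = np.array([I_bar(x, h) for h in grid])
    h_sel = float(grid[int(np.argmax(scores))])
    return h_sel, I_hat(x, h_sel)

rng = np.random.default_rng(5)
x = rng.standard_normal(5_000)
grid = np.arange(0.05, 1.501, 0.05)
h_sel, I_est = penalty_rule(x, grid)
print(f"selected h = {h_sel:.2f}, I^(h) = {I_est:.4f}, true I = {-0.5 * np.log(2 * np.pi * np.e):.4f}")
```

Very small binwidths are automatically discouraged: when every bin is a singleton, $\hat I = -\log(nh)$ is large but $Q = 1$, and $\bar I$ is heavily penalized.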

A question arises as to whether the penalized version of $\hat I$, i.e. $\bar I$, should be taken as the estimator of $I$, or whether $\hat I$ itself should be used. From one point of view the question is academic, since if the binwidth is chosen to maximize $\bar I$, and (2.3) holds, then $\hat I$ and $\bar I$ are both first-order equivalent to $\tilde I$: for $J = \hat I$ or $\bar I$,

$$J = \tilde I + O_p\bigl(n^{-2(\alpha-1)/(3\alpha-1)}\bigr) = \tilde I + o_p(n^{-1/2}),$$

provided only that $\alpha > 3$, since $2(\alpha-1)/(3\alpha-1) > 1/2$ if and only if $\alpha > 3$. These formulae follow from (2.1), (2.4) and (2.5) (in the context of condition (2.3), $\alpha > 3$ is equivalent to finite variance). However, it is of practical as well as theoretical interest to minimize the second-order term, of size $n^{-2(\alpha-1)/(3\alpha-1)}$. Indeed, the simulation study outlined later in this section will show that for samples of moderate size, second-order effects can be significant. We claim that if the density $f$ has sufficiently light tails then $\hat I$ is preferable to $\bar I$.


To appreciate why, observe that with $\delta_n = n^{-2(\alpha-1)/(3\alpha-1)}$, and binwidth chosen as suggested three paragraphs above (with $Q$ given by (2.6)), the standard deviations of $\hat I$ and $\bar I$ both equal $n^{-1/2}\sigma + o(\delta_n)$, and their biases equal $v + o(\delta_n)$ and $v + w + o(\delta_n)$ respectively, where

$$v = a_1(nh)^{-1+(1/\alpha)} - a_2h^2, \qquad w = -2b^{1/\alpha}\,\Gamma(1-\alpha^{-1})\,(nh)^{-1+(1/\alpha)}.$$

If we regard first-order terms as being of size $n^{-1/2}$ and second-order terms as being of size $n^{-2(\alpha-1)/(3\alpha-1)}$, then we see that standard deviations are identical to first and second orders, but that while biases agree to first order they differ to second order. The second-order bias term is less for $\hat I$ than it is for $\bar I$ if and only if $|v| < |v + w|$, or equivalently if and only if $-(w + 2v) > 0$, i.e.

$$b^{1/\alpha}\{\Gamma(1-\alpha^{-1}) - 2D(\alpha)\}(nh)^{-1+(1/\alpha)} + a_2h^2 > 0. \tag{2.7}$$

It may be shown numerically that $\Gamma(1-\alpha^{-1}) - 2D(\alpha) > 0$ for all $\alpha > 7.55$, which corresponds to at least 6.55 finite absolute moments. Therefore, since $a_2 > 0$, we can expect (2.7) to hold for all sufficiently light-tailed distributions.
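The equivalences used in the preceding paragraph are elementary but easy to lose in the notation. Using $w < 0$ (because $\Gamma(1-\alpha^{-1}) > 0$ for $\alpha > 1$), substituting $a_1 = 2b^{1/\alpha}D(\alpha)$ from (2.2), and writing $t = (nh)^{-1+(1/\alpha)}$ as shorthand:

```latex
\begin{aligned}
|v| < |v+w| &\iff (v+w)^2 - v^2 = w\,(w+2v) > 0 \iff w + 2v < 0 \quad (\text{since } w<0),\\
-(w+2v) &= 2b^{1/\alpha}\Gamma(1-\alpha^{-1})\,t - 2\cdot 2b^{1/\alpha}D(\alpha)\,t + 2a_2h^2 \\
&= 2\bigl[\,b^{1/\alpha}\{\Gamma(1-\alpha^{-1}) - 2D(\alpha)\}\,t + a_2h^2\,\bigr],
\end{aligned}
```

so $-(w+2v) > 0$ is exactly condition (2.7) after dividing by 2.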

The "penalty method" for selecting $h$ is appropriate in a wide variety of different cases, including those where the density $f$ has exponentially decreasing, rather than regularly varying, tails. Rigorous analysis of that case requires a little additional theory, which is developed in the next subsection. Subsection 2.5 presents numerical examples which illustrate the performance of the penalty method.

2.4 Theory for distributions whose densities are not regularly varying

The empirical rule developed in Subsection 2.3 is for the case where $f(x) \sim b|x|^{-\alpha}$ as $|x| \to \infty$, for constants $b > 0$ and $\alpha > 1$. A slightly more general case, where $f(x) \sim b_1x^{-\alpha_1}$ and $f(-x) \sim b_2x^{-\alpha_2}$ as $x \to \infty$, may be treated by applying results in Hall ((1987), Section 4). Similar arguments may be used to develop formulae for the case where $f(x) \sim x^{-\alpha_1}L_1(x)$ and $f(-x) \sim x^{-\alpha_2}L_2(x)$ as $x \to \infty$, where $L_1$ and $L_2$ are slowly varying functions. The work in the present subsection is aimed at developing theory applicable to the case of densities whose tails decrease exponentially quickly, at a faster rate than any order of regular variation, or which decrease in a manner which is neither regularly varying nor exponentially decreasing. We deal only with the case of p = 1 dimension.

Our first result concerns the case of distributions whose densities decrease like $\mathrm{const.}\exp(-\mathrm{const.}\,|x|^{\alpha})$, for some $\alpha > 0$. Our regularity condition, replacing (2.3), is

(2.8) $f > 0$ on $(-\infty, \infty)$, $f'$ exists and is continuous on $(-\infty, \infty)$, and for constants $b_{11}, b_{12}, b_{21}, b_{22}, \alpha_1, \alpha_2 > 0$ we have $f'(x) \sim (d/dx)\{b_{11}\exp(-b_{12}x^{\alpha_1})\}$ and $(d/dx)f(-x) \sim (d/dx)\{b_{21}\exp(-b_{22}x^{\alpha_2})\}$ as $x \to \infty$.

Define $a_2$ as at (2.2). Let $\mathcal{H}_n$ denote a collection of real numbers $h$ satisfying $n^{-1+\delta} \le h \le n^{-\delta}$ for each $h \in \mathcal{H}_n$ and $\#\mathcal{H}_n = O(n^C)$, where $\delta, C > 0$ are fixed constants.

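THEOREM 2.1. Assume condition (2.8). Then

$$\hat I = \tilde I + \frac12\sum_{i=1}^{2} b_{i2}^{-1/\alpha_i}(nh)^{-1}(\log nh)^{1/\alpha_i} - a_2h^2 + o_p\{(nh)^{-1}(\log nh)^{1/\alpha} + h^2\} \tag{2.9}$$

uniformly in $h \in \mathcal{H}_n$, as $n \to \infty$, where $\alpha = \min(\alpha_1, \alpha_2)$.

Proofs of Theorems 2.1 and 2.2 are outlined in Subsection 2.6.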

The penalty method of binwidth choice, introduced in Subsection 2.3 and discussed there in the context of densities with regularly varying tails, is also appropriate for the present case of exponentially decreasing densities. To appreciate the theory appropriate for this case we must first develop analogues of formulae (2.4) and (2.5); these are

$$\bar I \equiv \hat I - Q = \tilde I - S(h) + o_p\{(nh)^{-1}(\log nh)^{1/\alpha} + h^2\}, \tag{2.10}$$

where

$$S(h) = \frac12\sum_{i=1}^{2} b_{i2}^{-1/\alpha_i}(nh)^{-1}(\log nh)^{1/\alpha_i} + a_2h^2. \tag{2.11}$$

(The penalty function $Q$ is defined by (2.6), the underlying distribution is assumed to satisfy (2.8), and $\alpha = \min(\alpha_1, \alpha_2)$.) Formulae (2.10) and (2.11) follow from (2.9) and the result

$$Q = \sum_{i=1}^{2} b_{i2}^{-1/\alpha_i}(nh)^{-1}(\log nh)^{1/\alpha_i} + o_p\{(nh)^{-1}(\log nh)^{1/\alpha}\},$$

which may be proved by an argument similar to that employed to derive Theorem 2.1.

Application of the penalty method involves choosing $h = \hat h$ to maximize $\bar I$, and then taking $\hat I(\hat h)$ as the estimator of $I$. Of course, $\bar I(\hat h)$ is an alternative choice, but we claim that the asymptotic bias of the latter is larger in absolute value than in the case of $\hat I(\hat h)$. The standard deviations of both estimators are identical, up to and including terms of the same order as the biases. To appreciate these points, put $\alpha = \min(\alpha_1, \alpha_2)$; let $b = \tfrac12(b_{12}^{-1/\alpha_1} + b_{22}^{-1/\alpha_2})$ if $\alpha_1 = \alpha_2$, and $b = \tfrac12 b_{i2}^{-1/\alpha_i}$ if $\alpha_1 \ne \alpha_2$ and $\alpha = \alpha_i$. Observe that by (2.9) and (2.11),

$$S(h) \sim b(nh)^{-1}(\log nh)^{1/\alpha} + a_2h^2,$$

$$\hat I - \tilde I = b(nh)^{-1}(\log nh)^{1/\alpha} - a_2h^2 + o_p\{(nh)^{-1}(\log nh)^{1/\alpha} + h^2\}.$$

Therefore, the binwidth which maximizes $\bar I$, or (asymptotically equivalently) which minimizes $S(h)$, satisfies

$$\hat h \sim \{(b/2a_2)(2/3)^{1/\alpha}\,n^{-1}(\log n)^{1/\alpha}\}^{1/3}.$$

(The factor $(2/3)^{1/\alpha}$ arises because $\log(n\hat h) \sim \tfrac23\log n$ when $\hat h$ is of size $n^{-1/3}$ up to logarithmic factors.) Hence,

$$\hat I(\hat h) - \tilde I = (2a_2)^{1/3}b^{2/3}(3/2)^{1/(3\alpha)}\{1 - \tfrac12(2/3)^{1/\alpha}\}\{n^{-1}(\log n)^{1/\alpha}\}^{2/3} + o_p[\{n^{-1}(\log n)^{1/\alpha}\}^{2/3}],$$

$$\bar I(\hat h) - \tilde I = -S(\hat h) + o_p[\{n^{-1}(\log n)^{1/\alpha}\}^{2/3}].$$

Noting these two formulae, and the fact that $\tilde I$ is unbiased for $I$, we deduce that $\hat I(\hat h)$ has asymptotically positive bias, whereas $\bar I(\hat h)$ has asymptotically negative bias; and that the absolute value of the asymptotic bias is greater in the case of $\bar I(\hat h)$ than it is for $\hat I(\hat h)$.

Our last result in the present subsection treats a wide variety of different contexts. It is intended to show that there exists a very large range of situations where, for suitable choice of binwidth $h$, the result $\hat I = \tilde I + o_p(n^{-1/2})$ obtains. This formula implies that $\hat I$ is root-n consistent for $I$, and also that $n^{1/2}(\hat I - I)$ is asymptotically $N(0, \sigma^2)$, where $\sigma^2 = \int f(\log f)^2 - I^2$.

Since our assumptions about $f$ do not explicitly describe tail behaviour, it is not possible, in the context of the result stated below, to be as explicit about the size of second-order terms as we were in the case of distributions with regularly varying or exponentially decreasing tails.

We assume the following regularity condition on $f$:

(2.12) $f > 0$ on $(-\infty, \infty)$; $f''$ exists on $(-\infty, \infty)$ and is monotone on $(-\infty, a)$ and on $(b, \infty)$ for some $-\infty < a < b < \infty$; $|f''| + |f'|$ is bounded; $\int|f''| + \int(f')^2f^{-1} < \infty$; and for some $\epsilon > 0$,

$$\sup_{|y| \le \epsilon}\int |f''(x)|\{\log f(x+y)\}^2\,dx < \infty.$$

Let $x_{1n}$, $x_{2n}$ denote respectively the largest negative and largest positive solutions of the equation $f(x) = (nh)^{-1}$.

THEOREM 2.2. Assume condition (2.12) on $f$, and that the binwidth $h$ is chosen so that

$$(nh)^{-1}(x_{2n} - x_{1n}) + \int_{(-\infty,\,x_{1n})\cup(x_{2n},\,\infty)} f|\log f| + h^2 = o(n^{-1/2}). \tag{2.13}$$

Then $E|\hat I - \tilde I| = o(n^{-1/2})$ as $n \to \infty$.

Two examples serve to illustrate that, for a very wide class of distributions, $h$ may be chosen to ensure that (2.13) holds. For the first example, assume that $f(x) \sim b|x|^{-\alpha}$ as $|x| \to \infty$, where $b > 0$ and $\alpha > 3$. Then $x_{2n} - x_{1n} \sim b'(nh)^{1/\alpha}$, where $b' > 0$, and

$$\int_{(-\infty,\,x_{1n})\cup(x_{2n},\,\infty)} f|\log f| = O\{(nh)^{-1+(1/\alpha)}\log nh\}.$$

It follows that if $h \sim \mathrm{const.}\,n^{-(1/4)-\epsilon}$ for some $0 < \epsilon < (\alpha-3)/\{4(\alpha-1)\}$ then (2.13) holds.
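The range for $\epsilon$ in the first example comes from comparing exponents. With $h \sim \mathrm{const.}\,n^{-(1/4)-\epsilon}$ we have $nh \asymp n^{(3/4)-\epsilon}$, and we need both $h^2$ and the tail bound $(nh)^{-1+(1/\alpha)}\log nh$ to be $o(n^{-1/2})$:

```latex
\begin{aligned}
h^2 &\asymp n^{-1/2-2\epsilon} = o(n^{-1/2}) \quad\text{for every } \epsilon > 0,\\
(nh)^{-1+(1/\alpha)}\log nh = o(n^{-1/2})
  &\iff \Bigl(\tfrac34-\epsilon\Bigr)\frac{\alpha-1}{\alpha} > \frac12
  \iff \epsilon\,(\alpha-1) < \frac{3(\alpha-1)-2\alpha}{4} = \frac{\alpha-3}{4}\\
  &\iff \epsilon < \frac{\alpha-3}{4(\alpha-1)}.
\end{aligned}
```

The logarithmic factor does not matter because the inequality between powers of $n$ is strict, and the interval for $\epsilon$ is nonempty exactly when $\alpha > 3$.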

For the second example, assume that $f(x) \sim b_1\exp(-b_2|x|^{\alpha})$ as $|x| \to \infty$, where $b_1, b_2, \alpha > 0$. Then $x_{2n} - x_{1n} \sim b_3(\log nh)^{1/\alpha}$, where $b_3 > 0$, and

$$\int_{(-\infty,\,x_{1n})\cup(x_{2n},\,\infty)} f|\log f| = O\{(nh)^{-1}(\log nh)^{1/\alpha}\}.$$

It follows that if $h \sim \mathrm{const.}\,n^{-(1/4)-\epsilon}$ for some $0 < \epsilon < 1/4$ then (2.13) holds.

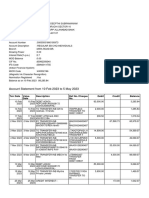

2.5 Simulation study

In this subsection we discuss the results of a simulation study for five univariate distributions with binwidth $h$ chosen by the empirical rule outlined in Subsection 2.3. The five distributions chosen are a standard normal (an example of a distribution with exponentially decreasing tails), and four Student's t distributions (examples of distributions with regularly varying tails). The four t distributions have degrees of freedom $\nu$ equal to 3, 4, 6 and 10. In the notation of the previous subsections, the rate of tail decrease $\alpha$ for a regularly varying distribution is equal to $\nu + 1$. Thus the t distribution with three degrees of freedom, or $\alpha = 4$, has the smallest integer number of degrees of freedom for which $\hat I$ is asymptotically equivalent to $\tilde I$ up to terms of smaller order than $n^{-1/2}$ (which requires $\alpha > 3$), as shown in Subsection 2.2.

The true value of negative entropy $I$ is known for the standard normal distribution and is equal to $-\log(\sqrt{2\pi}) - 1/2 = -1.42$. Of course, this distribution has maximum entropy among all continuous distributions with mean zero and standard deviation one. While $I$ is not known analytically for a t distribution, it may be estimated via numerical integration.
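The reference values used in Table 1 can be reproduced as follows. The Student t entropy does in fact have a standard closed form in terms of the digamma function $\psi$ and the beta function $B$, namely $-I = \tfrac{\nu+1}{2}\{\psi(\tfrac{\nu+1}{2}) - \psi(\tfrac{\nu}{2})\} + \log\{\sqrt{\nu}\,B(\tfrac{\nu}{2}, \tfrac12)\}$. The sketch below, assuming SciPy is available, evaluates that formula and cross-checks it against direct numerical integration of $\int f\log f$, the route described in the text.

```python
import numpy as np
from scipy import integrate, special, stats

def neg_entropy_t_closed(nu):
    """I = int f log f for Student's t with nu degrees of freedom (closed form)."""
    entropy = (0.5 * (nu + 1) * (special.digamma(0.5 * (nu + 1)) - special.digamma(0.5 * nu))
               + 0.5 * np.log(nu) + special.betaln(0.5 * nu, 0.5))
    return float(-entropy)

def neg_entropy_numeric(dist):
    """I = int f log f by adaptive quadrature over the whole real line."""
    def integrand(x):
        p = dist.pdf(x)
        return p * dist.logpdf(x) if p > 0.0 else 0.0   # f log f -> 0 where f underflows
    value, _ = integrate.quad(integrand, -np.inf, np.inf, limit=200)
    return float(value)

I_normal = neg_entropy_numeric(stats.norm())
I_t = {nu: (neg_entropy_t_closed(nu), neg_entropy_numeric(stats.t(nu))) for nu in (10, 6, 4, 3)}
print(f"N(0,1): {I_normal:.4f}")
for nu, (closed, numeric) in I_t.items():
    print(f"t (dof = {nu}): closed form {closed:.4f}, quadrature {numeric:.4f}")
```

Both routes agree with the tabulated values $-1.52$, $-1.59$, $-1.68$ and $-1.77$ to the two decimals reported.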

For each of the five distributions we investigated the behaviour of $\hat I$ and $\bar I$ for four different sample sizes, n = 50, 100, 200 and 500. For each sample size we conducted 100 simulations and approximated expected values by taking the average over the simulations. The quantities reported are $E(\hat I)$, $\{E(\hat I - I)^2\}^{1/2}$ (i.e. the root of the mean squared error), and $E(\hat I) - I$ (bias). Similar quantities are calculated for $\bar I$. The results are shown in Table 1.

For each particular sample, we calculate $\tilde I = \hat I - Q$ over a grid of binwidth values $h = 0.1, 0.2, \ldots, H$. Here $H$ is the smallest multiple of 0.1 for which a single bin contains the entire sample. That is, when $h = H$, the histogram has only one bin. For each particular $h$, the bins are centred at zero. The first bin covers $(-h/2, h/2)$, the second $(-3h/2, -h/2]$, the third $[h/2, 3h/2)$, and so on. Thus for each simulation we have $\tilde I(h)$ for a grid of $h$ values spaced by 0.1.

Table 1. Performance of histogram estimators, $\hat I$ and $\tilde I$.

n     E(Î)    [E(Î-I)²]^½   E(Î)-I    E(Ĩ)    [E(Ĩ-I)²]^½   E(Ĩ)-I    h* quantiles

N(0,1): analytic value = -1.42
50    -1.36   0.131         0.057     -1.50   0.135         -0.079    (0.5, 0.8, 1.2, 1.7)
100   -1.38   0.089         0.035     -1.47   0.093         -0.049    (0.6, 0.7, 0.9, 1.0)
200   -1.41   0.050         0.005     -1.46   0.064         -0.042    (0.6, 0.6, 0.7, 0.9)
500   -1.42   0.030         0.002     -1.44   0.037         -0.022    (0.5, 0.5, 0.6, 0.6)

t (dof = 10): integrated value = -1.52
50    -1.42   0.161         0.099     -1.58   0.136         -0.058    (0.4, 0.8, 1.3, 2.4)
100   -1.47   0.100         0.052     -1.57   0.095         -0.045    (0.5, 0.7, 0.9, 1.1)
200   -1.51   0.062         0.012     -1.56   0.075         -0.043    (0.6, 0.7, 0.8, 0.9)
500   -1.51   0.039         0.008     -1.54   0.044         -0.022    (0.5, 0.5, 0.6, 0.7)

t (dof = 6): integrated value = -1.59
50    -1.47   0.185         0.125     -1.63   0.129         -0.042    (0.4, 0.7, 1.4, 2.5)
100   -1.52   0.123         0.074     -1.63   0.104         -0.036    (0.4, 0.6, 0.9, 1.2)
200   -1.56   0.078         0.032     -1.62   0.078         -0.033    (0.5, 0.6, 0.8, 0.9)
500   -1.58   0.042         0.006     -1.62   0.051         -0.028    (0.5, 0.6, 0.6, 0.7)

t (dof = 4): integrated value = -1.68
50    -1.50   0.233         0.174     -1.70   0.156         -0.022    (0.4, 0.5, 1.2, 2.1)
100   -1.58   0.145         0.100     -1.71   0.105         -0.028    (0.4, 0.6, 0.8, 1.4)
200   -1.63   0.081         0.048     -1.71   0.080         -0.036    (0.4, 0.5, 0.7, 0.9)
500   -1.66   0.049         0.020     -1.70   0.051         -0.022    (0.5, 0.5, 0.6, 0.7)

t (dof = 3): integrated value = -1.77
50    -1.58   0.247         0.189     -1.78   0.159         -0.019    (0.4, 0.6, 1.1, 2.3)
100   -1.65   0.159         0.114     -1.79   0.112         -0.026    (0.4, 0.5, 0.9, 1.2)
200   -1.70   0.101         0.068     -1.79   0.079         -0.026    (0.4, 0.5, 0.7, 0.9)
500   -1.74   0.058         0.030     -1.79   0.055         -0.022    (0.4, 0.5, 0.6, 0.7)

h* quantiles: lower quartile, median, upper quartile and 90th percentile of h* over the simulations.
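The binning rule described above can be sketched in Python as follows. The code assumes the unpenalised estimator has the form $\hat I = n^{-1}\sum_i N_i\log\{N_i/(nh)\}$, with $N_i$ the count in bin $B_i$. This is our assumption: it is the auxiliary quantity $\bar I$ of the proof of Theorem 2.2 with $q_i$ replaced by $N_i/n$. The penalty $Q$ is not reproduced, the helper names are hypothetical, and the grid stops at 1.0 rather than at $H$.

```python
import math
import random
from collections import Counter

def bin_index(x, h):
    """Index i of the bin centred at i*h that contains x.

    Bins are (-h/2, h/2), [h/2, 3h/2), (-3h/2, -h/2], ...: a point on a
    boundary goes to the bin further from zero.
    """
    k = math.floor(abs(x) / h + 0.5)
    return k if x >= 0 else -k

def hist_neg_entropy(xs, h):
    """Assumed form of hat I: n^{-1} sum_i N_i log{N_i / (n h)} over non-empty bins."""
    n = len(xs)
    counts = Counter(bin_index(x, h) for x in xs)
    return sum(c * math.log(c / (n * h)) for c in counts.values()) / n

rng = random.Random(1)
sample = [rng.gauss(0.0, 1.0) for _ in range(500)]
grid = [round(0.1 * j, 1) for j in range(1, 11)]
values = {h: hist_neg_entropy(sample, h) for h in grid}
for h in grid:
    print(h, round(values[h], 3))
```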

There are a number of options for choosing the optimal binwidth $h^*$. We could take as $h^*$ that $h$ which maximizes $\tilde I(h)$, as our empirical rule suggests. However, in practice $\tilde I(h)$ is bumpy due to sampling fluctuations and to the discreteness of histogram estimators. Thus, we smooth $\tilde I(h)$ using running medians of seven, producing $\tilde I_s(h)$ say, and choose the largest $h$ which maximizes $\tilde I_s(h)$ as our $h^*$. The lower quartile, median, upper quartile and 90th percentile of $h^*$ over the simulations are given in Table 1. Since our previous theory was based on the unsmoothed $\hat I(h)$ and $\tilde I(h)$, we use $\hat I(h^*)$ and $\tilde I(h^*)$ as our estimates. In our experience, this approach works well. We briefly investigated other strategies, such as basing the smoothing window width on sample size, using the smoothed versions $\hat I_s(h^*)$ and $\tilde I_s(h^*)$ as estimates, or using the results of a search on a coarse grid to target a search area on a finer grid. None of these produced significant changes in the results.
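A minimal sketch of the selection rule for $h^*$: smooth the criterion values on the $h$ grid by running medians of seven, then take the largest $h$ attaining the maximum. The paper does not say how the ends of the grid are treated, so truncating the window there is our assumption. The criterion values in the example are hypothetical.

```python
from statistics import median

def running_median(values, window=7):
    """Centred running median; the window is truncated at the ends (our assumption)."""
    half = window // 2
    return [median(values[max(0, i - half):i + half + 1]) for i in range(len(values))]

def choose_h_star(hs, criterion, window=7):
    """Largest h maximising the running-median-smoothed criterion."""
    smoothed = running_median(criterion, window)
    best = max(smoothed)
    return max(h for h, s in zip(hs, smoothed) if s == best)

hs = [round(0.1 * j, 1) for j in range(1, 13)]
crit = [0, 9, 1, 2, 3, 4, 4, 4, 3, 2, 1, 0]   # hypothetical criterion values
print(choose_h_star(hs, crit))   # 0.5
```

Here the smoothing removes the isolated spike at $h = 0.2$, so the selected value is 0.5 rather than the raw maximiser 0.2.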

Several comments may be made about the results in Table 1. Further simulation investigation is warranted, given that only 100 simulations were conducted for each situation. However, exploration revealed that two-standard-deviation confidence intervals for these simulation-based approximations of $E(\hat I)$ and $E(\tilde I)$ indicate deviations of at most three units in the last significant figure, for all sample sizes and distributions considered. As the number of degrees of freedom increases from three to effectively infinity for the standard normal, the tail rate of decrease $\alpha$ increases. Correspondingly, the mean squared error of $\hat I$ decreases for any specific sample size $n$, as predicted by (2.1). In addition, throughout the table, the root mean squared errors of $\hat I$ at two consecutive sample sizes $n_1 < n_2$ are in a ratio close to $n_1^{-1/2} : n_2^{-1/2}$. Equivalently, the error at $n_2$ is about $(n_1/n_2)^{1/2}$ times the error at $n_1$. For the chosen sample sizes $n = 50$, 100, 200 and 500, these theoretical factors are between 0.63 and 0.71. The reported error ratios lie between 0.53 and 0.70; for example, $0.123 : 0.185 = 0.67$ for the $t$ distribution with 6 degrees of freedom at $n = 100$ and $n = 50$.
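The ratio comparison can be checked directly from the root mean squared errors of $\hat I$ in Table 1. The sketch below uses only the published numbers. It computes the theoretical factors $(n_1/n_2)^{1/2}$ for consecutive sample sizes and the corresponding observed ratios. The ratios for $\tilde I$ spread more widely; for example, $0.075 : 0.095 \approx 0.79$ for the $t$ distribution with 10 degrees of freedom.

```python
import math

sizes = [50, 100, 200, 500]
# Root mean squared errors of hat I, copied from Table 1
rmse_hat = {
    "N(0,1)": [0.131, 0.089, 0.050, 0.030],
    "t, 10 dof": [0.161, 0.100, 0.062, 0.039],
    "t, 6 dof": [0.185, 0.123, 0.078, 0.042],
    "t, 4 dof": [0.233, 0.145, 0.081, 0.049],
    "t, 3 dof": [0.247, 0.159, 0.101, 0.058],
}

# Root-n theory: RMSE(n2) / RMSE(n1) is close to (n1 / n2)^{1/2}
predicted = [math.sqrt(n1 / n2) for n1, n2 in zip(sizes, sizes[1:])]
observed = [r2 / r1 for rs in rmse_hat.values() for r1, r2 in zip(rs, rs[1:])]

print([round(r, 2) for r in predicted])                 # theoretical factors
print(round(min(observed), 2), round(max(observed), 2))  # observed range
```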

For most of the distributions, at least at the larger sample sizes, $\tilde I$ tends to be more biased than $\hat I$. This result is shown in Subsection 2.3 to be true asymptotically: for the standard normal, the bias of $\tilde I$ is negative and larger in absolute value than the positive bias of $\hat I$. This relationship was shown in Subsection 2.4 to be true asymptotically for distributions with exponentially decreasing tails.

The variation in the value of $h^*$ decreases as $n$ increases, due to reduced sampling fluctuation. In addition, it seems to decrease slightly as the tail rate increases.

2.6 Proofs

Outline of proof of Theorem 2.1

For the sake of brevity, we indicate only those places where the proof differs significantly from that of Theorem 4.1 of Hall (1990). The differences occur in the derivation of approximate formulae for "$E(T_k^2)$" ($k = 1, 2$). The new approximate formulae, in the case of large $s$ and small $r$, may be described as follows. Put $g_k(x) = b_{k1}\exp(-b_{k2}x^{\alpha_k})$, and let $M(x)$ denote a Poisson-distributed random variable having mean $x$. Then $E(T_k^2)$ is approximated by

$$
\begin{aligned}
t_k &\equiv n^{-1}\int_{h^{-1}g_k^{-1}(1)}^{h^{-1}g_k^{-1}\{(nh)^{-1}\}} E\Big(M\{nh\,g_k(hx)\}\log\big[M\{nh\,g_k(hx)\}/nh\,g_k(hx)\big]\Big)\,dx\\
&= -(nh)^{-1}\int_1^{nh} E[M(y)\log\{M(y)/y\}]\,d_y\,g_k^{-1}\{(nh)^{-1}y\}\\
&= -(nh)^{-1}\int_1^{nh} y\,E\big(\log[\{M(y)+1\}/y]\big)\,d_y\{b_{k2}^{-1}\log(b_{k1}nh/y)\}^{1/\alpha_k}.
\end{aligned}
$$

Since $E(\log[\{M(y)+1\}/y]) \sim (2y)^{-1}$ as $y \to \infty$, we have

$$
t_k \sim -(2nh)^{-1}\int_1^{nh} d_y\{b_{k2}^{-1}\log(b_{k1}nh/y)\}^{1/\alpha_k} \sim b_{k2}^{-1/\alpha_k}(2nh)^{-1}(\log nh)^{1/\alpha_k}.
$$

As in the proof of Theorem 4.1 of Hall (1990), the "variance component" of $\hat I$ is asymptotic to $t_1 + t_2$; this produces the series on the right-hand side of (2.9). The next term, the quantity $-a_2h^2$, represents the "bias component" and is obtained in a manner identical to that in Hall (1990).
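Two facts drive the approximation above. The first is the Poisson identity $E[M(y)\log\{M(y)/y\}] = yE(\log[\{M(y)+1\}/y])$. The second is the asymptotic $E(\log[\{M(y)+1\}/y]) \sim (2y)^{-1}$. Below is a quick numerical check in Python that sums the Poisson probabilities directly. The truncation point of the sum is our choice.

```python
import math

def poisson_mean(y, func, width=20.0):
    """E{func(M)} for M ~ Poisson(y), summing the pmf over 0..y + width*sqrt(y) + 50."""
    upper = int(y + width * math.sqrt(y) + 50)
    total = 0.0
    for k in range(upper + 1):
        log_pmf = -y + k * math.log(y) - math.lgamma(k + 1)
        total += math.exp(log_pmf) * func(k)
    return total

def poisson_check(y):
    # E[M log(M / y)], with the convention 0 log 0 = 0
    lhs = poisson_mean(y, lambda m: m * math.log(m / y) if m > 0 else 0.0)
    # E(log[{M + 1} / y])
    inner = poisson_mean(y, lambda m: math.log((m + 1) / y))
    return lhs, y * inner, 2.0 * y * inner

for y in (5.0, 50.0, 1000.0):
    lhs, rhs, scaled = poisson_check(y)
    print(y, lhs - rhs, scaled)   # difference near 0; scaled value tends to 1
```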

Outline of proof of Theorem 2.2

We begin with a little notation. Let

$$q_i = n^{-1}E(N_i) = \int_{B_i} f(x)\,dx,$$

representing the probability that a randomly chosen data value falls in bin $B_i$. Put $\bar I = n^{-1}\sum_i N_i\log(q_i/h)$. Theorem 2.2 is a corollary of the following result.

LEMMA 2.1. If $h = h(n) \to 0$ and $nh \to \infty$ as $n \to \infty$, and if (2.12) holds, then

$$|E(\bar I) - I| = O(h^2), \tag{2.14}$$

$$\operatorname{var}\Big\{\bar I - n^{-1}\sum_{j=1}^n \log f(X_j)\Big\} = O(n^{-1}h^2), \tag{2.15}$$

$$E|\hat I - \bar I| = O\Big\{(nh)^{-1}(x_{2n} - x_{1n}) + \int_{(-\infty,\,x_{1n})\cup(x_{2n},\,\infty)}|f\log f| + h^2\Big\} + o(n^{-1/2}) \tag{2.16}$$

as $n \to \infty$.

For the remainder, we confine attention to proving (2.14)-(2.16). We may assume without loss of generality that the constant $v$, in the definition of the centre $v + ih$ of the histogram bin $B_i$, equals zero.

(i) PROOF OF (2.14).

Observe that $E(\bar I) = \int f\log g$, where $g(x) = q_i/h$ for $x \in B_i$. For small $h$, and all $x$,

$$g(x) = \int_{-1/2}^{1/2} f(x + ih - x + hy)\,dy = f(x) + (ih - x)f'(x) + R_1(x),$$

where $|R_1(x)| \le C_1h^2\{|f''(x-h)| + |f''(x+h)|\}$ and $C_1, C_2, \ldots$ denote positive constants. Thus,

$$\log g(x) = \log f(x) + (ih - x)f'(x)f(x)^{-1} + R_2(x), \tag{2.17}$$

where

$$
\begin{aligned}
|R_2(x)| \le C_2\Big(&h^2\big[\{f'(x)/f(x)\}^2 + \{|f''(x-h)| + |f''(x+h)|\}f(x)^{-1}\big]\\
&\times I\big[C_1h^2\{|f''(x-h)| + |f''(x+h)|\}f(x)^{-1} \le 1/2\big]\\
&+ \{|\log f(x)| + |\log f(x-h)| + |\log f(x+h)|\}\\
&\times I\big[C_1h^2\{|f''(x-h)| + |f''(x+h)|\}f(x)^{-1} > 1/2\big]\Big).
\end{aligned}
\tag{2.18}
$$

The last inequality entails

Therefore, writing $i = i(x)$ for the index of the block $B_i$ containing $x$,

which establishes (2.14).
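For a concrete instance of (2.14), take $f$ to be the standard normal density, with bins centred at $ih$ (that is, $v = 0$). Then $E(\bar I) = \sum_i q_i\log(q_i/h)$ can be computed exactly from normal tail probabilities. A routine Taylor expansion gives $E(\bar I) - I \approx -(h^2/24)\int f'^2/f$, that is $-h^2/24$ for the standard normal. We add this expansion for illustration only; it is not taken from the paper. The sketch below checks that the scaled error approaches this constant.

```python
import math

def upper_tail(x):
    """P(Z > x) for standard normal Z; erfc keeps relative accuracy in the tail."""
    return 0.5 * math.erfc(x / math.sqrt(2.0))

def expected_bar_I(h):
    """E(bar I) = sum_i q_i log(q_i / h) for N(0,1) data, bins centred at i*h."""
    q0 = 1.0 - 2.0 * upper_tail(h / 2)
    total = q0 * math.log(q0 / h)
    i = 1
    while (i - 0.5) * h < 40.0:
        qi = upper_tail((i - 0.5) * h) - upper_tail((i + 0.5) * h)
        if qi > 0.0:
            total += 2.0 * qi * math.log(qi / h)   # bins i and -i, by symmetry
        i += 1
    return total

true_I = -0.5 * math.log(2 * math.pi * math.e)
for h in (0.4, 0.2, 0.1):
    # Scaled error; the Taylor expansion suggests the limit -1/24 = -0.0417
    print(h, (expected_bar_I(h) - true_I) / h ** 2)
```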

(ii) PROOF OF (2.15).

Observe that

whence

where $g$ is as in (i) above. We may prove from (2.17) and (2.18) that

which together with (2.19) implies (2.15).

(iii) PROOF OF (2.16).

Define $\Delta_i = (N_i - nq_i)/(nq_i)$, and let $\sum'$ and $\sum''$ denote summation over values of $i$ such that $nq_i > 1$ and $nq_i \le 1$, respectively. Write $m$ for the number of $i$'s such that $nq_i > 1$. Since $n(\hat I - \bar I) = \sum_i N_i\log(1 + \Delta_i)$ and $|\log(1+u) - u| \le 2\{u^2 + |\log(1+u)|\,I(u < -1/2)\}$, we have

$$n|\hat I - \bar I| \le \Big|\sum_i{}' N_i\Delta_i\Big| + 2\sum_i{}' N_i\Delta_i^2 + 2\sum_i{}' N_i|\log(1+\Delta_i)|\,I(\Delta_i < -1/2) + \sum_i{}'' N_i|\log(1+\Delta_i)|. \tag{2.20}$$

Now, $N_i\Delta_i = (nq_i)^{-1}(N_i - nq_i)^2 + N_i - nq_i$, and so

$$E\Big|\sum_i{}' N_i\Delta_i\Big| \le m + n^{1/2}\Big(1 - \sum_i{}' q_i\Big)^{1/2} = m + o(n^{1/2}),$$

since $\sum_i' q_i \to 1$. Likewise, noting that $N_i\Delta_i^2 = (nq_i)^{-2}(N_i - nq_i)^3 + (nq_i)^{-1}(N_i - nq_i)^2$, we see that

$$E\Big(\sum_i{}' N_i\Delta_i^2\Big) = \sum_i{}'(nq_i)^{-1}(1 - 3q_i + 2q_i^2) + \sum_i{}'(1 - q_i) \le 2m.$$

If $\Delta_i < -1/2$ then $1 + \Delta_i = N_i/(nq_i) < 1/2$, and so

$$N_i|\log(1+\Delta_i)| = N_i\log\{(nq_i)/N_i\} \le N_i\log(nq_i),$$

whence

$$E\Big\{\sum_i{}' N_i|\log(1+\Delta_i)|\,I(\Delta_i < -1/2)\Big\} \le 2\sum_i{}' nq_i|\log(nq_i)|\,P(\Delta_i < -1/2)^{1/2},$$

the last step following from the Cauchy-Schwarz inequality. By Bernstein's inequality,

$$P(\Delta_i < -1/2) = P\big(N_i - nq_i < -\tfrac12 nq_i\big) \le \exp(-nq_i/16),$$

and so, defining $C_3 = \sup_{x>0} x|\log x|\exp(-x/32)$, we have

$$E\Big\{\sum_i{}' N_i|\log(1+\Delta_i)|\,I(\Delta_i < -1/2)\Big\} \le 2C_3m.$$
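The two constants in this step can be checked numerically. The sketch below compares the exact binomial probability $P(\Delta_i < -1/2) = P(N_i < nq_i/2)$ with the bound $\exp(-nq_i/16)$, for a few $(n, q_i)$ pairs chosen arbitrarily. It also evaluates $C_3$ on a grid. The grid is our choice, so the printed $C_3$ is an approximation.

```python
import math

def binom_lower_tail(n, q, t):
    """Exact P(N < t) for N ~ Binomial(n, q)."""
    total = 0.0
    k = 0
    while k < t:
        log_pmf = (math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
                   + k * math.log(q) + (n - k) * math.log1p(-q))
        total += math.exp(log_pmf)
        k += 1
    return total

cases = [(1000, 0.002), (1000, 0.01), (1000, 0.05), (5000, 0.01)]
for n, q in cases:
    mean = n * q
    exact = binom_lower_tail(n, q, mean / 2)    # P(Delta_i < -1/2)
    bound = math.exp(-mean / 16)                # bound used in the proof
    print(n, q, exact, bound, exact <= bound)

# C_3 = sup_{x > 0} x |log x| exp(-x / 32), approximated on the grid 0.01, ..., 1000
c3 = max((j / 100) * abs(math.log(j / 100)) * math.exp(-j / 3200) for j in range(1, 100001))
print(round(c3, 2))
```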

Combining the estimates from (2.20) onwards, we deduce that

$$nE|\hat I - \bar I| \le (5 + 4C_3)m + o(n^{1/2}) + \sum_i{}'' E\{N_i|\log(1+\Delta_i)|\}. \tag{2.21}$$

Observe next that $E\{N_i|\log(1+\Delta_i)|\} \le E(N_i\log N_i) + nq_i|\log(nq_i)|$. If $nq_i \le 1$ then $E(N_i\log N_i) \le E(N_i^2) \le 2nq_i$, and $|\log(nq_i)| = -\log(nq_i) \le -\log(q_i/h)$, whence

$$\sum_i{}'' E\{N_i|\log(1+\Delta_i)|\} \le 2n\sum_i{}'' q_i - n\sum_i{}'' q_i\log(q_i/h). \tag{2.22}$$

Write $B$ for the union of $B_i$ over indices $i$ satisfying $nq_i \le 1$, and let $g$ be as in (i). By that result, $\int f|\log(f/g)| = O(h^2)$, and so

$$\sum_i{}'' q_i\log(q_i/h) = \int_B f\log g = \int_B f\log f + O(h^2).$$

Put $D = (-\infty, x_{1n}) \cup (x_{2n}, \infty)$. In view of the monotonicity of the tails of $f$,

$$-\int_B f\log f = \int_D f|\log f| + o(n^{-1/2}),$$

implying that

$$-\sum_i{}'' q_i\log(q_i/h) = \int_D f|\log f| + O(h^2) + o(n^{-1/2}).$$

Similarly,

$$\sum_i{}'' q_i = \int_D f + O(h^2) + o(n^{-1/2}) \le \int_D f|\log f| + O(h^2) + o(n^{-1/2}),$$

whence, by (2.22),

$$n^{-1}\sum_i{}'' E\{N_i|\log(1+\Delta_i)|\} \le 3\int_D f|\log f| + O(h^2) + o(n^{-1/2}).$$

The desired result (2.16) follows from this formula and (2.21).


3. Kernel estimators

3.1 Methodology

Let $X_1, \ldots, X_n$ denote a random sample drawn from a $p$-variate distribution with density $f$, let $K$ be a $p$-variate density function, and write $h$ for a (scalar) bandwidth. (In practice, the data would typically be standardised for scale before using a single bandwidth; see Silverman ((1986), p. 84 ff.).) Then

$$\hat f_i(x) = \{(n-1)h^p\}^{-1}\sum_{j \ne i} K\{(x - X_j)/h\}$$

is a "leave-one-out" estimator of $f$, and

$$\hat I_k = n^{-1}\sum_{i=1}^n \log\hat f_i(X_i)$$

is an estimator of negative entropy, $I$.
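Here is a minimal Python sketch of the leave-one-out estimator $\hat I_k$ for $p = 1$. Following the simulation study of Subsection 3.2, the kernel is taken to be the Student's $t$ density with four degrees of freedom, $K(u) = (3/8)(1 + u^2/4)^{-5/2}$. The sample and the bandwidth $h = 0.3$ are illustrative choices. The double loop costs $O(n^2)$ kernel evaluations.

```python
import math
import random

def t4_kernel(u):
    """Student's t density with 4 degrees of freedom: (3/8)(1 + u^2/4)^(-5/2)."""
    return 0.375 * (1.0 + u * u / 4.0) ** -2.5

def loo_kernel_neg_entropy(xs, h, kernel=t4_kernel):
    """hat I_k = n^{-1} sum_i log hat f_i(X_i) for p = 1 (leave-one-out)."""
    n = len(xs)
    total = 0.0
    for i, xi in enumerate(xs):
        s = sum(kernel((xi - xj) / h) for j, xj in enumerate(xs) if j != i)
        total += math.log(s / ((n - 1) * h))
    return total / n

rng = random.Random(2)
sample = [rng.gauss(0.0, 1.0) for _ in range(200)]
print(round(loo_kernel_neg_entropy(sample, 0.3), 3))   # compare with I = -1.42
```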

In the case $p = 1$, properties of $\hat I_k$ have been studied in the context of estimating Kullback-Leibler loss (Hall (1987)). There it was shown that the following expansion holds, provided the kernel function has appropriately heavy tails (e.g. if $K$ is a Student's $t$ density, rather than a Normal density), and the tails of $f$ decrease like $|x|^{-\alpha}$ as $|x| \to \infty$:

$$\hat I_k = \check I - \{C_1(nh)^{-1+(1/\alpha)} + C_2h^4\} + o_p\{(nh)^{-1+(1/\alpha)} + h^4\}, \tag{3.1}$$

where $C_1, C_2 > 0$.

More generally, suppose $p \ge 1$ and the tails of $f$ decrease like $\|x\|^{-\alpha}$, say $f(x) = c_1(c_2 + \|x\|^2)^{-\alpha/2}$ for $c_1, c_2 > 0$ and $\alpha > p$. (The latter condition is necessary to ensure that this $f$ is integrable.) Then, defining $\check I = n^{-1}\sum_i \log f(X_i)$, we have

$$\hat I_k = \check I - \{C_1(nh^p)^{-1+(p/\alpha)} + C_2h^4\} + o_p\{(nh^p)^{-1+(p/\alpha)} + h^4\}, \tag{3.2}$$

for positive constants $C_1$ and $C_2$. The techniques of proof are very similar to those in Hall (1987), and so we shall not elaborate on the proof.

Of course, $\check I$ is unbiased and root-$n$ consistent for $I$, with variance $n^{-1}\{\int(\log f)^2 f - I^2\}$. The second-order term in (3.2) is

$$J = C_1(nh^p)^{-1+(p/\alpha)} + C_2h^4,$$

and is minimized by taking $h$ to be of size $n^{-a}$, where

$$a = (\alpha - p)/\{\alpha(p + 4) - p^2\}. \tag{3.3}$$

Then $J$ is of size $n^{-4a}$, which is of smaller order than $n^{-1/2}$ if and only if $1 \le p \le 3$ and $\alpha > p(8-p)/(4-p)$. When $p = 1$ this reduces to $\alpha > 7/3$, which is equivalent to existence of a moment of order higher than $4/3$. (For example, finite variance suffices.)
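The arithmetic behind (3.3) and the root-$n$ condition can be spelled out. With $h = n^{-a}$ we have $nh^p = n^{1-ap}$. The first term of $J$ is then of size $n^{-(1-ap)(1-p/\alpha)}$ and the second is of size $n^{-4a}$. Equating the exponents gives (3.3). Moreover, $n^{-4a} = o(n^{-1/2})$ exactly when $a > 1/8$, which rearranges to $\alpha(4-p) > p(8-p)$. Below is a small exact-arithmetic check in Python; the function names are ours.

```python
from fractions import Fraction

def bandwidth_exponent(alpha, p):
    """Exponent a in (3.3): the J-minimising bandwidth is of size n^{-a}."""
    return Fraction(alpha - p) / (alpha * (p + 4) - p * p)

def tail_threshold(p):
    """Root-n consistency needs alpha > p(8 - p)/(4 - p); only possible for p <= 3."""
    if not 1 <= p <= 3:
        raise ValueError("the condition can only hold for p = 1, 2, 3")
    return Fraction(p * (8 - p), 4 - p)

for p in (1, 2, 3):
    alpha_star = tail_threshold(p)
    # At the threshold, 4a equals 1/2 exactly, i.e. J is of size n^{-1/2}
    print(p, alpha_star, 4 * bandwidth_exponent(alpha_star, p))
```

For $p = 1, 2, 3$ the thresholds are $7/3$, $6$ and $15$. The value $6$ for $p = 2$ is the one used in the next paragraph.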

Recall from Subsection 2.2 that histogram estimators only allow root-$n$ consistent estimation of entropy when $p = 1$. We have just seen that nonnegative kernel estimators extend this range to $1 \le p \le 3$, and so they do have advantages. The case $p = 2$ is of practical interest, since practitioners of exploratory projection pursuit sometimes wish to project a high-dimensional distribution into two, rather than one, dimensions. As noted above, in the case of a density whose tails decrease like $\|x\|^{-\alpha}$, we need

$$\alpha > 2(8-2)/(4-2) = 6$$

if we are to get $\hat I_k = \check I + o_p(n^{-1/2})$ in $p = 2$ dimensions. This corresponds to the existence of a moment higher than the fourth.

Since $C_1$ and $C_2$ in formula (3.2) are both positive, a simple practical, empirical rule for choosing bandwidth is to select $h$ so as to maximize $\hat I_k(h)$. Now, it may be proved that (3.2) is available uniformly over $h$'s in any set $\mathcal H_n$ such that $\mathcal H_n \subseteq (n^{-1+\delta}, n^{-\delta})$ for some $0 < \delta < 1/2$ and $\#\mathcal H_n = O(n^C)$ for some $C > 0$. If the maximization is taken over a rich set of such $h$'s then $\hat I_k = \check I + O_p(n^{-4a})$, where $a$ is given by (3.3). Hence $\hat I_k = \check I + o_p(n^{-1/2})$ if $1 \le p \le 3$ and $\alpha > p(8-p)/(4-p)$.

In principle, the estimator $\hat I_k$ may be constructed without using "leave-one-out" methods. If we define

$$\bar f(x) = (nh^p)^{-1}\sum_{j=1}^n K\{(x - X_j)/h\},$$

then an appropriate entropy estimator is given by

$$\bar I_k = n^{-1}\sum_{i=1}^n \log\bar f(X_i) = n^{-1}\sum_{i=1}^n \log\{(1 - n^{-1})\hat f_i(X_i) + (nh^p)^{-1}K(0)\}.$$

Here, as noted above, it is essential that the kernel have appropriately heavy tails; for example, $K$ could be a Student's $t$ density.
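The identity in the display above follows from $\bar f(X_i) = (nh^p)^{-1}[K(0) + \sum_{j\ne i}K\{(X_i - X_j)/h\}]$. Below is a short numerical confirmation for $p = 1$ with the Student's $t_4$ kernel. The sample size, seed and bandwidth are arbitrary choices.

```python
import math
import random

def t4_kernel(u):
    """Student's t density with 4 degrees of freedom."""
    return 0.375 * (1.0 + u * u / 4.0) ** -2.5

def full_kde(x, xs, h):
    """bar f(x) = (n h)^{-1} sum_{j=1}^n K{(x - X_j)/h}, p = 1 (no leave-one-out)."""
    return sum(t4_kernel((x - xj) / h) for xj in xs) / (len(xs) * h)

def loo_kde(i, xs, h):
    """hat f_i(X_i): leave-one-out estimator evaluated at the i-th data point."""
    xi = xs[i]
    s = sum(t4_kernel((xi - xj) / h) for j, xj in enumerate(xs) if j != i)
    return s / ((len(xs) - 1) * h)

rng = random.Random(3)
xs = [rng.gauss(0.0, 1.0) for _ in range(50)]
h, n = 0.4, len(xs)
bar_I = sum(math.log(full_kde(x, xs, h)) for x in xs) / n
via_loo = sum(math.log((1 - 1 / n) * loo_kde(i, xs, h) + t4_kernel(0.0) / (n * h))
              for i in range(n)) / n
print(bar_I, via_loo)   # the two forms agree up to rounding
```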

Formulae (3.1) and (3.2) continue to hold in this case, except that the constant $C_1$ is no longer positive. Compare formula (2.1), which also concerns an estimator that is not constructed by the "leave-one-out" method. Thus, the bandwidth selection argument described above, which maximizes the estimator over $h$, is not appropriate. Instead, a penalty term should be subtracted before attempting maximization, much as in the case described in Section 2.

3.2 Simulation study

This subsection describes a simulation study of the behaviour of our kernel estimator of negative entropy, $\hat I_k$. It is similar to the previous simulation study of the histogram estimator presented in Subsection 2.5, and its interpretation is subject to the same caveat regarding the number of simulations. In all univariate cases shown, the kernel used is a Student's $t$ with four degrees of freedom, which is heavy-tailed. In the bivariate case, the kernel was a product of two such functions. The bandwidth $h$ chosen is that which maximizes $\hat I_k(h)$, this being the empirical rule discussed previously. The function $\hat I_k(h)$ is not smoothed before $h$ is chosen, as it does not fluctuate as much as the inherently discrete histogram estimator. As a result, the quantiles of $h$ vary much less for the kernel estimator examples presented in Table 2 than for the associated histogram estimator examples of Table 1.
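Below is a minimal sketch of the bivariate case as described. It computes the leave-one-out $\hat I_k(h)$ with the product of two $t_4$ kernels and chooses $h$ to maximize $\hat I_k(h)$ over a grid, without smoothing. The grid, the sample size and the seed are our illustrative choices. The correlated normal sample mimics the second bivariate example of Table 2. The cost is $O(n^2)$ kernel evaluations per grid point.

```python
import math
import random

def t4(u):
    """Univariate Student's t density with 4 degrees of freedom."""
    return 0.375 * (1.0 + u * u / 4.0) ** -2.5

def loo_neg_entropy_2d(pts, h):
    """Leave-one-out hat I_k(h) for p = 2, product kernel K(u, v) = t4(u) t4(v)."""
    n = len(pts)
    total = 0.0
    for i, (xi, yi) in enumerate(pts):
        s = sum(t4((xi - xj) / h) * t4((yi - yj) / h)
                for j, (xj, yj) in enumerate(pts) if j != i)
        total += math.log(s / ((n - 1) * h * h))
    return total / n

def select_bandwidth(pts, grid):
    """Empirical rule: the grid value of h maximising hat I_k(h), no smoothing."""
    scores = {h: loo_neg_entropy_2d(pts, h) for h in grid}
    best = max(scores, key=scores.get)
    return best, scores[best]

# Bivariate normal with unit variances and correlation 0.8 (true I = -2.33)
rho = 0.8
rng = random.Random(4)
pts = []
for _ in range(200):
    z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
    pts.append((z1, rho * z1 + math.sqrt(1.0 - rho * rho) * z2))

grid = [0.1 * j for j in range(1, 7)]
h_best, estimate = select_bandwidth(pts, grid)
print(round(h_best, 1), round(estimate, 2))
```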

Table 2. Performance of kernel estimator.

n     E(Î_k)   [E(Î_k-I)²]^½   E(Î_k)-I   h quantiles

One dimension

N(0,1): true value = -1.42
50    -1.45    0.110           -0.032     (0.3, 0.4, 0.4, 0.5)
100   -1.44    0.070           -0.023     (0.3, 0.3, 0.4, 0.4)
200   -1.44    0.046           -0.016     (0.2, 0.3, 0.3, 0.3)

t (dof = 6): integrated value = -1.59
50    -1.63    0.142           -0.040     (0.4, 0.5, 0.5, 0.6)
100   -1.59    0.092           -0.001     (0.3, 0.4, 0.5, 0.5)
200   -1.61    0.067           -0.023     (0.2, 0.3, 0.4, 0.4)

t (dof = 4): integrated value = -1.68
50    -1.68    0.134           -0.004     (0.3, 0.5, 0.6, 0.7)
100   -1.68    0.106           -0.006     (0.2, 0.4, 0.5, 0.5)
200   -1.67    0.078            0.007     (0.2, 0.3, 0.4, 0.5)

t (dof = 3): integrated value = -1.77
50    -1.75    0.151            0.013     (0.2, 0.5, 0.6, 0.7)
100   -1.75    0.131            0.015     (0.2, 0.4, 0.5, 0.6)
200   -1.75    0.086            0.013     (0.2, 0.3, 0.4, 0.5)

Two dimensions

N(0, I): true value = -2.84
50    -2.94    0.174           -0.098     (0.4, 0.5, 0.5, 0.5)
100   -2.91    0.117           -0.076     (0.4, 0.4, 0.4, 0.5)
200   -2.88    0.082           -0.046     (0.3, 0.4, 0.4, 0.4)

N(0, V), correlation 0.8: true value = -2.33
50    -2.48    0.207           -0.149     (0.3, 0.3, 0.3, 0.4)
100   -2.45    0.150           -0.119     (0.2, 0.3, 0.3, 0.3)
200   -2.40    0.107           -0.072     (0.2, 0.2, 0.2, 0.3)

Four univariate examples are presented: the normal and three Student's $t$ distributions, with 6, 4 and 3 degrees of freedom respectively. In general, the bias is negative, as predicted by (3.1). The error is less than that for the associated histogram estimator examples, again as expected. The bias is positive for the most heavy-tailed distribution, the Student's $t$ with three degrees of freedom. This is perhaps because higher-order terms have a large effect on the expansion (3.1).

Two bivariate examples are presented. Both are bivariate normals; in the first, the components are independent, and in the second, the correlation coefficient is 0.8. In each case, the true negative entropy is known. The kernel estimator performs well in both examples, given the small sample sizes. However, the computational work required to calculate the distance between every pair of points makes the kernel estimator intractable for exploratory projection pursuit. The binning performed in the histogram estimator reduces the work required in the $p = 1$ case from $O(n^2)$ to $O(m^2)$, where $m$ is the number of bins. Unfortunately, this approach cannot be used in the $p = 2$ case, as discussed in Section 2.
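The true values quoted in Table 2 for the normal examples follow from the closed form $I = -\tfrac{p}{2}\log(2\pi e) - \tfrac12\log\det V$ for a $p$-variate normal with covariance matrix $V$. This is a standard formula, not one stated in the paper. For unit variances and correlation $\rho$, $\det V = 1 - \rho^2$:

```python
import math

def normal_neg_entropy(p, det_cov):
    """I = integral of f log f for a p-variate normal with covariance determinant det_cov."""
    return -0.5 * p * math.log(2 * math.pi * math.e) - 0.5 * math.log(det_cov)

print(round(normal_neg_entropy(1, 1.0), 2))             # -1.42
print(round(normal_neg_entropy(2, 1.0), 2))             # -2.84
print(round(normal_neg_entropy(2, 1.0 - 0.8 ** 2), 2))  # -2.33
```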

Acknowledgements

The authors would like to thank the referees for their helpful comments and suggestions.

REFERENCES

Friedman, J. H. (1987). Exploratory projection pursuit, J. Amer. Statist. Assoc., 76, 817-823.

Goldstein, L. and Messer, K. (1991). Optimal plug-in estimators for nonparametric functional estimation, Ann. Statist. (to appear).

Hall, P. (1987). On Kullback-Leibler loss and density estimation, Ann. Statist., 15, 1491-1519.

Hall, P. (1989). On polynomial-based projection indices for exploratory projection pursuit, Ann. Statist., 17, 589-605.

Hall, P. (1990). Akaike's information criterion and Kullback-Leibler loss for histogram density estimation, Probab. Theory Related Fields, 85, 449-466.

Hill, B. M. (1975). A simple general approach to inference about the tail of a distribution, Ann. Statist., 3, 1163-1174.

Huber, P. J. (1985). Projection pursuit (with discussion), Ann. Statist., 13, 435-525.

Joe, H. (1989). Estimation of entropy and other functionals of a multivariate density, Ann. Inst. Statist. Math., 41, 683-697.

Jones, M. C. and Sibson, R. (1987). What is projection pursuit? (with discussion), J. Roy. Statist. Soc. Ser. B, 150, 1-36.

Morton, S. C. (1989). Interpretable projection pursuit, Ph.D. Dissertation, Stanford University, California.

Silverman, B. W. (1986). Density Estimation for Statistics and Data Analysis, Chapman and Hall, London.

Vasicek, O. (1986). A test for normality based on sample entropy, J. Roy. Statist. Soc. Ser. B, 38, 54-59.

Zipf, G. K. (1965). Human Behaviour and the Principle of Least Effort: An Introduction to Human Ecology (Facsimile of 1949 edn.), Hafner, New York.
