Neural Networks by Hajek

Uploaded by baalaajee


Table of contents

1 Introduction

1.1 What is a neural network?

1.2 Benefits of neural networks

1.3 Recommended Literature

1.3.1 Books

1.3.2 Web sites

1.4 Brief history

1.5 Models of a neuron

1.6 Types of activation functions

1.6.1 Threshold activation function (McCulloch-Pitts model)

1.6.2 Piecewise-linear activation function

1.6.3 Sigmoid (logistic) activation function

1.6.4 Hyperbolic tangent function

1.6.5 Softmax activation function

1.7 Multilayer feedforward network

1.8 Problems

2 Learning process

2.1 Error-correction learning

2.2 Hebbian learning

2.3 Supervised learning

2.4 Unsupervised learning

2.5 Learning tasks

2.5.1 Pattern recognition

2.5.2 Function approximation

2.6 Problems

3 Perceptron

3.1 Batch learning

3.2 Sample-by-sample learning

3.3 Momentum learning

3.4 Simulation results

3.4.1 Batch training

3.4.2 Sample-by-sample training

3.5 Perceptron networks

3.6 Problems

4 Back-propagation networks

4.1 Forward pass

4.2 Back-propagation

4.2.1 Update of weight matrix w2

4.2.2 Update of weight matrix w1

4.3 Two passes of computation

4.4 Stopping criteria

4.5 Momentum learning

4.6 Rate of learning

4.7 Pattern and batch modes of training

4.8 Weight initialization

4.9 Generalization

4.10 Training set size

4.11 Network size

4.11.1 Complexity regularization

4.12 Training, testing, and validation sets

4.13 Approximation of functions

4.14 Examples

4.14.1 Classification problem: interlocked spirals

4.14.2 Classification statistics

4.14.3 Classification problem: overlapping classes

4.14.4 Function approximation

4.15 Problems

5 The Hopfield network

5.1 The storage of patterns

5.1.1 Example

5.2 The retrieval of patterns

5.3 Summary of the Hopfield model

5.4 Energy function

5.5 Spurious states

5.6 Computer experiment

5.7 Combinatorial optimization problem

5.7.1 Energy function for TSP

5.7.2 Weight matrix

5.7.3 An analog Hopfield network for TSP

5.8 Problems

6 Self-organizing feature maps

6.1 Activation bubbles

6.2 Self-organizing feature-map algorithm

6.2.1 Adaptive Process

6.2.2 Summary of the SOFM algorithm

6.2.3 Selection of Parameters

6.2.4 Reformulation of the topological neighbourhood

6.3 Examples

6.3.1 Classification problem: overlapping classes

6.4 Problems

7 Temporal processing with neural networks

7.1 Spatio-temporal model of a neuron

7.2 Finite duration impulse response (FIR) model

7.3 FIR back-propagation network

7.3.1 Modelling time series

7.4 Real-time recurrent network

7.4.1 Real-time temporal supervised learning algorithm

8 Radial-basis function networks

8.1 Basic RBFN

8.1.1 Fixed centre at each training sample

8.1.2 Example of function approximation: large RBFN

8.1.3 Fixed centres selected at random

8.1.4 Example of function approximation: small RBFN

8.1.5 Example of function approximation: noisy data

8.2 Generalized RBFN

8.2.1 Self-organized selection of centres

8.2.2 Example: noisy data, self-organized centres

8.2.3 Supervised selection of centres

9 Adaline (Adaptive Linear System)

9.1 Linear regression

9.2 Linear processing element

9.3 Gradient method

9.3.1 Batch and sample-by-sample learning

9.4 Optimal hyperplane for linearly separable patterns (Adatron)

9.4.1 Separation boundary generated by a NeuroSolutions SVM

Das könnte Ihnen auch gefallen

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5794)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (895)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (588)

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (344)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (120)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (399)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2259)

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (266)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (73)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- ChatgptDokument13 SeitenChatgptRezza Remax100% (5)

- DD2437 Lecture01 PHDokument32 SeitenDD2437 Lecture01 PHllNoch keine Bewertungen

- EE6351 Model QPDokument2 SeitenEE6351 Model QPwidepermitNoch keine Bewertungen

- Algorithms: A Novel Evolutionary Algorithm For Designing Robust Analog FiltersDokument22 SeitenAlgorithms: A Novel Evolutionary Algorithm For Designing Robust Analog FiltersbaalaajeeNoch keine Bewertungen

- ARPN JournalDokument12 SeitenARPN JournalbaalaajeeNoch keine Bewertungen

- Chemical Reaction Optimization For Population Transition in Peer-to-Peer Live StreamingDokument8 SeitenChemical Reaction Optimization For Population Transition in Peer-to-Peer Live StreamingbaalaajeeNoch keine Bewertungen

- Electrical Drives and ControlsDokument2 SeitenElectrical Drives and ControlsbaalaajeeNoch keine Bewertungen

- Hydro Thermal 93Dokument8 SeitenHydro Thermal 93Robben RainaNoch keine Bewertungen

- The PSO Family: Deduction, Stochastic Analysis and ComparisonDokument29 SeitenThe PSO Family: Deduction, Stochastic Analysis and ComparisonDheeraj Gopal DubeyNoch keine Bewertungen

- Combined Economic and Emission Dispatch Problems Using Biogeography-Based OptimizationDokument12 SeitenCombined Economic and Emission Dispatch Problems Using Biogeography-Based OptimizationbaalaajeeNoch keine Bewertungen

- Evco 1993 1 1Dokument25 SeitenEvco 1993 1 1baalaajeeNoch keine Bewertungen

- Improved Particle Swarm Optimization Combined With ChaosDokument11 SeitenImproved Particle Swarm Optimization Combined With ChaosbaalaajeeNoch keine Bewertungen

- A Comparison of Constraint-Handling Methods For The Application of ParticleDokument7 SeitenA Comparison of Constraint-Handling Methods For The Application of ParticlebaalaajeeNoch keine Bewertungen

- Multi-Objective Unbalanced Distribution Network Reconfiguration Through Hybrid Heuristic AlgorithmDokument8 SeitenMulti-Objective Unbalanced Distribution Network Reconfiguration Through Hybrid Heuristic AlgorithmbaalaajeeNoch keine Bewertungen

- MiaoDokument5 SeitenMiaobaalaajeeNoch keine Bewertungen

- Indian Railway Station Code IndexDokument3 SeitenIndian Railway Station Code IndexmayurshahNoch keine Bewertungen

- USA Is Commercially Strong Country The People of USA Are Very Hard WorkingDokument5 SeitenUSA Is Commercially Strong Country The People of USA Are Very Hard WorkingbaalaajeeNoch keine Bewertungen

- 01 LoadFlow R1Dokument209 Seiten01 LoadFlow R1Bryan MatulnesNoch keine Bewertungen

- Multiple Objective Particle Swarm Optimization Technique For Economic Load DispatchDokument8 SeitenMultiple Objective Particle Swarm Optimization Technique For Economic Load DispatchbaalaajeeNoch keine Bewertungen

- Neural NetworksDokument114 SeitenNeural Networksrian ngganden100% (2)

- Particle Swarm OptimizationDokument3 SeitenParticle Swarm OptimizationbaalaajeeNoch keine Bewertungen

- Tiruvarutpa of Ramalinga Atikal Palvakaiya Tanippatalkal (In Tamil Script, Tscii Format)Dokument59 SeitenTiruvarutpa of Ramalinga Atikal Palvakaiya Tanippatalkal (In Tamil Script, Tscii Format)api-19968843Noch keine Bewertungen

- ModellingDokument4 SeitenModellingbaalaajeeNoch keine Bewertungen

- Modeling and Simulation of Reduction ZoneDokument15 SeitenModeling and Simulation of Reduction ZoneJarus YdenapNoch keine Bewertungen

- USA Is Commercially Strong Country The People of USA Are Very Hard WorkingDokument5 SeitenUSA Is Commercially Strong Country The People of USA Are Very Hard WorkingbaalaajeeNoch keine Bewertungen

- Tiruvarutpa of Ramalinga Atikal Palvakaiya Tanippatalkal (In Tamil Script, Tscii Format)Dokument59 SeitenTiruvarutpa of Ramalinga Atikal Palvakaiya Tanippatalkal (In Tamil Script, Tscii Format)api-19968843Noch keine Bewertungen

- A New Optimizer Using Particle Swarm TheoryDokument5 SeitenA New Optimizer Using Particle Swarm TheorybaalaajeeNoch keine Bewertungen

- A New Optimizer Using Particle Swarm TheoryDokument5 SeitenA New Optimizer Using Particle Swarm TheorybaalaajeeNoch keine Bewertungen

- The Particle Swarm Optimization Algorithm, Convergence Analysis and Parameter SelectionDokument9 SeitenThe Particle Swarm Optimization Algorithm, Convergence Analysis and Parameter SelectionbaalaajeeNoch keine Bewertungen

- 7 Unit Test System DataDokument1 Seite7 Unit Test System DatabaalaajeeNoch keine Bewertungen

- The Particle Swarm Optimization Algorithm, Convergence Analysis and Parameter SelectionDokument9 SeitenThe Particle Swarm Optimization Algorithm, Convergence Analysis and Parameter SelectionbaalaajeeNoch keine Bewertungen

- Part7.2 Artificial Neural NetworksDokument51 SeitenPart7.2 Artificial Neural NetworksHarris Punki MwangiNoch keine Bewertungen

- CC511 Week 7 - Deep - LearningDokument33 SeitenCC511 Week 7 - Deep - Learningmohamed sherifNoch keine Bewertungen

- Introduction To Deep Learning: by Gargee SanyalDokument20 SeitenIntroduction To Deep Learning: by Gargee Sanyalgiani2008Noch keine Bewertungen

- الشبكات العصبيةDokument18 Seitenالشبكات العصبيةTouil Ghassen0% (1)

- Unit 1 Introduction To Neural NetworksDokument9 SeitenUnit 1 Introduction To Neural NetworksRegved PandeNoch keine Bewertungen

- 8.5 Recurrent Neural NetworksDokument5 Seiten8.5 Recurrent Neural NetworksRajaNoch keine Bewertungen

- Deep Speaker Embeddings For Short-Duration SpeakerDokument6 SeitenDeep Speaker Embeddings For Short-Duration SpeakerMuhammad Gozy Al VaizNoch keine Bewertungen

- RNN LSTMDokument49 SeitenRNN LSTMRajachandra VoodigaNoch keine Bewertungen

- Introduction To The Artificial Neural Networks: Andrej Krenker, Janez Bešter and Andrej KosDokument18 SeitenIntroduction To The Artificial Neural Networks: Andrej Krenker, Janez Bešter and Andrej KosVeren PanjaitanNoch keine Bewertungen

- CS5560 Lect12-RNN - LSTMDokument30 SeitenCS5560 Lect12-RNN - LSTMMuhammad WaqasNoch keine Bewertungen

- Fundamental Tente: Onputational UttmaDokument23 SeitenFundamental Tente: Onputational UttmaSathwik ChandraNoch keine Bewertungen

- CERN Deep Learning and VisionDokument72 SeitenCERN Deep Learning and VisionNarendra SinghNoch keine Bewertungen

- LSTM Recurrent Neural Networks - How To Teach A Network To Remember The Past - by Saul Dobilas - Towards Data ScienceDokument20 SeitenLSTM Recurrent Neural Networks - How To Teach A Network To Remember The Past - by Saul Dobilas - Towards Data Sciencetarunbandari4504Noch keine Bewertungen

- Answers For End-Sem Exam Part - 2 (Deep Learning)Dokument20 SeitenAnswers For End-Sem Exam Part - 2 (Deep Learning)Ankur BorkarNoch keine Bewertungen

- 2019 6S191 L3 PDFDokument71 Seiten2019 6S191 L3 PDFManash Jyoti KalitaNoch keine Bewertungen

- ECE3009 Neural-Networks-and-Fuzzy-Control ETH 1 AC40 PDFDokument2 SeitenECE3009 Neural-Networks-and-Fuzzy-Control ETH 1 AC40 PDFSmruti RanjanNoch keine Bewertungen

- Brand Logo Detection Using Convolutional Neural Network IJERTCONV6IS13121Dokument4 SeitenBrand Logo Detection Using Convolutional Neural Network IJERTCONV6IS13121Minh PhươngNoch keine Bewertungen

- Visual Image Caption Generator Using Deep LearningDokument7 SeitenVisual Image Caption Generator Using Deep LearningSai Pavan GNoch keine Bewertungen

- All Types of Numericals - MLDokument11 SeitenAll Types of Numericals - MLvarad deshmukhNoch keine Bewertungen

- Model Examinations-Psd3C Model Examinations - Psd3CDokument1 SeiteModel Examinations-Psd3C Model Examinations - Psd3CselvamNoch keine Bewertungen

- 7 Types of Neural Network Activation FunctionsDokument16 Seiten7 Types of Neural Network Activation FunctionsArindam PaulNoch keine Bewertungen

- Lecture9 Dropout Optimization CnnsDokument79 SeitenLecture9 Dropout Optimization CnnsSaeed FirooziNoch keine Bewertungen

- Lec 01Dokument31 SeitenLec 01indrahermawanNoch keine Bewertungen

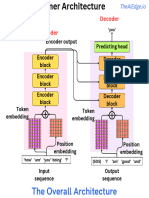

- The Transformer ArchitectureDokument9 SeitenThe Transformer Architecturealexandre albalustroNoch keine Bewertungen

- Self Organizing MapsDokument23 SeitenSelf Organizing MapsIbrahim IsleemNoch keine Bewertungen

- Neural Network Topologies: Input Layer Output LayerDokument30 SeitenNeural Network Topologies: Input Layer Output LayerSadia AkterNoch keine Bewertungen

- A Comparative Study On Selecting Acoustic Modeling Units in Deep Neural Networks Based Large Vocabulary Chinese Speech RecognitionDokument6 SeitenA Comparative Study On Selecting Acoustic Modeling Units in Deep Neural Networks Based Large Vocabulary Chinese Speech RecognitionGalaa GantumurNoch keine Bewertungen

- Unit 2 - MCQ Bank PDFDokument15 SeitenUnit 2 - MCQ Bank PDFDr.J. ThamilselviNoch keine Bewertungen