International Journal of Information Technology, Control and Automation (IJITCA) Vol.4, No.3, July 2014

DOI:10.5121/ijitca.2014.4301

DATA CLUSTERING USING KERNEL BASED ALGORITHM

Mahdi'eh Motamedi¹ and Hasan Naderi²

¹ MS Software Engineering, Science and Research Branch, Islamic Azad University, Lorestan, Iran

² Faculty of Software Engineering, Science and Technology, Tehran, Iran

Abstract

In the machine learning community there is a recent trend of constructing nonlinear versions of linear algorithms through the kernel method, for example kernel principal component analysis, kernel Fisher discriminant analysis, support vector machines (SVMs), and the current kernel clustering algorithms. Typically, in unsupervised clustering algorithms that use the kernel method, a nonlinear mapping is applied first in order to map the data into a much higher-dimensional feature space, and clustering is then performed there. A drawback of these kernel clustering algorithms is that the cluster prototypes reside in the high-dimensional feature space and therefore lack clear and intuitive descriptions unless an additional approximate projection from the feature space back to the data space is used, as is done in the existing literature. In this paper, using the kernel method, a novel clustering algorithm founded on the conventional fuzzy c-means algorithm (FCM) is proposed and called the kernel fuzzy c-means algorithm (KFCM). The method adopts a novel kernel-induced metric in the data space to replace the original Euclidean norm metric in FCM, and the cluster prototypes still reside in the data space, so that the clustering results can be reformulated and interpreted in the original space. This property is used for clustering incomplete data. Experiments on sample data illustrate that KFCM has better clustering performance and is more robust than other variants of FCM for clustering incomplete data.

Keywords:

Kernel method, kernel clustering algorithms, clustering prototype, fuzzy clustering algorithm, kernel-induced metric.

1. INTRODUCTION

Three different forms of grouping (group membership) are possible. With non-overlapping groups, each object is allocated to exactly one group (segment, cluster); with overlapping groups, an object may be assigned to multiple groups; and with fuzzy groups, each element belongs to each group with a certain degree of membership. Hard methods (e.g. k-means, spectral clustering, kernel PCA) assign each data point to exactly one cluster, whereas soft methods (e.g. the EM algorithm with a mixture-of-Gaussians model) assign to each data point, for each cluster, a degree to which the point is associated with that cluster. Soft methods are especially useful when the data points are distributed relatively homogeneously in space and the clusters appear only as regions of increased data-point density, that is, when there are, for example, transitions between the clusters or background noise; hard methods are of little use in this case (Zhang & Lu, 2009).
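The difference between hard and soft assignment can be illustrated with a minimal Python sketch. It assumes Euclidean distances and, for the soft case, the membership rule commonly used in fuzzy c-means with fuzzifier m; the function names and the example values are hypothetical and are not taken from the paper.

```python
import math


def hard_assignment(point, centers):
    """Hard clustering: return the index of the single nearest center."""
    dists = [math.dist(point, c) for c in centers]
    return dists.index(min(dists))


def fuzzy_memberships(point, centers, m=2.0):
    """Soft clustering: return one membership degree per cluster.

    Uses the fuzzy c-means style rule u_j = 1 / sum_k (d_j / d_k)^(2/(m-1)),
    so the degrees are non-negative and sum to 1.
    """
    dists = [math.dist(point, c) for c in centers]
    zeros = [d == 0.0 for d in dists]
    if any(zeros):
        # The point coincides with at least one center: share membership there.
        return [1.0 / sum(zeros) if z else 0.0 for z in zeros]
    power = 2.0 / (m - 1.0)
    return [1.0 / sum((d_j / d_k) ** power for d_k in dists) for d_j in dists]


centers = [(0.0, 0.0), (4.0, 0.0)]
point = (1.0, 0.0)
label = hard_assignment(point, centers)      # one cluster index
degrees = fuzzy_memberships(point, centers)  # one degree per cluster
```

A point lying between two centers receives a single label in the hard case but a graded pair of degrees in the soft case, which is what makes soft methods suitable for overlapping or noisy clusters.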


The objective of clustering is to partition data points into homogeneous groups; the problem arises in many fields, including machine learning, pattern recognition, image processing, and data mining. k-means is one of the most popular clustering algorithms; it minimizes the clustering error, defined as the sum of the squared Euclidean distances between every data point and its corresponding cluster center (Jain, 2010). The algorithm has two key shortcomings: its solutions depend on the initial positions of the cluster centers, which can lead to poor local minima, and it can find only linearly separable clusters.
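For reference, the clustering error described in words above can be written out as follows. The symbols are notational assumptions, since the source gives no formula at this point: $x_i$ is a data point, $C_j$ the set of points assigned to cluster $j$, $\mu_j$ the center of that cluster, and $k$ the number of clusters.

```latex
E(\mu_1, \dots, \mu_k) \;=\; \sum_{j=1}^{k} \sum_{x_i \in C_j} \lVert x_i - \mu_j \rVert^{2}
```

Each data point contributes the squared Euclidean distance to the center of the one cluster it belongs to, so the error depends both on the partition and on the positions of the centers.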

A simple and popular way to overcome the first shortcoming is to use multiple restarts, in which the cluster centers are placed randomly at different initial positions so that better local minima can be found. One still has to decide on the number of restarts, and one can never be certain that the initializations tried are sufficient to attain a near-optimal minimum. The global k-means algorithm has been proposed to deal with this concern (Kulis, Basu, Dhillon & Mooney, 2009); it uses k-means as a local search procedure. Kernel k-means is an extension of the standard k-means algorithm that maps data points from the input space to a feature space through a nonlinear transformation and minimizes the clustering error in feature space. Clusters that are not linearly separable in input space can therefore be obtained, overcoming the second limitation of k-means.
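A minimal sketch of how the clustering error can be evaluated in feature space without computing the nonlinear mapping explicitly is given below. It relies on the standard kernel-trick expansion of the squared distance between a mapped point and the mean of a mapped cluster; the Gaussian kernel, its width `sigma`, and all function names are assumptions chosen for illustration, not the paper's implementation.

```python
import math


def rbf_kernel(x, y, sigma=1.0):
    """Gaussian (RBF) kernel; sigma is an assumed width parameter."""
    sq = sum((a - b) ** 2 for a, b in zip(x, y))
    return math.exp(-sq / (2.0 * sigma ** 2))


def linear_kernel(x, y):
    """Plain dot product; with it, feature space equals input space."""
    return sum(a * b for a, b in zip(x, y))


def feature_space_sq_dist(x, cluster, kernel):
    """Squared distance between phi(x) and the mean of phi over `cluster`.

    Expands ||phi(x) - m||^2 = K(x,x) - 2/n * sum_j K(x,x_j)
                               + 1/n^2 * sum_j sum_l K(x_j,x_l),
    so only kernel evaluations are needed.
    """
    n = len(cluster)
    self_term = kernel(x, x)
    cross_term = sum(kernel(x, p) for p in cluster) / n
    within_term = sum(kernel(p, q) for p in cluster for q in cluster) / (n * n)
    return self_term - 2.0 * cross_term + within_term


cluster = [(0.0, 0.0), (2.0, 0.0), (1.0, 3.0)]  # mean is (1, 1)
d_lin = feature_space_sq_dist((4.0, 5.0), cluster, linear_kernel)
d_rbf = feature_space_sq_dist((4.0, 5.0), cluster, rbf_kernel)
```

With the linear kernel the result reduces to the ordinary squared Euclidean distance to the cluster mean, which is a convenient sanity check; with a nonlinear kernel the same expression measures distance in the induced feature space.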

This paper proposes the global kernel k-means algorithm, a deterministic algorithm for optimizing the clustering error that uses kernel k-means as a local search procedure to solve the clustering problem. The algorithm works incrementally, solving each intermediate clustering problem with kernel k-means. To reduce the complexity, two speed-up schemes are proposed, called fast global kernel k-means and global kernel k-means with convex mixture models. For each intermediate problem, the first variant locates the data point that guarantees the greatest reduction in clustering error when the new cluster is initialized at that point, and executes kernel k-means only once from this initialization. The second variant locates a set of exemplars in the data set by fitting a convex mixture model (Filippone, Camastra, Masulli & Rovetta, 2008); for each intermediate problem it then tries only these exemplars, rather than the complete data set, as possible initializations for the new cluster.

Graph partitioning methods focus on clustering the nodes of a graph. Spectral methods have been applied effectively to optimize a number of graph cut objectives, such as normalized cut and association. The paper presents experimental results that compare global kernel k-means and kernel k-means with multiple restarts on artificial data, digits, graphs, and face images. The results support the claim that global kernel k-means locates near-optimal solutions, since it outperforms kernel k-means with multiple restarts in terms of clustering error.

2. MATERIAL AND METHOD

2.1 K-Means

K-means is an instance of the Expectation-Maximization (EM) algorithm: the second and third steps of the procedure below correspond to the expectation and maximization steps, respectively. Because an EM algorithm converges to a local maximum, k-means likewise converges only to a local optimum. K-means is generally a batch algorithm, in which all inputs are evaluated before any adaptation takes place, unlike an on-line algorithm, in which each input is followed by a codebook modification. A major drawback of k-means is its lack of robustness with respect to outliers. This can be seen easily by considering the effect of outliers on the computation of the mean of a data set.

In the k-means algorithm, the number K of clusters is fixed before the start. A function for calculating the distance between two measurements must be given, and it must be compatible with computing the mean.

The algorithm is as follows:

1. Initialization: (random) selection of K cluster centers

2. Assignment: each object is assigned to its closest cluster center

3. Recalculation: the cluster center is recalculated for each cluster

4. Repeat: if the assignment of objects has changed, go to step 2; otherwise stop
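The four steps above can be sketched in a few lines of Python. This is a minimal illustration rather than the implementation used in the study: it assumes Euclidean distance, points given as tuples, and an optional `init` argument (a hypothetical convenience for reproducible runs).

```python
import math
import random


def kmeans(points, k, init=None, max_iter=100, seed=0):
    """Plain batch k-means; returns (centers, labels)."""
    rng = random.Random(seed)
    # Step 1: initialization (random selection unless centers are supplied).
    centers = [tuple(c) for c in init] if init is not None else rng.sample(points, k)
    labels = None
    for _ in range(max_iter):
        # Step 2: assign each point to its closest cluster center.
        new_labels = [
            min(range(k), key=lambda j: math.dist(p, centers[j])) for p in points
        ]
        # Step 4: stop as soon as the assignment no longer changes.
        if new_labels == labels:
            break
        labels = new_labels
        # Step 3: recompute each center as the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous center
                centers[j] = tuple(sum(c) / len(members) for c in zip(*members))
    return centers, labels


points = [(0, 0), (0, 1), (1, 0), (10, 10), (10, 11), (11, 10)]
centers, labels = kmeans(points, k=2, init=[(0, 0), (10, 10)])
```

The `max_iter` cap is a safeguard added for the sketch; on small data such as the example the loop ends through the unchanged-assignment test of step 4.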

2.2 Peculiarities of the k-Means Algorithm

The k-means algorithm may deliver different results for different starting positions of the cluster centers. A cluster may remain empty in one step and thus (because its cluster center cannot be computed) can no longer be filled. The value of k must be chosen appropriately, and the quality of the result depends strongly on the starting positions. Finding an optimal clustering is an NP-hard problem. The k-means algorithm does not necessarily find the optimal solution, but it is very fast (Graves & Pedrycz, 2010).

To mitigate these problems, one simply restarts the k-means algorithm in the hope that different random cluster centers deliver a different result in the next run. Because of these theoretical shortcomings, the k-means algorithm is regarded as a heuristic, since it nevertheless often provides useful results.
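The restart strategy can be sketched as follows. To keep the example short it assumes scalar (one-dimensional) data; the function names, the number of restarts, and the seed are illustrative assumptions only.

```python
import random


def kmeans_1d(values, k, rng, max_iter=100):
    """One k-means run on scalar data from a random start.

    Returns (clustering_error, centers).
    """
    centers = rng.sample(values, k)
    for _ in range(max_iter):
        groups = [[] for _ in range(k)]
        for v in values:
            nearest = min(range(k), key=lambda j: (v - centers[j]) ** 2)
            groups[nearest].append(v)
        # An empty cluster keeps its previous center.
        updated = [sum(g) / len(g) if g else centers[j] for j, g in enumerate(groups)]
        if updated == centers:
            break
        centers = updated
    error = sum(min((v - c) ** 2 for c in centers) for v in values)
    return error, centers


def best_of_restarts(values, k, n_restarts=10, seed=0):
    """Run k-means from several random starts and keep the lowest-error run."""
    rng = random.Random(seed)
    runs = [kmeans_1d(values, k, rng) for _ in range(n_restarts)]
    return min(runs, key=lambda run: run[0])


values = [0.0, 0.2, 0.4, 5.0, 5.2, 5.4, 10.0, 10.2, 10.4]
best_error, best_centers = best_of_restarts(values, k=3)
```

Keeping the run with the smallest clustering error never does worse than a single run, but, as noted above, it gives no guarantee that the best run is close to the global optimum.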

3. EXTENSIONS AND SPECIAL CASES

Extensions of the k-means algorithm include the k-median algorithm, the k-means++ algorithm, and fuzzy c-means. The ISODATA algorithm can be viewed as a special case of k-means. Typical methods of this kind are k-means and k-medoids (Tran, Wehrens, & Buydens, 2006). The essential idea of the k-means algorithm is to divide n data objects into k classes (where the k classes are not known in advance), so that after the division each data point has a minimum distance to the center of its class.

The k-means algorithm essentially realizes a basic idea of clustering: data points within a class should be as close together as possible, and data points in different classes should be as far apart as possible (Riesen & Bunke, 2010). In most cases the algorithm eventually converges to a good clustering result, but it can also fall into a local optimum. A further problem is that the algorithm presupposes a specified value of k, i.e. the number of clusters. In practical applications the value of k is not given in advance, so another focus of the k-means algorithm is to find a suitable k for which the squared error is small. The general practice is to try a number of values of k and to compare the resulting squared error (distance), bearing in mind that the squared error generally decreases as k grows, so the smallest error alone does not identify the best k.

The central step of the k-means algorithm is to compute the mean of the data points currently in each cluster and use it as the new cluster center, which is how the algorithm gets its name (Ayvaz, Karahan, & Aral, 2007). It is calculated as

$$\mu_j = \frac{1}{|C_j|} \sum_{x_i \in C_j} x_i$$

where $C_j$ denotes the set of data points currently assigned to cluster $j$ and $\mu_j$ its new center.

There is no common standard for the convergence check, because achieving full convergence may not be realistic.
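One frequently used relaxed stopping rule is to end the iteration once no cluster center moves by more than a small tolerance. The sketch below shows that rule only as an example; the function name and the default tolerance are assumptions, and other criteria (unchanged assignments, a cap on iterations, a small change in clustering error) are equally common.

```python
import math


def has_converged(old_centers, new_centers, tol=1e-4):
    """True when no center moved farther than `tol` between two iterations."""
    shift = max(math.dist(a, b) for a, b in zip(old_centers, new_centers))
    return shift <= tol


small_move = has_converged([(0.0, 0.0)], [(0.0, 1e-6)])
large_move = has_converged([(0.0, 0.0)], [(0.5, 0.0)])
```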

4. RESULT AND DISCUSSION

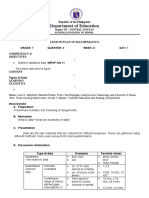

Data set 1 is a two-dimensional set of 424 points consisting of classes that are not linearly separable. The two classes therefore cannot be separated by k-means using only two code vectors, as shown in Figure 1(a).

Figure 1(a)

K-means shares this limitation with other clustering algorithms such as SOM (Wu, Kumar, Quinlan, Ghosh, Yang, Motoda & Steinberg, 2008). The study then applied the kernel method to the data set using two centers.

Figure 1 (b)


Figure 1(b) shows that the algorithm can separate the two clusters. It is important to note that the counterimages of the centers do not exist in the input space.

Figure 2

Figure 2 shows the behavior of the algorithm throughout the stages needed for convergence. Data set 2 is the IRIS data, the most popular benchmark data set in machine learning (Dong, Pei, Dong, & Pei, 2007). One class is linearly separable from the other two, whereas the other two are not linearly separable from each other. The IRIS data are usually represented by projecting the four-dimensional data onto the two principal components. The study compared the results obtained against the Ng-Jordan algorithm, which is a spectral clustering algorithm.

Figure 3 shows the results obtained using k-means and the proposed algorithm. In Table 1, the second column reports the mean performance over 20 runs of k-means, SOM, Neural Gas, the Ng-Jordan algorithm, and the method of this study, obtained with different parameters and initializations. The reported figures show that this algorithm appears to perform better than the other algorithms.

5. CONCLUSION

This paper presents a practical approach to evaluating clustering algorithms and their performance on various high-dimensional and sparse data sets, which place high demands on the algorithms in terms of computational complexity and the assumptions that must be made. The paper discussed approaches for solving this multi-stage optimization problem. Distance matrices and recommender systems were used to reduce the complexity of the problem and to calculate missing values. The study focused on comparing the different methods in terms of the similarity of their results, with the aim of finding similar behavior. Another focus was on the flexibility of the algorithms with respect to the data sets, since the sparseness and the dimensionality of the data have a major impact on the problem. In conclusion, good results were achieved with a combination of recommender systems, hierarchical methods, and Affinity Propagation. Kernel-based algorithms were sensitive to changes in the initial data set.

References

[1] Ayvaz, M. T., Karahan, H., & Aral, M. M. (2007). Aquifer parameter and zone structure estimation using kernel-based fuzzy c-means clustering and genetic algorithm. Journal of Hydrology, 343(3), 240-253.

[2] Dong, G., Pei, J., Dong, G., & Pei, J. (2007). Classification, Clustering, Features and Distances of Sequence Data. Sequence Data Mining, 47-65.

[3] Filippone, M., Camastra, F., Masulli, F., & Rovetta, S. (2008). A survey of kernel and spectral methods for clustering. Pattern Recognition, 41(1), 176-190.

[4] Graves, D., & Pedrycz, W. (2010). Kernel-based fuzzy clustering and fuzzy clustering: A comparative experimental study. Fuzzy Sets and Systems, 161(4), 522-543.

[5] Jain, A. K. (2010). Data clustering: 50 years beyond K-means. Pattern Recognition Letters, 31(8), 651-666.

[6] Kulis, B., Basu, S., Dhillon, I., & Mooney, R. (2009). Semi-supervised graph clustering: a kernel approach. Machine Learning, 74(1), 1-22.

[7] Riesen, K., & Bunke, H. (2010). Graph classification and clustering based on vector space embedding. World Scientific Publishing Co., Inc.

[8] Tran, T. N., Wehrens, R., & Buydens, L. (2006). KNN-kernel density-based clustering for high-dimensional multivariate data. Computational Statistics & Data Analysis, 51(2), 513-525.

[9] Wu, X., Kumar, V., Quinlan, J. R., Ghosh, J., Yang, Q., Motoda, H., & Steinberg, D. (2008). Top 10 algorithms in data mining. Knowledge and Information Systems, 14(1), 1-37.

[10] Zhang, H., & Lu, J. (2009). Semi-supervised fuzzy clustering: A kernel-based approach. Knowledge-Based Systems, 22(6), 477-481.

Data Retrieved from:


- Bathy500mf Manual PDFDokument58 SeitenBathy500mf Manual PDFJavier PérezNoch keine Bewertungen

- The Application of Mixed Methods Designs To Trauma Research PDFDokument10 SeitenThe Application of Mixed Methods Designs To Trauma Research PDFTomas FontainesNoch keine Bewertungen

- Probabilistic Seismic Hazard Analysis of Nepal: Bidhya Subedi, Hari Ram ParajuliDokument6 SeitenProbabilistic Seismic Hazard Analysis of Nepal: Bidhya Subedi, Hari Ram ParajuliPrayush RajbhandariNoch keine Bewertungen

- Motorola Projecting LifeSTYLEDokument2 SeitenMotorola Projecting LifeSTYLEDr K K Mishra75% (4)

- CE206 Curvefitting Interpolation 4Dokument20 SeitenCE206 Curvefitting Interpolation 4afsanaNoch keine Bewertungen

- Importance of Emotional Intelligence in Negotiation and MediationDokument6 SeitenImportance of Emotional Intelligence in Negotiation and MediationsimplecobraNoch keine Bewertungen

- Spa Literature ReviewDokument7 SeitenSpa Literature Reviewc5eakf6z100% (1)

- Chapter 9 HRM NotesDokument5 SeitenChapter 9 HRM NotesAREEJ KAYANINoch keine Bewertungen

- Turning Up The Heat - Full Service Fire Safety Engineering For Concrete StructuresDokument3 SeitenTurning Up The Heat - Full Service Fire Safety Engineering For Concrete StructuresKin ChenNoch keine Bewertungen

- Delayed Thesis Argument ExampleDokument4 SeitenDelayed Thesis Argument Exampleafkogftet100% (1)

- Why women wear lipstick: Preliminary findings reveal self-esteem boostDokument9 SeitenWhy women wear lipstick: Preliminary findings reveal self-esteem boostAkhila JhonNoch keine Bewertungen

- (OG) Characterizing Chinese Consumers Intention To Use Live Ecommerce ShoppingDokument13 Seiten(OG) Characterizing Chinese Consumers Intention To Use Live Ecommerce ShoppingMuhammad YusufaNoch keine Bewertungen

- Eapp Q2Dokument20 SeitenEapp Q2Angeline AdezaNoch keine Bewertungen

- Human Error in Pilotage OperationsDokument8 SeitenHuman Error in Pilotage OperationsFaris DhiaulhaqNoch keine Bewertungen

- Evaluation of Fire Protection Systems in Commercial HighrisesDokument9 SeitenEvaluation of Fire Protection Systems in Commercial HighrisesPrithvi RajNoch keine Bewertungen