
GPU Implementation of a Scalable Non-Linear Congruential Generator for Cryptography Applications

Aditya Belsare, Steve Liu and Sunil P. Khatri

Texas A&M University, College Station, TX 77843

Abstract - Fast key generation algorithms that can generate random sequences of varying atomic lengths and throughput are important for secure data communication. In this paper, we present a non-linear congruential method for generating high-quality random numbers at flexible throughput rates of up to 66 Gbps on a GPU platform. Each random number can have up to 4096 key bits. The method can also be easily extended for implementation on hardware platforms such as FPGAs and ASICs. Our key generator consists of N Linear Congruential Generators (LCGs) running in parallel; we have chosen N = 4096 for the GPU implementation. The outputs of the LCGs are combined using N encoded majority functions, and the encoded majority function used for any bit is changed in every generation iteration. In our GPU implementation of the Non-Linear Congruential Generator (GPU-NLCG), the LCG functions can be altered on the fly by periodically changing the primes, without interrupting generation. The GPU-NLCG can be used for high-speed cryptographic key generation at rates of up to 66 Gbps and can be easily integrated into multi-threaded applications in cryptography and Monte Carlo methods. The GPU-NLCG passes the NIST, Diehard and Dieharder batteries of tests of randomness, which we use to assess the quality of our ciphers.

Categories and Subject Descriptors

I.0 [Computing Methodologies]: General; E.3 [Data Encryption]: Public Key Cryptosystems

Keywords

Cryptography, Graphics Processing Units, Block Cipher, Pseudo-Random Sequence

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee.

GLSVLSI'13, May 2–3, 2013, Paris, France.

Copyright 2013 978-1-4503-1902-7/13/05...$15.00.

Department of Electrical Engineering, TAMU.

Department of Computer Science and Engineering, TAMU.

Department of Electrical Engineering, TAMU.

I. Introduction

Random numbers find varied applications in all spheres of security, particularly in securing high-speed data communication systems. High-speed secure communication under standards such as OC-768 on fiber-optic channels (which operate at 40 Gbps) consumes random numbers at equally high rates. Random numbers are also used in Monte Carlo computational algorithms, which rely on repeated random sampling to compute their results. Such algorithms require high-quality random numbers at extremely high rates.

For secure communication systems, the message to be sent (called the plaintext) is usually combined with a pseudorandom sequence (called a key). The transformed message, called the ciphertext, is then transmitted to the recipient over the communication channel. The cipher, which is the algorithm used to combine the plaintext and the key, can operate in one of the following two ways:

• On the stream of plaintext one bit at a time, called a stream cipher. Example: a bitwise XOR of key bits and plaintext bits.

• On a block of plaintext at a time, called a block cipher. A block cipher encrypts a message by breaking it down into fixed- or variable-sized blocks and encrypting the data in each block. Examples: DES [13] (64-bit block size), AES [14] (128-bit block size), Diffie-Hellman [15] and RSA [16] (variable block sizes).

At the receiving end, the original message is decrypted using the same cipher and key that were used during encryption. Block ciphers are often preferred over stream ciphers for several reasons. Stream ciphers are generally slower than block ciphers: to increase the throughput of a stream cipher, very high clock rates must be employed. Block ciphers are also better at resynchronization. In a synchronous stream cipher, if a ciphertext character is lost during transmission, the sender and receiver must resynchronize their key generators before transmission can resume. With a block cipher, the affected block can simply be discarded or error-corrected using redundant bits in the block. For these reasons, we designed the GPU-NLCG as a block cipher with an adjustable block length, equal to the number of generators used in our CUDA implementation. The maximum block length is 4096 bits in our realization.

Linear generators such as Linear Feedback Shift Registers (LFSRs) and Linear Congruential Generators (LCGs) are a popular choice for pseudo-random number generation, mainly because of their simple hardware implementation and fast performance. For cryptographic applications, however, linear generators such as LFSRs and LCGs are not suitable. In an n-bit LFSR, for example, the feedback polynomial can be inferred by observing 2n consecutive bits of its sequence [1].
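The classical way to recover an LFSR from 2n observed bits, as claimed in [1], is the Berlekamp-Massey algorithm, which the text itself does not name. Below is a minimal C sketch of that algorithm over GF(2), assuming bits are supplied one per byte; `berlekamp_massey`, `lfsr_predict` and `BM_MAX` are illustrative names. As an example input we assume a degree-8 LFSR with characteristic polynomial $x^8 + x^4 + x^3 + x^2 + 1$: feeding 2 · 8 = 16 of its bits to the function yields a connection polynomial that predicts every later bit.

```c
#include <stdint.h>
#include <string.h>

#define BM_MAX 256  /* longest bit sequence this sketch accepts */

/* Berlekamp-Massey over GF(2). Given n <= BM_MAX observed bits s[0..n-1]
 * (values 0 or 1), returns the linear complexity L and fills c[0..L] with
 * the connection polynomial: s[k] = c[1]s[k-1] ^ ... ^ c[L]s[k-L]. */
int berlekamp_massey(const uint8_t *s, int n, uint8_t c[BM_MAX]) {
    uint8_t b[BM_MAX] = {0}, t[BM_MAX];
    memset(c, 0, BM_MAX);
    c[0] = b[0] = 1;
    int L = 0, m = -1;
    for (int i = 0; i < n; i++) {
        /* discrepancy between s[i] and the current LFSR's prediction */
        uint8_t d = s[i];
        for (int j = 1; j <= L; j++) d ^= c[j] & s[i - j];
        if (d) {
            memcpy(t, c, BM_MAX);
            /* c(x) += x^(i-m) * b(x) */
            for (int j = 0; j + i - m < BM_MAX; j++) c[j + i - m] ^= b[j];
            if (2 * L <= i) {   /* the shortest LFSR must grow */
                L = i + 1 - L;
                m = i;
                memcpy(b, t, BM_MAX);
            }
        }
    }
    return L;
}

/* Predict bit s[k] from the L previous bits using a recovered polynomial c. */
uint8_t lfsr_predict(const uint8_t *s, int k, const uint8_t *c, int L) {
    uint8_t bit = 0;
    for (int j = 1; j <= L; j++) bit ^= c[j] & s[k - j];
    return bit;
}
```

Once the polynomial is known, the attacker can run the register forward and reproduce the rest of the keystream.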

A Linear Congruential Generator (LCG) is one of the oldest and best-known pseudo-random number generator algorithms. A general LCG function can be represented by the following recurrence relation:

$$X_n = [m X_{n-1} + p] \bmod \mu \qquad (1)$$

where m is called the multiplier, p the increment, $\mu$ the modulus, and $0 \le X_0 < \mu$ the initial seed. The period of this LCG is at most $\mu$, and for some choices of m and p much less than that. The LCG has a full period if and only if the following conditions are satisfied [2]:

1. $\mu$ and p are coprime (i.e., they have no common factor other than 1).
2. $m - 1$ is divisible by all prime factors of $\mu$.
3. If 4 divides $\mu$, then 4 divides $m - 1$.

To satisfy these conditions, we choose $\mu = 2^{32}$, m as a fixed prime number such that 4 divides $m - 1$, and p as a variable odd prime number. Since the only prime factor of $2^{32}$ is 2 and p is odd, $\mu$ and p are coprime, so the first condition is satisfied. Since $m - 1$ is divisible by 4, it is also divisible by 2, the only prime factor of $2^{32}$; hence the second condition is satisfied. The third condition is then satisfied automatically by our choice of m and $\mu$. For the LCGs used in the first stage of our implementation, we fix m to be a large prime and vary p across generators, using a different prime for each.
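Here is a minimal C sketch of Eq. (1) with $\mu = 2^{32}$, where unsigned 32-bit overflow performs the reduction. The multiplier 1000000009 is a hypothetical choice: it is prime and $m - 1$ is divisible by 4, as required above, but the paper does not state its m. Walking a full cycle of $2^{32}$ states is slow, so the illustrative helper `lcg_period_mod2_16` checks the same conditions on a scaled-down modulus of $2^{16}$. With that modulus, an odd p should give the full period of 65536.

```c
#include <stdint.h>

/* Hypothetical multiplier: prime, and m - 1 = 1000000008 is divisible by 4. */
#define LCG_M 1000000009u

/* One step of Eq. (1) with mu = 2^32: unsigned wraparound does the mod. */
static inline uint32_t lcg_next(uint32_t x, uint32_t m, uint32_t p) {
    return m * x + p;
}

/* Scaled-down check: the same recurrence modulo 2^16, walked until the
 * seed reappears (m is odd, so the map is a bijection and must cycle). */
uint32_t lcg_period_mod2_16(uint32_t m, uint32_t p, uint32_t seed) {
    const uint32_t mask = 0xFFFFu;
    uint32_t x0 = seed & mask, x = x0, steps = 0;
    do {
        x = (uint32_t)(((uint64_t)m * x + p) & mask);
        steps++;
    } while (x != x0 && steps <= mask + 1u);
    return steps;
}
```

With an even increment such as p = 2, the first condition fails and the parity of X never changes, so the period drops to at most 32768.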

LCGs are fast, require minimal memory and are valuable for simulating multiple independent streams. However, when used on their own in cryptographic applications, they have severe shortcomings. If an LCG is seeded with a character and then iterated once, the result is a simple classical cipher called an affine cipher, which is easily broken by standard frequency analysis [3].

At a high level, the NLCG consists of two computation stages. The first stage consists of 4096 32-bit LCGs operating in parallel. A separate primality test kernel runs in parallel with the generating LCGs. After every 10000 iterations of each congruential generator, the primality test kernel supplies the LCG kernel with a new set of 4096 primes for p in Eq. (1). For the second stage, the 32-bit outputs of the LCGs are arranged as 32 rows of 4096 bits each. A 12-bit LFSR and the 12-bit index position are then used to select a unique encoded majority function for each of the 4096 bit positions; the encoded majority function takes 32 inputs per bit position and outputs a binary value. Every encoded majority function is a 32-input, 1-output function that evaluates to 1 for $2^{31}$ randomly chosen input combinations. Thus, the NLCG outputs a block of 4096 bits per iteration.

In GPU-NLCG, we exploit parallelism by launching a large number of independent generators, whose outputs are then permuted in a non-linear fashion by the encoded majority functions. Each generator is mapped to one CUDA thread. Hence, the throughput can be adjusted simply by changing the number of LCG CUDA threads, which makes the NLCG a suitable candidate for different communication rates and standards. Moreover, the design is intended to be secure: the primes used during generation are changed after every 10000 iterations, and the generated bits are permuted using a pool of encoded majority functions, which are selected randomly and dynamically.

The primality test kernel in the first stage of the generator is a parallelized implementation of the Miller-Rabin primality test, a fast but probabilistic method for determining whether a number is prime. To supply primes to the congruential functions, the primality test kernel begins its search at a random offset and returns 4096 primes after scanning a sufficiently large range around the offset. This kernel can also be used as a standalone application, yielding a GPU implementation of a fast primality check for a block of numbers. Primality testing finds crucial applications in RSA, where the product of two large primes is used as a key; such applications can use our GPU-based primality test to find a pool of candidate primes quickly.

To evaluate the quality of the random numbers generated by the NLCG, we tested them with three well-known batteries of tests of randomness, namely NIST [10], Diehard [11] and Dieharder [12]. The NLCG output passed all variants of these three test suites. These suites are stringent and exhaustive, and are frequently used to analyze the quality of ciphers generated by various algorithms.

The remainder of this paper is organized as follows. Section II surveys existing work on LCGs, particularly on GPUs. Section III describes our NLCG design, while Section IV reports experimental results, including results from the NIST, Diehard and Dieharder test suites. Finally, in Section V, we make concluding comments and discuss further work that needs to be done in this area.

II. Previous Work

We use a non-linear function that acts on the LCGs, since LCGs have shortcomings when used on their own, as described earlier. A non-linear generator uses a non-linear function for the next-state feedback or for the output. In a theoretical study [5], a non-linear congruential pseudo-random number generator was shown to be better than a conventional LCG from a statistical viewpoint; however, no implementation was proposed. Our algorithm for such a non-linear function is inspired by the Non-Linear Feedback Shift Register (NLFSR) proposed in [4], with the important difference that it uses a large array of dynamically seeded LCGs with periodically changing generating functions instead of the 3 LFSRs used in [4]. The advantage of our approach is that, being software based, it is not restricted to fixed bit-widths, as a hardware approach like [4] is. Additionally, we keep the length of the array of dynamic LCGs equal to the desired block size of the cipher, and we select functions from an ordered array of majority functions instead of using a multiplexer with functions as inputs.

There have been several efforts to exploit LCGs for non-cryptographic purposes. In [6], for example, a GPU implementation of LCGs is presented in which a large number of LCGs are launched in parallel to obtain an immense speedup on a Fermi GPU. However, no mention is made of the quality of the resulting random numbers. As discussed before, LCGs are not suitable for pseudorandom number generation in cryptographic applications. We tested random sequences generated by LCGs on the GPU using the NIST and Diehard tests and found that they failed many tests. In particular, the NIST Runs test (with a p-value of 0.003) and the BlockFrequency test (with a p-value of 0.005) performed very poorly. The failure in the BlockFrequency test can be attributed to the short period of an LCG, which can be no more than $2^{32}$ for a 32-bit LCG.
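A related weakness of a power-of-two modulus is shown here as an illustration, not as the cause the text identifies. Bit k of $X_n$ depends only on $X_n \bmod 2^{k+1}$, so it repeats every $2^{k+1}$ outputs, and the lowest bit simply alternates. The sketch below checks this on the first outputs of a 32-bit LCG. It uses a hypothetical helper `low_bit_repeats` and the same assumed multiplier 1000000009, with an odd p.

```c
#include <stdbool.h>
#include <stdint.h>

#define LCG_M 1000000009u   /* hypothetical prime multiplier, m - 1 divisible by 4 */
#define MAX_STEPS 4096

/* True if bit k (k < 31) of the LCG outputs repeats every 2^(k+1) steps
 * over the first `steps` outputs (capped at MAX_STEPS in this sketch). */
bool low_bit_repeats(uint32_t p, uint32_t seed, int k, int steps) {
    uint32_t xs[MAX_STEPS];
    if (steps > MAX_STEPS) steps = MAX_STEPS;
    uint32_t x = seed;
    for (int n = 0; n < steps; n++) {
        x = LCG_M * x + p;          /* Eq. (1) with mu = 2^32 */
        xs[n] = x;
    }
    int period = 1 << (k + 1);
    for (int n = 0; n + period < steps; n++)
        if (((xs[n] >> k) & 1u) != ((xs[n + period] >> k) & 1u)) return false;
    return true;
}
```

The low bits of a plain LCG therefore carry far less randomness than the high bits.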

The cuRAND library in CUDA provides, among others, two random number generators: the Mersenne Twister and XORWOW. There are two problems with the Mersenne Twister implementation. First, it requires a very large state (624 words), which makes it unwieldy when a separate random number sequence is desired per CUDA thread with tens of thousands of threads; the size of the state thus rules out thread-level parallelization. Second, the Mersenne Twister is not suitable for cryptography: observing a sufficient number of outputs (624 in the case of MT19937) allows one to predict all future outputs [7]. The XORWOW algorithm introduced by Marsaglia [8] belongs to the Xorshift class of generators. These generate the next number in their sequence by repeatedly taking the XOR of a number with a bit-shifted version of itself. They are a subclass of LFSRs, and hence they are fast. However, since they are based on LFSRs, they are not suitable for cryptographic applications. Moreover, their parameters have to be chosen carefully to achieve a long period [9]. Thus, the GPU-NLCG can be better suited for cryptographic applications than CUDA's own Mersenne Twister and Xorshift generators.
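The xorshift core mentioned above fits in a few lines. This sketch uses Marsaglia's basic 32-bit variant with the shift triple (13, 17, 5) from [8]. XORWOW, as described by Marsaglia, adds a Weyl sequence to a longer xorshift state, and that part is not modeled here. Every step is an XOR with a shifted copy of the state, so the update is linear over GF(2): xorshift32(a ^ b) equals xorshift32(a) ^ xorshift32(b). That linearity is what makes LFSR-style generators predictable.

```c
#include <stdint.h>

/* Marsaglia's 32-bit xorshift step with shifts (13, 17, 5).
 * Each line XORs the state with a shifted copy of itself, so the whole
 * map is a linear transformation of the 32 state bits over GF(2). */
uint32_t xorshift32(uint32_t x) {
    x ^= x << 13;
    x ^= x >> 17;
    x ^= x << 5;
    return x;
}
```

A consequence of linearity is that xorshift32(0) = 0, so the all-zero seed must be avoided.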

III. Technical Approach

In this section, we present our NLCG design and its implementation in CUDA. We first describe the operation of the LCGs used in the first stage of generation, and then the second stage, which permutes the results of the LCGs into an N-bit output using encoded majority functions. For our CUDA implementation, N is chosen to be 4096.

Stage 1: The LCGs

We use Linear Congruential Generators for the first stage of random number generation. A 32-bit LCG function can be represented by Eq. (1). This function can be parallelized by varying m, p, or both across generators. In our implementation, we fix the multiplier m for all generators and vary p using values obtained from the primality test. Our implementation uses N generators, and the data structure for the entire LCG generator is as follows:

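#define N 4096              /* number of parallel LCGs, one per CUDA thread */

typedef struct LCG {
    unsigned int state[N];  /* current X_n of each 32-bit LCG */
    unsigned int p[N];      /* prime increment p of each LCG; m is shared */
} LCG_RNG;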
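The Stage 1 update can be emulated sequentially in C. In this sketch, each loop iteration stands in for one CUDA thread. The prime refresh overwrites `p` and keeps the states, which matches the text's description of changing the LCG functions without interrupting generation. The multiplier value and the helper names `lcg_step_all` and `lcg_refresh_primes` are assumptions. `uint32_t` replaces `unsigned int` to make the 32-bit width explicit.

```c
#include <stdint.h>

#define N 4096
#define LCG_M 1000000009u   /* hypothetical shared multiplier (m is not stated in the paper) */

typedef struct LCG {
    uint32_t state[N];      /* current X_n of each LCG */
    uint32_t p[N];          /* per-generator prime increment */
} LCG_RNG;

/* One generation iteration: every LCG advances by Eq. (1), mod 2^32.
 * On a GPU, iteration i would be executed by thread i. */
void lcg_step_all(LCG_RNG *g, uint32_t out[N]) {
    for (int i = 0; i < N; i++) {
        g->state[i] = LCG_M * g->state[i] + g->p[i];
        out[i] = g->state[i];
    }
}

/* Install a fresh block of primes (every 10000 iterations in the text).
 * States are untouched, so generation continues from where it was. */
void lcg_refresh_primes(LCG_RNG *g, const uint32_t primes[N]) {
    for (int i = 0; i < N; i++) g->p[i] = primes[i];
}
```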

The values of p are refreshed after every 10000 iterations by a separate primality kernel, which implements the Miller-Rabin primality test for a block of candidate numbers. Each GPU thread assesses one number for primality, using the Miller-Rabin algorithm¹ described in Algorithm 1.

Algorithm 1 Miller-Rabin Algorithm

Inputs:
1. $n > 3$, an odd integer to be tested for primality.
2. k, a parameter that determines the accuracy of the test.

Output: composite if n is found to be composite, otherwise probably prime.

Write $n - 1$ as $2^s \cdot d$ with d odd by factoring powers of 2 from $n - 1$
Loop: repeat k times:
    Pick a random integer a in the range $[2, n - 2]$
    $x \leftarrow a^d \bmod n$
    if $x = 1$ or $x = n - 1$ then do next Loop
    for $r = 1 \ldots s - 1$
        $x \leftarrow x^2 \bmod n$
        if $x = 1$ then return composite
        if $x = n - 1$ then do next Loop
    return composite
return probably prime

The test completes in $O(k \log^3 n)$ time, where k is the number of test iterations. The worst-case error bound of the Miller-Rabin primality test is $4^{-k}$. We choose k = 1000, so the probability that a composite number is incorrectly declared prime by this method is $4^{-1000} \approx 0$.
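Below is a sequential C sketch of Algorithm 1 for 32-bit candidates; one call corresponds to the work of one GPU thread. The 64-bit intermediate product keeps modular multiplication exact for $n < 2^{32}$. To keep the example self-contained, a small xorshift generator stands in for the per-thread LFSR that the text uses to draw the bases a. The function `miller_rabin` and its seed parameter are hypothetical.

```c
#include <stdbool.h>
#include <stdint.h>

/* (a * b) mod n for n < 2^32: the 64-bit product cannot overflow. */
static uint32_t mulmod(uint32_t a, uint32_t b, uint32_t n) {
    return (uint32_t)((uint64_t)a * b % n);
}

/* a^e mod n by square-and-multiply. */
static uint32_t powmod(uint32_t a, uint32_t e, uint32_t n) {
    uint32_t r = 1u % n;
    a %= n;
    while (e) {
        if (e & 1u) r = mulmod(r, a, n);
        a = mulmod(a, a, n);
        e >>= 1;
    }
    return r;
}

/* Stand-in base generator (assumption): 32-bit xorshift, nonzero state. */
static uint32_t next_rand(uint32_t *s) {
    uint32_t x = *s;
    x ^= x << 13; x ^= x >> 17; x ^= x << 5;
    return *s = x;
}

/* Algorithm 1: true = "probably prime", false = "composite". */
bool miller_rabin(uint32_t n, int k, uint32_t seed) {
    if (n < 4u) return n == 2u || n == 3u;
    if ((n & 1u) == 0u) return false;
    uint32_t d = n - 1u;
    int s = 0;
    while ((d & 1u) == 0u) { d >>= 1; s++; }      /* n - 1 = 2^s * d, d odd */
    uint32_t state = seed ? seed : 1u;
    for (int round = 0; round < k; round++) {
        uint32_t a = 2u + next_rand(&state) % (n - 3u);   /* a in [2, n-2] */
        uint32_t x = powmod(a, d, n);
        if (x == 1u || x == n - 1u) continue;             /* next Loop */
        bool composite = true;
        for (int r = 1; r < s; r++) {
            x = mulmod(x, x, n);
            if (x == 1u) return false;
            if (x == n - 1u) { composite = false; break; }  /* next Loop */
        }
        if (composite) return false;
    }
    return true;
}
```

A call such as `miller_rabin(561u, 20, 42u)` rejects the Carmichael number 561, which fools the simpler Fermat test.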

As shown in Algorithm 1, the Miller-Rabin primality test requires a random integer a in the range $[2, n - 2]$. Each primality thread uses its own LFSR for this purpose, which is run k times. The feedback polynomial of this 32-bit LFSR is:

$$x^{31} + x^{21} + x^{1} + x^{0}$$

¹ http://en.wikipedia.org/wiki/Miller-Rabin_primality_test
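The text does not spell out how the polynomial maps to register taps. This sketch therefore assumes a Fibonacci LFSR whose feedback bit is the XOR of state bits 31, 21, 1 and 0, shifted in at bit 0. It also assumes the state is reduced into $[2, n - 2]$ with a modulo operation, which introduces a slight bias that is harmless for choosing witnesses. Bit 31, the bit shifted out, is one of the taps, so each step is invertible and a nonzero seed never collapses to the all-zero state. The names `lfsr32_step` and `mr_base` are illustrative.

```c
#include <stdint.h>

/* One step of the assumed 32-bit Fibonacci LFSR for x^31 + x^21 + x^1 + x^0. */
uint32_t lfsr32_step(uint32_t s) {
    uint32_t fb = ((s >> 31) ^ (s >> 21) ^ (s >> 1) ^ s) & 1u;
    return (s << 1) | fb;
}

/* Advance the thread's LFSR and map it to a Miller-Rabin base in [2, n-2]
 * (requires n > 3). */
uint32_t mr_base(uint32_t *s, uint32_t n) {
    *s = lfsr32_step(*s);
    return 2u + *s % (n - 3u);
}
```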

Each LFSR uses the same feedback polynomial but a different starting seed, which is provided along with the number to be tested for primality. Thus, at the end of every iteration in Stage 1, we obtain N 32-bit random numbers, one from each LCG, with the LCG functions themselves changing every 10000 iterations. The choice of 10000 iterations was determined experimentally: using fewer iterations may not yield 4096 primes, while using more would make the LCG coefficients susceptible to being deciphered. When Algorithm 1 returns composite, we ignore the result and continue the search until all N primes have been accumulated. The output of Stage 1 is an array of N primes in CUDA global memory; this array is used by Stage 2 of the computation.
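The prime refresh described above can be sketched as a scan of odd candidates upward from an offset that keeps only primes. Every returned p is therefore odd and coprime to $2^{32}$. Trial division stands in for the Miller-Rabin kernel only to keep the example short; it is exact but much slower for 32-bit inputs. `collect_odd_primes` is a hypothetical name, and the real kernel tests candidates in parallel rather than one after another.

```c
#include <stdbool.h>
#include <stdint.h>

/* Exact primality by trial division (stand-in for the Miller-Rabin kernel). */
static bool is_prime_u32(uint32_t n) {
    if (n < 2u) return false;
    if (n % 2u == 0u) return n == 2u;
    for (uint32_t d = 3u; (uint64_t)d * d <= n; d += 2u)
        if (n % d == 0u) return false;
    return true;
}

/* Scan odd candidates upward from `offset`, skipping composites, until
 * `count` primes are stored in out[]. Returns how many were found, which
 * is less than `count` only if the scan runs past 2^32 - 1. */
int collect_odd_primes(uint32_t offset, int count, uint32_t *out) {
    int found = 0;
    for (uint64_t c = offset | 1u; found < count && c <= UINT32_MAX; c += 2u)
        if (is_prime_u32((uint32_t)c)) out[found++] = (uint32_t)c;
    return found;
}
```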

Stage 2: The Non-Linear Permutation Block for LCGs

Our proposed GPU-NLCG uses N LCGs whose outputs are combined in a non-linear manner. The outputs of the LCGs are represented as M rows of N bits each. We have chosen N = 4096 and M = 32 for our CUDA implementation, as shown in Figure 1. We refer to each of these M rows as a transformed LCG. The $i^{th}$ bit ($0 \le i < N$) of each row j ($0 \le j < M$) is connected to an encoded majority function, and the output of this encoded majority function is the $i^{th}$ bit of the NLCG output. Thus, the NLCG outputs N bits per iteration. Since the encoded majority function is non-linear, the combined state of the N majority outputs forms a non-linear congruential generator (NLCG).

We now describe the encoded majority functions and their selection in greater detail. The encoded majority function has the following characteristics:

• The encoded majority function is different for each bit position in any generation iteration (or clock cycle).

• The encoded majority function for any bit position changes at each iteration (or clock cycle).

• Each encoded majority function has 32 inputs and one output. The output is 1 for $2^{31}$ randomly arranged inputs.

We have a pool of 4096 encoded majority functions, each of which is mapped to a single CUDA thread. An encoded majority function in our approach is a two-block function. The first block is an encoding block that receives 32 binary inputs and outputs 5 binary bits, as shown at the top left of Figure 1. Each encoded majority function is differentiated from the others by the ordering (bit positions) of its 32 inputs. The order of these inputs determines the 5-bit output, which is then provided to a majority function, defined as:

$$M(p_1, p_2, \ldots, p_5) = \left\lfloor \frac{1}{2} + \frac{\sum_{i=1}^{5} p_i - 1/2}{5} \right\rfloor$$
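The majority formula can be checked by brute force over all 32 possible 5-bit codes. Multiplying the fraction inside the floor through by 10 gives the integer form $\lfloor (2\sum p_i + 4)/10 \rfloor$, which is 1 exactly when at least three inputs are 1. The sketch below uses the illustrative names `majority5` and `majority5_ones` and confirms that 16 of the 32 codes map to 1. Now assume, although the text does not state it explicitly, that the encoding block maps exactly $2^{27}$ of the $2^{32}$ inputs to each 5-bit code. Then the complete encoded majority function is 1 for $16 \cdot 2^{27} = 2^{31}$ inputs, matching the characteristic listed above.

```c
#include <stdint.h>

/* 5-input majority; p1..p5 are bits 0..4 of `code`.
 * Integer form of floor(1/2 + (sum - 1/2)/5) = floor((2*sum + 4)/10). */
unsigned majority5(uint32_t code) {
    unsigned sum = 0;
    for (int i = 0; i < 5; i++) sum += (code >> i) & 1u;
    return (2u * sum + 4u) / 10u;
}

/* Number of 5-bit codes for which the majority is 1. */
unsigned majority5_ones(void) {
    unsigned ones = 0;
    for (uint32_t c = 0; c < 32u; c++) ones += majority5(c);
    return ones;
}
```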

Assuming an NLCG output length of N = 4096 and 32-bit LCGs, we need 4096 encoded majority functions ($f^M_0, \ldots, f^M_{4095}$). Each encoded majority function has 32 inputs, one from each transformed LCG (see Figure 1). The number of possible unique encoded majority functions is $\binom{2^{32}}{2^{31}}$. We choose 4096 of them at random for our implementation.

We now describe how the encoded majority functions are selected. The $i^{th}$ bit of each LCG is used as an input to the $i^{th}$ function selection block $M_i$ (see the bottom left of Figure 1). In each function selection block, the inputs are connected to all 4096 encoded majority functions, and a 4096-to-1 multiplexer selects one of these functions for the $i^{th}$ bit of the NLCG output. In a software implementation such as ours on a GPU, no actual multiplexer is needed; we simply select the appropriate function from an ordered array of 4096 functions. The selection signal is obtained by XOR-ing the 12-bit index of the function selection block $M_i$ with the output of the 12-bit LFSR (leftmost part of Figure 1). The 12-bit LFSR outputs a new value at every LCG generation iteration, and this output is shared by all 4096 function selection blocks; Figure 1 shows only one such block. Since XOR with a common value maps distinct indices to distinct results, all bit positions select a unique encoded majority function in every cycle. Because the LFSR value changes every iteration, each bit position also selects a different function every cycle.
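The selection rule can be sketched in C. XOR with the shared 12-bit LFSR value r is a bijection on 12-bit indices, so within one cycle the 4096 bit positions always pick 4096 distinct functions. Consecutive LFSR states also differ, so every bit position switches function at every cycle. The paper does not give the 12-bit polynomial, so the taps below (bits 11, 10, 9 and 3) are a hypothetical choice. The names `lfsr12_step`, `select_fn` and `selection_is_permutation` are illustrative.

```c
#include <stdbool.h>
#include <stdint.h>

#define NBITS 4096   /* N output bits = size of the encoded majority function pool */

/* Hypothetical 12-bit Fibonacci LFSR (x^12 + x^11 + x^10 + x^4 + 1 taps).
 * Bit 11 is tapped, so the step is invertible and nonzero states stay nonzero. */
uint16_t lfsr12_step(uint16_t r) {
    uint16_t fb = ((r >> 11) ^ (r >> 10) ^ (r >> 9) ^ (r >> 3)) & 1u;
    return (uint16_t)(((r << 1) | fb) & 0x0FFFu);
}

/* Index of the encoded majority function used by bit position i this cycle. */
static inline uint16_t select_fn(uint16_t i, uint16_t r) {
    return (uint16_t)((i ^ r) & 0x0FFFu);
}

/* True if, for LFSR output r, the NBITS positions pick NBITS distinct functions. */
bool selection_is_permutation(uint16_t r) {
    static bool used[NBITS];
    for (int k = 0; k < NBITS; k++) used[k] = false;
    for (uint16_t i = 0; i < NBITS; i++) {
        uint16_t f = select_fn(i, r);
        if (used[f]) return false;
        used[f] = true;
    }
    return true;
}
```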

Length Sequence of the NLCG

We now assert and prove that the NLCG of length $M \times N$ has an asymptotically maximal length sequence. Assume that in iteration j, the state of the transformed LCG $L_i$ is $V_i^j$ ($0 \le i \le 31$). The NLCG operation is denoted as $N^j = M(V_0^j, V_1^j, \ldots, V_{31}^j)$, where M is as shown in Figure 1. We show that the probability that the NLCG output repeats within the composite sequence of 32 transformed LCGs, whose length is $M \times N = 32 \times 4096$, can be made asymptotically low. In other words, we need to show that for two different iterations j and k in the maximal sequence, the following holds for sufficiently large M and N:

$$p\left(M(V_0^j, V_1^j, \ldots, V_{31}^j) = M(V_0^k, V_1^k, \ldots, V_{31}^k)\right) \approx 0$$

To show this, we note that for the encoded majority function computing the output of the NLCG, the output will be the same across two iterations j and k if all 32 bits that are input to the function selection block $M_i$ (for all i) are identical. For any i, this probability is $\frac{1}{2^M}$. Since the transformed LCGs have N columns, the probability of the NLCG output being the same for two iterations j and k is $\left(\frac{1}{2^M}\right)^N = \frac{1}{2^{MN}}$. With large M and N, this probability can be made arbitrarily small, which means that the composite sequence (of length $M \times N$) is maximal. For our implementation with M = 32 and N = 4096, this probability is $\frac{1}{2^{32 \times 4096}} \approx 0$.
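As a worked check of the final figure, we assume, as the argument does, that the $M \times N$ input bits behave like independent fair coin flips:

```latex
\Pr[\text{repeat}] = \prod_{i=0}^{N-1} 2^{-M} = 2^{-MN} = 2^{-32 \cdot 4096} = 2^{-131072},
\qquad
\log_{10} 2^{-131072} = -131072 \log_{10} 2 \approx -39456.6
```

so the probability is about $2.5 \times 10^{-39457}$.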

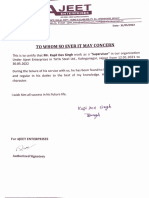

Figure 1: 4096-bit NLCG with Encoded Majority Function Blocks


IV. Experimental Results

We report our results both in terms of the quantity of numbers generated (throughput) and the quality of the random numbers (results of the NIST, Diehard and Dieharder benchmarks).

A. Quantity of Random Numbers

We first implemented the primality testing program independently, comparing results for the CPU and GPU versions of the program. We then integrated the primality tester with the LCG kernel and measured the time taken to generate a fixed quantity of random numbers.

The CPU used for comparison was a 64-bit Intel Xeon processor with 8 cores and 2 threads per core. It had 16 GB of memory and 8 MB of L3 cache, and was clocked at 2.9 GHz. The CPU ran the Ubuntu 11.10 distribution of the Linux operating system. For the GPU, we used an NVIDIA Kepler GeForce GTX 680 card with 8 multiprocessors (MPs), 192 CUDA cores per MP, and 2 GB of global memory. Table 1 compares the CPU and GPU versions of the NLCG generator with 4096-bit output. Note that we assume the cryptography application, Monte Carlo method or other random-number-consuming application resides in the same kernel as the NLCG generator itself. This integrates the GPU-NLCG with the consuming function and removes the overhead of transferring the large volume of random numbers generated every second to the CPU. The results reported in Table 1 correspond to k = 1000 for the Miller-Rabin algorithm and 4096-bit random numbers.

Table 1: Results for CPU and GPU versions of NLCG

| Program | # Random numbers | Time | Speedup |
|---|---|---|---|
| NLCG_CPU | $10^9$ | 3.01 h | - |
| NLCG_GPU | $10^9$ | 61.9 s | 175x |
| NLCG_CPU | $2 \times 10^9$ | 6.22 h | - |
| NLCG_GPU | $2 \times 10^9$ | 124 s | 180x |

The throughput calculated from Table 1 (the number of 4096-bit random numbers times 4096, divided by the elapsed time) is about 66.1 Gbps for the GPU version and about 370 Mbps for the CPU version of the program. Thus, the GPU-NLCG can sustain the data rates of communication standards such as OC-768, which operate at 40 Gbps.
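The throughput figures follow from Table 1 by dividing the number of generated bits (count × 4096) by the elapsed time. Two small C helpers, `throughput_bps` and `speedup` (illustrative names), make the arithmetic explicit.

```c
/* Throughput in bits per second for `count` random numbers of `bits` bits
 * generated in `seconds`. */
double throughput_bps(double count, double bits, double seconds) {
    return count * bits / seconds;
}

/* Speedup of the GPU run over the CPU run for the same workload. */
double speedup(double cpu_seconds, double gpu_seconds) {
    return cpu_seconds / gpu_seconds;
}
```

For the first row pair of Table 1, this gives about 66.2 Gbps for the GPU (61.9 s), about 378 Mbps for the CPU (3.01 h = 10836 s), and a speedup of about 175. The second pair gives about 66.1 Gbps, 366 Mbps and 180.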

B. Quality of Random Numbers

We used three benchmark tools to assess the quality of the random numbers generated by our NLCG: Marsaglia's Diehard battery of tests of randomness [11]; Dieharder, an open-source random number/uniform deviate generator and tester [12]; and NIST, a statistical test suite for the validation of RNGs and PRNGs for cryptographic applications [10]. The Dieharder test suite incorporates many of the tests included in NIST, is available as a standard software package in Ubuntu's software repository, and can test either P-bit bitstrings (with P specified by the user) or double-precision floating-point numbers in the range [0.0, 1.0). For the Diehard and NIST suites, we had to split each 4096-bit random number into 64-bit chunks for testing. As required by each of the three test suites, we generated binary output files from the NLCG with 8 million samples each.

The results of all three test suites are reported as p-values. The p-value for a particular test is compared with the following two parameters to decide the outcome of that test:

• Weak threshold: the test is deemed weakly passed if the p-value is less than the weak threshold. The default is 0.005 for the Dieharder tests. NIST does not use this parameter.

• Fail threshold: the test is deemed certainly failed if the p-value is less than the fail threshold. Dieharder recommends 0.000001, while NIST refers to this parameter as $\alpha$ and recommends a value of 0.01 for all tests.

An $\alpha$ of 0.001 indicates that one would expect one sequence in 1000 to be rejected by the test if the sequences were random. For a p-value $\ge 0.001$, a sequence would be considered random with a confidence of 99.9%. For a p-value $< 0.001$, a sequence would be considered non-random with a confidence of 99.9%.
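The decision rule above can be written as a small classifier. This sketch follows the one-sided description in the text. Dieharder's own assessment may also flag p-values suspiciously close to 1, and that check is not modeled here. NIST has no weak threshold, so passing $\alpha$ as both thresholds reduces the rule to a single pass/fail cut. The enum and function names are illustrative.

```c
typedef enum { TEST_PASSED, TEST_WEAK, TEST_FAILED } Verdict;

/* p below `fail` -> certainly failed; below `weak` -> weakly passed;
 * otherwise passed. For NIST, call with weak = fail = alpha. */
Verdict classify_p_value(double p, double weak, double fail) {
    if (p < fail) return TEST_FAILED;
    if (p < weak) return TEST_WEAK;
    return TEST_PASSED;
}
```

With the Dieharder defaults, the DH_rank_32x32 p-value of 0.00505979 listed in Table 2 passes just above the weak threshold. Under NIST's recommended $\alpha = 0.01$, the plain-LCG Runs p-value of 0.003 reported in Section II fails.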

We used the default sample sizes recommended by the individual tests. For the GPU-NLCG, all 15 tests in Diehard, the 12 major tests and their 162 variants in NIST, and the 25 major tests and their 209 variants in Dieharder passed. Table 2 lists the results of important tests from all three test suites. In this table, a test with the prefix NIST comes from the NIST suite, while the prefixes DHr and DH refer to the Dieharder and Diehard suites, respectively. Column 2 lists t-samples, the total number of random samples consumed by the test under consideration.

Table 2: Results from Statistical Test Suites (all tests pass)

| Test name | t-samples | p-value |
|---|---|---|
| NIST_Runs | 100000 | 0.54890228 |
| NIST_Serial | 100000 | 0.21857258 |
| NIST_BlockFrequency | 100000 | 0.13538794 |
| NIST_Monobit | 100000 | 0.13499881 |
| DHr_Bitstream | 2097152 | 0.87918116 |
| DHr_Squeeze | 100000 | 0.36519217 |
| DHr_Sums | 100 | 0.35587054 |
| DHr_rgb_lagged_sum | 1000000 | 0.24969625 |
| DHr_rgb_permutations | 100000 | 0.17348102 |
| DHr_min_distance | 10000 | 0.53072256 |
| DHr_3d_Sphere | 4000 | 0.71973711 |
| DH_rank_32x32 | 40000 | 0.00505979 |
| DH_dna | 2097152 | 0.23968055 |

V. Conclusion

We present a GPU-based parallelized implementation of a simple linear congruential generator and extend it to serve as a non-linear generator. The resulting implementation, which we call GPU-NLCG, is an inexpensive method for fast random number generation with variable bit-size on any GPU-based platform. The GPU-NLCG is well suited for fast key generation in cryptography applications. The NLCG uses 4096 independent LCGs, whose outputs are permuted using 4096 encoded majority functions selected randomly per bit per iteration. The throughput of our system is limited not by the generator but by the bandwidth of the CPU-GPU PCI-e bus. Hence, an FPGA- or ASIC-based implementation of our NLCG may yield even higher throughputs.

References

[1] E. Zenner, Cryptanalysis of LFSR-based pseudorandom generators: a survey, 2004.

[2] F. Severance (2001). System Modeling and Simulation, John Wiley & Sons, Ltd., p. 86. ISBN 0-471-49694-4.

[3] J. Boyar (1989). Inferring sequences produced by pseudo-random number generators, Journal of the ACM 36 (1): 129-141.

[4] K. Lin, S. Khatri (2010). VLSI Implementation of a Non-Linear Feedback Shift Register for High-Speed Cryptography Applications, Proceedings of the 20th Great Lakes Symposium on VLSI, pp. 381-384.

[5] J. Eichenauer, J. Lehn (1986). A non-linear congruential pseudo random number generator, Statistical Papers 27: 315-326.

[6] S. Gao, G. D. Peterson. GASPRNG: GPU Accelerated Scalable Parallel Random Number Generator Library, Computer Physics Communications.

[7] Reversing the Mersenne Twister RNG Temper Function. http://b10l.com/?p=24

[8] G. Marsaglia (2003). Xorshift RNGs, Journal of Statistical Software 8 (14): 1-6.

[9] F. Panneton (October 2005). On the xorshift random number generators, ACM Transactions on Modeling and Computer Simulation (TOMACS) 15 (4).

[10] NIST Computer Security Resource Center. http://csrc.nist.gov/groups/ST/toolkit/rng/index.html

[11] The Marsaglia Random Number CDROM including the Diehard Battery of Tests of Randomness. http://www.stat.fsu.edu/pub/diehard/

[12] Dieharder: A testing and benchmarking tool for random number generators. Dieharder page for Ubuntu.

[13] Data Encryption Standard (DES), U.S. Department of Commerce/National Institute of Standards and Technology. http://csrc.nist.gov/groups/ST/toolkit/rng/index.html

[14] Announcing the Advanced Encryption Standard (AES), Federal Information Processing Standards Publication 197, United States National Institute of Standards and Technology (NIST), November 26, 2001.

[15] W. Diffie, M. E. Hellman (November 1976). New Directions in Cryptography, IEEE Transactions on Information Theory IT-22, pp. 644-654.

[16] R. Rivest, A. Shamir, L. Adleman (1978). A Method for Obtaining Digital Signatures and Public-Key Cryptosystems, Communications of the ACM 21 (2): 120-126.


- Liebert Challenger 3000-3-5 Ton Installation ManualDokument76 SeitenLiebert Challenger 3000-3-5 Ton Installation ManualDanyer RosalesNoch keine Bewertungen

- Adobe Scan Aug 19, 2022Dokument3 SeitenAdobe Scan Aug 19, 2022neerajNoch keine Bewertungen

- Amine Gas Sweetening Systems PsDokument3 SeitenAmine Gas Sweetening Systems Pscanada_198020008918Noch keine Bewertungen

- TONGOD-PINANGAH Project DescriptionDokument4 SeitenTONGOD-PINANGAH Project DescriptionPenjejakAwanNoch keine Bewertungen

- Secutec Binder Ds UkDokument2 SeitenSecutec Binder Ds UkSuresh RaoNoch keine Bewertungen

- Prequalification HecDokument37 SeitenPrequalification HecSaad SarfarazNoch keine Bewertungen

- SAE Viscosity Grades For Engine OilsDokument1 SeiteSAE Viscosity Grades For Engine OilsFAH MAN100% (1)

- 3-D Finite Element Modeling in OpenSees For Bridge - Scoggins - ThesisDokument54 Seiten3-D Finite Element Modeling in OpenSees For Bridge - Scoggins - Thesisantonfreid100% (2)

- Create A Custom Theme With OpenCartDokument31 SeitenCreate A Custom Theme With OpenCartBalanathan VirupasanNoch keine Bewertungen

- SurgeTest EPCOSDokument33 SeitenSurgeTest EPCOSSabina MaukoNoch keine Bewertungen

- QAP For Pipes For Hydrant and Sprinkler SystemDokument3 SeitenQAP For Pipes For Hydrant and Sprinkler SystemCaspian DattaNoch keine Bewertungen

- 16N60 Fairchild SemiconductorDokument10 Seiten16N60 Fairchild SemiconductorPop-Coman SimionNoch keine Bewertungen

- STP CivilDokument25 SeitenSTP CivilRK PROJECT CONSULTANTSNoch keine Bewertungen

- Creep LawDokument23 SeitenCreep LawMichael Vincent MirafuentesNoch keine Bewertungen

- Fabricante de HPFF CableDokument132 SeitenFabricante de HPFF Cableccrrzz100% (1)

- Rubber FormulationsDokument17 SeitenRubber FormulationsAkash Kumar83% (6)

- Datasheet Ls 7222Dokument4 SeitenDatasheet Ls 7222Martín NestaNoch keine Bewertungen

- kd625 3 Om PDFDokument136 Seitenkd625 3 Om PDFLuis Quispe ChuctayaNoch keine Bewertungen

- MA WAFLInternals V2.0Dokument20 SeitenMA WAFLInternals V2.0Vimal PalanisamyNoch keine Bewertungen

- Over View API Spec 6aDokument2 SeitenOver View API Spec 6aAlejandro Garcia Fonseca100% (2)

- Earthquake Resistance of Buildings With A First Soft StoreyDokument9 SeitenEarthquake Resistance of Buildings With A First Soft StoreyZobair RabbaniNoch keine Bewertungen

- PIPE LAYING PROJECT (Sent To Ms. Analyn) PDFDokument1 SeitePIPE LAYING PROJECT (Sent To Ms. Analyn) PDFJamaica RolloNoch keine Bewertungen

- General Thread: No Flavor Hard Math Bad ArtDokument4 SeitenGeneral Thread: No Flavor Hard Math Bad ArtAnonymous q9PRRY25% (4)

- Health REST API Specification 2.9.6 WorldwideDokument55 SeitenHealth REST API Specification 2.9.6 WorldwideAll About Your Choose Entertain100% (5)