RFID BASED INDOOR NAVIGATION ASSISTANCE ROBOT FOR THE BLIND AND VISUALLY IMPAIRED

CHEONG WAI LEONG

UNIVERSITI TEKNOLOGI MALAYSIA

PSZ 19:16 (Pind. 1/07)

DECLARATION OF THESIS / UNDERGRADUATE PROJECT PAPER AND COPYRIGHT

Author's full name : CHEONG WAI LEONG

Date of birth : 19 JULY 1986

Title : RFID BASED INDOOR NAVIGATION ASSISTANCE ROBOT

FOR THE BLIND AND VISUALLY IMPAIRED

Academic Session : 2009/2010

I declare that this thesis is classified as:

CONFIDENTIAL

(Contains confidential information under the

Official Secret Act 1972)*

RESTRICTED

(Contains restricted information as specified by

the organization where research was done) *

OPEN ACCESS

I agree that my thesis may be published as online open access (full text)

I acknowledge that Universiti Teknologi Malaysia reserves the following rights:

1. The thesis is the property of Universiti Teknologi Malaysia.

2. The Library of Universiti Teknologi Malaysia has the right to make copies

for the purpose of research only.

3. The Library has the right to make copies of the thesis for academic

exchange.

SIGNATURE

860719-38-5889

(NEW IC NO. / PASSPORT NO.)

Date: 04 MAY 2010

Certified by:

SIGNATURE OF SUPERVISOR

DR. SALINDA BUYAMIN

NAME OF SUPERVISOR

Date: 04 MAY 2010

UNIVERSITI TEKNOLOGI MALAYSIA

I hereby declare that I have read this thesis and in my opinion this thesis is

sufficient in terms of scope and quality for the award of the degree of Bachelor of

Electrical Engineering (Mechatronics).

Signature : __________________________

Name of Supervisor : DR. SALINDA BUYAMIN

Date : 04 MAY 2010

RFID BASED INDOOR NAVIGATION ASSISTANCE ROBOT FOR THE BLIND

AND VISUALLY IMPAIRED

CHEONG WAI LEONG

A thesis submitted in partial fulfillment of

the requirements for the award of the degree

of Bachelor of Electrical Engineering (Mechatronics)

Faculty of Electrical Engineering

Universiti Teknologi Malaysia

MAY 2010


I declare that this thesis entitled "RFID Based Indoor Navigation Assistance Robot for the Blind and Visually Impaired" is the result of my own research except as cited in the references. The thesis has not been accepted for any degree and is not concurrently submitted in candidature of any other degree.

Signature : __________________________

Name of Author : CHEONG WAI LEONG

Date : 04 MAY 2010


Specially dedicated to

My beloved family and friends


ACKNOWLEDGEMENT

I would like to take this opportunity to express my deepest gratitude to my supervisor, Dr. Salinda Buyamin, who guided me throughout the project. She motivated and inspired me to complete my project successfully. Her guidance, advice, encouragement, patience and support throughout the project are greatly appreciated.

Sincere thanks also go to my lecturers, who gave me valuable suggestions and helpful discussions that contributed to the success of the project.

My appreciation also extends to my parents and friends for their care and support. Last but not least, I am thankful to those who directly or indirectly lent me a hand in this project.


ABSTRACT

Blind and visually impaired people are a group who have lost their eyesight, and this directly and significantly affects their mobility. This thesis proposes an efficient algorithm that lets a mobile robot navigate in an indoor environment using an RFID system. A service robot using this algorithm can aid this group of people in indoor navigation. One RFID reader is installed on the bottom chassis of the mobile robot to sense passive RFID tags embedded under a carpet on the floor. Each tag returns a unique identification number when interrogated at the appropriate frequency. Using these identification numbers, a database of the coordinate position of each tag on the map is established, so the position of the robot in such an environment is known with certainty. In addition, the orientation of the robot and the direction to the goal location can be obtained from three coordinate positions using trigonometric functions. The algorithm presented in this thesis groups the robot's movements into four categories so that the direction and turning angle can be obtained accurately. As a result, the robot can navigate to the goal location along a smooth and short path. Obstacle avoidance is also integrated into the navigation algorithm, so the robot can operate in a dynamic environment. Furthermore, a stick with a rotary base and an elastic rubber holder is built, so that the user's movements have only a small impact on the robot while it navigates. The user can choose different destinations through the attached keypad, and sound indication notifies the user of any danger the robot encounters along the path.


ABSTRAK

Blindness is the condition in which a person permanently loses their sight, which makes moving around difficult. Guided by an RFID system and trigonometric functions, a service robot can move effectively inside a building, and a service robot using this method can help blind people move freely indoors. One RFID reader is mounted at the bottom of the robot to read the RFID tags arranged under the carpet. Each tag sends a unique set of numbers to the reader when exposed to a suitable frequency. Using these unique numbers, a database storing the coordinates of all tags can be prepared. The position and orientation of the robot can then be calculated from three coordinates using trigonometric functions. The method presented separates the robot's movements into four groups, each with its own turning-angle calculation and direction decision, so that the robot can move smoothly to its destination. In addition, the obstacle avoidance feature added to the robot allows this service robot to be used in dynamic situations. A stick that can rotate at its base is attached to the robot, and the user holds a handle made of elastic rubber. As a result, the user's movements have little impact on the robot while it moves. The user can choose the destination using the keypad provided. While moving, the robot produces various sounds to inform the user about the surrounding conditions.


TABLE OF CONTENTS

CHAPTER TITLE

AUTHOR'S DECLARATION
DEDICATION
ACKNOWLEDGEMENT
ABSTRACT
ABSTRAK
TABLE OF CONTENTS
LIST OF TABLES
LIST OF FIGURES
LIST OF SYMBOLS AND ABBREVIATIONS
LIST OF APPENDICES

1 INTRODUCTION
1.1 Background of Study
1.2 Statement of Problem
1.3 Research Objectives
1.4 Significance of Study
1.5 Scope of Study

2 LITERATURE REVIEW
2.1 The Guide Cane
2.2 RoboCart
2.3 Walking Guide Robot
2.4 Guide Dog
2.5 UBIRO

3 METHODOLOGY
3.1 Mechanical Design
3.2 Circuit Design and Equipments
3.2.1 System Overview
3.2.2 Indoor Navigation
3.2.3 Obstacles Avoidance
3.2.4 User Interfacing
3.3 Software Design
3.3.1 Determination of Quadrant of Movement
3.3.2 Determination of Angle for Previous Position to Current Position and Current Position to Goal Position
3.3.3 Categorization
3.3.4 Special Case
3.3.5 Interrupt Routine for Tag Detected

4 RESULT AND DISCUSSION
4.1 Keypad and Robot Control
4.2 Obstacle and Sensor Response
4.3 Experiments for Navigation
4.4 Results on Navigation
4.5 Summary of Experiment

5 CONCLUSIONS AND RECOMMENDATIONS
5.1 Conclusions
5.2 Limitations and Future Recommendations

REFERENCES
APPENDICES


LIST OF TABLES

TABLE NO. TITLE

3.1 Summary of categorization
4.1 Response of middle sensor with respect to distance of obstacle
4.2 Response of right sensor with respect to distance of obstacle
4.3 Response of left sensor with respect to distance of obstacle
4.4 Response of buzzer


LIST OF FIGURES

FIGURE NO. TITLE

2.1 The Guide Cane
2.2 RoboCart
2.3 Placement of RFID tags for RoboCart localization
2.4 Prototype of Walking Guide robot
2.5 Tactile displays for the Walking Guide Robot
2.6 The Guide Dog
2.7 UBIRO
3.1 Mechanical design (overview)
3.2 Top view and dimensions
3.3 Front view and dimensions
3.4 Side view and dimensions
3.5 Stick with rotary base, rubber holder and adjustable length
3.6 Overview of system
3.7 Power supply circuit
3.8 PIC18F452 circuit connections
3.9 RFID technology
3.10 RFID reader and passive tags
3.11 Signal conditioning circuit for RFID reader
3.12 Stepper motor
3.13 Motor part
3.14 Analog infrared sensors
3.15 Keypad and buzzers for user interfacing
3.16 Navigation algorithm
3.17 Quadrant distribution
3.18 Determination of quadrant
3.19 Angle calculation
3.20 Type 3 movement
3.21 Type 4 movement
3.22 Interrupt flow chart
4.1 Voltage response of middle sensor against distance of obstacle
4.2 Voltage response of right sensor against distance of obstacle
4.3 Voltage response of left sensor against distance of obstacle
4.4 Low resolution map with 40 RFID tags used
4.5 High resolution map with 60 RFID tags used
4.6 Result for case 1 (low resolution map)
4.7 Result for case 1 (high resolution map)
4.8 Result for case 2 (low resolution map)
4.9 Result for case 2 (high resolution map)
4.10 Result for case 3 (low resolution map)
4.11 Result for case 3 (high resolution map)
4.12 Result for case 4 (low resolution map)
4.13 Result for case 4 (high resolution map)


LIST OF SYMBOLS AND ABBREVIATIONS

WHO - World Health Organization
ETA - Electronic Travel Aid
GPS - Global Positioning System
IR - Infrared
RFID - Radio frequency identification
MCL - Monte Carlo Markov localization
TOF - Time of Flight
ITD - Inter-aural Time Difference
DC - Direct current
PDA - Personal digital assistant
PC - Personal computer
PE - Polyethylene
PCB - Printed circuit board
UART - Universal asynchronous receiver-transmitter
LED - Light-emitting diode
EMF - Electromotive force
ADC - Analog-to-digital converter
GUI - Graphical user interface
Q_P - Quadrant of the previous movement
Q_N - Quadrant of the next movement
Ω - Resistance (ohm)
V - Volt
m - Meter
cm - Centimeter
mm - Millimeter
Hz - Frequency (Hertz)


LIST OF APPENDICES

APPENDIX NO. TITLE

A Schematic drawing of main board
B Schematic drawing of motor
C Source code

CHAPTER 1

INTRODUCTION

1.1 Background of Study

According to statistics from the World Health Organization (WHO), 314 million people worldwide were reported to be visually impaired in 2009, and 45 million of them were blind. Most visually impaired people are older, and females are more at risk at every age in every part of the world. WHO also found that 87% of the world's visually impaired people live in developing countries. In Malaysia, a total of 13,743 people were reported as visually impaired in 2000. To help this group of people, the Malaysian Association for the Blind was established in 1951 by the Department of Social Welfare. This organization provides services that help the blind and prevent the tragedy of avoidable blindness, and it holds many activities such as educational programs and rehabilitation courses.

Guiding tools have been built for centuries to help the blind; from the beginning, blind people have used sticks and animals to guide themselves. Over the past three decades, advanced technology has been used to develop more convenient and reliable tools called electronic travel aids (ETAs) [1]. ETAs that have been developed include the SonicGuide, Sonic Pathfinder, Mowat sensor, NavBelt and Guide Cane [2], among others [3].


An ETA is a form of assistive technology intended to enhance the mobility of blind or visually impaired people. To accomplish this task, an ETA is usually built from a variety of electronic circuits and mechanical components. An ETA should be able to sense the environment spatially at a reasonable distance and present this information to the user in an easily understood form, such as sound or touch. Many types of sensors are mounted on ETAs to sense the environment, such as ultrasonic sensors and vision systems for spatial sensing and magnetic compasses for direction finding.

Since a blind or visually impaired person must rely on smell, hearing and touch for navigation, an ETA must convert information about the surroundings into these kinds of signals to inform the user of their whereabouts. The most commonly used indication systems are sound and skin stimulation. Sound offers the following attributes for indication:

Intensity of sound

Frequency of sound

Direction of sound

Similarly, skin stimulation can use the following attributes for indication:

Intensity of vibration

Frequency of vibration

Location of vibration

Through such an indication system, an ETA can inform users about the conditions of their environment.

For a mobile robot to localize itself and estimate its orientation outdoors, the Global Positioning System (GPS) is the most suitable method [4]. However, GPS is not applicable indoors [5]. One common localization method used indoors is dead reckoning, but it is limited because errors accumulate in its location estimate [6, 7]. Other approaches add external sensors such as laser range finders or cameras for accurate localization [8, 9]. Some research uses ultrasonic sensors, infrared (IR) systems, Wi-Fi, Bluetooth and similar technologies for indoor localization, but each has its own shortcomings and limitations [5]. For example, infrared systems are affected by ambient light, and Bluetooth is not cost-effective when deployed in a large-scale environment [5]. Hence, Radio Frequency Identification (RFID) turns out to be a suitable method for indoor localization and orientation estimation [6-11].

RFID is a wireless identification system that can rapidly retrieve the data stored in a tag when the antenna provides the appropriate frequency. RFID has been applied in robot technology; if it is implemented properly in a service robot, the robot can serve humans at any time and anywhere [6]. RFID systems have therefore been used for robot positioning [6-9, 11]. The RFID tags are arranged in a particular pattern in the environment to provide absolute position information. RFID tags are not affected by environmental conditions such as dirt and vibration, and they are cheap to buy in bulk [5], so they are suitable for large-scale indoor environments. Moreover, passive RFID tags need no power supply, which reduces maintenance costs. In addition, the reader and tag exchange data without contact, so the tags can be installed under a carpet where people will not notice them.
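The idea of tags arranged in a known pattern can be sketched as a lookup table that maps each tag's identification number to its floor coordinate. The C sketch below is illustrative only: the tag IDs, the struct layout and the function name are hypothetical placeholders, and a real table would hold the IDs actually read from the installed tags.

```c
#include <stddef.h>
#include <string.h>

/* One entry of the tag database: the ID string reported by the reader
 * and the tag's grid coordinate on the floor. */
typedef struct {
    const char *id; /* hypothetical 10-character tag ID */
    int x;          /* column index in the tag grid */
    int y;          /* row index in the tag grid */
} TagEntry;

/* Hypothetical example database: a 2 x 2 corner of the tag grid. */
static const TagEntry TAG_DB[] = {
    {"0400A1B2C3", 0, 0},
    {"0400A1B2C4", 1, 0},
    {"0400A1B2C5", 0, 1},
    {"0400A1B2C6", 1, 1},
};

/* Looks up a tag ID. On success writes the tag's coordinate to *x, *y
 * and returns 1; returns 0 for an unknown ID (for example a misread). */
int tag_lookup(const char *id, int *x, int *y)
{
    for (size_t i = 0; i < sizeof TAG_DB / sizeof TAG_DB[0]; ++i) {
        if (strcmp(TAG_DB[i].id, id) == 0) {
            *x = TAG_DB[i].x;
            *y = TAG_DB[i].y;
            return 1;
        }
    }
    return 0;
}
```

A linear search is adequate for a few dozen tags; a much larger installation could sort the table by ID and use a binary search instead.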

1.2 Statement of Problem

Visually impaired or blind people face many inconveniences in daily life because they have lost sight, one of the most important senses for interacting with the world. Simple daily tasks that ordinary people perform easily, such as picking up a phone or going to the toilet, become difficult for them. Mobility is one of the most important features of living things, and it is defined as travelling independently from a starting point to a destination. This simple definition actually involves many complicated tasks, since mobility requires a person to be able to avoid obstacles while moving aimlessly through space. In addition, the person must first identify the purpose of reaching a destination. Next, the person must know not only what the destinations are, but also where they are situated. They must be oriented and able to keep their orientation up to date as they move through space [12]. In short, every one of these sub-tasks becomes a problem for a visually impaired or blind person trying to navigate through space.

The most conventional tools to aid blind or visually impaired people are the white cane and the guide dog, but both have drawbacks. Users must have sufficient training before using a white cane, and it requires them to actively scan the small area around them. Guide dogs require extensive training, are very costly, and are only useful for about five years [2].

The development of ETAs with GPS navigation gives blind or visually impaired people some degree of independence in unknown environments. However, such ETAs focus on outdoor mobility, and the problems of indoor navigation remain largely unsolved [4]. These problems arise when a blind or visually impaired person walks alone in an unfamiliar large indoor environment such as an airport, shopping mall or museum. It becomes very challenging for them to navigate safely and with the correct orientation to a location such as a ticket counter, toilet or cash register. Therefore, an ETA should be developed to help this group of people navigate safely in such conditions.


1.3 Research Objectives

1. To design a low-cost assistance robot that can help visually impaired people navigate in large indoor environments.

2. To integrate collision avoidance into the assistance robot.

3. To interface with the user for destination selection and for indicating danger along the path.

1.4 Significance of Study

At the end of the project, an assistance robot will be built to help blind or visually impaired people navigate safely in large indoor environments. The robot may be deployed in large buildings such as airports, supermarkets and museums, where it can guide visually impaired or blind people to their desired destinations and so help them move independently. In addition, the robot navigates without any line or wall following, which distinguishes it from conventional navigation robots.

1.5 Scope of Study

Throughout the project, RFID technology will be used to navigate the robot in an indoor environment without line following or wall following. Sensors will act as the eyes of the robot, detecting obstacles around it so that the robot can avoid them. The robot will also interface with the user: the user may choose different destinations for the robot, and the robot will alert the user to dangers along its navigation path.

CHAPTER 2

LITERATURE REVIEW

2.1 The Guide Cane

The Guide Cane was developed as an assistance robot for blind and visually impaired people by I. Ulrich and J. Borenstein of the University of Michigan. As shown in Figure 2.1, the Guide Cane looks like a conventional cane used by visually impaired people; however, the cane is attached to a robot that can determine a safe path for the user. The Guide Cane can be used both indoors and outdoors. It consists of three main modules: the housing, the wheelbase and the handle. The housing contains most of the electronic components, and ten Polaroid ultrasonic sensors are mounted around it. With these sensors, the Guide Cane can detect obstacles in the area ahead over a total angular spacing of 120°. It can also follow walls using two sonars mounted on its sides. In the wheelbase, ball bearings support two unpowered wheels, which are fitted with encoders to measure their relative motion. A servomotor controlled by the built-in computer steers the wheels left and right relative to the cane. The handle is the main physical interface between the user and the Guide Cane, and a mini joystick on the handle lets the user specify a desired direction of motion.


Figure 2.1 Guide Cane

During operation, the user pushes the lightweight Guide Cane forward. When the Guide Cane's ultrasonic sensors detect an obstacle, the embedded computer determines a suitable direction of motion that steers the Guide Cane and the user around it. The steering action produces a very noticeable force in the handle, which easily guides the user without any conscious effort on his or her part.

2.2 RoboCart

As shown in Figure 2.2, RoboCart is a trolley-like robot built specifically for independent shopping by blind or visually impaired people. The robot performs three main tasks: navigation, product selection and product retrieval. For navigation, it uses laser-based Monte Carlo Markov localization (MCL). However, several problems arise with this method. For example, the robot cannot localize itself in heavy shopper traffic, and the method fails when the wheels slip on a wet floor. The main drawback is that once localization fails, it either never recovers or recovers only after a long drift. An RFID-enabled surface, shown in Figure 2.3, is therefore used to tackle these problems. Every RFID tag has its own 2D coordinate, and low-frequency tags operating at 134.2 kHz are used. The tags create recalibration areas: when RoboCart reaches one of these areas, its location is known with certainty. The robot also uses a laser range finder and a camera for vision-based line following to assist navigation.


Figure 2.2 RoboCart

For the second task, product selection, the robot is equipped with a 9-key numeric keypad, a microphone and a Bluetooth headphone for interfacing. It offers three non-visual interfaces for product selection. The first is a browsing interface, in which the shopper browses through the complete product hierarchy, starting from the product categories, to find the desired product. The second is a typing interface, in which the user types the search string on the numeric keypad. The last is a speech-based interface, in which speech recognition is used to form the search string.

After RoboCart has led the shopper to the target location, it helps the shopper retrieve the product from the shelf. For this task, the robot is equipped with a wireless omni-directional barcode reader. With this reader, the shopper scans the barcodes on the desired shelf, and the product name of each scanned barcode is read out to the shopper.


Figure 2.3 Placement of RFID tags for RoboCart localization

2.3 Walking Guide Robot

The Walking Guide Robot, shown in Figure 2.4, was introduced by Chonbuk National University, Korea. It consists of a guide vehicle for obstacle detection and a tactile display device for conveying obstacle information. The robot also tells the user, by voice, the position and walking direction acquired from a GPS module.

Figure 2.4 Prototype of Walking Guide robot

To detect obstacles for the user, the robot's sensing unit has six ultrasonic sensors and four infrared sensors. Time of Flight (TOF) and Inter-aural Time Difference (ITD) methods are used to calculate the position of obstacles. The Walking Guide Robot is driven and steered by two powered motors in its actuating unit, with separate direct current (DC) motors and drivers controlling the two wheels independently. The navigation module consists of a GPS receiver, a GPS map and a personal digital assistant (PDA) that tell the user, by voice, their position and walking direction. Since the human sense of touch is poor at judging absolute quantities but very sensitive to changes, the tactile display device is designed around vibrotactile stimulation. The tactile display, located in the handle as shown in Figure 2.5, presents processed obstacle information such as the position, size, motion and shape of obstacles, as well as a safe path.
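The Time of Flight principle mentioned above can be illustrated with a short C function: an ultrasonic pulse travels to the obstacle and back, so the distance is half the round-trip time multiplied by the speed of sound. The value of 343 m/s assumes air at about 20 °C, and the function name is hypothetical; this is a generic sketch of TOF ranging, not the Walking Guide Robot's actual implementation.

```c
/* Speed of sound in air at roughly 20 degrees Celsius, in m/s. */
#define SPEED_OF_SOUND_M_S 343.0

/* Converts an ultrasonic echo's round-trip time (seconds) into the
 * one-way distance to the obstacle (metres). The division by 2
 * accounts for the pulse travelling out and back. */
double tof_distance_m(double round_trip_s)
{
    return SPEED_OF_SOUND_M_S * round_trip_s / 2.0;
}
```

For example, an echo that returns after 5.83 ms corresponds to an obstacle about 1.0 m away.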

Figure 2.5 Tactile displays for the Walking Guide Robot

2.4 Guide Dog

The Guide Dog, shown in Figure 2.6, was designed in 2002 by Wong Hwee Ling, a student of Universiti Teknologi Malaysia. The main purpose of the robot is to cultivate a guidance concept and to develop a direction indicator for the blind. The Guide Dog consists of two main parts: the chassis and the handle.


The chassis houses the main board and other circuit boards. Five IR sensors are mounted on the chassis, so the robot can sense obstacles in seven directions, including North, North-East, North-West, West, East, upper staircases, holes and uneven surfaces. The IR sensors used in this robot can detect obstacles at an average distance of 15 cm. The chassis also includes two DC motors that drive the Guide Dog's two pairs of wheels.

The user holds the handle when travelling with the robot. At the end of the handle, a keypad and a buzzer provide the interface between the user and the robot. The keypad has twelve switches to control the robot; when an obstacle is detected, the user can use the keypad to check the direction of the obstacle relative to the user. The buzzer signals when there are obstacles around the robot and can emit a series of different sounds to indicate the direction of nearby danger.

Figure 2.6 The Guide Dog

2.5 UBIRO

UBIRO, shown in Figure 2.7, was developed from an electric wheelchair (EMC-230) as a mobility device for elderly and disabled people. UBIRO consists of three main parts: a personal computer (PC) for control, an RFID system for obtaining the robot's location, and a mobile base for navigation.

The on-board PC serves as the control center. It handles the IC-tag information from the RFID system and sends commands to the mobile base to reach the goal. The RFID system reads the IC tags on the floor, from which UBIRO deduces its location and pose. The PC then controls the mobile base based on calculations from the information provided by the RFID system.

The robot also has external sensors, such as distance sensors and touch sensors, which allow it to detect and avoid obstacles. In the mechanical design, the robot's front wheels are free-rotating casters, which may cause some instability when moving.

Figure 2.7 UBIRO

CHAPTER 3

METHODOLOGY

3.1 Mechanical Design

Figures 3.1, 3.2, 3.3 and 3.4 show the mechanical design of this project. The robot has two front wheels made of polyethylene (PE). Six aluminium sensor holders are mounted at the front of the robot to hold the three IR sensors. One RFID reader is installed on the bottom chassis of the robot, which is made of Perspex, to detect the RFID tags on the floor. Two aluminium buzzer holders are mounted on both sides to hold the buzzers. To lead the user, a stick is connected to the rotary stick holder on the upper part. The middle part consists of a circuit board supported by printed circuit board (PCB) stands.

Figure 3.1 Mechanical design (overview)


Figure 3.2 Top view and dimensions

Figure 3.3 Front view and dimensions


Figure 3.4 Side view and dimensions

Figure 3.5 Stick with rotary base, rubber holder and adjustable length

Figure 3.5 shows the stick attached to the robot. The stick is built with a rotary base and a rubber holder to reduce the impact of the user's movements on the robot while navigating. The user holds the elastic rubber holder, so the user will not accidentally push or pull the robot. The rotary base allows the robot to turn smoothly even though the user is holding the stick through the rubber holder. The length of the stick can also be adjusted to suit the height of the user.

3.2 Circuit Design and Equipments

The system design in this project involves three main parts: indoor navigation, obstacle avoidance and user interfacing. Different types of devices are therefore needed to complete these three tasks. A PIC18F452 microcontroller controls the operation of the robot.

3.2.1 System Overview

Figure 3.6 Overview of system

As shown in Figure 3.6, the inputs to the microcontroller are the power supply, digital data from the RFID reader, analog values from the IR sensors and the keypad readings. By processing these data, the microcontroller determines the operation of the actuators, namely the stepper motors and buzzers.


Two power supplies are used in the robot: one supplies the microcontroller, and the other drives the stepper motors, RFID reader and IR sensors. Separate supplies are used to avoid unwanted signal interference with the microcontroller. As illustrated in Figure 3.7, LM7805 regulators are used to regulate the supply to 5 V (VCC), and an LED indicates that the voltage is successfully regulated. In addition, a diode protects the circuit in case the power supply is connected with the wrong polarity.

Figure 3.7 Power supply circuit

Figure 3.8 shows the pin connections of the microcontroller, which runs from a 20 MHz clock. Eight pins are used for the keypad, three pins for the analog signals from the IR sensors, four pins for stepper motor control, two pins for the buzzers, and one pin for the universal asynchronous receiver-transmitter (UART) connection that receives data from the RFID reader. The remaining pins connect to light-emitting diodes (LEDs) and push buttons.

Regulator

LM7805

18

Figure 3.8 PIC18F452 circuit connections

3.2.2 Indoor Navigation

To design a robot that can navigate in an indoor environment without line or wall following, RFID technology is used for localization and pose estimation. In addition, two stepper motors are used so that the robot turns through more accurate angles as it navigates to the target.

As shown in Figure 3.9, an RFID system is always made up of two components:

(i) The tag or transponder, which has its own identification number.
(ii) The reader or interrogator, which detects and receives the identification number from the transponder.

Figure 3.9 RFID technology

In this project, one RFID reader and sixty passive RFID tags are used. The reader is the IDR-232 model shown in Figure 3.10. Both the reader and the tags operate at 125 kHz, and the reader senses a tag within a range of about 5 cm. The RFID tags are embedded in the floor under a mat in a 2D Cartesian grid, and each tag has its own coordinate location. The RFID reader is mounted on the base of the robot, so when the robot moves over the mat, the reader retrieves the data stored in the tag beneath it. The location and pose of the robot are thereby known, and the microcontroller can determine the direction of navigation using trigonometric functions.

The advantage of RFID technology in this project is its ability to work under harsh environmental conditions. For instance, ultrasonic sensors suffer from acoustic noise, while IR sensors have difficulty working under varying light intensity. In addition, RFID responds very quickly, so the location of the robot can be determined in a short time. Moreover, the shelf life of the tags is long, since the passive tags used in this project need no battery.

Figure 3.10 RFID reader and passive tags

The signal conditioning circuit for the RFID reader is shown in Figure 3.11. The circuit is needed because the RFID reader uses RS-232 signalling. The transistor in Figure 3.11 converts the reader's signal levels (-10 V/+10 V) into levels compatible with the microcontroller (5 V/0 V). When +10 V is received from the reader, the transistor turns on and pulls the Rx pin down to ground. Conversely, when -10 V is received, the transistor turns off and the Rx pin is pulled up to 5 V (VCC).
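The transistor stage behaves as an inverter, and that inversion is what the interface needs: RS-232 sends logic 1 (mark) as a negative voltage, whereas the PIC's UART expects logic 1 as a high level. The Python model below is an illustration only; the 0.7 V turn-on threshold and the 5 V pull-up value are assumptions, not measurements taken from the circuit.

```python
# Model of the inverting transistor stage of Figure 3.11 (illustrative only).
# Assumed values: ~0.7 V base-emitter turn-on, 5 V pull-up on the Rx pin.
VCC = 5.0
V_BE_ON = 0.7


def rx_pin_voltage(rs232_volts: float) -> float:
    """Voltage on the PIC Rx pin for a given reader output voltage."""
    transistor_on = rs232_volts > V_BE_ON
    # On: the collector pulls Rx to ground. Off: the pull-up holds Rx at VCC.
    return 0.0 if transistor_on else VCC


def rs232_bit(rs232_volts: float) -> int:
    """RS-232 convention: negative voltage = mark = logic 1."""
    return 1 if rs232_volts < 0 else 0


def uart_bit(rx_volts: float) -> int:
    """TTL UART convention: high level = logic 1."""
    return 1 if rx_volts > VCC / 2 else 0


for level in (-10.0, 10.0):
    rx = rx_pin_voltage(level)
    print(f"reader {level:+.0f} V -> Rx {rx:.0f} V, bit preserved: "
          f"{rs232_bit(level) == uart_bit(rx)}")
```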

Figure 3.11 Signal conditioning circuit for RFID reader

The stepper motor used in this project is the 17PMK508 shown in Figure 3.12. It is a unipolar stepper motor with a holding torque of up to 2.25 kg·cm. For simplicity, a UCN5804 motor driver is used to drive the motor.

Figure 3.12 Stepper motor

Figure 3.13 shows the schematic for one of the stepper motors. The motor operates from a 12 V supply and is driven by a UCN5804 motor driver. With the two inputs Half_step and One_phase driven low, the motor runs in two-phase mode, which produces the highest torque. Two other driver inputs are controlled by the microcontroller to set the direction of rotation and the speed of the motor. A diode is needed to protect the transistors inside the driver, because a large back-EMF is produced when a coil is switched off, and this could damage them.
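As a rough guide to how a rotation angle becomes step pulses, the sketch below assumes a differential-drive base that spins in place with the two motors running in opposite directions. The step angle, wheel diameter, and track width are hypothetical placeholders, since they are not given here; the driver's direction input would select which way each motor turns, and the rate of step pulses would set the speed.

```python
import math

# Hypothetical geometry and motor values; the thesis does not state them.
STEP_ANGLE_DEG = 1.8       # full-step angle (two-phase mode)
WHEEL_DIAMETER_CM = 10.0   # drive wheel diameter
TRACK_WIDTH_CM = 20.0      # spacing between the two drive wheels


def steps_for_turn(rotation_deg: float) -> int:
    """Step pulses each motor needs to spin the robot in place.

    In an in-place turn each wheel follows an arc of radius
    TRACK_WIDTH_CM / 2 while the two motors run in opposite directions,
    so only the step count (not the direction) depends on the angle.
    """
    arc_cm = math.pi * TRACK_WIDTH_CM * abs(rotation_deg) / 360.0
    wheel_revolutions = arc_cm / (math.pi * WHEEL_DIAMETER_CM)
    return round(wheel_revolutions * 360.0 / STEP_ANGLE_DEG)


print(steps_for_turn(90.0))
```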

Figure 3.13 Motor part


3.2.3 Obstacle Avoidance

To avoid obstacles along the path of the robot, three analog IR sensors are mounted at the front of the robot, as shown in Figure 3.14. The IR sensor used is the GP2Y0A21YK. Testing showed that the sensor can detect objects at distances of up to 80 cm and is little affected by the surface colour of the object. The sensor output is processed by the analog-to-digital converter (ADC) of the microcontroller to obtain the distance of the obstacle. When an obstacle reaches a critical distance, the avoidance algorithm is executed.
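A minimal sketch of the ADC step follows, assuming the ADC reference is VCC (5 V). The PIC18F452 converter is 10-bit, so one count corresponds to VREF/1024. The 1.20 V threshold is a hypothetical choice: it is the middle sensor's average reading at 20 cm in Table 4.1, and 20 cm is where Table 4.4 starts avoidance.

```python
VREF = 5.0          # assumed ADC reference = VCC (V)
ADC_STEPS = 1024    # 10-bit converter: 1 LSB = VREF / 1024

# Hypothetical critical threshold: middle sensor average at 20 cm (Table 4.1).
CRITICAL_VOLTS = 1.20


def adc_to_volts(count: int) -> float:
    """Convert a 10-bit ADC result to the sensor output voltage."""
    if not 0 <= count < ADC_STEPS:
        raise ValueError("10-bit ADC count must be 0..1023")
    return count * VREF / ADC_STEPS


def at_critical_distance(count: int) -> bool:
    # The sensor voltage rises as the obstacle gets closer, so a reading at
    # or above the threshold means the obstacle is at or inside ~20 cm.
    return adc_to_volts(count) >= CRITICAL_VOLTS
```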

Figure 3.14 Analog Infrared sensors

3.2.4 User Interfacing

In line with the title of the project, the users are blind or visually impaired people, so interfacing cannot be done through a graphical user interface (GUI). To overcome this problem, a keypad is implemented, with which the user chooses different destinations for navigation. In addition, two buzzers are attached to both sides of the robot to warn the user of dangers along the path. The user can therefore identify the condition of the surroundings by noticing which buzzer is beeping. Figure 3.15 shows the keypad used and the placement of the buzzers.

Figure 3.15 Keypad and buzzers for user interfacing

3.3 Software Design

The most important part of the software design is the navigation algorithm, which includes direction decision-making with the aid of the RFID tags. The robot also determines its orientation from three coordinate positions: previous, current, and goal. Figure 3.16 shows the navigation algorithm.

Figure 3.16 Navigation Algorithm

Figure 3.16 shows the algorithm executed by the robot from the start until it reaches the target chosen by the user. To obtain the direction of travel, trigonometric functions are applied to Cartesian coordinates on a regular grid. The algorithm groups the possible movements into four categories, each with its own angle calculation, so the robot is expected to navigate along a smooth and short path. Three steps are needed to categorize a movement:

(i) Determination of the quadrant of movement
(ii) Determination of the angles from the previous position to the current position and from the current position to the goal position
(iii) Categorization

3.3.1 Determination of Quadrant of Movement

The first part of the algorithm determines the quadrant of the previous movement ($Q_P$) and the quadrant of the next movement towards the goal ($Q_N$). This is done by examining the coordinates of the previous, current, and goal locations. The distribution of the quadrants is shown in Figure 3.17.

Figure 3.17 Quadrant Distribution

where:

1st quadrant: 0° to 90°
2nd quadrant: 90° to 180°
3rd quadrant: 180° to 270°
4th quadrant: 270° to 360°

For the situation shown in Figure 3.18, the path from the previous location to the current location lies between 0° and 90° ($x_{\text{current}} \ge x_{\text{previous}}$ and $y_{\text{current}} \ge y_{\text{previous}}$) in the frame of the previous location, so $Q_P$ is the 1st quadrant. The path from the current location to the goal location lies between 90° and 180° ($x_{\text{goal}} \le x_{\text{current}}$ and $y_{\text{goal}} \ge y_{\text{current}}$) in the frame of the current location, so $Q_N$ is the 2nd quadrant.

Figure 3.18 Determination of quadrant

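The quadrant determination can be summarized by examining the x and y coordinates of the previous, current, and goal locations:

$$x_{\text{current}} \ge x_{\text{previous}} \text{ and } y_{\text{current}} \ge y_{\text{previous}} \;\rightarrow\; Q_P = \text{1st quadrant} \tag{3.1}$$

$$x_{\text{current}} \le x_{\text{previous}} \text{ and } y_{\text{current}} \ge y_{\text{previous}} \;\rightarrow\; Q_P = \text{2nd quadrant} \tag{3.2}$$

$$x_{\text{current}} \le x_{\text{previous}} \text{ and } y_{\text{current}} \le y_{\text{previous}} \;\rightarrow\; Q_P = \text{3rd quadrant} \tag{3.3}$$

$$x_{\text{current}} \ge x_{\text{previous}} \text{ and } y_{\text{current}} \le y_{\text{previous}} \;\rightarrow\; Q_P = \text{4th quadrant} \tag{3.4}$$

$$x_{\text{goal}} \ge x_{\text{current}} \text{ and } y_{\text{goal}} \ge y_{\text{current}} \;\rightarrow\; Q_N = \text{1st quadrant} \tag{3.5}$$

$$x_{\text{goal}} \le x_{\text{current}} \text{ and } y_{\text{goal}} \ge y_{\text{current}} \;\rightarrow\; Q_N = \text{2nd quadrant} \tag{3.6}$$

$$x_{\text{goal}} \le x_{\text{current}} \text{ and } y_{\text{goal}} \le y_{\text{current}} \;\rightarrow\; Q_N = \text{3rd quadrant} \tag{3.7}$$

$$x_{\text{goal}} \ge x_{\text{current}} \text{ and } y_{\text{goal}} \le y_{\text{current}} \;\rightarrow\; Q_N = \text{4th quadrant} \tag{3.8}$$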
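Equations (3.1) to (3.8) can be sketched in Python as one function applied to two successive positions. Because the conditions overlap for moves parallel to an axis, the sketch checks them in the order 1, 2, 3, 4; this tie-breaking order is an assumption. The coordinates in the usage lines are hypothetical grid values that reproduce the situation of Figure 3.18.

```python
def quadrant(x_from: float, y_from: float, x_to: float, y_to: float) -> int:
    """Quadrant (1-4) of the move from (x_from, y_from) to (x_to, y_to).

    Follows Eqs. (3.1)-(3.8); ties on an axis resolve to the first
    matching condition (assumed order 1, 2, 3, 4).
    """
    dx, dy = x_to - x_from, y_to - y_from
    if dx >= 0 and dy >= 0:
        return 1
    if dx <= 0 and dy >= 0:
        return 2
    if dx <= 0 and dy <= 0:
        return 3
    return 4  # only dx > 0 and dy < 0 is left


# Hypothetical coordinates for a Figure 3.18-style situation.
previous, current, goal = (0, 0), (1, 1), (0, 3)
q_p = quadrant(*previous, *current)   # up and to the right
q_n = quadrant(*current, *goal)       # up and to the left
print(q_p, q_n)
```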

3.3.2 Determination of Angle for Previous Position to Current Position and Current Position to Goal Position

The angle from the previous position to the current position ($\theta_{\text{previous}}^{\text{current}}$) and the angle from the current position to the goal position ($\theta_{\text{current}}^{\text{goal}}$) can be calculated using trigonometric functions.

Figure 3.19 Angle calculation

Referring to Figure 3.19, both angles can be obtained from the following equations:

$$\theta_{\text{previous}}^{\text{current}} = \tan^{-1}\left[\frac{x_{\text{current}} - x_{\text{previous}}}{y_{\text{current}} - y_{\text{previous}}}\right] \tag{3.9}$$

$$\theta_{\text{current}}^{\text{goal}} = \tan^{-1}\left[\frac{x_{\text{goal}} - x_{\text{current}}}{y_{\text{goal}} - y_{\text{current}}}\right] \tag{3.10}$$

When a denominator, e.g. $(y_{\text{current}} - y_{\text{previous}})$ or $(y_{\text{goal}} - y_{\text{current}})$, is zero, the result is assigned 90°.
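Equations (3.9) and (3.10) translate directly into a helper that works in degrees. The sketch below is an illustration in Python rather than the robot's firmware, with one assumption flagged in the docstring: for a zero denominator it returns +90° or -90° according to the sign of the x difference, which coincides with the 90° of the text whenever that difference is positive.

```python
import math


def heading_angle(x_from: float, y_from: float, x_to: float, y_to: float) -> float:
    """Angle of Eqs. (3.9)/(3.10) in degrees: tan^-1(dx / dy).

    The angle is measured from the y-axis and lies in [-90, 90].
    Assumption: for dy == 0 the text assigns 90 degrees; this sketch
    returns +90 or -90 following the sign of dx (the limit of tan^-1),
    which equals the text's value whenever dx > 0.
    """
    dx, dy = x_to - x_from, y_to - y_from
    if dx == 0 and dy == 0:
        raise ValueError("the two positions coincide")
    if dy == 0:
        return math.copysign(90.0, dx)
    return math.degrees(math.atan(dx / dy))


theta_pc = heading_angle(0, 0, 1, 1)   # previous (0, 0) -> current (1, 1)
theta_cg = heading_angle(1, 1, 0, 3)   # current (1, 1) -> goal (0, 3)
print(round(theta_pc, 1), round(theta_cg, 1))
```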

3.3.3 Categorization

By examining four quantities, $Q_P$, $Q_N$, $\theta_{\text{previous}}^{\text{current}}$ and $\theta_{\text{current}}^{\text{goal}}$, all possible turning angles can be obtained. Table 3.1 shows the resulting groups, each with its own direction and turning angle.

Table 3.1: Summary of categorization

Type 1          Type 2          Type 3*         Type 4*
$Q_P$  $Q_N$    $Q_P$  $Q_N$    $Q_P$  $Q_N$    $Q_P$  $Q_N$
1st    1st      1st    4th      2nd    4th      2nd    4th
2nd    2nd      2nd    3rd      4th    2nd      4th    2nd
3rd    3rd      3rd    2nd      1st    3rd      1st    3rd
4th    4th      4th    1st      3rd    1st      3rd    1st
1st    2nd
3rd    4th
2nd    1st
4th    3rd
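Table 3.1 can be held as a lookup table keyed by ($Q_P$, $Q_N$). In the Python sketch below, which is illustrative and uses hypothetical names, the shared Type 3*/4* pairs are returned as "3/4" and left for the special-case test of Section 3.3.4.

```python
# Table 3.1 as a lookup: (Q_P, Q_N) -> movement type.
MOVEMENT_TYPE = {}
for pair in [(1, 1), (2, 2), (3, 3), (4, 4), (1, 2), (3, 4), (2, 1), (4, 3)]:
    MOVEMENT_TYPE[pair] = "1"
for pair in [(1, 4), (2, 3), (3, 2), (4, 1)]:
    MOVEMENT_TYPE[pair] = "2"
for pair in [(2, 4), (4, 2), (1, 3), (3, 1)]:
    MOVEMENT_TYPE[pair] = "3/4"   # resolved later by the special-case test


def movement_type(q_p: int, q_n: int) -> str:
    """Category of a movement from its two quadrants."""
    return MOVEMENT_TYPE[(q_p, q_n)]


# Every one of the 16 quadrant combinations falls into exactly one group.
assert len(MOVEMENT_TYPE) == 16
```

Read as a rule (ignoring moves along an axis), the table says that Type 1 keeps the sign of the y difference, Type 2 reverses the y difference only, and Types 3* and 4* reverse both the x and y differences.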

Each type has its own method of determining the direction, as well as its own equation for the turning angle, $\theta_{\text{rotation}}$. The * sign denotes a special case.

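Type 1:

$Q_P > Q_N$ → right
$Q_P < Q_N$ → left
$Q_P = Q_N$:
(i) $\theta_{\text{current}}^{\text{goal}} > \theta_{\text{previous}}^{\text{current}}$ → right
(ii) $\theta_{\text{current}}^{\text{goal}} < \theta_{\text{previous}}^{\text{current}}$ → left

$$\theta_{\text{rotation}} = \left|\theta_{\text{current}}^{\text{goal}} - \theta_{\text{previous}}^{\text{current}}\right| \tag{3.11}$$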
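A minimal Python sketch of the Type 1 rule, taking the signed angles from Eqs. (3.9) and (3.10). Returning "straight" when the two angles are equal is an assumption, as the text does not cover that case.

```python
def type1_turn(q_p: int, q_n: int, theta_pc: float, theta_cg: float):
    """Direction and rotation for a Type 1 movement, Eq. (3.11).

    theta_pc, theta_cg: signed angles from Eqs. (3.9) and (3.10), degrees.
    Returns (direction, rotation_deg).
    """
    if q_p > q_n:
        direction = "right"
    elif q_p < q_n:
        direction = "left"
    elif theta_cg > theta_pc:
        direction = "right"
    elif theta_cg < theta_pc:
        direction = "left"
    else:
        direction = "straight"   # equal angles: no turn (assumption)
    return direction, abs(theta_cg - theta_pc)
```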

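Type 2:

$Q_P$ = 1st, $Q_N$ = 4th → right
$Q_P$ = 3rd, $Q_N$ = 2nd → right
$Q_P$ = 4th, $Q_N$ = 1st → left
$Q_P$ = 2nd, $Q_N$ = 3rd → left

$$\theta_{\text{rotation}} = 180^\circ - \left[\left|\theta_{\text{current}}^{\text{goal}}\right| + \left|\theta_{\text{previous}}^{\text{current}}\right|\right] \tag{3.12}$$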
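The Type 2 rule reduces to a small direction table plus Eq. (3.12), as sketched below in Python; the names are illustrative.

```python
# Direction for each Type 2 quadrant pair (Q_P, Q_N), from the list above.
TYPE2_DIRECTION = {(1, 4): "right", (3, 2): "right",
                   (4, 1): "left", (2, 3): "left"}


def type2_turn(q_p: int, q_n: int, theta_pc: float, theta_cg: float):
    """Direction and rotation for a Type 2 movement, Eq. (3.12)."""
    direction = TYPE2_DIRECTION[(q_p, q_n)]
    rotation = 180.0 - (abs(theta_cg) + abs(theta_pc))
    return direction, rotation
```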

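Type 3*:

$Q_P$ and $Q_N$ are even → right
$Q_P$ and $Q_N$ are odd → left

$$\theta_{\text{rotation}} = 180^\circ - \left[\left|\theta_{\text{current}}^{\text{goal}}\right| - \left|\theta_{\text{previous}}^{\text{current}}\right|\right] \tag{3.13}$$

Type 4*:

$Q_P$ and $Q_N$ are even → left
$Q_P$ and $Q_N$ are odd → right

$$\theta_{\text{rotation}} = 180^\circ - \left[\left|\theta_{\text{previous}}^{\text{current}}\right| - \left|\theta_{\text{current}}^{\text{goal}}\right|\right] \tag{3.14}$$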

3.3.4 Special Case

Type 3 and Type 4 have the same combinations of $Q_P$ and $Q_N$. This can be explained through the situations shown in Figure 3.20 and Figure 3.21, where the turns are in opposite directions even though $Q_P$ and $Q_N$ have the same values.

Figure 3.20 Type 3 movement

Figure 3.21 Type 4 movement

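In both cases, $Q_P$ is the 1st quadrant while $Q_N$ is the 3rd quadrant. However, the direction of turning differs between the two.

In Figure 3.20, $\theta_{\text{current}}^{\text{goal}} > \theta_{\text{previous}}^{\text{current}}$:

$$\theta_{\text{rotation}} = 180^\circ - \left[\left|\theta_{\text{current}}^{\text{goal}}\right| - \left|\theta_{\text{previous}}^{\text{current}}\right|\right] \tag{3.15}$$

Direction taken → left

where $\theta_{\text{current}}^{\text{goal}}$ and $\theta_{\text{previous}}^{\text{current}}$ are the angles marked in Figure 3.20.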

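In Figure 3.21, $\theta_{\text{previous}}^{\text{current}} > \theta_{\text{current}}^{\text{goal}}$:

$$\theta_{\text{rotation}} = 180^\circ - \left[\left|\theta_{\text{previous}}^{\text{current}}\right| - \left|\theta_{\text{current}}^{\text{goal}}\right|\right] \tag{3.16}$$

Direction taken → right

where $\theta_{\text{current}}^{\text{goal}}$ and $\theta_{\text{previous}}^{\text{current}}$ are the angles marked in Figure 3.21.

Therefore, before assigning such a movement to a category, we must determine which of the following conditions is fulfilled:

$\theta_{\text{current}}^{\text{goal}} > \theta_{\text{previous}}^{\text{current}}$ → Type 3
$\theta_{\text{previous}}^{\text{current}} > \theta_{\text{current}}^{\text{goal}}$ → Type 4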
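The decision between Type 3 and Type 4 can be sketched in Python as follows. One deliberate assumption: the sketch compares the magnitudes |θ|. For the 1st/3rd-quadrant situations of Figures 3.20 and 3.21 both angles are positive, so this is the same test as above; for 2nd/4th-quadrant pairs, where Eqs. (3.9) and (3.10) give two negative angles, comparing magnitudes is what keeps the turn from Eqs. (3.13) and (3.14) at or below 180°.

```python
def opposite_quadrant_turn(q_p: int, q_n: int, theta_pc: float, theta_cg: float):
    """Resolve a Type 3*/4* movement; returns (type, direction, rotation_deg).

    theta_pc, theta_cg: signed angles from Eqs. (3.9) and (3.10), degrees.
    Assumption: the special-case test compares magnitudes |theta|. For the
    1st/3rd-quadrant pairs both angles are positive, so this matches the
    text; for 2nd/4th-quadrant pairs it keeps the turn at or below 180.
    """
    if abs(q_p - q_n) != 2:
        raise ValueError("Types 3 and 4 apply only to opposite quadrants")
    odd = q_p % 2 == 1
    a_cg, a_pc = abs(theta_cg), abs(theta_pc)
    if a_cg > a_pc:
        # Type 3, Eq. (3.13): odd pair -> left, even pair -> right
        return 3, ("left" if odd else "right"), 180.0 - (a_cg - a_pc)
    # Type 4, Eq. (3.14): odd pair -> right, even pair -> left.
    # An exact reversal (equal magnitudes) also lands here as a 180-degree turn.
    return 4, ("right" if odd else "left"), 180.0 - (a_pc - a_cg)
```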

3.3.5 Interrupt Routine for Tag Detected

Figure 3.22 Interrupt flow chart

To let the robot keep updating its current position in the environment, the interrupt routine shown in Figure 3.22 is used. In the routine, the identification number retrieved from a tag is used to look up the corresponding coordinate position in a database. The retrieved position is then compared with the current position; if it is a new position, the algorithm updates its previous and current positions. As a result, the robot keeps track of its position even while performing obstacle avoidance, and immediately plans a new path to the destination after avoiding an obstacle.
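The interrupt routine of Figure 3.22 can be sketched as follows. The tag IDs and coordinates in the database are hypothetical placeholders, the UART byte handling that assembles an ID is omitted, and ignoring unknown tags is an assumption.

```python
# Hypothetical tag database: tag ID -> (x, y) grid coordinate.
TAG_DATABASE = {
    "0A1B2C3D": (0, 0),
    "0A1B2C3E": (1, 0),
    "0A1B2C3F": (1, 1),
}


class PoseTracker:
    """Keeps the previous and current grid positions, as in Figure 3.22."""

    def __init__(self):
        self.previous = None
        self.current = None

    def on_tag_detected(self, tag_id: str) -> bool:
        """Body of the tag-detected interrupt; True if the position changed."""
        position = TAG_DATABASE.get(tag_id)
        if position is None:           # unknown tag: ignore (assumption)
            return False
        if position == self.current:   # same tag read again: nothing new
            return False
        self.previous, self.current = self.current, position
        return True
```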

CHAPTER 4

RESULT AND DISCUSSION

4.1 Keypad and Robot Control

To interface with the user, a 4x4 matrix keypad is used. After powering up the robot, the user may press key A to run mode A, or key B to run mode B instead. In mode A, the robot can navigate to three predefined destinations, and the user keys in the number of the destination. In mode B, the user enters the xy-coordinates of the desired destination. The steps to control the robot in mode A and mode B are given below.

Robot control in mode A:

1. Three destinations can be chosen. The user presses key 1, key 2, or key 3.
2. Key # must be pressed to confirm the chosen destination number, after which the robot starts navigating.
3. When the robot reaches the destination, the buzzers give a long beep. The user may repeat steps 1 and 2 for the next destination, or press key D to reset the mode and switch between mode A and mode B.

Robot control in mode B:

1. The user enters the desired destination by keying in its xy-coordinates, starting with the x-coordinate.
2. Key # must be pressed to confirm the x-coordinate.
3. The user enters the y-coordinate of the desired destination.
4. Key # is pressed so that the robot acquires the destination and starts navigating.
5. Steps 1 to 4 can be repeated if the user wishes to continue in this mode. Otherwise, key D can be pressed to reset the mode.
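The key sequences above can be modelled as a small state machine. In this Python sketch the coordinates of the three mode-A destinations are hypothetical, and silently ignoring invalid entries is an assumption; on the robot, each confirmed destination would start a navigation run.

```python
# Hypothetical coordinates for the three mode-A destinations.
PREDEFINED = {"1": (2, 5), "2": (6, 3), "3": (4, 8)}


def parse_keys(keys: str):
    """Turn a keypad sequence into a list of destination (x, y) tuples.

    A/B select the mode, D resets it, # confirms an entry, and digits are
    accumulated in between. Invalid entries are ignored (assumption).
    """
    mode, buffer, x_pending, goals = None, "", None, []
    for key in keys:
        if key == "D":                       # mode reset
            mode, buffer, x_pending = None, "", None
        elif mode is None:
            if key in ("A", "B"):
                mode = key
        elif key.isdigit():
            buffer += key
        elif key == "#":
            if mode == "A":
                if buffer in PREDEFINED:
                    goals.append(PREDEFINED[buffer])
            elif x_pending is None:          # mode B: first # confirms x
                x_pending = int(buffer) if buffer else None
            else:                            # mode B: second # confirms y
                if buffer:
                    goals.append((x_pending, int(buffer)))
                x_pending = None
            buffer = ""
    return goals
```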

4.2 Obstacle and Sensor Response

The three analog IR sensors play an important role in obstacle avoidance. Each sensor's output voltage increases as an obstacle approaches it, so the output voltage at each obstacle distance was recorded for every sensor to allow the obstacle distance to be determined. Tables 4.1, 4.2, and 4.3 show the responses of the middle, right, and left sensors, respectively, with respect to obstacle distance, and the average reading of each sensor is plotted in Figures 4.1, 4.2, and 4.3, respectively.

Table 4.1: Response of middle sensor with respect to distance of obstacle

Distance (cm)   Reading 1 (V)   Reading 2 (V)   Reading 3 (V)   Average Reading (V)
>60             0.31            0.28            0.37            0.32
60              0.49            0.5             0.52            0.50
55              0.53            0.54            0.55            0.54
50              0.55            0.58            0.59            0.57
45              0.58            0.61            0.66            0.62
40              0.64            0.67            0.7             0.67
35              0.73            0.77            0.77            0.76
30              0.84            0.88            0.87            0.86
25              0.98            1.03            0.94            0.98
20              1.22            1.25            1.12            1.20
15              1.57            1.5             1.36            1.48
10              1.8             1.71            1.6             1.70
5               1.94            1.79            1.6             1.78

Figure 4.1 Voltage response of middle sensor against distance of obstacle
[Plot: Voltage of Middle Sensor (V) vs Obstacle Distance (cm); average reading, Voltage (V) against Distance (cm) from >60 cm to 5 cm]

Table 4.2: Response of right sensor with respect to distance of obstacle

Distance (cm)   Reading 1 (V)   Reading 2 (V)   Reading 3 (V)   Average Reading (V)
>60             0.46            0.25            0.29            0.33
60              0.53            0.55            0.56            0.55
55              0.56            0.59            0.59            0.58
50              0.59            0.63            0.63            0.62
45              0.65            0.68            0.69            0.67
40              0.71            0.74            0.76            0.74
35              0.76            0.81            0.84            0.80
30              0.86            0.91            0.94            0.90
25              1.01            1.06            1.02            1.03
20              1.25            1.27            1.25            1.26
15              1.59            1.6             1.56            1.58
10              1.93            1.94            1.71            1.86
5               1.94            1.94            1.82            1.90

Figure 4.2 Voltage response of right sensor against distance of obstacle
[Plot: Voltage of Right Sensor (V) vs Obstacle Distance (cm); average reading, Voltage (V) against Distance (cm) from >60 cm to 5 cm]

Table 4.3: Response of left sensor with respect to distance of obstacle

Distance (cm)   Reading 1 (V)   Reading 2 (V)   Reading 3 (V)   Average Reading (V)
>60             0.18            0.19            0.24            0.20
60              0.53            0.48            0.51            0.51
55              0.56            0.53            0.54            0.54
50              0.58            0.58            0.58            0.58
45              0.65            0.63            0.62            0.63
40              0.71            0.67            0.69            0.69
35              0.8             0.73            0.77            0.77
30              0.9             0.87            0.86            0.88
25              1               1.02            0.98            1.00
20              1.19            1.14            1.14            1.16
15              1.41            1.37            1.38            1.39
10              1.57            1.57            1.58            1.57
5               1.57            1.57            1.58            1.57

Figure 4.3 Voltage response of left sensor against distance of obstacle
[Plot: Voltage of Left Sensor (V) vs Obstacle Distance (cm); average reading, Voltage (V) against Distance (cm) from >60 cm to 5 cm]

Referring to Figures 4.1, 4.2, and 4.3, the response of each sensor to a given obstacle distance is not necessarily the same. This is because the characteristics of the sensors differ, which results in some tolerance in their output voltages. In addition, environmental factors such as light intensity affect the sensor response. A software calibration was therefore applied to each sensor so that all sensors work effectively in different environments.
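One possible form of the per-sensor software calibration is to keep each sensor's averaged readings and interpolate linearly between them. The sketch below uses the averages from Tables 4.1, 4.2 and 4.3; the interpolation scheme itself is an assumption, since the calibration method is not described.

```python
# Average readings from Tables 4.1-4.3: (distance_cm, volts), far to near.
CALIBRATION = {
    "middle": [(60, 0.50), (55, 0.54), (50, 0.57), (45, 0.62), (40, 0.67), (35, 0.76),
               (30, 0.86), (25, 0.98), (20, 1.20), (15, 1.48), (10, 1.70), (5, 1.78)],
    "right": [(60, 0.55), (55, 0.58), (50, 0.62), (45, 0.67), (40, 0.74), (35, 0.80),
              (30, 0.90), (25, 1.03), (20, 1.26), (15, 1.58), (10, 1.86), (5, 1.90)],
    "left": [(60, 0.51), (55, 0.54), (50, 0.58), (45, 0.63), (40, 0.69), (35, 0.77),
             (30, 0.88), (25, 1.00), (20, 1.16), (15, 1.39), (10, 1.57), (5, 1.57)],
}


def distance_cm(sensor: str, volts: float):
    """Estimate obstacle distance by linear interpolation in the sensor's table.

    Returns None below the 60 cm reading (beyond the calibrated range) and
    the nearest tabulated distance (5 cm) above the largest reading.
    """
    table = CALIBRATION[sensor]
    if volts < table[0][1]:
        return None
    for (d_far, v_far), (d_near, v_near) in zip(table, table[1:]):
        if volts <= v_near:
            if v_near == v_far:        # flat segment (sensor saturated)
                return float(d_far)
            t = (volts - v_far) / (v_near - v_far)
            return d_far + t * (d_near - d_far)
    return float(table[-1][0])
```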

Whenever an obstacle is detected in front of the robot, the buzzers on both sides produce different beep patterns according to the distance of the obstacle. Table 4.4 shows the response of the buzzers to the obstacle.

Table 4.4: Response of buzzer

Distance from the obstacle (cm)   Response of buzzer
35 to 25                          Beep once
25 to 20                          Beep twice
20 to 10                          Beep three times and perform avoidance
<10                               Beep four times and move backward

If an obstacle is detected at one side of the robot, the buzzer on that side beeps to notify the user; for example, when there is an obstacle on the left side, the left buzzer beeps. After notifying the user, the robot searches for a path around the obstacle. Once a new path is determined, the buzzer beeps again to notify the user; for instance, if the robot is about to turn right, the right buzzer beeps.
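Table 4.4 maps onto a simple chain of comparisons, sketched below in Python. Which band owns each boundary value is not stated, so the sketch assumes each band includes its lower bound (for example, 25 cm gives one beep); which buzzer sounds then depends only on the side of the obstacle or of the planned turn.

```python
def buzzer_response(distance_cm: float):
    """(beep count, action) for an obstacle ahead, following Table 4.4.

    Assumption: each band includes its lower bound, e.g. 25 <= d < 35
    gives one beep; the table does not say which band owns the boundaries.
    """
    if distance_cm < 10:
        return 4, "move backward"
    if distance_cm < 20:
        return 3, "perform avoidance"
    if distance_cm < 25:
        return 2, None
    if distance_cm < 35:
        return 1, None
    return 0, None   # beyond 35 cm: no warning
```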

4.3 Experiments for Navigation

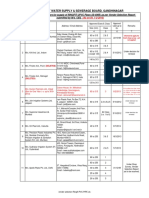

To test the validity of the algorithm, two RFID-based maps were constructed, as shown in Figure 4.4 and Figure 4.5. The map in Figure 4.4 (low-resolution map) uses 40 RFID tags as 2D coordinate positions. All tags are embedded under the carpet and spaced 22.5 cm apart.

Another map, shown in Figure 4.5 (high-resolution map), was built to observe the movement of the robot in a higher-resolution RFID-based environment. It uses 60 RFID tags spaced 15 cm apart. The three tags coloured yellow in both maps are the destinations the user may choose for navigation in this experiment.

Figure 4.4 Low resolution map with 40 RFID tags used


Figure 4.5 High resolution map with 60 RFID tags used

4.4 Results on Navigation

Case 1:

The robot is required to navigate from the start point to destination 1, destination 2, and destination 3. No obstacle is placed in the map.

Figure 4.6 Result for case 1 (low resolution map)

Figure 4.7 Result for case 1 (high resolution map)

From the results shown in Figure 4.6 and Figure 4.7, the robot is able to reach all the destinations. Referring to Figure 4.6, the robot makes correct turning angles with the proposed algorithm and therefore takes the shortest path to the target. Referring to Figure 4.7, the path taken by the robot is less smooth than in Figure 4.6. This is because the detection range of the RFID reader is approximately 5 cm, so the robot detects other nearby tags along its path and adjusts its angle.

Case 2:

The robot starts in random orientations and must navigate to destination 2. The map is clear of obstacles.

Figure 4.8 Result for case 2 (low resolution map)

Figure 4.9 Result for case 2 (high resolution map)


Figure 4.8 and Figure 4.9 show that the robot is able to navigate to the destination regardless of its starting pose. The path in Figure 4.8 is smoother than the path in Figure 4.9, again because of the detection range of the RFID reader described in case 1.

Case 3:

In this case, one obstacle is added to the map, and the robot is commanded to travel to destination 2.

Figure 4.10 Result for case 3 (low resolution map)

Figure 4.11 Result for case 3 (high resolution map)


From the results in Figure 4.10 and Figure 4.11, the robot is able to avoid an obstacle encountered in its path. After avoidance, it plans a new path to the destination, provided that a tag is detected. In the low-resolution map, the robot travels a longer distance before detecting a tag for position feedback because the tags are further apart, and in some experiments the robot ran off the map because no tag was detected after obstacle avoidance. This problem is reduced in the high-resolution map: because the tags are placed more compactly, the robot detects a tag soon after performing avoidance and executes new path finding.

Case 4:

The robot is commanded to navigate to a destination with more obstacles added to the map.

Figure 4.12 Result for case 4 (low resolution map)

Figure 4.13 Result for case 4 (high resolution map)

Figures 4.12 and 4.13 show that the robot is able to navigate to the destination even with more than one obstacle in the map. In the low-resolution map, it is more difficult for the robot to find a new path after performing avoidance because few tags are available as feedback. The robot does not face this difficulty in the high-resolution map, since it can easily detect a tag for position feedback.

4.5 Summary of Experiment

The experiments show that, with the proposed algorithm, the robot makes the different turning angles needed to navigate to the destination under different conditions, as long as a tag is detected to provide position and orientation feedback. Different conditions on the map cause the robot to take different paths to the destination, and the robot avoids obstacles in its path and takes a new path to reach the destination.

Since the robot gives obstacle avoidance higher priority than reaching the goal location, the results above hold only when no obstacle is too close to the destination. Some experiments failed in the low-resolution map because the prototype map was small and the number of tags was limited, so the robot could run off the map while performing obstacle avoidance. This problem was easily solved by using the high-resolution map, where more tags provide position and orientation feedback to the robot. The drawback of the high-resolution map is that the RFID reader may detect unwanted tags that come close to the robot as it navigates, because the reading range of the reader is approximately 5 cm. The path taken to the destination may therefore not be as smooth as in the low-resolution map.

CHAPTER 5

CONCLUSIONS AND RECOMMENDATIONS

5.1 Conclusions

In conclusion, the robot is able to lead the user to various destinations, which the user selects with the keypad on the robot.

The experimental results show that the robot is able to navigate to the destination with the proposed algorithm, using the RFID system and movement categorization. Furthermore, the robot can be used in a dynamic environment, since it gathers information about obstacles in its surroundings through the analog IR sensors mounted at its front. Any obstacle detected by the robot is signalled to the user by beeps from the buzzers.

As a result, an assistance robot using this algorithm may help blind and visually impaired users navigate indoor environments more efficiently.


5.2 Limitations and Future Recommendations

There are a few limitations in the project. Firstly, using buzzers to warn the user about dangers in the environment may not be a good solution, because the user must interpret the type of beep to understand the information. Secondly, the current drawn by the stepper motors is quite high, so the battery is exhausted quickly and must be recharged frequently. In addition, the power resistor used in the motor circuit generates a lot of heat, resulting in high temperatures. Finally, under certain conditions the robot may make a wide turn of approximately 180° and end up facing the user; if the user keeps moving forward, he or she may kick the robot.

A voice interface may be the best solution for interfacing with the user and could replace the buzzers. With a voice interface, the user can interpret messages from the robot easily, which may help the user's movement, especially during obstacle avoidance. A cooling system may also be added to the motor circuit, since the power resistor generates a lot of heat. Furthermore, the turning angle of the robot should be limited to less than 180°, so that a wide turn is made along a longer path instead. This will reduce collisions between the user and the robot.


Appendix A

Schematic drawing of main board


Appendix B

Schematic drawing of motor


Appendix C

Source code

/******************Source code is under copyright*****************/

// For further information, please refer to Dr. Salinda Buyamin


- Localization and Navigation For Aoutonomous Mobile RobotDokument12 SeitenLocalization and Navigation For Aoutonomous Mobile RobotEkky Yonathan GunawanNoch keine Bewertungen

- IOT Springer1Dokument10 SeitenIOT Springer1munugotisumanthraoNoch keine Bewertungen

- Abstract by EEE18008Dokument15 SeitenAbstract by EEE18008smart telugu guruNoch keine Bewertungen

- Campus NavigationIJETRMDokument8 SeitenCampus NavigationIJETRMVYSAKH vysakhNoch keine Bewertungen

- PHD ThesisDokument130 SeitenPHD ThesisUshnish SarkarNoch keine Bewertungen

- Object Detection Techniques For Mobile Robot Navigation in Dynamic Indoor Environment: A ReviewDokument11 SeitenObject Detection Techniques For Mobile Robot Navigation in Dynamic Indoor Environment: A ReviewiajerNoch keine Bewertungen

- 1361 Robot Arm PDFDokument82 Seiten1361 Robot Arm PDFMahayudin SaadNoch keine Bewertungen

- Smart Luggage SystemDokument8 SeitenSmart Luggage SystemIJRASETPublicationsNoch keine Bewertungen

- R L Jalappa Institute of Technology: "Robotic Arm Controlled Using IOT Applications"Dokument15 SeitenR L Jalappa Institute of Technology: "Robotic Arm Controlled Using IOT Applications"Gunavardhanareddy ChinnaNoch keine Bewertungen

- MANGO LEAF DISEASE DETECTION USING IMAGE PROCESSING TECHNIQUE - farhanPSM1Dokument47 SeitenMANGO LEAF DISEASE DETECTION USING IMAGE PROCESSING TECHNIQUE - farhanPSM1sarahNoch keine Bewertungen

- Project - Sample ReportDokument62 SeitenProject - Sample ReportParas SharmaNoch keine Bewertungen

- Security Access Control System Based On Radio Frequency Identification (RFID) and Arduino TechnologiesDokument68 SeitenSecurity Access Control System Based On Radio Frequency Identification (RFID) and Arduino Technologiesub8c2869Noch keine Bewertungen

- Solar Floor Cleaner RobotDokument4 SeitenSolar Floor Cleaner RobotIJRASETPublicationsNoch keine Bewertungen

- Algorithms For 5G Physical LayerDokument225 SeitenAlgorithms For 5G Physical LayerMikatechNoch keine Bewertungen

- Ardunio Mega Based Smart Traffic Control System PDFDokument11 SeitenArdunio Mega Based Smart Traffic Control System PDFnitin z ranjanNoch keine Bewertungen

- SynopsisDokument14 SeitenSynopsisXinNoch keine Bewertungen

- A Low-Cost Indoor Navigation System For Visually Impaired and BlindDokument19 SeitenA Low-Cost Indoor Navigation System For Visually Impaired and BlindEy See BeeNoch keine Bewertungen

- UGThesis UGV MyDokument58 SeitenUGThesis UGV Myغ نيلىNoch keine Bewertungen

- Modified Imp Vision Based Obstacle Avoidance For Mobile Robot Using Optical Flow ProcessDokument5 SeitenModified Imp Vision Based Obstacle Avoidance For Mobile Robot Using Optical Flow ProcessLord VolragonNoch keine Bewertungen

- Qr-Map: Byod Indoor Map Directory Service: BY Ang Jenn NingDokument67 SeitenQr-Map: Byod Indoor Map Directory Service: BY Ang Jenn NingPradeep NegiNoch keine Bewertungen

- OrganizedDokument33 SeitenOrganizednaveenallapur2001Noch keine Bewertungen

- Facemask Detection Using A I and IotDokument49 SeitenFacemask Detection Using A I and IotRaZa UmarNoch keine Bewertungen

- BPSKDokument1 SeiteBPSKtariq76Noch keine Bewertungen

- Aminou Mtech Elec Eng 2014 PDFDokument193 SeitenAminou Mtech Elec Eng 2014 PDFtariq76Noch keine Bewertungen

- Television Engg PDFDokument74 SeitenTelevision Engg PDFtariq76Noch keine Bewertungen

- Advanced Microprocessor & Microcontroller Lab Manual PDFDokument22 SeitenAdvanced Microprocessor & Microcontroller Lab Manual PDFtariq76Noch keine Bewertungen

- E1-Vlsi Signal ProcessingDokument2 SeitenE1-Vlsi Signal Processingtariq76Noch keine Bewertungen

- Television and Video Engineering Lab Manual PDFDokument77 SeitenTelevision and Video Engineering Lab Manual PDFtariq76100% (2)

- NGP 1415 CEP1195 SynopsisDokument3 SeitenNGP 1415 CEP1195 Synopsistariq76Noch keine Bewertungen

- Pedal Operated Water PurificationDokument5 SeitenPedal Operated Water Purificationtariq7675% (4)

- How To Send and Receive SMS Using GSM ModemDokument1 SeiteHow To Send and Receive SMS Using GSM Modemtariq76Noch keine Bewertungen

- Rucker Thesis Rev13Dokument186 SeitenRucker Thesis Rev13tariq76Noch keine Bewertungen

- 17voltage SagSwell Compensation Using Z Source - 1 PDFDokument1 Seite17voltage SagSwell Compensation Using Z Source - 1 PDFtariq76Noch keine Bewertungen