In: Parallel Computation, 4th International ACPC Conference, Salzburg, Austria, LNCS 1557, pages 549-558, February 1999.

MPI-parallelized Radiance on SGI CoW and SMP

Roland Koholka, Heinz Mayer, Alois Goller

Institute for Computer Graphics and Vision (ICG), University of Technology, Münzgrabenstraße 11, A-8010 Graz, Austria

{koholka,mayer,goller}@icg.tu-graz.ac.at,

WWW home page: http://www.icg-tu.graz.ac.at/~Radiance

Abstract. For lighting simulations in architecture there is the need for correct illumination calculation of virtual scenes. The Radiance Synthetic Imaging System delivers an excellent solution to that problem. Unfortunately, simulating complex scenes leads to long computation times even for one frame. This paper proposes a parallelization strategy which is suited for scenes with medium to high complexity to decrease calculation time. For a set of scenes the obtained speedup indicates the good performance of the chosen load balancing method. The use of MPI delivers a platform-independent solution for clusters of workstations (CoWs) as well as for shared-memory multiprocessors (SMPs).

1 Introduction

Ray-tracing and radiosity each provide a solution for one of the two main global lighting effects, specular and diffuse interreflection. Extending either of the two models to a complete solution for arbitrary global lighting leads to long computation times even for scenes of medium complexity.

The Radiance software package offers an accurate solution for that problem, which is described in more detail in section 2. A project comparing indoor lighting simulation with real photographs shows that for scenes with high complexity and detailed surface and light source descriptions, simulation can take hours or even days [7]. Parallelization seems to be an appropriate method for faster rendering while keeping the level of quality.

Especially in Radiance there is a gap between rapid prototyping, which is possible with the rview program, and the production of film sequences, which is best done using queuing systems. Our parallel version of Radiance allows images of realistic scenes to be generated within a few minutes without any loss of quality.

Since massively parallel systems are still rare and expensive, we primarily focus on parallelizing Radiance for architectures commonly used at universities and in industry, namely CoWs and SMPs. Platform independence and portability are major advantages of Radiance from the system's point of view. These requirements are met by basing our code on the Message Passing Interface (MPI).

2 Structure of Radiance

Like any rendering system, Radiance tries to offer a solution to the so-called rendering equation [11] for global lighting of a computer-generated scene. The basic intention was to support a complete and accurate solution. One main difference between Radiance and most other rendering systems is that it can accurately calculate the luminance of the scene, which is achieved by a physical definition of light sources and surface properties. For practical purposes, the author of the Radiance Synthetic Imaging System implemented many CAD-file translators for easy import of different geometry data. This forms a versatile framework for anyone who has to do physically correct lighting simulations [6]. Technically, Radiance uses a light-backwards ray-tracing algorithm with a special extension for global diffuse interreflections. Global specular lighting is the main contribution of this algorithm and is well established in computer graphics [1]. The difficulty lies in extending this algorithm to global diffuse illumination, which is briefly explained in the next section.

2.1 Calculating Ambient Light

Normally a set of rays distributed over the hemisphere is used for calculating diffuse interreflections, which is called distributed ray-tracing. One can imagine that for scenes of considerable complexity, calculating multiple reflections of sets of rays is very time consuming. Radiance solves this problem by estimating the gradient of the global diffuse illumination for each pixel; it then decides whether the current diffuse illumination can be interpolated from points in the neighborhood or must be calculated from scratch [5]. Exactly calculated points are cached in an octree for fast neighborhood search. Performance is further increased since, in contrast to other radiosity algorithms, diffuse interreflections are only calculated at points necessary for a particular view. However, all these optimizations are not enough to render a complex scene with adequate lighting conditions in reasonable time on a single workstation. Parallelization seems to be appropriate for further reduction of execution time.

2.2 Existing Parallelization: rpiece

Since version 2.3, Radiance includes an extra program for parallel execution, called rpiece. To keep installation as simple as possible, rpiece relies only on standard UNIX mechanisms such as pipes. All data are exchanged via files, requiring that NFS runs on all computation nodes. While the presence of NFS usually is no problem, some file lock managers are not designed for fast and frequent changes of the writing process. Additionally, remote processes are not initiated automatically but must be invoked by the user.

In his release notes, Ward [4] claimed a speedup of 8.2 on 10 workstations. We

can conrm this result only on suitable scenes, and where sequential computation

lasts more than one day. However, rpiece performs badly if execution does

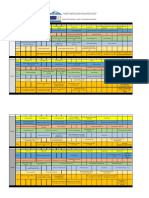

not last at least several 10 minutes in parallel. Table 1 shows the time rpiece

3

needs on a CoW for smaller scenes. Obviously, there is an enormous overhead in

partitioning and splitting the scene as well as in communicating these pieces via

NFS. Consequently, rpiece helps rendering very large scenes in shorter time,

but does not produce quick results one might be willing to await.

file   rpict (1 proc.)   rpiece (1 proc.)   rpiece (3 proc.)   rpiece (10 proc.)
A      1:49.66           19:02.09           14:22.93           14:48.25
B      5:26.79           17:38.85           12:49.82           14:23.04
C      17:03.23          29:11.56           14:15.85           15:12.59

Table 1. Performance (time, min:sec) of rpiece on a cluster of workstations (CoW).

3 Parallelization Strategies

Because of the nature of ray-tracing, these algorithms can easily be parallelized by dividing the image space into equal blocks. However, since no one can predict the scene complexity per block, which depends on the model itself and the current viewpoint, we now discuss strategies which are general and deliver a good speedup for almost all cases.

3.1 The Standard Interface: MPI

Using standards is beneficial in many respects, since they ensure portability and platform independence. Moreover, standards are well documented and the software is well maintained. PVM was a de-facto standard prior to the definition of MPI. We use MPI (Message Passing Interface) since it is now the standard for communication on parallel architectures and has been widely accepted.

MPI only defines the interface (API); how a specific architecture is handled is left to the implementation. Many implementations of MPI exist. The most popular free versions are MPICH from Argonne National Lab. [10] and LAM, which was developed at the Ohio Supercomputer Center and is currently housed with the Laboratory for Scientific Computing at the University of Notre Dame, Indiana [9]. There are also several commercial implementations, such as the one from SGI, which is especially adapted and optimized to run on their PowerChallenge and Origin shared-memory machines. We use MPICH for both the CoW and the SMP, and additionally SGI's own version. MPICH proved to be easy to install, to support many different architectures and communication devices, and to run stably on all tested platforms.

Another possibility would have been to use shared memory communication directly. However, this is only applicable to SMPs. There is no standard for virtual shared memory that is as accepted and widespread as MPI. Since Radiance is a software package designed to run on nearly any platform, not using a common means of communication would be a drawback. Thus, we refrained from using shared memory calls, although they might have been beneficial for more efficient distribution of the calculated ambient light values and for holding the scene description. The upcoming extension to MPI as defined in the MPI-2 standard seems to address these shortcomings. We look forward to a stable and publicly available implementation of MPI-2.

3.2 Data Decomposition

There are two strategies to implement parallel ray-tracing. One way is to divide the scene into parts, introducing virtual walls [8]. Due to difficulties in load-balancing and the need for very high-bandwidth communication channels, virtual walls do not seem appropriate for the architectures on which we want to run Radiance.

The second strategy is to divide the frame, implementing the manager/worker paradigm [2]. The manager coordinates the execution of all workers and determines how to split the frame. The workers perform the actual rendering and send the results to the manager, which combines the pieces and writes the output frame to disk. Since a global shared memory cannot be assumed on every architecture, every worker must hold its own copy of the scene. This requires much memory and also slows down initialization, since every single worker has to read the octree file from disk.

3.3 Concurrency Control

Since Radiance calculates a picture line by line, the frame is divided into blocks consisting of successive lines. The manager only has to transmit the start and the end line of the block. Most of the time the manager waits for data. To avoid busy-waiting, the manager is suspended for one second each time the test for finished blocks is negative, as illustrated in figure 1. This way the manager needs less than one percent of CPU time, and therefore it is possible to run the manager and one worker on the same processor. Suspending the manager for one second causes nondeterministic behavior that results in slight variations of execution time.
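The polling behavior of the manager can be illustrated with a minimal sketch. It is not the authors' MPI code: Python threads stand in for the MPI worker processes, and the names `run_manager`, `render` and `poll_interval` are hypothetical. Only the idea is taken from the text: hand out (start line, end line) blocks, test for finished blocks, and sleep when the test is negative instead of busy-waiting.

```python
import time
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Callable, Iterable, List, Tuple

Block = Tuple[int, int]  # (start_line, end_line): the only data the manager transmits


def run_manager(blocks: Iterable[Block],
                render: Callable[[Block], str],
                workers: int,
                poll_interval: float = 1.0) -> List[str]:
    """Hand out blocks and sleep between polls instead of busy-waiting.

    Assumption: threads replace the MPI worker processes of the paper.
    """
    queue = list(blocks)
    results: List[str] = []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Start one block per worker (or fewer if there are not enough blocks).
        active: List[Future] = [pool.submit(render, queue.pop(0))
                                for _ in range(min(workers, len(queue)))]
        while active:
            finished = [f for f in active if f.done()]
            if not finished:
                # Test was negative: suspend the manager for poll_interval seconds.
                time.sleep(poll_interval)
                continue
            for f in finished:
                active.remove(f)
                results.append(f.result())
                if queue:
                    # Give the now idle worker the next block.
                    active.append(pool.submit(render, queue.pop(0)))
    return results
```

With the one-second default, a worker may sit idle for up to one second before its result is noticed, which is one plausible source of the slight run-to-run variation mentioned above.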

One feature of Radiance is that it can handle diffuse interreflection. The calculated ambient values must be distributed to every worker to avoid needless calculations. Broadcasting every newly calculated value would cause too much communication ($O(n^2)$), n being the number of workers. To reduce communication traffic, values are only delivered in blocks of 50. This communication must be non-blocking, because these blocks are only received by a worker when it enters the subroutine for calculating diffuse interreflection.
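The batching of ambient values can be sketched as follows. This is an illustration under assumptions, not the paper's implementation: `AmbientBatcher`, `messages_needed` and the `send` callback are hypothetical names, the value type is a placeholder, and the final flush of a partial block is an assumption the text does not address. In a real MPI program the callback would wrap a non-blocking send.

```python
from typing import Callable, List, Tuple

AmbientValue = Tuple[float, float, float]  # hypothetical stand-in for one ambient value


class AmbientBatcher:
    """Collect newly computed ambient values and pass them on in fixed-size blocks."""

    def __init__(self, send: Callable[[List[AmbientValue]], None],
                 block_size: int = 50) -> None:
        self.send = send              # hypothetical callback, e.g. a non-blocking send
        self.block_size = block_size  # 50 values per block, as stated in the text
        self.pending: List[AmbientValue] = []

    def add(self, value: AmbientValue) -> None:
        self.pending.append(value)
        if len(self.pending) >= self.block_size:
            self.flush()

    def flush(self) -> None:
        # Assumption: a partial block is sent when flush() is called explicitly.
        if self.pending:
            self.send(self.pending)
            self.pending = []


def messages_needed(num_values: int, block_size: int) -> int:
    """Number of send calls for num_values values, counting a final partial block."""
    return -(-num_values // block_size)  # ceiling division
```

Sending blocks of 50 instead of single values reduces the number of messages per worker by a factor of up to 50, at the cost of other workers seeing new values slightly later.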

3.4 Load Balancing

The time it takes to calculate a pixel differs for every cast ray, depending on the complexity of the intersections that occur. Therefore load-balancing problems arise when distributing large parts. Radiance uses an advanced ray-tracing algorithm that uses information from neighboring rays to improve speed. Thus, rays are no longer independent: scattering the frame into small parts would solve the load-balancing problem, but would also decrease the profit from the advanced algorithm.

[Figure 1: flowchart of the manager loop.
1. Send equal blocks with BL = EL/(3*N) to all workers; set RL = EL * 2/3.
2. Wait a second.
3. Any task finished? NO: go to 2. YES: continue.
4. New job with BL = RL/(3*(N+1)); RL = RL - BL.
5. RL = 0? NO: go to 2. YES: wait for all jobs, save and exit.
N: number of workers, BL: block length, RL: remaining lines, EL: end line.]

Fig. 1. Dynamic load balancing strategy.

A compromise that meets these two conflicting requirements is to combine dynamic partitioning and dynamic load-balancing as stated in [3]. At the beginning of the calculation, relatively large parts of the frame (see figure 1) are sent to the workers to take advantage of the fast algorithm. The distributed portions of the frame become smaller as the calculation progresses. Near the end, the tasks are small enough to be executed quickly, which yields a good load balance.
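The shrinking block sizes of figure 1 can be reproduced with a short sketch. The two formulas BL = EL/(3*N) and BL = RL/(3*(N+1)) come from the figure; everything else is an assumption made for illustration: the function name `block_schedule`, the use of integer division, the minimum block length of one line (the figure does not say how the loop reaches RL = 0), and computing the remaining lines exactly rather than as EL * 2/3.

```python
from typing import List, Tuple


def block_schedule(end_line: int, workers: int,
                   min_block: int = 1) -> List[Tuple[int, int]]:
    """Return (start_line, block_length) pairs in the order the manager issues them."""
    blocks: List[Tuple[int, int]] = []
    start = 0
    # Initial phase: one equal block per worker, BL = EL / (3 * N).
    first = max(min_block, end_line // (3 * workers))
    for _ in range(workers):
        length = min(first, end_line - start)
        if length <= 0:
            break
        blocks.append((start, length))
        start += length
    # Dynamic phase: BL = RL / (3 * (N + 1)); blocks shrink as RL shrinks.
    remaining = end_line - start  # roughly EL * 2/3
    while remaining > 0:
        length = max(min_block, remaining // (3 * (workers + 1)))
        length = min(length, remaining)
        blocks.append((start, length))
        start += length
        remaining -= length
    return blocks


if __name__ == "__main__":
    # 1352 lines (the height of the hall and townhouse images) on 10 workers.
    schedule = block_schedule(1352, 10)
    print(schedule[:3], "...", schedule[-3:])
```

For 1352 lines and 10 workers, the first ten blocks have 45 lines each, the first dynamically assigned block has 27 lines, and the last blocks consist of single lines.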

4 Computing Platforms

We evaluated performance on computers accessible at our department and at partner institutes. At first, we concentrated on evaluating two different but common architectures.

The first architecture is a cluster of workstations (CoW). It was built at our institute by connecting 11 O2 workstations from SGI (see table 2) via a 10 Mbit Ethernet network. All workstations are equally powerful except machines sg50 and sg51, which are about 10% faster. They are only used for experiments with 10 and 11 computing nodes.

The second platform is a PowerChallenge from SGI containing 20 processors. Each R10000 processor is clocked at 194 MHz and equipped with primary data and instruction caches of 32 Kbytes each and a 2 Mbyte secondary cache (p:I32k+D32k, s:2M). All 20 processors share an 8-way interleaved, global main memory of 2.5 GByte.

Name            CPU clock [MHz]   RAM [Mbyte]   Processor   Cache (primary, secondary)
sg41            150               128           R10000      p:I32k+D32k, s:1M
sg42 ... sg49   180               128           R5000       p:I32k+D32k, s:512k
sg50, sg51      200               128           R5000       p:I32k+D32k, s:1M

Table 2. Workstations used for the cluster experiments at ICG.

5 Test Environment

We selected a set of scenes with completely different requirements for the rendering algorithm and its parallelization: one very simple, unrealistic scene (spheres) with a bad distribution of scene complexity over the image space, an indoor lighting simulation (hall), and an outdoor lighting simulation (townhouse). These 3 scenes come with the Radiance distribution. The most important test scene is the detailed model of an office at our department with a physical description of light sources (bureau). All scenes and the corresponding parameter settings are listed in table 3, and the corresponding pictures are shown in figure 2.

name         octree size   image size        options   comment
hall0        2212157       -x 2000 -y 1352   -ab 0     large hall (indoor simulation)
spheres0     251           -x 1280 -y 960    -ab 0     3 spheres, pure ray-tracing
spheres2     251           -x 1280 -y 960    -ab 2     3 spheres, ambient lighting
bureau0      5221329       -x 512 -y 512     -ab 0     bureau room at ICG
bureau1      5221329       -x 512 -y 512     -ab 1     + ambient lighting
bureau2      5221329       -x 512 -y 512     -ab 2     + increased ambient lighting
townhouse0   3327443       -x 2000 -y 1352   -ab 0     townhouse (outdoor simulation)
townhouse1   3327443       -x 2000 -y 1352   -ab 1     + ambient lighting

Table 3. Scenes used for evaluation of the MPI-parallel version of Radiance.

This test set covers a wide spectrum in size of the octree, complexity of the scene, time required for rendering and visual impression. All scenes were computed with and without diffuse interreflection. Table 4 summarizes the times measured for the sequential version.

Due to the manager/worker paradigm, x processes result in x - 1 workers. Consequently, whenever we mention n processors (nodes), we start n+1 processes, one of which is the manager. Since the manager is idle nearly all the time, we do not count it for the speedup on the SMP. This is acceptable since we know from tests on the CoW, where the manager and one worker are running on the same workstation, that the result is distorted by less than 1%.

[Figure 2: renderings of the four test scenes. A: Hall (clipped). B: 3 spheres. C: ICG room. D: Townhouse.]

Fig. 2. The 4 scenes used for evaluation.

name         time on sg48   time on SMP
hall0        1:19:13.48     33:50.34
spheres0     40.89          19.27
spheres2     8:27:00        3:10.11
bureau0      2:32:55.84     1:14:42.73
bureau1      3:56:03.65     1:54:23.19
bureau2      5:13:30.72     2:29:16.35
townhouse0   5:17.98        2:40.64
townhouse1   9:21.82        3:55.07

Table 4. Execution times of the sequential Radiance for the test scenes.

On very small scenes no big advantage will be seen. However, an (almost) linear speedup should be observed for larger scenes if no diffuse interreflections are to be calculated (-ab 0). Due to heavy all-to-all communication to distribute newly calculated ambient lighting values, performance will decrease on runs with ambient lighting (-ab 2). This effect will mainly influence the performance on the CoW, since network bandwidth is very limited. It should show nearly no effect on the SMP.

6 Results

The times for sequential execution T(1) are already shown in table 4. To compare the platforms and to visualize the performance, we use the speedup $S(n) := \frac{T(1)}{T(n)}$ and the efficiency $\varepsilon(n) := \frac{S(n)}{n}$, where n is the number of processors.

[Figure 3: two plots of performance on the CoW over the number of workers (1 to 11) for the scenes hall0, sphere0, sphere2, bureau0, bureau1, bureau2, town0 and town1. A: Speedup. B: Efficiency.]

Fig. 3. Performance on the cluster of workstations (CoW).

Although one node of the cluster is only half as fast as one SMP processor, all larger scenes show a good parallel performance. Utilizing 11 processors, the time for rendering the bureau scene without ambient lighting decreases from 2:32:55.84 to 15:14.35, giving a speedup of 10. Even more processors could be utilized since the efficiency always exceeds 85%, as shown in figure 3.
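As a worked check of these numbers, the following sketch applies the definitions speedup = T(1)/T(n) and efficiency = speedup/n to the two bureau0 timings quoted above. The helper names `parse_time`, `speedup` and `efficiency` are introduced here for illustration only.

```python
def parse_time(text: str) -> float:
    """Convert 'h:mm:ss.ss', 'mm:ss.ss' or 'ss.ss' to seconds."""
    seconds = 0.0
    for part in text.split(":"):
        seconds = seconds * 60 + float(part)
    return seconds


def speedup(t1: float, tn: float) -> float:
    """Speedup S(n) = T(1) / T(n)."""
    return t1 / tn


def efficiency(t1: float, tn: float, n: int) -> float:
    """Efficiency = S(n) / n."""
    return speedup(t1, tn) / n


t1 = parse_time("2:32:55.84")   # sequential bureau0 run on the CoW node
t11 = parse_time("15:14.35")    # bureau0 on 11 processors
print(f"S(11) = {speedup(t1, t11):.2f}")                # S(11) = 10.04
print(f"efficiency = {efficiency(t1, t11, 11):.2f}")    # efficiency = 0.91
```

The result agrees with the stated speedup of 10 and lies above the 85% efficiency bound mentioned in the text.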

The four smaller scenes illustrate that speedup cannot be increased if the time for parallel execution falls below about one minute. This is mainly caused by the remote shells MPICH has to open in combination with the (slow) 10-Mbit Ethernet network. However, MPICH proved to run stably during all tests. Furthermore, the chosen load-balancing strategy appears to be well suited even for the CoW, since no communication bottlenecks occurred during the all-to-all communication when calculating diffuse interreflection.

When comparing figures 3 and 4, one can see no big difference. The four smaller scenes are rendered a little more efficiently on the SMP, but above 13 workers, effects of the all-to-all communication decrease performance. Changing the communication pattern to a log n broadcast instead of sequentially communicating with every other node would reduce this effect. Improving this communication pattern is a goal for the near future.
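One common way to obtain a broadcast in about log n steps is a binomial tree, in which every node that already holds the data forwards it to one further node per round. The paper does not say which scheme the authors had in mind, so the sketch below is only an illustration of the idea; the function name `binomial_broadcast` is hypothetical. MPI itself offers the collective `MPI_Bcast`, whose internal algorithm is left to the implementation.

```python
from typing import List, Tuple


def binomial_broadcast(num_nodes: int, root: int = 0) -> List[List[Tuple[int, int]]]:
    """Return rounds of (sender, receiver) pairs for a binomial-tree broadcast.

    In each round every node that already holds the data sends to one new node,
    so the number of informed nodes doubles per round.
    """
    rounds: List[List[Tuple[int, int]]] = []
    have = 1  # nodes (numbered relative to the root) that already hold the data
    while have < num_nodes:
        pairs = []
        for i in range(have):
            j = i + have
            if j < num_nodes:
                pairs.append(((root + i) % num_nodes, (root + j) % num_nodes))
        rounds.append(pairs)
        have *= 2
    return rounds


if __name__ == "__main__":
    for workers in (11, 20):
        tree_rounds = len(binomial_broadcast(workers))
        print(f"{workers} workers: {workers - 1} sequential sends "
              f"vs {tree_rounds} tree rounds")
```

For 20 workers this takes 5 rounds instead of 19 consecutive sends from the originating node; the total number of messages stays the same, but they are spread over several senders.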

[Figure 4: two plots of performance with MPICH on the SMP over the number of workers (1 to 20) for the scenes hall0, sphere0, sphere2, bureau0, bureau1, bureau2, town0 and town1. A: Speedup. B: Efficiency.]

Fig. 4. Performance on the PowerChallenge using MPICH.

Most surprisingly, execution time could not be reduced well below two minutes, even when enough processors were available. As we experienced, the sequential overhead of starting the parallel tasks and minor load imbalances prohibit scaling execution time down to seconds even on a shared-memory machine. As on the cluster, MPICH also worked well on this architecture.

[Figure 5: two plots of performance with SGI's native MPI on the SMP over the number of workers (1 to 20) for the scenes hall0, sphere0, sphere2, bureau0, bureau1, bureau2, town0 and town1. A: Speedup. B: Efficiency.]

Fig. 5. Performance on the PowerChallenge using native MPI.

Initially we expected the native MPI version of SGI to be much faster than MPICH. However, as figure 5 illustrates, only a few scenes could be rendered using an arbitrary number of processors. The number of open communication channels seems to be the problem. Moreover, we could find no advantage regarding performance when using native MPI.

7 Conclusion and Future Work

Our main goal was to find a parallelized version of the Radiance Synthetic Imaging System for scenes with medium to high complexity to close the gap between the rview and rpict programs. Another prerequisite was the platform independence of the overall system. In section 6 we show that our implementation works well for most of the selected scenes running on an SMP and also on a CoW. The nearly linear speedup indicates good load balancing for these cases. MPI helps us to remain platform independent, but this was only tested for SGI CoWs and SMPs. Testing on other platforms will be a topic for future work, as will improving data I/O during the initialization phase. Broadcasting the octree over the network will probably eliminate the NFS bottleneck during the scene reading phase of all workers. Another important point for further investigation is to evaluate the time consumed by the diffuse interreflection calculation compared to the communication traffic. We expect that these investigations will lead to a more sophisticated load balancing.

Acknowledgments

This work is financed by the Austrian Research Funds "Fonds zur Förderung der wissenschaftlichen Forschung" (FWF), Project 7001, Task 1.4 "Mathematical and Algorithmic Tools for Digital Image Processing". We also thank the Research Institute for Software Technology (RIST++), University of Salzburg, for allowing us to run our code on their 20-node PowerChallenge.

References

1. Watt A. and Watt M. Advanced Animation and Rendering Techniques, Theory and Practice. ACM Press New York, Addison-Wesley, 1992.

2. Zomaya A. Y. H., editor. Parallel and Distributed Computing Handbook, chapter 9, Partitioning and Scheduling. McGraw-Hill, 1996.

3. Pandzic I.-S. and Magnenat-Thalmann N. Parallel raytracing on the IBM SP2 and T3D. In Supercomputing Review, volume 7, November 1995.

4. Ward G. J. Parallel rendering on the ICSD SPARC-10s. Radiance Reference Notes, http://radsite.lbl.gov/radiance/refer/Notes/parallel.html.

5. Ward G. J. A ray tracing solution for diffuse interreflection. In Computer Graphics (SIGGRAPH '88 Proceedings), volume 22, pages 85-92, August 1988.

6. Ward G. J. The radiance lighting simulation and rendering system. In Computer Graphics (SIGGRAPH '94 Proceedings), volume 28, pages 459-472, July 1994.

7. Karner K. Assessing the Realism of Local and Global Illumination Models. PhD thesis, Computer Graphics and Vision (ICG), Graz University of Technology, 1996.

8. Menzel K. Parallel Rendering Techniques for Multiprocessor Systems. In Proceedings of the Spring School on Computer Graphics (SSCG '94), pages 91-103. Comenius University Press, 1994.

9. LAM/MPI parallel computing. Home page of LAM - Local Area Multicomputer: http://www.lsc.nd.edu/lam/.

10. MPICH - A portable implementation of MPI. Home page of MPICH: http://www.mcs.anl.gov/mpi/mpich/.

11. Kajiya J. T. The rendering equation. In Computer Graphics (SIGGRAPH '86 Proceedings), volume 20, pages 143-150, August 1986.
