Blocking of Index

Uploaded by Nerissa de Leon

Index

- Enables efficient retrieval of a record given the value of certain attributes (the indexing attributes).

Indexing

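- Is a way of sorting a number of records on multiple fields. Creating an index on a field in a table creates another data structure which holds the field value and a pointer to the record it relates to. This index structure is kept sorted, which is what allows binary searches to be performed on it.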
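The idea of "field value plus pointer" can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the table is an in-memory list, the list position stands in for the record pointer, and the names `build_index` and `lookup` are hypothetical helpers, not part of any database API.

```python
from bisect import bisect_left

# Hypothetical table: the list position of each row stands in for its record pointer.
table = [
    {"id": 1, "firstName": "Maria"},
    {"id": 2, "firstName": "Jose"},
    {"id": 3, "firstName": "Ana"},
    {"id": 4, "firstName": "Jose"},
]

def build_index(rows, field):
    """Return (field value, pointer) pairs sorted by field value."""
    return sorted((row[field], pointer) for pointer, row in enumerate(rows))

def lookup(index, rows, value):
    """Binary-search the sorted index, then follow each matching pointer."""
    pos = bisect_left(index, (value,))  # first entry whose value is >= the search value
    matches = []
    while pos < len(index) and index[pos][0] == value:
        matches.append(rows[index[pos][1]])
        pos += 1
    return matches

first_name_index = build_index(table, "firstName")
jose_ids = [row["id"] for row in lookup(first_name_index, table, "Jose")]
print(jose_ids)
```

The table itself stays in insertion order; only the much smaller index is sorted, so the search never has to scan the full records.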

Disadvantage of Index

The downside to indexing is that indexes require additional space on disk. Since all of a table's indexes are stored together in one file when using the MyISAM engine, this file can quickly reach the size limits of the underlying file system if many fields within the same table are indexed. The more indexes a table has, the slower insert, update and delete operations become, because every index must be updated along with the table.
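A rough way to see where the extra space and the extra work come from is to count how many index entries a single insert has to write. The sketch below is a toy model, not how MyISAM is implemented: `IndexedTable` and its `index_writes` counter are hypothetical names introduced only for illustration.

```python
from bisect import insort

class IndexedTable:
    """Toy table that keeps one sorted (value, pointer) list per indexed field."""

    def __init__(self, indexed_fields):
        self.rows = []
        self.indexes = {field: [] for field in indexed_fields}
        self.index_writes = 0  # counts index entries written so far

    def insert(self, row):
        pointer = len(self.rows)
        self.rows.append(row)
        # Every index on the table needs its own new entry for this row.
        for field, index in self.indexes.items():
            insort(index, (row[field], pointer))
            self.index_writes += 1
        return pointer

people = IndexedTable(["firstName", "lastName", "emailAddress"])
people.insert({"firstName": "Ana", "lastName": "Cruz", "emailAddress": "ana@example.com"})
people.insert({"firstName": "Jose", "lastName": "Reyes", "emailAddress": "jose@example.com"})
print(people.index_writes)
```

With three indexed fields, two inserted rows produce six index entries in addition to the two rows themselves.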

How does it work?

First, let's outline a sample database table schema:

Field name         Data type      Size on disk
id (Primary key)   Unsigned INT   4 bytes
firstName          Char(50)       50 bytes
lastName           Char(50)       50 bytes
emailAddress       Char(100)      100 bytes

Note: char was used in place of varchar to allow for an accurate size-on-disk value. This sample database contains five million rows and is unindexed. The performance of two queries will now be analyzed: one using the id (a sorted key field) and one using the firstName (a non-key, unsorted field).
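Summing the field sizes in the schema gives the fixed length of one record. As a quick check, the layout can be mirrored with Python's `struct` module; the format string is an assumption chosen to match the schema (no alignment padding, fixed-width byte fields) and says nothing about how MyISAM actually lays out rows.

```python
import struct

# "<" turns off alignment padding; I is a 4-byte unsigned int, Ns an N-byte fixed field.
RECORD_FORMAT = "<I50s50s100s"  # id, firstName, lastName, emailAddress

record_length = struct.calcsize(RECORD_FORMAT)
packed = struct.pack(RECORD_FORMAT, 1, b"Ana", b"Cruz", b"ana@example.com")

print(record_length)
```

Short values are padded to the full field width, which is why every record occupies the same 4 + 50 + 50 + 100 bytes.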

Example 1

Our sample database holds r = 5,000,000 fixed-size records, each with a record length of R = 204 bytes, stored in a table using the MyISAM engine with the default block size B = 1,024 bytes. The blocking factor of the table is bfr = floor(B/R) = floor(1024/204) = 5 records per disk block. The total number of blocks required to hold the table is N = ceil(r/bfr) = 5000000/5 = 1,000,000 blocks.
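The arithmetic of Example 1 can be reproduced directly. The function names below are hypothetical; the sketch assumes that records are not split across blocks, which is why the blocking factor is rounded down and the block count rounded up.

```python
def blocking_factor(block_size, record_length):
    """Whole records that fit in one block (a partial record does not count)."""
    return block_size // record_length

def blocks_needed(record_count, bfr):
    """Blocks required to hold all records, rounding up for a partly filled last block."""
    return (record_count + bfr - 1) // bfr

B = 1024        # block size in bytes
R = 204         # record length in bytes
r = 5_000_000   # number of records

table_bfr = blocking_factor(B, R)
table_blocks = blocks_needed(r, table_bfr)
print(table_bfr, table_blocks)
```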

A linear search on the id field would require an average of N/2 = 500,000 block accesses to find a value, given that the id field is a key field. But since the id field is also sorted, a binary search can be conducted, requiring about log2(1,000,000) = 19.93, rounded up to 20, block accesses. Instantly we can see this is a drastic improvement.

The firstName field, however, is neither sorted nor unique. A binary search is therefore impossible, and because further matches may occur anywhere, the table must be searched to the end, for exactly N = 1,000,000 block accesses. It is this situation that indexing aims to correct.
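The three access counts discussed above can be put side by side. This is a back-of-the-envelope model only: it counts block reads and ignores caching, and the variable names are illustrative.

```python
import math

N = 1_000_000  # blocks in the unindexed table (from Example 1)

# Unique key, unsorted scan: on average the match is found halfway through.
linear_key_average = N // 2

# Unique key, sorted: binary search halves the remaining blocks at each step.
binary_sorted = math.ceil(math.log2(N))

# Non-unique, unsorted field: every block must be read to find all matches.
full_scan = N

print(linear_key_average, binary_sorted, full_scan)
```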

Given that an index record contains only the indexed field and a pointer to the original record, it stands to reason that it will be smaller than the multi-field record that it points to. So the index itself requires fewer disk blocks than the original table, and therefore fewer block accesses to iterate through. The schema for an index on the firstName field is outlined below:

Field name         Data type      Size on disk
firstName          Char(50)       50 bytes
(record pointer)   Special        4 bytes

Note: Pointers in MySQL are 2, 3, 4 or 5 bytes in length depending on the size of the

table.

Example 2

Our sample database holds r = 5,000,000 records; the index has a record length of R = 54 bytes and uses the default block size B = 1,024 bytes. The blocking factor of the index is bfr = floor(B/R) = floor(1024/54) = 18 records per disk block. The total number of blocks required to hold the index is N = ceil(r/bfr) = ceil(5000000/18) = 277,778 blocks.

Now a search using the firstName field can utilise the index to increase performance. Because the index is sorted, it can be binary searched with about log2(277,778) = 18.08, rounded up to 19, block accesses to find the address of the actual record. Reading that record requires one further block access, bringing the total to 19 + 1 = 20 block accesses, a far cry from the 1,000,000 block accesses required to find a firstName match in the non-indexed table.
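The figures of Example 2 can be checked the same way. The sketch assumes a 50-byte firstName value plus a 4-byte pointer per index entry and a single matching record; since firstName is not unique, each additional match would cost further block accesses that this simple model does not count.

```python
import math

BLOCK_SIZE = 1024             # bytes per block
RECORD_COUNT = 5_000_000      # rows in the table, one index entry per row
INDEX_RECORD_LENGTH = 50 + 4  # firstName value + record pointer, in bytes
TABLE_BLOCKS = 1_000_000      # blocks in the unindexed table (from Example 1)

index_bfr = BLOCK_SIZE // INDEX_RECORD_LENGTH        # index entries per block
index_blocks = -(-RECORD_COUNT // index_bfr)         # ceiling division
index_search = math.ceil(math.log2(index_blocks))    # binary search over the index
indexed_lookup = index_search + 1                    # plus one read of the record itself

print(index_bfr, index_blocks, indexed_lookup, TABLE_BLOCKS // indexed_lookup)
```

The last printed value is the approximate factor by which the indexed lookup reduces block accesses compared with scanning the whole table.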

When should it be used?

Given that creating an index requires additional disk space (277,778 blocks extra from the above example), and that too many indexes can cause issues arising from the file system's size limits, careful thought must be used to select the correct fields to index.

Since indexes are only used to speed up the search for a matching field within the records, it stands to reason that indexing fields used only for output would simply be a waste of disk space, and of processing time during insert or delete operations, and thus should be avoided. Also, given the nature of a binary search, the cardinality (uniqueness) of the data is important. For the five-million-row sample table, indexing a field with a cardinality of 2 would split the data in half, whereas a cardinality of 1,000 would return approximately 5,000 records per value. With such a low cardinality the effectiveness is reduced to that of a linear scan, and the query optimizer will generally avoid using the index if the cardinality is less than about 30% of the record count, effectively making the index a waste of space.
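The effect of cardinality can be estimated with one division. The sketch assumes the values are evenly distributed across the records, which real data rarely is; `expected_matches` is a hypothetical helper name.

```python
def expected_matches(record_count, cardinality):
    """Average number of records sharing one value, assuming a uniform distribution."""
    return record_count / cardinality

r = 5_000_000  # records in the sample table

for cardinality in (2, 1_000, r):
    matches = expected_matches(r, cardinality)
    print(f"cardinality {cardinality:>9,}: about {matches:,.0f} records per value")
```

A unique field (cardinality equal to the record count) narrows the search to a single record, while a two-valued field still leaves half the table to read after the index has been consulted.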

Das könnte Ihnen auch gefallen

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (588)

- English Summative Test No 3 MELCDokument3 SeitenEnglish Summative Test No 3 MELCNerissa de Leon100% (1)

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

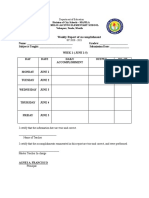

- Emilio Jacinto Elementary School: Velasquez, Tondo, ManilaDokument2 SeitenEmilio Jacinto Elementary School: Velasquez, Tondo, ManilaNerissa de LeonNoch keine Bewertungen

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5795)

- English Summative Test No 2 MELC 1Dokument2 SeitenEnglish Summative Test No 2 MELC 1Nerissa de LeonNoch keine Bewertungen

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- English Summative Test No 1 MELCDokument3 SeitenEnglish Summative Test No 1 MELCNerissa de Leon100% (1)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (895)

- ROD REYES LAC Form-4 Module 1Dokument3 SeitenROD REYES LAC Form-4 Module 1Rodrigo Reyes88% (8)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (345)

- Day 1: Day 2: Day 3: Day 4: Day 5:: 1.1 Identify CAD Software Features According To The Software ProviderDokument4 SeitenDay 1: Day 2: Day 3: Day 4: Day 5:: 1.1 Identify CAD Software Features According To The Software ProviderNerissa de LeonNoch keine Bewertungen

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- Describe The Appearance and Uses Uniform and Non-Uniform Mixtures (S6MT-Ia-c-1)Dokument4 SeitenDescribe The Appearance and Uses Uniform and Non-Uniform Mixtures (S6MT-Ia-c-1)Nerissa de LeonNoch keine Bewertungen

- Teachers Weekly WorkplanDokument2 SeitenTeachers Weekly WorkplanNerissa de LeonNoch keine Bewertungen

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (400)

- English No. 1Dokument3 SeitenEnglish No. 1Nerissa de LeonNoch keine Bewertungen

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- Links QnADokument3 SeitenLinks QnANerissa de LeonNoch keine Bewertungen

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- Mapping Functions:: Field A Mapping Field C Date: Mm/dd/yyDokument2 SeitenMapping Functions:: Field A Mapping Field C Date: Mm/dd/yyNerissa de LeonNoch keine Bewertungen

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (74)

- DepEd Mission, VisionDokument2 SeitenDepEd Mission, VisionNerissa de Leon100% (4)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- Emilio Jacinto Elementary School: Division of City Schools Velasquez, Tondo, ManilaDokument2 SeitenEmilio Jacinto Elementary School: Division of City Schools Velasquez, Tondo, ManilaNerissa de LeonNoch keine Bewertungen

- Requirement Gathering ProcessDokument28 SeitenRequirement Gathering Processsourabhcs76100% (3)

- 5.2 Cp0201, Cp0291, Cp0292: X20 Module - Compact Cpus - Cp0201, Cp0291, Cp0292Dokument9 Seiten5.2 Cp0201, Cp0291, Cp0292: X20 Module - Compact Cpus - Cp0201, Cp0291, Cp0292calripkenNoch keine Bewertungen

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (266)

- Concurrent Managers Inactive - No Manager.R12.1.3Dokument161 SeitenConcurrent Managers Inactive - No Manager.R12.1.3arun k100% (1)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- En Data Sheet 1258Dokument2 SeitenEn Data Sheet 1258Fakhri GhrairiNoch keine Bewertungen

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- JavaScript Multiple Choice Questions and AnswersDokument10 SeitenJavaScript Multiple Choice Questions and AnswersMargerie FrueldaNoch keine Bewertungen

- Proxy KampretDokument4 SeitenProxy KampretEdi SukriansyahNoch keine Bewertungen

- Enterprise Application Development - SyllabusDokument3 SeitenEnterprise Application Development - SyllabusKundan KumarNoch keine Bewertungen

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2259)

- ADS4020Dokument2 SeitenADS4020diegoh_silva0% (1)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1091)

- Quick Start Guide: Ibm Infosphere Data ArchitectDokument2 SeitenQuick Start Guide: Ibm Infosphere Data ArchitectSultanat RukhsarNoch keine Bewertungen

- Table of Specification Final Exam Elective 2 Database Management System 1st Semester, SY 2019-2020Dokument6 SeitenTable of Specification Final Exam Elective 2 Database Management System 1st Semester, SY 2019-2020Jeraldine RamisoNoch keine Bewertungen

- Itp 4Dokument28 SeitenItp 4Sushil PantNoch keine Bewertungen

- Pic16f8x PDFDokument126 SeitenPic16f8x PDFGilberto MataNoch keine Bewertungen

- Boot Screen Converter enDokument2 SeitenBoot Screen Converter enGary Paul HvNoch keine Bewertungen

- SFCF - Book EbookDokument232 SeitenSFCF - Book EbookabcdcattigerNoch keine Bewertungen

- What Does PLC' Mean?: Disadvantages of PLC ControlDokument2 SeitenWhat Does PLC' Mean?: Disadvantages of PLC Controlmanjunair005Noch keine Bewertungen

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (121)

- K85006-0068 - FireWorks Incident Management PlatformDokument4 SeitenK85006-0068 - FireWorks Incident Management PlatformOmarNovoaNoch keine Bewertungen

- PeopleSoft Technical - Interview QuestionsDokument33 SeitenPeopleSoft Technical - Interview QuestionsSiji SurendranNoch keine Bewertungen

- How To Convert Degrees - Minutes - Seconds Angles To or From Decimal AnglesDokument5 SeitenHow To Convert Degrees - Minutes - Seconds Angles To or From Decimal AnglesMihaiMereNoch keine Bewertungen

- Working With DrillholesDokument87 SeitenWorking With DrillholesTessfaye Wolde Gebretsadik100% (1)

- Oracle RAC DBA Training :DBA TechnologiesDokument29 SeitenOracle RAC DBA Training :DBA TechnologiesDBA Technologies0% (1)

- Kuhn Tucker ConditionsDokument15 SeitenKuhn Tucker ConditionsBarathNoch keine Bewertungen

- Cplus FaqDokument287 SeitenCplus FaqGangadhar Reddy TavvaNoch keine Bewertungen

- EmployeeDokument21 SeitenEmployeeeyobNoch keine Bewertungen

- LFD259 Labs - V2019 01 14Dokument86 SeitenLFD259 Labs - V2019 01 14Bill Ho100% (2)

- Using The DS Storage Manager (DSSM) Simulator To Demonstrate or Configure Storage On The DS3000 and DS5000 Storage Subsystems (sCR53)Dokument33 SeitenUsing The DS Storage Manager (DSSM) Simulator To Demonstrate or Configure Storage On The DS3000 and DS5000 Storage Subsystems (sCR53)Niaz AliNoch keine Bewertungen

- StormMQ WhitePaper - A Comparison of AMQP and MQTTDokument6 SeitenStormMQ WhitePaper - A Comparison of AMQP and MQTTMarko MestrovicNoch keine Bewertungen

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

- TCS Sample QuestionsDokument5 SeitenTCS Sample QuestionsAshokNoch keine Bewertungen

- Symmetrix BIN FilesDokument2 SeitenSymmetrix BIN FilesSrinivas GollanapalliNoch keine Bewertungen

- Lect09 Solver LU SparseDokument60 SeitenLect09 Solver LU SparseTeto ScheduleNoch keine Bewertungen

- Python Cheat Sheet (2009) PDFDokument1 SeitePython Cheat Sheet (2009) PDFJulia fernandezNoch keine Bewertungen

- Optimizing DAX: Improving DAX performance in Microsoft Power BI and Analysis ServicesVon EverandOptimizing DAX: Improving DAX performance in Microsoft Power BI and Analysis ServicesNoch keine Bewertungen