
2.ADV - Math EigenValues

Uploaded by ShawonChowdhury


Chapter 2. Matrix Eigenvalue Problems

Copyright The McGraw-Hill Companies, Inc. Permission required for reproduction or display.

Chapter 2

Linear Algebra: Matrix Eigenvalue Problems

Fall 2014

HeeChang LIM Ph.D.

Engineering Building 9. (213)

Tel. 051-510-2302

Reference:

1. Hildebrand, F. B. (1965), Methods of Applied Mathematics, Prentice-Hall: Englewood Cliffs, New Jersey.

2. Kreyszig, E. (2006), Advanced Engineering Mathematics, Wiley.


- From the viewpoint of engineering applications, eigenvalue problems are among the most important problems in connection with matrices. We begin by defining the basic concepts and then show how to solve these problems.

- Let $\mathbf{A} = [a_{jk}]$ be a given $n \times n$ matrix and consider the vector equation

$$\mathbf{A}\mathbf{x} = \lambda\mathbf{x}. \qquad (1)$$

Here $\mathbf{x}$ is an unknown vector and $\lambda$ an unknown scalar. Our task is to determine the vectors $\mathbf{x}$ and scalars $\lambda$ that satisfy (1).

- Geometrically, we are looking for vectors $\mathbf{x}$ for which multiplication by $\mathbf{A}$ has the same effect as multiplication by a scalar $\lambda$; in other words, $\mathbf{A}\mathbf{x}$ should be proportional to $\mathbf{x}$.

- Clearly, the zero vector $\mathbf{x} = \mathbf{0}$ is a solution of (1) for any value of $\lambda$, because $\mathbf{A}\mathbf{0} = \mathbf{0}$. This is of no interest. A value of $\lambda$ for which (1) has a solution $\mathbf{x} \neq \mathbf{0}$ is called an eigenvalue or characteristic value (or latent root) of the matrix $\mathbf{A}$. The corresponding solutions $\mathbf{x} \neq \mathbf{0}$ of (1) are called the eigenvectors of $\mathbf{A}$ corresponding to that eigenvalue $\lambda$.
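Definition (1) can be checked directly: a nonzero $\mathbf{x}$ is an eigenvector exactly when every component of $\mathbf{A}\mathbf{x}$ equals the same multiple $\lambda$ of the corresponding component of $\mathbf{x}$. The following is a minimal Python sketch under stated assumptions: the helper names `mat_vec` and `eigenvalue_for` are hypothetical, and the 2 × 2 matrix is an illustrative choice, not one taken from the slides.

```python
from fractions import Fraction

def mat_vec(A, x):
    """Return the product Ax for a square matrix A given as a list of rows."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def eigenvalue_for(A, x):
    """Return lambda if Ax = lambda*x with x != 0, otherwise None.

    Fraction gives exact arithmetic, so no floating-point tolerance is needed.
    """
    x = [Fraction(v) for v in x]
    if all(v == 0 for v in x):
        return None  # x = 0 solves (1) for every lambda, so it is excluded
    Ax = mat_vec(A, x)
    k = next(i for i, v in enumerate(x) if v != 0)  # index of a nonzero component
    lam = Ax[k] / x[k]                              # the only candidate lambda
    # x is an eigenvector only if (Ax)_j = lambda * x_j holds for every j
    if all(Ax[j] == lam * x[j] for j in range(len(x))):
        return lam
    return None

A = [[-5, 2], [2, -2]]             # illustrative matrix (assumption)
print(eigenvalue_for(A, [1, 2]))   # -1: Ax = (-1, -2) = -1 * x
print(eigenvalue_for(A, [1, 0]))   # None: Ax = (-5, 2) is not proportional to x
print(eigenvalue_for(A, [0, 0]))   # None: the zero vector is excluded
```

Reading $\lambda$ off a single nonzero component and then checking all the others is enough, because (1) must hold componentwise with one common $\lambda$.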


How to Find Eigenvalues and Eigenvectors

- The problem of determining the eigenvalues and eigenvectors of a matrix is called an eigenvalue problem.

- Such problems occur in physical, technical, geometric, and other applications.

Example 1


Generalization of this procedure

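- Written in components, Eq. (1) is

$$\begin{aligned}
a_{11}x_1 + a_{12}x_2 + \cdots + a_{1n}x_n &= \lambda x_1 \\
a_{21}x_1 + a_{22}x_2 + \cdots + a_{2n}x_n &= \lambda x_2 \\
&\;\;\vdots \\
a_{n1}x_1 + a_{n2}x_2 + \cdots + a_{nn}x_n &= \lambda x_n.
\end{aligned}$$

Transferring the terms on the right side to the left side gives the homogeneous system $(\mathbf{A} - \lambda\mathbf{I})\mathbf{x} = \mathbf{0}$.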


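- By Cramer's theorem, this homogeneous linear system of equations has a nontrivial solution if and only if the corresponding determinant of the coefficients is zero:

$$D(\lambda) = \det(\mathbf{A} - \lambda\mathbf{I}) = 0.$$

$D(\lambda)$ is called the characteristic determinant, and $D(\lambda) = 0$ the characteristic equation of $\mathbf{A}$.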
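For a 2 × 2 matrix the characteristic determinant can be expanded by hand, so the whole procedure (solve $D(\lambda) = 0$, then solve $(\mathbf{A} - \lambda\mathbf{I})\mathbf{x} = \mathbf{0}$) fits in a few lines. A minimal Python sketch, assuming the 2 × 2 case only; the helper names and the sample matrix are illustrative. Because $D(\lambda) = 0$, the two rows of $\mathbf{A} - \lambda\mathbf{I}$ are linearly dependent, so a vector that annihilates one nonzero row also annihilates the other. For larger $n$ one would normally call a numerical routine such as `numpy.linalg.eig` rather than use a closed formula.

```python
import cmath
import math

def eigenvalues_2x2(A):
    """Roots of the characteristic equation det(A - lambda*I) = 0 for a 2x2 matrix.

    For A = [[a, b], [c, d]] the determinant expands to
    (a - lambda)(d - lambda) - bc = lambda**2 - (a + d)*lambda + (ad - bc).
    """
    (a, b), (c, d) = A
    tr, det = a + d, a * d - b * c
    disc = tr * tr - 4 * det
    # real square root for two real roots, complex square root for a conjugate pair
    root = math.sqrt(disc) if disc >= 0 else cmath.sqrt(disc)
    return (tr + root) / 2, (tr - root) / 2

def eigenvector_2x2(A, lam):
    """A nonzero solution x of the homogeneous system (A - lambda*I)x = 0."""
    (a, b), (c, d) = A
    if b != 0:
        return (b, lam - a)   # makes row 1, (a - lam)x1 + b*x2, vanish
    if c != 0:
        return (lam - d, c)   # makes row 2, c*x1 + (d - lam)x2, vanish
    # b = c = 0: A is diagonal, so a unit vector is an eigenvector
    return (1, 0) if lam == a else (0, 1)

A = [[-5, 2], [2, -2]]        # illustrative matrix (assumption)
for lam in eigenvalues_2x2(A):
    print(lam, eigenvector_2x2(A, lam))
# prints "-1.0 (2, 4.0)" and then "-6.0 (2, -1.0)"
```

Any nonzero multiple of a returned vector is also an eigenvector, so $(2, 4)$ and $(1, 2)$ describe the same eigenvector direction for $\lambda = -1$.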

Theorem 1


Theorem 2

Proof


Example 2


Example 1


Example 2


Symmetric, Skew-Symmetric and Orthogonal Matrices

Definitions


Example 1

Example 2


An orthogonal matrix is a square matrix with real entries whose columns and rows are orthogonal unit vectors (i.e., orthonormal vectors), that is,

$$\mathbf{Q}^{\mathsf T}\mathbf{Q} = \mathbf{Q}\mathbf{Q}^{\mathsf T} = \mathbf{I},$$

where $\mathbf{I}$ is the identity matrix.

This leads to the equivalent characterization: a matrix $\mathbf{Q}$ is orthogonal if and only if its transpose is equal to its inverse:

$$\mathbf{Q}^{\mathsf T} = \mathbf{Q}^{-1}.$$

An orthogonal matrix is necessarily invertible (with inverse $\mathbf{Q}^{-1} = \mathbf{Q}^{\mathsf T}$), unitary ($\mathbf{Q}^{-1} = \mathbf{Q}^{*}$, where $\mathbf{Q}^{*}$ is the conjugate transpose) and therefore normal ($\mathbf{Q}^{*}\mathbf{Q} = \mathbf{Q}\mathbf{Q}^{*}$) over the reals. The determinant of any orthogonal matrix is either $+1$ or $-1$. As a linear transformation, an orthogonal matrix preserves the dot product of vectors and therefore acts as an isometry of Euclidean space, such as a rotation or reflection. In other words, it is a unitary transformation.

Orthogonal matrix: $\mathbf{Q}^{\mathsf T} = \mathbf{Q}^{-1}$
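The defining property $\mathbf{Q}^{\mathsf T}\mathbf{Q} = \mathbf{I}$, the determinant $\pm 1$, and the preservation of dot products can all be checked numerically. A minimal pure-Python sketch, assuming floating-point entries compared against a tolerance `tol` of our choosing; the rotation angle, the reflection, the shear used as a non-example, and all function names are illustrative assumptions.

```python
import math

def transpose(M):
    return [list(col) for col in zip(*M)]

def matmul(A, B):
    """Matrix product AB for matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def is_orthogonal(Q, tol=1e-12):
    """True if Q^T Q = I up to tol, i.e. the columns of Q are orthonormal."""
    n = len(Q)
    QtQ = matmul(transpose(Q), Q)
    return all(abs(QtQ[i][j] - (1.0 if i == j else 0.0)) <= tol
               for i in range(n) for j in range(n))

def det2(M):
    """Determinant of a 2x2 matrix."""
    (a, b), (c, d) = M
    return a * d - b * c

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def transform(M, x):
    """The linear transformation y = Mx."""
    return [dot(row, x) for row in M]

theta = math.pi / 6
R = [[math.cos(theta), -math.sin(theta)],   # rotation by 30 degrees
     [math.sin(theta),  math.cos(theta)]]
F = [[1, 0], [0, -1]]                       # reflection in the x1-axis
u, v = [1, 2], [3, -1]

print(is_orthogonal(R), is_orthogonal(F), is_orthogonal([[1, 1], [0, 1]]))
print(round(det2(R), 12), det2(F))          # +1 for the rotation, -1 for the reflection
print(round(dot(transform(R, u), transform(R, v)), 12), dot(u, v))  # dot product preserved
```

The shear `[[1, 1], [0, 1]]` has determinant 1 but is not orthogonal, which shows that $\det = \pm 1$ is necessary but not sufficient.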


Theorem 1

Example 3


Orthogonal Transformations and Orthogonal Matrices


Theorem 2

Theorem 3

Theorem 4


Theorem 5

Example 5


Eigenbases. Diagonalization. Quadratic Forms

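So far we have emphasized properties of eigenvalues. We now turn to general properties of eigenvectors. Eigenvectors of an $n \times n$ matrix $\mathbf{A}$ may (or may not!) form a basis for $R^n$. An eigenbasis (basis of eigenvectors), if it exists, is of great advantage, because then we can represent any $\mathbf{x}$ in $R^n$ uniquely as a linear combination of the eigenvectors $\mathbf{x}_1, \dots, \mathbf{x}_n$, say,

$$\mathbf{x} = c_1\mathbf{x}_1 + c_2\mathbf{x}_2 + \cdots + c_n\mathbf{x}_n.$$

And, denoting the corresponding (not necessarily distinct) eigenvalues of the matrix $\mathbf{A}$ by $\lambda_1, \dots, \lambda_n$, we have $\mathbf{A}\mathbf{x}_j = \lambda_j\mathbf{x}_j$, so that for $\mathbf{y} = \mathbf{A}\mathbf{x}$ we simply obtain

$$\mathbf{y} = \mathbf{A}\mathbf{x} = \mathbf{A}(c_1\mathbf{x}_1 + \cdots + c_n\mathbf{x}_n) = c_1\mathbf{A}\mathbf{x}_1 + \cdots + c_n\mathbf{A}\mathbf{x}_n = c_1\lambda_1\mathbf{x}_1 + \cdots + c_n\lambda_n\mathbf{x}_n.$$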
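The advantage of an eigenbasis can be seen concretely: once $\mathbf{x}$ is expanded in eigenvectors, applying $\mathbf{A}$ only rescales each coefficient by its eigenvalue. A minimal Python sketch, assuming a 2 × 2 matrix whose eigenpairs are already known (the matrix, its eigenpairs, and the helper names are illustrative assumptions); the coefficients $c_1, c_2$ are found by Cramer's rule and the result is compared with the direct product $\mathbf{A}\mathbf{x}$.

```python
from fractions import Fraction

A = [[-5, 2], [2, -2]]                 # illustrative matrix (assumption)
pairs = [(-1, (1, 2)), (-6, (2, -1))]  # its (eigenvalue, eigenvector) pairs

def mat_vec(M, x):
    return [sum(m * xi for m, xi in zip(row, x)) for row in M]

def coefficients(x, v1, v2):
    """Solve x = c1*v1 + c2*v2 for (c1, c2) by Cramer's rule (2x2 case)."""
    D = v1[0] * v2[1] - v2[0] * v1[1]   # nonzero because v1, v2 form a basis
    c1 = Fraction(x[0] * v2[1] - v2[0] * x[1], D)
    c2 = Fraction(v1[0] * x[1] - x[0] * v1[1], D)
    return c1, c2

def apply_via_eigenbasis(x):
    """Compute Ax as c1*lambda1*x1 + c2*lambda2*x2, without multiplying by A."""
    (l1, v1), (l2, v2) = pairs
    c1, c2 = coefficients(x, v1, v2)
    return [c1 * l1 * p + c2 * l2 * q for p, q in zip(v1, v2)]

x = [3, 1]
print(coefficients(x, pairs[0][1], pairs[1][1]))  # x = 1*x1 + 1*x2
print(apply_via_eigenbasis(x) == mat_vec(A, x))   # both give (-13, 4)
```

The same expansion makes repeated application cheap: $\mathbf{A}^k\mathbf{x}$ only needs the powers $\lambda_j^k$.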

Now if the eigenvalues are all different, we do obtain a basis:


Theorem 1

Example 1

Example 2

Theorem 2


Diagonalization of Matrices

Definition

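Eigenbases also play a role in reducing a matrix $\mathbf{A}$ to a diagonal matrix whose entries are the eigenvalues of $\mathbf{A}$. This is done by a similarity transformation $\hat{\mathbf{A}} = \mathbf{P}^{-1}\mathbf{A}\mathbf{P}$, with $\mathbf{P}$ a nonsingular $n \times n$ matrix. The key property of this transformation is that it preserves the eigenvalues of $\mathbf{A}$: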

Theorem 3

Proof
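Both ideas, the invariance of the eigenvalues under $\hat{\mathbf{A}} = \mathbf{P}^{-1}\mathbf{A}\mathbf{P}$ and the diagonal matrix obtained when the columns of $\mathbf{P}$ are eigenvectors, can be checked on a small example. A minimal sketch for the 2 × 2 case, assuming exact `Fraction` arithmetic; the matrices `A`, `P`, `X` and all helper names are illustrative, not from the slides. For a 2 × 2 matrix the trace and determinant fix the characteristic polynomial, so equal (trace, det) pairs mean equal eigenvalues.

```python
from fractions import Fraction

def matmul(A, B):
    """Matrix product AB for matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def inverse_2x2(P):
    """Inverse of a nonsingular 2x2 matrix, in exact Fraction arithmetic."""
    (a, b), (c, d) = P
    det = Fraction(a * d - b * c)
    if det == 0:
        raise ValueError("P must be nonsingular")
    return [[d / det, -b / det], [-c / det, a / det]]

def similar(A, P):
    """Return P^{-1} A P, a matrix similar to A."""
    return matmul(matmul(inverse_2x2(P), A), P)

def char_coeffs(M):
    """(trace, det): for 2x2 these fix det(M - lambda*I) = lambda**2 - tr*lambda + det."""
    (a, b), (c, d) = M
    return a + d, a * d - b * c

A = [[-5, 2], [2, -2]]   # illustrative matrix (assumption)
P = [[1, 1], [0, 1]]     # an arbitrary nonsingular P (assumption)
X = [[1, 2], [2, -1]]    # columns: eigenvectors of A for lambda = -1 and -6

# same characteristic polynomial, hence the same eigenvalues
print(char_coeffs(A) == char_coeffs(similar(A, P)))
# with the eigenvectors as columns, the similar matrix is diagonal
print(similar(A, X) == [[-1, 0], [0, -6]])
```

The similar matrix for the arbitrary `P` is not diagonal; only the choice of eigenvectors as columns produces the diagonal form with the eigenvalues on the diagonal.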


Example 3


Theorem 4

Example 4


Quadratic Forms. Transformation to Principal Axes

By definition, a quadratic form $Q$ in the components $x_1, \dots, x_n$ of a vector $\mathbf{x}$ is a sum of $n^2$ terms, namely,

$$Q = \mathbf{x}^{\mathsf T}\mathbf{A}\mathbf{x} = \sum_{j=1}^{n}\sum_{k=1}^{n} a_{jk}x_jx_k. \qquad (7)$$

$\mathbf{A} = [a_{jk}]$ is called the coefficient matrix of the form. We may assume $\mathbf{A}$ is symmetric, because we can take off-diagonal terms together in pairs and write the result as a sum of two equal terms (see the example).
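Formula (7) and the symmetrization argument can be checked directly: replacing a coefficient matrix $\mathbf{C}$ by $(\mathbf{C} + \mathbf{C}^{\mathsf T})/2$ splits each off-diagonal pair into two equal halves and leaves every value of $Q$ unchanged. A minimal sketch; the matrix `C` and the helper names are illustrative assumptions.

```python
from fractions import Fraction

def quadratic_form(A, x):
    """Q = x^T A x = sum over j, k of a_jk * x_j * x_k (all n**2 terms), as in (7)."""
    n = len(x)
    return sum(A[j][k] * x[j] * x[k] for j in range(n) for k in range(n))

def symmetrize(C):
    """Return (C + C^T)/2: each off-diagonal pair c_jk + c_kj is split into equal halves."""
    n = len(C)
    return [[Fraction(C[j][k] + C[k][j], 2) for k in range(n)] for j in range(n)]

C = [[1, 6], [2, 4]]   # illustrative nonsymmetric coefficients: Q = x1^2 + 8 x1 x2 + 4 x2^2
A = symmetrize(C)      # symmetric coefficient matrix [[1, 4], [4, 4]] of the same form
for x in ([1, 0], [1, 1], [2, -3]):
    print(x, quadratic_form(C, x), quadratic_form(A, x))   # the two values agree
```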


Example 5

Quadratic forms occur in physics and geometry, for instance, in connection with conic sections (ellipses $x_1^2/a^2 + x_2^2/b^2 = 1$, etc.) and quadratic surfaces (cones, etc.). Their transformation to principal axes is an important practical task related to the diagonalization of matrices, as follows.


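Recall Theorem 2: the symmetric coefficient matrix $\mathbf{A}$ of (7) has an orthonormal basis of eigenvectors. Hence if we take these as column vectors, we obtain a matrix $\mathbf{X}$ that is orthogonal, so that $\mathbf{X}^{-1} = \mathbf{X}^{\mathsf T}$. Thus, we have $\mathbf{A} = \mathbf{X}\mathbf{D}\mathbf{X}^{-1} = \mathbf{X}\mathbf{D}\mathbf{X}^{\mathsf T}$, where $\mathbf{D}$ is the diagonal matrix of the eigenvalues. Substitution into (7) gives

$$Q = \mathbf{x}^{\mathsf T}\mathbf{X}\mathbf{D}\mathbf{X}^{\mathsf T}\mathbf{x}. \qquad (8)$$

If we set $\mathbf{X}^{\mathsf T}\mathbf{x} = \mathbf{y}$, then, since $\mathbf{X}^{\mathsf T} = \mathbf{X}^{-1}$, we get

$$\mathbf{x} = \mathbf{X}\mathbf{y}. \qquad (9)$$

Furthermore, in (8) we have $\mathbf{x}^{\mathsf T}\mathbf{X} = (\mathbf{X}^{\mathsf T}\mathbf{x})^{\mathsf T} = \mathbf{y}^{\mathsf T}$ and $\mathbf{X}^{\mathsf T}\mathbf{x} = \mathbf{y}$, so that $Q$ becomes simply

$$Q = \mathbf{y}^{\mathsf T}\mathbf{D}\mathbf{y} = \lambda_1 y_1^2 + \lambda_2 y_2^2 + \cdots + \lambda_n y_n^2. \qquad (10)$$

This proves the following basic theorem.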


Theorem 5

Example 6
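The transformation to principal axes in (8) to (10) can be checked numerically. A minimal sketch, assuming an illustrative symmetric matrix whose eigenvalues (2 and 4) and orthonormal eigenvectors are known in closed form; it is not the matrix of Example 6, and the helper names are hypothetical. It computes $\mathbf{y} = \mathbf{X}^{\mathsf T}\mathbf{x}$ and compares $Q = \mathbf{x}^{\mathsf T}\mathbf{A}\mathbf{x}$ with $\lambda_1 y_1^2 + \lambda_2 y_2^2$.

```python
import math

A = [[3, 1], [1, 3]]      # illustrative symmetric coefficient matrix (assumption)
s = 1 / math.sqrt(2)
lams = [2, 4]             # eigenvalues of A
X = [[s, s],              # orthonormal eigenvectors as columns:
     [-s, s]]             # (1, -1)/sqrt(2) for 2 and (1, 1)/sqrt(2) for 4

def quadratic_form(M, x):
    """Q = x^T M x, as in (7)."""
    n = len(x)
    return sum(M[j][k] * x[j] * x[k] for j in range(n) for k in range(n))

def principal_coords(x):
    """y = X^T x, equivalently x = X y as in (9)."""
    n = len(x)
    return [sum(X[i][j] * x[i] for i in range(n)) for j in range(n)]

def canonical_form(y):
    """Q = lambda_1 y_1**2 + ... + lambda_n y_n**2, as in (10)."""
    return sum(lam * yj * yj for lam, yj in zip(lams, y))

for x in ([1, 2], [3, -1], [0.5, 4]):
    print(x, quadratic_form(A, x), canonical_form(principal_coords(x)))
```

The two columns agree up to floating-point round-off; in the new coordinates the mixed term $2x_1x_2$ has disappeared, which is exactly what the transformation to principal axes achieves.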


Das könnte Ihnen auch gefallen

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5795)

- Models - Heat.cold Water GlassDokument14 SeitenModels - Heat.cold Water GlassShawonChowdhuryNoch keine Bewertungen

- Models - Cfd.boiling WaterDokument26 SeitenModels - Cfd.boiling WaterShawonChowdhuryNoch keine Bewertungen

- Introduction To CF D ModuleDokument44 SeitenIntroduction To CF D ModuleShawonChowdhuryNoch keine Bewertungen

- Comsol Asee FluidsDokument10 SeitenComsol Asee FluidsShawonChowdhuryNoch keine Bewertungen

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (588)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (74)

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (895)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (400)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (345)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2259)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (266)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (121)

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

- Solving Dynamics Problems in Mathcad: Brian D. HarperDokument142 SeitenSolving Dynamics Problems in Mathcad: Brian D. HarperErdoan MustafovNoch keine Bewertungen

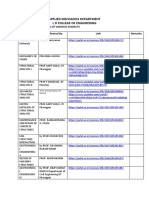

- Applied Mechanics Department L D College of EngineeringDokument20 SeitenApplied Mechanics Department L D College of EngineeringRushil Shah100% (1)

- Chap 5Dokument67 SeitenChap 5Amsalu WalelignNoch keine Bewertungen

- 2007 - Modal Acoustic Transfer Vector Approach in A FEM BEM Vibroacoustic AnalysisDokument11 Seiten2007 - Modal Acoustic Transfer Vector Approach in A FEM BEM Vibroacoustic AnalysisGuilherme Henrique GodoiNoch keine Bewertungen

- f1-4 Knec Syllabus MathsDokument13 Seitenf1-4 Knec Syllabus MathsKipkemoi NicksonNoch keine Bewertungen

- M.Tech - SRIT R23 - Syllabus - I SEMDokument35 SeitenM.Tech - SRIT R23 - Syllabus - I SEMpadmavathiNoch keine Bewertungen

- R Cheat SheetDokument4 SeitenR Cheat SheetHaritha AtluriNoch keine Bewertungen

- 06.20246.jau Hsiung WangDokument238 Seiten06.20246.jau Hsiung WangTrinh Luong MienNoch keine Bewertungen

- Switching Models WorkbookDokument239 SeitenSwitching Models WorkbookAllister HodgeNoch keine Bewertungen

- Tutorial Set 2Dokument3 SeitenTutorial Set 2poweder gunNoch keine Bewertungen

- Iit Madras Detailed SyllabusDokument14 SeitenIit Madras Detailed SyllabusSuperdudeGauravNoch keine Bewertungen

- 2014 SP CHSLDokument20 Seiten2014 SP CHSLRaghu RamNoch keine Bewertungen

- Linear Algebra and Its Applications Selected SolutionsDokument10 SeitenLinear Algebra and Its Applications Selected Solutionsscribd_torrentsNoch keine Bewertungen

- cs450 Chapt02Dokument101 Seitencs450 Chapt02Davis LeeNoch keine Bewertungen

- HW1 SolutionsDokument5 SeitenHW1 Solutionspackman465Noch keine Bewertungen

- Solution Manual For Introduction To Mathcad 15 3 e Ronald W LarsenDokument36 SeitenSolution Manual For Introduction To Mathcad 15 3 e Ronald W Larsenrepcitron8mvy100% (43)

- Coercive NessDokument13 SeitenCoercive NessArchi DeNoch keine Bewertungen

- Alaff FinalDokument4 SeitenAlaff FinalArmaan BhullarNoch keine Bewertungen

- Adobe Scan 23-Feb-2024Dokument4 SeitenAdobe Scan 23-Feb-2024muzwalimub4104Noch keine Bewertungen

- Class 12 MATRICES AND DETERMINANTS EXAMDokument1 SeiteClass 12 MATRICES AND DETERMINANTS EXAMPriyankAhujaNoch keine Bewertungen

- Que Paper MAIR 11 T1 8.30 - 9.10 AmDokument2 SeitenQue Paper MAIR 11 T1 8.30 - 9.10 AmSai GokulNoch keine Bewertungen

- Linear Programming FinalDokument62 SeitenLinear Programming FinalHappy SongNoch keine Bewertungen

- Business MathematicsDokument5 SeitenBusiness MathematicsSunil Shekhar Nayak100% (1)

- A BVP Solver Based On Residual ControlDokument18 SeitenA BVP Solver Based On Residual ControlLorem IpsumNoch keine Bewertungen

- MATH1019 Linear Algebra and Statistics For Engineers Semester 1 2024 Bentley Perth Campus INTDokument13 SeitenMATH1019 Linear Algebra and Statistics For Engineers Semester 1 2024 Bentley Perth Campus INTjissmon jojoNoch keine Bewertungen

- C AssignmentDokument30 SeitenC AssignmentSourav RoyNoch keine Bewertungen

- CSE Syllabus III-IV SemesterDokument32 SeitenCSE Syllabus III-IV SemesterJatan TiwariNoch keine Bewertungen

- ODA 1995 Guidance Note On How To Do A Stakeholder Analysis PDFDokument10 SeitenODA 1995 Guidance Note On How To Do A Stakeholder Analysis PDFGoodman HereNoch keine Bewertungen

- Chapter 1: Systems of Linear Equations and MatricesDokument7 SeitenChapter 1: Systems of Linear Equations and MatricesMr Selvagahnesh PrasadNoch keine Bewertungen

- Sce Bca Itims&Ct Syllabus 2018-21Dokument117 SeitenSce Bca Itims&Ct Syllabus 2018-21Chetna BhuraniNoch keine Bewertungen