Advanced Computer Architecture

Meita Dian Hapsari - 1104114135

Superscalar Microarchitecture

Instruction Fetch & Branch Prediction

The fetch phase must fetch multiple instructions per cycle from the cache memory to keep a steady feed of instructions going to the other stages. The number of instructions fetched per cycle should match or exceed the peak instruction decode and execution rate (to allow for cache misses or occasions where the maximum number of instructions can't be fetched). For conditional branches, the fetch mechanism must be redirected to fetch instructions from the branch targets.

Four steps to processing a conditional branch instruction:

1. Recognizing that an instruction is a conditional branch

2. Determining the branch outcome (taken or not)

3. Computing the branch target

4. Transferring control by redirecting instruction fetch
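As a rough illustration, the four steps can be sketched as a toy fetch unit in Python. It assumes a branch target buffer (BTB), a small table indexed by instruction address that remembers each branch's last target, to cover steps 1 and 3, plus a 2-bit saturating counter per entry for step 2. The BTB, the class and method names, and the fixed 4-byte instruction size are assumptions for illustration, not details from these notes.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class BTBEntry:
    target: int       # branch target remembered from an earlier execution
    counter: int = 2  # 2-bit saturating counter: 0-1 predict not taken, 2-3 predict taken


class FetchUnit:
    """Toy fetch stage applying the four steps to each fetched address.

    Assumptions: fixed 4-byte instructions, a BTB keyed by instruction
    address, and no modelling of the instruction cache itself.
    """

    def __init__(self, width: int = 4) -> None:
        self.width = width                     # instructions fetched per cycle
        self.btb: dict[int, BTBEntry] = {}

    def fetch_group(self, pc: int) -> tuple[list[int], int]:
        """Return one cycle's fetch group and the address to fetch from next."""
        group: list[int] = []
        while len(group) < self.width:
            group.append(pc)
            entry = self.btb.get(pc)           # step 1: recognize a known branch
            if entry is not None and entry.counter >= 2:  # step 2: predicted taken
                return group, entry.target     # steps 3-4: use cached target, redirect
            pc += 4                            # otherwise keep fetching sequentially
        return group, pc

    def resolve(self, pc: int, taken: bool, target: int) -> None:
        """Train the BTB once the branch's real outcome is known."""
        entry = self.btb.setdefault(pc, BTBEntry(target))
        entry.target = target
        entry.counter = min(3, entry.counter + 1) if taken else max(0, entry.counter - 1)


fetch = FetchUnit(width=4)
fetch.resolve(0x108, taken=True, target=0x200)  # branch at 0x108 was taken to 0x200
group, next_pc = fetch.fetch_group(0x100)
print([hex(a) for a in group], hex(next_pc))
```

In this run the fetch group stops at the predicted-taken branch at 0x108, and the next cycle's fetch is redirected to 0x200 instead of continuing at 0x10c.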

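Dynamic prediction generally performs better than static prediction because it predicts branch outcomes more accurately, even though it requires more complex hardware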
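To make the accuracy difference concrete, the sketch below compares a static always-taken rule with a dynamic predictor made of a single 2-bit saturating counter on a hypothetical branch trace. The trace is deliberately one where the static guess is wrong for this branch; a better static heuristic (for example, predicting backward branches taken and forward branches not taken) could do well on it, so treat this as an illustration rather than a benchmark.

```python
def static_always_taken(trace: list[bool]) -> float:
    """Static prediction: every branch is predicted taken, whatever its history."""
    return sum(1 for outcome in trace if outcome) / len(trace)


def dynamic_two_bit(trace: list[bool], start: int = 2) -> float:
    """Dynamic prediction with one 2-bit saturating counter (0-1: not taken, 2-3: taken)."""
    counter, correct = start, 0
    for outcome in trace:
        prediction = counter >= 2
        correct += prediction == outcome
        # Move the counter toward the actual outcome, saturating at 0 and 3.
        counter = min(3, counter + 1) if outcome else max(0, counter - 1)
    return correct / len(trace)


# Hypothetical trace: a loop-exit check that is not taken 9 times, then taken once, repeated.
trace = ([False] * 9 + [True]) * 10
print(f"static always-taken: {static_always_taken(trace):.0%}")
print(f"dynamic 2-bit:       {dynamic_two_bit(trace):.0%}")
```

On this trace the static rule is right 10% of the time and the 2-bit counter 89% of the time: the counter mispredicts only the very first iteration and each loop exit, because one surprise is not enough to flip its prediction.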

Processing Conditional Branches

1. Recognizing Conditional Branches

2. Determining Branch Outcome

3. Computing Branch Targets

4. Transferring Control

Multicore Architecture

- A multicore design is one in which a single physical processor contains the core logic of more than one processor

- Goal: enable a system to run more tasks simultaneously, achieving greater overall performance

Hyper-Threading or multicore?

- Early PCs were capable of doing only a single task at a time

- Intel's multithreading technology is called Hyper-Threading

Multicore Processor

- Each core has its own execution pipeline

- In principle, there is no fixed limit on the number of cores that can be placed on a single chip

- Two cores can run at lower clock speeds and lower temperatures

- Yet their combined throughput is better than that of a single processor

The fundamental relationship between frequency and power can be exploited to multiply the number of cores from 2 to 4, 8, or even more.
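The relationship in question is usually stated through the CMOS dynamic power equation P = α·C·V²·f. The sketch below assumes, as a common first-order approximation, that supply voltage can be lowered in proportion to clock frequency, so power falls roughly with the cube of frequency; it also assumes a perfectly parallel workload. The function names and the chosen frequency scales are illustrative only.

```python
from __future__ import annotations


def dynamic_power(capacitance: float, voltage: float, frequency: float,
                  activity: float = 1.0) -> float:
    """CMOS dynamic (switching) power: P = activity * C * V^2 * f."""
    return activity * capacitance * voltage ** 2 * frequency


def multicore_tradeoff(cores: int, freq_scale: float) -> tuple[float, float]:
    """Power and throughput of `cores` slowed-down cores, relative to one full-speed core.

    Assumptions: voltage scales linearly with frequency, every core has the
    same capacitance, and the workload splits perfectly across all cores.
    """
    baseline = dynamic_power(1.0, 1.0, 1.0)
    voltage = freq_scale                      # assumed V proportional to f
    total = cores * dynamic_power(1.0, voltage, freq_scale)
    return total / baseline, cores * freq_scale


for cores, scale in [(1, 1.0), (2, 0.8), (4, 0.63)]:
    power, throughput = multicore_tradeoff(cores, scale)
    print(f"{cores} core(s) at {scale:.2f}f: power x{power:.2f}, throughput x{throughput:.2f}")
```

Under these assumptions, two cores at 80% of the clock draw about 1.02 times the power of one full-speed core while offering 1.6 times the throughput. Real chips fall short of this, since voltage cannot be scaled down indefinitely and few workloads parallelize perfectly.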

Grid Computing

Grid computing is the collection of computer resources from multiple locations to reach a common goal. The grid can be thought of as a distributed system with non-interactive workloads that involve a large number of files. Grid computing is distinguished from conventional high-performance computing systems, such as cluster computing, in that each node in a grid is set to perform a different task or application.
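The non-interactive, one-task-per-node style of work described above can be sketched locally with Python's `concurrent.futures`. This is only a stand-in: threads play the role of grid nodes, and the node names and the three job functions are hypothetical placeholders for real applications.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical, independent applications; on a real grid each would run on a remote node.
def render_frames(count: int) -> str:
    return f"rendered {count} frames"


def simulate_flow(steps: int) -> str:
    return f"simulated {steps} flow steps"


def index_files(count: int) -> str:
    return f"indexed {count} files"


# Each node is assigned a different task.
jobs = {
    "node-a": (render_frames, 120),
    "node-b": (simulate_flow, 5000),
    "node-c": (index_files, 10000),
}


def run_grid(jobs: dict) -> dict:
    """Dispatch every node's job at once and gather the results.

    Threads stand in for remote machines here; the jobs never interact,
    which is what makes this kind of workload a good fit for a grid.
    """
    with ThreadPoolExecutor(max_workers=len(jobs)) as pool:
        futures = {node: pool.submit(fn, arg) for node, (fn, arg) in jobs.items()}
        return {node: future.result() for node, future in futures.items()}


for node, result in run_grid(jobs).items():
    print(f"{node}: {result}")
```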

Advantages of Grid Computing

Core networking technology is now advancing at a much faster rate than microprocessor speeds

Exploiting underutilized resources

Parallel CPU capacity

Virtual resources and virtual organizations for collaboration

Access to additional resources

Types of Resources

Computation

Storage

Communications

Software and licenses

Special equipment, capacities, architectures, and policies

Security

Access policy - What is shared? Who is allowed to share? When can sharing occur?

Authentication - How do you identify a user or resource?

Authorization - How do you determine whether a certain operation is consistent with the rules?
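The three questions can be illustrated with a small Python sketch that checks identity first (authentication) and then consults an access policy recording who may do what, on which resource, and when (authorization). The credential table, policy entry, and function names are hypothetical, and plain tokens stand in for the certificate-based credentials a real grid would use.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class PolicyRule:
    """One access-policy entry: who may do what on which resource, and when."""
    user: str
    resource: str
    operation: str
    start: time
    end: time


# Hypothetical credential store and policy (real grids use certificates, not tokens).
CREDENTIALS = {"alice": "token-123"}
POLICY = [PolicyRule("alice", "cluster-1", "submit_job", time(18, 0), time(23, 59))]


def authenticate(user: str, token: str) -> bool:
    """Authentication: is this really the user it claims to be?"""
    return CREDENTIALS.get(user) == token


def authorize(user: str, resource: str, operation: str, at: time) -> bool:
    """Authorization: does the access policy allow this operation at this time?"""
    return any(
        r.user == user and r.resource == resource and r.operation == operation
        and r.start <= at <= r.end
        for r in POLICY
    )


def request(user: str, token: str, resource: str, operation: str, at: time) -> str:
    if not authenticate(user, token):
        return "rejected: unknown identity"
    if not authorize(user, resource, operation, at):
        return "rejected: not permitted by policy"
    return "accepted"


print(request("alice", "token-123", "cluster-1", "submit_job", time(20, 30)))  # -> accepted
print(request("alice", "token-123", "cluster-1", "submit_job", time(9, 0)))    # -> rejected: not permitted by policy
print(request("mallory", "guess", "cluster-1", "submit_job", time(20, 30)))    # -> rejected: unknown identity
```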

Grid Architecture

Fabric layer: Provides the resources to which shared access is mediated by Grid protocols.

Connectivity layer: Defines the core communication and authentication protocols required for grid-specific network functions.

Resource layer: Defines protocols, APIs, and SDKs for the secure negotiation, initiation, monitoring, control, accounting, and payment of sharing operations on individual resources.

Collective layer: Contains protocols and services that capture interactions among collections of resources.

Application layer: User applications that operate within a virtual organization (VO) environment.

Figure: Grid protocol layers (Application, Collective, Resource, Connectivity, Fabric) compared with the Internet protocol layers (Application, Transport, Internet)

Key Components

1. Portal or User Interface

2. Security

3. Broker (MDS)

4. Scheduler

5. Data Management (GASS)

6. Job and Resource Management (GRAM)
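A minimal sketch of how the broker, scheduler, and job-management components might cooperate: the broker filters a resource directory by the job's requirements, the scheduler picks one candidate, and the job is then handed over. Everything here is simulated; the directory contents, the shortest-queue policy, and all names are assumptions, and none of it is the actual MDS or GRAM interface.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Resource:
    name: str
    free_cpus: int
    memory_gb: int
    queue_length: int


# Hypothetical directory, standing in for what an information service would publish.
DIRECTORY = [
    Resource("site-a", free_cpus=16, memory_gb=64, queue_length=5),
    Resource("site-b", free_cpus=64, memory_gb=256, queue_length=12),
    Resource("site-c", free_cpus=32, memory_gb=128, queue_length=1),
]


def broker(cpus: int, memory_gb: int) -> list[Resource]:
    """Broker: discover resources that satisfy the job's requirements."""
    return [r for r in DIRECTORY if r.free_cpus >= cpus and r.memory_gb >= memory_gb]


def schedule(candidates: list[Resource]) -> Resource | None:
    """Scheduler: pick a candidate, here simply the one with the shortest queue."""
    return min(candidates, key=lambda r: r.queue_length, default=None)


def submit(job: str, cpus: int, memory_gb: int) -> str:
    """Job management: hand the job to the chosen resource (simulated only)."""
    target = schedule(broker(cpus, memory_gb))
    if target is None:
        return f"{job}: no matching resource"
    target.free_cpus -= cpus          # reserve the CPUs on the chosen site
    target.queue_length += 1
    return f"{job}: submitted to {target.name}"


print(submit("blood-flow-sim", cpus=24, memory_gb=100))
print(submit("huge-job", cpus=128, memory_gb=8))
```

The first job lands on site-c, which meets the requirements and has the shortest queue; the second finds no site with 128 free CPUs. A real scheduler would weigh many more factors, such as cost, data location, and deadlines.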

Globus Toolkit 4

An open-source toolkit for building computing grids, provided by the Globus Alliance.

Example applications built with GT4:

- Southern California Earthquake Center (visualizing earthquake simulation data)

- Computational scientists at Brown University (simulating blood flow)

- etc

Cell Processor

Overview

- A chip with one hyper-threaded PowerPC (PPC) core, called the PPE, and eight specialized cores, called SPEs

- The challenge the Cell had to solve was putting all these cores together

Cell Processor Components

- PowerPC Processor Element (PPE): the PPE is made up of two main units:

1. The PowerPC Processor Unit (PPU)

2. The PowerPC Processor Storage Subsystem (PPSS)

The PPU is hyper-threaded, supports two simultaneous threads, and consists of:

o A full set of 64-bit PowerPC registers

o 32 128-bit vector multimedia registers

o A 32 KB L1 instruction cache

o A 32 KB L1 data cache

The PPSS handles memory requests from the PPE and requests made to the PPE by other processors or I/O devices, and consists of:

o A unified 512 KB L2 instruction and data cache

o Various queues

o A bus interface unit that handles bus arbitration and pacing on the Element Interconnect Bus (EIB)

- Synergistic Processor Element (SPE)

o Each Cell chip has eight SPEs

o They are 128-bit RISC processors

o Each consists of two main units:

1. Synergistic Processor Unit (SPU)

2. Memory Flow Controller (MFC)

- The SPU deals with control and execution

- The SPU implements a set of SIMD instructions specific to the Cell

- Each SPU is independent and has its own program counter

- Instructions are fetched from the local store

- Data loads and stores operate on the local store

- The Memory Flow Controller (MFC) is the interface between the SPU and the rest of the Cell chip

- The MFC interfaces the SPU with the EIB

- In addition to a full set of MMIO registers, the MFC contains a DMA controller
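The division of work between the SPU and the MFC can be sketched as a small Python simulation: the SPU computes only on data in its local store, and explicit DMA transfers move data between main memory and the local store. The 256 KB local-store size and big-endian byte order match the Cell; the method names `dma_get` and `dma_put` are hypothetical (the Cell SDK exposes DMA through MFC intrinsics such as `mfc_get` and `mfc_put`), and the four-lane integer add stands in for the SPU's real SIMD instruction set.

```python
import struct

LOCAL_STORE_SIZE = 256 * 1024  # each SPE has a 256 KB local store


def wrap_i32(value: int) -> int:
    """Wrap to a signed 32-bit integer, as hardware SIMD lanes do on overflow."""
    return (value + 2**31) % 2**32 - 2**31


class SPE:
    """Toy SPE: the SPU computes only on its local store; the MFC moves data by DMA."""

    def __init__(self, main_memory: bytearray) -> None:
        self.main_memory = main_memory                # system memory, reached via the EIB
        self.local_store = bytearray(LOCAL_STORE_SIZE)

    # MFC side: DMA transfers (hypothetical names, not the Cell SDK API)
    def dma_get(self, ls_addr: int, ea: int, size: int) -> None:
        """Copy `size` bytes from main memory address `ea` into the local store."""
        self.local_store[ls_addr:ls_addr + size] = self.main_memory[ea:ea + size]

    def dma_put(self, ls_addr: int, ea: int, size: int) -> None:
        """Copy `size` bytes from the local store back to main memory address `ea`."""
        self.main_memory[ea:ea + size] = self.local_store[ls_addr:ls_addr + size]

    # SPU side: one 128-bit SIMD add = four 32-bit lanes, local store only
    def simd_add_i32(self, dst: int, a: int, b: int) -> None:
        va = struct.unpack_from(">4i", self.local_store, a)  # Cell is big-endian
        vb = struct.unpack_from(">4i", self.local_store, b)
        lanes = [wrap_i32(x + y) for x, y in zip(va, vb)]
        struct.pack_into(">4i", self.local_store, dst, *lanes)


memory = bytearray(1024)
struct.pack_into(">4i", memory, 0, 1, 2, 3, 4)
struct.pack_into(">4i", memory, 16, 10, 20, 30, 40)

spe = SPE(memory)
spe.dma_get(0, 0, 32)        # MFC: bring both input vectors into the local store
spe.simd_add_i32(32, 0, 16)  # SPU: compute without touching main memory
spe.dma_put(32, 64, 16)      # MFC: write the result back
print(struct.unpack_from(">4i", memory, 64))
```

The printed result is (11, 22, 33, 44). Because the SPU never reads main memory directly, real SPE code typically overlaps DMA transfers with computation (for example, double buffering) to hide memory latency.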

Das könnte Ihnen auch gefallen

- MP Assignment 1Dokument9 SeitenMP Assignment 1Fatima SheikhNoch keine Bewertungen

- Operating System 2 Marks and 16 Marks - AnswersDokument45 SeitenOperating System 2 Marks and 16 Marks - AnswersDiana Arun75% (4)

- Apollo 9603: Powerful and Versatile Access-to-Metro Optical Transport PlatformDokument2 SeitenApollo 9603: Powerful and Versatile Access-to-Metro Optical Transport PlatformDeepak Kumar SinghNoch keine Bewertungen

- Tightly Coupled ArchitectureDokument11 SeitenTightly Coupled Architecturesudhnwa ghorpadeNoch keine Bewertungen

- Military College of Signal1Dokument8 SeitenMilitary College of Signal1Fatima SheikhNoch keine Bewertungen

- Fetch Decode ExecuteDokument67 SeitenFetch Decode ExecuteJohn Kenneth ArellonNoch keine Bewertungen

- ParticipantsDokument8 SeitenParticipantsSoban MarufNoch keine Bewertungen

- PankajDokument27 SeitenPankajsanjeev2838Noch keine Bewertungen

- Arkom 13-40275Dokument32 SeitenArkom 13-40275Harier Hard RierNoch keine Bewertungen

- Cpe 631 Pentium 4Dokument111 SeitenCpe 631 Pentium 4rohitkotaNoch keine Bewertungen

- Chapter 4 Marie-Intro Simple ComputerDokument87 SeitenChapter 4 Marie-Intro Simple ComputerYasir ArfatNoch keine Bewertungen

- MARIE: An Introduction To A Simple Computer: Chapter 4 ObjectivesDokument39 SeitenMARIE: An Introduction To A Simple Computer: Chapter 4 ObjectivessupertraderNoch keine Bewertungen

- Lecture 1 Part 2 - Central Processing UnitDokument28 SeitenLecture 1 Part 2 - Central Processing UnitmeshNoch keine Bewertungen

- Data Transfers Between Processes in An SMP System: by Jayesh PatelDokument16 SeitenData Transfers Between Processes in An SMP System: by Jayesh PatelJayesh PatelNoch keine Bewertungen

- Part 1: Concepts and PrinciplesDokument8 SeitenPart 1: Concepts and PrinciplesMingNoch keine Bewertungen

- Embedded Systems BasicsDokument16 SeitenEmbedded Systems BasicsOpenTsubasaNoch keine Bewertungen

- Chapter 4Dokument74 SeitenChapter 4aboodbas31Noch keine Bewertungen

- ACA Assignment 4Dokument16 SeitenACA Assignment 4shresth choudharyNoch keine Bewertungen

- Advanced Microprocessors and Microcontrollers: A. NarendiranDokument14 SeitenAdvanced Microprocessors and Microcontrollers: A. NarendiranMa SeenivasanNoch keine Bewertungen

- 3 Embedded Systems - Raj KamalDokument37 Seiten3 Embedded Systems - Raj KamalAshokkumar ManickamNoch keine Bewertungen

- MARIE: An Introduction To A Simple ComputerDokument68 SeitenMARIE: An Introduction To A Simple ComputerAbdurahmanNoch keine Bewertungen

- Lesson 7: System Performance: ObjectiveDokument2 SeitenLesson 7: System Performance: ObjectiveVikas GaurNoch keine Bewertungen

- Multi Processor Architecture: A Short IntroductionDokument16 SeitenMulti Processor Architecture: A Short IntroductionmohamdsoftNoch keine Bewertungen

- Need For Memory Hierarchy: (Unit-1,3) (M.M. Chapter 12)Dokument23 SeitenNeed For Memory Hierarchy: (Unit-1,3) (M.M. Chapter 12)Alvin Soriano AdduculNoch keine Bewertungen

- 212 Chapter06Dokument50 Seiten212 Chapter06HAU LE DUCNoch keine Bewertungen

- Final 2014Dokument3 SeitenFinal 2014Mustafa Hamdy MahmoudNoch keine Bewertungen

- Coa Unit-3,4 NotesDokument17 SeitenCoa Unit-3,4 NotesDeepanshu krNoch keine Bewertungen

- Intel 80586 (Pentium)Dokument24 SeitenIntel 80586 (Pentium)Soumya Ranjan PandaNoch keine Bewertungen

- Solution JUNEJULY 2018Dokument15 SeitenSolution JUNEJULY 2018Yasha DhiguNoch keine Bewertungen

- 123 - EE8691, EE6602 Embedded Systems - NotesDokument78 Seiten123 - EE8691, EE6602 Embedded Systems - NotesNavin KumarNoch keine Bewertungen

- CA Classes-11-15Dokument5 SeitenCA Classes-11-15SrinivasaRaoNoch keine Bewertungen

- OS CrashcourseDokument151 SeitenOS CrashcourseRishabh SinghNoch keine Bewertungen

- Single Producer - Multiple Consumers Ring Buffer Data Distribution System With Memory ManagementDokument12 SeitenSingle Producer - Multiple Consumers Ring Buffer Data Distribution System With Memory ManagementCarlosHENoch keine Bewertungen

- Multicore Processor ReportDokument19 SeitenMulticore Processor ReportDilesh Kumar100% (1)

- Introduction To Os 2mDokument10 SeitenIntroduction To Os 2mHelana ANoch keine Bewertungen

- 2) The CPU proc-WPS OfficeDokument4 Seiten2) The CPU proc-WPS OfficeDEBLAIR MAKEOVERNoch keine Bewertungen

- CS8493 2marks PDFDokument36 SeitenCS8493 2marks PDFSparkerz S Vijay100% (1)

- Lecture 10Dokument34 SeitenLecture 10MAIMONA KHALIDNoch keine Bewertungen

- Chapter 9 COADokument31 SeitenChapter 9 COAJijo XuseenNoch keine Bewertungen

- Introduction To OSDokument34 SeitenIntroduction To OSTeo SiewNoch keine Bewertungen

- OS - 2 Marks With AnswersDokument28 SeitenOS - 2 Marks With Answerssshyamsaran93Noch keine Bewertungen

- EE6304 Lecture12 TLPDokument70 SeitenEE6304 Lecture12 TLPAshish SoniNoch keine Bewertungen

- Os Chapter1Dokument53 SeitenOs Chapter1Saba Ghulam RasoolNoch keine Bewertungen

- Lec 2Dokument21 SeitenLec 2Prateek GoyalNoch keine Bewertungen

- Hyper ThreadingDokument15 SeitenHyper ThreadingMandar MoreNoch keine Bewertungen

- B.tech CS S8 High Performance Computing Module Notes Module 4Dokument33 SeitenB.tech CS S8 High Performance Computing Module Notes Module 4Jisha ShajiNoch keine Bewertungen

- 1) Define MIPS. CPI and MFLOPS.: Q.1 Attempt Any FOURDokument10 Seiten1) Define MIPS. CPI and MFLOPS.: Q.1 Attempt Any FOURYashNoch keine Bewertungen

- Multicore ComputersDokument21 SeitenMulticore Computersmikiasyimer7362Noch keine Bewertungen

- Terms of OSDokument4 SeitenTerms of OSgdwhhdhncddNoch keine Bewertungen

- Questions To Practice For Cse323 Quiz 1Dokument4 SeitenQuestions To Practice For Cse323 Quiz 1errormaruf4Noch keine Bewertungen

- Meeting2-Block 1-Part 3Dokument29 SeitenMeeting2-Block 1-Part 3Husam NaserNoch keine Bewertungen

- Operating Systems Chapter (1) Review Questions: Memory or Primary MemoryDokument8 SeitenOperating Systems Chapter (1) Review Questions: Memory or Primary MemoryNataly AdelNoch keine Bewertungen

- Final Report: Multicore ProcessorsDokument12 SeitenFinal Report: Multicore ProcessorsJigar KaneriyaNoch keine Bewertungen

- 2mark and 16 OperatingSystemsDokument24 Seiten2mark and 16 OperatingSystemsDhanusha Chandrasegar SabarinathNoch keine Bewertungen

- MARIE: An Introduction To A Simple ComputerDokument98 SeitenMARIE: An Introduction To A Simple ComputerGreen ChiquitaNoch keine Bewertungen

- Marie: An Introduction To A Simple ComputerDokument80 SeitenMarie: An Introduction To A Simple Computercmike99999Noch keine Bewertungen

- OS 2marks With Answers For All UnitsDokument19 SeitenOS 2marks With Answers For All UnitsPraveen MohanNoch keine Bewertungen

- Unit-6 MultiprocessorsDokument21 SeitenUnit-6 MultiprocessorsMandeepNoch keine Bewertungen

- Operating Systems Interview Questions You'll Most Likely Be Asked: Job Interview Questions SeriesVon EverandOperating Systems Interview Questions You'll Most Likely Be Asked: Job Interview Questions SeriesNoch keine Bewertungen

- PLC: Programmable Logic Controller – Arktika.: EXPERIMENTAL PRODUCT BASED ON CPLD.Von EverandPLC: Programmable Logic Controller – Arktika.: EXPERIMENTAL PRODUCT BASED ON CPLD.Noch keine Bewertungen

- Material Service Group VERSUS CLASS REFERENCEDokument5 SeitenMaterial Service Group VERSUS CLASS REFERENCEZain AbidiNoch keine Bewertungen

- Hardware AccessoriesDokument2 SeitenHardware Accessoriessam29760% (1)

- Main Board Diagram: 945GCDMS-6H A2Dokument2 SeitenMain Board Diagram: 945GCDMS-6H A2andymustopaNoch keine Bewertungen

- Managed Switch ConfigurationDokument10 SeitenManaged Switch ConfigurationSEED SEEDNoch keine Bewertungen

- 7.3.2.10 Lab - Research Laptop DrivesDokument1 Seite7.3.2.10 Lab - Research Laptop DrivesHohnatanNoch keine Bewertungen

- OS ASS 1 (Solved)Dokument7 SeitenOS ASS 1 (Solved)Muhammad MaazNoch keine Bewertungen

- FC-102 Vs FC-101 Vs FC-360Dokument1 SeiteFC-102 Vs FC-101 Vs FC-360sidparikh254Noch keine Bewertungen

- Procedure & Macros: Microprocessor (EE-502) Lab AssignmentDokument8 SeitenProcedure & Macros: Microprocessor (EE-502) Lab AssignmentSajjad HussainNoch keine Bewertungen

- สำเนาของ สำเนาของ Arduino Uno R3 Driver Windows 10 64 Bit Download - ColaboratoryDokument5 Seitenสำเนาของ สำเนาของ Arduino Uno R3 Driver Windows 10 64 Bit Download - Colaboratorywtaepattana pokpradidNoch keine Bewertungen

- MC9S08SH32Dokument316 SeitenMC9S08SH32Juan CarlosNoch keine Bewertungen

- Prelim Exam - Ece 415Dokument2 SeitenPrelim Exam - Ece 415RichardsNoch keine Bewertungen

- NewSDK Introduction (V33SP1)Dokument21 SeitenNewSDK Introduction (V33SP1)bbvietnam comNoch keine Bewertungen

- Unit 3 Lecturer NotesDokument48 SeitenUnit 3 Lecturer NotessureshgurujiNoch keine Bewertungen

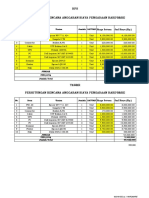

- HPS Perhitungan Rencana Anggaran Biaya Pengadaan Hardware: No. Item Uraian Jumlah SATUANDokument2 SeitenHPS Perhitungan Rencana Anggaran Biaya Pengadaan Hardware: No. Item Uraian Jumlah SATUANYanto AstriNoch keine Bewertungen

- Installation Manual Fish Finder/ Hi-Res Fish Finder/ Fish Size Indicator FCV-1900/B/GDokument56 SeitenInstallation Manual Fish Finder/ Hi-Res Fish Finder/ Fish Size Indicator FCV-1900/B/GPedro Pabo Prieto MonzonNoch keine Bewertungen

- Rev0 (Technical and User's Manual)Dokument72 SeitenRev0 (Technical and User's Manual)mohamedNoch keine Bewertungen

- Preparing Hand Tools and Equipment For Computer Hardware ServicingDokument31 SeitenPreparing Hand Tools and Equipment For Computer Hardware ServicingJeric Enteria CantillanaNoch keine Bewertungen

- Edge AI Inference Computer Powered by NVIDIA GPU Cards - P19Dokument15 SeitenEdge AI Inference Computer Powered by NVIDIA GPU Cards - P19Rafael FloresNoch keine Bewertungen

- Cisco Redundant Power System 2300: Flexibility and High AvailabilityDokument10 SeitenCisco Redundant Power System 2300: Flexibility and High AvailabilityGoogool YNoch keine Bewertungen

- CMPSB Instruction in Assembly Language of 8086 MicroprocessorDokument6 SeitenCMPSB Instruction in Assembly Language of 8086 MicroprocessorJanna Tammar Al-WardNoch keine Bewertungen

- EdgeTech JSF DATA FILE Description 0004824 - Rev - 1 - 20Dokument38 SeitenEdgeTech JSF DATA FILE Description 0004824 - Rev - 1 - 20razakumarklNoch keine Bewertungen

- Ebook Digital Design and Computer Architecture Risc V Edition PDF Full Chapter PDFDokument67 SeitenEbook Digital Design and Computer Architecture Risc V Edition PDF Full Chapter PDFjimmie.kirby650100% (27)

- Acer EMachines EL1800 Service GuideDokument84 SeitenAcer EMachines EL1800 Service Guidedff1967dffNoch keine Bewertungen

- Keykeriki v2 Cansec v1.1Dokument63 SeitenKeykeriki v2 Cansec v1.1WALDEKNoch keine Bewertungen

- LT-6045 FleXNet Application GuideDokument200 SeitenLT-6045 FleXNet Application GuidePrecaución TecnológicaNoch keine Bewertungen

- Geeks3d Gputest LogDokument1 SeiteGeeks3d Gputest LogHakonBlumNoch keine Bewertungen

- ProLite X2483HSU-B3 - enDokument3 SeitenProLite X2483HSU-B3 - enikponmwosa olotuNoch keine Bewertungen

- 8085 MCQDokument20 Seiten8085 MCQniteshNoch keine Bewertungen

- Instruction CycleDokument4 SeitenInstruction Cyclepawan pratapNoch keine Bewertungen