ICIAC11 1E LTompson

Advanced time-series analysis

Lisa Tompson

Research Associate

UCL Jill Dando Institute of Crime Science

l.tompson@ucl.ac.uk

UCL DEPARTMENT OF SECURITY AND CRIME SCIENCE

Overview

Fundamental principles of time-ordered data

Introduction to time-series analysis

Revealing trends

Forecasting

Evaluating interventions

Where to find out more

Time-ordered data

Observations are never pure measures of a phenomenon

Crime rates can fluctuate over time for a variety of reasons

Such variation is known as random noise

Observations close together in time will be more closely

related than observations further apart

This is known as propinquity

[proh-ping-kwi-tee]

Time-series: an introduction

Time-series analysis is used when observations (e.g. counts of crime) are made repeatedly

The number of observations should be over 50 if you want to account for seasonal trends

Three main aims of this type of analysis

To help reveal or clarify trends

To forecast future patterns of events

To test the impact of interventions (IVs)

Time-series analysis for revealing trends

All time-series data have three basic parts:

A trend component;

A seasonal component; and

A random component.

Trends are often masked because of the combination of

these three components

Especially the random noise!

Time-series analysis for revealing trends

Two types of patterns are important:

Linear or non-linear trends - i.e., upwards or downwards or both

(quadratic); and

Seasonal effects which follow the same overall trend but repeat

themselves in systematic intervals over time.

Time-series analysis for revealing trends

Smoothing techniques can be used to filter out the random

noise

Moving average is the simplest form of smoothing technique
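A minimal sketch of a centred moving average, assuming an odd window length. The function name `moving_average` and the monthly counts are hypothetical and for illustration only.

```python
def moving_average(series, window):
    """Return the centred simple moving average of `series`.

    Positions without a full window on both sides are returned as None.
    """
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd number")
    half = window // 2
    smoothed = [None] * len(series)
    for i in range(half, len(series) - half):
        # Average the observation with its neighbours on either side.
        smoothed[i] = sum(series[i - half:i + half + 1]) / window
    return smoothed


# Hypothetical monthly crime counts (illustrative numbers only).
counts = [12, 18, 11, 20, 14, 22, 15, 25, 17, 27]
smoothed = moving_average(counts, window=3)
print(smoothed)
```

A wider window filters out more of the random noise but also loses more points at each end of the series.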

Time-series analysis for revealing trends

More advanced smoothing techniques are available in

statistical packages (such as SPSS, Stata, R)

These can estimate three parameters which are used to

generate an equation that best describes the time-series:

The parameter gamma (γ) determines how the trend is modelled

The parameter alpha (α) determines how much weighting should be assigned to recent and distant observations

The parameter delta (δ) models the seasonal component

Need to vary the parameters to see which provides the best fit against your data (i.e. the most accurate model)

For this you can use the Sum of Squared Errors (SSE): the lower the SSE, the closer the fit
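The idea of varying a parameter and comparing the SSE can be sketched with simple exponential smoothing. This is a deliberate simplification: it uses only the alpha weighting parameter and leaves out the trend and seasonal parameters. The function name `one_step_sse`, the candidate grid and the counts are assumptions for illustration.

```python
def one_step_sse(series, alpha):
    """Sum of squared one-step-ahead errors for simple exponential smoothing."""
    level = series[0]  # initialise the smoothed level at the first observation
    sse = 0.0
    for observed in series[1:]:
        error = observed - level  # the forecast for this period is the previous level
        sse += error ** 2
        level = level + alpha * error  # move the level towards the new observation
    return sse


# Hypothetical monthly crime counts (illustrative numbers only).
counts = [20, 22, 19, 24, 23, 21, 25, 24, 22, 26, 25, 23]

# Try a grid of alpha values and keep the one with the lowest SSE.
candidates = [round(0.1 * k, 1) for k in range(1, 10)]
sse_by_alpha = {alpha: one_step_sse(counts, alpha) for alpha in candidates}
best_alpha = min(sse_by_alpha, key=sse_by_alpha.get)
print(best_alpha, round(sse_by_alpha[best_alpha], 2))
```

A high alpha puts most of the weight on recent observations, while a low alpha spreads the weight over more distant ones.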

Time-series analysis for revealing trends

A sophisticated method for revealing trends is known as

seasonal decomposition

R screenshot

The power of visualisation

[Figure: seasonal decomposition plot with four panels (data, seasonal, trend, remainder) plotted against time, 2008 to 2011]
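A minimal sketch of how a series can be split into trend, seasonal and remainder parts. It uses classical additive decomposition by moving averages, which is not necessarily the routine behind the screenshot. The function name `decompose_additive` and the quarterly data are hypothetical.

```python
def decompose_additive(series, period):
    """Split `series` into trend, seasonal and remainder parts (additive model).

    Trend: centred moving average spanning one full seasonal cycle.
    Seasonal: mean detrended value for each position in the cycle, centred on zero.
    Remainder: what is left after removing the trend and seasonal parts.
    Positions where the trend cannot be computed hold None.
    """
    n = len(series)
    if n < 2 * period:
        raise ValueError("need at least two full seasonal cycles")
    half = period // 2
    trend = [None] * n
    for i in range(half, n - half):
        window = series[i - half:i + half + 1]
        total = sum(window)
        if period % 2 == 0:
            # Even period: give the two end points half weight each.
            total -= 0.5 * (window[0] + window[-1])
        trend[i] = total / period

    seasonal_means = []
    for pos in range(period):
        values = [series[i] - trend[i] for i in range(pos, n, period)
                  if trend[i] is not None]
        seasonal_means.append(sum(values) / len(values))
    centre = sum(seasonal_means) / period
    seasonal = [seasonal_means[i % period] - centre for i in range(n)]

    remainder = [series[i] - trend[i] - seasonal[i] if trend[i] is not None else None
                 for i in range(n)]
    return trend, seasonal, remainder


# Hypothetical quarterly series: a linear trend plus a repeating seasonal pattern.
pattern = [3.0, -1.0, -2.0, 0.0]
series = [10 + 0.5 * t + pattern[t % 4] for t in range(16)]
trend, seasonal, remainder = decompose_additive(series, period=4)
print(trend[2:6], seasonal[:4], remainder[2:6])
```

Because the example series contains no random noise, the remainder is zero wherever the trend is defined. With real data the remainder panel shows what the trend and seasonal parts leave unexplained.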

Time-series analysis for forecasting

Where crime rates follow regular patterns, this can be

useful for predicting future levels of crime

Forecasts are commonly produced so that the workload of

criminal justice agencies can be managed

The accuracy of forecasts is likely to be limited, given the

complexity of influencing factors

Predictions rely on the assumption that evident trends and

seasonal effects will continue (i.e. it is a stable pattern)

Short-term predictions will be more reliable than long-term predictions

Time-series analysis for forecasting

You could use a moving-average model to forecast future

patterns

Commonly you would ascertain the value of doing this with data

you already have (i.e. can use as a test against the forecast scores)

The value would be derived from comparing the error scores

between the predicted data points and the real data points

If the model is sufficiently accurate you could then use it to make a

forecast. However, if it is not very accurate, it would be unwise to

use the model.

As you know, more sophisticated models exist (e.g. using gamma (γ), alpha (α) and delta (δ))
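Testing a moving-average forecast against data you already have can be sketched as follows. The last few observations are held back, each is forecast from the data before it, and the error scores are summarised. The function names, the window of 3 and the counts are assumptions for illustration.

```python
def moving_average_forecast(history, window):
    """Forecast the next value as the mean of the last `window` observations."""
    return sum(history[-window:]) / window


def holdout_errors(series, window, n_test):
    """One-step-ahead forecast errors over the last `n_test` observations."""
    errors = []
    for t in range(len(series) - n_test, len(series)):
        forecast = moving_average_forecast(series[:t], window)
        errors.append(series[t] - forecast)  # real data point minus predicted one
    return errors


# Hypothetical monthly crime counts (illustrative numbers only).
counts = [20, 22, 19, 24, 23, 21, 25, 24, 22, 26, 25, 23]
errors = holdout_errors(counts, window=3, n_test=4)
mae = sum(abs(e) for e in errors) / len(errors)  # mean absolute error
print([round(e, 2) for e in errors], mae)
```

Whether an average error of this size is accurate enough depends on the decision the forecast is meant to support.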

Time-series analysis for evaluating interventions

               Pre-test           Treatment applied   Post-test

Experimental   O1 O2 O3 O4 ...    X                   O6 O7 O8 O9 ...

Control        O1 O2 O3 O4 ...                        O6 O7 O8 O9 ...

Evaluation in the real world

[Figure: line chart of Number of Referrals (y-axis, 0 to 30) over time, x-axis labelled quarterly from Jan-03 to Apr-08]

Before-after design?

[Figure: line chart of Number of Referrals (y-axis, 0 to 30) over time, x-axis labelled quarterly from Jan-03 to Apr-08, annotated with the two averages below]

Average crime rate, 12 months pre-test (Jan-06 to Dec-06): 18.3

Average crime rate, 12 months post-test (Apr-07 to Mar-08): 20.2

Why can't we just use a before-after design?

It might be tempting to use a t-test to assess whether there

was any statistical evidence of a change in group means

HOWEVER one of the assumptions of a t-test is that the groups

consist of independent observations with equal variances

This is violated by time-series data due to the temporal dependency

(known as autocorrelation)

For this reason we need to use a method known as

autoregression (AR) which accounts for the autocorrelation

ARIMA modelling

Auto-Regressive, Integrated, Moving-Average (p, d, q)

A simpler version of this is ARMA (sometimes called a Box-Jenkins model)

p: the auto-regressive element represents the lingering effects of previous scores

d: the integrated element represents the trends present in the data

q: the moving-average element represents the lingering effects of the preceding random shocks

ARIMA modelling

First, you have to perform some preliminary analyses

before you fit an ARIMA model to your data

These steps can be summarised as:

1. Identification

2. Estimation

3. Diagnosis

Identification and estimation of a time-series is the process

of finding the integer values of p, d, and q.

These are typically small (0, 1, 2)

When these values are 0, the element is not needed in the model

Identification of a time-series - transformation

The middle element of (p, d, q) is investigated first

The goal is to determine if the data are stationary

Stationary refers to a constant mean and variance

If the mean is changing (i.e. the trend is going up or down), the

trend can be removed by differencing

If the variability is changing, the data may be made stationary by

logarithmic transformation (the LOG formula in Excel)

You need to plot it again to ascertain if the variance has stabilised

after transformation

Once you are happy that the mean and variance are as

constant as possible you can assign d a value

If d = 0 the data are stationary and there is no trend;

If d = 1 the data need to be differenced once so that the linear trend is removed;

If d = 2 the data need to be differenced twice so that the linear and quadratic trends are removed.
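A minimal sketch of differencing, showing why one pass removes a linear trend and two passes remove a quadratic one. The function name `difference` and both example series are made up for illustration.

```python
def difference(series, d=1):
    """Apply first differencing `d` times (each pass shortens the series by one)."""
    result = list(series)
    for _ in range(d):
        # Replace each value with its change from the previous value.
        result = [later - earlier for earlier, later in zip(result, result[1:])]
    return result


linear = [5 + 2 * t for t in range(8)]         # steady upward trend
quadratic = [1 + t + t * t for t in range(8)]  # trend that accelerates

print(difference(linear, d=1))     # constant: the linear trend has gone
print(difference(quadratic, d=1))  # still rising after one difference
print(difference(quadratic, d=2))  # constant after two differences
```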

Identification of a time-series - autocorrelation

The values of p and q (p, d, q) are investigated second

p relates to autoregressive components, q relates to

moving-average components

Usually a model has either an autoregressive component

OR a moving-average component, but very occasionally, a

series has both

How do we tell? We look at autocorrelation patterns

Assessing autocorrelation patterns

You can use a correlogram to assess whether autocorrelations are present in the time-series data

This is an image of correlation statistics

It is commonly used for checking randomness in a data set

Measuring autocorrelation

If adjacent observations are highly correlated, then so too

will those that are - say - two or three observations apart

We therefore need to consider the lag of the autocorrelation we

are measuring

To control for this we use an autocorrelation function (ACF)

in conjunction with a partial autocorrelation function

(PACF)

ACFs and PACFs

An autocorrelation function (ACF) shows the serial correlation coefficients for consecutive lags

A partial autocorrelation function (PACF) partials out the immediate autocorrelations and estimates the autocorrelation at a specific lag (e.g. 2 lags)
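A minimal sketch of how the two functions can be computed. The ACF uses the usual sample formula, and the PACF is obtained from the ACF with the Durbin-Levinson recursion, which is one standard way of partialling out the shorter lags. Statistical packages may use other estimators. The function names and the counts are hypothetical.

```python
def acf(series, max_lag):
    """Sample autocorrelation coefficients for lags 0..max_lag."""
    n = len(series)
    mean = sum(series) / n
    deviations = [x - mean for x in series]
    denominator = sum(d * d for d in deviations)
    return [
        sum(deviations[t] * deviations[t - lag] for t in range(lag, n)) / denominator
        for lag in range(max_lag + 1)
    ]


def pacf_from_acf(rho):
    """Partial autocorrelations for lags 1..len(rho)-1 (Durbin-Levinson recursion).

    `rho` is a list of autocorrelations starting at lag 0, so rho[0] is 1.
    """
    partial = []
    phi_prev = []  # coefficients of the autoregression fitted at the previous lag
    for k in range(1, len(rho)):
        numerator = rho[k] - sum(phi_prev[j] * rho[k - 1 - j] for j in range(k - 1))
        denominator = 1.0 - sum(phi_prev[j] * rho[j + 1] for j in range(k - 1))
        phi_kk = numerator / denominator
        phi_prev = [phi_prev[j] - phi_kk * phi_prev[k - 2 - j]
                    for j in range(k - 1)] + [phi_kk]
        partial.append(phi_kk)
    return partial


# Hypothetical monthly crime counts (illustrative numbers only).
counts = [20, 22, 19, 24, 23, 21, 25, 24, 22, 26, 25, 23]
r = acf(counts, max_lag=4)
partial = pacf_from_acf(r)
print([round(v, 2) for v in r[1:]])
print([round(v, 2) for v in partial])
```

At lag 1 the two functions give the same value, because there are no shorter lags to partial out.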

Estimating a time-series model

Models are estimated through patterns in their ACFs and

PACFs

Auto-regressive

Moving-average

Estimating a time-series model

AR: a first-order autoregressive process (p)

Large value for lag 1, with the ACF decaying over greater lags and

the PACF only being significant for lag 1

When p = 0 there is no relationship between adjacent observations

When p = 1 there is a relationship between observations at lag 1

When p = 2 there is a relationship between observations at lag 2

MA: a moving-average process (q)

Large value for lag 1, and the ACF will only be significant for this

lag whilst the PACF decays over greater lags

When q = 0 there are no moving average components

When q = 1 there is a relationship between the current score and the random

shock at lag 1

When q = 2 there is a relationship between the current score and the random

shock at lag 2
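The two signatures described above can be checked against the textbook theoretical values for first-order processes. This sketch assumes an AR(1) process x_t = phi * x_(t-1) + e_t and an MA(1) process x_t = e_t + theta * e_(t-1), both with coefficients smaller than 1 in absolute value. The function names and the value 0.6 are illustrative choices.

```python
def ar1_signature(phi, max_lag):
    """Theoretical ACF and PACF (lags 1..max_lag) of an AR(1) process."""
    acf_values = [phi ** k for k in range(1, max_lag + 1)]  # decays geometrically
    pacf_values = [phi if k == 1 else 0.0 for k in range(1, max_lag + 1)]  # cuts off
    return acf_values, pacf_values


def ma1_signature(theta, max_lag):
    """Theoretical ACF and PACF (lags 1..max_lag) of an MA(1) process."""
    rho1 = theta / (1 + theta ** 2)
    acf_values = [rho1 if k == 1 else 0.0 for k in range(1, max_lag + 1)]  # cuts off
    pacf_values = [
        -((-theta) ** k) * (1 - theta ** 2) / (1 - theta ** (2 * (k + 1)))
        for k in range(1, max_lag + 1)
    ]  # shrinks towards zero as the lag grows
    return acf_values, pacf_values


ar_acf, ar_pacf = ar1_signature(0.6, max_lag=5)
ma_acf, ma_pacf = ma1_signature(0.6, max_lag=5)
print("AR(1) ACF ", [round(v, 3) for v in ar_acf])
print("AR(1) PACF", [round(v, 3) for v in ar_pacf])
print("MA(1) ACF ", [round(v, 3) for v in ma_acf])
print("MA(1) PACF", [round(v, 3) for v in ma_pacf])
```

The two patterns mirror each other: for AR(1) the ACF decays and the PACF cuts off after lag 1, and for MA(1) it is the other way round. Sample ACFs and PACFs from real data will only approximate these shapes.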

Time to run a model or ten

* Stata: fit an ARIMA(1,0,0) model to the series count_4_10; nolog suppresses the iteration log
arima count_4_10, arima(1,0,0) nolog

Diagnosing a model

This involves assessing to see how well your model fits

your data

E.g. Are the values of the observations predicted from the model

close to actual ones?

We assess this through examining the residuals (differences

between actual & predicted values)

If the model is good, the residuals

will be a series of random errors

We assess this through ACFs and

PACFs
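The diagnosis step can be sketched by fitting a first-order autoregressive model and checking whether the residuals still show autocorrelation. The model is fitted by ordinary least squares on the lagged series, which is a simplification and not the estimator a statistical package would normally use. The series is simulated, and the seed, coefficient and function names are assumptions.

```python
import random


def fit_ar1(series):
    """Estimate the constant and lag-1 coefficient of an AR(1) model by least squares."""
    previous = series[:-1]
    current = series[1:]
    mean_prev = sum(previous) / len(previous)
    mean_curr = sum(current) / len(current)
    sxy = sum((a - mean_prev) * (b - mean_curr) for a, b in zip(previous, current))
    sxx = sum((a - mean_prev) ** 2 for a in previous)
    phi = sxy / sxx
    constant = mean_curr - phi * mean_prev
    return constant, phi


def lag1_autocorrelation(values):
    """Sample autocorrelation at lag 1."""
    mean = sum(values) / len(values)
    deviations = [v - mean for v in values]
    return (sum(a * b for a, b in zip(deviations, deviations[1:]))
            / sum(d * d for d in deviations))


# Simulate an AR(1) series with a known coefficient (hypothetical data).
rng = random.Random(42)
true_phi = 0.6
series = [0.0]
for _ in range(999):
    series.append(true_phi * series[-1] + rng.gauss(0, 1))

constant, phi = fit_ar1(series)
# Residuals: actual values minus the values predicted by the fitted model.
residuals = [series[t] - (constant + phi * series[t - 1])
             for t in range(1, len(series))]

print("estimated phi:", round(phi, 2))
print("lag-1 autocorrelation of the series:", round(lag1_autocorrelation(series), 2))
print("lag-1 autocorrelation of the residuals:", round(lag1_autocorrelation(residuals), 2))
```

If the model fits well, the residual autocorrelation should be close to zero even though the original series is clearly autocorrelated. In practice you would inspect the full ACF and PACF of the residuals, not just lag 1.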

ARMAX?

Simply an ARIMA model with independent variables (IVs)

Can dummy code an intervention variable

So that 0 = pre-intervention, 1 = post-intervention

Or can use other IVs

E.g. number of police officers, number of ex-prisoners released

Need to identify, estimate and diagnose your model based

on pre-intervention data before adding the IVs
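Dummy coding an intervention variable can be sketched as below. The function name `intervention_dummy`, the months and the January 2007 start date are hypothetical.

```python
def intervention_dummy(periods, start):
    """Return 0 for periods before `start` and 1 from `start` onwards."""
    return [1 if period >= start else 0 for period in periods]


# Hypothetical monthly periods as (year, month) tuples, which compare in date order.
months = [(2006, m) for m in range(10, 13)] + [(2007, m) for m in range(1, 4)]
dummy = intervention_dummy(months, start=(2007, 1))
print(list(zip(months, dummy)))
```

The resulting 0/1 column would then be supplied to the model as an independent variable alongside the time-series terms.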

Add in your IV to the model

In the output, look at the model fit, the beta coefficient and the p value

Then diagnose once more

Types of questions you may want to answer

What kind of trend do I have going on?

Do these trends differ by area?

Are there distinct seasonal patterns in my data?

Are they stable enough to be able to predict the next month,

quarter, year?

What has been the impact of an intervention?

Are the before and after trends different?

What relationship does an IV (e.g. number of police officers on the beat) have with my DV (e.g. count of crime)?

The value of using time-series analysis

Determining whether crime patterns vary in specific ways

over time has implications for our understanding of the

problem

Time-series analysis helps to reveal the patterns and to establish

the statistical significance of them

Predictable patterns create opportunities for crime

prevention and detection

Deployment of policing resources

Designing crime prevention initiatives

Where to go for more information

Some further reading

Campbell, D.T. & Stanley, J.C. (1963). Experimental and Quasi-Experimental Designs for Research. Boston: Houghton Mifflin Company.

Shadish, W.R., Cook, T.D. & Campbell, D.T. (2002). Experimental and Quasi-Experimental Designs for Generalized Causal Inference. Boston: Houghton Mifflin Company.

Tabachnick, B. & Fidell, L. (2007). Using Multivariate Statistics. 5th ed. Boston: Allyn and Bacon.

Chapter 17

Thank you

Lisa Tompson

Research Associate

UCL Jill Dando Institute of Crime Science

l.tompson@ucl.ac.uk

Das könnte Ihnen auch gefallen

- Univariate Time SeriesDokument83 SeitenUnivariate Time SeriesShashank Gupta100% (1)

- Univariate Time SeriesDokument83 SeitenUnivariate Time SeriesVishnuChaithanyaNoch keine Bewertungen

- Time Series Analysis BookDokument202 SeitenTime Series Analysis Bookmohamedmohy37173Noch keine Bewertungen

- A Comprehensive Guide To Time Series AnalysisDokument26 SeitenA Comprehensive Guide To Time Series AnalysisMartin KupreNoch keine Bewertungen

- Datamining and Analytics Unit VDokument102 SeitenDatamining and Analytics Unit VAbinaya CNoch keine Bewertungen

- JML ArimaDokument37 SeitenJML Arimapg aiNoch keine Bewertungen

- Unit 7 - Forecasting and Time Series - Advanced TopicsDokument54 SeitenUnit 7 - Forecasting and Time Series - Advanced TopicsRajdeep SinghNoch keine Bewertungen

- Time Series AnalysisDokument36 SeitenTime Series AnalysisVinayakaNoch keine Bewertungen

- ARIMA ModelDokument30 SeitenARIMA ModelAmado SaavedraNoch keine Bewertungen

- Arima ModelDokument30 SeitenArima ModelSarbarup BanerjeeNoch keine Bewertungen

- Lecture 4Dokument21 SeitenLecture 4Dimpho Sonjani-SibiyaNoch keine Bewertungen

- Time Series ForecastingDokument29 SeitenTime Series ForecastingKhyati ChhabraNoch keine Bewertungen

- ARIMA Modelling and ForecastingDokument30 SeitenARIMA Modelling and ForecastingRaymond SmithNoch keine Bewertungen

- Time Series AnalysisDokument15 SeitenTime Series Analysisseenubarman12Noch keine Bewertungen

- Time Series Analysis NotesDokument14 SeitenTime Series Analysis NotesRISHA SHETTYNoch keine Bewertungen

- Time Series Modeling: Shouvik Mani April 5, 2018Dokument46 SeitenTime Series Modeling: Shouvik Mani April 5, 2018Salvador RamirezNoch keine Bewertungen

- Quantitative Chapter10Dokument27 SeitenQuantitative Chapter10iceineagNoch keine Bewertungen

- Business Forecasting Using RDokument32 SeitenBusiness Forecasting Using RshanNoch keine Bewertungen

- Statistical Fundamentals: Prepared By: Kath Lea Busalanan Glorietta MontanezDokument11 SeitenStatistical Fundamentals: Prepared By: Kath Lea Busalanan Glorietta MontanezKath BusalananNoch keine Bewertungen

- Box JerkinDokument7 SeitenBox Jerkinyash523Noch keine Bewertungen

- Financial EconometricsDokument16 SeitenFinancial EconometricsVivekNoch keine Bewertungen

- Box-Jenkins Methodology Forecasting BasicsDokument11 SeitenBox-Jenkins Methodology Forecasting BasicsAdil Bin KhalidNoch keine Bewertungen

- Topic 4-StudentDokument54 SeitenTopic 4-StudentAqim AzamNoch keine Bewertungen

- Rocess Ontrol Tatistical: C C I P P S E EDokument71 SeitenRocess Ontrol Tatistical: C C I P P S E EalwaleedrNoch keine Bewertungen

- Lecture 3 ForecastingDokument31 SeitenLecture 3 ForecastingsanaullahbndgNoch keine Bewertungen

- Ch07 - ForecastDokument20 SeitenCh07 - ForecastAya_NoahNoch keine Bewertungen

- Lecture Notes (1) : - Definition of Financial EconometricsDokument21 SeitenLecture Notes (1) : - Definition of Financial Econometricsachal_premiNoch keine Bewertungen

- Ai - Digital Assignmen1Dokument11 SeitenAi - Digital Assignmen1SAMMY KHANNoch keine Bewertungen

- Time Series AnalysisDokument10 SeitenTime Series AnalysisJayson VillezaNoch keine Bewertungen

- A Comprehensive Guide To Time Series AnalysisDokument18 SeitenA Comprehensive Guide To Time Series AnalysisAdriano Marcos Rodrigues FigueiredoNoch keine Bewertungen

- SPCDokument62 SeitenSPCRajesh DwivediNoch keine Bewertungen

- ArimaDokument65 SeitenArimarkarthik403Noch keine Bewertungen

- Time Series AnalysisDokument3 SeitenTime Series AnalysisSamarth VäìşhNoch keine Bewertungen

- Time Series AnalysisDokument3 SeitenTime Series AnalysisSamarth VäìşhNoch keine Bewertungen

- Time Series AnalysisDokument3 SeitenTime Series AnalysisSamarth VäìşhNoch keine Bewertungen

- Forecasting MethodsDokument26 SeitenForecasting MethodsPurvaNoch keine Bewertungen

- Unit 5Dokument60 SeitenUnit 5ShubhadaNoch keine Bewertungen

- Time SeriesDokument40 SeitenTime Seriesvijay baraiNoch keine Bewertungen

- Box JenkinsDokument53 SeitenBox JenkinsalexanderkyasNoch keine Bewertungen

- TOD 212-PPT 2 For Students - Monsoon 2023Dokument26 SeitenTOD 212-PPT 2 For Students - Monsoon 2023dhyani.sNoch keine Bewertungen

- End Term Project (BA)Dokument19 SeitenEnd Term Project (BA)atmagaragNoch keine Bewertungen

- Exploratory Data AnalysisDokument14 SeitenExploratory Data AnalysisGagana U KumarNoch keine Bewertungen

- Statistical Process ControlDokument79 SeitenStatistical Process ControlKrunal PandyaNoch keine Bewertungen

- 2 Forecasting Techniques Time Series Regression AnalysisDokument47 Seiten2 Forecasting Techniques Time Series Regression Analysissandaru malindaNoch keine Bewertungen

- Forecasting Is The Process of Making Statements About Events Whose Actual OutcomesDokument4 SeitenForecasting Is The Process of Making Statements About Events Whose Actual OutcomesshashiNoch keine Bewertungen

- Six Sigma Green Belt Exam Study NotesDokument11 SeitenSix Sigma Green Belt Exam Study NotesKumaran VelNoch keine Bewertungen

- Timeseries - AnalysisDokument37 SeitenTimeseries - AnalysisGrace YinNoch keine Bewertungen

- Arima: Autoregressive Integrated Moving AverageDokument32 SeitenArima: Autoregressive Integrated Moving AverageJoshy_29Noch keine Bewertungen

- Time SeriesDokument91 SeitenTime SeriesRajachandra VoodigaNoch keine Bewertungen

- Time SeriesDokument61 SeitenTime Serieskomal kashyapNoch keine Bewertungen

- Time Series Analysis of Air Duality DataDokument7 SeitenTime Series Analysis of Air Duality DataECRD100% (1)

- Module 5 PDFDokument23 SeitenModule 5 PDFJha JeeNoch keine Bewertungen

- Statistical Quality Control: Simple Applications of Statistics in TQMDokument57 SeitenStatistical Quality Control: Simple Applications of Statistics in TQMHarpreet Singh PanesarNoch keine Bewertungen

- Statistical QCDokument57 SeitenStatistical QCJigar NagvadiaNoch keine Bewertungen

- Six Sigma Green Belt Exam Study NotesDokument12 SeitenSix Sigma Green Belt Exam Study Notessys-eng90% (48)

- Time Series with Python: How to Implement Time Series Analysis and Forecasting Using PythonVon EverandTime Series with Python: How to Implement Time Series Analysis and Forecasting Using PythonBewertung: 3 von 5 Sternen3/5 (1)

- Time Series Analysis in the Social Sciences: The FundamentalsVon EverandTime Series Analysis in the Social Sciences: The FundamentalsNoch keine Bewertungen

- Elliott Wave Timing Beyond Ordinary Fibonacci MethodsVon EverandElliott Wave Timing Beyond Ordinary Fibonacci MethodsBewertung: 4 von 5 Sternen4/5 (21)

- Site Data & Test Report-Node B New SiteDokument47 SeitenSite Data & Test Report-Node B New SiteChika AlbertNoch keine Bewertungen

- Mobile Handset Cellular NetworkDokument64 SeitenMobile Handset Cellular NetworkSandeep GoyalNoch keine Bewertungen

- Computer Village Pre-Drive Test Report For AIRTEL Network: Date: 30-08-2018Dokument39 SeitenComputer Village Pre-Drive Test Report For AIRTEL Network: Date: 30-08-2018Chika AlbertNoch keine Bewertungen

- Cluster Drive Report: Port-Harcourt - ObigboDokument50 SeitenCluster Drive Report: Port-Harcourt - ObigboChika AlbertNoch keine Bewertungen

- Cluster Drive Report: Port-Harcourt - ObigboDokument50 SeitenCluster Drive Report: Port-Harcourt - ObigboChika AlbertNoch keine Bewertungen

- About ModelingDokument5 SeitenAbout ModelingChika AlbertNoch keine Bewertungen

- Cluster Drive Report: Port-Harcourt - ObigboDokument50 SeitenCluster Drive Report: Port-Harcourt - ObigboChika AlbertNoch keine Bewertungen

- Final R5 Data Report TemplateDokument43 SeitenFinal R5 Data Report TemplateChika AlbertNoch keine Bewertungen

- Lg9114 - 2g SSV ReportDokument24 SeitenLg9114 - 2g SSV ReportChika AlbertNoch keine Bewertungen

- Case A α AGCH overload at the BTS β: Technology Cases Causes Cause Code Solution CodeDokument8 SeitenCase A α AGCH overload at the BTS β: Technology Cases Causes Cause Code Solution CodeChika AlbertNoch keine Bewertungen

- Table View DataDokument32 SeitenTable View DataChika AlbertNoch keine Bewertungen

- The Inexhaustible GodDokument6 SeitenThe Inexhaustible GodChika AlbertNoch keine Bewertungen

- 2G C11&C26 - 45 Sites - 2.25Dokument12 Seiten2G C11&C26 - 45 Sites - 2.25Chika AlbertNoch keine Bewertungen

- 2G C11&C26 - 45 Sites - 2.25Dokument12 Seiten2G C11&C26 - 45 Sites - 2.25Chika AlbertNoch keine Bewertungen

- Gariki Cluster Pre - Post Report Poor StretchesDokument14 SeitenGariki Cluster Pre - Post Report Poor StretchesChika AlbertNoch keine Bewertungen

- Abuja Physical Optimization Audit ReportDokument32 SeitenAbuja Physical Optimization Audit ReportChika AlbertNoch keine Bewertungen

- Allocated SiteDokument18 SeitenAllocated SiteChika AlbertNoch keine Bewertungen

- GIM 11 5 Kaufman 200498 SDC1Dokument11 SeitenGIM 11 5 Kaufman 200498 SDC1jawadg123Noch keine Bewertungen

- Ss 2 TFDokument10 SeitenSs 2 TFChika AlbertNoch keine Bewertungen

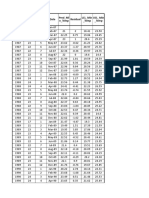

- Months Month Date Residual Min - Te MP Pred - Mi N - Temp LCL - Min - Temp UCL - Min - TempDokument13 SeitenMonths Month Date Residual Min - Te MP Pred - Mi N - Temp LCL - Min - Temp UCL - Min - TempChika AlbertNoch keine Bewertungen

- Eni Office To Md's Residence Tss - Los - Summary ReportDokument13 SeitenEni Office To Md's Residence Tss - Los - Summary ReportChika AlbertNoch keine Bewertungen

- Abuja Physical Optimization Audit ReportDokument32 SeitenAbuja Physical Optimization Audit ReportChika AlbertNoch keine Bewertungen

- Eni Office To Md's Residence Tss - Los - Summary ReportDokument13 SeitenEni Office To Md's Residence Tss - Los - Summary ReportChika AlbertNoch keine Bewertungen

- Eni Office To Villagio Tss - Los - Summary ReportDokument13 SeitenEni Office To Villagio Tss - Los - Summary ReportChika AlbertNoch keine Bewertungen

- PS PS Attach Detach MS1Dokument6 SeitenPS PS Attach Detach MS1Chika AlbertNoch keine Bewertungen

- Eni Office To Md's Residence Tss - Los - Summary ReportDokument13 SeitenEni Office To Md's Residence Tss - Los - Summary ReportChika AlbertNoch keine Bewertungen

- S/N Site ID Site Address Site Cordinate: No3, Iweanya Ugbogoh Crecent, Lekki Phase1Dokument9 SeitenS/N Site ID Site Address Site Cordinate: No3, Iweanya Ugbogoh Crecent, Lekki Phase1Chika AlbertNoch keine Bewertungen

- All Links TSS - LOS - Summary ReportDokument14 SeitenAll Links TSS - LOS - Summary ReportChika AlbertNoch keine Bewertungen

- PS Attach Setup Time (MS)Dokument2 SeitenPS Attach Setup Time (MS)Chika AlbertNoch keine Bewertungen

- PS PS Attach Detach MS1Dokument6 SeitenPS PS Attach Detach MS1Chika AlbertNoch keine Bewertungen