
Wavelet Packet Analysis for Reducing the Effect of Spectral Masking to Improve Auditory Perception

P. A. Dhulekar#1, S. L. Nalbalwar#2, J. J. Chopade*3

#Department of E & TC, Dr. B. A. Tech. University, Lonere 402103, Maharashtra, India

*Department of E & TC, SNJB's LSKBJ COE, Chandwad, Maharashtra, India

1 pravindhulekar@gmail.com

2 slnalbalwar@dbatu.ac.in

3 jchopade@yahoo.com

Abstract: A person with sensorineural hearing impairment experiences degraded frequency selectivity of the ear due to increased spectral masking along the cochlear partition, which results in degraded speech perception. Splitting the speech signal by means of filtering and downsampling at each decomposition level, using wavelet packets with different wavelet functions, helps to reduce the effect of spectral masking and thereby improves speech intelligibility. The experiment was carried out with vowel-consonant-vowel syllables for fifteen English consonants, which were decomposed into eight frequency bands with the desired band selection. Dichotic presentation of the processed speech signal showed improved speech quality and recognition scores, and reduced response time, in the reception of the speech features of voicing, place, and manner, indicating the usefulness of the scheme for better reception of spectral characteristics.

Keywords: Sensorineural hearing impairment, Frequency selectivity, Spectral masking, Wavelet packet, Dichotic presentation.

I. INTRODUCTION

The cochlea, or inner ear, is a snail-shaped, spiral cavity filled with fluid. Fig. 1 shows the structure of the auditory system. The start of the cochlea, where the oval window is located, is known as the basal end, and its other end is known as the apical end [1]. The cochlea is divided along its length into three chambers: scala vestibuli, scala media, and scala tympani. Scala vestibuli and scala media are separated by a thin membrane called Reissner's membrane, and scala media is separated from scala tympani by the basilar membrane [1]. An incoming sound applies pressure differences at the tympanic membrane and sets the oval window in motion, resulting in cochlear fluid movement that moves the basilar membrane up and down. The resulting vibration depends on the frequency of the input signal and on the mechanical properties of the basilar membrane, which vary significantly from base to apex. The basilar membrane is comparatively stiff and narrow at the basal end, and less stiff and wider at the apex. High-frequency signals produce maximum deflection at the base, while low-frequency signals produce maximum deflection at the apex [2]. Fig. 2 shows the vibration of the basilar membrane for high, medium, and low frequencies. The organ of Corti is located between the basilar membrane and the tectorial membrane and contains sensory hair cells. There are about 3,000 to 3,500 inner hair cells, each with about 40 hairs, and 20,000 to 25,000 outer hair cells, each with about 140 hairs. The tectorial membrane is situated above the hairs.

Information about sounds is conveyed via the inner hair cells, which stimulate the afferent neurons. The outer hair cells enhance the basilar membrane responses, producing high sensitivity and sharp tuning (frequency selectivity) of the basilar membrane [2].

Fig. 1 Structure of the auditory system. Adapted from Moore (1997), Fig. 1-7. (Labels: outer ear, middle ear, inner ear; pinna; external canal; eardrum (tympanic membrane); ossicles: malleus, incus, stapes; oval window; round window; vestibular apparatus with semicircular canals; vestibular nerve; cochlear nerve; cochlea; Eustachian tube; nasal cavity.)

Fig. 2 Amplitude patterns of vibration of basilar membrane for different frequencies. (Axes: relative amplitude versus distance from stapes in mm, 10 to 35; curves for frequencies from 200 to 8000.)

Impairment due to a defect in the cochlea is known as cochlear (sensory) impairment. Sensorineural hearing impairment is caused by exposure to intense sound, congenital defects leading to loss of cochlear hair cells, or damage to auditory neurons. The audiograms of sensorineural impairment show typical shapes depending on the pathology, such as high-frequency hearing impairment or elevated thresholds at low frequencies. The impairment may be moderate to severe bilateral sensorineural hearing impairment, bilateral profound deafness, or unilateral very severe or profound deafness. Apart from the elevated thresholds, sensorineural hearing impairment is characterized by loudness recruitment, reduced frequency selectivity and temporal resolution, and increased spectral and temporal masking [3], [4].

Spectral masking, or simultaneous masking, is masking between two concurrent sounds. It is the frequency-domain counterpart of temporal masking and tends to occur between sounds with similar frequencies: a powerful spike at 1 kHz will tend to mask a lower-level tone at 1.1 kHz. It is sometimes called frequency masking, since it is often observed when the sounds share a frequency band; for example, two sine tones at 440 Hz and 450 Hz can be perceived clearly when presented separately, but not when presented simultaneously. In masking, a sound is made inaudible by a "masker", a noise or unwanted sound of the same duration as the original sound [5], [6].

The discrete wavelet transform (DWT) divides the signal spectrum into frequency bands that are narrow at the lower frequencies and wide at the higher frequencies [7], [8]. This limits how wavelet coefficients in the upper half of the signal spectrum are classified [9]. Wavelet packets (WP) divide the signal spectrum into frequency bands that are evenly spaced and have equal bandwidth, and are explored here for use in identifying transient and quasi-steady-state speech. WP are efficient tools for speech analysis; they use two-band splitting of the input signal by means of filtering and downsampling at each decomposition level. Designing the WP filter bank involves choosing the decomposition tree and then selecting an appropriate wavelet filter for each decomposition level of the tree. Each decomposition level has a different time-frequency resolution.

The decomposition of the input signal, which consists of vowel-consonant-vowel syllables for fifteen English consonants, is carried out using Daubechies, Symlets, and Biorthogonal wavelets of different orders as a possible solution to the problem of spectral masking.

978-1-4244-8679-3/11/$26.00 ©2011 IEEE

II. MATERIALS AND METHODS

A. The Speech Material

Earlier studies have used CV, VC, CVC, and VCV syllables. It has been reported that greater masking takes place in intervocalic consonants due to the presence of vowels on both sides [10], [11]. Since our primary objective is to study the improvement in consonantal identification due to reduction in the effect of masking, VCV syllables are used.

For the evaluation of the speech processing strategies, a set of fifteen nonsense syllables in VCV context with consonants / p, b, t, d, k, g, m, n, s, z, f, v, r, l, y / and vowel /a/ as in "farmer" was used. The features selected for study were voicing (voiced: / b d g m n z v r l y / and unvoiced: / p t k s f /), place (front: / p b m f v /, middle: / t d n s z r l /, and back: / k g y /), manner (oral stop: / p b t d k g l y /, fricative: / s z f v r /, and nasal: / m n /), nasality (oral: / p b t d k g s z f v r l y / and nasal: / m n /), frication (stop: / p b t d k g m n l y / and fricative: / s z f v r /), and duration (short: / p b t d k g m n f v l / and long: / s z r y /).

B. The Speech Processing Strategies

For many signals, the low-frequency content is the most important part. It is what gives the signal its identity. The high-frequency content, on the other hand, imparts flavor or nuance. Consider the human voice. If you remove the high-frequency components, the voice sounds different, but you can still tell what is being said. However, if you remove enough of the low-frequency components, you hear gibberish.

In the basic filtering process, the original signal passes through two complementary filters and emerges as two signals, the approximation A and the detail D. Unfortunately, if we actually perform this operation on a real digital signal, we wind up with twice as much data as we started with. Suppose, for instance, that the original signal consists of 1000 samples of data. Then the resulting signals A and D will each have 1000 samples, for a total of 2000 values instead of the original 1000.

C. Multiple Level Decomposition by DWT

There exists a more subtle way to perform the decomposition using wavelets. By looking carefully at the computation, we may keep only one point out of two in each of the two 1000-sample signals and still retain the complete information. This is the notion of downsampling. The two downsampled sequences, called cA and cD, each about 500 values long, are the DWT coefficients [8].

The decomposition process can be iterated, with successive approximations being decomposed in turn, so that one signal is broken down into many lower-resolution components. This is called the wavelet decomposition tree. Fig. 3 shows multilevel decomposition by DWT up to level 3.

Fig. 3 Multilevel Decomposition by DWT up to Level 3
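The sample counts in the filtering and downsampling description of Sections II.B and II.C can be checked with a minimal sketch. It assumes the Haar wavelet as the simplest two-band filter pair (the study itself used Daubechies, Symlets, and Biorthogonal wavelets), a synthetic sine wave in place of speech, and illustrative function names (haar_filter, haar_dwt, haar_wavedec) that are not from the paper.

```python
import math

def haar_filter(signal):
    """Two-band Haar filtering WITHOUT downsampling: A and D are as long as the input."""
    s = 1 / math.sqrt(2)
    padded = list(signal) + [signal[-1]]  # repeat the last sample so both outputs keep full length
    a = [s * (padded[i] + padded[i + 1]) for i in range(len(signal))]
    d = [s * (padded[i] - padded[i + 1]) for i in range(len(signal))]
    return a, d

def haar_dwt(signal):
    """One DWT level: filter, then keep one output sample out of two."""
    a, d = haar_filter(signal)
    return a[::2], d[::2]

def haar_wavedec(signal, level):
    """Multilevel DWT: only the approximation is split again at each level."""
    coeffs = []
    approx = list(signal)
    for _ in range(level):
        approx, detail = haar_dwt(approx)
        coeffs.insert(0, detail)
    coeffs.insert(0, approx)
    return coeffs  # [cA_level, cD_level, ..., cD_1]

# Synthetic 1000-sample test signal (an assumption; stands in for a speech segment)
x = [math.sin(2 * math.pi * 5 * n / 1000) for n in range(1000)]

A, D = haar_filter(x)      # 1000 + 1000 values
cA, cD = haar_dwt(x)       # 500 + 500 values
lengths = [len(c) for c in haar_wavedec(x, 3)]

print(len(A) + len(D), len(cA) + len(cD), lengths)
# prints: 2000 1000 [125, 125, 250, 500]
```

With longer wavelet filters the coefficient sequences are typically slightly longer than half the input because of boundary handling, so the exact halving shown here is specific to the Haar case.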

D. Multiple Level Decomposition by Wavelet Packets

The wavelet packet method is a generalization of wavelet decomposition that offers a richer range of possibilities for signal analysis. In wavelet analysis, a signal is split into an approximation and a detail. The approximation is then itself split into a second-level approximation and detail, and the process is repeated. For n-level decomposition, there are n+1 possible ways to decompose or encode the signal.

The limitation of the DWT is that it splits only the approximations at each decomposition level. In wavelet packet analysis, the details as well as the approximations can be split, so approximation and detail coefficients are obtained for both the low-frequency and the high-frequency branches. This yields more than 2^(2^(n-1)) different ways to encode the signal [9]. Fig. 4 shows multilevel decomposition by wavelet packets up to level 3.
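The difference between the two decompositions can be illustrated with a small sketch that lists the nominal band edges at level 3 and counts the admissible wavelet packet trees. It assumes ideal half-band filters and a hypothetical sampling rate of 10 kHz (the paper does not state one); the function names are illustrative only.

```python
def dwt_bands(fs, level):
    """Nominal band edges (Hz) of a DWT: only the low band is split again."""
    bands, hi = [], fs / 2
    for _ in range(level):
        bands.insert(0, (hi / 2, hi))  # detail band produced at this level
        hi /= 2
    bands.insert(0, (0.0, hi))         # final approximation band
    return bands

def wp_bands(fs, level):
    """Nominal band edges (Hz) of a full wavelet packet tree: 2**level equal bands."""
    width = (fs / 2) / 2 ** level
    return [(k * width, (k + 1) * width) for k in range(2 ** level)]

def count_wp_trees(depth):
    """Number of distinct wavelet packet trees with at most `depth` levels."""
    count = 1  # depth 0: the signal is left unsplit
    for _ in range(depth):
        # either do not split, or split and let each child choose independently
        count = count * count + 1
    return count

FS = 10000  # hypothetical sampling rate in Hz (assumption)

print(dwt_bands(FS, 3))  # 4 bands, widening toward high frequencies
print(wp_bands(FS, 3))   # 8 bands of equal width
for n in range(1, 5):
    # levels, DWT choices (n + 1), wavelet packet trees, bound 2^(2^(n-1))
    print(n, n + 1, count_wp_trees(n), 2 ** (2 ** (n - 1)))
```

At level 3 the DWT gives four bands of unequal width, while the full wavelet packet tree gives the eight equal bands used in this study. For n = 3 the count is 26 trees, against the bound of 16.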

III. EXPERIMENTAL RESULTS

In this analysis, we decomposed fifteen nonsense syllables in VCV context, with consonants / p, b, t, d, k, g, m, n, s, z, f, v, r, l, y / and vowel /a/ as in "farmer", using wavelet packets with different wavelets such as Daubechies, Symlets, and Biorthogonal. Fig. 5 shows the multilevel decomposition of the VCV syllable /ada/ obtained with sym5, giving the approximation and detail coefficients. The decomposition tree with the data at a node for /ada/ using sym5 is shown in Fig. 6. The perfect reconstruction error is shown in Table I.

TABLE I

PERFECT RECONSTRUCTION ERROR

Discrete Wavelet Transform: 6.6877e-011

Wavelet Packet: 9.7225e-012

Fig. 4 Multilevel Decomposition by Wavelet Packets up to Level 3

Fig. 5 Decomposition of context /ada/ up to level 3 with Approximation coefficients and Detail coefficients using sym5
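A perfect reconstruction error of the kind reported in Table I can be measured as sketched below: decompose, reconstruct, and take the largest absolute difference from the original. The sketch assumes the Haar wavelet and a synthetic signal rather than sym5 and the /ada/ recording, so its numerical value will not match Table I. The helper names are illustrative.

```python
import math

S = 1 / math.sqrt(2)

def split(x):
    """Haar analysis step with downsampling (expects an even-length input)."""
    a = [S * (x[i] + x[i + 1]) for i in range(0, len(x), 2)]
    d = [S * (x[i] - x[i + 1]) for i in range(0, len(x), 2)]
    return a, d

def merge(a, d):
    """Haar synthesis step: exact inverse of split."""
    x = []
    for ai, di in zip(a, d):
        x.extend([S * (ai + di), S * (ai - di)])
    return x

def wp_decompose(x, level):
    """Full wavelet packet tree: split every node; return the 2**level leaves
    in natural (tree) order, which is not the same as frequency order."""
    nodes = [list(x)]
    for _ in range(level):
        nodes = [child for node in nodes for child in split(node)]
    return nodes

def wp_reconstruct(nodes):
    """Merge sibling leaves pairwise until only the root signal remains."""
    while len(nodes) > 1:
        nodes = [merge(nodes[i], nodes[i + 1]) for i in range(0, len(nodes), 2)]
    return nodes[0]

# Synthetic stand-in for a speech segment (assumption); length divisible by 2**3
x = [math.sin(0.05 * n) + 0.5 * math.sin(0.9 * n) for n in range(1024)]

leaves = wp_decompose(x, 3)
x_rec = wp_reconstruct(leaves)
error = max(abs(u - v) for u, v in zip(x, x_rec))

print(len(leaves), len(leaves[0]), error)
```

The error is limited only by floating-point rounding, which is the sense in which errors of the order reported in Table I indicate perfect reconstruction.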

Fig. 6 Decomposition tree with data for node of context /ada/ up to level 3 using sym5

To reduce the effect of increased spectral masking, speech processing schemes based on spectral splitting of the speech signal using comb filters with complementary pass bands, for binaural dichotic presentation, have been reported earlier. Splitting the speech signal and compressing the frequency bands is a possible way to reduce the effect of increased masking. In this study, a speech processing scheme using the discrete wavelet transform with different types of wavelets of different orders was investigated. It decomposes the speech signal into two signals for binaural dichotic presentation to the two ears, which may help in improving the perception of various consonantal features.

The speech signals were decomposed with the discrete wavelet transform at various levels to obtain low-frequency and high-frequency signal components. Each decomposition level has a different time-frequency resolution. Once the decomposition tree has been selected, the next step is to select an appropriate wavelet type, such as the Daubechies, Symlets, and Biorthogonal wavelet functions, depending on properties such as orthogonality and symmetry. This helps in reducing temporal masking and spectral masking simultaneously, thereby improving speech perception.

IV. DISCUSSION

Sensorineural hearing impairment is associated with increased masking. Increased spectral masking, associated with broad auditory filters, results in smearing of spectral peaks and valleys and leads to difficulty in the perception of consonantal features. Increased forward and backward temporal masking of weak acoustic segments by strong ones reduces the discrimination of voice-onset time, formant transitions, and burst duration, which are required for consonant identification. Thus, the overall effect of the two types of masking is a difficulty in discriminating consonants, resulting in relatively degraded speech perception by persons with sensorineural impairment.

Masking takes place primarily in the peripheral auditory system. In speech perception, the information received from both ears is integrated. Hence, splitting the speech signal into two complementary signals, such that signal components likely to mask each other are presented to different ears, can be used to reduce the effect of increased masking. This technique can be used for improving speech reception by persons with moderate bilateral sensorineural impairment, i.e., those with residual hearing in both ears.
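The idea of complementary splitting can be sketched as follows. The signal is decomposed into eight wavelet packet bands, and the bands are sent alternately to the left and right ear so that adjacent bands never share an ear. The alternating assignment is an assumption modeled on the complementary comb-filter schemes cited above; the text does not state the band selection actually used. The Haar wavelet and all function names are likewise illustrative.

```python
import math

S = 1 / math.sqrt(2)

def split(x):
    """Haar analysis step with downsampling (expects an even-length input)."""
    a = [S * (x[i] + x[i + 1]) for i in range(0, len(x), 2)]
    d = [S * (x[i] - x[i + 1]) for i in range(0, len(x), 2)]
    return a, d

def merge(a, d):
    """Haar synthesis step: exact inverse of split."""
    x = []
    for ai, di in zip(a, d):
        x.extend([S * (ai + di), S * (ai - di)])
    return x

def wp_leaves(x, level):
    """Leaves of the full wavelet packet tree, in natural (tree) order."""
    nodes = [list(x)]
    for _ in range(level):
        nodes = [child for node in nodes for child in split(node)]
    return nodes

def wp_rebuild(nodes):
    """Merge sibling leaves pairwise back into a single signal."""
    while len(nodes) > 1:
        nodes = [merge(nodes[i], nodes[i + 1]) for i in range(0, len(nodes), 2)]
    return nodes[0]

def dichotic_split(x, level=3):
    """Return (left, right): two complementary signals whose sum is x."""
    leaves = wp_leaves(x, level)
    left = [[0.0] * len(leaf) for leaf in leaves]
    right = [[0.0] * len(leaf) for leaf in leaves]
    for k in range(len(leaves)):   # k: band position in increasing frequency
        natural = k ^ (k >> 1)     # Gray code maps frequency order to tree order
        target = left if k % 2 == 0 else right
        target[natural] = leaves[natural]
    return wp_rebuild(left), wp_rebuild(right)

# Synthetic stand-in for a speech segment (assumption); length divisible by 8
x = [math.sin(0.1 * n) + 0.3 * math.sin(2.5 * n) for n in range(64)]
left, right = dichotic_split(x)
residual = max(abs(l + r - v) for l, r, v in zip(left, right, x))
print(residual)
```

Because the transform is linear and exactly invertible, the two ear signals add back up to the original signal, so no information is discarded; it is only distributed between the ears.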

This study shows that hearing-impaired subjects are able to perceptually integrate the dichotically presented speech signal. Processing schemes that split the speech signal in a complementary fashion, to reduce the effects of increased masking at the peripheral level, improved speech reception. The dichotic presentation also decreases the load on the perception process. For hearing-impaired subjects, the improvement in consonantal reception and the reduction in response time do not follow the same trend.

REFERENCES

[1] B. C. J. Moore, An Introduction to the Psychology of Hearing, 4th ed. London: Academic, 1997.

[2] B. C. J. Moore, "Speech processing for hearing impaired: successes, failures, and implications for speech mechanisms," Speech Communication, vol. 41, pp. 81-91, 2003.

[3] L. R. Rabiner and R. W. Schafer, Digital Processing of Speech Signals. Englewood Cliffs, NJ: Prentice Hall, 1978.

[4] T. Arai, K. Yasu, and N. Hodoshima, "Effective speech processing for various impaired listeners," in Proc. 18th International Congress on Acoustics (ICA), 2004, pp. 1389-1392.

[5] P. N. Kulkarni and P. C. Pandey, "Optimizing the comb filters for spectral splitting of speech to reduce the effect of spectral masking," in Proc. International Conference on Signal Processing, Communication and Networking (MIT, Chennai, India), pp. 69-73, Jan. 4-6, 2008.

[6] A. N. Cheeran and P. C. Pandey, "Evaluation of speech processing schemes using binaural dichotic presentation to reduce the effect of masking in hearing-impaired listeners," in Proc. 18th International Congress on Acoustics (ICA 2004, Kyoto, Japan), pp. 1523-1526, Apr. 4-9, 2004.

[7] I. Cheikhrouhou, R. B. Atitallah, K. Ouni, A. B. Hamida, N. Mamoudi, and N. Ellouze, "Speech analysis using wavelet transforms dedicated to cochlear prosthesis stimulation strategy," in 1st Intern. Symp. on Control, Communications and Signal Processing, 2004, pp. 639-642.

[8] A. Paglialonga, G. Tognola, G. Baselli, M. Parazzini, P. Ravazzani, and F. Grandori, "Speech processing for cochlear implants with the discrete wavelet transform: feasibility study and performance evaluation," in Proc. 28th IEEE EMBS Annual International Conference, New York City, USA, Aug. 30-Sept. 3, 2006, pp. 3763-3766.

[9] S. Mallat, "A theory for multiresolution signal decomposition: the wavelet representation," IEEE Trans. on Patt. Anal. Machine Intell., vol. 11(2), pp. 674-694, 1989.

[10] D. S. Chaudhari and P. C. Pandey, "Dichotic presentation of speech signal using critical filter bank for bilateral sensorineural hearing impaired," in Proc. 16th International Congress on Acoustics, Seattle, Washington, 1998, vol. 1, pp. 213-214.

[11] D. S. Chaudhari and P. C. Pandey, "Dichotic presentation of speech signal with critical band filtering for improving speech perception," in Proc. ICASSP 98, Seattle, Washington, USA, pp. 3601-3604.

Das könnte Ihnen auch gefallen

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (119)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (265)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (399)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (587)

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2219)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5794)

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (344)

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (890)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- Checklist: Mobile Crane SafetyDokument2 SeitenChecklist: Mobile Crane SafetyJohn Kurong100% (5)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (73)

- 6.water Treatment and Make-Up Water SystemDokument18 Seiten6.water Treatment and Make-Up Water Systempepenapao1217100% (1)

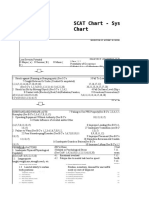

- SCAT Chart - Systematic Cause Analysis Technique - SCAT ChartDokument6 SeitenSCAT Chart - Systematic Cause Analysis Technique - SCAT ChartSalman Alfarisi100% (1)

- Healthcare Financing in IndiADokument86 SeitenHealthcare Financing in IndiAGeet Sheil67% (3)

- Consider Recycled Water PDFDokument0 SeitenConsider Recycled Water PDFAnonymous 1XHScfCINoch keine Bewertungen

- Terminal Tractors and Trailers 6.1Dokument7 SeitenTerminal Tractors and Trailers 6.1lephuongdongNoch keine Bewertungen

- Diploma Pharmacy First Year - Hap - MCQSDokument13 SeitenDiploma Pharmacy First Year - Hap - MCQSAnitha Mary Dambale91% (33)

- The Turbo Air 6000 Centrifugal Compressor Handbook AAEDR-H-082 Rev 05 TA6000Dokument137 SeitenThe Turbo Air 6000 Centrifugal Compressor Handbook AAEDR-H-082 Rev 05 TA6000Rifki TriAditiya PutraNoch keine Bewertungen

- D Formation Damage StimCADE FDADokument30 SeitenD Formation Damage StimCADE FDAEmmanuel EkwohNoch keine Bewertungen

- Speech Processing Research Paper 1Dokument9 SeitenSpeech Processing Research Paper 1impariveshNoch keine Bewertungen

- Speech Processing Research Paper 6Dokument4 SeitenSpeech Processing Research Paper 6impariveshNoch keine Bewertungen

- Speech Processing Research Paper 2Dokument4 SeitenSpeech Processing Research Paper 2impariveshNoch keine Bewertungen

- Speech Processing Research Paper 10Dokument4 SeitenSpeech Processing Research Paper 10impariveshNoch keine Bewertungen

- Speech Processing Research Paper 9Dokument5 SeitenSpeech Processing Research Paper 9impariveshNoch keine Bewertungen

- Speech Processing Research Paper 7Dokument5 SeitenSpeech Processing Research Paper 7impariveshNoch keine Bewertungen

- Speech Processing Research Paper 11Dokument6 SeitenSpeech Processing Research Paper 11impariveshNoch keine Bewertungen

- Speech Processing Research Paper 13Dokument6 SeitenSpeech Processing Research Paper 13impariveshNoch keine Bewertungen

- Speech Processing Research Paper 18Dokument4 SeitenSpeech Processing Research Paper 18impariveshNoch keine Bewertungen

- Speech Processing Research Paper 14Dokument5 SeitenSpeech Processing Research Paper 14impariveshNoch keine Bewertungen

- Speech Processing Research Paper 12Dokument4 SeitenSpeech Processing Research Paper 12impariveshNoch keine Bewertungen

- Speech Processing Research Paper 19Dokument1 SeiteSpeech Processing Research Paper 19impariveshNoch keine Bewertungen

- Speech Processing Research Paper 16Dokument6 SeitenSpeech Processing Research Paper 16impariveshNoch keine Bewertungen

- Speech Processing Research Paper 15Dokument13 SeitenSpeech Processing Research Paper 15impariveshNoch keine Bewertungen

- Speech Processing Research Paper 17Dokument4 SeitenSpeech Processing Research Paper 17impariveshNoch keine Bewertungen

- Speech Processing Research Paper 24Dokument4 SeitenSpeech Processing Research Paper 24impariveshNoch keine Bewertungen

- Speech Processing Research Paper 21Dokument5 SeitenSpeech Processing Research Paper 21impariveshNoch keine Bewertungen

- Speech Processing Research Paper 27Dokument12 SeitenSpeech Processing Research Paper 27impariveshNoch keine Bewertungen

- Speech Processing Research Paper 22Dokument4 SeitenSpeech Processing Research Paper 22impariveshNoch keine Bewertungen

- Speech Processing Research Paper 23Dokument4 SeitenSpeech Processing Research Paper 23impariveshNoch keine Bewertungen

- Speech Processing Research Paper 26Dokument5 SeitenSpeech Processing Research Paper 26impariveshNoch keine Bewertungen

- Speech Processing Research Paper 25Dokument4 SeitenSpeech Processing Research Paper 25impariveshNoch keine Bewertungen

- Recombinant DNA TechnologyDokument14 SeitenRecombinant DNA TechnologyAnshika SinghNoch keine Bewertungen

- Separation/Termination of Employment Policy SampleDokument4 SeitenSeparation/Termination of Employment Policy SampleferNoch keine Bewertungen

- 220/132 KV Sub-Station Bhilai-3: Training Report ONDokument24 Seiten220/132 KV Sub-Station Bhilai-3: Training Report ONKalyani ShuklaNoch keine Bewertungen

- Chin Cup Therapy An Effective Tool For The Correction of Class III Malocclusion in Mixed and Late Deciduous DentitionsDokument6 SeitenChin Cup Therapy An Effective Tool For The Correction of Class III Malocclusion in Mixed and Late Deciduous Dentitionschic organizerNoch keine Bewertungen

- VERALLIA WHITE-BOOK EN March2022 PDFDokument48 SeitenVERALLIA WHITE-BOOK EN March2022 PDFEugenio94Noch keine Bewertungen

- SafewayDokument70 SeitenSafewayhampshireiiiNoch keine Bewertungen

- Notes Lecture No 3 Cell Injury and MechanismDokument5 SeitenNotes Lecture No 3 Cell Injury and MechanismDr-Rukhshanda RamzanNoch keine Bewertungen

- Final Profile Draft - Zach HelfantDokument5 SeitenFinal Profile Draft - Zach Helfantapi-547420544Noch keine Bewertungen

- 9 Oet Reading Summary 2.0-195-213Dokument19 Seiten9 Oet Reading Summary 2.0-195-213Vijayalakshmi Narayanaswami0% (1)

- Buhos SummaryDokument1 SeiteBuhos Summaryclarissa abigail mandocdocNoch keine Bewertungen

- Carbon Cycle Game Worksheet - EportfolioDokument2 SeitenCarbon Cycle Game Worksheet - Eportfolioapi-264746220Noch keine Bewertungen

- Olpers MilkDokument4 SeitenOlpers MilkARAAJ YOUSUFNoch keine Bewertungen

- Plant Cell Culture: Genetic Information and Cellular MachineryDokument18 SeitenPlant Cell Culture: Genetic Information and Cellular MachineryYudikaNoch keine Bewertungen

- CV Dang Hoang Du - 2021Dokument7 SeitenCV Dang Hoang Du - 2021Tran Khanh VuNoch keine Bewertungen

- Understanding Anxiety Disorders and Abnormal PsychologyDokument7 SeitenUnderstanding Anxiety Disorders and Abnormal PsychologyLeonardo YsaiahNoch keine Bewertungen

- Grade 9 P.EDokument16 SeitenGrade 9 P.EBrige SimeonNoch keine Bewertungen

- Rice Research: Open Access: Black Rice Cultivation and Forming Practices: Success Story of Indian FarmersDokument2 SeitenRice Research: Open Access: Black Rice Cultivation and Forming Practices: Success Story of Indian Farmersapi-420356823Noch keine Bewertungen

- Liquid - Liquid ExtractionDokument19 SeitenLiquid - Liquid ExtractionApurba Sarker ApuNoch keine Bewertungen

- ABB Leaflet Comem BR-En 2018-06-07Dokument2 SeitenABB Leaflet Comem BR-En 2018-06-07Dave ChaudhuryNoch keine Bewertungen

- Environmental Science OEdDokument9 SeitenEnvironmental Science OEdGenevieve AlcantaraNoch keine Bewertungen

- 632 MA Lichauco vs. ApostolDokument2 Seiten632 MA Lichauco vs. ApostolCarissa CruzNoch keine Bewertungen