
Proceedings of the International Conference on New Interfaces for Musical Expression

Musical Composition by Regressional Mapping of Physiological Responses to Acoustic Features

D. J. Valtteri Wikström
Cognitive Brain Research Unit, University of Helsinki
and
Media Lab Helsinki, Aalto University
valtteri.wikstrom@aalto.fi

ABSTRACT

In this paper an emotionally justified approach for controlling sound with physiology is presented. Measurements of listeners' physiology, while they are listening to recorded music of their own choosing, are used to create a regression model that predicts features extracted from music with the help of the listeners' physiological response patterns. This information can be used as a control signal to drive musical composition and synthesis of new sounds; an approach involving concatenative sound synthesis is suggested. An evaluation study was conducted to test the feasibility of the model. A multiple linear regression model and an artificial neural network model were evaluated against a constant regressor, or dummy model. The dummy model outperformed the other models in prediction accuracy, but the artificial neural network model achieved significant correlations between predictions and target values for many acoustic features.

Keywords

music composition, biosignal, physiology, music information retrieval, concatenative synthesis, psychoacoustics

1. INTRODUCTION

The goal of this study is to explore the link between music and emotion through the effect that music has on the body of the listener, and to use that as a basis for a justifiable representation of emotions through sound. By creating a statistical model between the listener's physiological responses and the features of the sound, reconstruction of new sounds can be achieved directly, without the need of explicitly assigning emotional meaning to sound features. Earlier attempts to generate emotionally relevant music with physiological measurements have used a somewhat arbitrary mapping between the biosignals and the generative composition [6, 10]. Other approaches for music generation from biosignals, such as BioMuse [26], have treated the biosignals as control data for synthesis.

In this study, a regression model to predict acoustically analyzable musical information of songs from measurements of the listener's physiology during listening, on a second-by-second basis, is created and evaluated in a small experimental study. The current system works offline, but special attention has been given to using tools and methods that make real-time processing possible.

The generation of new sounds is achieved by using corpus-based concatenative sound synthesis, which is a method for matching a certain sequence of small sound units to create a new sound [25]. In concatenative sound synthesis, the corpus is a database of sound units that includes the descriptors for each unit. The descriptors can be extracted from the source signal, or external descriptors can be attributed to the sounds. The selection for the synthesised sequence of units is made by composing with the descriptors. The unit selection algorithm is used to find the best match according to certain criteria, such as distance and continuity. Using the regression output of this study as a composition source for driving the unit selection algorithm, with a corpus of sounds analysed for the same musical information, new sounds can be reconstructed as a representation of the user's physiology. The resulting sound is a recreation of the physiological state of the subject, based on how sound has affected the subject before.

In a musical context this creates an elegant feedback loop: essentially, the sound generated by the system expresses the user's physiological state, which in turn is affected by the sound properties. Assuming that the model is imperfect and that factors external to the music can influence the user, the non-stationary sound generated by this system is an extension of the subject's involuntary expression as well as a self-reinforcing loop that could have interesting properties, for example for music therapy. The reconstruction of sound and music can be approached in various ways in the future by using different kinds of data to create the regression model with physiology. For example, higher-level data, such as chords, harmonies and lyrics, could be used, as well as synthesis parameters of a specific synthesiser or a sound-generating system.

2. BACKGROUND

William James argued in an essay from 1884 that emotions are separate from cognitive processes, and that the body acts as a mediator between the cognitive and emotional systems [11]. This theory was disputed, and the origins of emotions have been a hot topic in affective science ever since [4, 22, 17]. In any case, an idea first proposed by James, that emotions exist in the human physiology as distinguishable patterns, has garnered substantial evidence [1, 9, 18]. Music is considered to be capable of influencing the listener's emotions [19, 12]. Because music influences emotions, and emotions are recognisable from physiology, the assumption that a link exists between the content of music and the physiological responses of the listener is justifiable.

The range and causes of musical emotions are still under debate, and musical emotions have been described both in dimensional and categorical ways [23, 24, 27]. The field of music emotion recognition is concerned with the automatic detection of emotional content in music. A common way to approach this problem is to predict the emotion perceived and reported by the listener based on the content of a song or segment of a song. Studies have been able to predict emotional ratings of music from acoustic descriptors with regression models [24, 7]. Other studies have successfully predicted emotional content reported by the listener based on physiological measurements during the listening [13, 21].

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee.
NIME'14, June 30 – July 03, 2014, Goldsmiths, University of London, UK.
Copyright remains with the author(s).

3. RECREATING MUSIC WITH THE BODY

The purpose of the model explained in this section is to reconstruct sounds in a perceptually and emotionally meaningful way. The approach suggested here involves creating a regression model between musical content and physiology, so that new musical content can be predicted on the basis of learned relationships between music and the user's physiology (Figure 1). The prediction result can then be used further to create a musical display of the psychophysiological state of the user.
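One way the prediction result could drive a musical display is the corpus-based unit selection outlined in the introduction. The following is only a minimal sketch under stated assumptions, not the method used in this study: the function name `select_units`, the greedy search, the z-score normalisation and the `continuity_weight` parameter are all hypothetical choices made for illustration. Each row of `targets` stands for one predicted descriptor vector (for example one per second), and each row of `corpus` holds the same descriptors analysed for one sound unit.

```python
import numpy as np

def select_units(targets, corpus, continuity_weight=0.0):
    """Greedy unit selection (illustrative assumption, not the paper's algorithm).

    For every target descriptor vector, pick the corpus unit with the lowest
    cost, where cost = distance to the target
                     + continuity_weight * distance to the previously chosen unit.
    """
    targets = np.asarray(targets, dtype=float)
    corpus = np.asarray(corpus, dtype=float)

    # Normalise with the corpus statistics so that descriptors measured on
    # different scales contribute comparably to the distance.
    mean = corpus.mean(axis=0)
    std = corpus.std(axis=0)
    std[std == 0] = 1.0
    corpus_n = (corpus - mean) / std
    targets_n = (targets - mean) / std

    chosen = []
    prev = None
    for t in targets_n:
        cost = np.linalg.norm(corpus_n - t, axis=1)
        if prev is not None and continuity_weight > 0:
            # Continuity term: prefer units close to the previous selection.
            cost = cost + continuity_weight * np.linalg.norm(
                corpus_n - corpus_n[prev], axis=1)
        prev = int(np.argmin(cost))
        chosen.append(prev)
    return chosen
```

With `continuity_weight=0` the sketch reduces to a nearest-neighbour lookup in descriptor space; larger values trade target accuracy for smoother transitions between successive units.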

Figure 1: The regression model functions in two phases. a) The model learns the relationships between the listener's physiology and the musical information. b) The model reconstructs musical information based on the user's physiology.

To approach the task of modelling the relationship between music and a listener's physiological responses, finding solutions for the analysis of audio and physiological data was necessary. The system was developed with the goal that all the components can be run in real time. At this point, the analysis itself was done offline, but the analysis methods allow for a fully online system. This required certain qualities from the signal processing algorithms, especially the ability to analyse successive chunks of data as they are measured, instead of the whole data set at once.

Physiological variables typically have a several-second lag from the stimulus, and certain physiological components need a longer time window to be analysable [24, 14]. A compromise was made to analyse all the data with a sliding 10-second time window with a 1-second hop between subsequent data points, and 0, 1, 2, 3 and 4 second lags for the physiological variables. Continuous control data can still be created by interpolating between the regression results of subsequent seconds.

3.1 Real-time analysis of physiological data

The physiological measurements that were used in this study are electrocardiogram (ECG), electrodermal activation (EDA) and respiration inductance plethysmography (RIP). ECG measures the electrical activity of the heart muscle, making accurate heart beat detection possible. EDA, or skin conductance, measures the activity of the sweat glands on the palm, representing sympathetic nervous system activity and the fight-or-flight response. RIP is measured with a band across the chest to quantify the subject's breathing.

An open source tool for the real-time analysis of physiological data could not be identified, so a decision was made to begin the development of a new software tool (https://github.com/vatte/rt-bio) for this purpose, using verified analysis algorithms found in literature [5, 2]. This tool is based on a modular structure, where the data stream from the data gathering device driver is buffered and piped to different analysis modules. The result is either stored to disk, visualised or sent to another application as Open Sound Control (OSC) messages (Figure 2).

Figure 2: The software tool for real-time analysis of physiological data has a modular architecture.

In this study, a total of ten different variables were extracted from the physiological signals. From the EDA signal, the electrodermal level (EDL), the amplitude and the rise-time of the electrodermal response (EDR) were analysed. From the ECG signal, heart rate, the square root of the mean squared differences (RMSSD) of the heart-rate variability (HRV) time series, and the standard deviation of the HRV time series were calculated. The RIP signal was analysed for respiration rate and inhale and exhale durations.

3.2 Music information retrieval

The automatic analysis of music information retrieval (MIR) features was approached with an existing tool, MIRToolbox, developed by Olivier Lartillot [16]. The current version of MIRToolbox does not support real-time analysis, but the variables chosen for this study are all analysable in a real-time setting as well. Other tools can be used in the future for real-time MIR analysis, such as Libxtract [3]. MIRToolbox was chosen at this point, as it runs in Matlab (The MathWorks Inc., Natick, MA), which was also used for regression modelling.

Eight variables were extracted from the audio signal. In MIRToolbox, root mean square (RMS) is calculated directly from the signal envelope. Fluctuation strength is calculated from the Mel-scale spectrum. Key strength and mode are estimated from the chromagram. Both the Mel-scale spectrum and chromagram are calculated from the frequency data which is derived from the fast Fourier transform (FFT). The FFT and signal envelope are used to calculate the spectral centroid. Tempo and pulse clarity are estimated in a complex way using a filterbank, onset detection and autocorrelation. Structural novelty is assessed with a method that operates without the need of future events for determining the novelty value at a given time [16, 15].
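To make the window-based analysis more concrete, the sketch below computes two of the simpler descriptors, RMS and spectral centroid, over a sliding 10-second window with a 1-second hop, as described earlier in this section. It is an illustrative simplification in Python with NumPy and does not reproduce MIRToolbox: the function name `windowed_features` is hypothetical, both descriptors are computed from the raw samples rather than from a signal envelope, and no window function is applied before the FFT.

```python
import numpy as np

def windowed_features(signal, sr, win_s=10.0, hop_s=1.0):
    """Return (rms, centroid_hz) arrays with one value per sliding window.

    Simplified illustration; not the MIRToolbox implementation.
    """
    signal = np.asarray(signal, dtype=float)
    win = int(round(win_s * sr))   # window length in samples
    hop = int(round(hop_s * sr))   # hop size in samples
    freqs = np.fft.rfftfreq(win, d=1.0 / sr)

    rms, centroid = [], []
    for start in range(0, len(signal) - win + 1, hop):
        frame = signal[start:start + win]
        # RMS: square root of the mean squared sample value.
        rms.append(np.sqrt(np.mean(frame ** 2)))
        # Spectral centroid: magnitude-weighted mean frequency.
        mag = np.abs(np.fft.rfft(frame))
        total = mag.sum()
        centroid.append(float((freqs * mag).sum() / total) if total > 0 else 0.0)
    return np.array(rms), np.array(centroid)
```

Because each window only needs samples up to the current time, the same loop body could in principle be run on a buffer that is updated once per second, which is the property the real-time goal depends on.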

3.3 Regression model

The prediction of a MIR descriptor with the help of several physiological variables can be described as a many-to-one mapping problem. Because the underlying relationships in the data are for a large part unknown, it was decided that two very different models would be evaluated and compared.

Multiple linear regression (MLR) is a simple algorithm for regression with many input variables [20]. It solves a linear polynomial equation by minimising a loss function, such as the mean squared error. Δ-values for the physiological variables were used with the MLR model, representing the change between the current time window and the preceding time window of the same length. Δ-values for the MIR descriptors were evaluated as targets for both models.

MLR was chosen as a baseline model, to be compared with a more complex model, in this case a non-linear autoregressive exogenous (NARX) neural network model [8]. This type of model is especially suited for the prediction of longitudinal data, as it includes an autoregressive component. Both models were compared against a dummy model, a constant linear regressor created from the learning data.

4. EVALUATION STUDY

A small evaluation study was conducted to test the system and the feasibility of the regression model. Six test subjects participated in the experiment. Each participant brought four songs to the experiment with the instruction: "Choose four songs that you would like to listen to right now." Physiological data was recorded in a closed test room, where the test subject was sitting alone. The subject could choose to have the lights on or off, and whether they wanted to listen through loudspeakers or headphones. Physiological data was measured with the Nexus-10 MkII wireless Bluetooth amplifier (MindMedia BV, Herten, Netherlands). Four songs chosen by another subject were added to the stack of stimuli, for a total of eight songs per subject. The justification for this was that when more data has been gathered, group-wise comparisons of the effect on model performance of familiar versus unfamiliar songs can be made. Between the playback of each song, 2 minutes of silence was inserted, as well as in the beginning and the end of the measurement. The order of the songs was randomised.

Figure 3: Prediction results for the NARX model. a) Pulse clarity, subject 3 (song: Miguel - Adorn, R²: 0.17, p-value: 1.5 × 10^-9). b) RMS, subject 7 (song: Placebo - Without You I'm Nothing, R²: 0.26, p-value: 2.2 × 10^-16). Both panels plot the real value and the prediction against time in seconds.

The regression models were trained and tested individually for each subject. Feature selection was performed for the MLR model, with a forward selection paradigm. Both the MLR and the NARX models were trained with a leave-one-song-out cross-validated paradigm, to avoid bias from using the same song in both training and testing. All the songs were truncated according to the length of the shortest song in the data set, so that each song would have an equal effect on the model.
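The leave-one-song-out procedure can be sketched as follows. This is a hedged illustration in Python with NumPy rather than the Matlab code used in the study: the helper names `truncate_to_shortest` and `leave_one_song_out` are hypothetical, the MLR is fitted by ordinary least squares with an intercept, forward feature selection and the NARX model are omitted, and the dummy model is assumed to predict the mean of the training targets.

```python
import numpy as np

def truncate_to_shortest(X_by_song, y_by_song):
    """Cut every song to the length of the shortest one."""
    n = min(len(y) for y in y_by_song)
    return [X[:n] for X in X_by_song], [y[:n] for y in y_by_song]

def leave_one_song_out(X_by_song, y_by_song):
    """Return a list of (held_out_song, mse_constant, mse_mlr) tuples."""
    results = []
    for i in range(len(X_by_song)):
        # Train on every song except song i, test on song i.
        X_train = np.vstack([X for j, X in enumerate(X_by_song) if j != i])
        y_train = np.concatenate([y for j, y in enumerate(y_by_song) if j != i])
        X_test, y_test = X_by_song[i], y_by_song[i]

        # Dummy model (assumption): always predict the training mean.
        const_pred = np.full(len(y_test), y_train.mean())

        # MLR: least-squares fit with an explicit intercept column.
        A_train = np.column_stack([np.ones(len(X_train)), X_train])
        coef, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)
        mlr_pred = np.column_stack([np.ones(len(X_test)), X_test]) @ coef

        results.append((i,
                        float(np.mean((y_test - const_pred) ** 2)),
                        float(np.mean((y_test - mlr_pred) ** 2))))
    return results
```

Here each element of `X_by_song` would hold one row of physiological variables per second for one song, and the matching element of `y_by_song` the MIR descriptor to be predicted. Holding out whole songs, rather than random seconds, keeps strongly autocorrelated neighbouring samples from appearing on both sides of the split.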

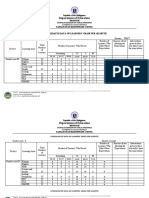

Table 1: Comparison of constant (const), multiple linear regression) MLR and NARX artificial neural

network (ANN) models. The values that represents best performance in the different model evaluation

tests are underlined for each MIR target. The MIR targets are divided into absolute values and -values,

which represent the change in subsequent time windows. The model evaluation parameters are mean square

error (MSE), coefficient of determination (R2 ) and the probability that there is no correlation between the

prediction and the target value (p-value). Statistical significance (p-value < 0.05) is marked with a *.

MIR feature

MSE const MSE MLR MSE ANN R2 MLR R2 ANN p-value

p-value

MLR

ANN

RMS

2.50 105

2.61 105

3.60 105

0.0195

0.0293

0.051

0.010*

Fluctuation

1.98 104

1.98 104

3.83 104

0.0138

0.0917

0.079

0.000*

Tempo

175

191

440

0.0009

0.0399

0.677

0.003*

Pulse clarity

4.53 103

7.09 103

3.47 102

0.0164

0.1663

0.074

0.000*

Spectral centroid

1.54 103

3.32 103

1.626 103

0.0471

0.1010

0.002*

0.000*

Key strength

8.87 109

8.99 109

4.23 108

0.0014

0.0090

0.606

0.156

Mode

4.25 104

4.51 104

8.93 103

0.0118

0.0191

0.188

0.052

Novelty

0.850

0.985

1.82

0.0166

0.0180

0.054

0.104

RMS

3.07 106

3.10 106

8.40 105

0.0147

0.0068

0.070

0.318

Fluctuation

5.79 103

5.19 103

7.05 103

0.0455

0.0071

0.001*

0.219

Tempo

87.5

87.7

984

0.0048

0.0076

0.401

0.203

Pulse clarity

2.01 103

3.07 103

3.29 102

0.0034

0.0178

0.483

0.046*

Spectral centroid

3.79 102

7.21 102

2.15 103

0.0430

0.0120

0.011*

0.184

Key strength

1.94 108

1.95 108

7.02 108

0.0004

0.0028

0.753

0.430

Mode

4.24 104

2.34 102

0.0544

0.000*

Novelty

0.520

0.647

3.63

0.0028

0.0229

0.438

0.023*


Median values for the mean squared prediction error and the p-value for a pairwise correlation test were used to evaluate the performance of the models. The evaluation results were mixed, as can be seen in Table 1. The dummy model outperformed both models in prediction accuracy, but the NARX model achieved significant correlations (p < 0.05) for fluctuation, tempo, pulse clarity, spectral centroid, Δ-pulse clarity, Δ-mode and Δ-novelty. The MLR model achieved significant correlations for spectral centroid, Δ-fluctuation and Δ-spectral centroid. Examples of the NARX model's predictions can be seen in Figure 3.
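The mixed result, where a model loses to the constant regressor on squared error yet still correlates significantly with the target, is easier to see with a small numerical sketch. The code below is an illustration only. The function name `evaluate` is hypothetical, R² is assumed here to be the squared Pearson correlation between prediction and target, and the p-value is a large-sample normal approximation of the usual t-test for a correlation, not necessarily the test used in the study.

```python
import math
import numpy as np

def evaluate(y_true, y_pred):
    """Return MSE, squared Pearson correlation and an approximate p-value."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    mse = float(np.mean((y_true - y_pred) ** 2))
    r = float(np.corrcoef(y_true, y_pred)[0, 1])
    n = len(y_true)
    # t-statistic for H0: no correlation; the guard avoids division by zero.
    t = r * math.sqrt((n - 2) / max(1.0 - r * r, 1e-12))
    # Two-sided p-value under a normal approximation (reasonable for large n).
    p_approx = math.erfc(abs(t) / math.sqrt(2.0))
    return {"mse": mse, "r2": r * r, "p_approx": p_approx}

# A prediction that follows the shape of the target but has the wrong scale
# and offset correlates perfectly, yet its MSE exceeds that of a constant.
y = np.sin(np.linspace(0.0, 20.0, 200))
scores = evaluate(y, 3.0 * y + 2.0)
mse_constant = float(np.mean((y - y.mean()) ** 2))
```

Correlation is insensitive to scale and offset, while squared error is not, so the two measures in Table 1 answer different questions: whether the predictions track the changes in the target, and whether they land close to its actual values.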

5. CONCLUSIONS

A new approach for mapping physiological signals to acoustical variables has been presented in this paper. A regression model that learns in a music listening situation and predicts acoustical variables from physiological responses was constructed and evaluated. Currently, acoustical variables can be predicted with the regression model. The results of the evaluation study were promising; in particular, the neural network model achieved significant correlations for many of the acoustical variables. This can be seen as an indicator that non-linear models with autocorrelating capabilities are feasible for future development. To further assess the performance and feasibility of the regression model, a larger dataset needs to be collected.

Currently, individual models for each subject were created. In the future, the performance of individual models can be evaluated against an inter-subject model. The effect of familiarity with the music listened to can also be explored. Regarding the sound generating strategy, a user study can be made to collect the users' own impressions of the relevance of the generated sound to their emotions. Will the sounds and music created through regression be identifiable to the user as an auditory display of their emotions?

6. ACKNOWLEDGMENTS

I would like to thank Professor Mari Tervaniemi and the Finnish Centre of Excellence in Interdisciplinary Music Research for providing the opportunity and funding for carrying out this research. I would also like to thank Tommi Makkonen, Tommi Vatanen and Olivier Lartillot for their advice and support.

7. REFERENCES

[1] M. Arnold. Physiological differentiation of emotional states. Psychological Review, 52(1):35, 1945.
[2] W. Boucsein. Electrodermal activity. Springer, 2012.
[3] J. Bullock. Libxtract: A lightweight library for audio feature extraction. In Proceedings of the International Computer Music Conference, volume 43, 2007.
[4] W. Cannon. The James-Lange theory of emotions: A critical examination and an alternative theory. The American Journal of Psychology, 39(1):106–124, 1927.
[5] I. Christov. Real time electrocardiogram QRS detection using combined adaptive threshold. BioMedical Engineering OnLine, 3(1):28, 2004.
[6] N. Coghlan and R. B. Knapp. Sensory chairs: A system for biosignal research and performance. In Proceedings of 2008 Conference on New Instruments for Musical Expression (NIME08), 2008.
[7] E. Coutinho. Musical emotions: Predicting second-by-second subjective feelings of emotion from low-level psychoacoustic features and physiological measurements. Emotion, 11(4):921, 2011.
[8] E. Diaconescu. The use of NARX neural networks to predict chaotic time series. WSEAS Transactions on Computer Research, 3(3):182–191, 2008.
[9] P. Ekman, R. Levenson, and W. Friesen. Autonomic nervous system activity distinguishes among emotions. Science, 221(4616):1208–1210, 1983.
[10] S. Giraldo and R. Ramirez. Brain-activity-driven real-time music emotive control. In Proceedings of the 3rd International Conference on Music & Emotion, 2013.
[11] W. James. What is an emotion? Mind, 9(34):188–205, 1884.
[12] P. N. Juslin and P. Laukka. Expression, perception, and induction of musical emotions: A review and a questionnaire study of everyday listening. Journal of New Music Research, 33(3):217–238, 2004.
[13] J. Kim and E. André. Emotion recognition based on physiological changes in music listening. IEEE Transactions on Pattern Analysis and Machine Intelligence, 30(12):2067, 2008.
[14] S. Kreibig, G. Schaefer, and T. Brosch. Psychophysiological response patterning in emotion: Implications for affective computing. In K. Scherer, T. Bänziger, and E. Roesch, editors, A Blueprint for Affective Computing: A sourcebook and manual. Oxford University Press, 2010.
[15] O. Lartillot, D. Cereghetti, K. Eliard, and D. Grandjean. A simple, high-yield method for assessing structural novelty. In Proceedings of the 3rd International Conference on Music & Emotion, 2013.
[16] O. Lartillot and P. Toiviainen. A Matlab toolbox for musical feature extraction from audio. In International Conference on Digital Audio Effects, pages 237–244, 2007.
[17] R. Lazarus. Emotion and adaptation. Oxford University Press, New York, 1991.
[18] R. Levenson. Autonomic nervous system differences among emotions. Psychological Science, 3(1):23–27, 1992.
[19] L. Meyer. Emotion and meaning in music. University of Chicago Press, 1956.
[20] M. Mohri, A. Rostamizadeh, and A. Talwalkar. Foundations of machine learning. The MIT Press, 2012.
[21] F. Russo, N. Vempala, and G. Sandstrom. Predicting musically induced emotions from physiological inputs: linear and neural network models. Frontiers in Psychology, 4, 2013.
[22] S. Schachter and J. Singer. Cognitive, social, and physiological determinants of emotional state. Psychological Review, 69(5):379, 1962.
[23] K. Scherer. Which emotions can be induced by music? What are the underlying mechanisms? And how can we measure them? Journal of New Music Research, 33(3):239–251, 2004.
[24] E. Schubert. Modeling perceived emotion with continuous musical features. Music Perception, 21(4):561–585, 2004.
[25] D. Schwarz. Corpus-based concatenative synthesis. Signal Processing Magazine, IEEE, 24(2):92–104, 2007.
[26] A. Tanaka. Musical performance practice on sensor-based instruments. Trends in Gestural Control of Music, 13:389–405, 2000.
[27] Y.-H. Yang and H. Chen. Music Emotion Recognition. CRC Press, 2011.


- Can Music Influence Language and CognitionDokument18 SeitenCan Music Influence Language and CognitionRafael Camilo Maya CastroNoch keine Bewertungen

- Anatomically Distinct Dopamine Release During Anticipation and Experience of Peak Emotion To MusicDokument8 SeitenAnatomically Distinct Dopamine Release During Anticipation and Experience of Peak Emotion To MusicMitch MunroNoch keine Bewertungen

- Generative Model For The Creation of Musical Emotion, Meaning, and FormDokument6 SeitenGenerative Model For The Creation of Musical Emotion, Meaning, and FormFer LabNoch keine Bewertungen

- Music Generation and Emotion Estimation From EEG Signals For Inducing Affective StatesDokument5 SeitenMusic Generation and Emotion Estimation From EEG Signals For Inducing Affective StatesDicky RiantoNoch keine Bewertungen

- Music Emotion Recognition With Standard and Melodic Audio FeaturesDokument35 SeitenMusic Emotion Recognition With Standard and Melodic Audio FeaturesPablo EstradaNoch keine Bewertungen

- Artificial IntellegenceDokument3 SeitenArtificial IntellegencemamatubisNoch keine Bewertungen

- Artigo de Congresso: Sociedade de Engenharia de ÁudioDokument4 SeitenArtigo de Congresso: Sociedade de Engenharia de ÁudiotutifornariNoch keine Bewertungen

- Emotional Responses To ImprovisationDokument21 SeitenEmotional Responses To ImprovisationAlejandro OrdásNoch keine Bewertungen
