
Christopher Dougherty

EC220 - Introduction to econometrics

(chapter 2)

Slideshow: t test of a hypothesis relating to a regression coefficient

Original citation:

Dougherty, C. (2012) EC220 - Introduction to econometrics (chapter 2). [Teaching Resource]

© 2012 The Author

This version available at: http://learningresources.lse.ac.uk/128/

Available in LSE Learning Resources Online: May 2012

This work is licensed under a Creative Commons Attribution-ShareAlike 3.0 License. This license allows

the user to remix, tweak, and build upon the work even for commercial purposes, as long as the user

credits the author and licenses their new creations under the identical terms.

http://creativecommons.org/licenses/by-sa/3.0/

http://learningresources.lse.ac.uk/

t TEST OF A HYPOTHESIS RELATING TO A REGRESSION COEFFICIENT

s.d. of b2 known

Discrepancy between hypothetical value and sample estimate, in terms of s.d.:

$z = \frac{b_2 - \beta_2^0}{\text{s.d.}}$

5% significance test: reject $H_0: \beta_2 = \beta_2^0$ if $z > 1.96$ or $z < -1.96$

The diagram summarizes the procedure for performing a 5% significance test on the slope

coefficient of a regression under the assumption that we know its standard deviation.
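The decision rule in the diagram can be sketched in a few lines of Python. This is only an illustration: the function names and the numbers passed in (an estimate of 1.25, a hypothetical value of 1.00 and a known standard deviation of 0.10) are assumptions made for the example and do not come from the slides.

```python
def z_statistic(b2, beta2_null, sd):
    """Discrepancy between the estimate and the hypothetical value,
    measured in standard deviations of b2."""
    return (b2 - beta2_null) / sd


def reject_two_sided(z, z_crit=1.96):
    """Two-sided test: reject H0 if z falls in either tail.
    The default 1.96 is the 5% critical value of the normal distribution."""
    return z > z_crit or z < -z_crit


# Hypothetical inputs, chosen only to exercise the rule.
z = z_statistic(b2=1.25, beta2_null=1.00, sd=0.10)
print(f"z = {z:.2f}, reject H0 at 5%: {reject_two_sided(z)}")
```

With these made-up inputs the estimate lies 2.5 standard deviations above the hypothetical value, so the null hypothesis would be rejected at the 5% level.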

s.d. of b2 not known

Discrepancy between hypothetical value and sample estimate, in terms of s.e.:

$t = \frac{b_2 - \beta_2^0}{\text{s.e.}}$

This is a very unrealistic assumption. We usually have to estimate it with the standard

error, and we use this in the test statistic instead of the standard deviation.


Because we have replaced the standard deviation in its denominator with the standard

error, the test statistic has a t distribution instead of a normal distribution.

5% significance test (s.d. of b2 not known): reject $H_0: \beta_2 = \beta_2^0$ if $t > t_{\text{crit}}$ or $t < -t_{\text{crit}}$

Accordingly, we refer to the test statistic as a t statistic. In other respects the test

procedure is much the same.

We look up the critical value of t and, if the absolute value of the t statistic is greater than it, we reject the null hypothesis. If it is not, we do not.

[Figure: probability density of the normal distribution]

Here is a graph of a normal distribution with zero mean and unit variance.

[Figure: probability densities of the normal distribution and the t distribution with 10 d.f.]

A graph of a t distribution with 10 degrees of freedom (this term will be defined in a

moment) has been added.


When the number of degrees of freedom is large, the t distribution looks very much like a

normal distribution (and as the number increases, it converges on one).


Even when the number of degrees of freedom is small, as in this case, the distributions are

very similar.

[Figure: probability densities of the normal distribution and the t distributions with 10 d.f. and 5 d.f.]

Here is another t distribution, this time with only 5 degrees of freedom. It is still very similar

to a normal distribution.


So why do we make such a fuss about referring to the t distribution rather than the normal

distribution? Would it really matter if we always used 1.96 for the 5% test and 2.58 for the

1% test?


The answer is that it does make a difference. Although the distributions are generally quite

similar, the t distribution has longer tails than the normal distribution, the difference being

the greater, the smaller the number of degrees of freedom.
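To make the point about the tails concrete, here is a small sketch that evaluates the three densities at a few points. It is not taken from the slides: the helper names normal_pdf and t_pdf are illustrative, and the t density is written out from its standard formula using only the Python standard library.

```python
import math


def normal_pdf(x):
    """Density of the normal distribution with zero mean and unit variance."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def t_pdf(x, df):
    """Density of the t distribution with df degrees of freedom.
    lgamma is used instead of gamma so that large df do not overflow."""
    log_coef = math.lgamma((df + 1) / 2.0) - math.lgamma(df / 2.0)
    coef = math.exp(log_coef) / math.sqrt(df * math.pi)
    return coef * (1.0 + x * x / df) ** (-(df + 1) / 2.0)


# Compare the densities near the centre and out in the tail.
for x in (0.0, 2.0, 3.0, 4.0):
    print(f"x = {x:.1f}: normal = {normal_pdf(x):.5f}, "
          f"t(10) = {t_pdf(x, 10):.5f}, t(5) = {t_pdf(x, 5):.5f}")
```

Near zero the normal density is the highest of the three, while a few units out the ordering reverses: the t distribution with 5 degrees of freedom has the most mass in the tail, then the t distribution with 10 degrees of freedom, then the normal distribution.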

[Figure: enlarged view of the left tails of the normal distribution and the t distributions with 10 d.f. and 5 d.f.]

As a consequence, the probability of obtaining a high test statistic on a pure chance basis

is greater with a t distribution than with a normal distribution.


This means that the rejection regions have to start more standard deviations away from

zero for a t distribution than for a normal distribution.


The 2.5% tail of a normal distribution starts 1.96 standard deviations from its mean.

The 2.5% tail of a t distribution with 10 degrees of freedom starts 2.23 standard deviations from its mean.


That for a t distribution with 5 degrees of freedom starts 2.57 standard deviations from its

mean.

17

t TEST OF A HYPOTHESIS RELATING TO A REGRESSION COEFFICIENT

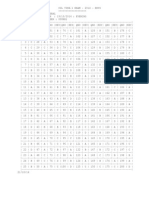

t Distribution: Critical values of t

Degrees of Two-sided test

freedom One-sided test

1

2

3

4

5

18

19

20

600

10%

5%

5%

2.5%

2%

1%

1%

0.5%

0.2%

0.1%

0.1%

0.05%

6.314 12.706 31.821 63.657 318.31 636.62

2.920

4.303

6.965

9.925 22.327 31.598

2.353

3.182

4.541

5.841 10.214 12.924

2.132

2.776

3.747

4.604

7.173

8.610

2.015

2.571

3.365

4.032

5.893

6.869

1.734

2.101

2.552

2.878

3.610

3.922

1.729

2.093

2.539

2.861

3.579

3.883

1.725

2.086

2.528

2.845

3.552

3.850

1.647

1.964

2.333

2.584

3.104

3.307

1.645

1.960

2.326

2.576

3.090

3.291

For this reason we need to refer to a table of critical values of t when performing

significance tests on the coefficients of a regression equation.


At the top of the table are listed possible significance levels for a test. For the time being

we will be performing two-sided tests, so ignore the line for one-sided tests.

Hence if we are performing a (two-sided) 5% significance test, we should use the column headed 5% in the two-sided row of the table.


The left hand vertical column lists degrees of freedom. The number of degrees of freedom

in a regression is defined to be the number of observations minus the number of

parameters estimated.


In a simple regression, we estimate just two parameters, the constant and the slope

coefficient, so the number of degrees of freedom is n - 2 if there are n observations.


If we were performing a regression with 20 observations, as in the price inflation/wage

inflation example, the number of degrees of freedom would be 18 and the critical value of t

for a 5% test would be 2.101.
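The lookup just described can be sketched as follows. The critical values are copied from the two-sided 5%, 1% and 0.1% columns of the table in this slideshow; the dictionary layout and the function names are assumptions made for the illustration, and only the rows shown in the table are available.

```python
# Two-sided critical values of t, copied from the table in the slides.
# Outer key: degrees of freedom. Inner key: significance level.
T_CRIT = {
    1:   {0.05: 12.706, 0.01: 63.657, 0.001: 636.62},
    2:   {0.05: 4.303,  0.01: 9.925,  0.001: 31.598},
    3:   {0.05: 3.182,  0.01: 5.841,  0.001: 12.924},
    4:   {0.05: 2.776,  0.01: 4.604,  0.001: 8.610},
    5:   {0.05: 2.571,  0.01: 4.032,  0.001: 6.869},
    18:  {0.05: 2.101,  0.01: 2.878,  0.001: 3.922},
    19:  {0.05: 2.093,  0.01: 2.861,  0.001: 3.883},
    20:  {0.05: 2.086,  0.01: 2.845,  0.001: 3.850},
    600: {0.05: 1.964,  0.01: 2.584,  0.001: 3.307},
}


def degrees_of_freedom(n_obs, n_params):
    """Number of observations minus number of parameters estimated."""
    return n_obs - n_params


def critical_value(n_obs, n_params=2, level=0.05):
    """Look up the two-sided critical value of t.
    n_params defaults to 2 (constant and slope) for a simple regression.
    Raises KeyError if the row or column is not in the excerpted table."""
    return T_CRIT[degrees_of_freedom(n_obs, n_params)][level]


print(degrees_of_freedom(20, 2))        # 18
print(critical_value(20, level=0.05))   # 2.101
```

For any number of degrees of freedom that is not in the excerpt, a full printed table or a statistical package would be needed.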


Note that as the number of degrees of freedom becomes large, the critical value converges

on 1.96, the critical value for the normal distribution. This is because the t distribution

converges on the normal distribution.

Hence, referring back to the summary of the test procedure, we should reject the null hypothesis if the absolute value of t is greater than 2.101:

5% significance test: reject $H_0: \beta_2 = \beta_2^0$ if $t > 2.101$ or $t < -2.101$

If instead we wished to perform a 1% significance test, we would use the column headed 1% in the two-sided row of the table. Note that as the number of degrees of freedom becomes large, the critical value converges to 2.58, the critical value for the normal distribution.


For a simple regression with 20 observations, the critical value of t at the 1% level is 2.878.

1% significance test: reject $H_0: \beta_2 = \beta_2^0$ if $t > 2.878$ or $t < -2.878$

So we should use this figure in the test procedure for a 1% test.

Example:

$p = \beta_1 + \beta_2 w + u$

We will next consider an example of a t test. Suppose that you have data on p, the average

rate of price inflation for the last 5 years, and w, the average rate of wage inflation, for a

sample of 20 countries. It is reasonable to suppose that p is influenced by w.

$H_0: \beta_2 = 1; \quad H_1: \beta_2 \neq 1$

You might take as your null hypothesis that the rate of price inflation increases uniformly

with wage inflation, in which case the true slope coefficient would be 1.

$\hat{p} = \underset{(0.05)}{1.21} + \underset{(0.10)}{0.82}\,w$

Suppose that the regression result is as shown (standard errors in parentheses). Our

actual estimate of the slope coefficient is only 0.82. We will check whether we should reject

the null hypothesis.

$t = \frac{b_2 - \beta_2^0}{\text{s.e.}(b_2)} = \frac{0.82 - 1.00}{0.10} = -1.80$

We compute the t statistic by subtracting the hypothetical true value from the sample estimate and dividing by the standard error. It comes to -1.80.

n = 20; degrees of freedom = 18

There are 20 observations in the sample. We have estimated 2 parameters, so there are 18

degrees of freedom.

$t_{\text{crit}, 5\%} = 2.101$

The critical value of t with 18 degrees of freedom is 2.101 at the 5% level. The absolute

value of the t statistic is less than this, so we do not reject the null hypothesis.
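The whole example can be reproduced in a few lines. The numbers are those given in the slides (estimate 0.82, hypothetical value 1.00, standard error 0.10, 20 observations, critical value 2.101); the function and variable names are illustrative only.

```python
def t_statistic(estimate, null_value, std_err):
    """Sample estimate minus hypothetical value, divided by the standard error."""
    return (estimate - null_value) / std_err


b2, beta2_null, se_b2 = 0.82, 1.00, 0.10   # from the fitted regression and H0
n_obs, n_params = 20, 2                    # 20 countries; constant and slope
df = n_obs - n_params                      # degrees of freedom
t_crit_5pct = 2.101                        # two-sided 5% value for 18 d.f., from the table

t = t_statistic(b2, beta2_null, se_b2)
reject = abs(t) > t_crit_5pct

print(f"t = {t:.2f}, d.f. = {df}, reject H0 at 5%: {reject}")
# prints: t = -1.80, d.f. = 18, reject H0 at 5%: False
```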

$Y = \beta_1 + \beta_2 X + u$

In practice it is unusual to have a feeling for the actual value of the coefficients. Very often

the objective of the analysis is to demonstrate that Y is influenced by X, without having any

specific prior notion of the actual coefficients of the relationship.

$H_0: \beta_2 = 0; \quad H_1: \beta_2 \neq 0$

In this case it is usual to define $\beta_2 = 0$ as the null hypothesis. In words, the null hypothesis is that X does not influence Y. We then try to demonstrate that the null hypothesis is false.

$t = \frac{b_2 - \beta_2^0}{\text{s.e.}(b_2)} = \frac{b_2}{\text{s.e.}(b_2)}$

For the null hypothesis $\beta_2 = 0$, the t statistic reduces to the estimate of the coefficient divided by its standard error.


This ratio is commonly called the t statistic for the coefficient and it is automatically printed

out as part of the regression results. To perform the test for a given significance level, we

compare the t statistic directly with the critical value of t for that significance level.

. reg EARNINGS S

      Source |       SS       df       MS              Number of obs =     540
-------------+------------------------------           F(  1,   538) =  112.15
       Model |  19321.5589     1  19321.5589           Prob > F      =  0.0000
    Residual |  92688.6722   538  172.283777           R-squared     =  0.1725
-------------+------------------------------           Adj R-squared =  0.1710
       Total |  112010.231   539  207.811189           Root MSE      =  13.126

------------------------------------------------------------------------------
    EARNINGS |      Coef.   Std. Err.      t    P>|t|     [95% Conf. Interval]
-------------+----------------------------------------------------------------
           S |   2.455321   .2318512    10.59   0.000     1.999876    2.910765
       _cons |  -13.93347   3.219851    -4.33   0.000    -20.25849   -7.608444
------------------------------------------------------------------------------

Here is the output from the earnings function fitted in a previous slideshow, with the t

statistics highlighted.
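As a check on how the t column is obtained, the following sketch divides each coefficient by its standard error, using the figures from the regression output. The dictionary and variable names are illustrative and not part of the Stata output.

```python
# (coefficient, standard error) pairs copied from the regression output.
coefficients = {
    "S": (2.455321, 0.2318512),
    "_cons": (-13.93347, 3.219851),
}

# For H0: beta = 0 the t statistic is simply coefficient / standard error.
t_stats = {name: coef / se for name, (coef, se) in coefficients.items()}

for name, t in t_stats.items():
    print(f"{name}: t = {t:.2f}")
# prints:
# S: t = 10.59
# _cons: t = -4.33
```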


You can see that the t statistic for the coefficient of S is enormous. We would reject the null

hypothesis that schooling does not affect earnings at the 0.1% significance level without

even looking at the table of critical values of t.

The t statistic for the intercept is also enormous. However, since the intercept does not have any meaning in this regression, it does not make sense to perform a t test on it.

The next column in the output gives what are known as the p values for each coefficient. The p value is the probability of obtaining a t statistic at least as large in absolute value as the one observed, purely as a matter of chance, if the null hypothesis $H_0: \beta = 0$ is true.

If you reject the null hypothesis $H_0: \beta = 0$ whenever the t statistic is at least this large in absolute value, the p value is the risk you run of making a Type I error, that is, of rejecting the null hypothesis when it is in fact true. It therefore gives the significance level at which the null hypothesis would just be rejected.


If p = 0.05, the null hypothesis could just be rejected at the 5% level. If it were 0.01, it could

just be rejected at the 1% level. If it were 0.001, it could just be rejected at the 0.1% level. This is

assuming that you are using two-sided tests.
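The link between p values and critical values can be checked numerically. The sketch below is not from the slides: it computes a two-sided p value by integrating the t density with Simpson's rule, using only the Python standard library, and the function names and the number of integration steps are assumptions made for the illustration. A statistical package would normally be used instead.

```python
import math


def t_pdf(x, df):
    """Density of the t distribution with df degrees of freedom."""
    log_coef = math.lgamma((df + 1) / 2.0) - math.lgamma(df / 2.0)
    coef = math.exp(log_coef) / math.sqrt(df * math.pi)
    return coef * (1.0 + x * x / df) ** (-(df + 1) / 2.0)


def two_sided_p_value(t, df, steps=2000):
    """P(|T| >= |t|) when H0 is true. Simpson's rule on [0, |t|] gives
    P(0 <= T <= |t|); by symmetry the two tails hold the remainder.
    steps must be even."""
    upper = abs(t)
    if upper == 0.0:
        return 1.0
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central = total * h / 3.0
    return max(0.0, 1.0 - 2.0 * central)


# The 5% and 1% critical values for 18 d.f. should give p close to 0.05 and 0.01.
print(f"{two_sided_p_value(2.101, 18):.3f}")
print(f"{two_sided_p_value(2.878, 18):.3f}")
# The t statistic of the price inflation example (-1.80, 18 d.f.).
print(f"{two_sided_p_value(-1.80, 18):.3f}")
```

For the price inflation example the p value lies between 0.05 and 0.10, which agrees with the earlier finding that the null hypothesis is not rejected at the 5% level.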

In the present case p = 0 to three decimal places for the coefficient of S. This means that we can reject the null hypothesis $H_0: \beta_2 = 0$ at the 0.1% level, without having to refer to the table of critical values of t. (Testing the intercept does not make sense in this regression.)

Reporting p values is a more informative way of presenting the results of a test and is widely used in the medical literature. However, in economics standard practice is to report results with reference to the 5% and 1% significance levels, and sometimes to the 0.1% level.


Copyright Christopher Dougherty 2011.

These slideshows may be downloaded by anyone, anywhere for personal use.

Subject to respect for copyright and, where appropriate, attribution, they may be

used as a resource for teaching an econometrics course. There is no need to

refer to the author.

The content of this slideshow comes from Section 2.6 of C. Dougherty,

Introduction to Econometrics, fourth edition 2011, Oxford University Press.

Additional (free) resources for both students and instructors may be

downloaded from the OUP Online Resource Centre

http://www.oup.com/uk/orc/bin/9780199567089/.

Individuals studying econometrics on their own and who feel that they might

benefit from participation in a formal course should consider the London School

of Economics summer school course

EC212 Introduction to Econometrics

http://www2.lse.ac.uk/study/summerSchools/summerSchool/Home.aspx

or the University of London International Programmes distance learning course

20 Elements of Econometrics

www.londoninternational.ac.uk/lse.

11.07.25

Das könnte Ihnen auch gefallen

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (119)

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (265)
