Uploaded by Hemanth Sreej O

HYBRID MEMORY CUBE

1. INTRODUCTION

In earlier years, computers were built with single-core processors that used DDR3, DDR2, DDR1, RDRAM, etc. as primary memory. For better performance and higher computing speed, vendors introduced multi-core processors, which contain more than one processing core on a single chip. For example, Intel's Core 2 Duo is a dual-core processor, and the Core i3, i5 and i7 families include dual-core and quad-core parts. The limitation of these processors is that they still use DDR3 or DDR2 RAM, so the performance of these systems is limited by memory system bandwidth.

In this situation, Micron introduced a new memory technology called the Hybrid Memory Cube (HMC), which has an entirely new architecture: DRAM layers are stacked in three dimensions, improving latency, bandwidth, power consumption and density.

Dept. of ECE, College of Engg., Poonjar

2. RANDOM ACCESS MEMORY (RAM)

RAM can be both read and written, and is used to hold the programs, operating system and data required by a computer system. In embedded systems, it holds the stack and the temporary variables of the programs and the operating system.

RAM is generally volatile: it does not retain the data stored in it when the system's power is turned off. Any data that must be kept while the system is off has to be written to a permanent storage device, such as flash memory or a hard disk.

2.1 CLASSIFICATION OF RAM

Fig.1: classification of RAM

2.1.1 SRAM

An SRAM chip uses bistable latching circuits (flip-flops) to store each bit. A latch holds its state, 1 or 0, for as long as power is applied, so no refresh is required, which makes SRAM very fast. SRAM is the memory closest to the processor and is used for its caches. However, SRAM is expensive: four to six transistors are needed to store each bit, so its density is much lower than that of DRAM.

Fig.2: Basic SRAM cell

2.1.2 DRAM

DRAM (Dynamic Random Access Memory) is the main memory used in all desktop and larger computers. Each elementary DRAM cell consists of a single MOS transistor and a storage capacitor, so DRAM achieves a much higher packing density and a lower cost than SRAM. Each storage cell holds one bit of information as charge on its capacitor. This charge, however, leaks off the capacitor due to the sub-threshold current of the cell transistor. Therefore, the charge must be refreshed several times each second.

Fig.3: Basic DRAM cell
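To put "several times each second" into numbers, the following minimal sketch assumes typical DDR3-style refresh parameters that are not given in this report: every row must be refreshed within a 64 ms retention window, and the controller spreads this work over 8192 refresh commands per window.

```python
RETENTION_WINDOW_MS = 64.0  # assumed: every row refreshed within 64 ms
REFRESH_COMMANDS = 8192     # assumed: refresh commands issued per window


def refresh_interval_us(window_ms: float, commands: int) -> float:
    """Average spacing between refresh commands, in microseconds."""
    return window_ms * 1000.0 / commands


def refreshes_per_second(window_ms: float) -> float:
    """How many times per second each cell is refreshed."""
    return 1000.0 / window_ms


print(f"one refresh command every {refresh_interval_us(RETENTION_WINDOW_MS, REFRESH_COMMANDS):.2f} us")
print(f"each cell refreshed about {refreshes_per_second(RETENTION_WINDOW_MS):.1f} times per second")
```

With these assumed values the controller issues a refresh command roughly every 7.8 µs, and each cell is refreshed about 15.6 times per second.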

2.1.3 ASYNCHRONOUS SRAM

Asynchronous SRAM is controlled by signals such as CE (chip enable), CS (chip select), OE (output enable), WE (write enable) and RE (read enable). Its operation is not synchronized with the system clock, and it is a relatively low-speed memory.

2.1.4 SYNCHRONOUS SRAM

As computer system clock rates increased, the demand for very fast SRAMs led to variations on the standard asynchronous fast SRAM. The result was the synchronous SRAM (SSRAM). Synchronous SRAMs have their read and write cycles synchronized with the microprocessor clock and can therefore be used in very high-speed applications. An important application of synchronous SRAMs is as cache memory in Pentium- or PowerPC-based PCs and workstations.

2.1.5 RAMBUS DRAM (RDRAM)

RDRAM is a type of synchronous dynamic RAM developed by Rambus Inc. in the mid-1990s as a replacement for the then-prevalent DIMM SDRAM memory architecture. It achieves higher speed than SDRAM because data is transferred on both the rising and falling edges of the clock signal. Compared to other contemporary standards, however, Rambus showed a significant increase in latency, heat output, manufacturing complexity and cost. Because of the way Rambus designed RDRAM, an RDRAM die is inherently larger than a similar SDRAM chip: it must house the added interface circuitry. This results in a 10-20 percent price premium at 16-megabit densities and about a 5 percent penalty at 64-megabit densities.

2.1.6 DDR SDRAM

Double data rate synchronous dynamic random-access memory (DDR SDRAM) is a class of memory integrated circuits used in computers. Compared to single data rate (SDR) SDRAM, the DDR SDRAM interface makes higher transfer rates possible through stricter control of the timing of the electrical data and clock signals. Implementations often have to use schemes such as phase-locked loops and self-calibration to reach the required timing accuracy. The interface uses double pumping (transferring data on both the rising and falling edges of the clock signal) to lower the clock frequency. One advantage of keeping the clock frequency down is that it reduces the signal integrity requirements on the circuit board connecting the memory to the controller. The name "double data rate" refers to the fact that a DDR SDRAM at a given clock frequency achieves nearly twice the bandwidth of an SDR SDRAM running at the same clock frequency, due to this double pumping.
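The effect of double pumping on clock frequency can be shown with a short calculation. This is a minimal sketch; it assumes a 64-bit (8-byte) module data bus, which is typical for DIMMs but not stated in this report, and the function names are illustrative.

```python
BUS_WIDTH_BYTES = 8  # assumed 64-bit DIMM data bus


def peak_bandwidth_gb_s(clock_mhz: float, transfers_per_clock: int) -> float:
    """Peak bandwidth in GB/s (10^9 bytes/s): clock x transfers per clock x bus width."""
    transfers_per_second = clock_mhz * 1e6 * transfers_per_clock
    return transfers_per_second * BUS_WIDTH_BYTES / 1e9


def clock_needed_mhz(target_mt_s: float, transfers_per_clock: int) -> float:
    """I/O clock (MHz) needed to reach a target data rate in mega-transfers/s."""
    return target_mt_s / transfers_per_clock


# Same 133 MHz clock: SDR moves one word per cycle, DDR moves two.
print(f"SDR at 133 MHz: {peak_bandwidth_gb_s(133, 1):.2f} GB/s")
print(f"DDR at 133 MHz: {peak_bandwidth_gb_s(133, 2):.2f} GB/s")

# Same 266 MT/s data rate: DDR needs only half the clock frequency.
print(f"266 MT/s needs {clock_needed_mhz(266, 1):.0f} MHz (SDR) "
      f"or {clock_needed_mhz(266, 2):.0f} MHz (DDR)")
```

The second comparison is the point made above: for the same data rate, DDR runs the board-level clock at half the frequency, which eases signal-integrity requirements.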

2.1.7 DDR1

Double Data Rate SDRAM, or simply DDR1, was designed to replace SDRAM. DDR1 was originally referred to as DDR-SDRAM or simply DDR; when DDR2 was introduced, DDR became known as DDR1. As the name implies, DDR doubles the data rate by transferring data on both the rising and falling edges of the clock signal. Earlier memory technology such as SDRAM transferred data only once per clock cycle. The bandwidth of DDR1 is 2.66 GBps.

2.1.8 DDR2

DDR2 is the generation of memory developed after DDR. DDR2 increased the data transfer rate (bandwidth) by raising the operating frequency to match higher front-side bus (FSB) frequencies and by doubling the prefetch buffer size from 2 bits to 4 bits. DDR2 uses a 240-pin DIMM and operates at 1.8 V; the lower voltage counters the heating effect of the higher data transfer frequency. DDR, by contrast, operates at 2.5 V and uses a 184-pin DIMM. DDR2 therefore requires a different motherboard socket and is not compatible with motherboards designed for DDR: the DDR2 DIMM key does not align with the DDR DIMM key. If a DDR2 module is forced into a DDR socket, the socket will be damaged and the memory will be exposed to a higher voltage than it is designed for. The bandwidth of DDR2 is 5.34 GBps.

2.1.9 DDR3

DDR3 was the next generation of memory, introduced in the summer of 2007 as the natural successor to DDR2. DDR3 increased the prefetch buffer size to 8 bits and raised the operating frequency once again, resulting in higher data transfer rates than its predecessor DDR2. In addition to the increased data transfer rate, the memory chip voltage was lowered to 1.5 V to counter the heating effects of the higher frequency. The trend across generations is clear: prefetch buffer size and chip operating frequency increase, while the operating voltage is lowered to counter heat. The bandwidth of DDR3 is 10.66 GBps.

Fig.4: DDR2 and DDR3 RAM
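The prefetch trend can be illustrated numerically: the data rate at the pins equals the internal DRAM core clock multiplied by the prefetch depth. This sketch assumes the same 166.67 MHz core clock for every generation, chosen only to isolate the effect of prefetch; real parts ship in several speed grades.

```python
# Prefetch depth: bits fetched from the array per data pin per core access.
PREFETCH_BITS = {"DDR1": 2, "DDR2": 4, "DDR3": 8}
CORE_CLOCK_MHZ = 166.67  # assumed internal array clock, the same for every generation


def io_data_rate_mt_s(core_clock_mhz: float, prefetch: int) -> float:
    """Data rate at the pins (MT/s) = internal core clock x prefetch depth."""
    return core_clock_mhz * prefetch


for generation, prefetch in PREFETCH_BITS.items():
    rate = io_data_rate_mt_s(CORE_CLOCK_MHZ, prefetch)
    print(f"{generation}: {prefetch}n prefetch -> {rate:.0f} MT/s")
```

Holding the slow DRAM core clock fixed and widening the prefetch is what lets the interface rate climb from 333 to 667 to 1333 MT/s across the three generations.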

2.1.10 DDR4

In computing, DDR4 SDRAM, an abbreviation for double data rate type four synchronous dynamic random-access memory, is a type of dynamic random-access memory (DRAM) with a high-bandwidth interface that was expected to be released to the market in 2012. It is one of several variants of DRAM, which has been in use since the early 1970s, and it is not compatible with any earlier type of random-access memory (RAM) due to different signaling voltages, physical interface and other factors. The bandwidth of DDR4 is 21.34 GBps.
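The bandwidth figures quoted for DDR1 to DDR4 follow from peak bandwidth = data rate × bus width. This sketch assumes a 64-bit (8-byte) module bus and the speed grades DDR-333, DDR2-667, DDR3-1333 and DDR4-2666; the report does not name the speed grades, so these are an assumption about which parts the figures refer to.

```python
BUS_WIDTH_BYTES = 8  # assumed 64-bit (non-ECC) DIMM data bus

# Assumed speed grades in mega-transfers per second (exact values are
# 1000/3, 2000/3, 4000/3 and 8000/3 MT/s).
DATA_RATE_MT_S = {
    "DDR1-333": 1000 / 3,
    "DDR2-667": 2000 / 3,
    "DDR3-1333": 4000 / 3,
    "DDR4-2666": 8000 / 3,
}


def module_bandwidth_gb_s(data_rate_mt_s: float, bus_bytes: int = BUS_WIDTH_BYTES) -> float:
    """Peak module bandwidth in GB/s (10^9 bytes per second)."""
    return data_rate_mt_s * 1e6 * bus_bytes / 1e9


for name, rate in DATA_RATE_MT_S.items():
    print(f"{name}: {module_bandwidth_gb_s(rate):.2f} GB/s")
```

Under these assumptions the computed values agree with the figures quoted above to within 0.01 GB/s; the small differences come from rounding.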

3. PROBLEMS WITH EXISTING MEMORY TECHNOLOGIES

3.1 Latency (memory wall)

Latency is the measure of delay experienced in a system. It is well known

that DRAM access latencies have not decreased at the same rate as

microprocessor cycle times. This leads to the situation where the relative memory

access time (in CPU cycles) keeps increasing from one generation to the next.

This problem is popularly referred to as the Memory Wall.
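The memory wall can be made concrete by converting a fixed DRAM access latency into processor cycles. The 50 ns latency and the clock frequencies below are illustrative assumptions, not measurements from this report.

```python
DRAM_LATENCY_NS = 50.0  # assumed access latency, roughly constant across generations


def latency_in_cycles(dram_latency_ns: float, cpu_clock_ghz: float) -> float:
    """CPU cycles that elapse during one DRAM access (1 GHz = 1 cycle per ns)."""
    return dram_latency_ns * cpu_clock_ghz


for ghz in (0.5, 1.0, 2.0, 3.0):
    print(f"{ghz} GHz CPU: {latency_in_cycles(DRAM_LATENCY_NS, ghz):.0f} cycles per access")
```

At 1 GHz the assumed latency costs 50 cycles; at 3 GHz the same access costs 150 cycles, even though the DRAM itself has not become any slower.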

3.2 Bandwidth related issues

As processor clock rates increased, fast memory did not become correspondingly cheaper. Static memory (SRAM) is faster and does not need to be refreshed, but it is expensive. Dynamic memory (DRAM) must be refreshed periodically, is slower, and costs less than static memory. In general, the speed difference between a processor and DRAM is a factor of about 50. This means that if a core needs data directly from memory, it must wait about 50 cycles until the data is available.

3.3 Power or energy

Because conventional wire-bonding technology and long horizontal wiring are used to connect memory chips, inductive losses occur. Power is also wasted by buffers (repeaters that propel signals through lengthy circuits). As a result, the DDR3 RAM in current use consumes about 517 mW per GB/s of bandwidth. This heats the memory chips and may cause damage if a proper cooling mechanism is not adopted.

3.4 Random request rate

Because a multi-core processor has several cores executing different instruction streams simultaneously, each core may need data from a different memory location at the same time. The combined request stream seen by the memory is therefore highly random.

3.5 Scalability

To increase memory bandwidth, more data lines are needed. This is possible only to a limited extent with the RAM modules in current use, so memory bandwidth cannot easily be scaled.

3.6 Memory capacity per unit footprint

The silicon area required to build 1 GB of RAM is 294 mm², so memory modules are large. A DDR3 module has a form factor of 133.35 mm × 30 mm × 10 mm.

4. HYBRID MEMORY CUBE (HMC)

The HMC is a stack of heterogeneous dies. A standard DRAM building block can be combined with various versions of application-specific logic. Each 1 Gb DRAM layer is optimized for concurrency and high bandwidth. As shown in Fig. 5, the HMC device uses through-silicon via (TSV) technology and fine-pitch copper pillar interconnect. Common DRAM logic is offloaded to a high-performance logic die. DRAM transistors have traditionally been designed for low cost and low leakage; the logic die, with high-performance transistors, is responsible for DRAM sequencing, refresh, data routing, error correction and the high-speed interconnect to the host. TSVs enable thousands of connections in the Z direction. This greatly reduces the distance data needs to travel, resulting in improved power efficiency. The stacking of many dense DRAM devices produces a very high-density footprint.

Fig.5: HMC system

The HMC was constructed with 1,866 TSVs on a ~60 µm pitch. Energy per bit was measured at 3.7 pJ/bit for the DRAM layers and 6.78 pJ/bit for the logic layer, netting 10.48 pJ/bit in total for the HMC prototype. This compares to 65 pJ/bit for existing DDR3 modules.
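Energy per bit and the milliwatts-per-GB/s figure used in Section 3.3 are two views of the same quantity: 1 GB/s is 8 × 10^9 bits per second, so power per GB/s = energy per bit × 8 × 10^9. This sketch converts the per-bit figures quoted above; the helper name is illustrative.

```python
BITS_PER_GB = 8e9  # 1 GB = 10^9 bytes = 8 * 10^9 bits


def mw_per_gb_s(pj_per_bit: float) -> float:
    """Power (mW) needed to sustain 1 GB/s at the given energy per bit."""
    joules_per_bit = pj_per_bit * 1e-12
    watts = joules_per_bit * BITS_PER_GB  # J/bit * bit/s = W
    return watts * 1e3


hmc_total = 3.7 + 6.78  # DRAM layers + logic layer, pJ/bit
ddr3 = 65.0             # pJ/bit

print(f"HMC prototype: {hmc_total:.2f} pJ/bit -> {mw_per_gb_s(hmc_total):.1f} mW per GB/s")
print(f"DDR3 module:   {ddr3:.2f} pJ/bit -> {mw_per_gb_s(ddr3):.1f} mW per GB/s")
```

The DDR3 result, 520 mW per GB/s, is consistent with the 517 mW/GB/sec quoted in Section 3.3, while the HMC prototype needs roughly 84 mW for the same bandwidth.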

5. ARCHITECTURE OF HMC

The HMC DRAM is a 68 mm², 50 nm, 1 Gb die segmented into multiple autonomous partitions. Each partition includes two independent memory banks, for a total of 128 banks per HMC. Each partition supports a closed-page policy and full cache-line transfers of 32 to 256 bytes. Memory vaults are vertical stacks of DRAM partitions (Fig.6).

Fig.6: HMC Structure

This structure allows more than 16 concurrent operations per stack. ECC is included with the data to support reliability, availability and serviceability (RAS) features. The efficient page size and the short distance the data travels result in very low energy per bit. The TSV signaling rate was targeted at less than 2 Gb/s to ensure yield. This resulted in more robust timing while reducing the need for high-speed transistors in the DRAM process. Each partition has 32 data TSV connections plus additional command/address/ECC connections.

The tiled architecture and large number of banks result in lower system latency under heavy load. The DRAM is optimized for the random transactions typical of multi-core processors. The DRAM layers are designed to support short cycle times, use short data paths and have highly localized addressing/control paths. Each partition, including the TSV pattern, is optimized to keep the on-die wiring and energy-consuming capacitance to a minimum. As depicted in Fig. 7, the DRAM data path extends spatially only from the data TSVs to the helper flip-flop (HFF) and write driver blocks, which are tucked up against the memory arrays. The address/command TSVs are located along the right side of Fig. 7, and the address/command circuits reside in very close proximity to the partition memory arrays. The memory arrays are designed with 256-byte pages.

Fig.7: HMC DRAM partition floor plan
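Why many banks help under a random, multi-core request stream can be estimated with a simple probability model: if k requests arrive together and each targets a bank chosen uniformly at random from B banks, the chance that no two requests collide in the same bank is the product of (1 - i/B) for i = 0 to k-1. The uniform-traffic model and the 8-bank comparison point (a typical DDR3 device) are assumptions for illustration; real address streams are not uniform.

```python
from math import prod


def p_no_bank_conflict(num_banks: int, num_requests: int) -> float:
    """Probability that num_requests uniformly random requests all hit
    different banks (the birthday problem applied to DRAM banks)."""
    if num_requests > num_banks:
        return 0.0
    return prod(1 - i / num_banks for i in range(num_requests))


for banks in (8, 128):  # assumed DDR3 device vs. the 128-bank HMC
    p = p_no_bank_conflict(banks, 8)  # 8 simultaneous requests, e.g. from 8 cores
    print(f"{banks:3d} banks: P(no conflict among 8 requests) = {p:.3f}")
```

Under these assumptions, eight simultaneous requests avoid each other only about 0.2% of the time with 8 banks, but about 80% of the time with 128 banks, which is the intuition behind lower system latency under heavy load.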

5.1 Logic base

Many of the traditional functions found in a DRAM have been moved to the logic layer, including the high-speed host interface, data distribution, address/control, refresh control and array repair functions. The DRAM is a slave to the logic layer's timing control. The logic layer contains adaptive timing and calibration capabilities that are hidden from the host, which allows the DRAM layers to be optimized for a given logic layer. Timing, refresh and thermal management are adaptive and local. The logic layer contains one DRAM sequencer per vault; local, distributed control minimizes complexity. The local sequencers are connected to the host interfaces through a crossbar switch, so any host link interface can connect to any local DRAM sequencer. The switch-based interconnect also enables a mesh of memory cubes for expandability.

Fig.8: Structure of logic base
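The crossbar arrangement can be sketched as a routing function: each request carries an address, some address bits select the vault, and the request is queued at that vault's sequencer regardless of which host link it arrived on. The vault count, the choice of address bits and all names below are hypothetical, chosen only to illustrate any-link-to-any-vault routing.

```python
from collections import deque
from dataclasses import dataclass

NUM_VAULTS = 16   # hypothetical vault count
VAULT_SHIFT = 5   # hypothetical: address bits 5-8 select the vault


@dataclass
class Request:
    link: int   # host link the request arrived on
    addr: int   # physical address
    op: str     # "RD" or "WR"


class Crossbar:
    """Toy crossbar: a request from any host link can reach any vault sequencer."""

    def __init__(self, num_vaults: int = NUM_VAULTS) -> None:
        # One FIFO per vault, standing in for that vault's local DRAM sequencer.
        self.vault_queues = [deque() for _ in range(num_vaults)]

    def vault_of(self, addr: int) -> int:
        return (addr >> VAULT_SHIFT) % len(self.vault_queues)

    def route(self, req: Request) -> int:
        vault = self.vault_of(req.addr)
        self.vault_queues[vault].append(req)
        return vault


xbar = Crossbar()
print(xbar.route(Request(link=0, addr=0x0040, op="RD")))  # -> 2
print(xbar.route(Request(link=3, addr=0x0040, op="WR")))  # -> 2, from a different link
```

Because routing depends only on the address, the link a request uses does not matter, which is what lets any host link reach any vault.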

5.2 Host Interface

The logic layer is implemented with high-performance logic transistors, which makes the high-speed SERDES (15 Gb/s) possible. Unlike a traditional DRAM, HMC uses a simple abstracted protocol: the host sends read and write commands instead of the traditional RAS and CAS signals. This effectively hides natural silicon variations and bank conflicts within the cube, away from the host, and the DRAM can be optimized on a per-vault basis. Out-of-order execution resolves resource conflicts within the cube. The result is non-deterministic timing but simple control for the host, similar to most modern system interfaces. The abstracted protocol enables advanced DRAM management.

Fig.8: HMC-Host interface
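Because the cube may complete requests out of order, a host using a packetized protocol needs a way to match each response to its request. A common approach, assumed here in simplified form, is to attach a tag to every request and have the response echo it. The packet fields, the completion order and all names below are hypothetical and do not follow the actual HMC specification.

```python
import random
from dataclasses import dataclass


@dataclass
class ReadRequest:
    tag: int    # hypothetical request tag chosen by the host
    addr: int


@dataclass
class ReadResponse:
    tag: int    # echoed back so the host can match it
    data: int


def cube_service(requests, memory, seed=1):
    """Hypothetical cube: completes requests in a shuffled order, standing in
    for internal reordering around bank conflicts."""
    order = list(requests)
    random.Random(seed).shuffle(order)
    return [ReadResponse(r.tag, memory.get(r.addr, 0)) for r in order]


def host_collect(requests, responses):
    """Host side: match each response to its outstanding request by tag."""
    outstanding = {r.tag: r.addr for r in requests}
    results = {}
    for resp in responses:
        addr = outstanding.pop(resp.tag)  # KeyError here would mean an unknown tag
        results[addr] = resp.data
    if outstanding:
        raise RuntimeError(f"requests never completed: {sorted(outstanding)}")
    return results


memory = {0x100: 11, 0x200: 22, 0x300: 33}
reqs = [ReadRequest(tag=i, addr=a) for i, a in enumerate((0x100, 0x200, 0x300))]
print(sorted(host_collect(reqs, cube_service(reqs, memory)).items()))
# [(256, 11), (512, 22), (768, 33)]
```

The host never needs to know the order in which the cube served the requests; the tag alone is enough to reassemble the results.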

6. DRAM ARRAY AND ACCESS

DRAM is usually arranged in a rectangular array of charge storage cells consisting of one capacitor and one transistor per data bit. Fig.9 shows a simple example with a 4 by 4 cell matrix. Modern DRAM matrices are many thousands of cells in height and width.

Fig.9: simple 4x4 DRAM array

The long horizontal lines connecting each row are known as word-lines. Each column of cells is composed of two bit-lines, each connected to every other storage cell in the column (Fig.9 does not show this important detail). They are generally known as the + and − bit-lines. A sense amplifier is essentially a pair of cross-connected inverters between the bit-lines. The first inverter takes its input from the + bit-line and drives the − bit-line; the second inverter takes its input from the − bit-line and drives the + bit-line. This results in positive feedback, which stabilizes once one bit-line is fully at its highest voltage and the other bit-line is at the lowest possible voltage.
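The positive feedback of the cross-coupled inverters can be approximated as exponential growth of the bit-line voltage difference until the lines reach the supply rails. This is a deliberately simplified discrete-time sketch; the supply voltage and the gain per step are assumed values, not device data.

```python
VDD = 1.5            # assumed supply voltage (V)
GAIN_PER_STEP = 2.0  # assumed regenerative gain per time step


def sense(v_plus: float, v_minus: float, max_steps: int = 50):
    """Toy sense amplifier: grow the +/- bit-line difference by positive
    feedback until it spans the supply, then latch the lines to the rails.
    Returns (v_plus, v_minus, steps)."""
    diff = v_plus - v_minus
    if diff == 0:
        raise ValueError("no difference to amplify (metastable input)")
    steps = 0
    while abs(diff) < VDD and steps < max_steps:
        diff *= GAIN_PER_STEP  # each inverter pushes the other line further apart
        steps += 1
    # The higher line ends at VDD and the lower line at 0 V.
    return (VDD, 0.0, steps) if diff > 0 else (0.0, VDD, steps)


print(sense(0.76, 0.75))  # 10 mV difference -> (1.5, 0.0, 8)
```

The sign of the initial difference alone decides which line goes high; the size of the difference only affects how many steps the amplification takes.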

6.1 Operations to read a data bit from a DRAM storage cell

1. The sense amplifiers are disconnected.

2. The bit-lines are pre-charged to exactly equal voltages that are in between

high and low logic levels. The bit-lines are physically symmetrical to

keep the capacitance equal, and therefore the voltages are equal.

3. The pre-charge circuit is switched off. Because the bit-lines are relatively

long, they have enough capacitance to maintain the pre-charged voltage

for a brief time. This is an example of dynamic logic.

4. The desired row's word-line is then driven high, turning on the cell transistors and connecting each cell's storage capacitor to its bit-line, so that charge is shared between the storage capacitor and the bit-line. If the storage capacitor is charged, charge flows onto the bit-line and its voltage rises very slightly; if the capacitor is discharged, charge flows from the bit-line into the cell and the bit-line voltage falls very slightly. The change is small because the capacitance of the bit-line is much larger than that of the storage cell capacitor.

5. The sense amplifiers are connected to the bit-lines. Positive feedback then

occurs from the cross-connected inverters, thereby amplifying the small

voltage difference between the odd and even row bit-lines of a particular

column until one bit line is fully at the lowest voltage and the other is at

the maximum high voltage. Once this has happened, the row is "open"

(the desired cell data is available).

6. All storage cells in the open row are sensed simultaneously, and the sense

amplifier outputs latched. A column address then selects which latch bit to

connect to the external data bus. Reads of different columns in the same

row can be performed without a row opening delay because, for the open

row, all data has already been sensed and latched.

7. While reading of columns in an open row is occurring, current is flowing

back up the bit-lines from the output of the sense amplifiers and

recharging the storage cells. This reinforces (i.e. "refreshes") the charge in

the storage cell by increasing the voltage in the storage capacitor if it was

charged to begin with, or by keeping it discharged if it was empty. Note

that due to the length of the bit-lines there is a fairly long propagation

delay for the charge to be transferred back to the cell's capacitor. This

takes significant time past the end of sense amplification, and thus

overlaps with one or more column reads.

8. When all the required columns in the open row have been read, the word-line is switched off to disconnect the storage cell capacitors from the bit-lines (the row is "closed"). The sense amplifier is switched off, and the bit-lines are pre-charged again.
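The charge sharing in step 4 can be quantified: after the word-line opens, the bit-line settles at V = (C_bl·V_pre + C_s·V_cell) / (C_bl + C_s). This minimal sketch assumes typical magnitudes that are not given in this report: a 150 fF bit-line, a 25 fF cell and a 1.5 V supply with half-VDD pre-charge.

```python
VDD = 1.5              # assumed supply voltage (V)
V_PRECHARGE = VDD / 2  # bit-lines pre-charged half-way between logic levels
C_BITLINE_FF = 150.0   # assumed bit-line capacitance (fF)
C_CELL_FF = 25.0       # assumed storage-cell capacitance (fF)


def bitline_after_sharing(v_cell: float) -> float:
    """Bit-line voltage after the word-line connects the cell (charge sharing)."""
    total_charge = C_BITLINE_FF * V_PRECHARGE + C_CELL_FF * v_cell
    return total_charge / (C_BITLINE_FF + C_CELL_FF)


def read_bit(v_cell: float) -> int:
    """Sense-amplifier decision: compare against the pre-charge reference."""
    return 1 if bitline_after_sharing(v_cell) > V_PRECHARGE else 0


for stored, v_cell in ((1, VDD), (0, 0.0)):
    v = bitline_after_sharing(v_cell)
    print(f"stored {stored}: bit-line {v:.3f} V "
          f"({(v - V_PRECHARGE) * 1000:+.0f} mV), read {read_bit(v_cell)}")
```

With these assumed values both cases move the bit-line by only about 107 mV, which is why the sense amplifier in step 5 is needed to restore full logic levels.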

7. HOW A MEMORY LOCATION IS ACCESSED IN HMC?

1. The CPU sends the address, control signals and clock to the logic base of the HMC through the high-speed link.

2. The memory map in the memory controller decodes the memory address into layer, bank, row and column.

3. The crossbar switch routes the request to the vault holding the desired memory location.

4. The command generator generates commands for the target memory (activate, read, write, precharge, refresh).

5. For a memory read, the requested location is copied into the row buffer of the selected bank.

6. For a memory write, the data in the row buffer is written into the requested location of the selected bank.
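The address-decoding step can be sketched with bit fields. The field order and widths here are hypothetical: they assume 16 vaults, 4 DRAM layers, 2 banks per partition (matching the 128 banks quoted in Section 5) and 256-byte pages, whereas the actual HMC address map is configurable and is not given in this report.

```python
from typing import NamedTuple

# Hypothetical address map, low bits first: (field name, width in bits).
# 32-byte blocks x 8 columns = 256-byte page; 16 vaults; 2 banks per
# partition; 4 DRAM layers; 2^14 rows -> 2^29 bytes = 512 MB (4 x 1 Gb dies).
FIELDS = [("offset", 5), ("column", 3), ("vault", 4),
          ("bank", 1), ("layer", 2), ("row", 14)]


class DecodedAddress(NamedTuple):
    offset: int
    column: int
    vault: int
    bank: int
    layer: int
    row: int


ADDRESS_BITS = sum(width for _, width in FIELDS)


def decode(addr: int) -> DecodedAddress:
    """Split a physical address into HMC-style fields (hypothetical layout)."""
    if not 0 <= addr < (1 << ADDRESS_BITS):
        raise ValueError("address outside the cube")
    values = {}
    for name, width in FIELDS:
        values[name] = addr & ((1 << width) - 1)  # take the low 'width' bits
        addr >>= width
    return DecodedAddress(**values)


print(ADDRESS_BITS, "address bits ->", (1 << ADDRESS_BITS) // 2**20, "MB")
print(decode(0x0123_4567))
```

Placing the vault bits just above the 256-byte page spreads consecutive pages across different vaults so they can be accessed in parallel; this is an illustrative choice, not a documented HMC default.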

8. TSV TECHNOLOGY

TSV is an important developing technology that uses short, vertical electrical connections, or vias, that pass through a silicon wafer to establish an electrical connection from the active side to the backside of the die. This provides the shortest interconnect path and creates an avenue for the ultimate in 3D integration. TSV technology offers greater space efficiency and higher interconnect density than wire bonding and flip-chip stacking. When combined with micro-bump bonding and advanced flip-chip technology, TSV technology enables a higher level of functional integration and performance in a smaller form factor.

While two dies could be combined using conventional wire-bonding techniques, the inductive losses would reduce the speed of data exchange, in turn eroding the performance benefit. TSV addresses the data exchange issues of wire bonding and offers several other attractive advantages. For example, TSV allows shorter interconnects between the dies, reducing the power consumed by long horizontal wiring and eliminating the space and power wasted by buffers (repeaters that propel a signal through a lengthy circuit). TSV also reduces electrical parasitics in the circuit (i.e., unintended electrical effects), increasing device switching speeds. Moreover, TSV accommodates much higher input/output density than wire bonding, which consumes much more space.

Fig.10: TSV interconnections

8.1 CHALLENGES TO TSV IMPLEMENTATION

8.1.1 Cost

Implementation cost, determined by numerous aspects of design and manufacturing, is the biggest barrier to broad commercialization of TSV technology. In particular, the current major cost barriers to TSV are bonding/de-bonding and via barrier/fill, as explained below. The value of TSV can be tremendous once production costs fit the roadmap. For example, today's smartphones integrate an RF baseband chip, flash memory and an ARM processor onto plastic at a cost of approximately $12-17. Heterogeneously integrating these chips using TSV could cut integration costs to well under $5. The industry currently considers a 30% cost increase (over wire bonding) to be acceptable for TSV, given TSV's much higher return in functionality and performance. This equates to a target cost per wafer below $150 for 300 mm substrates.

8.1.2 Design

As TSV is adopted in increasingly complex applications that combine different types of chips, design guidelines and software must keep pace to address a variety of issues. With several thousand interconnections between dies, chip architecture and layout must undergo fundamental changes. Designers of each chip type used in the integration scheme will have to work from the same master layout so that connection points line up between the chips. At the same time, designers will need to consider possible heat generation issues: stacked chips may overheat if no thermal management mechanism is included in the design. Hot spots and temperature gradients strongly affect reliability. Conventional two-dimensional (2D) thermal management techniques will not be adequate for 3D problems; more sophisticated solutions will be required.

8.1.3 Manufacturing

For greatest cost-effectiveness, the full manufacturing sequence requires seamless integration and optimization between traditional wafer-processing steps and back-end packaging. The entire flow must be optimized to deliver the greatest performance (yield, reliability) at the highest productivity (cost). In TSV applications, the process sequence differs depending on whether the via-first or via-last approach is used (as addressed below). In either approach, the TSV stack undergoes bonding, thinning, wafer processing on bonded/thinned wafers, and subsequent de-bonding. Wafers are typically bonded to carriers (glass or dummy silicon) and thinned to a thickness of 30 to 125 µm. This introduces new manufacturing challenges, including thermal budget control: to preserve the adhesive integrity of the bonding material once wafers are bonded, processing temperatures cannot exceed 200 °C. Another manufacturing challenge is the need for new automatic test capabilities compatible with 3D integration to ensure the electrical functionality of the finished devices. Chip stacking can be die-to-die (chip-to-chip), die-to-wafer, or two whole wafers stacked together. For early mainstream applications, die-to-wafer integration will be most common, involving the stacking of a single Known Good Die (KGD) onto a KGD on a wafer. Wafer-to-wafer integration will become more common only as consistently high-yielding wafers are produced.

8.2 TSV PROCESSES AND INTEGRATION

8.2.1 Via Creation

Vias for TSV 3D integration were initially created using laser ablation. However, with the increase in the number of vias and concerns regarding damage and defects, deep reactive ion etch technology, well proven in traditional semiconductor manufacturing, has become the process of choice. Depending on the application, a via-first or via-last process sequence is followed.

Via First: Vias are etched during front-end-of-line processing (i.e., during transistor creation) from the front side of a full-thickness wafer. This approach is favored by logic suppliers and is by far the most challenging. The smallest via diameters for via-first schemes tend to be 5 to 10 µm; aspect ratios are higher (10:1), posing challenges for liner and barrier step coverage and for the quality of the copper fill.

Via Last: Vias are etched during or after back-end-of-line processing (i.e., interconnect formation) from the front side of a full-thickness wafer or the backside of a thinned wafer. This approach is used in image sensors and stacked DRAM. CMOS image sensors have via diameters exceeding 40 µm with aspect ratios of 2:1. In other devices, the vias range from 10 to 25 µm with aspect ratios of 5:1.
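An aspect ratio is via depth divided by via diameter, so the diameters and ratios quoted above imply the via depths computed below. These depths can be compared with the 30 to 125 µm thinned-wafer thickness mentioned in Section 8.1.3. Pairing each diameter bound with the single quoted ratio is a simplification made for this sketch.

```python
def via_depth_um(diameter_um: float, aspect_ratio: float) -> float:
    """Via depth in um: aspect ratio = depth / diameter, so depth = diameter x ratio."""
    return diameter_um * aspect_ratio


# (scheme, smallest diameter, largest diameter or None, aspect ratio) from the text
SCHEMES = [
    ("via-first", 5, 10, 10),
    ("via-last, CMOS image sensor", 40, None, 2),  # "exceeding 40 um": no upper bound
    ("via-last, other devices", 10, 25, 5),
]

for name, d_min, d_max, ratio in SCHEMES:
    shallowest = via_depth_um(d_min, ratio)
    if d_max is None:
        print(f"{name}: at least {shallowest:.0f} um deep")
    else:
        print(f"{name}: {shallowest:.0f} to {via_depth_um(d_max, ratio):.0f} um deep")
```

Each computed depth (50 to 100 µm, at least 80 µm, and 50 to 125 µm) lies within the 30 to 125 µm range to which wafers are thinned.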

8.2.2 Via Liners

To avoid shorting to the silicon, the etched vias must be lined with an insulating layer of oxide (dielectric) before being filled with metal. If a copper fill is used, a second, metal barrier liner is also needed. Copper will most likely be used in mainstream applications for its conductivity and cost benefits. Chemical vapor deposition processes are ideal for TSV dielectric liner applications because of their inherently conformal deposition. Conformality is critical for good step coverage in the subsequent titanium metal barrier and copper seed steps. With the high-productivity Applied Producer InVia system, Applied Materials offers a highly conformal process for depositing robust insulating liners for high-aspect-ratio via-first and via-middle TSV applications. The InVia film has good breakdown voltage and leakage current properties, with excellent adhesion to the industry-standard barrier metals (Ta, Ti and TaN/Ta). Applied's plasma-based processes are ideal for integration schemes where thermal budget considerations are critical, producing oxides and nitrides that combine high-quality film properties with low-temperature (~200 °C) deposition.

Depositing the barrier layer and subsequently filling the via with metal are among the most challenging and expensive processes in the TSV flow. Titanium or tantalum are favored as barrier materials for copper TSVs, just as they are for advanced logic devices in which good step coverage is also essential. These materials are deposited using physical vapor deposition (PVD) processes, for which Applied's most advanced system, the Applied Endura Extensa PVD, delivers highly uniform step coverage and sheet resistance, allowing a thinner barrier to be deposited to promote void-free metal fill. According to Applied Materials, the system delivers the industry's best defect performance and operates at 25% lower cost of consumables than other available barrier deposition systems, with the thinner film also helping to lower the cost of ownership per wafer.

8.2.3 Via Fill

Experiments have been performed using tungsten and polysilicon as the conductive fill material for TSV vias, especially for the via-first approach. However, neither tungsten nor polysilicon conducts as well as copper, so copper fill is the mainstream approach today. For via fill with copper electroplating, Applied has partnered with Semitool to develop integrated process sequences that jointly optimize the etch, dielectric liner, barrier/seed and plating steps.

8.2.4 Chemical-Mechanical Polishing

Depending on the TSV processing sequence adopted, chemical-mechanical polishing (CMP) can be used to remove oxide or metal. Challenges include rapid removal of thick materials at a low cost of ownership (CoO) without compromising wafer topography. Applied has years of CMP experience with its Applied Reflexion family of products.

8.3 VIA-FIRST AND VIA-LAST TSV

When it comes to via formation, one of the questions that must be answered is whether to form the TSV before or after the IC is completed, i.e. "via-first" or "via-last". Several companies have developed bonding technologies for both via-first and via-last process flows, as shown in Fig.11.

Fig.11: (A) Via-last and (B) Via-first technologies

8.3.1 Vias-Last

In via-last technology, vias are formed after the dies have been fabricated, i.e., after both the front-end-of-line (FEOL) and back-end-of-line (BEOL) processes have been completed. Direct oxide bonding is normally carried out at elevated temperatures (> 1000 °C) to achieve a strong, robust bond interface, but such high temperatures are not always compatible with processed wafers. To bond completed ICs, a low-temperature direct bond process is therefore essential, and low-temperature direct oxide bonding can produce very high interface bond strength. In one implementation (Fig.12), the technology involves a quick plasma treatment (activation) followed by an aqueous ammonium hydroxide rinse (termination). Such activation/termination processes can be easily implemented at CMOS wafer foundries, integrated device manufacturers (IDMs) or outsourced semiconductor assembly and test providers (OSATs). After direct bonding, vias are formed by etching through the top chip down to the connecting pad of the lower chip.

Fig.12: Low-temperature direct oxide bonding process.

8.3.2 Vias-first

In via-first technology, vias are introduced into the wafers either before device formation (FEOL) or just before BEOL interconnect. In either case, this occurs in the fab before the die (wafer) is completed. Metal-metal bonding is favored by the industry for 3D IC integration because it simultaneously forms both the mechanical and the electrical bond. It is also generally accepted that via-first technologies will be significantly easier to manufacture, since processing is at wafer scale and the vias are shallower and smaller. However, a significant drawback of one such process, copper thermal compression (CuTC) bonding, is throughput. This bonding process involves heating the bonded wafers to 350-400 °C for 30+ minutes under pressure, so the pre-bonded wafer pair must spend considerable time at one bonding station. To address this bottleneck, commercial aligner/bonder tool manufacturers have developed multiple bonding stations, which add significant cost to these tools. Without question, the industry is looking for a lower-CoO method to bond wafers for 3D integration.

The direct oxide bond is initiated in a few seconds with a standard pick-and-place tool (wafer-to-wafer, W2W, or die-to-wafer, D2W). The wafers are then batch-heated in a cleanroom oven to ~300 °C to form a low-resistance electrical bond at the aligned Ni-Ni interface. Because the activated/terminated oxide layers are bonded together with high strength, the Ni-Ni interface is held under enough internal pressure that, when the nickel expands at elevated temperature owing to its higher coefficient of thermal expansion (CTE) than the surrounding oxide, a reliable metallic bond results. The combination of a lower-cost pick-and-place tool and high wafer throughput gives this low-temperature oxide bonding process a lower CoO.
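The throughput argument in this subsection can be made concrete with simple arithmetic on bonding-tool occupancy. This is a rough sketch: the 30-minute CuTC station time is the report's figure (used as a lower bound), while the 5-second oxide bond time is a hypothetical stand-in for "a few seconds", and alignment, loading and handling overheads are ignored.

```python
import math


def bonder_throughput_per_hour(seconds_per_pair: float, stations: int = 1) -> float:
    """Wafer pairs per hour released by the bonding tool (handling time ignored)."""
    return stations * 3600.0 / seconds_per_pair


# CuTC: each pre-bonded pair occupies a station for 30+ minutes (report figure;
# 30 minutes is used as a lower bound).
cutc = bonder_throughput_per_hour(30 * 60)

# Direct oxide bond: initiated "in a few seconds". 5 s is a hypothetical value.
# The ~300 °C anneal runs as a batch in a separate oven, so it does not tie up
# the pick-and-place tool.
oxide = bonder_throughput_per_hour(5)

print(f"CuTC, one station:      {cutc:.0f} pairs/h")
print(f"Direct oxide, one tool: {oxide:.0f} pairs/h")
print(f"CuTC stations to match: {math.ceil(oxide / cutc)}")
```

Because real alignment and handling times are ignored, the actual gap is smaller than this, but the sketch shows why adding bonding stations to a CuTC tool raises cost rather than solving the problem.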


9. MEMORY TECHNOLOGY COMPARISON

Fig.13: Bandwidth Equivalence


10. ADVANTAGES

Reduced latency: With vastly more responders built into HMC, we expect lower queue delays and higher bank availability. This can substantially reduce system latency, which is especially attractive in network system architectures.

Increased bandwidth: A single HMC can provide more than 15 times the bandwidth of a DDR3 module. The speed comes from a very fast serial (SERDES) interface, unlike the slower parallel interface used in current DRAM modules.

Power reduction: HMC is far more energy-efficient than current memory, using 70% less energy per bit than DDR3.

Smaller physical systems: HMC's stacked architecture uses nearly 90% less space than today's RDIMMs.

Adaptable to multiple platforms: Logic-layer flexibility allows HMC to be tailored to multiple platforms and applications.
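The bandwidth and energy figures in the list above can be checked with back-of-envelope arithmetic. This sketch assumes the DDR3 baseline is a single 64-bit DDR3-1333 module; the report does not say which DDR3 speed grade it compares against, so that choice is an assumption. Peak bandwidth is transfer rate times bus width in bytes.

```python
def peak_bandwidth_gb_s(mega_transfers_per_s: float, bus_width_bits: int) -> float:
    """Peak bandwidth in GB/s (1 GB = 10**9 bytes): transfer rate x bus width in bytes."""
    return mega_transfers_per_s * 1e6 * (bus_width_bits / 8) / 1e9


# Assumption: the DDR3 baseline is one 64-bit DDR3-1333 module.
ddr3 = peak_bandwidth_gb_s(1333.33, 64)
hmc_floor = 15 * ddr3  # "more than 15x", so this is a lower bound

# "70% less energy per bit": HMC spends 0.3 of DDR3's energy on each bit,
# so for the same energy it moves 1 / 0.3, or about 3.3x, as many bits.
relative_energy_per_bit = 1 - 0.70
bits_per_joule_gain = 1 / relative_energy_per_bit

print(f"DDR3-1333 module: {ddr3:.2f} GB/s")
print(f"15x DDR3:         {hmc_floor:.0f} GB/s")
print(f"Bits per joule:   {bits_per_joule_gain:.2f}x DDR3")
```

With a DDR3-1600 module (12.8 GB/s) as the baseline, the same 15x factor would give 192 GB/s instead, so the headline comparison depends on which DDR3 speed grade is assumed.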

11. DISADVANTAGES

High cost (30% higher than DDR3).

Complex design.

Manufacturing challenges.

Requires a new motherboard form factor.


12. FUTURE

Now that the baseline technology has been verified, additional variations of HMC will follow. DRAM process geometry and cell size will continue to shrink, to half their size today. This, together with improved stacking, will allow greater density for a given cube bandwidth and area. HMC devices will extend beyond 8 GB. The number of TSV connections will double to create a cube capable of 320 GB/s and beyond.

New interconnects will be optimized for a given system topology. Short-reach SERDES capable of less than 1 pJ/bit are being developed. Medium-reach SERDES will serve 8 to 10 inches of FR4, and silicon photonics will extend the reach to 10 meters and beyond. Atomic memory operations, scatter/gather, floating-point processors, cache coherency and metadata are natural candidates for inclusion in the HMC. Manipulating data as close as possible to where it resides is the highest-bandwidth, lowest-power solution.
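An energy-per-bit figure becomes a power figure by multiplying by the bit rate. The sketch below combines two numbers from this section, the 1 pJ/bit short-reach SERDES target and a 320 GB/s cube, under the assumption (not stated in the report) that all 320 GB/s crosses such a link exactly once; 1 pJ/bit is treated as an upper bound since the target is "less than 1 pJ/bit".

```python
def link_power_watts(energy_pj_per_bit: float, bandwidth_gb_s: float) -> float:
    """Power = energy per bit x bit rate; bandwidth in GB/s (10**9 bytes/s)."""
    bits_per_second = bandwidth_gb_s * 1e9 * 8
    return energy_pj_per_bit * 1e-12 * bits_per_second


# Figures from the report: < 1 pJ/bit short-reach SERDES, 320 GB/s cube.
# Assumption: every byte of the 320 GB/s crosses the SERDES link once.
print(f"SERDES power at 320 GB/s: {link_power_watts(1.0, 320):.2f} W (upper bound)")
```

At these figures the interface draws at most about 2.56 W, and halving the energy per bit halves that power, which is why per-bit energy is the metric quoted for new interconnects.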

13. CONCLUSION


DDR3 and DDR4 are not enough; it is time for a "revolution" in system memory that offers dramatic improvements in bandwidth, latency, and power efficiency. The Hybrid Memory Cube developed by Micron is the fastest and most efficient Dynamic Random Access Memory (DRAM) ever built. Through its collaboration with IBM, Micron will provide the industry's most capable memory offering. The goal is to bring the first 3D chip modules to market by 2013.

Dept. of ECE, College of Engg., Poonjar

31

Das könnte Ihnen auch gefallen

- Shoe Dog: A Memoir by the Creator of NikeVon EverandShoe Dog: A Memoir by the Creator of NikeBewertung: 4.5 von 5 Sternen4.5/5 (537)

- Super Position and Statically Determinate BeamDokument25 SeitenSuper Position and Statically Determinate BeamHemanth Sreej ONoch keine Bewertungen

- The Yellow House: A Memoir (2019 National Book Award Winner)Von EverandThe Yellow House: A Memoir (2019 National Book Award Winner)Bewertung: 4 von 5 Sternen4/5 (98)

- Instructions For Data EntryDokument1 SeiteInstructions For Data EntryHemanth Sreej ONoch keine Bewertungen

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5794)

- Hybrid Memory CubeDokument22 SeitenHybrid Memory CubeHemanth Sreej ONoch keine Bewertungen

- UranlungalDokument18 SeitenUranlungalHemanth Sreej O100% (1)

- The Little Book of Hygge: Danish Secrets to Happy LivingVon EverandThe Little Book of Hygge: Danish Secrets to Happy LivingBewertung: 3.5 von 5 Sternen3.5/5 (400)

- Flat Plate Flooring SystemDokument21 SeitenFlat Plate Flooring SystemHemanth Sreej O100% (5)

- Grit: The Power of Passion and PerseveranceVon EverandGrit: The Power of Passion and PerseveranceBewertung: 4 von 5 Sternen4/5 (588)

- Flat Plate Flooring SystemDokument21 SeitenFlat Plate Flooring SystemHemanth Sreej O100% (5)

- Elon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureVon EverandElon Musk: Tesla, SpaceX, and the Quest for a Fantastic FutureBewertung: 4.5 von 5 Sternen4.5/5 (474)

- Different Types of RAM SDRAM (Synchronous DRAM)Dokument2 SeitenDifferent Types of RAM SDRAM (Synchronous DRAM)Pro KodigoNoch keine Bewertungen

- A Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryVon EverandA Heartbreaking Work Of Staggering Genius: A Memoir Based on a True StoryBewertung: 3.5 von 5 Sternen3.5/5 (231)

- mx46533v PDFDokument108 Seitenmx46533v PDFLiunix BuenoNoch keine Bewertungen

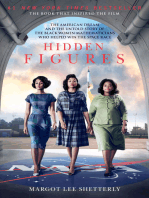

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (895)

- Rambus Memory SystemDokument3 SeitenRambus Memory Systemapi-3850195Noch keine Bewertungen

- Team of Rivals: The Political Genius of Abraham LincolnVon EverandTeam of Rivals: The Political Genius of Abraham LincolnBewertung: 4.5 von 5 Sternen4.5/5 (234)

- DLL Design Examples, Design Issues - TipsDokument56 SeitenDLL Design Examples, Design Issues - TipsdinsulpriNoch keine Bewertungen

- Never Split the Difference: Negotiating As If Your Life Depended On ItVon EverandNever Split the Difference: Negotiating As If Your Life Depended On ItBewertung: 4.5 von 5 Sternen4.5/5 (838)

- 1.1 1 - Computer - Hardware Converted2 1Dokument40 Seiten1.1 1 - Computer - Hardware Converted2 1Diana grace nabecesNoch keine Bewertungen

- The Emperor of All Maladies: A Biography of CancerVon EverandThe Emperor of All Maladies: A Biography of CancerBewertung: 4.5 von 5 Sternen4.5/5 (271)

- Cache Memory: Computer Architecture Unit-1Dokument54 SeitenCache Memory: Computer Architecture Unit-1KrishnaNoch keine Bewertungen

- Devil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaVon EverandDevil in the Grove: Thurgood Marshall, the Groveland Boys, and the Dawn of a New AmericaBewertung: 4.5 von 5 Sternen4.5/5 (266)

- Different RAM Types and Its UsesDokument8 SeitenDifferent RAM Types and Its Usesdanielle leigh100% (6)

- On Fire: The (Burning) Case for a Green New DealVon EverandOn Fire: The (Burning) Case for a Green New DealBewertung: 4 von 5 Sternen4/5 (74)

- A Chapter 6Dokument88 SeitenA Chapter 6maybellNoch keine Bewertungen

- BY: For:: Ahmad Khairi HalisDokument19 SeitenBY: For:: Ahmad Khairi HalisKhairi B HalisNoch keine Bewertungen

- The Unwinding: An Inner History of the New AmericaVon EverandThe Unwinding: An Inner History of the New AmericaBewertung: 4 von 5 Sternen4/5 (45)

- RAM TechnologiesDokument25 SeitenRAM TechnologiesAmarnath M DamodaranNoch keine Bewertungen

- Dsa 00481045Dokument2 SeitenDsa 00481045Johnny LolNoch keine Bewertungen

- RAM Job Interview Questions and AnswersDokument8 SeitenRAM Job Interview Questions and AnswersmohamedNoch keine Bewertungen

- The Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersVon EverandThe Hard Thing About Hard Things: Building a Business When There Are No Easy AnswersBewertung: 4.5 von 5 Sternen4.5/5 (345)

- Chapter 2 COM101Dokument5 SeitenChapter 2 COM101Christean Val Bayani ValezaNoch keine Bewertungen

- RetroMagazine 05 EngDokument86 SeitenRetroMagazine 05 Engionut76733Noch keine Bewertungen

- The World Is Flat 3.0: A Brief History of the Twenty-first CenturyVon EverandThe World Is Flat 3.0: A Brief History of the Twenty-first CenturyBewertung: 3.5 von 5 Sternen3.5/5 (2259)

- Dpco Unit 3,4,5 QBDokument18 SeitenDpco Unit 3,4,5 QBAISWARYA MNoch keine Bewertungen

- Chapter 8 - Memory Basics: Logic and Computer Design FundamentalsDokument38 SeitenChapter 8 - Memory Basics: Logic and Computer Design FundamentalsAboNawaFNoch keine Bewertungen

- m01 Connect Internal HardwareDokument64 Seitenm01 Connect Internal Hardwaretewachew mitikeNoch keine Bewertungen

- Computer Hardware Servicing 8: Perform Mensuration and CalculationDokument14 SeitenComputer Hardware Servicing 8: Perform Mensuration and CalculationkreamerNoch keine Bewertungen

- The Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreVon EverandThe Gifts of Imperfection: Let Go of Who You Think You're Supposed to Be and Embrace Who You AreBewertung: 4 von 5 Sternen4/5 (1090)

- Motherboard ProjectDokument52 SeitenMotherboard Projectvarunsen95% (19)

- Digital Planet 8 Teachers ManualDokument44 SeitenDigital Planet 8 Teachers Manualur mom100% (2)

- Coa CH4Dokument6 SeitenCoa CH4vishalNoch keine Bewertungen

- UNIT 1 - Computer System ServicingDokument109 SeitenUNIT 1 - Computer System ServicingRodrigo CalapanNoch keine Bewertungen

- The Sympathizer: A Novel (Pulitzer Prize for Fiction)Von EverandThe Sympathizer: A Novel (Pulitzer Prize for Fiction)Bewertung: 4.5 von 5 Sternen4.5/5 (121)

- Css For Grade 7&8 - FQL9 - CC2 - 2. Input Data Into ComputerDokument11 SeitenCss For Grade 7&8 - FQL9 - CC2 - 2. Input Data Into ComputerRicky NeminoNoch keine Bewertungen

- 11 CSS Module Week 3Dokument23 Seiten11 CSS Module Week 3Alexander IbarretaNoch keine Bewertungen

- Chapter 9 - Memory Basics: Logic and Computer Design FundamentalsDokument33 SeitenChapter 9 - Memory Basics: Logic and Computer Design FundamentalsYou Are Not Wasting TIME HereNoch keine Bewertungen

- Assignment: Embedded SystemsDokument6 SeitenAssignment: Embedded SystemsSudarshanNoch keine Bewertungen

- Coa-2-Marks-Q & ADokument30 SeitenCoa-2-Marks-Q & AArup RakshitNoch keine Bewertungen

- TEJ3M1 Sample Test#1 Ch#1,4Dokument7 SeitenTEJ3M1 Sample Test#1 Ch#1,4Kaylon JosephNoch keine Bewertungen

- Synchronous Dynamic Random-Access MemoryDokument21 SeitenSynchronous Dynamic Random-Access MemorychahoubNoch keine Bewertungen

- CHN Mod 2Dokument16 SeitenCHN Mod 2AlenNoch keine Bewertungen

- Her Body and Other Parties: StoriesVon EverandHer Body and Other Parties: StoriesBewertung: 4 von 5 Sternen4/5 (821)