Ch4.

Zero-Error Data Compression

Yuan Luo

Content

Ch4. Zero-Error Data Compression

4.1 The Entropy Bound

4.2 Prefix Codes

4.2.1 Definition and Existence

4.2.2 Huffman Codes

4.3 Redundancy of Prefix Codes

4.1 The Entropy Bound

Definition 4.1 A D-ary source code $C$ for a source random variable $X$ is a mapping from $\mathcal{X}$ to $\mathcal{D}^*$, the set of all finite length sequences of symbols taken from a D-ary code alphabet.

Definition 4.2 A code C is uniquely decodable if for

any finite source sequence, the sequence of code

symbols corresponding to this source sequence is

different from the sequence of code symbols

corresponding to any other (finite) source sequence.

Example 1. Let $\mathcal{X} = \{A, B, C, D\}$. Consider the code C defined by

$C(A) = 0$, $C(B) = 1$, $C(C) = 01$, $C(D) = 10$.

Then all three source sequences AAD, ACA, and AABA produce the code sequence 0010. Thus from the code sequence 0010, we cannot tell which of the three source sequences it comes from. Therefore, C is not uniquely decodable.
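
The ambiguity in Example 1 can be checked by brute force. The following is a minimal Python sketch, assuming the codeword table A→0, B→1, C→01, D→10 (an assumption that is consistent with the three source sequences named in the example); the function names `encode` and `preimages` are illustrative only.

```python
from itertools import product

# Assumed codeword table, consistent with AAD, ACA and AABA all mapping to 0010.
CODE = {"A": "0", "B": "1", "C": "01", "D": "10"}

def encode(source_sequence):
    """Concatenate the codewords of the source symbols."""
    return "".join(CODE[s] for s in source_sequence)

def preimages(code_sequence):
    """List every source sequence whose encoding equals code_sequence.

    Each codeword has at least one symbol, so a source sequence longer
    than the code sequence can never match.
    """
    found = []
    for n in range(1, len(code_sequence) + 1):
        for seq in product(CODE, repeat=n):
            if encode(seq) == code_sequence:
                found.append("".join(seq))
    return found

print(preimages("0010"))  # ['AAD', 'ACA', 'AABA']
```

More than one preimage for a single code sequence is exactly what Definition 4.2 rules out.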

Theorem (Kraft Inequality)

Let C be a D-ary source code, and let $l_1, l_2, \ldots, l_m$ be the lengths of the codewords. If C is uniquely decodable, then

$\sum_{k=1}^{m} D^{-l_k} \le 1$.
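
As a quick numerical check of the Kraft inequality, the sketch below evaluates the sum $\sum_k D^{-l_k}$ exactly with rational arithmetic. The function name `kraft_sum` and the two length lists are illustrative assumptions, not taken from the slides.

```python
from fractions import Fraction

def kraft_sum(lengths, D=2):
    """Return sum_k D**(-l_k) as an exact fraction."""
    return sum(Fraction(1, D ** l) for l in lengths)

# Lengths (1, 1, 2, 2): the sum exceeds 1, so by the theorem no
# uniquely decodable binary code can have these codeword lengths.
print(kraft_sum([1, 1, 2, 2]))  # 3/2

# Lengths (1, 2, 3, 3): the inequality holds with equality.
print(kraft_sum([1, 2, 3, 3]))  # 1
```

Note that the theorem is used here in its contrapositive form: a Kraft sum greater than 1 rules out unique decodability, while a sum at most 1 says nothing about a particular code with those lengths.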

Example 2. Let […]. Consider the code C defined by […]. We know |…| […], so […].

Let X be a source random variable with probability distribution $\{p_1, p_2, \ldots, p_m\}$, where $m \ge 2$. When we use a uniquely decodable code C to encode the outcome of X, the expected length of a codeword is given by

$L = \sum_{i} p_i l_i$.

Theorem (Entropy Bound)

Let C be a D-ary uniquely decodable code for a source random variable X with entropy $H_D(X)$. Then the expected length of C is lower bounded by $H_D(X)$, i.e.,

$L \ge H_D(X)$.

This lower bound is tight if and only if $l_i = -\log_D p_i$ for all $i$.
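
The entropy bound can be illustrated numerically. The sketch below compares the expected length $L = \sum_i p_i l_i$ with the entropy $H_D(X)$ for two binary examples; the distributions, the codeword lengths, and the function names are assumptions chosen for illustration.

```python
import math

def expected_length(probs, lengths):
    """L = sum_i p_i * l_i."""
    return sum(p * l for p, l in zip(probs, lengths))

def entropy(probs, D=2):
    """H_D(X) = -sum_i p_i * log_D(p_i), skipping zero-probability symbols."""
    return -sum(p * math.log(p) / math.log(D) for p in probs if p > 0)

# Case 1: l_i = -log2(p_i) for every i, so the bound is met with equality.
dyadic = [0.5, 0.25, 0.125, 0.125]
print(expected_length(dyadic, [1, 2, 3, 3]), entropy(dyadic))

# Case 2: the probabilities are not powers of 1/2, so L stays strictly above H.
skewed = [0.4, 0.3, 0.2, 0.1]
print(expected_length(skewed, [1, 2, 3, 3]), entropy(skewed))
```

Both length lists satisfy the Kraft inequality with equality, so uniquely decodable codes with these lengths exist.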

Definition 4.8. The redundancy R of a D-ary

uniquely decodable code is the difference between

the expected length of the code and the entropy of

the source.

4.2 Prefix Codes

4.2.1 Definition and Existence

Definition 4.9. A code is called a prefix-free code

if no codeword is a prefix of any other codeword.

For brevity, a prefix-free code will be referred to

as a prefix code.

Theorem

There exists a D-ary prefix code with codeword lengths $l_1, l_2, \ldots, l_m$ if and only if the Kraft inequality

$\sum_{k=1}^{m} D^{-l_k} \le 1$

is satisfied.
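
The "if" direction of this theorem is constructive. One standard way to build such a code (not necessarily the construction used in the lecture) is to sort the lengths and hand out codewords in increasing numerical order. The sketch below assumes $D \le 10$ so that each code symbol can be written as a single decimal digit; all function names are illustrative.

```python
from fractions import Fraction

def to_base(value, D, width):
    """Write value in base D, zero-padded to exactly `width` digits."""
    digits = []
    for _ in range(width):
        value, r = divmod(value, D)
        digits.append(str(r))
    return "".join(reversed(digits))

def prefix_code_from_lengths(lengths, D=2):
    """Return D-ary codewords with the given lengths that form a prefix code."""
    if sum(Fraction(1, D ** l) for l in lengths) > 1:
        raise ValueError("Kraft inequality violated: no prefix code exists")
    order = sorted(range(len(lengths)), key=lambda i: lengths[i])
    codewords = [None] * len(lengths)
    value, prev_len = 0, 0
    for i in order:
        # Extend the running counter to the new length, then use it as the codeword.
        value *= D ** (lengths[i] - prev_len)
        prev_len = lengths[i]
        codewords[i] = to_base(value, D, lengths[i])
        value += 1  # the next codeword must not have this one as a prefix
    return codewords

def is_prefix_free(codewords):
    """True if no codeword is a prefix of a different codeword."""
    return not any(a != b and b.startswith(a) for a in codewords for b in codewords)

code = prefix_code_from_lengths([1, 2, 3, 3])
print(code, is_prefix_free(code))  # ['0', '10', '110', '111'] True
```

When the Kraft inequality holds, the counter never overflows the available $D^{l}$ words of the current length, which is why the construction always succeeds.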

A probability distribution $\{p_i\}$ such that for all $i$, $p_i = D^{-t_i}$, where $t_i$ is a positive integer, is called a D-adic distribution. When $D = 2$, $\{p_i\}$ is called a dyadic distribution.

Corollary 4.12. There exists a D-ary prefix code which achieves the entropy bound for a distribution $\{p_i\}$ if and only if $\{p_i\}$ is D-adic.
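
Whether a given distribution is D-adic can be tested mechanically: every probability must be an exact negative integer power of D. The following sketch uses exact rational arithmetic; the function name `d_adic_exponents` is a hypothetical helper introduced here for illustration.

```python
from fractions import Fraction

def d_adic_exponents(probs, D=2):
    """Return [t_i] with p_i == D**(-t_i), or None if the distribution is not D-adic."""
    exponents = []
    for p in probs:
        p = Fraction(p)
        if p <= 0:
            return None
        t = 0
        while p < 1:  # multiply by D until we reach or pass 1
            p *= D
            t += 1
        if p != 1 or t == 0:  # must land exactly on 1 with a positive exponent
            return None
        exponents.append(t)
    return exponents

print(d_adic_exponents([Fraction(1, 2), Fraction(1, 4), Fraction(1, 8), Fraction(1, 8)]))  # [1, 2, 3, 3]
print(d_adic_exponents([Fraction(2, 5), Fraction(3, 5)]))  # None
```

For a D-adic distribution the returned exponents are the codeword lengths $l_i = -\log_D p_i$ at which the entropy bound is tight.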

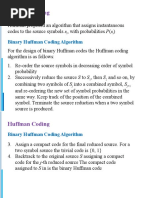

4.2.2 Huffman Codes

As we have mentioned, the efficiency of a uniquely

decodable code is measured by its expected length.

Thus for a given source X, we are naturally

interested in prefix codes which have the minimum

expected length. Such codes, called optimal codes,

can be constructed by the Huffman procedure, and

these codes are referred to as Huffman codes.

The Huffman procedure is to form a code tree such that the expected length is minimum. The procedure is described by a very simple rule:

Keep merging the two smallest probability masses until one probability mass (i.e., 1) is left.
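
The merging rule translates directly into a priority-queue loop. The following is a minimal sketch of the binary case ($D = 2$) only, assuming at least two source symbols; the function name `huffman_code`, the symbol names, and the example distribution are illustrative assumptions.

```python
import heapq
from itertools import count

def huffman_code(probs):
    """Binary Huffman code. probs maps symbol -> probability; returns symbol -> codeword."""
    tie = count()  # tie-breaker so the heap never has to compare dicts
    heap = [(p, next(tie), {sym: ""}) for sym, p in probs.items()]
    heapq.heapify(heap)
    while len(heap) > 1:
        # Pop the two smallest probability masses ...
        p0, _, group0 = heapq.heappop(heap)
        p1, _, group1 = heapq.heappop(heap)
        # ... and merge them, prepending one more code symbol to each side.
        merged = {s: "0" + w for s, w in group0.items()}
        merged.update({s: "1" + w for s, w in group1.items()})
        heapq.heappush(heap, (p0 + p1, next(tie), merged))
    return heap[0][2]

probs = {"a": 0.4, "b": 0.3, "c": 0.2, "d": 0.1}
code = huffman_code(probs)
print({s: len(w) for s, w in code.items()})
print(sum(probs[s] * len(w) for s, w in code.items()))
```

For this distribution the merges are 0.1 + 0.2, then 0.3 + 0.3, then 0.4 + 0.6, giving codeword lengths 1, 2, 3, 3 for a, b, c, d and an expected length of about 1.9. The particular 0/1 labels depend on how ties are broken, but the expected length does not.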

Lemma 4.15. In an optimal code, shorter codewords

are assigned to larger probabilities.

Lemma 4.16. There exists an optimal code in which

the codewords assigned to the two smallest

probabilities are siblings, i.e., the two

codewords have the same length and they differ

only in the last symbol.

Theorem

The Huffman procedure produces an optimal prefix

code.

Theorem

The expected length of a Huffman code, denoted by $L_{\text{Huff}}$, satisfies

$L_{\text{Huff}} < H_D(X) + 1$.

This bound is the tightest among all the upper bounds on $L_{\text{Huff}}$ which depend only on the source entropy.

From the entropy bound and the above theorem, we have

$H_D(X) \le L_{\text{Huff}} < H_D(X) + 1$.
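
As a worked check of the two-sided bound, consider a binary Huffman code for the distribution $\{0.4, 0.3, 0.2, 0.1\}$. This distribution is chosen here for illustration and does not appear in the slides.

```latex
\begin{align*}
\text{merges:}\quad & 0.1 + 0.2 = 0.3, \qquad 0.3 + 0.3 = 0.6, \qquad 0.4 + 0.6 = 1,\\
(l_1, l_2, l_3, l_4) &= (1, 2, 3, 3),\\
L_{\text{Huff}} &= 0.4(1) + 0.3(2) + 0.2(3) + 0.1(3) = 1.9,\\
H_2(X) &= -\sum_{i} p_i \log_2 p_i \approx 1.846,\\
H_2(X) \approx 1.846 \;&\le\; L_{\text{Huff}} = 1.9 \;<\; H_2(X) + 1 \approx 2.846.
\end{align*}
```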

4.3 Redundancy of Prefix Codes

Let X be a source random variable with probability distribution $\{p_1, p_2, \ldots, p_m\}$, where $m \ge 2$. A D-ary prefix code for X can be represented by a D-ary code tree with m leaves, where each leaf corresponds to a codeword.

$\mathcal{I}$: the index set of all the internal nodes (including the root) in the code tree.

$q_k$: the probability of reaching an internal node $k \in \mathcal{I}$ during the decoding process.

The probability $q_k$ is called the reaching probability of internal node $k$. Evidently, $q_k$ is equal to the sum of the probabilities of all the leaves descending from node $k$.

$\tilde{p}_{k,j}$: the probability that the $j$th branch of node $k$ is taken during the decoding process. The probabilities $\tilde{p}_{k,j}$, $0 \le j \le D-1$, are called the branching probabilities of node $k$, and

$q_k = \sum_{j} \tilde{p}_{k,j}$.

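Once node k is reached, the conditional branching distribution is

$\left\{ \frac{\tilde{p}_{k,0}}{q_k}, \frac{\tilde{p}_{k,1}}{q_k}, \ldots, \frac{\tilde{p}_{k,D-1}}{q_k} \right\}$.

Then define the conditional entropy of node k by

$h_k = H_D\left( \left\{ \frac{\tilde{p}_{k,0}}{q_k}, \frac{\tilde{p}_{k,1}}{q_k}, \ldots, \frac{\tilde{p}_{k,D-1}}{q_k} \right\} \right)$.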

Lemma 4.19. $H_D(X) = \sum_{k \in \mathcal{I}} q_k h_k$.

Lemma 4.20. $L = \sum_{k \in \mathcal{I}} q_k$.

Define the local redundancy of an internal node k by

$r_k = q_k (1 - h_k)$.

Theorem (Local Redundancy Theorem)

Let L be the expected length of a D-ary prefix code for a source random variable X, and R be the redundancy of the code. Then

$R = \sum_{k \in \mathcal{I}} r_k$.
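
The quantities of this section can be computed by a single traversal of the code tree. The sketch below assumes a hypothetical tree encoding in which a leaf is its probability and an internal node is a Python list of its children, with all leaf probabilities positive. For each internal node it collects the reaching probability $q_k$, the conditional entropy $h_k$, and the local redundancy $q_k(1 - h_k)$.

```python
import math

def internal_nodes(tree, D=2):
    """Return a list of (q_k, h_k, r_k), one entry per internal node of the code tree."""
    nodes = []

    def visit(node):
        if not isinstance(node, list):
            return node  # leaf: its own probability
        branch = [visit(child) for child in node]  # branching probabilities of this node
        q = sum(branch)                            # reaching probability q_k
        h = -sum((b / q) * math.log(b / q) / math.log(D) for b in branch if b > 0)
        nodes.append((q, h, q * (1 - h)))          # local redundancy r_k = q_k (1 - h_k)
        return q

    visit(tree)
    return nodes

# Code tree for codewords 0, 10, 110, 111 with probabilities 0.4, 0.3, 0.2, 0.1.
tree = [0.4, [0.3, [0.2, 0.1]]]
nodes = internal_nodes(tree)
L = sum(q for q, _, _ in nodes)      # expected length as the sum of reaching probabilities
H = sum(q * h for q, h, _ in nodes)  # entropy as the sum of q_k * h_k
R = sum(r for _, _, r in nodes)      # redundancy as the sum of local redundancies
print(L, H, R)
```

For this tree the three sums come out to roughly 1.9, 1.846 and 0.054, so that $R = L - H_D(X)$ as expected. The middle node, whose two branches both carry probability 0.3, contributes essentially no local redundancy.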

Corollary 4.22 (Entropy Bound). Let $R$ be the redundancy of a prefix code. Then $R \ge 0$ with equality if and only if all the internal nodes in the code tree are balanced.

Thank you!
