
DataStage Fundamentals (version 8 parallel jobs)

Staffordshire, December 2010

Module 1 DataStage and IBM Information Server

DataStage is one of a number of products that execute in the environment

called Information Server. These products share a number of services,

such as login/security, reporting and metadata delivery, supplied via an

application server and accessing a common repository that is at the heart

of Information Server.

This module outlines the architecture of Information Server and shows

how one or more DataStage servers, and other products, are able to share

the services. It helps you to understand the topology and, consequently,

why mechanisms such as the login mechanism for DataStage work as they

do.

Objectives

Having completed this module you will be able:

to name the three servers that must be available in order for

DataStage to execute jobs and to state the purpose of each

to name the three DataStage client tools used in version 8 and to

state the purpose of each

to state what DataStage does


IBM Information Server

Following its acquisition of Ascential Software in 2005, IBM used

the parallel execution technology that came with that acquisition to build a

software platform on which many related products could operate in a very

collaborative fashion, sharing a number of resources including security,

metadata handling and information delivery. This platform was called

IBM Information Server.

Initially the IBM Information Server and its associated products were

grouped under the WebSphere brand but, in 2008, IBM introduced another

brand, InfoSphere, and incorporated the information integration products

under that brand. Thus, for example, version 8.0 of DataStage was called

IBM WebSphere DataStage but version 8.1 is called IBM InfoSphere

DataStage.

Therefore, technically, the full name is now IBM InfoSphere Information

Server, and that of DataStage is IBM InfoSphere DataStage. These are too

much of a mouthful for regular use, so they tend to be shortened to just

"Information Server" and "DataStage" respectively. The same is true of all

the other products in the InfoSphere brand.

Information Server is a data integration platform consisting of a unified metadata layer, a number of services and a suite of products that consume those services and perform various information integration tasks such as data profiling, cleansing, transformation and delivery, all on a parallel execution platform whose scalability is limited only by the resources that the underlying systems can deliver.

Information Server itself can be installed on a number of UNIX operating systems (AIX, Solaris and HP-UX), on selected Linux operating systems (including zLinux, which runs on a zSeries mainframe) and on Windows Server 2003 Service Pack 2.¹

Information can be sourced from, and written to, a very large range of

heterogeneous sources through various protocols including native APIs,

ODBC, streams I/O (for sequential files), FTP and others. Connectivity is

provided to structured, unstructured, mainframe and application-sourced

data.

¹ This information is correct at the time of writing but may change over time. Always consult the IBM website for up-to-date information about supported operating systems. There are also operating system version dependencies; for example, Information Server version 8.0 supports only versions 9 and 10 of the Solaris operating system. At the time of writing the website to consult is http://www.ibm.com/software/data/infosphere/info-server/overview/requirements2.html


Information Server Database

Associated with Information Server is a database that serves as a common

repository for metadata used by any of the products in the suite. Metadata

integration allows the various products in the suite to share the same

metadata, permitting easy collaboration between business analysts, data

architects, DataStage developers, business intelligence report developers

and even end-users of the data.

The database may be (at the time of writing) DB2 version 9, Oracle version 10g or Microsoft SQL Server 2005 (the last only when installed on a Windows operating system).

Although you could investigate the structure of this database with appropriate DBA tools, it is quite complex, containing 874 related tables, so it is best regarded as opaque. By default the schema name for this database is XMETA.

Products do not access the data directly. Instead they invoke services

(delivered through a WebSphere Application Server) that provide access to

the metadata.


Information Server Services

Information Server includes a number of services that perform specific

tasks. Among these are services that manage:

login and security

metadata delivery

connectivity

reporting

The services themselves are invisible to the products that use them. They are delivered through a WebSphere Application Server (WAS) over what is called the "Application Server Backbone" (ASB). If a product server, such as a DataStage server, is on a different machine from the WAS, then an ASB agent process must be started to deliver services to that product. This, too, is invisible to the user of the product.

For example, to connect a DataStage client to a DataStage server, the user supplies the name (or IP address) of the machine hosting the Information Server, plus a user ID and password. The login/security service relays these to the Information Server, which authenticates the user. If the user has due authority, the login/security service then returns to the login screen a list of available DataStage servers and possibly, depending on which client is being used, the projects associated with each.

While this may seem quite complex it actually simplifies administration

because, for example, there is a single place where security information

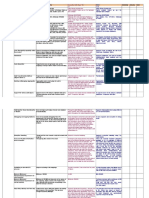

needs to be maintained, that is the Information Server. Figure 1-1 shows

the relationship between Information Server, its services and products.


Figure 1-1 Relationship between Information Server and its Product Suite
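To make the login exchange described above more concrete, here is a toy model in Python. It is a sketch only: the class `InformationServer`, its `login` method and the data it holds are hypothetical, and the real exchange passes through the login/security service over the ASB rather than through any API like this. What it does show is the order of events: the client names the Information Server host, the user is authenticated once, centrally, authority is checked, and only then is the list of DataStage servers (and, for some clients, their projects) returned.

```python
from dataclasses import dataclass, field


@dataclass
class InformationServer:
    """Hypothetical stand-in for Information Server and its login/security service."""
    host: str
    credentials: dict[str, str]          # user ID -> password (toy data only)
    authorized: set[str]                 # users with DataStage authority
    servers: dict[str, list[str]] = field(default_factory=dict)  # server -> projects

    def login(self, host, user, password, with_projects=False):
        # The client identifies the machine hosting Information Server.
        if host != self.host:
            raise ConnectionError(f"no Information Server at {host}")
        # Authentication happens in one central place.
        if self.credentials.get(user) != password:
            raise PermissionError("authentication failed")
        # Only a user with due authority receives the server list.
        if user not in self.authorized:
            raise PermissionError(f"{user} has no DataStage authority")
        if with_projects:
            # Some clients also receive the projects on each server.
            return {server: list(projects) for server, projects in self.servers.items()}
        return sorted(self.servers)
```

Because every check lives in the one `InformationServer` object, changing a password or revoking authority is a single edit, which is the administrative simplification the text describes.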


Information Server Products

The range of Information Server products is indicated in Figure 1-1 and

discussed more fully below.

Each of the products is separately licensed (that is, optionally purchased

with Information Server). Table 1-1 is a list of Information Server

products in existence at the time of writing. Roadmaps for future products

and for enhancements to existing products tend to be announced at major

conferences, such as IBM Information on Demand.

Again, be aware that IBM prefixes each of the product names shown in Table 1-1 with "IBM InfoSphere"; this is important when dealing with IBM and its resellers and support agencies.


Table 1-1 Information Server Products

| Product | Description |
|---|---|
| Business Glossary | Creates, manages and searches metadata, and associates technical, process and business metadata.² |
| Change Data Capture | Real-time change capture (from transaction logs). At the time of writing available only for DB2 and Oracle. |
| Connectivity Software | Efficient and cost-effective cross-platform, high-speed, real-time, batch and change-only integration for data sources. |
| DataStage | Extracts, transforms and loads data between multiple sources and targets. |
| FastTrack | Simplifies and streamlines communication between business analyst and developer by capturing business requirements and translating them into DataStage ETL jobs. |
| Federation Server | Defines integrated views across diverse and distributed information sources, including cost-based query optimization and integrated cache. |
| Information Analyzer | Profiles and establishes an understanding of source systems, and monitors data rules. |
| Information Services Director | Allows information integration and access processes to be published as reusable services in a service-oriented architecture. |
| Metadata Workbench | Provides end-to-end metadata management, depicting the relationships between sources and consumers. |
| QualityStage | Standardizes and matches information from heterogeneous sources using probabilistic algorithms. |

² Business Glossary Anywhere was introduced in version 8.1. This allows any term selected in any Windows application to be looked up in the business glossary.

What Is DataStage?

We begin with a few words from IBM marketing.

DataStage supports the collection, integration and transformation of large

volumes of data, with data structures ranging from simple to highly

complex. DataStage manages data arriving in real-time as well as data

received on a periodic or scheduled basis.

The scalable platform enables companies to solve large-scale business

problems through high-performance processing of massive data volumes.

By leveraging the parallel processing capabilities of multiprocessor

hardware platforms, DataStage Enterprise Edition can scale to satisfy the

demands of ever-growing data volumes, stringent real-time requirements,

and ever-shrinking batch windows.

Comprehensive source and target support for a virtually unlimited number

of heterogeneous data sources and targets in a single job includes text

files; complex data structures in XML; ERP systems such as SAP and

PeopleSoft; almost any database (including partitioned databases); web

services; and business intelligence tools like SAS.

Real-time data integration support captures messages from Message Oriented Middleware (MOM) queues using JMS or WebSphere MQ adapters to seamlessly combine data into conforming operational and historical analysis perspectives. Information Services Director provides a service-oriented architecture (SOA) for publishing data integration logic as shared services that can be reused across the enterprise. These services can simultaneously support the high-speed, high-reliability requirements of transactional processing and the high-volume bulk data requirements of batch processing.

Advanced maintenance and development enables developers to maximize

speed, flexibility and effectiveness in building, deploying, updating and

managing their data integration infrastructure. Full data integration

reduces the development and maintenance cycle for data integration

projects by simplifying administration and maximizing development

resources.

Complete connectivity between any data source and any application

ensures that the most relevant, complete and accurate data is integrated

and used by the most popular enterprise application software brands,

including SAP, Siebel, Oracle, and PeopleSoft.

Flexibility to perform information integration directly on the mainframe: DataStage for Linux on System z provides the ability to leverage existing mainframe resources in order to maximize the value of your IT investments; the scalability, security, manageability and reliability of the mainframe; and the ability to add mainframe information integration workload without added z/OS operational costs.

So what is DataStage, really?


DataStage is, first and foremost, an "ETL" tool. In this context the

acronym stands for "extraction, transformation and load".

"Programming", or developing, with DataStage involves drawing a picture

of what you want to happen during the ETL processing, compiling that

into something that can be executed (a "job"), and then requesting its

execution and monitoring the results. Once the job has been properly

tested it can then be put into production.

Figure 1-2 DataStage Designer Design Area (Canvas)

Figure 1-2 shows the design of a simple parallel job. With knowledge of

what the various icons represent you could perform a walkthrough of

this code to explain it to someone else. For example:

Distribution data are received from the mainframe; header and detail records are extracted from them separately and then joined, so that each detail row includes its header information.

One copy of these data is stored for further processing. The other copy of

these data is summarized and the summary is stored for further

processing.
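For readers who think more easily in code than in pictures, the walkthrough above can be mirrored in a few lines of plain Python. This is a sketch under stated assumptions: the record layout (a record type of "H" or "D", a key, and a dictionary of fields) and the field names `depot` and `quantity` are hypothetical, and the functions run one after another, whereas the parallel job in Figure 1-2 runs its stages concurrently on partitioned data.

```python
from collections import defaultdict


def split_records(records):
    """Separate header ("H") and detail ("D") records."""
    headers = {key: fields for rtype, key, fields in records if rtype == "H"}
    details = [(key, fields) for rtype, key, fields in records if rtype == "D"]
    return headers, details


def join_header_detail(headers, details):
    """Inner join: each detail row picks up its header's fields."""
    return [
        {"key": key, **headers[key], **fields}
        for key, fields in details
        if key in headers
    ]


def summarize(rows, group_by, measure):
    """Total one measure for each value of a grouping column."""
    totals = defaultdict(int)
    for row in rows:
        totals[row[group_by]] += row[measure]
    return dict(totals)


def run_job(records):
    headers, details = split_records(records)
    joined = join_header_detail(headers, details)
    detail_copy = list(joined)                        # copy 1: stored as-is
    summary = summarize(joined, "depot", "quantity")  # copy 2: summarized
    return detail_copy, summary
```

Given two header records (depots "North" and "South") with detail quantities 5 and 3 for North and 4 for South, `run_job` returns three joined detail rows and the summary `{"North": 8, "South": 4}`.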


DataStage Editions

There are three editions of DataStage, which differ in the job types they make available.

Server edition allows only server jobs (which must be executed in

a single stream on a server) and job sequences. Transformer stages

in server jobs generate code in a language called DataStage

BASIC. Server routines also use this language.

Enterprise edition allows server jobs, parallel jobs and job

sequences. It is with parallel jobs that these training materials are

concerned. Transformer stages in parallel jobs generate C++ code, so a compatible C++ compiler must be available on the DataStage server. Parallel routines are also written in C++.

Enterprise MVS edition allows server jobs, parallel jobs,

mainframe jobs and job sequences. Mainframe jobs are designed

using the Designer client but generate COBOL source code and job

control language (JCL) for compiling and executing that code on a

mainframe machine.

DataStage Clients

There are three DataStage client tools, which are installed when the DataStage client software is installed on a Windows PC. They are described below (shortcut icons for them may be placed on the desktop when the client software is installed).

Administrator client is used

by an administrator to

create, delete and configure

DataStage projects and

may be used by authorized

DataStage developers to edit some of the properties of a

project.

Designer client is used to

draw the pictures of

DataStage job designs (for

example Figure 1-2), to

compile job designs and to manage metadata associated

with those designs.

Director client may be used

to request execution of

compiled DataStage jobs, to


review their logs, and to monitor their execution. Director

may also be used by a DataStage administrator to alter

default values of job parameters in a particular project.

We will examine each of these client tools in subsequent modules.

Organization of DataStage Server

On a DataStage server there are two engines and one or more projects.

One engine (the server engine) is responsible for server jobs and job

sequences. This engine's location may be determined from the value of the

DSHOME environment variable and/or the pathname stored in the hidden

file .dshome in the root directory. If the DataStage server is installed on a

Windows operating system then the location of the server engine is stored

in the Windows Registry. The name of the directory in which the server

engine is located is Engine (UNIX) or DSEngine (Windows).
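The lookup order for the server engine can be sketched as follows. This is an illustrative Python function, not a DataStage utility; the name `server_engine_home` is hypothetical. It assumes a UNIX server and does not read the Windows Registry.

```python
import os
from pathlib import Path


def server_engine_home(env=None, dshome_file=Path("/.dshome")):
    """Locate the server engine directory, or return None if it is not found.

    Hypothetical helper: checks the DSHOME environment variable first, then
    the pathname stored in the hidden .dshome file in the root directory.
    The Windows Registry (used on Windows servers) is not consulted.
    """
    env = os.environ if env is None else env
    value = env.get("DSHOME")
    if value:
        return Path(value)
    try:
        text = dshome_file.read_text().strip()
    except OSError:
        # No readable .dshome file: location unknown.
        return None
    return Path(text) if text else None
```

On a UNIX DataStage server, calling `server_engine_home()` with no arguments uses the real environment and `/.dshome`; the parameters exist only so the lookup can be exercised elsewhere.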

The other engine (the parallel engine) is responsible for parallel jobs,

and components of it must be accessible to every node involved in the

execution of a parallel job. This engine's location may be determined from

the value of the APT_ORCHHOME environment variable, which may be

repeated as the PXHOME environment variable. The name of the

directory in which the parallel engine is located is PXEngine.

Ordinarily these engines are in sibling directories. That is, the Engine and

PXEngine directories share the same parent directory.
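A similar hypothetical sketch for the parallel engine checks APT_ORCHHOME, then PXHOME, and otherwise derives the PXEngine directory from the server engine directory. That fallback is an assumption: it holds only when the two engines are installed in sibling directories, as they ordinarily are.

```python
import os
from pathlib import Path


def parallel_engine_home(env=None, server_engine=None):
    """Locate the parallel engine directory (hypothetical helper).

    Checks APT_ORCHHOME, then PXHOME. If neither is set and the server
    engine directory is known, assumes the usual sibling layout in which
    the Engine and PXEngine directories share a parent directory.
    """
    env = os.environ if env is None else env
    for name in ("APT_ORCHHOME", "PXHOME"):
        value = env.get(name)
        if value:
            return Path(value)
    if server_engine is not None:
        # Assumption: sibling directories, e.g. <parent>/Engine and <parent>/PXEngine.
        return Path(server_engine).parent / "PXEngine"
    return None
```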

A DataStage project is a directory containing various files related to

storage of metadata. A DataStage project is also a schema in a local

database in which other items of metadata are stored. There is a separate

module on metadata and metadata management.

Typically a DataStage server has one project per subject area, and has

separate projects within a subject area for development, testing and

production. These do not have to be on the same server. Additional

projects may be created for training and experimentation.

DataStage Terminology

DataStage is programmed by drawing a picture of the desired ETL

process. This is then compiled into an executable object called a job.

Each job is made up of stages, which perform specific tasks, and links

between them, which represent the flow of data between stages.


Stages are represented in the graphic design by rectangular icons, and

links are represented in the graphic design by arrows joining pairs of

stages.

In the special case of job sequences, which exert control rather than

processing data, we refer to activities rather than stages, and triggers

rather than links.
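This terminology maps naturally onto a directed graph: stages are nodes, and links are directed edges along which data flow. The following Python sketch models a job that way; the `Job` class and its methods are hypothetical and do not reflect how DataStage stores job designs. `data_flow_order` returns one ordering in which every stage comes after the stages that feed it. A parallel job does not execute its stages one at a time in this order; the ordering simply reflects the direction of the links. A job sequence could be modelled the same way, with activities as nodes and triggers as edges.

```python
from dataclasses import dataclass, field
from graphlib import TopologicalSorter  # Python 3.9+


@dataclass
class Job:
    """Hypothetical model of a job design: stages joined by links."""
    name: str
    stages: set[str] = field(default_factory=set)
    links: list[tuple[str, str, str]] = field(default_factory=list)  # (link, from, to)

    def add_link(self, link, source, target):
        # Drawing a link implicitly adds the two stages it joins.
        self.stages.update((source, target))
        self.links.append((link, source, target))

    def data_flow_order(self):
        """Stages ordered so each follows its upstream stages.

        Raises graphlib.CycleError if the links form a loop.
        """
        predecessors = {stage: set() for stage in self.stages}
        for _, source, target in self.links:
            predecessors[target].add(source)
        return list(TopologicalSorter(predecessors).static_order())
```

For example, the design described for Figure 1-2 could be entered as links from a mainframe source stage to a splitting stage, then to a join, a copy, and on to the detail and summary targets.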


Review

There are three servers that must be available in order for DataStage to

execute jobs or job sequences. These servers are:

IBM Information Server, which hosts the common metadata

repository for all Information Server products.

WebSphere Application Server, which exposes services through

which products access metadata in the common repository.

DataStage Server, which hosts DataStage projects and where job

execution is initiated.

The three DataStage clients available in version 8.0 are as follows.

Administrator client creates and deletes projects, manages NLS

settings and sets project-wide properties including environment

variables accessible to the project.

Designer client manages metadata and provides a graphical user

interface for designing and compiling DataStage jobs.

Director client allows job status and logs to be inspected, and jobs to be monitored. Jobs can be run immediately or scheduled using the operating system scheduler.

DataStage is an ETL (extraction, transformation, load) tool. It can retrieve

data from many different sources, effect transformations on those data,

and load those data, or prepare those data for loading, into many different

targets.

Further Reading

Introduction to IBM Information Server (in documentation set³), particularly Chapters 2 and 7.

IBM Information Server information center (http://publib.boulder.ibm.com/infocenter/iisinfsv/v8r0/index.jsp)

Parallel Job Developer Guide (in documentation set), Chapter 1

³ Start > All Programs > IBM Information Server > Documentation
