
OPEN-SOURCE MATLAB TOOLS FOR INTERPOLATION OF SDIF SINUSOIDAL SYNTHESIS PARAMETERS

Matthew Wright
U.C. Berkeley and Stanford
CNMAT and CCRMA
matt@{cnmat.berkeley,ccrma.stanford}.edu
http://ccrma.stanford.edu/~matt/additive

Julius O. Smith III
Stanford University
Center for Computer Research in Music and Acoustics (CCRMA)
jos@ccrma.stanford.edu

ABSTRACT

We have implemented an open-source additive synthesizer in the Matlab language that reads sinusoidal models from SDIF files and synthesizes them with a variety of methods for interpolating frequency, amplitude, and phase between frames. Currently implemented interpolation techniques include linear frequency and amplitude ignoring all but initial phase, dB scale amplitude interpolation, stair-step, and cubic polynomial phase interpolation. A plug-in architecture separates the common handling of SDIF, births and deaths of partials, etc., from the specifics of each interpolation technique, making it easy to add more interpolation techniques as well as increasing code clarity and pedagogical value. We ran all synthesis interpolation techniques on a collection of 107 SDIF files and briefly discuss the perceptual differences among the techniques for various cases.

1. INTRODUCTION

Sinusoidal models are a favourite of the analysis/synthesis community [1-7], as Risset and Wessel describe in their review [8]. Sinusoidal analysis almost always happens on a per-frame basis, so that the continuous frequency, amplitude, and phase of each resulting sinusoidal track are sampled, e.g., every 5 ms. An additive synthesizer must interpret these sampled parameter values to produce continuous amplitude and phase trajectories. Although the basic idea of synthesizing a sum of sinusoids each with given time-varying frequencies and amplitudes is clear enough, there are many subtle variants having to do with the handling of phase and with the interpolation of frequency, amplitude, and phase between frames.

An additive synthesizer that simply keeps frequency and amplitude constant from one analysis frame until the next analysis frame generally introduces amplitude and frequency discontinuities at every analysis frame, resulting in undesirable synthesis artifacts. Therefore, all additive synthesizers use some form of interpolation. One of the main findings of the ICMC 2000 Analysis/Synthesis Comparison panel session [9] was that "sinusoidal modelling" is not a single sound model, but that each analysis technique makes assumptions about how the synthesizer will interpolate parameters. Here are some examples:

- Linear interpolation of frequency and amplitude between frames, ignoring all but initial phase of each partial (SNDAN's pvan [10])
- Cubic polynomial phase interpolation allowing frequency and phase continuity at each frame point (IRCAM [11, 12], SNDAN's mqan)
- Spline interpolation (PartialBench [13])
- Linear interpolation of amplitude on dB scale (SMS [14])
- Adjust each frequency trajectory to meet the partial's stated instantaneous phase by the time of the next frame, e.g., by inserting a new breakpoint halfway between two frames and using linear interpolation (IRCAM)
- Match phase only at transient times (Levine [15], Loris [16])

So questions arise: Theoretically, should all of these sinusoidal models be considered different sound models or variants of the same model? Practically, how much quality is lost when a sound model is synthesized with a different interpolation method from the one assumed by the analysis? How meaningful is it to interchange sound descriptions between tools that make differing assumptions about how synthesizers will interpolate? How do we switch gracefully between a sinusoidal model that preserves phase and one that doesn't, e.g., for preserving phase only at signal transients?

To address these practical questions, we have implemented an open-source framework for sinusoidal synthesis of SDIF (Sound Description Interchange Format) [17] files. This allows us to synthesize according to any sinusoidal model in an SDIF file (e.g., any of the analysis results of the ICMC 2000 Analysis/Synthesis Comparison) with any of a variety of inter-frame parameter interpolation techniques.

2. SDIF SINUSOIDAL TRACK MODELS

An SDIF file can contain any number of SDIF streams; each SDIF stream represents one sound description. (Most SDIF files contain a single stream, but SDIF can also be used as an archive format.) An SDIF stream consists of a time-ordered series of SDIF frames, each with a 64-bit floating-point time tag. SDIF frames need not be uniformly spaced in time, although uniform spacing is typical in additive synthesis. Each SDIF frame contains SDIF matrices; the interpretation of the rows and columns depends on the matrix type ID.

SDIF has two nearly identical sound description types for sinusoidal models: 1TRC for arbitrary sinusoidal tracks, and 1HRM for pseudo-harmonic sinusoidal tracks. 1TRC frame matrices have a column for partial index, an arbitrary number used to maintain the identity of sinusoidal tracks across frames at different times (as they potentially are born and die). For 1HRM matrices the equivalent column specifies harmonic number but otherwise has the same semantics. For both types, the other three columns are frequency (Hz), amplitude (linear), and phase (radians).
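As an illustration of this layout, the following sketch holds two consecutive 1TRC frames as plain Matlab matrices and uses the partial index column to find which tracks continue, die, or are born. The variable names, time tags, and numeric values are invented for the example; only the four columns themselves come from the description above.

```matlab
% Column positions in a 1TRC (or 1HRM) matrix.
INDEX = 1; FREQ = 2; AMP = 3; PHASE = 4;

% Two hypothetical frames, 5 ms apart. Each row is one partial.
t0 = 0.000;
frame0 = [ 1  440.0  0.50  0.00 ;
           2  880.0  0.25  1.57 ;
           7 1320.0  0.10  3.00 ];
t1 = 0.005;
frame1 = [ 1  442.0  0.48  1.40 ;
           2  879.0  0.26  2.10 ;
           9 2200.0  0.05  0.30 ];

% Partial indices, not row positions, carry identity across frames.
continuing = intersect(frame0(:, INDEX), frame1(:, INDEX));  % in both frames
dying      = setdiff(frame0(:, INDEX), frame1(:, INDEX));    % only in frame0
born       = setdiff(frame1(:, INDEX), frame0(:, INDEX));    % only in frame1
```

Here partials 1 and 2 continue, partial 7 dies, and partial 9 is born, even though partial 9 occupies the same row position that partial 7 did.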

We use the terminology "analysis frame" as a synonym for SDIF frame, because for sinusoidal track models, each SDIF frame generally results from the analysis (FFT, peak picking, etc.) of a single windowed frame of the original input sound. We say "synthesis frame" to indicate the time spanned by two adjacent SDIF frames, because the synthesizer must interpolate all sinusoid parameters from one analysis frame to the next. In other words, an analysis frame is a single SDIF frame, while a synthesis frame is the time spanned by two adjacent SDIF frames.
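To make the distinction concrete, this sketch converts a list of analysis-frame time tags into the sample ranges of the synthesis frames between them. The sampling rate and time tags are invented, and rounding each time tag to the nearest sample is an assumption of this example rather than something the text specifies.

```matlab
fs = 48000;                        % synthesis sampling rate (Hz), assumed
T  = 1 / fs;                       % sampling interval (s)
frameTimes = [0.000 0.005 0.010 0.020];   % hypothetical SDIF time tags (s)

% Sample number at which each analysis frame falls (nearest sample).
frameSamps = round(frameTimes * fs);

% Synthesis frame k covers samples fromSamp(k)+1 .. toSamp(k).
fromSamp = frameSamps(1:end-1);
toSamp   = frameSamps(2:end);

numSynthFrames = numel(frameTimes) - 1;   % N analysis frames give N-1
frameLengths   = toSamp - fromSamp;       % samples per synthesis frame
```

Four analysis frames yield three synthesis frames, and because the time tags need not be evenly spaced, the last synthesis frame here is twice as long as the first two.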

3. SOFTWARE ARCHITECTURE

Our synthesizer is implemented in the Matlab language and is available for download.¹ It uses IRCAM's SDIF Extension for Matlab [18], also available for download,² to read SDIF files from disk into Matlab data structures in memory. We designed the software architecture to make it as easy as possible to plug in different methods for interpolating sinusoidal track parameters between SDIF frames. Therefore there are two parts of the program:

1. The general-purpose part:
   - reading from an SDIF file
   - selecting a particular SDIF stream
   - selecting an arbitrary subset of the SDIF frames
   - error checking (for bad SDIF data)
   - making sure every partial track begins and ends with zero amplitude, possibly extending a partial's duration by one frame to allow the amplitude ramp
   - matching partial tracks from frame to frame (handling births and deaths of tracks, etc.)
   - allocating an array of current phase values for all partial tracks and an array for output time-domain samples
   - dispatching to the appropriate synthesis subroutine and calling it for each synthesis frame
   - returning the resulting time-domain signal as a Matlab array

2. The interpolation-method-specific part: A collection of synthesis "inner loop" subroutines, each implementing one interpolation method. Each of these routines is passed the starting and ending frequency, amplitude, and phase for each partial alive in the synthesis frame. The routines handle the actual synthesis and accumulate synthesized output samples into a given output array.

The arguments to our general-purpose synthesizer are:

1. The name of the SDIF file
2. The sampling rate for the synthesis
3. The synthesis type (i.e., form of interpolation)
4. (Optional) SDIF stream number indicating which stream to synthesize. (If this argument is omitted, the function uses the first stream of type 1TRC or 1HRM that appears in the file.)
5. (Optional) list of which frames (by number) to synthesize. (If this argument is omitted, the function synthesizes all the frames of the stream.)
6. (Optional) maximum partial index number.

¹ http://ccrma.stanford.edu/~matt/additive
² http://www.ircam.fr/sdif/download.html

The two halves of the program communicate via an "additive synthesis to-do list" matrix whose rows are all the partials that appear in a given synthesis frame and whose columns are the frequency, amplitude, and phase at the beginning and end of the synthesis frame, plus the partial/harmonic index number (so that a synthesizer can keep track of each partial's running phase between frames). The other parameters of each synthesis function are the starting and ending sample numbers to synthesize, and the synthesis sampling interval (reciprocal of the synthesis sampling rate). The two halves of the program also share an array called "output", which is a global variable so that the synthesis functions can modify it in place rather than receive a copy.
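The to-do list can be sketched as follows for a single synthesis frame. This is a minimal illustration under stated assumptions, not the authors' code: the column order, the NEW* and INDEX constant names, the frame data, and the way births and deaths are given a zero-amplitude endpoint (same frequency, phase extrapolated across the frame) are all choices made for this example. Only the OLDFREQ, OLDAMP, and OLDPHASE names appear in the paper.

```matlab
% Hypothetical column layout of the to-do matrix.
INDEX = 1; OLDFREQ = 2; OLDAMP = 3; OLDPHASE = 4;
NEWFREQ = 5; NEWAMP = 6; NEWPHASE = 7;

dt = 0.005;   % assumed duration of this synthesis frame (s)

% Two invented 1TRC frames: [index, freq (Hz), amp, phase (rad)].
frame0 = [1 440 0.50 0.0; 2 880 0.25 1.0];
frame1 = [1 442 0.48 1.4; 3 660 0.10 2.0];

ids  = union(frame0(:,1), frame1(:,1));   % every partial alive in the frame
todo = zeros(numel(ids), 7);
for r = 1:numel(ids)
    old = frame0(frame0(:,1) == ids(r), 2:4);   % empty if the partial is born
    new = frame1(frame1(:,1) == ids(r), 2:4);   % empty if the partial dies
    if isempty(old)
        % Birth: start at zero amplitude, phase extrapolated backwards.
        old = [new(1), 0, new(3) - 2*pi*new(1)*dt];
    end
    if isempty(new)
        % Death: end at zero amplitude, phase extrapolated forwards.
        new = [old(1), 0, old(3) + 2*pi*old(1)*dt];
    end
    todo(r, :) = [ids(r), old, new];
end
```

Each row now carries everything an inner-loop routine needs for one partial, so the interpolation routines never have to look at SDIF frames or reason about track births and deaths themselves.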

4. INTERPOLATION TECHNIQUES

4.1. Stair Step (no interpolation)

Here is the stair-step interpolation technique, performing no interpolation whatsoever, given as the simplest example of a synthesis function that works in this architecture. Note that it does not use the new values of frequency, amplitude, or phase; all three parameters may be discontinuous at synthesis frame boundaries.

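function stairstep_synth(fromSamp, toSamp, todo, T)
% Stair-step synthesis of one synthesis frame: no interpolation at all.
% OLDFREQ, OLDAMP and OLDPHASE are column indices into todo, assumed to
% be defined elsewhere (declared global here so the function can see them).
global output OLDFREQ OLDAMP OLDPHASE
for j = 1:size(todo,1)
    f = todo(j, OLDFREQ) * 2 * pi;    % Hz to radians per second
    a = todo(j, OLDAMP);
    p = todo(j, OLDPHASE);
    dp = f * T;                       % phase increment per sample
    % Inner loop:
    for i = fromSamp+1:toSamp
        output(i) = output(i) + a*sin(p);
        p = p + dp;
    end
end
return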

4.2. Stair-Step with Running Phase

This synthesis function also does no interpolation, but it avoids phase discontinuities by keeping track of the integral of instantaneous frequency for each partial. Each partial's running phase is stored in the persistent global array "phase", which is indexed by each partial's SDIF partial index.
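The paper gives no listing for this variant, so the following is only a sketch of the idea. It synthesizes one partial over two synthesis frames, holding frequency and amplitude constant within each frame while the phase accumulator carries over the boundary. The array name phases, the frame data, the sampling rate, and the zero starting phase are assumptions made for the example.

```matlab
fs = 8000;  T = 1/fs;              % assumed sampling rate and interval
output = zeros(1, 80);             % output buffer for two synthesis frames
phases = zeros(1, 4);              % running phase, indexed by partial index

% One partial (index 3) over two synthesis frames of 40 samples each.
% Columns: [index, old freq (Hz), old amp]; values are invented.
frames   = [3 400 0.5; 3 500 0.5];
fromSamp = [0 40];
toSamp   = [40 80];

for k = 1:size(frames, 1)
    index = frames(k, 1);
    dp    = 2*pi * frames(k, 2) * T;   % constant phase increment this frame
    a     = frames(k, 3);
    for i = fromSamp(k)+1 : toSamp(k)
        output(i) = output(i) + a * sin(phases(index));
        phases(index) = phases(index) + dp;   % carries over to next frame
    end
end
```

Frequency still jumps from 400 Hz to 500 Hz at sample 41, but the sinusoid's argument does not, because the second frame starts from the phase the first frame ended on rather than from a phase value read from the file.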

4.3. Linear Amplitude & Frequency, Running Phase

This function linearly interpolates amplitude and frequency across each synthesis frame. Initial phase for each partial is respected when it first appears; afterwards, phase is just the integral of the instantaneous frequency.

The inner loop is based on two variables: "dp", the per-sample change in phase (proportional to frequency), and "ddp", the per-sample change in dp (to provide linear interpolation of instantaneous frequency):

% Additive synthesis inner loop:
% oldf and newf are assumed to be in radians per second here.
R = toSample - fromSample;        % samples in this synthesis frame
dp = oldf * T;
ddp = (newf-oldf) * T / R;
a = olda;
da = (newa-olda) / R;
for i = fromSample+1:toSample
    output(i) = output(i) + a*sin(phases(index));
    phases(index) = phases(index) + dp;
    dp = dp + ddp;
    a = a + da;
end

4.4. Linear Frequency, dB amplitude, Running Phase

This function is the same as the previous one, except that amplitude is linearly interpolated on a dB scale (with 0 dB equal to a linear amplitude of 1).
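A minimal sketch of the amplitude part of this technique follows, with invented endpoint amplitudes and an invented frame length. It shows only the amplitude ramp, not the authors' full inner loop, and the multiplicative form at the end is an observation of this example rather than something the paper states.

```matlab
olda = 0.1;  newa = 1.0;     % invented linear amplitudes at the frame ends
R    = 4;                    % samples in the synthesis frame

% Convert to dB (0 dB = linear amplitude 1) and interpolate linearly there.
olddB = 20 * log10(olda);
newdB = 20 * log10(newa);
ddB   = (newdB - olddB) / R;     % per-sample change in dB

a  = zeros(1, R);
dB = olddB;
for i = 1:R
    a(i) = 10 ^ (dB / 20);   % back to linear amplitude for synthesis
    dB   = dB + ddB;
end

% Equivalent form: a straight line in dB is a constant per-sample ratio.
ratio = (newa / olda) ^ (1 / R);
```

Note that log10(0) is -Inf, so partials that begin or end at zero amplitude (as every track does at its birth and death) need some floor or special case; the paper does not say how that case is handled.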

4.5. Cubic Polynomial Phase Interpolation

This function uses a cubic polynomial to interpolate the unwrapped phase values [19]. Since frequency is the derivative of phase, the given starting and ending frequencies and phases provide a system of four equations that can be solved to determine the four coefficients of the cubic polynomial.
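The four equations can be written out as follows. The symbols are chosen for this illustration and do not appear in the paper: $D$ is the duration of the synthesis frame, $\omega$ denotes frequency in radians per second, and $M$ is the integer number of extra cycles fixed by the phase unwrapping.

```latex
\begin{align*}
\theta(t) &= c_0 + c_1 t + c_2 t^2 + c_3 t^3, \qquad 0 \le t \le D \\[4pt]
\theta(0) &= \theta_{\mathrm{old}}, &
\theta'(0) &= \omega_{\mathrm{old}}, \\
\theta(D) &= \theta_{\mathrm{new}} + 2\pi M, &
\theta'(D) &= \omega_{\mathrm{new}}. \\[4pt]
\text{With } \Delta &= \theta_{\mathrm{new}} + 2\pi M - \theta_{\mathrm{old}} - \omega_{\mathrm{old}} D
\text{ and } \Delta\omega = \omega_{\mathrm{new}} - \omega_{\mathrm{old}}: \\[4pt]
c_0 &= \theta_{\mathrm{old}}, &
c_1 &= \omega_{\mathrm{old}}, \\
c_2 &= \frac{3\Delta}{D^2} - \frac{\Delta\omega}{D}, &
c_3 &= \frac{\Delta\omega}{D^2} - \frac{2\Delta}{D^3}.
\end{align*}
```

The first two conditions fix $c_0$ and $c_1$ directly; the remaining two form a 2-by-2 linear system in $c_2$ and $c_3$.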

The unwrapping of phase works by first estimating the number of complete cycles each sinusoid would go through if the frequency were linearly interpolated. This is then adjusted so that the ending phase, when wrapped modulo 2*pi, gives the correct ending phase as specified in the SDIF file. It can be shown that this rule is equivalent to the closed-form expression derived in [16] for obtaining "maximally smooth" phase trajectories.
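One way to read this unwrapping rule in code is sketched below for a single partial over a single synthesis frame. All variable names and endpoint values are invented, and the sketch is an interpretation of the description rather than the authors' implementation. It picks the whole number of cycles M that brings the target phase closest to what linearly interpolated frequency would produce, then solves the four endpoint conditions (phase and frequency at each end of the frame) for the cubic's coefficients.

```matlab
% Invented endpoint values for one partial over one synthesis frame.
D     = 0.005;                          % frame duration (s)
oldw  = 2*pi*440;   neww  = 2*pi*442;   % frequencies (rad/s)
oldph = 0.3;        newph = 1.1;        % phases (rad), as stored in the file

% Phase the partial would reach with linearly interpolated frequency.
predicted = oldph + 0.5 * (oldw + neww) * D;

% Choose the whole number of cycles M that puts the target phase
% (newph + 2*pi*M) as close as possible to that prediction.
M      = round((predicted - newph) / (2*pi));
target = newph + 2*pi*M;

% Solve the endpoint conditions for the cubic's coefficients.
delta = target - oldph - oldw * D;
dw    = neww - oldw;
c0 = oldph;
c1 = oldw;
c2 = 3*delta / D^2 - dw / D;
c3 = dw / D^2 - 2*delta / D^3;

theta = @(t) c0 + c1*t + c2*t.^2 + c3*t.^3;   % unwrapped phase (rad)
freq  = @(t) c1 + 2*c2*t + 3*c3*t.^2;         % its derivative (rad/s)
```

An inner loop would then evaluate sin(theta(t)) at each sample time t within the frame; theta and freq match the stored values at both endpoints, with the end phase matching modulo 2*pi.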

5. PERCEPTUAL RESULTS

The original reason for writing this program was to test the audibility of the difference between linear frequency interpolation and cubic phase interpolation. We conducted informal listening tests to compare the different interpolation techniques.

Comparing cubic phase to linear frequency, we found many problems with the files synthesized by cubic phase interpolation, which we attribute to unreasonable phase values in the SDIF files. For example, in many SDIF files, partials would often have nearly the same frequency in consecutive frames, but with the phase in the second frame nowhere near the initial phase plus the integral of the average frequency. Thus the cubic polynomial has to have a large frequency excursion in order to meet the given phase at both endpoints, resulting in very perceptible changes of pitch for nearly all sounds. Another typical problem with cubic phase interpolation is a raspy frame-rate artifact.
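The size of such an excursion can be estimated with a small worked example. The numbers are invented: a 5 ms frame, the same 440 Hz frequency at both ends, and a phase at the second frame that is off by the worst case of pi radians from what that frequency predicts. With equal endpoint frequencies, the cubic that matches phase and frequency at both ends has a simple closed form, used directly below.

```matlab
D   = 0.005;            % frame duration: 5 ms
w   = 2*pi*440;         % same frequency at both frame ends (rad/s)
err = pi;               % worst-case phase mismatch after unwrapping (rad)

% With equal endpoint frequencies the cubic phase is
%   theta(t) = oldph + w*t + 3*err*(t/D)^2 - 2*err*(t/D)^3,
% so the deviation of instantaneous frequency from w is its derivative
% minus w:
dev = @(t) 6*err*t/D^2 - 6*err*t.^2/D^3;      % rad/s

t      = linspace(0, D, 1001);
peakHz = max(dev(t)) / (2*pi);    % peak excursion in Hz, reached at t = D/2
```

The peak deviation is 1.5*err/D radians per second, which here comes to 150 Hz: a partial nominally holding steady at 440 Hz swings up to 590 Hz and back within 5 ms. Smaller phase errors scale the excursion down proportionally, and longer frames reduce it.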

In a few cases the cubic phase interpolation sounds better. For example, for the SDIF files produced by the IRCAM analysis, the bongo roll is more crisp and the angry cat has more depth with cubic phase interpolation. In many cases the difference between running phase and cubic phase was not audible, including the tampura, giggle, speech, and the acoustic guitar from the IRCAM analysis.

The stair-step technique, as one would expect, had

buzzing artifacts caused by frequency and amplitude

discontinuities at the synthesis-frame-rate. However, a

surprising number of SDIF files sounded good when

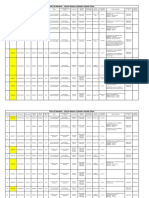

synthesized by this technique. Heres the breakdown of

the 23 sinusoidal models from the IRCAM analysis for

stair-step interpolation:

15 sounded terrible

acoustic guitar was near-perfect

suling and saxophone phrases were nearly

acceptable during steady held notes, but terrible

for note transitions

the tampura was ok (possibly because the

original sound is so buzzy that the artifacts are

masked)

trumpet and khyal vocals had only a few

artifacts with running phase; discontinuous

phase was much worse.

To our surprise, the yodel sounded perfect with

stair-step interpolation and discontinuous phase;

running phase had substantial artifacts.

In almost all cases there was no perceptual difference between linear interpolation and dB amplitude interpolation. The one exception was the berimbao, which had slightly more depth with dB interpolation.

6. FUTURE WORK

Naturally our convenient plug-in architecture invites the implementation of additional interpolation techniques. In particular, we would like to include some methods based on overlap-add.

We would like to see members of the community download our software and synthesize their own sinusoidal models with multiple interpolation methods to see how they sound.

We believe that this implementation has pedagogical value in teaching additive synthesis; we would like to develop further explanatory material, student projects, etc.

For cases in which our informal listening tests found that various interpolation techniques sounded the same, it would be good to conduct proper psychoacoustic listening tests so as to be able to quantify the similarity properly.

Obviously the problems with most SDIF files synthesized by our cubic phase interpolation warrant further study.

We would like to extend SDIF to allow an explicit representation of interpolation type for each parameter. For a small amount of overhead, one could know what form of synthesis interpolation was assumed when the file was created; using an alternate form of interpolation would then be an explicit transformation of the sound model on par with frequency scaling, rather than a guess on the part of the person running the synthesizer.

7. ACKNOWLEDGEMENTS

Chris Chafe, Adrian Freed, Diemo Schwarz, David Wessel.

8. REFERENCES

[1] Risset, J.C. and M.V. Mathews, Analysis of musical-instrument tones. Physics Today, 1969. 22(2): p. 23-32.

[2] Schafer, R.W. and L.R. Rabiner, Design and simulation of a speech analysis-synthesis system based on short-time Fourier analysis. IEEE Transactions on Audio and Electroacoustics, 1973. AU-21(3): p. 165-74.

[3] Crochiere, R.E., A weighted overlap-add method of short-time Fourier analysis/synthesis. IEEE Transactions on Acoustics, Speech and Signal Processing, 1980. ASSP-28(1): p. 99-102.

[4] McAulay, R.J. and T.F. Quatieri. Mid-rate coding based on a sinusoidal representation of speech. in IEEE International Conference on Acoustics, Speech, and Signal Processing. 1985. Tampa, FL, USA: IEEE.

[5] Smith, J.O., III, PARSHL Bibliography. 2003. (http://ccrma.stanford.edu/~jos/parshl/Bibliography.html)

[6] Amatriain, X., et al., Spectral Processing, in DAFX - Digital Audio Effects, U. Zölzer, Editor. 2002, John Wiley and Sons, LTD: West Sussex, England. p. 373-438.

[7] Quatieri, T.F. and R.J. McAulay, Audio Signal Processing Based on Sinusoidal Analysis/Synthesis, in Applications of DSP to Audio & Acoustics, M. Kahrs and K. Brandenburg, Editors. 1998, Kluwer Academic Publishers: Boston/Dordrecht/London. p. 343-416.

[8] Risset, J.-C. and D. Wessel, Exploration of Timbre by Analysis Synthesis, in The Psychology of Music, D. Deutsch, Editor. 1999, Academic Press: San Diego.

[9] Wright, M., et al., Analysis/Synthesis Comparison. Organised Sound, 2000. 5(3): p. 173-189.

[10] Beauchamp, J.W. Unix Workstation Software for Analysis, Graphics, Modification, and Synthesis of Musical Sounds. in Audio Engineering Society. 1993.

[11] Rodet, X. The Diphone program: New features, new synthesis methods, and experience of musical use. in ICMC. 1997. Thessaloniki, Hellas: ICMA.

[12] Rodet, X. Sound analysis, processing and synthesis tools for music research and production. in XIII CIM00. 2000. l'Aquila, Italy.

[13] Röbel, A. Adaptive Additive Synthesis of Sound. in ICMC. 1999. Beijing, China: ICMA.

[14] Serra, X. and J. Smith, III, Spectral modeling synthesis: a sound analysis/synthesis system based on a deterministic plus stochastic decomposition. Computer Music Journal, 1990. 14(4): p. 12-24.

[15] Levine, S.N., Audio Representations for Data Compression and Compressed Domain Processing, in Electrical Engineering. 1998, Stanford: Palo Alto, CA. (http://ccrma.stanford.edu/~scottl/thesis.html)

[16] Fitz, K., L. Haken, and P. Christensen. Transient Preservation Under Transformation in an Additive Sound Model. in ICMC. 2000. Berlin, Germany: ICMA.

[17] Wright, M., et al., Audio Applications of the Sound Description Interchange Format Standard. 1999, Audio Engineering Society: Audio Engineering Society 107th Convention, preprint #5032. (http://cnmat.CNMAT.Berkeley.EDU/AES99/docs/AES99-SDIF.pdf)

[18] Schwarz, D. and M. Wright. Extensions and Applications of the SDIF Sound Description Interchange Format. in International Computer Music Conference. 2000. Berlin, Germany: ICMA.

[19] McAulay, R.J. and T.F. Quatieri, Speech Analysis/Synthesis Based on a Sinusoidal Representation. IEEE Trans. Acoustics, Speech, and Signal Processing, 1986. ASSP-34(4): p. 744-754.


- Multiple Choice: CH142 Sample Exam 2 QuestionsDokument12 SeitenMultiple Choice: CH142 Sample Exam 2 QuestionsRiky GunawanNoch keine Bewertungen

- Impact of IT On LIS & Changing Role of LibrarianDokument15 SeitenImpact of IT On LIS & Changing Role of LibrarianshantashriNoch keine Bewertungen

- Federal Complaint of Molotov Cocktail Construction at Austin ProtestDokument8 SeitenFederal Complaint of Molotov Cocktail Construction at Austin ProtestAnonymous Pb39klJNoch keine Bewertungen

- DECA IMP GuidelinesDokument6 SeitenDECA IMP GuidelinesVuNguyen313Noch keine Bewertungen

- MODULE+4+ +Continuous+Probability+Distributions+2022+Dokument41 SeitenMODULE+4+ +Continuous+Probability+Distributions+2022+Hemis ResdNoch keine Bewertungen

- Pita Cyrel R. Activity 7Dokument5 SeitenPita Cyrel R. Activity 7Lucky Lynn AbreraNoch keine Bewertungen

- 2-Port Antenna Frequency Range Dual Polarization HPBW Adjust. Electr. DTDokument5 Seiten2-Port Antenna Frequency Range Dual Polarization HPBW Adjust. Electr. DTIbrahim JaberNoch keine Bewertungen

- Additional Help With OSCOLA Style GuidelinesDokument26 SeitenAdditional Help With OSCOLA Style GuidelinesThabooNoch keine Bewertungen

- 621F Ap4405ccgbDokument8 Seiten621F Ap4405ccgbAlwinNoch keine Bewertungen

- Os PPT-1Dokument12 SeitenOs PPT-1Dhanush MudigereNoch keine Bewertungen

- CMC Ready ReckonerxlsxDokument3 SeitenCMC Ready ReckonerxlsxShalaniNoch keine Bewertungen

- Web Api PDFDokument164 SeitenWeb Api PDFnazishNoch keine Bewertungen

- Exercises2 SolutionsDokument7 SeitenExercises2 Solutionspedroagv08Noch keine Bewertungen

- Intro To Gas DynamicsDokument8 SeitenIntro To Gas DynamicsMSK65Noch keine Bewertungen

- Traffic LightDokument19 SeitenTraffic LightDianne ParNoch keine Bewertungen

- GMWIN SoftwareDokument1 SeiteGMWIN SoftwareĐào Đình NamNoch keine Bewertungen

- Assignment 2 - Weather DerivativeDokument8 SeitenAssignment 2 - Weather DerivativeBrow SimonNoch keine Bewertungen

- Katie Tiller ResumeDokument4 SeitenKatie Tiller Resumeapi-439032471Noch keine Bewertungen