
Downloaded 01/03/13 to 128.148.252.35. Redistribution subject to SIAM license or copyright; see http://www.siam.org/journals/ojsa.php

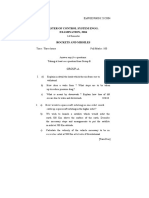

SIAM J. CONTROL AND OPTIMIZATION

Vol. 21, No. 2, March 1983

© 1983 Society for Industrial and Applied Mathematics

0363-0129/83/2102-0006 $01.25/0

A NEW CLASS OF STABILIZING CONTROLLERS FOR UNCERTAIN DYNAMICAL SYSTEMS*

B. R. BARMISH†, M. CORLESS‡ AND G. LEITMANN‡

Abstract. This paper is concerned with the problem of designing a stabilizing controller for a class of uncertain dynamical systems. The vector of uncertain parameters $q(\cdot)$ is time-varying, and its values $q(t)$ lie within a prespecified bounding set $Q$ in $\mathbb{R}^p$. Furthermore, no statistical description of $q(\cdot)$ is assumed, and the controller is shown to render the closed loop system "practically stable" in a so-called guaranteed sense; that is, the desired stability properties are assured no matter what admissible uncertainty $q(\cdot)$ is realized. Within the perspective of previous research in this area, this paper contains one salient feature: the class of stabilizing controllers which we characterize is shown to include linear controllers when the nominal system happens to be linear and time-invariant. In contrast, in much of the previous literature (see, for example, [1], [2], [7], and [9]), a linear system is stabilized via nonlinear control. Another feature of this paper is the fact that the methods of analysis and design do not rely on transforming the system into a more convenient canonical form; e.g., see [3]. It is also interesting to note that a linear stabilizing controller can sometimes be constructed even when the system dynamics are nonlinear. This is illustrated with an example.

Key words. stability, uncertain dynamical systems, guaranteed performance

1. Introduction. During recent years, a number of papers have appeared which deal with the design of stabilizing controllers for uncertain dynamical systems; e.g., see [1]-[7]. In these papers the uncertain quantities are described only in terms of bounds on their possible sizes; that is, no statistical description is assumed. Within this framework, the objective is to find a class of controllers which guarantee "stable" operation for all possible variations of the uncertain quantities.

Roughly speaking, the results to date fall into two categories. There are those results which might appropriately be termed structural in nature; e.g., see [1]-[3], [6]. By this we mean that the uncertainty cannot enter arbitrarily into the state equations; certain preconditions must be met regarding the locations of the uncertainty within the system description. Such conditions are often referred to as matching assumptions. We note that in this situation uncertainties can be tolerated with an arbitrarily large prescribed bound. A second body of results might appropriately be termed nonstructural in nature; e.g., see [4] and [5]. Instead of imposing matching assumptions on the system, these authors permit more general uncertainties at the expense of "sufficient smallness" assumptions on the allowable sizes of the uncertainties.

This work falls within the class of structural results mentioned above. Our motivation comes from a simple observation. Namely, given a theory which yields stabilizing controllers for a class of uncertain nonlinear systems, it is often desirable for this theory to have the following property: upon specializing the "recipe" for controller construction from nonlinear to linear systems, one of the possible stabilizing control laws should be linear in form. It is important to note that existing results do not have this property. Upon specialization to the linear case, one typically obtains controllers of the discontinuous "bang-bang" variety; e.g., see [1] and [2]. One can often approximate these controllers using a so-called saturation nonlinearity; e.g., see [7]. Such an approach leads to uniform ultimate boundedness of the state within an arbitrarily small neighborhood of the origin; this type of behavior might be termed practical stability.¹

* Received by the editors March 4, 1981, and in revised form January 15, 1982.

† Department of Electrical Engineering, University of Rochester, Rochester, New York 14627. The work of this author was supported by the U.S. Department of Energy under contract no. ET-78-S-01-3390.

‡ Department of Mechanical Engineering, University of California at Berkeley, Berkeley, California 94720. The work of these authors was supported by the National Science Foundation under grant ENG 78-13931.

Our desire in this paper is to develop a controller which is linear when the system dynamics are linear. When one takes known results (such as those in [3]) which were developed exclusively for linear systems and attempts to generalize them² to a class of nonlinear systems, one encounters a fundamental difficulty; namely, it is no longer possible to transform the system dynamics to a more convenient canonical form. The subsequent analysis is free of such transformations.

2. Systems, assumptions and the concept of practical stability. We consider an uncertain dynamical system described by the state equation

$$\dot x(t) = f(x(t),t) + \Delta f(x(t),q(t),t) + [B(x(t),t) + \Delta B(x(t),q(t),t)]\,u(t), \tag{2.1}$$

where $x(t)\in\mathbb{R}^n$ is the state, $u(t)\in\mathbb{R}^m$ is the control, $q(t)\in\mathbb{R}^p$ is the uncertainty and $f(x,t)$, $\Delta f(x,q,t)$, $B(x,t)$ and $\Delta B(x,q,t)$ are matrices of appropriate dimensions which depend on the structure of the system. Furthermore, it is assumed that the uncertainty $q(\cdot):\mathbb{R}\to\mathbb{R}^p$ is Lebesgue measurable and its values $q(t)$ lie within a prespecified bounding set $Q\subset\mathbb{R}^p$ for all $t\in\mathbb{R}$. We denote this by writing $q(\cdot)\in M(Q)$.

As mentioned in the introduction, given that "stabilization" is the goal, we must impose additional conditions on the manner in which $q(t)$ enters structurally into the state equations. We refer to such conditions as matching assumptions.

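Assumption 1. There are mappings

$$h(\cdot):\mathbb{R}^n\times\mathbb{R}^p\times\mathbb{R}\to\mathbb{R}^m \quad\text{and}\quad E(\cdot):\mathbb{R}^n\times\mathbb{R}^p\times\mathbb{R}\to\mathbb{R}^{m\times m}$$

such that

$$\Delta f(x,q,t) = B(x,t)\,h(x,q,t),\qquad \Delta B(x,q,t) = B(x,t)\,E(x,q,t),\qquad \|E(x,q,t)\| < 1$$

for all $x\in\mathbb{R}^n$, $q\in Q$ and $t\in\mathbb{R}$.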

We note that this assumption can sometimes be weakened. For example, in [9] a certain measure of mis-match is introduced and results are obtained under the proviso that this measure does not exceed a certain critical level termed the mis-match threshold.

Our second assumption reflects the fact that the uncertainties must be bounded in order to permit one to guarantee stability.

Assumption 2. The set $Q\subset\mathbb{R}^p$ is compact.

Our next assumption is introduced to guarantee the existence of solutions of the state equations.

Assumption 3. The mappings $f(\cdot):\mathbb{R}^n\times\mathbb{R}\to\mathbb{R}^n$, $B(\cdot):\mathbb{R}^n\times\mathbb{R}\to\mathbb{R}^{n\times m}$, $h(\cdot)$ and $E(\cdot)$ (see Assumption 1) are continuous.³

¹ This notion is not to be interpreted in the sense of LaSalle and Lefschetz [12] but as defined subsequently.

² That is, one begins with a linear control law for a linear system and generalizes the controller in such a way that it applies to a class of nonlinear systems.

³ In fact, one can modify the analysis to follow so as to allow mappings which are Carathéodory and satisfy certain integrability conditions. See, for example, Corless and Leitmann [7]. All the results of this paper still hold under this weakening of hypotheses.


In order to satisfy our final assumption, one may need to "precompensate" the so-called nominal system, that is, the system with $\Delta f(x,q,t)\equiv 0$ and $\Delta B(x,q,t)\equiv 0$; e.g., see [2]. Thus, prior to controlling the effects of the uncertainty, it may be necessary to employ a portion of the control to obtain an uncontrolled nominal system

$$\dot x(t) = f(x(t),t) \tag{UC}$$

that has certain stability properties embodied in the next assumption.

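Assumption 4. $f(0,t)=0$ for all $t\in\mathbb{R}$ and, moreover, there exist a $C^1$ function $V(\cdot):\mathbb{R}^n\times\mathbb{R}\to[0,\infty)$ and strictly increasing continuous functions $\gamma_1(\cdot),\gamma_2(\cdot),\gamma_3(\cdot):[0,\infty)\to[0,\infty)$ satisfying⁴ $\gamma_1(0)=\gamma_2(0)=\gamma_3(0)=0$ and $\lim_{r\to\infty}\gamma_1(r)=\lim_{r\to\infty}\gamma_2(r)=\lim_{r\to\infty}\gamma_3(r)=\infty$, such that for all $(x,t)\in\mathbb{R}^n\times\mathbb{R}$,

$$\gamma_1(\|x\|)\le V(x,t)\le\gamma_2(\|x\|). \tag{2.2}$$

Moreover, defining the Lyapunov derivative $\mathcal{L}_0(\cdot):\mathbb{R}^n\times\mathbb{R}\to\mathbb{R}$ by

$$\mathcal{L}_0(x,t) \triangleq \frac{\partial V(x,t)}{\partial t} + \nabla_x' V(x,t)\, f(x,t), \tag{2.3}$$

where $\nabla_x'$ denotes the transpose of the gradient operation, we also require that

$$\mathcal{L}_0(x,t)\le-\gamma_3(\|x\|)$$

for all pairs $(x,t)\in\mathbb{R}^n\times\mathbb{R}$. This assumption, in effect, asserts the existence of a Lyapunov function for the uncontrolled nominal system (UC). Consequently, the origin, $x=0$, is a uniformly asymptotically stable equilibrium point for the uncontrolled nominal system (UC).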

The stability concept employed in this paper differs slightly from the traditional Lyapunov-type stability. To motivate this change of definition, consider the following very simple example of a system satisfying (2.1) and the associated assumptions: $\dot x(t) = x(t) + q(t) + u(t)$, with initial condition $x(t_0)=1$ and uncertainty $q(\cdot)$ such that $|q(t)|\le 1$. Furthermore, suppose the control is a linear feedback of the form $u(t)=kx(t)$, with $k<-1$. Then, once a state with $|x(t)|<-1/(1+k)$ is reached, an admissible uncertainty (for example, $q(t)=-1$ while $x(t)\le 0$) results in motion of the state away from zero. Hence, although we cannot guarantee uniform asymptotic stability (using a finite gain), we can nevertheless drive the state to an arbitrarily small neighborhood of the origin.⁵ The following uniform ultimate boundedness-type definition captures this notion.

DEFINITION 1. The uncertain dynamical system (2.1) is said to be practically stabilizable if, given any $\underline d>0$, there is a control law $p_{\underline d}(\cdot):\mathbb{R}^n\times\mathbb{R}\to\mathbb{R}^m$ for which, given any admissible uncertainty $q(\cdot)\in M(Q)$, any initial time $t_0\in\mathbb{R}$ and any initial state $x_0\in\mathbb{R}^n$, the following conditions hold:

(i) The closed loop system

$$\dot x(t) = f(x(t),t) + \Delta f(x(t),q(t),t) + [B(x(t),t) + \Delta B(x(t),q(t),t)]\,p_{\underline d}(x(t),t) \tag{2.4}$$

possesses a solution $x(\cdot):[t_0,t_1]\to\mathbb{R}^n$, $x(t_0)=x_0$.

(ii) Given any $r>0$ and any solution $x(\cdot):[t_0,t_1]\to\mathbb{R}^n$, $x(t_0)=x_0$, of (2.4) with $\|x_0\|\le r$, there is a constant $d(r)>0$ such that $\|x(t)\|\le d(r)$ for all $t\in[t_0,t_1]$.

(iii) Every solution $x(\cdot):[t_0,t_1]\to\mathbb{R}^n$ can be continued over $[t_0,\infty)$.

(iv) Given any $\bar d\ge\underline d$, any $r>0$ and any solution $x(\cdot):[t_0,\infty)\to\mathbb{R}^n$, $x(t_0)=x_0$, of (2.4) with $\|x_0\|\le r$, there exists a finite time $T(\bar d,r)<\infty$, possibly dependent on $r$ but not on $t_0$, such that $\|x(t)\|\le\bar d$ for all $t\ge t_0+T(\bar d,r)$.

(v) Given any $\bar d\ge\underline d$ and any solution $x(\cdot):[t_0,\infty)\to\mathbb{R}^n$, $x(t_0)=x_0$, of (2.4), there is a constant $\delta(\bar d)>0$ such that $\|x_0\|\le\delta(\bar d)$ implies that $\|x(t)\|\le\bar d$ for all $t\ge t_0$.

⁴ The limit condition on $\gamma_3(\cdot)$ can in fact be removed at the expense of a somewhat more technical development; e.g., see [7].

⁵ This is not to be confused with Lyapunov stability, because the required gain $k$ depends on the size of the neighborhood to which we wish to drive the state.
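The scalar example preceding Definition 1 can be checked numerically. The following is only an illustrative sketch, not part of the paper: it integrates the closed loop $\dot x=(1+k)x+q$ by forward Euler (the integrator, step size and the function name `simulate_scalar` are hypothetical choices) with the constant admissible uncertainty $q\equiv-1$ and $x(t_0)=1$. For $k<-1$ the state settles at the equilibrium $1/(1+k)$ rather than at the origin, and the offset shrinks as $|k|$ grows, which is the behavior Definition 1 is meant to capture.

```python
def simulate_scalar(k: float, q: float = -1.0, x0: float = 1.0,
                    t_final: float = 20.0, dt: float = 1e-3) -> float:
    """Forward-Euler integration of xdot = x + q + u with u = k * x.

    q is held constant (|q| <= 1 keeps it admissible); returns x(t_final).
    """
    if abs(q) > 1.0:
        raise ValueError("the example requires |q(t)| <= 1")
    x = x0
    for _ in range(int(round(t_final / dt))):
        x += dt * ((1.0 + k) * x + q)  # closed loop: xdot = (1 + k) x + q
    return x


if __name__ == "__main__":
    for k in (-2.0, -11.0, -101.0):
        # With q = -1 the closed-loop equilibrium is 1/(1 + k), not 0.
        print(f"k = {k:7.1f}  x(20) = {simulate_scalar(k):+.4f}  "
              f"1/(1+k) = {1.0 / (1.0 + k):+.4f}")
```

Making $|k|$ larger shrinks the neighborhood, but no finite gain drives the state to zero for every admissible $q(\cdot)$.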

3. Controller construction. We take $\underline d>0$ as given and proceed to construct a control law $p_{\underline d}(\cdot)$ which will later be shown to satisfy conditions (i)-(v) in the definition of practical stabilizability.

Construction of $p_{\underline d}(\cdot)$. The first step is to select functions $\rho_1(\cdot)$ and $\rho_2(\cdot):\mathbb{R}^n\times\mathbb{R}\to\mathbb{R}$ satisfying

$$\rho_1(x,t)\ge\max_{q\in Q}\|h(x,q,t)\|, \tag{3.1}$$

$$1>\rho_2(x,t)\ge\max_{q\in Q}\|E(x,q,t)\|. \tag{3.2}$$

The standing Assumptions 1-4 assure that

1) $\rho_2(x,t)$ can be chosen so that $\rho_2(x,t)<1$ for all $(x,t)\in\mathbb{R}^n\times\mathbb{R}$;

2) $\rho_1(\cdot)$ and $\rho_2(\cdot)$ can be chosen to be continuous; e.g., see [10, p. 116].

Now, one simply selects any continuous function $\gamma(\cdot):\mathbb{R}^n\times\mathbb{R}\to[0,\infty)$ satisfying

$$\gamma(x,t)\ge\frac{\rho_1^2(x,t)}{4[1-\rho_2(x,t)][C_2-C_1\mathcal{L}_0(x,t)]}, \tag{3.3}$$

where $C_1$ and $C_2$ are any (designer chosen) nonnegative constants satisfying the conditions (3.4):

a) $C_1<1$;

b) either $C_1\ne 0$ or $C_2\ne 0$;

c) $C_2\ne 0$ whenever $\lim_{x\to 0}[\rho_1^2(x,t)/\mathcal{L}_0(x,t)]$ does not exist;

d) $\dfrac{C_2}{1-C_1}<(\gamma_3\circ\gamma_2^{-1}\circ\gamma_1)(\underline d)$.

Note that these conditions can indeed be satisfied because of the continuity of the $\gamma_i(\cdot)$ and the fact that $\lim_{r\to 0}\gamma_i(r)=0$ for $i=1,2,3$.

This construction then enables one to let

$$p_{\underline d}(x,t)\triangleq-\gamma(x,t)\,B'(x,t)\,\nabla_xV(x,t). \tag{3.5}$$

Remark. In fact, (3.3) and (3.5) describe a class of controllers yielding practical stability. It will be shown in §5 that this class includes linear controllers when the nominal system happens to be linear and time-invariant.
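Read as a recipe, (3.3) and (3.5) are pointwise formulas. The Python sketch below evaluates both at a single point $(x,t)$, assuming the designer has already computed the numbers $\rho_1(x,t)$, $\rho_2(x,t)$, $\mathcal{L}_0(x,t)$ and the vector $\nabla_xV(x,t)$. The function names and the list-of-rows matrix convention are hypothetical conveniences, not notation from the paper.

```python
from typing import List, Sequence


def gamma_lower_bound(rho1: float, rho2: float, L0: float,
                      C1: float, C2: float) -> float:
    """Right-hand side of (3.3): rho1^2 / (4 (1 - rho2) (C2 - C1 * L0))."""
    if not 0.0 <= rho2 < 1.0:
        raise ValueError("(3.2) requires 0 <= rho2 < 1")
    if not (0.0 <= C1 < 1.0 and C2 >= 0.0):
        raise ValueError("(3.4) requires 0 <= C1 < 1 and C2 >= 0")
    if rho1 == 0.0:
        return 0.0  # (3.3) then only asks for gamma >= 0
    denom = 4.0 * (1.0 - rho2) * (C2 - C1 * L0)
    if denom <= 0.0:
        raise ValueError("C2 - C1*L0 must be positive wherever rho1 != 0")
    return rho1 ** 2 / denom


def control(gamma: float, B: Sequence[Sequence[float]],
            grad_V: Sequence[float]) -> List[float]:
    """(3.5): p(x, t) = -gamma * B(x, t)' grad_x V(x, t).

    B is the n x m input matrix stored as n rows of length m.
    """
    n, m = len(B), len(B[0])
    return [-gamma * sum(B[i][j] * grad_V[i] for i in range(n))
            for j in range(m)]
```

For instance, with $B=[0\;\;1]'$ and $\nabla_xV=(5,3)'$, `control(2.0, [[0.0], [1.0]], [5.0, 3.0])` returns `[-6.0]`. Any $\gamma(x,t)$ at or above `gamma_lower_bound(...)` is admissible, which is the freedom exploited in §5 to obtain a constant $\gamma$.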

4. Main result and stability estimates. The theorem below and its proof differ from existing results (see [1], [2] and [6]) in one fundamental way: the control $p_{\underline d}(\cdot)$ which leads to the satisfaction of the conditions for practical stabilizability degenerates into a linear controller whenever the nominal system, obtained by setting $\Delta f(x(t),q(t),t)\equiv 0$ and $\Delta B(x(t),q(t),t)\equiv 0$ in (2.1), is linear and time-invariant. This will be demonstrated in the sequel. In fact, even for certain nonlinear nominal systems, the controller turns out to be linear. This phenomenon will be illustrated with an example of a nonlinear pendulum. Central to the proof of the theorem below is one fundamental concept: a system satisfying Assumptions 1-4 admits a control such that the Lyapunov function for the nominal system (UC) is also a Lyapunov function for the uncertain system (2.1).

THEOREM 1. Subject to Assumptions 1-4, the uncertain dynamical system (2.1) is practically stabilizable.

Proof. For a given $\underline d>0$ and a given uncertainty $q(\cdot)\in M(Q)$, the Lyapunov derivative $\mathcal{L}(\cdot):\mathbb{R}^n\times\mathbb{R}\to\mathbb{R}$ for the closed loop system obtained with the feedback control (3.5) is given by

$$\mathcal{L}(x,t)\triangleq\mathcal{L}_0(x,t)+\nabla_x'V(x,t)\{\Delta f(x,q(t),t)+[B(x,t)+\Delta B(x,q(t),t)]\,p_{\underline d}(x,t)\}. \tag{4.1}$$

By using the matching assumptions in conjunction with (3.5), (4.1) becomes

$$\mathcal{L}(x,t)=\mathcal{L}_0(x,t)-\gamma(x,t)\|B'(x,t)\nabla_xV(x,t)\|^2+\nabla_x'V(x,t)B(x,t)[h(x,q(t),t)-\gamma(x,t)E(x,q(t),t)B'(x,t)\nabla_xV(x,t)].$$

Letting $\alpha(\cdot):\mathbb{R}^n\times\mathbb{R}\to\mathbb{R}^m$ be given by

$$\alpha(x,t)\triangleq B'(x,t)\nabla_xV(x,t),$$

and recalling the definition of $\rho_1(\cdot)$ and $\rho_2(\cdot)$, a straightforward computation yields

$$\mathcal{L}(x,t)\le\mathcal{L}_0(x,t)-[1-\rho_2(x,t)]\gamma(x,t)\|\alpha(x,t)\|^2+\rho_1(x,t)\|\alpha(x,t)\|.$$

Now there are two cases to consider.

Case 1. The pair $(x,t)$ is such that $\rho_1(x,t)=0$. It then follows from the preceding inequality that

$$\mathcal{L}(x,t)\le\mathcal{L}_0(x,t).$$

Case 2. The pair $(x,t)$ is such that $\rho_1(x,t)\ne 0$. Then it follows from (3.3) that $\gamma(x,t)>0$. Moreover, in view of (3.3) and the conditions on the $C_i$,

$$\begin{aligned}
\mathcal{L}(x,t)&\le\mathcal{L}_0(x,t)-[1-\rho_2(x,t)]\gamma(x,t)\|\alpha(x,t)\|^2+\rho_1(x,t)\|\alpha(x,t)\|\\
&=\mathcal{L}_0(x,t)+\frac{\rho_1^2(x,t)}{4\gamma(x,t)[1-\rho_2(x,t)]}-[1-\rho_2(x,t)]\gamma(x,t)\left(\|\alpha(x,t)\|-\frac{\rho_1(x,t)}{2[1-\rho_2(x,t)]\gamma(x,t)}\right)^2\\
&\le\mathcal{L}_0(x,t)+\frac{\rho_1^2(x,t)}{4\gamma(x,t)[1-\rho_2(x,t)]}\\
&\le(1-C_1)\mathcal{L}_0(x,t)+C_2.
\end{aligned}$$
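The only nontrivial step in Case 2 is the completion of the square: with $k=[1-\rho_2(x,t)]\gamma(x,t)>0$ and $a=\|\alpha(x,t)\|$, one has $\max_{a\ge 0}(-ka^2+\rho_1a)=\rho_1^2/(4k)$, attained at $a=\rho_1/(2k)$. The Python sketch below, added purely as an illustration, compares this closed form with a brute-force grid search; the helper names, grid range and resolution are arbitrary choices, and the comparison is meaningful only when the maximizer lies inside the grid.

```python
def cross_term_bound(rho1: float, k: float) -> float:
    """Closed-form max over a >= 0 of -k a^2 + rho1 a, for k > 0 and rho1 >= 0."""
    if k <= 0.0:
        raise ValueError("k = (1 - rho2) * gamma must be positive in Case 2")
    return rho1 ** 2 / (4.0 * k)


def grid_max(rho1: float, k: float, a_max: float = 10.0,
             steps: int = 100_000) -> float:
    """Brute-force max of -k a^2 + rho1 a on a uniform grid of [0, a_max]."""
    return max(-k * a * a + rho1 * a
               for a in (a_max * i / steps for i in range(steps + 1)))


if __name__ == "__main__":
    for rho1, k in [(1.0, 0.5), (2.0, 3.0), (0.3, 0.05)]:
        print(rho1, k, cross_term_bound(rho1, k), grid_max(rho1, k))
```

Since $C_2-C_1\mathcal{L}_0\ge\rho_1^2/(4k)$ by (3.3), this bound is exactly what turns the cross term into the constant $C_2$ plus a fraction of $\mathcal{L}_0$.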


Combining Cases 1 and 2, and noting that $C_1<1$, we conclude (as a consequence of Assumption 4) that

$$\mathcal{L}(x,t)\le(1-C_1)\mathcal{L}_0(x,t)+C_2\le-(1-C_1)\gamma_3(\|x\|)+C_2 \tag{4.2}$$

for all $(x,t)\in\mathbb{R}^n\times\mathbb{R}$. Having this inequality available now enables one to apply directly the results of [7]. That is, the closed loop system (2.4) possesses a solution $x(\cdot):[t_0,t_1]\to\mathbb{R}^n$, $x(t_0)=x_0$, which is required by condition (i) in the definition of practical stabilizability. Moreover, in accordance with [7], if $\|x_0\|\le r$, one can satisfy the uniform boundedness requirement (ii) by selecting

$$d(r)\triangleq\begin{cases}(\gamma_1^{-1}\circ\gamma_2)(R)&\text{if } r\le R,\\ (\gamma_1^{-1}\circ\gamma_2)(r)&\text{if } r>R,\end{cases}$$

where

$$R\triangleq\gamma_3^{-1}\!\left(\frac{C_2}{1-C_1}\right).$$

It now follows that there is no finite escape time, so that the solution is continuable over $[t_0,\infty)$, and hence condition (iii) holds. Again for $\bar d\ge\underline d$, using the estimates provided in [7], one can define

$$T(\bar d,r)\triangleq\begin{cases}0&\text{if } r\le(\gamma_2^{-1}\circ\gamma_1)(\bar d),\\[4pt] \dfrac{\gamma_2(r)-\gamma_1(\bar d)}{(1-C_1)(\gamma_3\circ\gamma_2^{-1}\circ\gamma_1)(\bar d)-C_2}&\text{otherwise},\end{cases} \tag{4.3}$$

and in accordance with [7], the desired uniform ultimate boundedness condition (iv) holds with the proviso that

$$(1-C_1)(\gamma_3\circ\gamma_2^{-1}\circ\gamma_1)(\bar d)-C_2>0. \tag{4.4}$$

Note that this requirement is implied by the satisfaction of condition d) of (3.4), which entered into the construction of the controller, because $\bar d\ge\underline d$ and $\gamma_3\circ\gamma_2^{-1}\circ\gamma_1$ is increasing.

Finally, to complete the proof, it remains to establish the desired uniform stability property. Indeed, let $\bar d\ge\underline d$ be specified and notice that if $\delta(\bar d)=R$, the following property will hold: given any solution $x(\cdot):[t_0,\infty)\to\mathbb{R}^n$, $x(t_0)=x_0$, of (2.4) with $\|x_0\|\le\delta(\bar d)$, it follows (in view of the uniform boundedness property (ii) and the requirements on the $C_i$) that $\|x(t)\|\le d(R)\le\bar d$ for all $t\ge t_0$. □
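For quadratic comparison functions, such as those that appear in §5, the quantities $R$, $d(r)$, $T(\bar d,r)$ and $\delta(\bar d)=R$ used in the proof can be evaluated in closed form. The sketch below assumes $\gamma_i(r)=\lambda_ir^2$ with user-supplied constants $\lambda_1\le\lambda_2$, $\lambda_3>0$, $C_1\in[0,1)$ and $C_2\ge 0$; the class name and the numbers in the usage note are hypothetical, chosen only for illustration.

```python
import math
from dataclasses import dataclass


@dataclass
class QuadraticEstimates:
    """Estimates from the proof of Theorem 1 with gamma_i(r) = lam_i * r**2."""
    lam1: float
    lam2: float
    lam3: float
    C1: float
    C2: float

    def g1(self, r: float) -> float:
        return self.lam1 * r * r

    def g2(self, r: float) -> float:
        return self.lam2 * r * r

    def g3(self, r: float) -> float:
        return self.lam3 * r * r

    def g2_inv(self, s: float) -> float:
        return math.sqrt(s / self.lam2)

    def R(self) -> float:
        """R = gamma3^{-1}(C2 / (1 - C1))."""
        return math.sqrt(self.C2 / ((1.0 - self.C1) * self.lam3))

    def d(self, r: float) -> float:
        """Uniform bound (ii): (gamma1^{-1} o gamma2)(max(r, R))."""
        return math.sqrt(self.g2(max(r, self.R())) / self.lam1)

    def T(self, dbar: float, r: float) -> float:
        """Reaching time (4.3); requires the margin (4.4) to be positive."""
        r_bar = self.g2_inv(self.g1(dbar))  # (gamma2^{-1} o gamma1)(dbar)
        if r <= r_bar:
            return 0.0
        margin = (1.0 - self.C1) * self.g3(r_bar) - self.C2
        if margin <= 0.0:
            raise ValueError("condition (4.4) fails for this dbar")
        return (self.g2(r) - self.g1(dbar)) / margin
```

For example, with $\lambda_1=1$, $\lambda_2=2$, $\lambda_3=1$, $C_1=0.5$, $C_2=0.1$ and $\bar d=1$, condition d) holds ($C_2/(1-C_1)=0.2<0.5$), $d(R)=\sqrt{0.4}\le\bar d$ as the last step of the proof requires, and $T(1,2)=7/0.15$.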

5. Specialization to linear systems. The objective of this section is to show that the flexibility permitted in choosing $\gamma(x,t)$ (see (3.3)) can be exploited in a "nice way" when the nominal system dynamics happen to be linear and time-invariant; that is, the control $p_{\underline d}(\cdot)$ in (3.5) can be selected to be a linear time-invariant feedback of the state. We consider the special case when

$$\dot x(t)=[A+\Delta A(q(t))]x(t)+[B+\Delta B(q(t))]u(t)+w(q(t)),\qquad x(t_0)=x_0, \tag{5.1}$$

where $A$, $\Delta A(q(t))$, $B$ and $\Delta B(q(t))$ are matrices of appropriate dimensions and $w(q(t))$ is an $n$-dimensional vector. In light of Assumptions 1 and 3 given in §2, it follows that for all $q\in Q$

$$\Delta A(q)=BD(q),\qquad \Delta B(q)=BE(q),\qquad w(q)=Bv(q),\qquad \|E(q)\|<1, \tag{5.2}$$


where $D(\cdot)$, $E(\cdot)$ and $v(\cdot)$ have appropriate dimensions and depend continuously on their arguments. In accordance with Assumption 4, the matrix $A$ must be asymptotically stable. To obtain a Lyapunov function for the uncontrolled nominal system, we simply select an $n\times n$ positive-definite symmetric matrix $H$ and solve the equation

$$A'P+PA=-H \tag{5.3}$$

for $P$, which is positive-definite; see [11]. Then we have

$$V(x,t)=x'Px \tag{5.4}$$

and

$$\mathcal{L}_0(x,t)=-x'Hx. \tag{5.5}$$

It is clear from (5.4) and (5.5) that one can take the bounding functions $\gamma_i(\cdot)$ to be

$$\gamma_1(r)\triangleq\lambda_{\min}[P]\,r^2,\qquad \gamma_2(r)\triangleq\lambda_{\max}[P]\,r^2,\qquad \gamma_3(r)\triangleq\lambda_{\min}[H]\,r^2, \tag{5.6}$$

where $\lambda_{\max(\min)}[\cdot]$ denotes the operation of taking the largest (smallest) eigenvalue.
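Steps (5.3)-(5.6) are straightforward to carry out numerically. The pure-Python sketch below is written without third-party packages so that it is self-contained; in practice one might instead call a library solver such as SciPy's `scipy.linalg.solve_continuous_lyapunov`. It builds the linear system for $A'P+PA=-H$ entry by entry, solves it by Gaussian elimination, and, for the $2\times2$ case only, returns the extreme eigenvalues of a symmetric matrix needed in (5.6). The example matrix $A$ is a hypothetical stable matrix, not one taken from the paper.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def solve_linear(M: Matrix, b: List[float]) -> List[float]:
    """Gaussian elimination with partial pivoting for M y = b."""
    n = len(b)
    aug = [row[:] + [b[i]] for i, row in enumerate(M)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[piv][col]) < 1e-12:
            raise ValueError("singular system: is A asymptotically stable?")
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    y = [0.0] * n
    for r in range(n - 1, -1, -1):
        y[r] = (aug[r][n] - sum(aug[r][c] * y[c] for c in range(r + 1, n))) / aug[r][r]
    return y


def solve_lyapunov(A: Matrix, H: Matrix) -> Matrix:
    """Solve (5.3), A'P + PA = -H, for P (unknowns ordered row by row)."""
    n = len(A)
    M = [[0.0] * (n * n) for _ in range(n * n)]
    rhs = [0.0] * (n * n)
    for i in range(n):
        for j in range(n):
            row = i * n + j
            rhs[row] = -H[i][j]
            for k in range(n):
                M[row][k * n + j] += A[k][i]  # (A'P)_ij = sum_k A_ki P_kj
                M[row][i * n + k] += A[k][j]  # (PA)_ij  = sum_k P_ik A_kj
    p = solve_linear(M, rhs)
    return [[p[i * n + j] for j in range(n)] for i in range(n)]


def eig_sym_2x2(S: Matrix) -> Tuple[float, float]:
    """(lambda_min, lambda_max) of a symmetric 2 x 2 matrix."""
    mid = 0.5 * (S[0][0] + S[1][1])
    rad = math.hypot(0.5 * (S[0][0] - S[1][1]), S[0][1])
    return mid - rad, mid + rad


if __name__ == "__main__":
    A = [[0.0, 1.0], [-2.0, -3.0]]  # hypothetical stable A (eigenvalues -1, -2)
    H = [[1.0, 0.0], [0.0, 1.0]]
    P = solve_lyapunov(A, H)
    lam_min_P, lam_max_P = eig_sym_2x2(P)
    lam_min_H, _ = eig_sym_2x2(H)
    print("P =", P)
    print("gamma_1 = %.4f r^2, gamma_2 = %.4f r^2, gamma_3 = %.4f r^2"
          % (lam_min_P, lam_max_P, lam_min_H))
```

For this $A$ and $H=I$ the solution is $P=\begin{bmatrix}1.25&0.25\\0.25&0.25\end{bmatrix}$, which is positive-definite as (5.3) requires.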

Construction of the controller. We take $\underline d>0$ as prescribed and construct the controller $p_{\underline d}(\cdot)$ given in §3. Using the notation above, we define first⁶

$$\rho_D\triangleq\max_{q\in Q}\|D(q)\|,\qquad \rho_E\triangleq\max_{q\in Q}\|E(q)\|<1,\qquad \rho_v\triangleq\max_{q\in Q}\|v(q)\|. \tag{5.7}$$

Then, in agreement with (3.1) and (3.2), we may take

$$\rho_1(x,t)\triangleq\rho_D\|x\|+\rho_v,\qquad \rho_2(x,t)\triangleq\rho_E. \tag{5.8}$$

Using these choices in (3.3) and the fact that $\mathcal{L}_0(x,t)\le-\lambda_{\min}[H]\|x\|^2$, one can select $\gamma(\cdot)$ such that

$$\gamma(x,t)\ge\frac{(\rho_D\|x\|+\rho_v)^2}{4(1-\rho_E)(C_1\lambda_{\min}[H]\|x\|^2+C_2)}, \tag{5.9}$$

with the constants $C_1$ and $C_2$ yet to be specified. We shall examine three possible cases and see that in all instances one can take $\gamma(x,t)$ constant. Of course, this implies that the control $p_{\underline d}(\cdot)$ is a linear time-invariant feedback; that is,

$$p_{\underline d}(x,t)=-2\gamma_0B'Px, \tag{5.10}$$

where $\gamma_0$ is the constant value of $\gamma(\cdot)$, which will be specified.

Case 1. $\rho_D>0$, $\rho_v=0$. In this case, we may select $C_2=0$ and $C_1\in(0,1)$. Consequently, we can satisfy (5.9) by choosing

$$\gamma(x,t)\equiv\gamma_0\ge\frac{\rho_D^2}{4(1-\rho_E)C_1\lambda_{\min}[H]}. \tag{5.11}$$

Case 2. $\rho_D=0$, $\rho_v>0$. Clearly, it suffices to take $C_1=0$ and

$$\gamma(x,t)\equiv\gamma_0\ge\frac{\rho_v^2}{4(1-\rho_E)C_2}, \tag{5.12}$$

where $C_2$ is required to satisfy condition d) of (3.4). Using the descriptions of the $\gamma_i(\cdot)$ given in (5.6), this amounts to restricting $C_2$ by

$$\frac{C_2}{1-C_1}<\frac{\lambda_{\min}[H]\,\lambda_{\min}[P]}{\lambda_{\max}[P]}\,\underline d^{\,2}, \tag{5.13}$$

with $C_1=0$ in the above.

⁶ One can in fact use overestimates $\hat\rho_D$ and $\hat\rho_E$ for $\rho_D$ and $\rho_E$ as long as the inequality $\hat\rho_E<1$ is satisfied.


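Case 3. $\rho_D>0$, $\rho_v>0$. Now, in order to satisfy (5.9), we select $C_1\in(0,1)$, $C_2$ satisfying (5.13) and

$$\gamma(x,t)\equiv\gamma_0\ge\max_{r\ge 0}\left\{\frac{(\rho_Dr+\rho_v)^2}{4(1-\rho_E)[C_1\lambda_{\min}[H]r^2+C_2]}\right\}. \tag{5.14}$$

Letting $f(r)$ denote the bracketed quantity in (5.14) above, a straightforward but lengthy differentiation shows that the maximum is attained at $r^*=\rho_DC_2/(\rho_vC_1\lambda_{\min}[H])$ and yields

$$\max_{r\ge 0}f(r)=\frac{1}{4(1-\rho_E)}\left\{\frac{\rho_D^2}{C_1\lambda_{\min}[H]}+\frac{\rho_v^2}{C_2}\right\}. \tag{5.15}$$

Hence, any $\gamma_0$ equal to or exceeding this maximum value will be appropriate in (5.14).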
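The closed form (5.15) is easy to sanity-check numerically. The sketch below uses hypothetical values for $\rho_D$, $\rho_E$, $\rho_v$, $C_1$, $C_2$ and $\lambda_{\min}[H]$; it compares (5.15) with a grid search over $r$, evaluates the strict upper bound on $C_2$ from (5.13), and forms the gain $K=-2\gamma_0B'P$, so that (5.10) reads $p_{\underline d}(x)=Kx$. Function names are illustrative only.

```python
from typing import List, Sequence


def bracket(r, rho_D, rho_E, rho_v, C1, C2, lam_min_H):
    """The bracketed quantity f(r) in (5.14)."""
    return (rho_D * r + rho_v) ** 2 / (
        4.0 * (1.0 - rho_E) * (C1 * lam_min_H * r * r + C2))


def gamma0_closed_form(rho_D, rho_E, rho_v, C1, C2, lam_min_H):
    """Right-hand side of (5.15) (Case 3: rho_D, rho_v, C1, C2 all positive)."""
    return (rho_D ** 2 / (C1 * lam_min_H) + rho_v ** 2 / C2) / (4.0 * (1.0 - rho_E))


def gamma0_grid(params, r_max=10.0, steps=100_000):
    """Brute-force max of f(r) over a uniform grid of [0, r_max]."""
    return max(bracket(r_max * i / steps, *params) for i in range(steps + 1))


def c2_upper(C1, lam_min_H, lam_min_P, lam_max_P, d_under):
    """Strict upper bound on C2 implied by (5.13)."""
    return (1.0 - C1) * lam_min_H * lam_min_P * d_under ** 2 / lam_max_P


def linear_gain(gamma0: float, B: Sequence[Sequence[float]],
                P: Sequence[Sequence[float]]) -> List[List[float]]:
    """K = -2 gamma0 B'P (an m x n matrix); B is n x m stored as rows."""
    n, m = len(B), len(B[0])
    return [[-2.0 * gamma0 * sum(B[i][j] * P[i][k] for i in range(n))
             for k in range(n)] for j in range(m)]


if __name__ == "__main__":
    # hypothetical rho_D, rho_E, rho_v, C1, C2, lambda_min[H]
    params = (0.5, 0.2, 0.3, 0.5, 0.05, 1.0)
    print(gamma0_closed_form(*params), gamma0_grid(params))
```

With these values (5.15) gives $\gamma_0=2.3/3.2=0.71875$, and the grid maximum agrees to within the grid resolution.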

6. Illustrative example. We now consider the simple pendulum which was analyzed in [7]. However, here it will be shown that the desired practical stability can actually be achieved via a linear control. This may seem somewhat surprising in light of the fact that the nominal system dynamics are nonlinear. A pendulum of given length is subjected to a control moment $u(\cdot)$ (per unit mass). The point of support is subject to an uncertain acceleration $q(\cdot)$, with $|q(t)|\le l=\text{constant}$. Letting $x_1$ denote the angle between the pendulum's arm and a vertical reference line, one obtains the state equations

$$\dot x_1(t)=x_2(t),\qquad \dot x_2(t)=-a\sin x_1(t)+u(t)-q(t)\cos x_1(t), \tag{6.1}$$

where $a>0$ is a given constant. In order to satisfy the assumptions of §2, one must assure a uniformly asymptotically stable equilibrium for (UC), the uncontrolled nominal system. Hence, for a given $\underline d>0$, we propose a controller of the form

$$u(t)=-bx_1(t)-cx_2(t)+p_{\underline d}(x(t),t), \tag{6.2}$$

where $b$ and $c$ are positive constants and $p_{\underline d}(\cdot)$ will be specified later in accordance with the results of §3. The linear portion of the controller (6.2) is used to obtain a stable nominal system. Substitution of (6.2) into (6.1) now yields the state equation

$$\dot x(t)=f(x(t),t)+B[p_{\underline d}(x(t),t)+h(x(t),q(t),t)], \tag{6.3}$$

where

$$f(x,t)\triangleq\begin{bmatrix}x_2\\ -bx_1-cx_2-a\sin x_1\end{bmatrix},\qquad B\triangleq\begin{bmatrix}0\\ 1\end{bmatrix},\qquad h(x,q,t)\triangleq-q\cos x_1. \tag{6.4}$$

A suitable Lyapunov function for the uncontrolled nominal system (with $x=0$ as equilibrium) is

$$V(x,t)=\left(b+\tfrac12c^2\right)x_1^2+cx_1x_2+x_2^2+2a(1-\cos x_1), \tag{6.5}$$

and, provided $b$ is sufficiently large, the associated $\gamma_i(\cdot)$ are given by

$$\gamma_1(r)=\lambda_1r^2,\qquad \gamma_2(r)=\begin{cases}\lambda_2r^2+2a(1-\cos r)&\text{if } r\le\pi,\\ \lambda_2r^2+4a&\text{if } r>\pi,\end{cases}\qquad \gamma_3(r)=\lambda_3r^2, \tag{6.6}$$

where

$$\lambda_1\triangleq\lambda_{\min}[P],\qquad \lambda_2\triangleq\lambda_{\max}[P],\qquad P\triangleq\begin{bmatrix}b+\tfrac12c^2&\tfrac12c\\ \tfrac12c&1\end{bmatrix}, \tag{6.7}$$

$$\lambda_3\triangleq\min\{\beta c,\,c\},\qquad \beta\triangleq b+a\min_x\left(\frac{\sin x}{x}\right)>0. \tag{6.8}$$

Following the procedure described in §3 for the construction of the controller $p_{\underline d}(\cdot)$, we select first

$$\rho_1(x,t)=l|\cos x_1|,\qquad \rho_2(x,t)=0. \tag{6.9}$$
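The claim that (6.5) is a Lyapunov function for the precompensated pendulum can be checked directly. Differentiating (6.5) along (6.4) with $p_{\underline d}=0$ and $q=0$ gives $\mathcal{L}_0(x,t)=-c\,[\,bx_1^2+x_2^2+ax_1\sin x_1\,]$, so that $\mathcal{L}_0\le-c(\beta x_1^2+x_2^2)\le-\lambda_3\|x\|^2$ with $\beta$ and $\lambda_3$ as in (6.8). The Python sketch below confirms this numerically, using central finite differences at random points and a grid estimate of $\min_x(\sin x/x)$. The parameter values $a=1$, $b=2$, $c=1$ are hypothetical.

```python
import math
import random

a, b, c = 1.0, 2.0, 1.0  # hypothetical pendulum and precompensator constants


def V(x1: float, x2: float) -> float:
    """Lyapunov function (6.5)."""
    return ((b + 0.5 * c * c) * x1 * x1 + c * x1 * x2 + x2 * x2
            + 2.0 * a * (1.0 - math.cos(x1)))


def f(x1: float, x2: float):
    """Uncontrolled nominal dynamics from (6.4)."""
    return x2, -b * x1 - c * x2 - a * math.sin(x1)


def L0_finite_difference(x1: float, x2: float, h: float = 1e-6) -> float:
    """grad V . f using a central-difference gradient (V has no explicit t)."""
    dV1 = (V(x1 + h, x2) - V(x1 - h, x2)) / (2.0 * h)
    dV2 = (V(x1, x2 + h) - V(x1, x2 - h)) / (2.0 * h)
    f1, f2 = f(x1, x2)
    return dV1 * f1 + dV2 * f2


def L0_closed_form(x1: float, x2: float) -> float:
    return -c * (b * x1 * x1 + x2 * x2 + a * x1 * math.sin(x1))


def min_sinc(x_max: float = 50.0, steps: int = 500_000) -> float:
    """Grid estimate of min_x sin(x)/x (about -0.2172, near x = 4.493)."""
    return min(math.sin(x) / x
               for x in (x_max * i / steps for i in range(1, steps + 1)))


if __name__ == "__main__":
    beta = b + a * min_sinc()
    lam3 = min(beta * c, c)  # (6.8)
    rng = random.Random(0)
    worst_gap = 0.0
    for _ in range(1000):
        x1, x2 = rng.uniform(-5, 5), rng.uniform(-5, 5)
        gap = abs(L0_finite_difference(x1, x2) - L0_closed_form(x1, x2))
        worst_gap = max(worst_gap, gap)
        assert L0_closed_form(x1, x2) <= -lam3 * (x1 * x1 + x2 * x2) + 1e-9
    print("beta =", beta, "lambda_3 =", lam3, "max FD error =", worst_gap)
```

The requirement "b sufficiently large" in the text corresponds to $\beta>0$; with the values above, $\beta\approx1.78$ and hence $\lambda_3=c$.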

Inequality (3.3) can then be assured by requiring

$$\gamma(x,t)\ge\frac{l^2\cos^2x_1}{4[C_2-C_1\mathcal{L}_0(x,t)]}. \tag{6.10}$$

Given our desire for a linear feedback, one can select $C_1=0$ and satisfy (6.10) by choosing

$$\gamma(x,t)\equiv\gamma_0\ge\frac{l^2}{4C_2}. \tag{6.11}$$

To complete the design, $C_2$ must be selected to satisfy condition d) of (3.4). The analysis must account for two cases, depending on the size of the given radius $\underline d>0$.

Case 1. $\lambda_1\underline d^{\,2}>\lambda_2\pi^2+4a$. The required conditions on $C_2$ are

$$0<C_2<(\gamma_3\circ\gamma_2^{-1}\circ\gamma_1)(\underline d)=\frac{\lambda_3}{\lambda_2}\left(\lambda_1\underline d^{\,2}-4a\right). \tag{6.12}$$

Case 2. $\lambda_1\underline d^{\,2}\le\lambda_2\pi^2+4a$. In this case, the constraints imposed by (6.12) are met if $C_2>0$ is chosen sufficiently small so that

$$\frac{\lambda_2C_2}{\lambda_3}+2a\left(1-\cos\sqrt{C_2/\lambda_3}\right)<\lambda_1\underline d^{\,2}. \tag{6.13}$$

Having now selected $C_2$, the controller is specified by (3.5) in conjunction with (6.11); that is,

$$p_{\underline d}(x,t)=-\gamma_0B'\nabla_xV(x,t)=-\gamma_0(cx_1+2x_2), \tag{6.14}$$

with the proviso that $\gamma_0\ge l^2/4C_2$. It is interesting to note that there is an obvious tradeoff between the required gain constant $\gamma_0$ and the given radius $\underline d>0$. As the radius decreases, $C_2$ decreases, which in turn implies that $\gamma_0$ increases. In contrast, the nonlinear saturation controller of [7] remains bounded by the bound of the uncertainty, and the radius can be decreased by increasing the nonlinear gain; i.e., by approaching a discontinuous control.
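The tradeoff between $\gamma_0$ and the radius can be observed in simulation. The Python sketch below integrates (6.1) under the linear controller (6.2) with (6.14) by forward Euler, using the admissible uncertainty $q=-l\,\mathrm{sgn}\big((cx_1+2x_2)\cos x_1\big)$, which maximizes the growth rate of $V$ in (6.5) over $|q|\le l$. It reports the largest $\|x(t)\|$ over the final seconds of the run and compares it with the ultimate bound $d(R)=(\gamma_1^{-1}\circ\gamma_2)(R)$, $R=\gamma_3^{-1}(C_2)$, from the proof of Theorem 1 with $C_1=0$ and the smallest $C_2=l^2/(4\gamma_0)$ allowed by (6.11). The parameter values, integrator and choice of $q$ are hypothetical illustrations, not data from the paper.

```python
import math


def simulate_pendulum(gamma0: float, a: float = 1.0, b: float = 2.0,
                      c: float = 1.0, l: float = 0.5, x0=(1.0, 0.0),
                      t_final: float = 30.0, dt: float = 1e-3,
                      tail: float = 5.0) -> float:
    """Forward-Euler run of (6.1) under u = -b x1 - c x2 - gamma0 (c x1 + 2 x2).

    q = -l * sgn((c x1 + 2 x2) cos x1) maximizes dV/dt over |q| <= l for V
    in (6.5). Returns the largest ||x(t)|| over the last `tail` seconds.
    """
    x1, x2 = x0
    steps = int(round(t_final / dt))
    tail_start = steps - int(round(tail / dt))
    worst = 0.0
    for k in range(steps):
        s = c * x1 + 2.0 * x2                # B' grad_x V for (6.5)
        z = s * math.cos(x1)
        q = -l * math.copysign(1.0, z) if z != 0.0 else 0.0
        u = -b * x1 - c * x2 - gamma0 * s    # (6.2) with (6.14)
        dx1 = x2
        dx2 = -a * math.sin(x1) + u - q * math.cos(x1)
        x1, x2 = x1 + dt * dx1, x2 + dt * dx2
        if k >= tail_start:
            worst = max(worst, math.hypot(x1, x2))
    return worst


def ultimate_bound(gamma0: float, a: float = 1.0, b: float = 2.0,
                   c: float = 1.0, l: float = 0.5) -> float:
    """d(R) = gamma1^{-1}(gamma2(R)) with C1 = 0 and C2 = l^2 / (4 gamma0)."""
    p11, p12, p22 = b + 0.5 * c * c, 0.5 * c, 1.0      # P in (6.7)
    mid, rad = 0.5 * (p11 + p22), math.hypot(0.5 * (p11 - p22), p12)
    lam1, lam2 = mid - rad, mid + rad
    beta = b + a * (-0.21723363)                       # (6.8); min sin(x)/x, rounded down
    lam3 = min(beta * c, c)
    C2 = l * l / (4.0 * gamma0)                        # smallest C2 allowed by (6.11)
    R = math.sqrt(C2 / lam3)                           # gamma3^{-1}(C2)
    g2 = lam2 * R * R + 2.0 * a * (1.0 - math.cos(min(R, math.pi)))  # (6.6)
    return math.sqrt(g2 / lam1)


if __name__ == "__main__":
    for g in (1.0, 10.0):
        print(f"gamma0 = {g:5.1f}: simulated tail max = {simulate_pendulum(g):.4f}, "
              f"bound d(R) = {ultimate_bound(g):.4f}")
```

Raising $\gamma_0$ shrinks both the simulated residual motion and the guaranteed radius, consistent with the tradeoff described above; the bound is conservative, so the simulated values sit well inside it.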

7. Conclusions. This paper addresses the so-called problem of practical stabilizability for a class of uncertain dynamical systems. In contrast to previous work on problems of this sort, the main emphasis here is on the structure of the controller. It is shown that by choosing the function $\gamma(\cdot)$ in a special way, the resultant control law can often be realized as a linear time-invariant feedback.

REFERENCES

[1] G. LEITMANN, Guaranteed asymptotic stability for some linear systems with bounded uncertainties, J. Dynamic Systems, Measurement and Control, 101 (1979), pp. 212-216.

[2] S. GUTMAN, Uncertain dynamical systems, a Lyapunov min-max approach, IEEE Trans. Automat. Control, AC-24 (1979), pp. 437-443; correction, 25 (1980), p. 613.

[3] J. S. THORP AND B. R. BARMISH, On guaranteed stability of uncertain linear systems via linear control, J. Optim. Theory and Applications, in press.

[4] S. S. L. CHANG AND T. K. C. PENG, Adaptive guaranteed cost control of systems with uncertain parameters, IEEE Trans. Automat. Control, AC-17 (1972), pp. 474-483.

[5] A. VINKLER AND I. J. WOOD, Multistep guaranteed cost control of linear systems with uncertain parameters, J. Guidance and Control, 2, 6 (1980), pp. 449-456.

[6] P. MOLANDER, Stabilization of uncertain systems, Report LUTFD2/(TRFT-1020)/1-111/(1979), Lund Institute of Technology, August 1979.

[7] M. CORLESS AND G. LEITMANN, Continuous state feedback guaranteeing uniform ultimate boundedness for uncertain dynamic systems, IEEE Trans. Automat. Control, AC-26, 5 (1981), pp. 1139-1144.

[8] A. VINKLER AND I. J. WOOD, A comparison of several techniques for designing controllers of uncertain dynamic systems, Proceedings of the IEEE Conference on Decision and Control, San Diego, CA, 1979.

[9] B. R. BARMISH AND G. LEITMANN, On ultimate boundedness control of uncertain systems in the absence of matching conditions, IEEE Trans. Automat. Control, AC-27 (1982), pp. 153-157.

[10] C. BERGE, Topological Spaces, Oliver and Boyd, London, 1963.

[11] N. N. KRASOVSKII, Stability of Motion, Stanford University Press, Stanford, CA, 1963.

[12] J. P. LASALLE AND S. LEFSCHETZ, Stability by Liapunov's Direct Method with Applications, Academic Press, New York, 1961.


- Influencing Factors Behind The Criminal Attitude: A Study of Central Jail PeshawarDokument13 SeitenInfluencing Factors Behind The Criminal Attitude: A Study of Central Jail PeshawarAmir Hamza KhanNoch keine Bewertungen

- Playwriting Pedagogy and The Myth of IntrinsicDokument17 SeitenPlaywriting Pedagogy and The Myth of IntrinsicCaetano BarsoteliNoch keine Bewertungen

- FJ&GJ SMDokument30 SeitenFJ&GJ SMSAJAHAN MOLLANoch keine Bewertungen

- Serological and Molecular DiagnosisDokument9 SeitenSerological and Molecular DiagnosisPAIRAT, Ella Joy M.Noch keine Bewertungen

- Chhabra, D., Healy, R., & Sills, E. (2003) - Staged Authenticity and Heritage Tourism. Annals of Tourism Research, 30 (3), 702-719 PDFDokument18 SeitenChhabra, D., Healy, R., & Sills, E. (2003) - Staged Authenticity and Heritage Tourism. Annals of Tourism Research, 30 (3), 702-719 PDF余鸿潇Noch keine Bewertungen

- Awareness and Usage of Internet Banking Facilities in Sri LankaDokument18 SeitenAwareness and Usage of Internet Banking Facilities in Sri LankaTharindu Thathsarana RajapakshaNoch keine Bewertungen

- Why Do Firms Do Basic Research With Their Own Money - 1989 - StudentsDokument10 SeitenWhy Do Firms Do Basic Research With Their Own Money - 1989 - StudentsAlvaro Rodríguez RojasNoch keine Bewertungen

- Sukhtankar Vaishnav Corruption IPF - Full PDFDokument79 SeitenSukhtankar Vaishnav Corruption IPF - Full PDFNikita anandNoch keine Bewertungen

- Final PS-37 Election Duties 06-02-24 1125pm)Dokument183 SeitenFinal PS-37 Election Duties 06-02-24 1125pm)Muhammad InamNoch keine Bewertungen

- In The World of Nursing Education, The Nurs FPX 4900 Assessment Stands As A PivotalDokument3 SeitenIn The World of Nursing Education, The Nurs FPX 4900 Assessment Stands As A Pivotalarthurella789Noch keine Bewertungen

- Lorraln - Corson, Solutions Manual For Electromagnetism - Principles and Applications PDFDokument93 SeitenLorraln - Corson, Solutions Manual For Electromagnetism - Principles and Applications PDFc. sorasNoch keine Bewertungen

- Kurukku PadaiDokument4 SeitenKurukku PadaisimranNoch keine Bewertungen

- Fansubbers The Case of The Czech Republic and PolandDokument9 SeitenFansubbers The Case of The Czech Republic and Polandmusafir24Noch keine Bewertungen

- Great Is Thy Faithfulness - Gibc Orch - 06 - Horn (F)Dokument2 SeitenGreat Is Thy Faithfulness - Gibc Orch - 06 - Horn (F)Luth ClariñoNoch keine Bewertungen

- A Guide To Effective Project ManagementDokument102 SeitenA Guide To Effective Project ManagementThanveerNoch keine Bewertungen