International Research Journal of Advanced Engineering and Science

ISSN: 2455-9024

Human Voice Localization in Noisy Environment by SRP-PHAT and MFCC

Arjun Das H¹, Lisha Gopalakrishnan Pillai², Muruganantham C³

¹,²,³Department of ECE, University of Calicut, Pambady, Thrissur, Kerala, India-680588

Abstract: This paper proposes an efficient method based on the steered response power (SRP) technique for sound source localization with a microphone array: SRP-PHAT combined with MFCC. Compared with the earlier SRP technique, the proposed method using MFCC localizes human speech more efficiently. The earlier method focuses only on the loudest sound source in a given area, and that source may include noise with higher spectral peaks than human speech. The proposed method recognizes speech through comparison with a vowel corpus and enhances the speaker's speech. Finally, MFCC feature extraction is used to extract the human speech from the noisy environment, and this speech signal is used for sound source localization with SRP-PHAT.

Keywords: Mel-frequency cepstral coefficient (MFCC), peak valley difference (PVD), sound source localization (SSL), time difference of arrival (TDOA).

I. INTRODUCTION

Sound source localization (SSL) has become an attractive field of research in human-robot interaction, where locating human speech in space is an important requirement. Many investigations have been carried out in this area, and several alternative approaches have been proposed over the last few decades. Traditionally, an SSL algorithm begins by estimating the direction of arrival of the sound from the time differences at the microphone pairs, obtained through generalized cross-correlation with the phase transform. When several microphones are available, the SRP-PHAT algorithm can estimate the source position as the point in space that satisfies the set of time difference of arrival (TDOA) values.

SRP-PHAT is one of the robust methods used for sound source localization in noisy and reverberant environments. However, its direct use in real-life speech applications degrades performance, because SRP-PHAT steers the microphone array towards the location with the maximum output power. The output power of the beamformer is measured as the sum of the cross-correlation values of the microphone signals. Hence, for a given location, SRP-PHAT estimates the power of the voice signal using only the cross-correlation values of the input microphone signals. If a noise source has the maximum output power, the noise source is located rather than the voice.

To handle this problem, higher weights should be assigned to the speech signal than to loud noise, which requires taking the characteristics of human speech into account. The scheme that distinguishes human speech from noise is known as voice activity detection (VAD). It uses the formants present in human vowels, which are distinctive spectral peaks that are likely to remain even after noise has severely corrupted the signal.

Efficient sound source localization is possible if the human voice signal can be extracted and enhanced accurately in noisy environments. For this purpose, the SRP-PHAT and VAD method is modified with a mel-frequency extraction technique, which can derive the human voice more accurately.

The remainder of this paper is organized as follows. Steered response voice power (SRVP) based localization in noisy environments is presented in Section II. Section III describes the proposed method, Section IV compares the two localization methods, and Section V concludes the paper.

II. SRVP BASED LOCALIZATION

A. SRP-PHAT

SRP-PHAT based SSL uses an array of N microphones. Given the signal $x_n(t)$ received by the n-th microphone at time t, the output $y(t, s)$ of the delay-and-sum beamformer steered to location s is defined as follows:

$$y(t, s) = \sum_{n=1}^{N} x_n(t + \tau_{s,n}) \tag{1}$$

where $\tau_{s,n}$ is the direct time of travel from location s to the n-th microphone. To deal with complex noise and reverberation, filter-and-sum beamformers using a weighting function may be used. In the frequency domain, the filter-and-sum version of Eq. (1) can be written as:

$$Y(\omega, s) = \sum_{n=1}^{N} G_n(\omega) X_n(\omega) e^{j\omega\tau_{s,n}} \tag{2}$$

where $X_n(\omega)$ and $G_n(\omega)$ are the Fourier transforms of the n-th microphone signal and of its associated filter, respectively. In Eq. (2), the microphone signals are phase-aligned by the steering delays and summed after the filter is applied.
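As an illustration of the steering in Eq. (2), the following minimal sketch evaluates the power of the filter-and-sum output for a few hypothetical candidate locations and picks the strongest one. It is not the authors' implementation. The candidate names, the delay values, the use of circular integer-sample delays, and the choice of the phase-transform weight G_n = 1/|X_n| are all assumptions made for the example, and a naive DFT in standard-library Python stands in for an FFT.

```python
import cmath
import math
import random


def dft(x):
    """Naive discrete Fourier transform, O(L^2); adequate for short frames."""
    L = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / L) for t in range(L))
            for k in range(L)]


def steered_power(spectra, delays):
    """Power of the filter-and-sum output for one candidate location.

    spectra: one DFT per microphone.
    delays:  hypothesised travel time (whole samples) to each microphone.
    """
    L = len(spectra[0])
    power = 0.0
    for k in range(L):
        y = 0j
        for X, tau in zip(spectra, delays):
            mag = abs(X[k])
            if mag < 1e-12:          # empty bin: nothing to align
                continue
            g = 1.0 / mag            # assumed phase-transform weight G_n
            y += g * X[k] * cmath.exp(2j * math.pi * k * tau / L)
        power += abs(y) ** 2
    return power


def localize(signals, candidates):
    """Return the candidate with the highest steered power, plus all powers."""
    spectra = [dft(x) for x in signals]
    powers = {name: steered_power(spectra, d) for name, d in candidates.items()}
    return max(powers, key=powers.get), powers


# Hypothetical test case: one noise-like source, three microphones.
random.seed(0)
L = 64
source = [random.gauss(0.0, 1.0) for _ in range(L)]
true_delays = (2, 5, 9)
signals = [[source[(t - d) % L] for t in range(L)] for d in true_delays]
candidates = {"A": (1, 1, 1), "B": (2, 5, 9), "C": (7, 3, 0)}
best, powers = localize(signals, candidates)
print(best, {name: round(p, 1) for name, p in powers.items()})
```

Only the candidate whose delays match the true travel times aligns the phases of all three microphones in every frequency bin, so candidate B receives the largest power in this setup. With fractional delays or non-circular signals the phase term would need more care than this sketch takes.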

The sound source localization algorithm based on the SRP

steers the microphone array to focus on each spatial point, s,

and for the focused point s one can calculate the output power,

P(s), for the microphone array as follows:

33

Arjun Das H, Lisha Gopalakrishnan Pillai, and Muruganantham. C, Human voice localization in noisy environment by SRP-PHAT and

MFCC, International Research Journal of Advanced Engineering and Science, Volume 1, Issue 3, pp. 33-37, 2016.

International Research Journal of Advanced Engineering and Science

ISSN: 2455-9024

Where N is the dimension of the spectrum. For every

registered spectral peak signature the above similarity

measurement is computed and the maximum value for the

spectral peak energy location q can be determined as follows:

(7)

(3)

where,

. To reduce the effect of

reverberation the filter used in SRP-PHAT is defined as

follows

The location,

of the human voice can be determined by

following simple linear algorithm

(8)

(4)

Where PVDmax is the maximum value of PVD while Pmax is

the maximum value of the steered mean output power.

After calculating the SRP, P(s), for the each candidate

location, the point that has the highest output power is

selected as the location of the sound source, i.e.,

(5)

III.

SRP-PHAT AND MFCC BASED LOCALIZATION

A. Mel- Frequency Extraction

In this method the mel-frequency extraction technique is

applied along with SRP-PHAT algorithm in order to

accurately extract and enhance the human speech in noisy

environment. The advantage of using mel-frequency scaling in

sound source localization is it is very approximate to the

frequency response of human auditory system and can be used

to capture the important characteristics of speech.

The speech extraction and enhancement process can be done

using a mel-frequency cepstral coefficient (MFCC) processor.

This processing can be explained in five main steps.

B. Voice Activity Detection (VAD) using Formants in Vowel

Sounds

Though SRP-PHAT is the popular SSL technique it may

not be adequate for human speech localization is noisy

environment. Because of its inability to distinguish between

the voice and the noise signals and it simply compute the

output power of the input signals.

So to solve this problem VAD method is employed, which

will boost the peaks from the speech signal and reduce peaks

from the noise signals.

Human vowel sound contains formants which are well

known distinctive spectral peaks and are likely to remain even

after the noise has severally corrupted it. These are used for

checking the similarities with human speech and distinguish

with the noise.

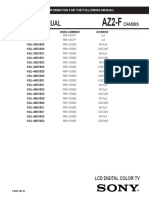

Frame

blocking

Frames

Continuous

speech

Windowing

C. Peak valley difference (PVD)

The method that combines two algorithms SRP-PHAT and

VAD leads to SRVP [5]. Here to consider the content within

the voice signal VAD is utilized. The method mentioned here

composed three steps:

SRP is calculated for every candidate. Then the top n- best

candidates are selected

By using an adaptive beamforming method the

microphones are focused on the top n-best candidates

Maximum voice similarity of the beamformed signal is

evaluated by comparing it with the pre-trained samples

present in the vowel corpus.

Therefore the algorithm computes the voice similarity

between the input spectrums to the pre-trained spectrals

present in the vowel corpus. So, if the spectral peak is present

then the average energies of the spectral bands for that peak

will be much higher than the average energies of other bands.

It means the peak valley difference (PVD) will be higher.

Using the PVD values one can measure the voice similarity.

The similarity of the beamformed input spectrum Zq and the

signature S of the given binary spectral peak can be calculated

as follows

FFT

Spectrum

Mel Frequency

wrapping

Mel

cepstrum

Cepstum

Mel

spectrum

Fig. 1. Block diagram for MFCC processor.

In the first step, the continuous speech signal is blocked

into frames of N samples, where the adjacent samples are

separated by M. (M<N)

In the next step windowing is performed on each of the

individual frame so as to minimize the signal

discontinuities at the beginning and end of the each frame.

(6)


The third step is the fast Fourier transform (FFT), which converts each frame of N samples from the time domain into the frequency domain.

The fourth step is mel-frequency wrapping, in which the mel value for a given frequency f in Hz is computed by the following formula:

$$\mathrm{mel}(f) = 2595 \log_{10}\left(1 + \frac{f}{700}\right) \tag{9}$$

To simulate the subjective spectrum, a filter bank is used, with one filter for each desired mel-frequency component. The filter bank used here has a triangular band-pass frequency response, and the spacing and bandwidth of the filters are determined by a constant mel-frequency interval.

The last step converts the log mel spectrum back to the time domain, typically with the discrete cosine transform (DCT). The result is the set of mel-frequency cepstral coefficients (MFCC).
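The five steps above can be sketched in a few lines of standard-library Python. This is an illustrative sketch under stated assumptions rather than the processor used in the paper: the frame length N = 256, frame shift M = 100, the Hamming window, the 20 filters, the 12 retained coefficients, and the 8 kHz test tone are all hypothetical choices, and a naive DFT is used in place of an FFT to keep the example dependency-free.

```python
import cmath
import math


def hz_to_mel(f):
    """Mel value of a frequency in Hz, as in Eq. (9)."""
    return 2595.0 * math.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    """Inverse of hz_to_mel."""
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def frames(x, N, M):
    """Step 1: frames of N samples, adjacent frames M samples apart."""
    return [x[i:i + N] for i in range(0, len(x) - N + 1, M)]


def hamming(N):
    """Step 2: window that tapers both frame ends (assumed window type)."""
    return [0.54 - 0.46 * math.cos(2.0 * math.pi * n / (N - 1)) for n in range(N)]


def power_spectrum(frame):
    """Step 3: squared DFT magnitude for bins 0..N/2 (naive DFT, not an FFT)."""
    N = len(frame)
    return [abs(sum(frame[t] * cmath.exp(-2j * math.pi * k * t / N)
                    for t in range(N))) ** 2 / N
            for k in range(N // 2 + 1)]


def mel_filterbank(n_filters, N, fs):
    """Step 4: triangular filters with centres equally spaced in mel."""
    top = hz_to_mel(fs / 2.0)
    edges = [int((N + 1) * mel_to_hz(top * i / (n_filters + 1)) / fs)
             for i in range(n_filters + 2)]
    bank = []
    for j in range(1, n_filters + 1):
        lo, mid, hi = edges[j - 1], edges[j], edges[j + 1]
        filt = [0.0] * (N // 2 + 1)
        for k in range(lo, mid):
            filt[k] = (k - lo) / (mid - lo)    # rising slope
        for k in range(mid, hi):
            filt[k] = (hi - k) / (hi - mid)    # falling slope
        bank.append(filt)
    return bank


def mfcc(x, fs, N=256, M=100, n_filters=20, n_ceps=12):
    """Return one list of n_ceps cepstral coefficients per frame."""
    window = hamming(N)
    bank = mel_filterbank(n_filters, N, fs)
    out = []
    for frame in frames(x, N, M):
        spec = power_spectrum([s * w for s, w in zip(frame, window)])
        log_e = [math.log(max(sum(f * p for f, p in zip(filt, spec)), 1e-10))
                 for filt in bank]
        # Step 5: DCT-II of the log mel spectrum gives the cepstral coefficients.
        out.append([sum(log_e[j] * math.cos(math.pi * i * (j + 0.5) / n_filters)
                        for j in range(n_filters))
                    for i in range(1, n_ceps + 1)])
    return out


fs = 8000
tone = [math.sin(2.0 * math.pi * 440.0 * t / fs) for t in range(800)]
features = mfcc(tone, fs)
print(len(features), len(features[0]))   # 6 frames of 12 coefficients
```

The small floor inside the logarithm only guards against empty filter outputs; a production front end would normally rely on an FFT library and tuned parameters.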

B. Vector Quantization

Vector quantization (VQ) is used for command identification in our system. VQ is a process of mapping vectors from a large vector space to a finite number of regions in that space. Each region is called a cluster and is represented by its center, called a centroid. The collection of all the centroids makes up a codebook. The amount of data is significantly smaller, since the number of centroids is at least ten times smaller than the number of vectors in the original sample. This reduces the amount of computation needed for comparisons in later stages. Even though the codebook is smaller than the original sample, it still accurately represents the command characteristics. The only difference is that there will be some spectral distortion.

C. Codebook Generation

Since command recognition depends on the generated codebooks, it is important to select an algorithm that best represents the original sample. The well-known LBG algorithm [Linde, Buzo and Gray, 1980], also known as the binary split algorithm, is used to cluster a set of L training vectors into a set of M codebook vectors. The algorithm is implemented by the following recursive procedure:

1. Design a 1-vector codebook; this is the centroid of the entire set of training vectors (hence, no iteration is required here).

2. Double the size of the codebook by splitting each current codeword $y_n$ according to the rule

$$y_n^{+} = y_n (1 + \varepsilon), \qquad y_n^{-} = y_n (1 - \varepsilon) \tag{10}$$

where n varies from 1 to the current size of the codebook, and $\varepsilon$ is the splitting parameter.

3. Nearest-neighbor search: for each training vector, find the centroid in the current codebook that is closest (in terms of the similarity measurement), and assign that vector to the corresponding cell (associated with the closest centroid). This is done using the K-means iterative algorithm.

4. Centroid update: update the centroid in each cell using the centroid of the training vectors assigned to that cell.

5. Iteration 1: repeat steps 3 and 4 until the average distance falls below a preset threshold.

6. Iteration 2: repeat steps 2, 3, and 4 until a codebook of size M is reached.

Intuitively, the LBG algorithm designs an M-vector codebook in stages. It starts by designing a 1-vector codebook, then uses a splitting technique on the codewords to initialize the search for a 2-vector codebook, and continues the splitting process until the desired M-vector codebook is obtained.

In the recognition phase, the features of an unknown command are extracted and represented by a sequence of feature vectors {x1, ..., xn}. Each feature vector in the sequence X is compared with all the stored codewords in a codebook, and the codeword with the minimum distance from the feature vector is selected. For each codebook a distance measure is computed, and the command with the lowest distance is chosen.
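A minimal sketch of codebook training by binary splitting and of the recognition rule is given below, in standard-library Python. It is an illustration, not the system described in the paper: the squared Euclidean distance, the stopping rule based on the relative change in average distortion, the splitting parameter of 0.01, and the two-dimensional toy vectors with the command names "go" and "stop" are all assumptions.

```python
def dist2(a, b):
    """Squared Euclidean distance (assumed similarity measure)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def centroid(vectors):
    """Component-wise mean of a non-empty list of vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def nearest(v, codebook):
    """Index of the codeword closest to v."""
    return min(range(len(codebook)), key=lambda i: dist2(v, codebook[i]))


def avg_distortion(vectors, codebook):
    """Average distance from each vector to its nearest codeword."""
    return sum(dist2(v, codebook[nearest(v, codebook)]) for v in vectors) / len(vectors)


def lbg(train, M, eps=0.01, tol=1e-3):
    """Grow a codebook to M codewords (M a power of two) by repeated splitting."""
    codebook = [centroid(train)]                     # 1-vector codebook
    while len(codebook) < M:                         # until size M is reached
        codebook = [[c * (1.0 + s) for c in y]       # split every codeword
                    for y in codebook for s in (eps, -eps)]
        prev = float("inf")
        while True:
            cells = [[] for _ in codebook]
            for v in train:                          # nearest-neighbour search
                cells[nearest(v, codebook)].append(v)
            codebook = [centroid(c) if c else y      # centroid update
                        for c, y in zip(cells, codebook)]
            d = avg_distortion(train, codebook)
            if prev - d <= tol * d:                  # assumed stopping rule
                break
            prev = d
    return codebook


def recognize(features, codebooks):
    """Pick the command whose codebook gives the lowest average distortion."""
    return min(codebooks, key=lambda name: avg_distortion(features, codebooks[name]))


# Hypothetical two-dimensional training vectors for two commands.
go_train = [[1.0, 1.0], [1.2, 0.8], [0.8, 1.2], [5.0, 5.0], [5.2, 4.8], [4.8, 5.2]]
stop_train = [[9.0, 2.0], [9.2, 1.8], [8.8, 2.2], [2.0, 7.0], [2.2, 6.8], [1.8, 7.2]]
codebooks = {"go": lbg(go_train, 2), "stop": lbg(stop_train, 2)}
unknown = [[8.9, 2.1], [2.1, 6.9], [9.1, 1.9]]
print(recognize(unknown, codebooks))
```

Because the split is multiplicative, a codeword lying exactly at the origin would not be separated by it; the toy data avoids that case, and an additive perturbation is a common alternative.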

Fig. 2. Functional block diagram of system model. [Diagram blocks: original speech, competing noise, and background noise; sound source localization by SRP-PHAT; speech enhancement using MFCC feature extraction; peak signature; vowel corpus; voice similarity measurement; vowel source location.]

D. Direction of Arrival

In the near-field situation, the microphones are located very close to the acoustic source, and each of the M microphones in the array has its own direction of arrival (DOA). Each DOA is the direct path from the microphone position to the acoustic source. Mathematically, each DOA can be expressed as a unit vector pointing from the m-th microphone to the source:

(11)

where m = 1, 2, 3, ..., M.

In the far-field condition, the microphones are located far away from the acoustic source, and all microphones in the array share the same DOA, which is commonly chosen as the path from the origin of the array to the active acoustic source. This can be expressed mathematically in the same way, with the origin denoted by O in the coordinate system:

(12)
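The near-field and far-field cases described above can be illustrated with a short sketch. The coordinate convention is an assumption made for the example (azimuth measured in the x-y plane from the x axis, elevation measured from the x-y plane), as are the function names and the microphone and source positions; the paper's own expressions may use a different convention.

```python
import math


def unit(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(c * c for c in v))
    return tuple(c / norm for c in v)


def near_field_doas(mics, source):
    """One DOA per microphone: unit vector from each microphone to the source."""
    return [unit(tuple(s - m for s, m in zip(source, mic))) for mic in mics]


def far_field_doa(source, origin=(0.0, 0.0, 0.0)):
    """Single DOA shared by the array: unit vector from the origin O to the source."""
    return unit(tuple(s - o for s, o in zip(source, origin)))


def azimuth_elevation(u):
    """Angles (radians) of a unit vector under the assumed convention."""
    azimuth = math.atan2(u[1], u[0])
    elevation = math.asin(max(-1.0, min(1.0, u[2])))
    return azimuth, elevation


# Hypothetical geometry: two microphones on the x axis, source in the x-y plane.
mics = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
source = (1.0, 1.0, 0.0)
near = near_field_doas(mics, source)
far = far_field_doa(source)
az, el = azimuth_elevation(far)
print(near, far, math.degrees(az), math.degrees(el))
```

In the near-field case the two microphones see the source under different directions, whereas the far-field direction carries no range information, only the two angles.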

The direction of arrival (DOA) can be expressed through the standard azimuth angle and elevation angle. In terms of these angles, it is written in the following way:

(13)

In the far-field condition, the distance (range) between the microphone array and the acoustic source cannot be determined in acoustic source localization problems. The direction of arrival is the only spatial information about the source.

IV. COMPARISON BETWEEN SRP-PHAT BASED SSL AND SRP-PHAT AND MFCC BASED SSL

The two methods are compared on the basis of the peak signal-to-noise ratio (PSNR), which is the ratio between the maximum possible power of a signal and the power of the corrupting noise that affects the fidelity of its representation. PSNR is most easily defined via the mean squared error (MSE). Given a noiseless m×n monochrome image I and its noisy approximation K, MSE is defined as

$$\mathrm{MSE} = \frac{1}{mn} \sum_{i=0}^{m-1} \sum_{j=0}^{n-1} \left[ I(i,j) - K(i,j) \right]^2$$

The PSNR value in dB is defined as:

$$\mathrm{PSNR} = 10 \log_{10}\left( \frac{\mathrm{MAX}_I^2}{\mathrm{MSE}} \right) \tag{14}$$

where $\mathrm{MAX}_I$ is the maximum possible value of the signal.
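For concreteness, MSE and PSNR for an m×n array can be computed as in the following sketch. The 2×2 sample values and the peak value of 255 are hypothetical and serve only to show the arithmetic; they are not data from the paper.

```python
import math


def mse(reference, noisy):
    """Mean squared error between two equally sized m-by-n arrays."""
    m, n = len(reference), len(reference[0])
    total = sum((reference[i][j] - noisy[i][j]) ** 2
                for i in range(m) for j in range(n))
    return total / (m * n)


def psnr(reference, noisy, max_value):
    """PSNR in dB; infinite when the two arrays are identical."""
    error = mse(reference, noisy)
    if error == 0:
        return float("inf")
    return 10.0 * math.log10(max_value ** 2 / error)


# Hypothetical 2x2 example with an assumed peak value of 255.
clean = [[0, 255], [255, 0]]
noisy = [[0, 250], [255, 5]]
print(mse(clean, noisy), round(psnr(clean, noisy, 255), 2))
```

Here two of the four samples differ by 5, so the MSE is 12.5 and the PSNR comes out a little above 37 dB.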

TABLE I. PSNR values obtained for both methods in various noisy environments.

| PSNR obtained for SRP-PHAT (dB) | PSNR obtained for enhanced MFCC (dB) |
|---|---|
| 60 | 65 |
| 61 | 67 |
| 65 | 72 |
| 68 | 76 |
| 71 | 80 |
| 73 | 84 |
| 77 | 88 |
| 81 | 92 |
| 82 | 96 |
| 88 | 100 |

Fig. 3. Flow diagram for LBG algorithm. [Flow chart: find centroid; split each centroid (m = 2*m); cluster vectors; find centroids; compute D (distortion); yes/no decisions on the distortion and on m < M; stop.]

V. CONCLUSION

Computing the steered response power with the phase transform (SRP-PHAT) is important for localizing the active sound source, especially under high-noise conditions in real environments, where speech and noise can occur simultaneously. Increased accuracy of sound source localization allows location-based interaction between the user and various speech-operated devices. The voice similarity of the enhanced signals is computed and combined with the steered response power. The final output is then interpreted as a steered response voice power (SRVP), and the location with the maximum SRVP is selected as the speaker location. The computational cost is relatively low because only the top n-best candidate locations are considered for similarity measurement. The method focuses only on vowel sounds, so a possible extension to improve the accuracy of the voice activity detection is to use additional features suitable for consonants. Further extraction and enhancement of the speech signal using MFCC helps in accurately locating the sound source.
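The selection rule summarized in this paper (steered power for every candidate, voice similarity only for the top n-best candidates, then a combined score) can be sketched as follows. This is a hedged illustration rather than the authors' implementation: the peak valley difference is taken as the mean energy in the signature's peak bands minus the mean energy in the remaining bands, the two scores are assumed to be combined as an unweighted sum after normalization by their maxima, and the candidate names, powers, spectra, and signatures are invented for the example.

```python
def pvd(spectrum, signature):
    """Mean energy in peak bands minus mean energy in the other bands.

    signature is a binary list (1 = peak band) with at least one 1 and one 0.
    """
    peaks = [z for z, s in zip(spectrum, signature) if s]
    valleys = [z for z, s in zip(spectrum, signature) if not s]
    return sum(peaks) / len(peaks) - sum(valleys) / len(valleys)


def voice_similarity(spectrum, corpus):
    """Best PVD over all registered spectral peak signatures."""
    return max(pvd(spectrum, signature) for signature in corpus)


def srvp_localize(srp, spectra, corpus, n_best=2):
    """Pick a location by combining normalized SRP and normalized PVD."""
    top = sorted(srp, key=srp.get, reverse=True)[:n_best]
    similarity = {q: voice_similarity(spectra[q], corpus) for q in top}
    p_max = max(srp[q] for q in top)
    pvd_max = max(similarity.values())
    if pvd_max <= 0:                      # no voice-like candidate: fall back to SRP
        return max(top, key=srp.get)
    # Assumed combination: unweighted sum of the two normalized scores.
    score = {q: srp[q] / p_max + similarity[q] / pvd_max for q in top}
    return max(score, key=score.get)


# Hypothetical candidates: a loud television and a quieter human speaker.
srp = {"tv": 10.0, "speaker": 8.0, "door": 2.0}
spectra = {
    "tv": [1.0, 1.0, 1.0, 1.0, 1.0, 1.0],        # flat, noise-like spectrum
    "speaker": [0.2, 3.0, 0.2, 0.2, 3.0, 0.2],   # two formant-like peaks
    "door": [0.5, 0.5, 0.5, 0.5, 0.5, 0.5],
}
corpus = [[0, 1, 0, 0, 1, 0], [1, 0, 0, 1, 0, 0]]
print(max(srp, key=srp.get), srvp_localize(srp, spectra, corpus))
```

Plain SRP selects the loudest candidate ("tv"), while the combined score favors "speaker", whose spectrum matches a registered peak signature. Restricting the similarity computation to the top n-best candidates is what keeps the extra cost low.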


REFERENCES

[1] Y. Oh, J. Yoon, J. Park, M. Kim, and H. Kim, "A name recognition based call-and-come service for home robots," IEEE Transactions on Consumer Electronics, vol. 54, no. 2, pp. 247-253, 2008.

[2] K. Kwak and S. Kim, "Sound source localization with the aid of excitation source information in home robot environments," IEEE Transactions on Consumer Electronics, vol. 54, no. 2, pp. 852-856, 2008.

[3] J. Park, G. Jang, J. Kim, and S. Kim, "Acoustic interference cancellation for a voice-driven interface in smart TVs," IEEE Transactions on Consumer Electronics, vol. 59, no. 1, pp. 244-249, 2013.

[4] T. Kim, H. Park, S. Hong, and Y. Chung, "Integrated system of face recognition and sound localization for a smart door phone," IEEE Transactions on Consumer Electronics, vol. 59, no. 3, pp. 598-603, 2013.

[5] Y. Cho, D. Yook, S. Chang, and H. Kim, "Sound source localization for robot auditory systems," IEEE Transactions on Consumer Electronics, vol. 55, no. 3, pp. 1663-1668, 2009.

[6] Hyeontaek Lim, In-Chul Yoo, Youngkyu Cho, and Dongsuk Yook, "Speaker localization in noisy environments using steered response voice power," IEEE Transactions on Consumer Electronics, vol. 61, no. 1, 2015.

[7] A. Sekmen, M. Wikes, and K. Kawamura, "An application of passive human-robot interaction: Human tracking based on attention distraction," IEEE Transactions on Systems, Man, and Cybernetics - Part A, vol. 32, no. 2, pp. 248-259, 2002.

[8] X. Li and H. Liu, "Sound source localization for HRI using FOC-based time difference feature and spatial grid matching," IEEE Transactions on Cybernetics, vol. 43, no. 4, pp. 1199-1212, 2013.

[9] T. Kim, H. Park, S. Hong, and Y. Chung, "Integrated system of face recognition and sound localization for a smart door phone," IEEE Transactions on Consumer Electronics, vol. 59, no. 3, pp. 598-603, 2013.

[10] C. Knapp and G. Carter, "The generalized correlation method for estimation of time delay," IEEE Transactions on Acoustics, Speech, and Signal Processing, vol. ASSP-24, no. 4, pp. 320-327, 1976.

[11] R. Schmidt, "Multiple emitter location and signal parameter estimation," IEEE Transactions on Antennas and Propagation, vol. 34, no. 3, pp. 276-280, 1986.

[12] B. Mungamuru and P. Aarabi, "Enhanced sound localization," IEEE Trans. Systems, Man, and Cybernetics - Part B, vol. 34, no. 3, pp. 1526-1540, 2004.

[13] V. Willert, J. Eggert, J. Adamy, R. Stahl, and E. Korner, "A probabilistic model for binaural sound localization," IEEE Transactions on Systems, Man, and Cybernetics - Part B, vol. 36, no. 5, pp. 982-994, 2006.

[14] Y. Cho, "Robust speaker localization using steered response voice power," Ph.D. Dissertation, Korea University, 2011.

[15] J. Sohn, N. Kim, and W. Sung, "A statistical model-based voice activity detection," IEEE Signal Processing Letters, vol. 6, no. 1, pp. 1-3, 1999.

[16] I. Yoo and D. Yook, "Robust voice activity detection using the spectral peaks of vowel sounds," ETRI Journal, vol. 31, no. 4, pp. 451-453, 2009.

