International Journal of Civil Engineering and Technology (IJCIET)

Volume 7, Issue 2, March-April 2016, pp. 215-225, Article ID: IJCIET_07_02_019

Available online at

http://www.iaeme.com/IJCIET/issues.asp?JType=IJCIET&VType=7&IType=2

Journal Impact Factor (2016): 9.7820 (Calculated by GISI) www.jifactor.com

ISSN Print: 0976-6308 and ISSN Online: 0976-6316

IAEME Publication

DAMAGE DETECTION IN BRIDGES USING

IMAGE PROCESSING

Azmat Hussain

Department of Civil Engineering, Kurukshetra University, India

Saba Bashir

Department of Computer Science and Engineering, NIT Srinagar, India

Saima Maqbool

Department of Computer Science and Engineering,
Islamic University of Science and Technology, Srinagar, India

ABSTRACT

Road network structures such as bridges and culverts play a vital role before, during and
after extreme events in reducing the vulnerability of the community they serve.
A bridge may be damaged by severe accidents occurring on it, or damaged fully or
partially by heavy and unexpected gales. The cost of maintenance may be high, and
still no one can guarantee the future safety of bridges or of any other structure.
Whenever there is a disaster, there is damage to public property. So, we certainly
need tools to detect the damage. Various methods for the rehabilitation of bridges
are currently available, including the addition of structural reinforcement
components such as steel rebar or reinforced concrete (RC) jackets and bonded
steel plates.

Key words: Edge Extraction, Image Processing, Pruning, Pixels, Texture Analysis

Cite this Article: Azmat Hussain, Damage Detection In Bridges Using Image
Processing, International Journal of Civil Engineering and Technology, 7(2),
2016, pp. 215-225.

http://www.iaeme.com/IJCIET/issues.asp?JType=IJCIET&VType=7&IType=2

1. INTRODUCTION

Many reinforced concrete bridges in highway systems have deteriorated or are
distressed to such a degree that structural strengthening, or a reduction of the
allowable truck loading by load posting, is necessary to extend their service life.
In addition, over the last few decades motorway networks have seen a rapid increase
in traffic volume and in the weight of heavy vehicles, while railway networks have
seen a rapid increase in transit speed. Many bridges built to now obsolete design
standards cannot carry current traffic demands and require weight or speed
restrictions, strengthening, or even total replacement. Two methods are currently
proving very useful in increasing the cross-section capacity of bridge beams:
strengthening with fibre reinforced polymer (FRP) or fibre reinforced cementitious
mortar (FRCM) [3-10], and reinforcement with external tendons.

http://www.iaeme.com/IJCIET/index.asp

editor@iaeme.com

Identifying appropriate applications for technology to assess the health and safety

of bridges is an important issue for bridge owners around the world. Traditionally,

highway bridge conditions have been monitored through visual inspection methods

with structural deficiencies being manually identified and classified by qualified

engineers and inspectors.

Grasping the extent of damage takes a long time, even when attention is restricted
to serious damage. Because detecting damage by eye is time-consuming, image
processing is an effective alternative. When unusual weather or an earthquake
occurs, appropriate crisis management is required, including detecting and
assessing the disaster situation and making that information available. For
example, a road administrator must quickly assess road damage and, based on where
damage is present or absent, announce passable routes in the disaster area to the
public so that assistance can reach the damaged area, secure traffic safety for
road users, and make suitable emergency repairs on important routes so that roads
can be reopened quickly by removing obstacles. After a serious earthquake,
assessing damage by facility inspection patrol takes a long time, even if small
damage is ignored and only serious damage is considered. In such a situation,
remote sensing technology can play a crucial role.

For areal-type damage, the following are appropriate for detecting the damaged area:

1. Edge extraction,
2. Unsupervised classification,
3. Texture analysis, and
4. Edge enhancement.

For linear-type damage, edge extraction is used to detect the damaged portion by
image processing.

2. RELATED WORK

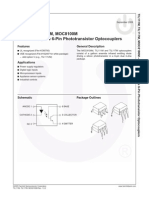

2.1 Flow chart

1. Images for detection
2. Separating RGB components
3. Histogram
4. Histogram equalization
5. Feature extraction algorithm
6. Morphological operations on images
7. Classification and matching
8. GUI based demonstration

3. PROBLEM FORMULATION

3.1. Correlation of the problem

After a serious disaster, assessing damage by facility inspection patrol takes a
long time, even if small damage is ignored and only serious damage is considered.
In such a situation, remote sensing technology can play a crucial role. Concretely,
the applicability of image processing to detecting damage from images is presented
first. Next, information extraction by human interpreters is discussed. Each image
processing technique has its own area of strength, which means that several
techniques should be applied to detect the various types of damage. Extraction by a
human has the advantage that it applies to all types of damage; its disadvantage is
that checking entire images by eye is time-consuming. Therefore, a method that uses
facility data to help personnel examine images is developed.

4. PROBLEM IMPLEMENTATION

4.1. Images for detection

Images of bridges both before and after damage are collected.

4.2. Separation of RGB Components

RGB is the standard format for colour images. It represents an image with three
matrices whose sizes match the image dimensions. Each matrix corresponds to one of
the colours red, green or blue and specifies how much of that colour a given pixel
should use.
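The channel separation described above can be sketched in a few lines. This is a minimal illustration in plain Python rather than the authors' implementation; the 2x2 image and the helper name `split_channels` are assumptions made for the example.

```python
# A tiny 2x2 RGB image stored as rows of (R, G, B) tuples, values 0-255.
# The pixel values are made up for illustration.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (120, 130, 140)],
]

def split_channels(rgb_image):
    """Return three matrices (red, green, blue) with the image's dimensions."""
    red = [[pixel[0] for pixel in row] for row in rgb_image]
    green = [[pixel[1] for pixel in row] for row in rgb_image]
    blue = [[pixel[2] for pixel in row] for row in rgb_image]
    return red, green, blue

red, green, blue = split_channels(image)
```

Each returned matrix has the same number of rows and columns as the input image, so the three can be processed independently and recombined later.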

4.3. Histogram

An image histogram is a chart that shows the distribution of intensities in an indexed

or greyscale image. We can use the information in a histogram to choose an

appropriate enhancement operation. For example, if an image histogram shows that

the range of intensity values is small, we can use an intensity adjustment function to

spread the values across a wider range.
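As a rough sketch of the idea above, the following plain-Python example counts intensities and then linearly stretches a narrow intensity range across the full scale. The function names `histogram` and `stretch_contrast` and the sample image are hypothetical; a linear stretch is only one possible intensity adjustment.

```python
def histogram(gray_image, levels=256):
    """Count how many pixels take each intensity value."""
    counts = [0] * levels
    for row in gray_image:
        for value in row:
            counts[value] += 1
    return counts

def stretch_contrast(gray_image, levels=256):
    """Linearly map the occupied intensity range onto [0, levels - 1]."""
    flat = [v for row in gray_image for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A constant image has no range to stretch.
        return [row[:] for row in gray_image]
    scale = (levels - 1) / (hi - lo)
    return [[round((v - lo) * scale) for v in row] for row in gray_image]

# Made-up low-contrast image: all values sit between 100 and 130.
low_contrast = [[100, 110], [120, 130]]
hist = histogram(low_contrast)
stretched = stretch_contrast(low_contrast)
```

Here the histogram shows that only the range 100 to 130 is occupied, and the stretch spreads those values over 0 to 255.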

4.4. Histogram equalization

Intensity values can be adjusted automatically with the MATLAB histeq function.
histeq performs histogram equalization, which transforms the intensity values so
that the histogram of the output image approximately matches a specified histogram.
By default, histeq tries to match a flat histogram with 64 bins.
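To make the transformation concrete, here is a minimal sketch of the textbook form of histogram equalization, which maps each intensity through the cumulative distribution of the image. It is an assumption-laden illustration in plain Python, not a reimplementation of histeq, whose matching against a 64-bin target histogram differs in detail. The name `equalize` and the sample image are hypothetical.

```python
def equalize(gray_image, levels=256):
    """Classic CDF-based histogram equalization for an integer-valued image."""
    flat = [v for row in gray_image for v in row]
    counts = [0] * levels
    for v in flat:
        counts[v] += 1

    # Cumulative distribution: number of pixels with intensity <= level.
    cdf, running = [], 0
    for c in counts:
        running += c
        cdf.append(running)

    cdf_min = min(c for c in cdf if c > 0)
    total = len(flat)
    if total == cdf_min:
        # Constant image: nothing to equalize.
        return [row[:] for row in gray_image]

    # Lookup table that spreads the CDF over the full intensity range.
    lookup = [max(0, round((c - cdf_min) * (levels - 1) / (total - cdf_min)))
              for c in cdf]
    return [[lookup[v] for v in row] for row in gray_image]

dull = [[10, 20], [30, 40]]  # made-up low-contrast image
equalized = equalize(dull)
```

Because each of the four intensities occurs once, the output levels end up evenly spaced between 0 and 255.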

4.5. Feature Extraction Algorithm

A feature extraction algorithm is used. Within it, textural analysis, spectral
extraction and geometric extraction are carried out.

4.5.1 Textural Feature Extraction

For texture analysis, the co-occurrence matrix method is used. The use of colour
image features in the visible light spectrum provides additional image
characteristics over the traditional gray-scale representation.

4.5.2 Spectral Feature Extraction

Spectral clustering techniques make use of the spectrum of the similarity matrix of the

data to perform dimensionality reduction for clustering in fewer dimensions.

4.5.3 Geometric Feature Extraction

In geometric feature extraction, an edge detection method is used.

4.6. Morphological operations on the images

The following operations are performed on the images:

4.6.1 Dilation: Dilation adds pixels to the boundaries of objects in an image. The
value of the output pixel is the maximum value of all the pixels in the input
pixel's neighborhood. In a binary image, if any of these pixels is set to 1, the
output pixel is set to 1.

4.6.2 Erosion: Erosion removes pixels on object boundaries. The value of the output
pixel is the minimum value of all the pixels in the input pixel's neighborhood. In
a binary image, if any of these pixels is set to 0, the output pixel is set to 0.

4.6.3 Opening: Morphological opening (an erosion followed by a dilation) is used to
remove small objects from an image while preserving the shape and size of larger
objects in the image.

4.6.4 Closing: Morphological closing is performed on an intensity or binary image.
The Morphological Close System object performs a dilation operation followed by an
erosion operation using a predefined neighborhood or structuring element. This
System object uses flat structuring elements only.

4.6.5 Pruning: Pruning is a digital image processing technique based on
mathematical morphology. It is used as a complement to the skeleton and thinning
algorithms to remove unwanted parasitic components. These components can often be
created by edge detection algorithms or digitisation.

4.6.6 Skeletonization: Skeletonization reduces all objects in an image to lines
without changing the essential structure of the image.
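The first four operations of this section (dilation, erosion, opening and closing) can be sketched for binary images as follows. This is an illustrative plain-Python version that assumes a 3x3 square neighborhood and clips the neighborhood at the image border; the function names and test images are made up for the example, and pruning and skeletonization are not covered.

```python
def _window(img, y, x):
    """Values in the 3x3 neighborhood of (y, x), clipped at the image border."""
    rows = range(max(0, y - 1), min(len(img), y + 2))
    cols = range(max(0, x - 1), min(len(img[0]), x + 2))
    return [img[j][i] for j in rows for i in cols]

def dilate(img):
    """Output pixel is the maximum over the neighborhood."""
    return [[max(_window(img, y, x)) for x in range(len(img[0]))]
            for y in range(len(img))]

def erode(img):
    """Output pixel is the minimum over the neighborhood."""
    return [[min(_window(img, y, x)) for x in range(len(img[0]))]
            for y in range(len(img))]

def opening(img):
    """Erosion followed by dilation: removes small objects."""
    return dilate(erode(img))

def closing(img):
    """Dilation followed by erosion: fills small holes."""
    return erode(dilate(img))

def blank(n):
    return [[0] * n for _ in range(n)]

# A 3x3 block plus an isolated one-pixel speck.
noisy = blank(7)
for y in range(1, 4):
    for x in range(1, 4):
        noisy[y][x] = 1
block_only = [row[:] for row in noisy]
noisy[5][5] = 1

# A 3x3 block with a one-pixel hole in its centre.
ring = blank(7)
for y in range(2, 5):
    for x in range(2, 5):
        ring[y][x] = 1
filled = [row[:] for row in ring]
ring[3][3] = 0
```

With these inputs, `opening(noisy)` removes the speck while leaving the block unchanged, and `closing(ring)` fills the hole.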

4.7. Matching Threshold

During the thresholding process, individual pixels in an image are marked as
"object" pixels if their value is greater than some threshold value (assuming an
object to be brighter than the background) and as "background" pixels otherwise.
This convention is known as threshold above. Variants include threshold below,
which is the opposite of threshold above; threshold inside, where a pixel is
labelled "object" if its value is between two thresholds; and threshold outside,
which is the opposite of threshold inside. Typically, an object pixel is given a
value of 1 while a background pixel is given a value of 0. Finally, a binary image
is created by colouring each pixel white or black, depending on the pixel's label.
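The four thresholding conventions can be illustrated with a short sketch. The function name `threshold`, its `mode` argument and the sample values are assumptions for this example. Whether the boundary value itself counts as "object" is a design choice; here "below" and "outside" are implemented as the exact logical opposites of "above" and "inside".

```python
def threshold(gray_image, low, high=None, mode="above"):
    """Label each pixel 1 (object) or 0 (background) under the chosen mode."""
    def is_object(value):
        if mode == "above":
            return value > low
        if mode == "below":
            return not value > low
        if mode == "inside":
            return low < value < high
        if mode == "outside":
            return not low < value < high
        raise ValueError("unknown mode: " + mode)

    return [[1 if is_object(v) else 0 for v in row] for row in gray_image]

row_image = [[10, 50, 120, 200]]  # made-up intensities
above = threshold(row_image, 100, mode="above")
below = threshold(row_image, 100, mode="below")
inside = threshold(row_image, 40, 150, mode="inside")
outside = threshold(row_image, 40, 150, mode="outside")
```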

4.8 GUI based demonstration:

A GUI (graphical user interface) allows users to perform tasks interactively through

controls such as buttons and sliders. Within MATLAB, GUI tools enable us to

perform tasks such as creating and customizing plots, fitting curves and surfaces, and

analysing and filtering signals. We can also create custom GUIs for others to use

either by running them in MATLAB or as standalone applications.

5. METHOD

The algorithm used is a feature extraction algorithm.

5.1. Features Extraction

Within feature extraction, textural analysis, spectral extraction and geometric
extraction are carried out.

5.1.1. Co-occurrence Methodology for Texture Analysis

The image analysis technique selected is the colour co-occurrence matrix (CCM)
method. The use of colour image features in the visible light spectrum provides
additional image characteristics over the traditional gray-scale representation.
The CCM methodology consists of three major mathematical processes. First, the RGB
images are converted into a Hue Saturation Intensity (HSI) colour space
representation. Once this process is completed, each pixel map is used to generate
a colour co-occurrence matrix, resulting in three CCM matrices, one for each of the
H, S and I pixel maps. Finally, texture features are computed from these matrices
(Section 5.1.2).

HSI is a popular colour space because it is based on human colour perception.
Electromagnetic radiation with wavelengths of about 400 to 700 nanometers is called
visible light because the human visual system is sensitive to this range. Hue is
generally related to the wavelength of the light, and intensity reflects its
amplitude. Saturation measures the colourfulness in HSI space. Colour spaces can
easily be transformed from one to another.
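A minimal sketch of the RGB to HSI conversion is given here for a single pixel. It uses the commonly published geometric formulas, $I = (R+G+B)/3$, $S = 1 - \min(R,G,B)/I$, and a hue angle obtained from an arccosine. The paper does not state which variant was used, so these formulas, the function name `rgb_to_hsi`, and the convention of setting hue to 0 for gray pixels are all assumptions.

```python
import math

def rgb_to_hsi(r, g, b):
    """Convert RGB in [0, 1] to (hue in degrees, saturation, intensity)."""
    intensity = (r + g + b) / 3.0
    if intensity == 0:
        return 0.0, 0.0, 0.0  # black: hue and saturation set to 0 by convention

    saturation = 1.0 - min(r, g, b) / intensity

    numerator = 0.5 * ((r - g) + (r - b))
    # max() guards against a tiny negative value caused by rounding.
    denominator = math.sqrt(max(0.0, (r - g) ** 2 + (r - b) * (g - b)))
    if denominator == 0:
        hue = 0.0  # gray pixel: hue is undefined, use 0 by convention
    else:
        ratio = max(-1.0, min(1.0, numerator / denominator))
        hue = math.degrees(math.acos(ratio))
        if b > g:
            hue = 360.0 - hue
    return hue, saturation, intensity

red_hsi = rgb_to_hsi(1.0, 0.0, 0.0)
green_hsi = rgb_to_hsi(0.0, 1.0, 0.0)
blue_hsi = rgb_to_hsi(0.0, 0.0, 1.0)
gray_hsi = rgb_to_hsi(0.5, 0.5, 0.5)
```

Under these formulas the pure primaries land at hue angles of 0, 120 and 240 degrees with full saturation, while a gray pixel has zero saturation.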

The colour co-occurrence texture analysis method was developed through the use of
Spatial Gray-level Dependence Matrices (SGDMs). The gray-level co-occurrence
methodology is a statistical way to describe shape by statistically sampling the
way certain gray levels occur in relation to other gray levels. These matrices
measure the probability that a pixel at one particular gray level will occur at a
distinct distance and orientation from any pixel, given that this pixel has a
second particular gray level. For a position operator $p$, we can define a matrix
$P_{ij}$ that counts the number of times a pixel with gray level $i$ occurs at
position $p$ from a pixel with gray level $j$. The SGDMs are represented by the
function $P(i, j, d, \theta)$, where $i$ represents the gray level of the location
$(x, y)$ in the image $I(x, y)$, and $j$ represents the gray level of the pixel at
a distance $d$ from location $(x, y)$ at an orientation angle of $\theta$. The
reference pixel at image position $(x, y)$ is shown as an asterisk. All the
neighbors from 1 to 8 are numbered in a clockwise direction. Neighbors 1 and 5 are
located on the same plane at a distance of 1 and an orientation of 0 degrees. An
example image matrix and its SGDM are already given in the three equations above.

In this work, a one-pixel offset distance and a zero-degree orientation angle were
used. After the transformation processes, the feature sets for H and S are
calculated, and I is dropped since it does not give extra information.
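The counting behind an SGDM with a one-pixel offset and a zero-degree orientation can be sketched as follows. This is a plain-Python illustration under stated assumptions: the reference pixel indexes the row ($i$), its right-hand neighbor indexes the column ($j$), and the matrix is not symmetrized, although some implementations also count the reverse direction. The 4x4 image with four gray levels is a made-up example, and the function names are hypothetical.

```python
def cooccurrence_matrix(image, levels, dx=1, dy=0):
    """Count pairs (i, j): level i at (x, y) and level j at (x + dx, y + dy)."""
    matrix = [[0] * levels for _ in range(levels)]
    height, width = len(image), len(image[0])
    for y in range(height):
        for x in range(width):
            ny, nx = y + dy, x + dx
            if 0 <= ny < height and 0 <= nx < width:
                matrix[image[y][x]][image[ny][nx]] += 1
    return matrix

def normalize(matrix):
    """Turn pair counts into estimated probabilities p(i, j)."""
    total = sum(sum(row) for row in matrix)
    return [[count / total for count in row] for row in matrix]

sample = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 2, 2, 2],
    [2, 2, 3, 3],
]
counts = cooccurrence_matrix(sample, levels=4)  # d = 1, theta = 0 degrees
probabilities = normalize(counts)
```

Each row of the image contributes three horizontal pairs, so the counts sum to 12 before normalization.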

5.1.2 Texture Features Identification

The following feature set was computed for the components H and S:

The angular moment, which is used to measure the homogeneity of the image.

The product moment (cov), which is analogous to the covariance of the intensity
co-occurrence matrix.

The sum and difference entropies.

The entropy, which is a measure of the amount of order in an image.

The information measures of correlation.

The inverse difference moment, by which the contrast of an image can be measured.

The correlation, which is a measure of intensity linear dependence in the image.
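Several of the features listed above have widely used textbook definitions in terms of a normalized co-occurrence matrix $p(i,j)$: the angular second moment $\sum_{i,j} p(i,j)^2$, the entropy $-\sum_{i,j} p(i,j)\ln p(i,j)$, the inverse difference moment $\sum_{i,j} p(i,j)/(1+(i-j)^2)$, the product moment $\sum_{i,j}(i-\mu_i)(j-\mu_j)\,p(i,j)$, and the correlation obtained by dividing the product moment by $\sigma_i\sigma_j$. The sketch below assumes these forms, which may differ in detail from the paper's own equations. It uses the natural logarithm and omits the sum and difference entropies and the information measures of correlation. The function name and the sample matrix are hypothetical.

```python
import math

def texture_features(p):
    """Compute a few co-occurrence features from a normalized square matrix p."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]

    angular_moment = sum(v * v for _, _, v in cells)
    entropy = -sum(v * math.log(v) for _, _, v in cells if v > 0)
    inverse_difference = sum(v / (1 + (i - j) ** 2) for i, j, v in cells)

    mean_i = sum(i * v for i, _, v in cells)
    mean_j = sum(j * v for _, j, v in cells)
    product_moment = sum((i - mean_i) * (j - mean_j) * v for i, j, v in cells)

    var_i = sum((i - mean_i) ** 2 * v for i, _, v in cells)
    var_j = sum((j - mean_j) ** 2 * v for _, j, v in cells)
    spread = math.sqrt(var_i * var_j)
    correlation = product_moment / spread if spread > 0 else 0.0

    return {
        "angular_moment": angular_moment,
        "entropy": entropy,
        "inverse_difference_moment": inverse_difference,
        "product_moment": product_moment,
        "correlation": correlation,
    }

# Made-up matrix: neighboring pixels always share the same gray level.
diagonal = [[0.5, 0.0], [0.0, 0.5]]
features = texture_features(diagonal)
```

For this perfectly diagonal matrix the correlation and the inverse difference moment both reach their maximum of 1, which matches the intuition that neighboring pixels are identical.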

5.2. Spectral Feature Extraction

The algorithm used for spectral analysis is spectral clustering.

Given a data set $S = \{s_1, \dots, s_n\}$ to be clustered:

1. Calculate the affinity matrix $A_{ij} = \exp(-\|s_i - s_j\|^2 / (2\sigma^2))$ if $i \neq j$, and $A_{ii} = 0$, where $\sigma^2$ is the scaling parameter.
2. Define $D$ to be the diagonal matrix whose $(i,i)$-element is the sum of $A$'s $i$-th row, and construct the matrix $L = D^{-1/2} A D^{-1/2}$.
3. Find the eigenvectors $x_1, x_2, \dots, x_k$ of $L$ belonging to the $k$ largest eigenvalues and form the matrix $X = [x_1\ x_2\ \dots\ x_k]$.
4. Form the matrix $Y$ from $X$ by normalizing each of $X$'s rows to have unit length, $Y_{ij} = X_{ij} / (\sum_j X_{ij}^2)^{1/2}$.
5. Treating each row of $Y$ as a point, cluster them into $k$ clusters via k-means or any other algorithm.
6. Assign the original point $s_i$ to cluster $j$ if and only if row $i$ of the matrix $Y$ was assigned to cluster $j$.
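Steps 1, 2 and 4 of the procedure above can be sketched in plain Python as follows. The eigen-decomposition of step 3 and the k-means clustering of step 5 are deliberately left out, since in practice they would come from a numerical library. The function names, the sample points and the choice $\sigma = 1$ are assumptions for illustration, and the sketch assumes every point has at least one non-zero affinity so that no row sum is zero.

```python
import math

def affinity_matrix(points, sigma):
    """Step 1: Gaussian affinities with a zero diagonal."""
    n = len(points)
    a = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j:
                squared = sum((p - q) ** 2 for p, q in zip(points[i], points[j]))
                a[i][j] = math.exp(-squared / (2 * sigma ** 2))
    return a

def normalized_affinity(a):
    """Step 2: L = D^(-1/2) A D^(-1/2), with D the diagonal of row sums."""
    degree = [sum(row) for row in a]
    n = len(a)
    return [[a[i][j] / math.sqrt(degree[i] * degree[j]) for j in range(n)]
            for i in range(n)]

def normalize_rows(x):
    """Step 4: scale each row of X to unit length."""
    result = []
    for row in x:
        norm = math.sqrt(sum(v * v for v in row))
        result.append([v / norm for v in row] if norm > 0 else row[:])
    return result

points = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0)]  # made-up data
A = affinity_matrix(points, sigma=1.0)
L = normalized_affinity(A)
```

The two nearby points receive a much larger affinity than either has with the distant third point, which is what later allows the eigenvectors of $L$ to separate them into clusters.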

5.3. Geometric Feature Extraction:

In geometric feature extraction, an edge detection algorithm is used. Edge
detection algorithms operate on the premise that each pixel in a grayscale digital
image has a first derivative with regard to the change in intensity at that point.
If a significant change occurs at a given pixel in the image, a white pixel is
placed in the binary image; otherwise, a black pixel is placed there instead. In
general, the gradient is calculated for each pixel, giving the degree of change at
that point in the image. The question basically amounts to how much change in
intensity should be required to constitute an edge feature in the binary image. A
threshold value, T, is often used to classify edge points. Some edge-finding
techniques calculate the second derivative to more accurately find points that
correspond to a local maximum or minimum in the first derivative. This technique is
often referred to as zero crossing, because local maxima and minima of the first
derivative are the places where the second derivative equals zero and its left and
right neighbors are non-zero with opposite signs.
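A gradient-and-threshold edge detector of the kind described above can be sketched as follows. This illustration approximates the first derivative with central differences, which is an assumption, since the paper does not name a specific operator and Sobel or similar kernels are common alternatives. Border pixels are simply left as background. The function name, the test image and the threshold value are made up for the example.

```python
import math

def gradient_edges(gray_image, t):
    """Mark a pixel white (1) if its gradient magnitude exceeds threshold t."""
    height, width = len(gray_image), len(gray_image[0])
    edges = [[0] * width for _ in range(height)]
    for y in range(1, height - 1):
        for x in range(1, width - 1):
            # Central differences approximate the horizontal and vertical derivatives.
            gx = gray_image[y][x + 1] - gray_image[y][x - 1]
            gy = gray_image[y + 1][x] - gray_image[y - 1][x]
            if math.hypot(gx, gy) > t:
                edges[y][x] = 1
    return edges

# Made-up image with a vertical step from intensity 0 to 10.
step = [[0, 0, 0, 10, 10, 10] for _ in range(4)]
edge_map = gradient_edges(step, t=5)
```

Only the two columns adjacent to the intensity step exceed the threshold, so the binary image contains a thin vertical line where the step occurs.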

6. IMPLEMENTATION OF ALGORITHMS

Three sets of images of bridges, both before and after damage, are collected, and
texture analysis feature extraction is performed on them. The figure below shows a
normal bridge followed by its rgb2gray conversion, its histogram and its histogram
equalization.

The figure below shows the damaged bridge followed by its rgb2gray conversion, its
histogram and its histogram equalization.
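For readers without MATLAB, the rgb2gray step can be approximated by a weighted sum of the three channels. The sketch below uses the luminance weights 0.2989, 0.5870 and 0.1140 documented for rgb2gray. Rounding to the nearest integer, the function name `rgb_to_gray` and the sample pixels are assumptions for illustration.

```python
def rgb_to_gray(rgb_image):
    """Weighted sum of R, G and B for each pixel, rounded to an integer level."""
    return [[round(0.2989 * r + 0.5870 * g + 0.1140 * b) for (r, g, b) in row]
            for row in rgb_image]

pixels = [
    [(255, 255, 255), (0, 0, 0)],
    [(255, 0, 0), (0, 0, 255)],
]
gray = rgb_to_gray(pixels)
```

Green contributes most to the result and blue least, which reflects the eye's differing sensitivity to the three primaries.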

Texture Analysis of damaged bridge

7. CONCLUSION

Using the images taken after the damage, the spectral characteristics of damaged
bridges can be investigated. For areal-type damage, 1) edge extraction, 2)
unsupervised classification, 3) texture analysis, 4) edge enhancement and 5)
operations between images are suitable for detecting the damaged area. In this way,
it is possible to find areal-type damage. For linear-type damage, however, edge
extraction is used to detect the damaged portion by image processing.

REFERENCES

[1] Alexander, D., 1991. Information technology in real-time for monitoring and managing natural disasters. Progress in Physical Geography, 15(3), pp. 238-260.

[2] Gornyi, V.I. and Latypov, I.S., 2002. Possibility for the development of a scanning radiometer with synthesized aperture: Experimental verification. Doklady Earth Sciences, 387(8), pp. 962-964.

[3] Hasegawa, H., Aoki, H., Yamazaki, F., Matsuoka, M. and Sekimoto, I., 2000a. Automated detection of damaged buildings using aerial HDTV images, International Geoscience and Remote Sensing Symposium, Honolulu, USA, IEEE, CD-ROM.

[4] Hasegawa, H., Yamazaki, F., Matsuoka, M. and Sekimoto, I., 2000b. Extraction of building damage due to earthquakes using aerial television images, 12th World Conference on Earthquake Engineering, Auckland, New Zealand, No. 1722: CD-ROM.

[5] Kerle, N. and Oppenheimer, C., 2002. Satellite remote sensing as a tool in lahar disaster management. Disasters, 26(2), pp. 140-160.

[6] Mitomi, H., Yamazaki, F. and Matsuoka, M., 2000. Automated detection of building damage due to recent earthquakes using aerial television images, Asian Conference on Remote Sensing, Taipei, Taiwan.

[7] Ozisik, D. and Kerle, N., 2004. Post-earthquake damage assessment using satellite and airborne data in the case of the Istanbul, Turkey.

[8] van Westen, C.J. and Hofstee, P., 2001. The role of remote sensing and GIS in risk mapping and damage assessment for disaster in urban areas, Second forum catastrophy mitigation: natural disasters, impact, mitigation, tools, Leipzig, Germany, pp. 442-450.

[9] Walter, L.S., 1994. Natural hazard assessment and mitigation from space: the potential of remote sensing to meet operational requirements, Natural hazard assessment and mitigation: the unique role of remote sensing, Royal Society, London, UK, pp. 7-12.

[10] Wang, Z. and Bovik, A.C., 2002. A universal image quality index. IEEE Signal Processing Letters, 9(3), pp. 81-84. Yamazaki, F., 2001. Applications of remote sensing and GIS for damage assessment, 8th International Conference on Structural Safety and Reliability, Newport Beach, USA.

[11] R. Gonzalez and R. Woods, Digital Image Processing, Prentice Hall, Upper Saddle River, New Jersey.

[12] A. Medda and V. DeBrunner, Near-Field Sub-band Beamforming for Damage Detection in Bridges, Structural Health Monitoring, an International Journal, to be published.

[13] M. Matsuoka and F. Yamazaki, Characteristics of Satellite Images of Damaged Areas due to the 1995 Kobe Earthquake, Proc. of 2nd Conference on the Applications of Remote Sensing and GIS for Disaster Management, The George Washington University, CD-ROM, 1999.

[14] R.T. Eguchi, C.K. Huyck, B. Houshmand, B. Mansouri, M. Shinozuka, F. Yamazaki and M. Matsuoka, The Marmara Earthquake: A View from Space: The Marmara, Turkey Earthquake of August 17, 1999: Reconnaissance Report, Technical Report MCEER-00-0001, pp. 151-169, 2000.

[15] M. Estrada, F. Yamazaki and M. Matsuoka, Use of Landsat Images for the Identification of Damage due to the 1999 Kocaeli, Turkey Earthquake, Proc. of 21st Asian Conference on Remote Sensing, pp. 1185-1190, Singapore, 2001.

[16] H. Mitomi, J. Saita, M. Matsuoka and F. Yamazaki, Automated Damage Detection of Buildings from Aerial Television Images of the 2001 Gujarat, India Earthquake, Proc. of the IGARSS 2001, IEEE, CD-ROM, 3p, 2001.

[17] K. Saito, R.J.S. Spence, C. Going and M. Markus, Using high resolution satellite images for post-earthquake building damage assessment: a study following the 26 January 2001 Gujarat Earthquake, Earthquake Spectra, vol. 20 (1), pp. 145-169, 2004.

[18] T.T. Vu, M. Matsuoka and F. Yamazaki, Detection and Animation of Damage Using Very High-resolution Satellite Data Following the 2003 Bam, Iran, Earthquake, Earthquake Spectra, vol. 21 (S1), pp. S319-S327, 2005.

[19] F. Yamazaki, Y. Yano and M. Matsuoka, Visual Damage Interpretation of Buildings in Bam City Using QuickBird Images Following the 2003 Bam, Iran, Earthquake, Earthquake Spectra, vol. 21 (S1), pp. S329-S336, 2005.

[20] M. Matsuoka and F. Yamazaki, Use of SAR Imagery for Monitoring Areas Damaged Due to the 2006 Mid Java, Indonesia Earthquake, Proc. of 4th International Workshop on Remote Sensing for Post-Disaster Response, Cambridge, United Kingdom, 2006.

[21] K. Matsumoto, T.T. Vu and F. Yamazaki, Extraction of damaged buildings using high resolution satellite images in the 2006 Central Java Earthquake, Proc. of ACRS 2006, Ulaanbaatar, Mongolia, 2006.

[22] L. Gusella, B.J. Adams, G. Bitelli, C.K. Huyck and A. Mognol, Object oriented image understanding and post-earthquake damage assessment for the 2003 Bam, Iran, earthquake, Earthquake Spectra, vol. 21 (S1), pp. S225-S238, 2005.

[23] T.T. Vu, M. Matsuoka and F. Yamazaki, Preliminary results in Development of an Object-based Image Analysis Method for Earthquake Damage Assessment, Proc. of 3rd International Workshop on Remote Sensing for Post-Disaster Response, Chiba, Japan, 2005.

[24] T.T. Vu, M. Matsuoka and F. Yamazaki, Towards object-based damage detection, Proc. of ISPRS workshop DIMAI2005, Bangkok, Thailand, 2005.

[25] T.T. Vu, M. Matsuoka and F. Yamazaki, Multi-level detection of building damage due to earthquakes from high resolution satellite images, Proceeding of SPIE Asia-Pacific Remote Sensing International Symposium, Goa, India, 13-17 Nov. 2006.

[26] Dr. K.V. Ramana Reddy, Aerodynamic Stability of A Cable Stayed Bridge, International Journal of Civil Engineering and Technology, 5(5), 2014, pp. 88 86.

[27] Evinur Cahya, Toshitaka Yamao and Akira Kasai, Seismic Response Behavior Using Static Pushover Analysis and Dynamic Analysis of Half-Through Steel Arch Bridge Under Strong Earthquakes, International Journal of Civil Engineering and Technology, 5(1), 2014, pp. 73-88.

[28] L. Vincent, Morphological Area Opening and Closings for Greyscale Images, Proc. NATO Shape in Picture workshop, Driebergen, The Netherlands, Springer-Verlag, pp. 197-208, 1992.

http://www.iaeme.com/IJCIET/index.asp

225

editor@iaeme.com

Das könnte Ihnen auch gefallen

- Hidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceVon EverandHidden Figures: The American Dream and the Untold Story of the Black Women Mathematicians Who Helped Win the Space RaceBewertung: 4 von 5 Sternen4/5 (895)

- The Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeVon EverandThe Subtle Art of Not Giving a F*ck: A Counterintuitive Approach to Living a Good LifeBewertung: 4 von 5 Sternen4/5 (5794)

- Modeling and Analysis of Surface Roughness and White Later Thickness in Wire-Electric Discharge Turning Process Through Response Surface MethodologyDokument14 SeitenModeling and Analysis of Surface Roughness and White Later Thickness in Wire-Electric Discharge Turning Process Through Response Surface MethodologyIAEME PublicationNoch keine Bewertungen

- Voice Based Atm For Visually Impaired Using ArduinoDokument7 SeitenVoice Based Atm For Visually Impaired Using ArduinoIAEME PublicationNoch keine Bewertungen

- Broad Unexposed Skills of Transgender EntrepreneursDokument8 SeitenBroad Unexposed Skills of Transgender EntrepreneursIAEME PublicationNoch keine Bewertungen

- Influence of Talent Management Practices On Organizational Performance A Study With Reference To It Sector in ChennaiDokument16 SeitenInfluence of Talent Management Practices On Organizational Performance A Study With Reference To It Sector in ChennaiIAEME PublicationNoch keine Bewertungen

- Impact of Emotional Intelligence On Human Resource Management Practices Among The Remote Working It EmployeesDokument10 SeitenImpact of Emotional Intelligence On Human Resource Management Practices Among The Remote Working It EmployeesIAEME PublicationNoch keine Bewertungen

- Attrition in The It Industry During Covid-19 Pandemic: Linking Emotional Intelligence and Talent Management ProcessesDokument15 SeitenAttrition in The It Industry During Covid-19 Pandemic: Linking Emotional Intelligence and Talent Management ProcessesIAEME PublicationNoch keine Bewertungen

- A Study of Various Types of Loans of Selected Public and Private Sector Banks With Reference To Npa in State HaryanaDokument9 SeitenA Study of Various Types of Loans of Selected Public and Private Sector Banks With Reference To Npa in State HaryanaIAEME PublicationNoch keine Bewertungen

- A Multiple - Channel Queuing Models On Fuzzy EnvironmentDokument13 SeitenA Multiple - Channel Queuing Models On Fuzzy EnvironmentIAEME PublicationNoch keine Bewertungen

- Role of Social Entrepreneurship in Rural Development of India - Problems and ChallengesDokument18 SeitenRole of Social Entrepreneurship in Rural Development of India - Problems and ChallengesIAEME PublicationNoch keine Bewertungen

- A Study On The Impact of Organizational Culture On The Effectiveness of Performance Management Systems in Healthcare Organizations at ThanjavurDokument7 SeitenA Study On The Impact of Organizational Culture On The Effectiveness of Performance Management Systems in Healthcare Organizations at ThanjavurIAEME PublicationNoch keine Bewertungen

- A Study On Talent Management and Its Impact On Employee Retention in Selected It Organizations in ChennaiDokument16 SeitenA Study On Talent Management and Its Impact On Employee Retention in Selected It Organizations in ChennaiIAEME PublicationNoch keine Bewertungen

- EXPERIMENTAL STUDY OF MECHANICAL AND TRIBOLOGICAL RELATION OF NYLON/BaSO4 POLYMER COMPOSITESDokument9 SeitenEXPERIMENTAL STUDY OF MECHANICAL AND TRIBOLOGICAL RELATION OF NYLON/BaSO4 POLYMER COMPOSITESIAEME PublicationNoch keine Bewertungen

- A Review of Particle Swarm Optimization (Pso) AlgorithmDokument26 SeitenA Review of Particle Swarm Optimization (Pso) AlgorithmIAEME PublicationNoch keine Bewertungen

- Knowledge Self-Efficacy and Research Collaboration Towards Knowledge Sharing: The Moderating Effect of Employee CommitmentDokument8 SeitenKnowledge Self-Efficacy and Research Collaboration Towards Knowledge Sharing: The Moderating Effect of Employee CommitmentIAEME PublicationNoch keine Bewertungen

- Application of Frugal Approach For Productivity Improvement - A Case Study of Mahindra and Mahindra LTDDokument19 SeitenApplication of Frugal Approach For Productivity Improvement - A Case Study of Mahindra and Mahindra LTDIAEME PublicationNoch keine Bewertungen

- Various Fuzzy Numbers and Their Various Ranking ApproachesDokument10 SeitenVarious Fuzzy Numbers and Their Various Ranking ApproachesIAEME PublicationNoch keine Bewertungen

- Optimal Reconfiguration of Power Distribution Radial Network Using Hybrid Meta-Heuristic AlgorithmsDokument13 SeitenOptimal Reconfiguration of Power Distribution Radial Network Using Hybrid Meta-Heuristic AlgorithmsIAEME PublicationNoch keine Bewertungen

- Dealing With Recurrent Terminates in Orchestrated Reliable Recovery Line Accumulation Algorithms For Faulttolerant Mobile Distributed SystemsDokument8 SeitenDealing With Recurrent Terminates in Orchestrated Reliable Recovery Line Accumulation Algorithms For Faulttolerant Mobile Distributed SystemsIAEME PublicationNoch keine Bewertungen

- A Proficient Minimum-Routine Reliable Recovery Line Accumulation Scheme For Non-Deterministic Mobile Distributed FrameworksDokument10 SeitenA Proficient Minimum-Routine Reliable Recovery Line Accumulation Scheme For Non-Deterministic Mobile Distributed FrameworksIAEME PublicationNoch keine Bewertungen
